TensorFlow Study Notes

import tensorflow as tf  # TensorFlow 1.x API (tf.Session, tf.log)

labels = [[0, 0, 1], [0, 1, 0]]  # one-hot labels, one row per sample

logits = [[2, 0.5, 6], [0.1, 0, 3]]  # raw, unnormalized scores

logits_scaled = tf.nn.softmax(logits)  # softmax applied once

logits_scaled2 = tf.nn.softmax(logits_scaled)  # softmax applied a second time

result1 = tf.nn.softmax_cross_entropy_with_logits(labels=labels, logits=logits)

result2 = tf.nn.softmax_cross_entropy_with_logits(labels=labels, logits=logits_scaled)

result3 = -tf.reduce_sum(labels*tf.log(logits_scaled), 1)  # cross entropy written out by hand

with tf.Session() as sess:

    print ("scaled=",sess.run(logits_scaled))

    print ("scaled2=",sess.run(logits_scaled2))  # after a second softmax, the probability distribution changes

    print ("rel1=",sess.run(result1),"\n")  # the correct usage: pass the raw logits

    print ("rel2=",sess.run(result2),"\n")  # passing already-softmaxed values computes the loss of a second softmax, so the result is wrong

    print ("rel3=",sess.run(result3))

('scaled=', array([[0.01791432, 0.00399722, 0.97808844],

[0.04980332, 0.04506391, 0.90513283]], dtype=float32))

('scaled2=', array([[0.21747023, 0.21446465, 0.56806517],

[0.2300214 , 0.22893383, 0.5410447 ]], dtype=float32))

('rel1=', array([0.02215516, 3.0996735 ], dtype=float32), '\n')

('rel2=', array([0.56551915, 1.4743223 ], dtype=float32), '\n')

('rel3=', array([0.02215518, 3.0996735 ], dtype=float32))

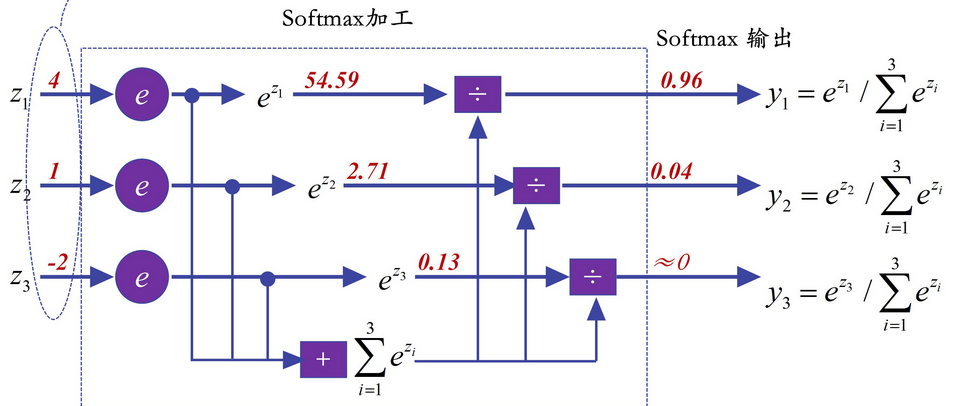

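Computing softmax for the first row of logits, [2, 0.5, 6]:

$$\left(\frac{e^{2}}{e^{2}+e^{0.5}+e^{6}},\ \frac{e^{0.5}}{e^{2}+e^{0.5}+e^{6}},\ \frac{e^{6}}{e^{2}+e^{0.5}+e^{6}}\right) = (0.01791432,\ 0.00399722,\ 0.97808844)$$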
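The softmax calculation can be checked by hand without TensorFlow. Below is a minimal sketch in plain Python; the helper name `softmax` is chosen here for illustration, and subtracting the row maximum is a common numerical-stability step added as an assumption (it does not change the result). Because it runs in float64 rather than float32, the values should match the printed `scaled` output only to about six decimal places.

```python
import math

def softmax(row):
    """Return the softmax of a list of numbers (illustrative helper)."""
    # Subtracting the max leaves the result unchanged but avoids overflow.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

logits = [[2, 0.5, 6], [0.1, 0, 3]]

# One probability distribution per row; each row sums to 1.
scaled = [softmax(row) for row in logits]
for row in scaled:
    print([round(p, 6) for p in row])
```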

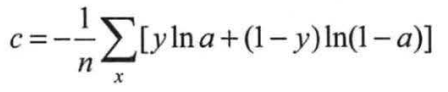

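However, when I computed the cross entropy with this formula, my result did not match the output. Looking at result3 = -tf.reduce_sum(labels*tf.log(logits_scaled),1), is the loss simply the log taken directly? For one-hot labels it is: in -Σ y_i·log(p_i) only the term for the true class survives, so the loss for each sample is the negative log of the probability predicted for its true class. For the first sample that is -log(0.97808844) ≈ 0.0222, which matches rel1 and rel3.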
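To make the comparison between rel1, rel2 and rel3 concrete, here is a small plain-Python sketch that recomputes them from the same labels and logits. The helpers `softmax` and `cross_entropy` are illustrative names, not TensorFlow functions, and the computation runs in float64, so agreement with the float32 output above is expected only to roughly five or six decimal places.

```python
import math

def softmax(row):
    """Softmax of a list of numbers (illustrative helper)."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(label_row, prob_row):
    """-sum_i y_i * log(p_i) for one sample; zero-label terms contribute nothing."""
    return -sum(y * math.log(p) for y, p in zip(label_row, prob_row) if y != 0)

labels = [[0, 0, 1], [0, 1, 0]]
logits = [[2, 0.5, 6], [0.1, 0, 3]]

# Softmax applied once, then cross entropy: corresponds to rel1 and rel3.
probs = [softmax(row) for row in logits]
rel3 = [cross_entropy(y, p) for y, p in zip(labels, probs)]

# Softmax applied twice, then cross entropy: corresponds to the wrong rel2.
probs_twice = [softmax(row) for row in probs]
rel2 = [cross_entropy(y, p) for y, p in zip(labels, probs_twice)]

print("rel3 =", rel3)
print("rel2 =", rel2)
```

The second softmax flattens the distribution (0.978 drops to about 0.568 for the first sample), which is why rel2 overstates the loss for the first sample and understates it for the second.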

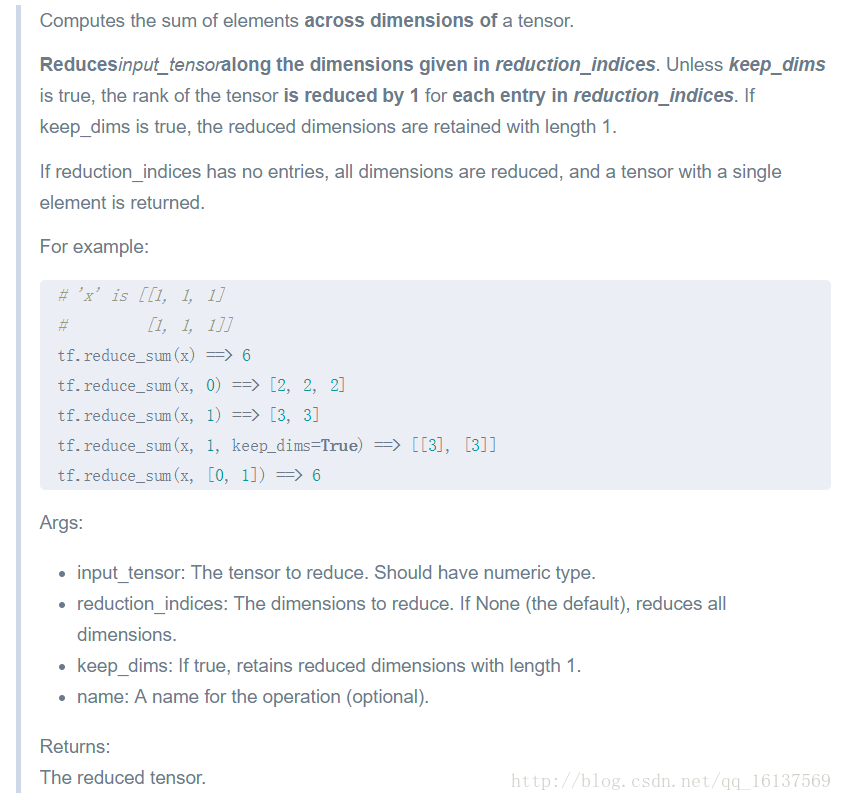

2. tf.reduce_sum
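In result3, the second argument `1` passed to tf.reduce_sum is the axis: the sum runs across the classes of each sample, so one loss value comes back per row. The axis behavior for a 2-D input can be sketched in plain Python as follows. The function `reduce_sum` below is a hypothetical stand-in written for illustration, not TensorFlow's implementation, and it only handles 2-D lists.

```python
def reduce_sum(matrix, axis=None):
    """Pure-Python stand-in for summing a 2-D list along an axis."""
    if axis is None:
        # No axis: reduce over every element, giving a single number.
        return sum(sum(row) for row in matrix)
    if axis == 0:
        # Axis 0: sum down each column, giving one value per column.
        return [sum(col) for col in zip(*matrix)]
    if axis == 1:
        # Axis 1: sum across each row, giving one value per row.
        return [sum(row) for row in matrix]
    raise ValueError("axis must be None, 0, or 1 for a 2-D input")

x = [[1, 2, 3], [4, 5, 6]]
print(reduce_sum(x))      # 21
print(reduce_sum(x, 0))   # [5, 7, 9]
print(reduce_sum(x, 1))   # [6, 15]
```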
