Docker from Beginner to Expert <4> - Network Management and Image Layers

Docker network management

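Docker currently supports the following network types: bridge, host, overlay, macvlan, none and container.

- bridge: the most common mode, and already sufficient for the basic needs of Docker containers. However, it reaches the outside world through NAT, which adds complexity to communication and brings many limitations in complex scenarios.

- host: Docker does not create a separate network namespace. Processes in the container run in the host's network environment, i.e. the container and the host share one network namespace and use the host's network interfaces, IP addresses and ports. Other aspects of the container, such as the file system and the process list, remain isolated from the host. Host mode removes the address translation between the container and the outside world: the container communicates directly using the host's IP and incurs no extra overhead from network virtualization. On the other hand, the host driver weakens network-level isolation between containers and between containers and the host, which leads to contention and conflicts over network resources (for example, two containers cannot listen on the same port). The host driver is therefore best suited to small container deployments.

- overlay: this driver uses the IETF-standard VXLAN encapsulation in the SDN controller model, which is widely regarded as the VXLAN variant best suited to large-scale cloud virtualization environments. In the classic standalone setup it needs an additional key-value store such as Consul, etcd or ZooKeeper, and the Docker daemon must be started with extra options pointing at that store. (Overlay networks created in Docker Swarm mode use Swarm's built-in store instead.)

- macvlan: solves cross-host container communication. One physical interface can be assigned multiple MAC addresses, and performance is high, but IP addresses have to be configured manually, which makes management cumbersome.

- none: the container gets its own network namespace, but Docker performs no network configuration for it. Apart from the loopback interface that every network namespace has, the container has no interfaces, IP addresses or routes; the user has to add interfaces and configure IPs. Without such configuration the container has no usable network, but the advantage is clear: it gives users maximum freedom to customize the container's network environment.

- container: specified with --net=container:<name or ID>.

  A container created in this mode shares the network of an existing container instead of the host's. The new container does not create its own interface or configure its own IP; it shares the IP address and port range of the specified container, so its ports must not conflict with that container's ports. Everything other than the network, such as the file system and process information, stays isolated. Processes in the two containers can communicate over the lo interface as well as through the container IP.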

Bridge network

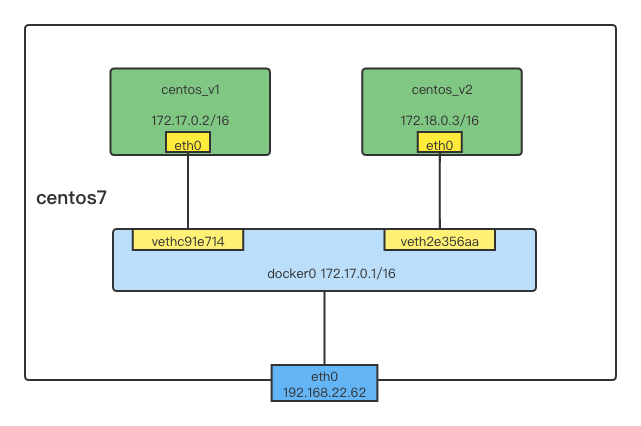

Bridge is the default mode. Let's create two containers attached to this network:

```
[root@vm1 ~]# docker run -it -d --name=centos_v1 centos:latest
42db03964da7ab2c9e636de922c31999baa124aad2ddf36465ef955897dc1257
[root@vm1 ~]# docker run -it -d --name=centos_v2 centos:latest
63624337bafda78fa42cee5879fc6aa41efcf2272342cfd75b8a3ab4c1594e79
[root@vm1 ~]# docker ps
CONTAINER ID   IMAGE           COMMAND       CREATED          STATUS          PORTS     NAMES
63624337bafd   centos:latest   "/bin/bash"   7 seconds ago    Up 5 seconds              centos_v2
42db03964da7   centos:latest   "/bin/bash"   15 seconds ago   Up 13 seconds             centos_v1
```

Check the virtual interfaces on the host (every container adds one veth interface on the host side):

```
[root@vm1 ~]# ifconfig
docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.17.0.1  netmask 255.255.0.0  broadcast 172.17.255.255
        inet6 fe80::42:53ff:fe94:de33  prefixlen 64  scopeid 0x20<link>
        ether 02:42:53:94:de:33  txqueuelen 0  (Ethernet)
        RX packets 60237  bytes 3943871 (3.7 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 83973  bytes 120790154 (115.1 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 192.168.22.62  netmask 255.255.255.0  broadcast 192.168.22.255
        inet6 fe80::5054:ff:fe8b:a835  prefixlen 64  scopeid 0x20<link>
        ether 52:54:00:8b:a8:35  txqueuelen 1000  (Ethernet)
        RX packets 197353580  bytes 17753564744 (16.5 GiB)
        RX errors 0  dropped 6794480  overruns 0  frame 0
        TX packets 1145738  bytes 92596328 (88.3 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        inet 127.0.0.1  netmask 255.0.0.0
        inet6 ::1  prefixlen 128  scopeid 0x10<host>
        loop  txqueuelen 1000  (Local Loopback)
        RX packets 91  bytes 10083 (9.8 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 91  bytes 10083 (9.8 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

veth2e356aa: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet6 fe80::d8e9:87ff:fec4:212d  prefixlen 64  scopeid 0x20<link>
        ether da:e9:87:c4:21:2d  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 8  bytes 656 (656.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

vethc91e714: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet6 fe80::2cf2:88ff:fecd:2580  prefixlen 64  scopeid 0x20<link>
        ether 2e:f2:88:cd:25:80  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 8  bytes 656 (656.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
```

List the networks and inspect the containers attached to the bridge network:

```
[root@vm1 ~]# docker network ls
NETWORK ID     NAME      DRIVER    SCOPE
2dc4b3947fdc   bridge    bridge    local
92b5d4da70d0   host      host      local
dd8e8ec01fe1   none      null      local
[root@vm1 ~]# docker network inspect bridge
[
    {
        "Name": "bridge",
        "Id": "2dc4b3947fdc4a47913d02c05333163f62fe931597c6d611ad8fa4b0901ef27b",
        "Created": "2021-07-07T17:10:15.557687349+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": null,
            "Config": [
                {
                    "Subnet": "172.17.0.0/16",
                    "Gateway": "172.17.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "42db03964da7ab2c9e636de922c31999baa124aad2ddf36465ef955897dc1257": {
                "Name": "centos_v1",
                "EndpointID": "c5aca4516c375550398b88e93b2d2e216cb39e87307a2f505361670bbba66c1a",
                "MacAddress": "02:42:ac:11:00:02",
                "IPv4Address": "172.17.0.2/16",
                "IPv6Address": ""
            },
            "63624337bafda78fa42cee5879fc6aa41efcf2272342cfd75b8a3ab4c1594e79": {
                "Name": "centos_v2",
                "EndpointID": "77de38c258ec5643cd37ba1f56fb068c2978b43087629bd368b3f9e79e2a844a",
                "MacAddress": "02:42:ac:11:00:03",
                "IPv4Address": "172.17.0.3/16",
                "IPv6Address": ""
            }
        },
        "Options": {
            "com.docker.network.bridge.default_bridge": "true",
            "com.docker.network.bridge.enable_icc": "true",
            "com.docker.network.bridge.enable_ip_masquerade": "true",
            "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
            "com.docker.network.bridge.name": "docker0",
            "com.docker.network.driver.mtu": "1500"
        },
        "Labels": {}
    }
]
```
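The Containers section above is what ties each container to its address on docker0. As a minimal sketch, assuming Python 3 and a trimmed copy of that output (container IDs shortened, unrelated fields dropped), the mapping can be extracted with the standard json module. In practice the string would come from running docker network inspect bridge; the function name container_addresses is hypothetical.

```python
import json

# Trimmed copy of the `docker network inspect bridge` output above
# (IDs shortened, only the fields used below kept).
INSPECT_OUTPUT = """
[
  {
    "Name": "bridge",
    "IPAM": {"Config": [{"Subnet": "172.17.0.0/16", "Gateway": "172.17.0.1"}]},
    "Containers": {
      "42db03964da7": {"Name": "centos_v1", "MacAddress": "02:42:ac:11:00:02",
                       "IPv4Address": "172.17.0.2/16"},
      "63624337bafd": {"Name": "centos_v2", "MacAddress": "02:42:ac:11:00:03",
                       "IPv4Address": "172.17.0.3/16"}
    }
  }
]
"""


def container_addresses(inspect_json):
    """Map container name -> IPv4 address (prefix length stripped)."""
    networks = json.loads(inspect_json)  # inspect always returns a JSON array
    result = {}
    for net in networks:
        for endpoint in net.get("Containers", {}).values():
            ip_with_prefix = endpoint["IPv4Address"]  # e.g. "172.17.0.2/16"
            result[endpoint["Name"]] = ip_with_prefix.split("/")[0]
    return result


if __name__ == "__main__":
    for name, ip in sorted(container_addresses(INSPECT_OUTPUT).items()):
        print(f"{name}\t{ip}")
```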

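Enter each container with docker exec -it centos_v1 /bin/bash (or centos_v2) and test connectivity: the two containers can reach each other, but cannot reach the Internet.

If the containers cannot reach the external network, check whether IP packet forwarding is enabled on the Linux host and whether firewall rules are dropping the traffic: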

```
sysctl net.ipv4.conf.all.forwarding=1
sudo iptables -P FORWARD ACCEPT
```

Now look at the host's iptables NAT rules again:

```
[root@vm1 ~]# iptables -t nat -L -n --line-num
Chain PREROUTING (policy ACCEPT)
num  target     prot opt source               destination
1    DOCKER     all  --  0.0.0.0/0            0.0.0.0/0            ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT)
num  target     prot opt source               destination

Chain OUTPUT (policy ACCEPT)
num  target     prot opt source               destination
1    DOCKER     all  --  0.0.0.0/0           !127.0.0.0/8          ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT)
num  target     prot opt source               destination
# all traffic leaving this subnet is masqueraded as the host's own address
1    MASQUERADE  all  --  172.17.0.0/16        0.0.0.0/0

Chain DOCKER (2 references)
num  target     prot opt source               destination
1    RETURN     all  --  0.0.0.0/0            0.0.0.0/0
```

Containers accessing the external network

How it works:

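Here docker0 can be thought of as a switch and the veth interfaces as its ports; multiple containers or virtual machines can be attached to it. These ports work at layer 2, so they need no IP address. docker0 acts as a bridge that forwards frames for the containers attached to it, which lets Docker containers on the same host communicate with each other. docker0 is also the containers' default gateway.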
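To make the switch analogy concrete, here is a toy model of a learning bridge in Python. It is a simplification and not how the kernel bridge is implemented: it ignores STP, MAC aging and the docker0 interface itself. The class name is hypothetical, and which veth belongs to which container in the usage below is an assumption made for the example.

```python
class LearningBridge:
    """Toy layer-2 learning bridge (forwarding only: no STP, no MAC aging)."""

    BROADCAST = "ff:ff:ff:ff:ff:ff"

    def __init__(self, ports):
        self.ports = set(ports)  # e.g. the host-side veth interfaces
        self.mac_table = {}      # MAC address -> port it was last seen on

    def receive(self, in_port, src_mac, dst_mac):
        """Return the set of ports a frame is forwarded out of."""
        self.mac_table[src_mac] = in_port  # learn where the sender is
        out_port = self.mac_table.get(dst_mac)
        if dst_mac == self.BROADCAST or out_port is None:
            # broadcast or unknown destination: flood to every other port
            return self.ports - {in_port}
        if out_port == in_port:
            return set()  # destination is behind the same port: filter
        return {out_port}


if __name__ == "__main__":
    # Assumed mapping: veth2e356aa -> centos_v1, vethc91e714 -> centos_v2
    br = LearningBridge(["veth2e356aa", "vethc91e714"])
    print(br.receive("veth2e356aa", "02:42:ac:11:00:02", "02:42:ac:11:00:03"))
    print(br.receive("vethc91e714", "02:42:ac:11:00:03", "02:42:ac:11:00:02"))
```

The first frame is flooded because the bridge has not yet seen the destination MAC; the reply is forwarded to a single port because the first frame taught the bridge where centos_v1 lives.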

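When the Docker service starts, it automatically creates an interface called docker0. Every time a container is created, Docker creates a veth pair: one end becomes eth0 inside the container, and the other end stays on the host and is attached to docker0. Under normal conditions containers can communicate with the external network. Each container is assigned an IP address, and its gateway is the address of docker0 on the host (172.17.0.1 here). Docker then configures iptables rules that translate the source addresses of the container subnet 172.17.0.0/16 into the host's local IP for outbound traffic. Note that the Docker service enables kernel packet forwarding (net.ipv4.ip_forward = 1) every time it starts; without it, containers cannot communicate with the outside world.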
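The MASQUERADE rule can be pictured as a small source-NAT table. This is a sketch under simplifying assumptions: the class name, the starting port 40000 and the destination 203.0.113.10 (a documentation address) are hypothetical. The model also always allocates a new public port, whereas the kernel's connection tracking keeps the original source port when it is free.

```python
import ipaddress


class Masquerade:
    """Toy source NAT modelled on: MASQUERADE all -- 172.17.0.0/16 0.0.0.0/0"""

    def __init__(self, subnet, public_ip, first_port=40000):
        self.subnet = ipaddress.ip_network(subnet)
        self.public_ip = public_ip
        self.next_port = first_port  # hypothetical start of the translated-port pool
        self.conntrack = {}          # public port -> (container ip, container port)

    def outbound(self, src_ip, src_port, dst_ip, dst_port):
        """Rewrite the source of a packet leaving the host if the rule matches."""
        if ipaddress.ip_address(src_ip) not in self.subnet:
            return (src_ip, src_port, dst_ip, dst_port)  # not from a container: untouched
        public_port = self.next_port
        self.next_port += 1
        self.conntrack[public_port] = (src_ip, src_port)  # remember for the reply
        return (self.public_ip, public_port, dst_ip, dst_port)

    def inbound(self, dst_port):
        """Find which container a reply arriving at public_ip:dst_port belongs to."""
        return self.conntrack.get(dst_port)


if __name__ == "__main__":
    nat = Masquerade("172.17.0.0/16", "192.168.22.62")  # vm1's eth0 address
    print(nat.outbound("172.17.0.2", 51000, "203.0.113.10", 80))
    print(nat.inbound(40000))
```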

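External access to containers

This is done with port mapping, which maps a host port into the container, as covered earlier:

```
docker run -d -p <host_port>:<container_port> nginx:1.20
```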

Let's see how the iptables rules change after starting a container with a port mapping:

```
[root@vm1 ~]# iptables -t nat -L -n --line-num
Chain PREROUTING (policy ACCEPT)
num  target     prot opt source               destination
1    DOCKER     all  --  0.0.0.0/0            0.0.0.0/0            ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT)
num  target     prot opt source               destination

Chain OUTPUT (policy ACCEPT)
num  target     prot opt source               destination
1    DOCKER     all  --  0.0.0.0/0           !127.0.0.0/8          ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT)
num  target     prot opt source               destination
1    MASQUERADE  all  --  172.17.0.0/16        0.0.0.0/0

Chain DOCKER (2 references)
num  target     prot opt source               destination
1    RETURN     all  --  0.0.0.0/0            0.0.0.0/0
[root@vm1 ~]# docker run -d -p 80:80 --name=nginx nginx:1.20
16fd72bdd37c646cbe74b2af364e8e76eda8a15ac91eb106af45910e06a5986f
[root@vm1 ~]# iptables -t nat -L -n --line-num
Chain PREROUTING (policy ACCEPT)
num  target     prot opt source               destination
1    DOCKER     all  --  0.0.0.0/0            0.0.0.0/0            ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT)
num  target     prot opt source               destination

Chain OUTPUT (policy ACCEPT)
num  target     prot opt source               destination
1    DOCKER     all  --  0.0.0.0/0           !127.0.0.0/8          ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT)
num  target     prot opt source               destination
1    MASQUERADE  all  --  172.17.0.0/16        0.0.0.0/0
2    MASQUERADE  tcp  --  172.17.0.4           172.17.0.4           tcp dpt:80

Chain DOCKER (2 references)
num  target     prot opt source               destination
1    RETURN     all  --  0.0.0.0/0            0.0.0.0/0
2    DNAT       tcp  --  0.0.0.0/0            0.0.0.0/0            tcp dpt:80 to:172.17.0.4:80   # Docker automatically added a DNAT rule for the port mapping
```
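The DNAT rules in the DOCKER chain are effectively the host's port-mapping table (docker port nginx reports the same mapping directly). A short sketch, assuming the listing format shown above, extracts them with a regular expression. The function name port_mappings is hypothetical, and the parsing is only as robust as that output format.

```python
import re

# The DOCKER chain from the second listing above (after `docker run -p 80:80`).
DOCKER_CHAIN = """\
1    RETURN     all  --  0.0.0.0/0            0.0.0.0/0
2    DNAT       tcp  --  0.0.0.0/0            0.0.0.0/0            tcp dpt:80 to:172.17.0.4:80
"""

# protocol, host port (dpt:) and the "to:IP:PORT" target of a DNAT line
DNAT_RE = re.compile(
    r"DNAT\s+(?P<proto>\w+)\s.*?dpt:(?P<host_port>\d+)\s+to:(?P<ip>[\d.]+):(?P<port>\d+)"
)


def port_mappings(chain_listing):
    """Return (protocol, host_port, container_ip, container_port) per DNAT rule."""
    mappings = []
    for line in chain_listing.splitlines():
        match = DNAT_RE.search(line)
        if match:
            mappings.append(
                (match["proto"], int(match["host_port"]), match["ip"], int(match["port"]))
            )
    return mappings


if __name__ == "__main__":
    for proto, host_port, ip, port in port_mappings(DOCKER_CHAIN):
        print(f"host {proto}/{host_port} -> {ip}:{port}")
```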

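NAT is known to have many limitations under high concurrency. In Kubernetes, kube-proxy originally relied on iptables NAT as well, and later versions added an IPVS mode, because iptables was designed primarily as a firewall rather than as a high-performance load balancer. We will cover this later.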

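Docker network configuration

On Linux, the brctl command (from the bridge-utils package) can be used to view and manage bridges, for example to list the Linux bridges on the host and their ports.

We have just created three containers: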

```
[root@vm1 ~]# brctl show
bridge name     bridge id               STP enabled     interfaces
docker0         8000.02425394de33       no              veth421f7de
                                                        veth72236dd
                                                        vethbd1c7a6
```

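The docker0 bridge is created automatically when the Docker daemon starts, and its IP defaults to 172.17.0.1/16. Containers created afterwards pick an unused IP from the docker0 subnet and are attached to the docker0 bridge. Docker provides the following options for customizing docker0:

❏ --bip=CIDR: sets the IP address and subnet of docker0 in CIDR format, e.g. 192.168.100.1/24. This option only configures docker0 and has no effect on other custom bridges. It only works if docker0 does not yet exist on the host, or if docker0 already exists and its IP matches the one given by the option.

❏ --fixed-cidr=CIDR: restricts the range from which containers get IPs. By default containers get IPs from the whole subnet of the Docker bridge (docker0, or the bridge specified with --bridge); this option narrows the range to a sub-range, so it must lie within the bridge's subnet. For example, if docker0 is 172.17.0.1/16 and --fixed-cidr is set to 172.17.1.1/24, containers get IPs in the range 172.17.1.1 to 172.17.1.254.

❏ --mtu=BYTES: sets the maximum transmission unit (MTU) of docker0.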

Edit the configuration file /etc/docker/daemon.json, then restart the Docker service:

```
[root@vm1 ~]# cat /etc/docker/daemon.json
{
    "registry-mirrors": ["https://registry.docker-cn.com"],
    "bip": "192.168.1.5/24",
    "fixed-cidr": "192.168.1.5/25",
    "fixed-cidr-v6": "2001:db8::/64",
    "mtu": 1500,
    "default-gateway": "192.168.1.1",
    "default-gateway-v6": "2001:db8:abcd::89",
    "dns": ["114.114.114.114","100.100.2.138"]
}
```
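Before restarting Docker, you may want to check that fixed-cidr really lies inside the bip subnet and see which addresses containers can receive. The sketch below does only the subnet arithmetic with Python's ipaddress module. The function name container_pool is hypothetical, and Docker's IPAM may reserve further addresses (for example the one set as default-gateway), so the real pool can be slightly smaller.

```python
import ipaddress
import json

# The bridge-related keys of the daemon.json shown above.
DAEMON_JSON = """
{
  "bip": "192.168.1.5/24",
  "fixed-cidr": "192.168.1.5/25"
}
"""


def container_pool(bip, fixed_cidr=None):
    """Return (first, last, count) of addresses containers may get on docker0.

    Only subnet arithmetic is modelled; Docker's IPAM may reserve more addresses.
    """
    bridge = ipaddress.ip_interface(bip)  # docker0's own address plus its subnet
    # fixed-cidr may be written with host bits set (e.g. 192.168.1.5/25), hence strict=False
    pool = ipaddress.ip_network(fixed_cidr, strict=False) if fixed_cidr else bridge.network
    if not pool.subnet_of(bridge.network):
        raise ValueError(f"fixed-cidr {pool} is outside the bridge subnet {bridge.network}")
    hosts = [h for h in pool.hosts() if h != bridge.ip]  # docker0 already uses bridge.ip
    return str(hosts[0]), str(hosts[-1]), len(hosts)


if __name__ == "__main__":
    cfg = json.loads(DAEMON_JSON)
    print(container_pool(cfg["bip"], cfg["fixed-cidr"]))
```

Called with 172.17.0.1/16 and 172.17.1.1/24, the same function yields the 172.17.1.1 to 172.17.1.254 range given in the --fixed-cidr description.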

After restarting Docker, create a container to verify the settings:

```
[root@vm1 ~]# docker run -d -it --name=centos8 centos
6d781aa8fea24489239b9238c643533727724f200c43dbeac4896d4fcb554f17
[root@vm1 ~]# docker ps
CONTAINER ID   IMAGE     COMMAND       CREATED          STATUS          PORTS     NAMES
6d781aa8fea2   centos    "/bin/bash"   37 seconds ago   Up 35 seconds             centos8
[root@vm1 ~]# docker exec -it centos8 /bin/bash
[root@6d781aa8fea2 /]# ip ro sh
default via 192.168.1.1 dev eth0
192.168.1.0/24 dev eth0 proto kernel scope link src 192.168.1.2
[root@6d781aa8fea2 /]# cat /etc/resolv.conf
nameserver 114.114.114.114
nameserver 100.100.2.138
```

macvlan mode

Solving cross-host container communication

| Hostname | IP | OS | Docker version |
| --- | --- | --- | --- |
| vm0 | 192.168.22.61 | CentOS Linux release 7.9.2009 (Core) | 20.10.7 |
| vm1 | 192.168.22.62 | CentOS Linux release 7.9.2009 (Core) | 20.10.7 |

1. Create a macvlan network on each of the two hosts

vm0

```
[root@vm0 ~]# docker network create -d macvlan \
> --subnet=192.168.22.0/24 \
> --gateway=192.168.22.1 \
> -o parent=eth0.50 macvlan50
890e11d4ab3c62631d7b88bf08947f09b6ecf5ca604b535a2948f00a7e55a1d0
[root@vm0 ~]# docker network ls
NETWORK ID     NAME        DRIVER    SCOPE
cce04c170c19   bridge      bridge    local
9fa52e2faeeb   host        host      local
890e11d4ab3c   macvlan50   macvlan   local
0504722d6345   none        null      local
```

vm1

```
[root@vm1 ~]# docker network create -d macvlan \
> --subnet=192.168.22.0/24 \
> --gateway=192.168.22.1 \
> -o parent=eth0.50 macvlan50
cc27fdead506945cdb1289a54a80256f1fb1941f97898f00d6f45732d2b4f029
[root@vm1 ~]# docker network ls
NETWORK ID     NAME        DRIVER    SCOPE
2dc4b3947fdc   bridge      bridge    local
92b5d4da70d0   host        host      local
cc27fdead506   macvlan50   macvlan   local
dd8e8ec01fe1   none        null      local
```

2. On each host, create a container attached to this network with a fixed IP address

vm0

```
[root@vm0 ~]# docker run -it -d --network=macvlan50 --ip=192.168.22.200 --name=centos_vm0_v1 centos
34b11ceed600e143dfa8c43bb25773f9685e7d15858e3c1037ed6626819d30f8
[root@vm0 ~]# docker ps
CONTAINER ID   IMAGE     COMMAND       CREATED         STATUS         PORTS     NAMES
34b11ceed600   centos    "/bin/bash"   8 seconds ago   Up 7 seconds             centos_vm0_v1
```

vm1

```
[root@vm1 ~]# docker run -it -d --network=macvlan50 --ip=192.168.22.201 --name=centos_vm1_v1 centos
53cb8d12e20b8d4aaf2e23ed683496e81597cb0158d7b8afd3d94975ec5a2226
[root@vm1 ~]# docker network ls
NETWORK ID     NAME        DRIVER    SCOPE
2dc4b3947fdc   bridge      bridge    local
92b5d4da70d0   host        host      local
cc27fdead506   macvlan50   macvlan   local
dd8e8ec01fe1   none        null      local
[root@vm1 ~]# docker ps
CONTAINER ID   IMAGE     COMMAND       CREATED          STATUS          PORTS     NAMES
53cb8d12e20b   centos    "/bin/bash"   16 seconds ago   Up 14 seconds             centos_vm1_v1
```

3. Test whether the containers on the two hosts can ping each other

vm0

```
[root@vm0 ~]# docker exec -it centos_vm0_v1 /bin/bash
[root@34b11ceed600 /]# ping 192.168.22.201
PING 192.168.22.201 (192.168.22.201) 56(84) bytes of data.
64 bytes from 192.168.22.201: icmp_seq=1 ttl=64 time=0.624 ms
64 bytes from 192.168.22.201: icmp_seq=2 ttl=64 time=0.379 ms
^C
--- 192.168.22.201 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1ms
rtt min/avg/max/mdev = 0.379/0.501/0.624/0.124 ms
[root@34b11ceed600 /]# ping 192.168.22.1
PING 192.168.22.1 (192.168.22.1) 56(84) bytes of data.
^C
--- 192.168.22.1 ping statistics ---
1 packets transmitted, 0 received, 100% packet loss, time 0ms
[root@34b11ceed600 /]# ping www.baidu.com
ping: www.baidu.com: Name or service not known
```

vm1

```
[root@vm1 ~]# docker exec -it centos_vm1_v1 /bin/bash
[root@53cb8d12e20b /]# ping 192.168.22.200
PING 192.168.22.200 (192.168.22.200) 56(84) bytes of data.
64 bytes from 192.168.22.200: icmp_seq=1 ttl=64 time=0.346 ms
64 bytes from 192.168.22.200: icmp_seq=2 ttl=64 time=0.361 ms
^C
--- 192.168.22.200 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1ms
rtt min/avg/max/mdev = 0.346/0.353/0.361/0.020 ms
[root@53cb8d12e20b /]# ping 192.168.22.1
PING 192.168.22.1 (192.168.22.1) 56(84) bytes of data.
From 192.168.22.201 icmp_seq=1 Destination Host Unreachable
From 192.168.22.201 icmp_seq=2 Destination Host Unreachable
From 192.168.22.201 icmp_seq=3 Destination Host Unreachable
From 192.168.22.201 icmp_seq=4 Destination Host Unreachable
^C
--- 192.168.22.1 ping statistics ---
4 packets transmitted, 0 received, +4 errors, 100% packet loss, time 1002ms
pipe 4
[root@53cb8d12e20b /]# ping 192.168.22.62
PING 192.168.22.62 (192.168.22.62) 56(84) bytes of data.
^C
--- 192.168.22.62 ping statistics ---
2 packets transmitted, 0 received, 100% packet loss, time 1ms
```

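The containers on the two hosts can reach each other, but they cannot communicate with the hosts or with the external network.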
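Because macvlan addresses are assigned by hand on every host, it helps to draw them from one shared list so that containers on different hosts never collide. Below is a minimal sketch with a hypothetical helper, pick_static_ips. It only knows about the addresses passed in as reserved (here the gateway and the two hosts from the table above) and cannot detect other devices already using an address on the LAN.

```python
import ipaddress


def pick_static_ips(subnet, reserved, count, start=None):
    """Hypothetical helper: choose `count` unused addresses in `subnet`.

    `reserved` must list every address already in use (gateway, hosts, other
    containers); nothing here probes the network.
    """
    taken = {ipaddress.ip_address(a) for a in reserved}
    first = ipaddress.ip_address(start) if start else None
    picked = []
    for host in ipaddress.ip_network(subnet).hosts():
        if first is not None and host < first:
            continue  # stay inside the range set aside for containers
        if host in taken:
            continue
        picked.append(str(host))
        if len(picked) == count:
            return picked
    raise ValueError(f"only {len(picked)} free addresses in {subnet}, need {count}")


if __name__ == "__main__":
    reserved = ["192.168.22.1", "192.168.22.61", "192.168.22.62"]
    # one address per host, each then passed to `docker run --ip=...`
    print(pick_static_ips("192.168.22.0/24", reserved, 2, start="192.168.22.200"))
```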

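Host network

With the host network, the container shares the host's network namespace. Host networking is useful for optimizing performance and for containers that need to handle a large range of ports, because it requires no network address translation (NAT). It is only supported on Linux hosts. Only the network interfaces, IPs and ports are shared; the file system, processes and so on remain isolated.

In host mode Docker does not create a separate network namespace; simply pass --network host: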

```
[root@vm0 ~]# docker run -d -it --name=centos_host --network=host centos
08178db5df01f24ff411ad15fa578d4c6fdad353b7b85481165b30cc14488948
[root@vm0 ~]# docker ps
CONTAINER ID   IMAGE     COMMAND       CREATED         STATUS         PORTS     NAMES
08178db5df01   centos    "/bin/bash"   4 seconds ago   Up 2 seconds             centos_host
[root@vm0 ~]# ip ro sh
default via 192.168.22.1 dev eth0 proto static metric 100
172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1
192.168.22.0/24 dev eth0 proto kernel scope link src 192.168.22.61 metric 100
[root@vm0 ~]# docker exec -it centos_host /bin/bash
[root@vm0 /]# ip ro sh
default via 192.168.22.1 dev eth0 proto static metric 100
172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1
192.168.22.0/24 dev eth0 proto kernel scope link src 192.168.22.61 metric 100
```

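none and overlay

none: Docker performs no network configuration at all for the container; enable it with --network=none.

overlay: a network mode well suited to large-scale cloud computing. We won't dig into it here; later we will use the ready-made network plugins in Kubernetes to solve network communication, and cover them in depth then.

container mode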

```
$ docker run -it -d --name nginx-web1 -p 81:80 --net=bridge nginx:1.21.5
9cfd1e9d5947d6194d5ec4e7245c6a05a8f3e178e9602d2123392edfb1247c57
# php-server joins the network namespace (IP and ports) of nginx-web1
$ docker run -it -d --name php-server --net=container:nginx-web1 php:7.3.33-fpm
```

Docker image layers

For details, see: https://docs.docker.com/storage/storagedriver/

What is an image?

Image layering

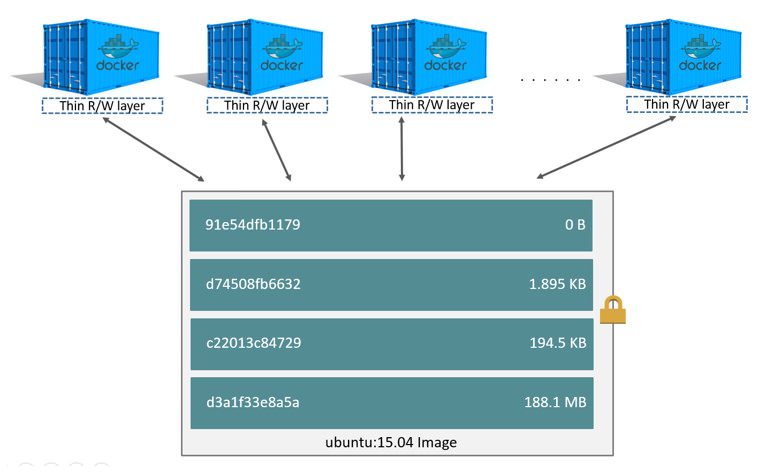

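rootfs is the file system visible to the processes inside a Docker container when it starts, i.e. the container's root directory. A rootfs usually contains the file system an operating system needs to run, such as the typical Unix-like directory tree (/dev, /proc, /bin, /etc, /lib, /usr, /tmp) plus the configuration files and tools needed to run the container. When a traditional Linux kernel boots, it first mounts a read-only rootfs, checks its integrity, and then switches it to read-write mode. Docker follows the same approach when the Docker daemon mounts a container's rootfs: the rootfs is mounted read-only. Once it is mounted, union mount technology is used to mount a read-write layer on top of the existing read-only rootfs. The read-write layer thus sits at the very top of the container's file system, possibly with several read-only layers union-mounted beneath it. Only when the file system changes while the container runs are the changed files written to the read-write layer, where they hide the old versions in the read-only layers.

Docker images are built in layers: each image consists of a series of image layers. This layered structure is a key reason Docker images are so lightweight. When a file in the container's image is modified, only the topmost read-write layer changes and the contents of the lower file systems are never overwritten. The original version of the file still exists in its read-only layer, but is hidden by the new version in the read-write layer. When docker commit saves the modified container file system as a new image, only the files updated in the top read-write layer are stored. Layering thus lets different images share image layers.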
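The lookup rules described above (search from the top layer down, let upper versions hide lower ones, write only to the top layer) can be illustrated with a toy in-memory model. It is a sketch, not overlay2: each layer is a Python dict mapping paths to contents, the file contents are made up, and deletions are represented by a whiteout marker, similar in spirit to the whiteout files overlay2 uses.

```python
WHITEOUT = object()  # marker meaning "deleted in an upper layer"


class UnionFS:
    """Toy union mount: read-only lower layers plus one writable upper layer."""

    def __init__(self, *lower_layers):
        # lower layers are given bottom-first, like RootFS.Layers in `docker image inspect`
        self.lower = [dict(layer) for layer in lower_layers]
        self.upper = {}  # the container's read-write layer

    def read(self, path):
        # search from the top down; the first hit hides older versions below it
        for layer in [self.upper, *reversed(self.lower)]:
            if path in layer:
                if layer[path] is WHITEOUT:
                    raise FileNotFoundError(path)
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path, data):
        self.upper[path] = data  # copy-on-write: lower layers are never modified

    def delete(self, path):
        self.upper[path] = WHITEOUT  # hide the file without touching lower layers


if __name__ == "__main__":
    fs = UnionFS({"/etc/os-release": "base version"})  # made-up file contents
    fs.write("/etc/os-release", "modified in container")
    print(fs.read("/etc/os-release"), "|", fs.lower[0]["/etc/os-release"])
```

In this model, what docker commit would save corresponds to the contents of fs.upper.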

Let's look at the nginx image we built earlier on top of the centos image, and compare it with the base image:

```
[root@vm1 ~]# docker image history centos
IMAGE          CREATED        CREATED BY                                      SIZE      COMMENT
300e315adb2f   7 months ago   /bin/sh -c #(nop)  CMD ["/bin/bash"]            0B
<missing>      7 months ago   /bin/sh -c #(nop)  LABEL org.label-schema.sc…   0B
<missing>      7 months ago   /bin/sh -c #(nop) ADD file:bd7a2aed6ede423b7…   209MB
[root@vm1 ~]# docker image history centos8_nginx_1.14:v1
IMAGE          CREATED          CREATED BY                                      SIZE      COMMENT
cae942db2582   18 minutes ago   tail -F /var                                    107MB     基于centos8上制作nginx1.14的新镜像
300e315adb2f   7 months ago     /bin/sh -c #(nop)  CMD ["/bin/bash"]            0B
<missing>      7 months ago     /bin/sh -c #(nop)  LABEL org.label-schema.sc…   0B
<missing>      7 months ago     /bin/sh -c #(nop) ADD file:bd7a2aed6ede423b7…   209MB
```

For a more direct view, inspect the images in detail:

```
[root@vm1 ~]# docker images
REPOSITORY                      TAG                 IMAGE ID       CREATED         SIZE
centos8_nginx_1.14              v1                  8840d8645390   4 seconds ago   316MB
zabbix/zabbix-java-gateway      alpine-5.4-latest   2552b7f42e42   9 days ago      84.4MB
nginx                           1.20                7ca45f2d188b   2 weeks ago     133MB
mysql                           8.0                 5c62e459e087   2 weeks ago     556MB
ubuntu                          latest              9873176a8ff5   2 weeks ago     72.7MB
zabbix/zabbix-web-nginx-mysql   alpine-5.4-latest   c75fcde05a14   3 weeks ago     166MB
zabbix/zabbix-server-mysql      alpine-5.4-latest   856abc12b012   3 weeks ago     69MB
busybox                         latest              69593048aa3a   4 weeks ago     1.24MB
centos                          latest              300e315adb2f   7 months ago    209MB
[root@vm1 ~]# docker image inspect centos
[
    {
        "Id": "sha256:300e315adb2f96afe5f0b2780b87f28ae95231fe3bdd1e16b9ba606307728f55",
        "RepoTags": [
            "centos:latest"
        ],
        "RepoDigests": [
            "centos@sha256:5528e8b1b1719d34604c87e11dcd1c0a20bedf46e83b5632cdeac91b8c04efc1"
        ],
        "Parent": "",
        "Comment": "",
        "Created": "2020-12-08T00:22:53.076477777Z",
        "Container": "395e0bfa7301f73bc994efe15099ea56b8836c608dd32614ac5ae279976d33e4",
        "ContainerConfig": {
            "Hostname": "395e0bfa7301",
            "Domainname": "",
            "User": "",
            "AttachStdin": false,
            "AttachStdout": false,
            "AttachStderr": false,
            "Tty": false,
            "OpenStdin": false,
            "StdinOnce": false,
            "Env": [
                "PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"
            ],
            "Cmd": [
                "/bin/sh",
                "-c",
                "#(nop) ",
                "CMD [\"/bin/bash\"]"
            ],
            "Image": "sha256:6de05bdfbf9a9d403458d10de9e088b6d93d971dd5d48d18b4b6758f4554f451",
            "Volumes": null,
            "WorkingDir": "",
            "Entrypoint": null,
            "OnBuild": null,
            "Labels": {
                "org.label-schema.build-date": "20201204",
                "org.label-schema.license": "GPLv2",
                "org.label-schema.name": "CentOS Base Image",
                "org.label-schema.schema-version": "1.0",
                "org.label-schema.vendor": "CentOS"
            }
        },
        "DockerVersion": "19.03.12",
        "Author": "",
        "Config": {
            "Hostname": "",
            "Domainname": "",
            "User": "",
            "AttachStdin": false,
            "AttachStdout": false,
            "AttachStderr": false,
            "Tty": false,
            "OpenStdin": false,
            "StdinOnce": false,
            "Env": [
                "PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"
            ],
            "Cmd": [
                "/bin/bash"
            ],
            "Image": "sha256:6de05bdfbf9a9d403458d10de9e088b6d93d971dd5d48d18b4b6758f4554f451",
            "Volumes": null,
            "WorkingDir": "",
            "Entrypoint": null,
            "OnBuild": null,
            "Labels": {
                "org.label-schema.build-date": "20201204",
                "org.label-schema.license": "GPLv2",
                "org.label-schema.name": "CentOS Base Image",
                "org.label-schema.schema-version": "1.0",
                "org.label-schema.vendor": "CentOS"
            }
        },
        "Architecture": "amd64",
        "Os": "linux",
        "Size": 209348104,
        "VirtualSize": 209348104,
        "GraphDriver": {
            "Data": {
                "MergedDir": "/var/lib/docker/overlay2/d921f6bf1d3c6ed0c2bcb71ad427688d4ef774405e78515abf18eea1cf044f80/merged",
                "UpperDir": "/var/lib/docker/overlay2/d921f6bf1d3c6ed0c2bcb71ad427688d4ef774405e78515abf18eea1cf044f80/diff",
                "WorkDir": "/var/lib/docker/overlay2/d921f6bf1d3c6ed0c2bcb71ad427688d4ef774405e78515abf18eea1cf044f80/work"
            },
            "Name": "overlay2"
        },
        "RootFS": {
            "Type": "layers",
            "Layers": [
                "sha256:2653d992f4ef2bfd27f94db643815aa567240c37732cae1405ad1c1309ee9859"
            ]
        },
        "Metadata": {
            "LastTagTime": "0001-01-01T00:00:00Z"
        }
    }
]
```

```
[root@vm1 ~]# docker image inspect centos8_nginx_1.14:v1
[
    {
        "Id": "sha256:8840d8645390b224b034050d57e6a66110e00704177eac0b4a893bf1b2ea350c",
        "RepoTags": [
            "centos8_nginx_1.14:v1"
        ],
        "RepoDigests": [],
        "Parent": "sha256:300e315adb2f96afe5f0b2780b87f28ae95231fe3bdd1e16b9ba606307728f55",
        "Comment": "make nginx images base on centos8",
        "Created": "2021-07-08T10:34:42.236736605Z",
        "Container": "f86b43350236befaa173f6e8e658d5aa4a8587ddd3c507296b2908434d8e90ed",
        "ContainerConfig": {
            "Hostname": "f86b43350236",
            "Domainname": "",
            "User": "",
            "AttachStdin": false,
            "AttachStdout": false,
            "AttachStderr": false,
            "Tty": false,
            "OpenStdin": true,
            "StdinOnce": false,
            "Env": [
                "PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"
            ],
            "Cmd": [
                "/bin/bash"
            ],
            "Image": "centos",
            "Volumes": null,
            "WorkingDir": "",
            "Entrypoint": null,
            "OnBuild": null,
            "Labels": {
                "org.label-schema.build-date": "20201204",
                "org.label-schema.license": "GPLv2",
                "org.label-schema.name": "CentOS Base Image",
                "org.label-schema.schema-version": "1.0",
                "org.label-schema.vendor": "CentOS"
            }
        },
        "DockerVersion": "20.10.7",
        "Author": "yangning",
        "Config": {
            "Hostname": "f86b43350236",
            "Domainname": "",
            "User": "",
            "AttachStdin": false,
            "AttachStdout": false,
            "AttachStderr": false,
            "Tty": false,
            "OpenStdin": true,
            "StdinOnce": false,
            "Env": [
                "PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"
            ],
            "Cmd": [
                "/bin/bash"
            ],
            "Image": "centos",
            "Volumes": null,
            "WorkingDir": "",
            "Entrypoint": null,
            "OnBuild": null,
            "Labels": {
                "org.label-schema.build-date": "20201204",
                "org.label-schema.license": "GPLv2",
                "org.label-schema.name": "CentOS Base Image",
                "org.label-schema.schema-version": "1.0",
                "org.label-schema.vendor": "CentOS"
            }
        },
        "Architecture": "amd64",
        "Os": "linux",
        "Size": 316000198,
        "VirtualSize": 316000198,
        "GraphDriver": {
            "Data": {
                "LowerDir": "/var/lib/docker/overlay2/d921f6bf1d3c6ed0c2bcb71ad427688d4ef774405e78515abf18eea1cf044f80/diff",
                "MergedDir": "/var/lib/docker/overlay2/b464884aae3726a830b6904d0d530aa501b21561068a351fa0fbe1127f3ef354/merged",
                "UpperDir": "/var/lib/docker/overlay2/b464884aae3726a830b6904d0d530aa501b21561068a351fa0fbe1127f3ef354/diff",
                "WorkDir": "/var/lib/docker/overlay2/b464884aae3726a830b6904d0d530aa501b21561068a351fa0fbe1127f3ef354/work"
            },
            "Name": "overlay2"
        },
        "RootFS": {
            "Type": "layers",
            "Layers": [
                "sha256:2653d992f4ef2bfd27f94db643815aa567240c37732cae1405ad1c1309ee9859",
                "sha256:d48867f14af1109fedec153bae771d260b81ee41dac30b539f2d5750fadb0355"
            ]
        },
        "Metadata": {
            "LastTagTime": "2021-07-08T18:34:42.290295321+08:00"
        }
    }
]
```

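The output shows directly that the Parent of centos8_nginx_1.14:v1 is exactly the Id of centos:latest. The first entry of its RootFS.Layers is the same layer as the only layer of centos, and its LowerDir is the diff directory of the centos image. Because layers are shared in this way, image layering saves storage space.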
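The saving can be quantified from the inspect output. The sketch below assumes that an image's Size is the sum of its layer sizes, which is consistent with the 209MB and 107MB entries in docker image history. Under that assumption, the nginx layer works out to 316000198 minus 209348104 bytes. The names IMAGES, LAYER_SIZES and disk_usage are hypothetical.

```python
# RootFS.Layers and sizes taken from the two `docker image inspect` outputs above.
BASE = "sha256:2653d992f4ef2bfd27f94db643815aa567240c37732cae1405ad1c1309ee9859"
NGINX = "sha256:d48867f14af1109fedec153bae771d260b81ee41dac30b539f2d5750fadb0355"

IMAGES = {
    "centos:latest": [BASE],
    "centos8_nginx_1.14:v1": [BASE, NGINX],
}
# Per-layer sizes in bytes; the nginx layer is derived (assumption: Size = sum of layers).
LAYER_SIZES = {BASE: 209348104, NGINX: 316000198 - 209348104}


def image_size(layers):
    """Size of an image as the sum of the layers it references."""
    return sum(LAYER_SIZES[layer] for layer in layers)


def disk_usage(images):
    """(bytes if every image kept its own copy, bytes when identical layers are shared)."""
    naive = sum(image_size(layers) for layers in images.values())
    unique_layers = {layer for layers in images.values() for layer in layers}
    return naive, image_size(unique_layers)


if __name__ == "__main__":
    naive, shared = disk_usage(IMAGES)
    print(f"without sharing: {naive} bytes, with sharing: {shared} bytes")
```

With these two images, sharing the base layer avoids storing the 209348104-byte centos layer a second time.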

浙公网安备 33010602011771号
