Scrapy Framework: Scraping Products from an Electronics Website

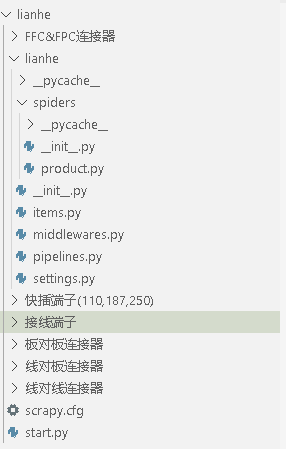

1. Create the project

Step 1: Create the project:

    scrapy startproject lianhe

Step 2: Enter the project directory and generate the spider:

    cd lianhe
    scrapy genspider product www.lhecn.com.cn

2. Sample code

start.py

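    from scrapy import cmdline
    import os

    if __name__ == '__main__':
        # Switch to this file's directory so the crawl runs from the project root
        dirname = os.path.dirname(os.path.abspath(__file__))
        os.chdir(dirname)
        # Equivalent to running "scrapy crawl product" on the command line
        cmdline.execute("scrapy crawl product".split())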

items.py

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class LianheItem(scrapy.Item):
        catname = scrapy.Field()  # category name
        name = scrapy.Field()     # product name
        pdf = scrapy.Field()      # URL of the product PDF
        pics = scrapy.Field()     # list of product image URLs

product.py

    # -*- coding: utf-8 -*-
    import scrapy
    import requests
    from lxml import etree

    from ..items import LianheItem


    class ProductSpider(scrapy.Spider):
        name = 'product'
        allowed_domains = ['www.lhecn.com.cn']
        host = 'https://www.lhecn.com.cn'
        # Category listing URL templates; {0} is replaced with the page number.
        # The category names are used as folder names by the pipeline.
        start_urls = [
            {"name": '线对板连接器', "url": host + '/category/wire-to-board/page/{0}/'},
            {"name": '线对线连接器', "url": host + '/category/wire-to-wire/page/{0}/'},
            {"name": '板对板连接器', "url": host + '/category/pc-board-in/page/{0}/'},
            {"name": '快插端子(110,187,250)', "url": host + '/category/faston-terminal/page/{0}/'},
            {"name": 'FFC&FPC连接器', "url": host + '/category/ffc-fpc-connector/page/{0}/'},
            {"name": '接线端子', "url": host + '/category/terminal-block/page/{0}/'},
        ]

        def get_all_page(self, url):
            """Return the total number of pages in a category (1 if there is no pager)."""
            response = requests.get(url)
            html = etree.HTML(response.content, parser=etree.HTMLParser())
            res = html.xpath('//div[@class="wp-pagenavi"]')
            if len(res) > 0:
                # The second-to-last pager link holds the last page number
                p = res[0].xpath("./a[last()-1]//text()")[0]
                return int(p)
            return 1

        def start_requests(self):
            for item in self.start_urls:
                # Find out how many pages this category has
                url = item.get('url')
                total_page = self.get_all_page(url.format('1'))
                self.logger.info("total_page = %s", total_page)
                for page in range(1, total_page + 1):
                    link = url.format(str(page))
                    yield scrapy.Request(link, callback=self.parse,
                                         meta={"url": link, "name": item.get('name')})

        def parse(self, response):
            """Parse a category listing page and follow each product link."""
            meta = response.meta
            for each in response.xpath('//div[@class="pro-imgs col-md-4 col-sm-6 col-xs-6"]'):
                link = each.xpath("./div[@class='imgallbox imgtitle-h3']//h3//a")
                url = link.xpath("./@href").extract_first()
                name = link.xpath(".//text()").extract_first(default='')
                if url is None:
                    # Skip entries without a product link instead of raising IndexError
                    continue
                item = LianheItem()
                item['catname'] = meta['name']
                item['name'] = name.strip()
                yield scrapy.Request(url, callback=self.url_parse, meta={"item": item})

        def url_parse(self, response):
            """Parse a product detail page: PDF link and image links."""
            item = response.meta['item']
            # Fall back to '' when a product has no PDF; the pipeline skips empty URLs
            item['pdf'] = response.xpath("//div[@class='pdf_uploads']//a/@href").extract_first(default='')
            item['pics'] = response.xpath("//div[@class='sp-wrap']//a/@href").extract()
            yield item
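The way `get_all_page` and `start_requests` work together can be tried out in isolation. The following is a minimal sketch rather than the spider's actual code: it swaps in the standard library's `xml.etree.ElementTree` for `lxml` so that it runs without third-party packages, and the pager markup in `SAMPLE_PAGER` is invented for illustration (it assumes the last link in the pager is a "Next" link, which is presumably why the spider reads the second-to-last one). The helper names `total_pages` and `page_urls` are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical pager markup, invented for illustration only.
SAMPLE_PAGER = """
<html><body>
  <div class="wp-pagenavi">
    <a href="/category/wire-to-board/page/1/">1</a>
    <a href="/category/wire-to-board/page/2/">2</a>
    <a href="/category/wire-to-board/page/3/">3</a>
    <a href="/category/wire-to-board/page/2/">Next</a>
  </div>
</body></html>
"""


def total_pages(markup):
    """Return the page count read from the second-to-last pager link, or 1."""
    root = ET.fromstring(markup.strip())
    pager = root.find(".//div[@class='wp-pagenavi']")
    if pager is None:
        return 1  # no pager on the page: a single page of results
    links = pager.findall("./a[last()-1]")
    if not links:
        return 1  # fewer than two links: nothing to read a page count from
    return int("".join(links[0].itertext()).strip())


def page_urls(template, pages):
    """Fill the {0} placeholder with every page number from 1 to pages."""
    return [template.format(page) for page in range(1, pages + 1)]


template = "https://www.lhecn.com.cn/category/wire-to-board/page/{0}/"
urls = page_urls(template, total_pages(SAMPLE_PAGER))
for url in urls:
    print(url)
```

Unlike the spider's `get_all_page`, this sketch returns 1 when the pager contains fewer than two links instead of raising an `IndexError`.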

pipelines.py

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html
    import os

    import requests


    class LianhePipeline(object):

        def __init__(self) -> None:
            self.count = 0      # total number of items processed
            self.cat_dict = {}  # number of products saved so far, per category

        def process_item(self, item, spider):
            self.count += 1
            catname = item["catname"]
            # Create the category folder if it does not exist yet
            os.makedirs(catname, exist_ok=True)
            # Products are numbered from 1 within each category
            count = self.cat_dict.get(catname, 0) + 1
            self.cat_dict[catname] = count
            # Save the remote PDF locally as <count>.pdf
            self.save_file(item['pdf'], os.path.join(catname, str(count) + ".pdf"))
            # Save the product images as <count>_<n>.jpg
            for num, pic in enumerate(item['pics'], start=1):
                self.save_file(pic, os.path.join(catname, "{0}_{1}.jpg".format(count, num)))
            # Save the product name as <count>.txt
            with open(os.path.join(catname, str(count) + '.txt'), 'w', encoding='utf-8') as f:
                f.write(item['name'])
            return item

        def save_file(self, url, filename):
            """Download url to filename; return False when there is nothing to download."""
            if not url:
                return False
            response = requests.get(url)
            with open(filename, 'wb') as f:
                f.write(response.content)
            return True

        def close_spider(self, spider):
            print("Total items scraped: {0}".format(self.count))
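As the comment at the top of pipelines.py points out, the pipeline only runs once it is registered in the `ITEM_PIPELINES` setting, which the listings above do not show. A minimal sketch of the settings.py entry follows, assuming the default layout created by `scrapy startproject lianhe` (so the class lives at `lianhe.pipelines.LianhePipeline`); the priority value 300 is an arbitrary choice, not something taken from the original project.

```python
# settings.py (excerpt)
# Assumption: default project layout, so the pipeline's import path is
# lianhe.pipelines.LianhePipeline. The number is the pipeline's priority;
# Scrapy runs pipelines in ascending order of this value.
ITEM_PIPELINES = {
    "lianhe.pipelines.LianhePipeline": 300,
}
```

With a single pipeline the exact number does not matter; it only determines ordering when several pipelines are enabled.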

