Scrapy Framework: A Case Study of Scraping a Video Website

This code is for learning purposes only; please do not use it for anything else.

I. Screenshot of the Result

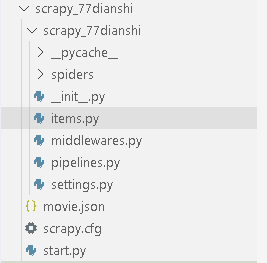

II. Writing the Code

1. items.py

```python
# -*- coding: utf-8 -*-
# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html
import scrapy


class Scrapy77DianshiItem(scrapy.Item):
    # Movie title
    title = scrapy.Field()
    # Cover image URL
    pic = scrapy.Field()
    # Movie description
    desc = scrapy.Field()
    # Movie type / remarks label
    remarks = scrapy.Field()
    # Detail page URL
    link = scrapy.Field()
```
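Since Scrapy 2.2, items may also be plain dataclass objects instead of `scrapy.Item` subclasses. The sketch below is a hedged, equivalent definition with the same five fields; the class name `MovieItem` is hypothetical. If you used it, the spider would build items as `MovieItem(title=..., ...)` rather than `item['title'] = ...`, and the pipeline's `dict(item)` would need to become `ItemAdapter(item).asdict()` (from the `itemadapter` package that Scrapy depends on).

```python
from dataclasses import dataclass


@dataclass
class MovieItem:
    """Hypothetical dataclass equivalent of Scrapy77DianshiItem (Scrapy 2.2+ accepts dataclass items)."""
    title: str = ""    # movie title
    pic: str = ""      # cover image URL
    desc: str = ""     # movie description
    remarks: str = ""  # movie type / remarks label
    link: str = ""     # detail page URL
```

Giving every field a default of `""` lets an entry be created even when some values are missing from the page.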

2. movie.py

```python
# -*- coding: utf-8 -*-
import scrapy

from ..items import Scrapy77DianshiItem


class MovieSpider(scrapy.Spider):
    name = 'movie'
    allowed_domains = ['77dianshi.com']
    page = 1
    host = "http://77dianshi.com"
    url = host + "/iTe5kdy/page_{0}.html"
    start_urls = [url.format(page)]

    def parse(self, response):
        self.logger.info("Scraping page %d", self.page)
        # Iterate over the entries of the movie list
        for each in response.xpath("//ul[@class='fed-list-info fed-part-rows']//li"):
            item = Scrapy77DianshiItem()
            # .get(default='') returns the first match, or '' instead of raising
            # IndexError the way .extract()[0] does when nothing matches
            item['title'] = each.xpath('./a[2]//text()').get(default='')
            item['link'] = self.host + each.xpath('./a[1]/@href').get(default='')
            item['desc'] = each.xpath('./span[1]//text()').get(default='')
            item['remarks'] = each.xpath('./a[1]//span[3]//text()').get(default='')
            item['pic'] = each.xpath('./a[1]/@data-original').get(default='')
            yield item

        # Check whether this is the last page: while more pages remain,
        # the last link in the pager is "»"
        last_page = response.xpath("//div[@class='pages text-center']//a[last()]//text()").get()
        if last_page == "»":
            # Not the last page yet: request the next one
            self.page += 1
            yield scrapy.Request(self.url.format(self.page), callback=self.parse)
        else:
            self.logger.info("Finished scraping, last page: %d", self.page)
```
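To see what each XPath expression in `parse()` picks out of one list entry, the sketch below replays them on a small HTML fragment. The fragment is hypothetical (the site's real markup is an assumption), and the helpers `parse_list`, `text_of` and `attr_of` are illustrative names. It uses Python's standard-library `xml.etree.ElementTree`, which supports only a subset of XPath and only well-formed markup, so it illustrates the selectors rather than replacing Scrapy's. One difference: `text_of` joins every text node under an element, while `//text()` with `.get()` returns only the first.

```python
import xml.etree.ElementTree as ET

HOST = "http://77dianshi.com"

# Hypothetical markup for one list entry; the real page layout may differ.
SAMPLE_HTML = """
<div>
  <ul class="fed-list-info fed-part-rows">
    <li>
      <a href="/movie/1.html" data-original="/img/1.jpg"><span>HD</span><span>2019</span><span>Action</span></a>
      <a href="/movie/1.html">Sample Movie</a>
      <span>A short description</span>
    </li>
  </ul>
</div>
"""


def text_of(elem, default=""):
    """All text under elem joined, or default when nothing matched (like .get(default=''))."""
    if elem is None:
        return default
    return "".join(elem.itertext()).strip()


def attr_of(elem, name, default=""):
    """Attribute value of elem, or default when the element or attribute is missing."""
    return default if elem is None else elem.get(name, default)


def parse_list(html):
    root = ET.fromstring(html.strip())
    items = []
    for li in root.iterfind(".//ul[@class='fed-list-info fed-part-rows']//li"):
        cover = li.find("a[1]")  # first <a>: detail link, cover image, labels
        items.append({
            "title": text_of(li.find("a[2]")),          # ./a[2]//text()
            "link": HOST + attr_of(cover, "href"),        # ./a[1]/@href
            "desc": text_of(li.find("span[1]")),         # ./span[1]//text()
            "remarks": text_of(None if cover is None else cover.find(".//span[3]")),
            "pic": attr_of(cover, "data-original"),       # ./a[1]/@data-original
        })
    return items
```

For example, `parse_list(SAMPLE_HTML)[0]["remarks"]` is `"Action"`, the third `<span>` inside the first link.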

3. pipelines.py

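```python
# -*- coding: utf-8 -*-
# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html
import json


class Scrapy77DianshiPipeline(object):
    def open_spider(self, spider):
        # Open the output file when the spider starts. utf-8 is required because
        # ensure_ascii=False writes Chinese text as-is, which can fail under a
        # platform default encoding such as GBK on Windows
        self.file = open('movie.json', 'w', encoding='utf-8')

    def process_item(self, item, spider):
        # Save each item as one JSON object per line (JSON Lines)
        self.file.write(json.dumps(dict(item), ensure_ascii=False) + '\n')
        return item

    def close_spider(self, spider):
        self.file.close()
```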
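Because the pipeline writes one JSON object per line, `movie.json` is a JSON Lines file rather than a single JSON document, so `json.load` on the whole file would fail once it holds more than one item. A minimal sketch of reading it back line by line; `parse_json_lines` is a hypothetical helper name:

```python
import json


def parse_json_lines(lines):
    """Parse JSON Lines: one JSON object per non-blank line, as written by the pipeline."""
    records = []
    for line in lines:
        line = line.strip()
        if line:  # skip blank lines, e.g. a trailing newline at the end of the file
            records.append(json.loads(line))
    return records
```

Usage: `with open('movie.json', encoding='utf-8') as f: movies = parse_json_lines(f)`. Reading with `encoding='utf-8'` matches how the pipeline wrote the file.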

4. start.py (launch script)

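```python
import os

from scrapy import cmdline

if __name__ == "__main__":
    # Directory containing this script (the project root, where scrapy.cfg lives)
    dirpath = os.path.dirname(os.path.abspath(__file__))
    # Switch to that directory so Scrapy can find the project settings
    os.chdir(dirpath)
    # Run the spider, equivalent to typing "scrapy crawl movie" in a terminal
    cmdline.execute("scrapy crawl movie".split())
```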
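Scrapy also documents running a spider from a script with `CrawlerProcess` instead of `cmdline.execute`. A minimal sketch, assuming the script sits in the project root next to `scrapy.cfg`; `run_movie_spider` is a hypothetical name. The Scrapy imports are placed inside the function only so the module can be imported where Scrapy is not installed.

```python
import os


def run_movie_spider():
    # Imported lazily so this module can be imported without Scrapy installed
    from scrapy.crawler import CrawlerProcess
    from scrapy.utils.project import get_project_settings

    # get_project_settings() locates scrapy.cfg starting from the current
    # directory, so switch to this script's directory first, as start.py does
    os.chdir(os.path.dirname(os.path.abspath(__file__)))
    process = CrawlerProcess(get_project_settings())
    process.crawl("movie")  # spider looked up by its `name` attribute
    process.start()         # blocks until the crawl finishes
```

Call `run_movie_spider()` from an `if __name__ == "__main__":` block. Unlike `cmdline.execute`, this keeps control inside your own Python code, which is handy if you want to do something after the crawl returns.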
