Building a Production-Grade Ceph Cluster

Prepare the environment

| Hostname | Business IP | Cluster IP | Disks (size GB/count) | Role |
|---|---|---|---|---|
| ceph-deploy | 172.16.143.110 | 172.16.138.110 | 200/1 | Manages the Ceph cluster |
| ceph-mon1 | 172.16.143.111 | 172.16.138.131 | 200/1 | Monitors cluster state, topology and related information |
| ceph-mon2 | 172.16.143.112 | 172.16.138.132 | 200/1 | Monitors cluster state, topology and related information |
| ceph-mon3 | 172.16.143.113 | 172.16.138.113 | 200/1 | Monitors cluster state, topology and related information |
| ceph-mgr1 | 172.16.143.114 | 172.16.138.114 | 200/1 | Cluster management, providing a unified interface |
| ceph-mgr2 | 172.16.143.115 | 172.16.138.115 | 200/1 | Cluster management, providing a unified interface |
| ceph-node1 | 172.16.143.116 | 172.16.138.116 | 200/1 100/2 | Ceph storage node |
| ceph-node2 | 172.16.143.117 | 172.16.138.117 | 200/1 100/2 | Ceph storage node |
| ceph-node3 | 172.16.143.118 | 172.16.138.118 | 200/1 100/2 | Ceph storage node |
| ceph-node4 | 172.16.143.119 | 172.16.138.119 | 200/1 100/2 | Ceph storage node |

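- Configure two NICs on every host: one for business (client) traffic and one for internal cluster traffic
- Disable the firewall
- Disable SELinux
- Configure time synchronization
- Configure /etc/hosts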
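The hosts-file step above can be scripted. Below is a minimal POSIX sh sketch. It assumes every hostname should resolve to its business IP from the table (172.16.143.0/24). The names `gen_hosts` and `install_hosts` are hypothetical. Firewall, SELinux and time-sync commands differ between distributions and are left out.

```shell
#!/bin/sh
# Sketch: render and install /etc/hosts entries for the hosts in the table.
# Assumption: each hostname should resolve to its business (public) IP.
set -eu

# "hostname business-IP" pairs, copied from the planning table
CLUSTER_HOSTS='ceph-deploy 172.16.143.110
ceph-mon1 172.16.143.111
ceph-mon2 172.16.143.112
ceph-mon3 172.16.143.113
ceph-mgr1 172.16.143.114
ceph-mgr2 172.16.143.115
ceph-node1 172.16.143.116
ceph-node2 172.16.143.117
ceph-node3 172.16.143.118
ceph-node4 172.16.143.119'

# Print one "IP hostname" line per cluster host.
gen_hosts() {
  printf '%s\n' "$CLUSTER_HOSTS" | while read -r name ip; do
    printf '%s %s\n' "$ip" "$name"
  done
}

# Append any missing entries to a hosts file (default /etc/hosts); safe to re-run.
install_hosts() {
  local file="${1:-/etc/hosts}" line
  touch "$file"
  gen_hosts | while read -r line; do
    grep -qxF "$line" "$file" || printf '%s\n' "$line" >> "$file"
  done
}
```

On each node, run `. ./hosts.sh && install_hosts` as root. You can also pass a scratch file path first to inspect the result. Re-running it adds nothing that is already present.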

Configure the Ceph package repository

wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | sudo apt-key add -
sudo apt-add-repository 'deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific/ bionic main'
sudo apt update

Create the ceph user (on every node, as root)

groupadd -r -g 2022 ceph && useradd -r -m -s /bin/bash -u 2022 -g 2022 ceph && echo ceph:123456 | chpasswd
echo "ceph ALL=(ALL) NOPASSWD:ALL" >> /etc/sudoers

Set up passwordless SSH login from the ceph-deploy node

ssh-keygen -t rsa
ssh-copy-id -i ~/.ssh/id_rsa.pub ceph@172.16.143.110
...
ssh-copy-id -i ~/.ssh/id_rsa.pub ceph@172.16.143.119
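You can replace the ten ssh-copy-id commands with a loop over 172.16.143.110 to .119. Below is a minimal sketch, meant to run as the ceph user on ceph-deploy. The `run` helper and the `APPLY=1` switch belong to this sketch only and are not part of any Ceph tool. By default the commands are printed, not executed.

```shell
#!/bin/sh
# Sketch: push the ceph user's public key to all ten hosts in the table.
# By default commands are only printed; set APPLY=1 to execute them.
set -eu

# Print a command, or run it when APPLY=1.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else echo "+ $*"; fi
}

copy_keys() {
  local key="$HOME/.ssh/id_rsa" i=110
  # Create a passphrase-less RSA key once if none exists yet.
  [ -f "$key" ] || run ssh-keygen -t rsa -N '' -f "$key"
  # Business IPs 172.16.143.110 .. 172.16.143.119
  while [ "$i" -le 119 ]; do
    run ssh-copy-id -i "$key.pub" "ceph@172.16.143.$i"
    i=$((i + 1))
  done
}

copy_keys
```

`APPLY=1 sh copy-keys.sh` performs the copies. Each ssh-copy-id still asks once for the ceph user's password.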

Install the Ceph deployment tool ceph-deploy

sudo apt install ceph-deploy

Install Ceph

Initialize the mon

mkdir ceph-cluster
cd ceph-cluster

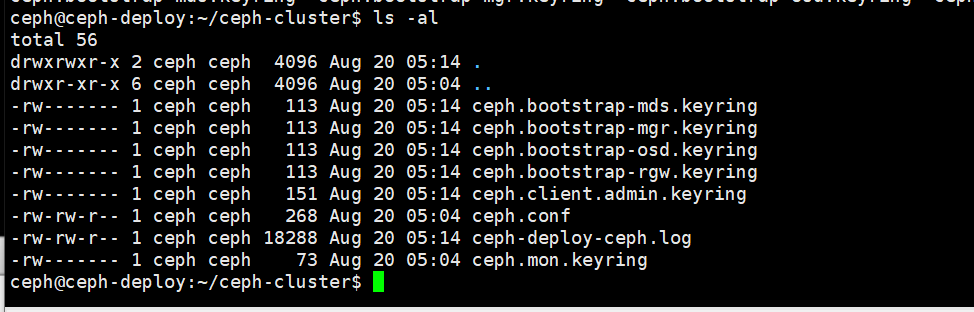

Generate the configuration file (on the ceph-deploy node)

$ ceph-deploy new --cluster-network 172.16.138.0/24 --public-network 172.16.143.0/24 ceph-mon1
$ ls
ceph.conf  ceph-deploy-ceph.log  ceph.mon.keyring
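Before going further, it is worth confirming that both networks landed in the generated ceph.conf. The check below assumes ceph-deploy writes the options as `public_network` and `cluster_network`. The pattern also accepts the spelling with a space or dash, which Ceph treats as equivalent. `check_networks` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: verify that ceph.conf carries the expected public and cluster networks.
set -eu

# check_networks CONF PUBLIC_CIDR CLUSTER_CIDR -> exit status 0 if both are present
check_networks() {
  local conf="$1" public="$2" cluster="$3"
  grep -Eq "^public[-_ ]network *= *$public *\$" "$conf" &&
    grep -Eq "^cluster[-_ ]network *= *$cluster *\$" "$conf"
}
```

For example, run `check_networks ceph.conf 172.16.143.0/24 172.16.138.0/24 && echo ok` inside ceph-cluster.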

Install ceph-mon (on ceph-mon1)

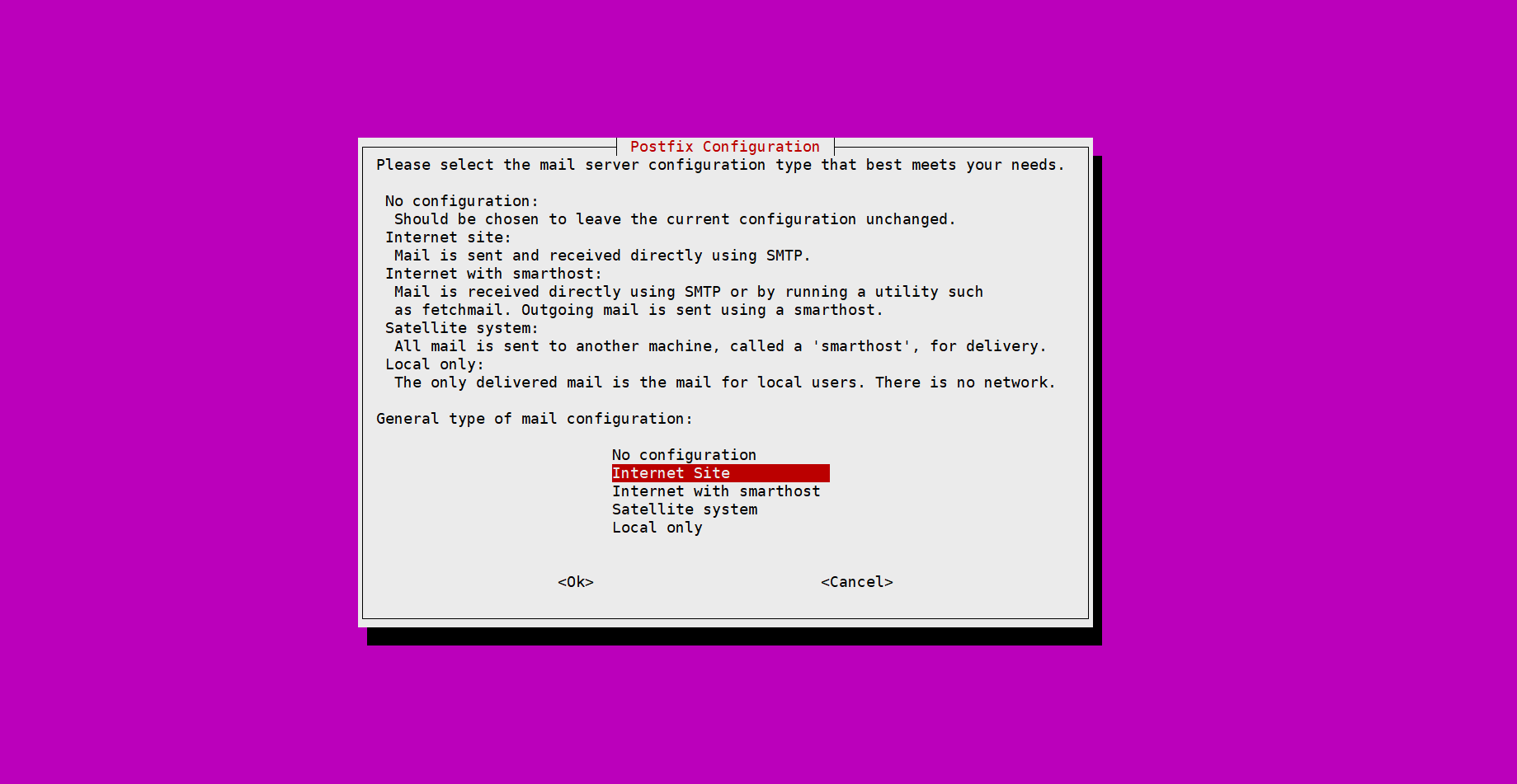

$ sudo apt install ceph-mon -y

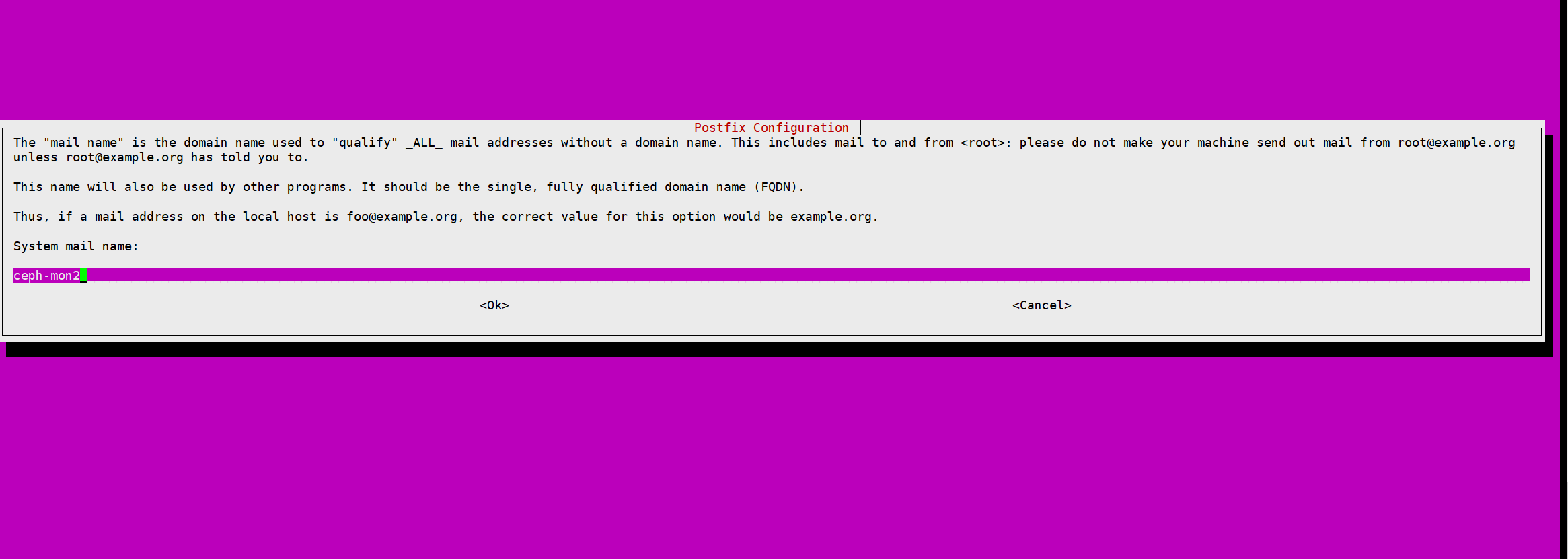

Press Enter at both prompts to accept the defaults.

Initialize the mon (on the ceph-deploy node)

ceph-deploy mon create-initial

Verify that the mon process is running (on ceph-mon1)

$ ps -ef | grep mon
message+   757     1  0 04:40 ?      00:00:00 /usr/bin/dbus-daemon --system --address=systemd: --nofork --nopidfile --systemd-activation --syslog-only
root       802     1  0 04:40 ?      00:00:00 /usr/lib/accountsservice/accounts-daemon
daemon     832     1  0 04:40 ?      00:00:00 /usr/sbin/atd -f
ceph      6739     1  0 05:14 ?      00:00:00 /usr/bin/ceph-mon -f --cluster ceph --id ceph-mon1 --setuser ceph --setgroup ceph
root      7343  1566  0 05:16 pts/0  00:00:00 grep --color=auto mon

Install ceph-common and distribute the admin keyring

// run as root
$ apt install ceph-common -y
$ ceph-deploy admin ceph-deploy

$ sudo chown ceph:ceph /etc/ceph/ceph.client.admin.keyring

$ ceph -s
  cluster:
    id:     6e278817-8019-4a06-82b3-b4d24d7dd743
    health: HEALTH_WARN
            mon is allowing insecure global_id reclaim

  services:
    mon: 1 daemons, quorum ceph-mon1 (age 60m)
    mgr: no daemons active
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   0 B used, 0 B / 0 B avail
    pgs:
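The HEALTH_WARN above comes from the insecure global_id reclaim check added with the fix for CVE-2021-20288. On a fresh cluster whose clients are all current, the usual remedy is to turn off `auth_allow_insecure_global_id_reclaim`. The sketch below assumes no older client still depends on insecure reclaim, because disabling it would lock such clients out. The `run`/`APPLY` convention and the function name are hypothetical.

```shell
#!/bin/sh
# Sketch: clear "mon is allowing insecure global_id reclaim" if it is reported.
# Assumption: every client is recent enough not to need insecure reclaim.
set -eu

# Print a command, or run it when APPLY=1.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else echo "+ $*"; fi
}

fix_global_id_reclaim() {
  local health
  health="$(ceph health detail)"
  if printf '%s\n' "$health" | grep -q 'allowing insecure global_id reclaim'; then
    run ceph config set mon auth_allow_insecure_global_id_reclaim false
  else
    echo "warning not reported, nothing to change"
  fi
}
```

Running `APPLY=1` followed by `fix_global_id_reclaim` applies the setting, after which the warning should no longer appear in `ceph -s`.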

Install ceph-mgr

On the mgr node (ceph-mgr1):

apt install ceph-mgr -y

On the deploy node:

ceph-deploy mgr create ceph-mgr1

Install the storage nodes (ceph-node)

On the deploy node:

// repeat for ceph-node1, ceph-node2, ceph-node3 and ceph-node4
ceph-deploy install --no-adjust-repos --nogpgcheck ceph-node4
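The same command has to be repeated for ceph-node1 through ceph-node4, so a loop reduces it to one step. This sketch uses the same print-by-default `run` convention, which is hypothetical; set APPLY=1 to execute. The ceph-deploy flags are exactly the ones above.

```shell
#!/bin/sh
# Sketch: install Ceph packages on all four storage nodes from ceph-deploy.
# Commands are printed by default; set APPLY=1 to execute them.
set -eu

run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else echo "+ $*"; fi
}

install_nodes() {
  local n
  for n in 1 2 3 4; do
    # --no-adjust-repos: use the repositories already configured on the node
    run ceph-deploy install --no-adjust-repos --nogpgcheck "ceph-node$n"
  done
}

install_nodes
```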

Zap (wipe) the disks on the storage nodes (repeat for both /dev/sdb and /dev/sdc on every node)

ceph-deploy disk zap ceph-node1 /dev/sdb

Create the OSDs (repeat for every data disk on every node)

ceph-deploy osd create ceph-node2 --data /dev/sdb
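The zap and create steps must be repeated for /dev/sdb and /dev/sdc on all four nodes, which gives 8 OSDs. That matches the `8 osds` in the status below. The loop sketched here assumes both data disks have the same device names on every node. Check this with `lsblk` first, since zapping destroys the data on them. `run`/`APPLY` are again this sketch's own convention.

```shell
#!/bin/sh
# Sketch: wipe every data disk on every storage node and turn it into an OSD.
# WARNING with APPLY=1: disk zap destroys all data on the listed disks.
set -eu

NODES="ceph-node1 ceph-node2 ceph-node3 ceph-node4"
DISKS="/dev/sdb /dev/sdc"   # assumption: same device names on every node

run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else echo "+ $*"; fi
}

add_osds() {
  local node disk
  for node in $NODES; do
    for disk in $DISKS; do
      run ceph-deploy disk zap "$node" "$disk"
      run ceph-deploy osd create "$node" --data "$disk"
    done
  done
}

add_osds
```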

Check the cluster status

$ ceph -s
  cluster:
    id:     6e278817-8019-4a06-82b3-b4d24d7dd743
    health: HEALTH_OK

  services:
    mon: 1 daemons, quorum ceph-mon1 (age 3d)
    mgr: ceph-mgr1(active, since 3d)
    osd: 8 osds: 8 up (since 9s), 8 in (since 17s)

  data:
    pools:   1 pools, 1 pgs
    objects: 0 objects, 0 B
    usage:   45 MiB used, 800 GiB / 800 GiB avail
    pgs:     1 active+clean

Expand the mon service

Install ceph-mon on ceph-mon2 and ceph-mon3

$ apt install ceph-mon

Add the mons (on the deploy node; run once for ceph-mon2 and once for ceph-mon3)

$ ceph-deploy mon add ceph-mon3
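The status below also lists ceph-mon2, so the add step runs once per new monitor. This sketch combines the install and add steps for ceph-mon2 and ceph-mon3. It assumes the ceph user on each mon host has the passwordless sudo configured earlier. Using `DEBIAN_FRONTEND=noninteractive` is this sketch's choice, made to skip the interactive prompts seen on ceph-mon1. `run`/`APPLY` are hypothetical.

```shell
#!/bin/sh
# Sketch: install ceph-mon on the new monitor hosts and add them to the cluster.
# Commands are printed by default; set APPLY=1 to execute them.
set -eu

run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else echo "+ $*"; fi
}

expand_mons() {
  local mon
  for mon in ceph-mon2 ceph-mon3; do
    # install the package on the mon host itself, without interactive prompts
    run ssh "ceph@$mon" sudo env DEBIAN_FRONTEND=noninteractive apt install -y ceph-mon
    # register the monitor; run from the ceph-cluster directory on ceph-deploy
    run ceph-deploy mon add "$mon"
  done
}

expand_mons
```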

$ ceph -s
  cluster:
    id:     6e278817-8019-4a06-82b3-b4d24d7dd743
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum ceph-mon1,ceph-mon2,ceph-mon3 (age 29s)
    mgr: ceph-mgr1(active, since 3d)
    osd: 8 osds: 8 up (since 2h), 8 in (since 2h)

  data:
    pools:   1 pools, 1 pgs
    objects: 0 objects, 0 B
    usage:   45 MiB used, 800 GiB / 800 GiB avail
    pgs:     1 active+clean

Check the mon quorum status

$ ceph quorum_status --format json-pretty
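Three mons are used because monitors form a quorum only when a strict majority of the monmap is present. Three mons therefore survive the loss of one, while a fourth would add no extra tolerance. The sketch below captures that arithmetic. The live check at the end additionally assumes `jq` is installed and reads the `quorum_names` and `monmap.mons` fields of the quorum_status output.

```shell
#!/bin/sh
# Sketch: monitor quorum arithmetic (strict majority of the monmap).
set -eu

# quorum_ok TOTAL IN_QUORUM -> success if IN_QUORUM is more than half of TOTAL
quorum_ok() {
  [ $(( $2 * 2 )) -gt "$1" ]
}

# tolerated_failures TOTAL -> how many mons may fail while quorum survives
tolerated_failures() {
  echo $(( ($1 - 1) / 2 ))
}

# Live check against the cluster (assumes jq is installed).
check_quorum() {
  local json total in_quorum
  json="$(ceph quorum_status --format json)"
  total="$(printf '%s' "$json" | jq '.monmap.mons | length')"
  in_quorum="$(printf '%s' "$json" | jq '.quorum_names | length')"
  if quorum_ok "$total" "$in_quorum"; then
    echo "quorum OK: $in_quorum/$total mons, tolerates $(tolerated_failures "$total") failure(s)"
  else
    echo "NO quorum: $in_quorum/$total mons" >&2
    return 1
  fi
}
```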

Expand the mgr service

Install ceph-mgr on the new mgr node (ceph-mgr2)

$ apt install ceph-mgr

Add the mgr (on the deploy node)

$ ceph-deploy mgr create ceph-mgr2

Check the status

$ ceph -s
  cluster:
    id:     6e278817-8019-4a06-82b3-b4d24d7dd743
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum ceph-mon1,ceph-mon2,ceph-mon3 (age 5m)
    mgr: ceph-mgr1(active, since 3d), standbys: ceph-mgr2
    osd: 8 osds: 8 up (since 2h), 8 in (since 2h)

  data:
    pools:   1 pools, 1 pgs
    objects: 0 objects, 0 B
    usage:   45 MiB used, 800 GiB / 800 GiB avail
    pgs:     1 active+clean
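With ceph-mgr2 registered as a standby, the `mgr:` line of `ceph -s` shows the active/standby split. Below is a sketch of a parser for that line. It is based only on the format shown in the output above and may not match other Ceph releases. `mgr_summary` is a hypothetical name. To actually exercise failover, `ceph mgr fail ceph-mgr1` marks the active mgr as failed so that the standby takes over.

```shell
#!/bin/sh
# Sketch: summarize the active and standby mgrs from `ceph -s` output on stdin.
set -eu

mgr_summary() {
  local line active standbys
  line="$(grep 'mgr:' | head -n 1)"
  # "mgr: ceph-mgr1(active, since 3d), standbys: ceph-mgr2" -> "ceph-mgr1"
  active="$(printf '%s\n' "$line" | sed -n 's/.*mgr: \([^(]*\)(active.*/\1/p')"
  # everything after "standbys: ", empty if there is no standby
  standbys="$(printf '%s\n' "$line" | sed -n 's/.*standbys: //p')"
  echo "active=${active:-none} standbys=${standbys:-none}"
}
```

For the status shown above, `ceph -s | mgr_summary` prints `active=ceph-mgr1 standbys=ceph-mgr2`.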
