Theano, a founding deep learning framework (a true "grandfather" among frameworks): learning from example code (2)

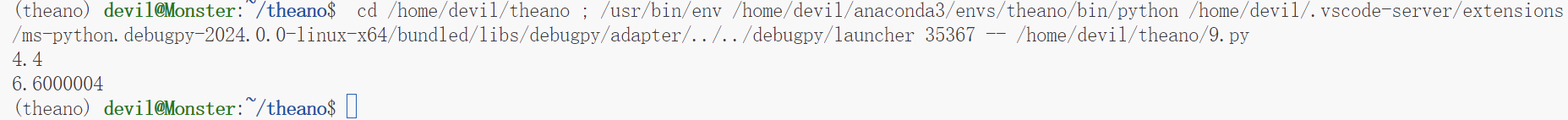

Code 1: (if/else conditional)

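```python
import theano
from theano import tensor
from theano.ifelse import ifelse

x = tensor.fscalar('x')
y = tensor.fscalar('y')
# x > 0 yields an int8 scalar, which ifelse uses as the condition
z = ifelse(x > 0, 2 * y, 3 * y)

# allow_input_downcast=True lets Python floats (float64) be cast to float32
f = theano.function([x, y], z, allow_input_downcast=True)
print(f(1.1, 2.2))   # x > 0, so 2*y
print(f(-1.1, 2.2))  # x <= 0, so 3*y
```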

Output:

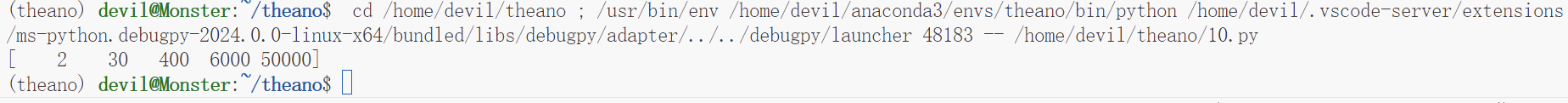
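To make explicit what the compiled graph in Code 1 computes, here is a plain-Python sketch. It models `ifelse` as lazy, meaning only the selected branch is evaluated, which is how Theano documents `theano.ifelse.ifelse`. The helper names `lazy_ifelse` and `f_ref` and the `calls` log are hypothetical, and Python floats are float64 rather than the float32 used by `fscalar`, so the last digits can differ from the Theano output.

```python
def lazy_ifelse(cond, then_branch, else_branch):
    """Evaluate only the branch selected by cond; branches are zero-argument callables."""
    return then_branch() if cond else else_branch()


calls = []  # hypothetical log recording which branch actually ran


def f_ref(x, y):
    # Mirrors z = ifelse(x > 0, 2*y, 3*y) from Code 1
    def then_branch():
        calls.append("2*y")
        return 2 * y

    def else_branch():
        calls.append("3*y")
        return 3 * y

    return lazy_ifelse(x > 0, then_branch, else_branch)


print(f_ref(1.1, 2.2))   # takes the 2*y branch
print(f_ref(-1.1, 2.2))  # takes the 3*y branch
print(calls)             # ['2*y', '3*y']: exactly one branch ran per call
```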

Code 2: (loops: scan)

```python
import theano
import theano.tensor as tensor
# import theano.scan as scan
from theano.scan_module import scan
import numpy as np

# Single-step function computing one polynomial term a*x^n.
# Its parameter order must match the scan arguments below.
def one_step(coef, power, x):
    return coef * x ** power

coefs = tensor.ivector()   # changes each step: vector of coefficients
powers = tensor.ivector()  # changes each step: vector of exponents
x = tensor.iscalar()       # constant across steps: the variable x

# sequences, outputs_info and non_sequences map one-to-one, in order,
# onto the parameters of one_step.
# result is a symbolic vector whose i-th entry is the i-th term.
result, updates = scan(fn=one_step,
                       sequences=[coefs, powers],
                       outputs_info=None,
                       non_sequences=x)

# Function from the inputs to the value of each term
f_poly = theano.function([x, coefs, powers], result, allow_input_downcast=True)

coef_val = np.array([2, 3, 4, 6, 5])
power_val = np.array([0, 1, 2, 3, 4])
x_val = 10
print(f_poly(x_val, coef_val, power_val))
# [ 2 30 400 6000 50000]

# Function from the inputs to the sum of all terms (the polynomial value)
f_poly = theano.function([x, coefs, powers], result.sum(), allow_input_downcast=True)
print(f_poly(x_val, coef_val, power_val))
```

Output:

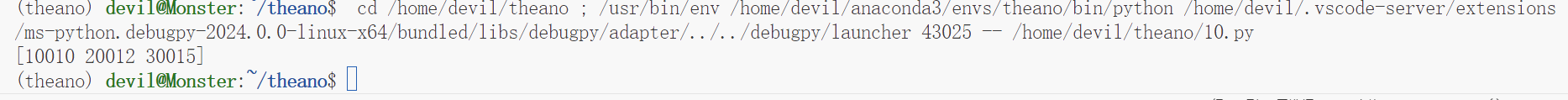
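As a check on the commented output in Code 2, the following plain-Python sketch reproduces what scan does with two sequences and one non-sequence: it calls `one_step` once per position, pairing `coefs[i]` with `powers[i]` and passing the same `x` every time. `scan_terms` is a hypothetical helper written for illustration, not part of Theano.

```python
def one_step(coef, power, x):
    # Same single-step function as in Code 2: one term a*x^n
    return coef * x ** power


def scan_terms(coefs, powers, x):
    # sequences=[coefs, powers] are consumed element by element in lockstep;
    # non_sequences=x is passed unchanged to every step;
    # outputs_info=None means no value is carried between steps.
    return [one_step(c, p, x) for c, p in zip(coefs, powers)]


terms = scan_terms([2, 3, 4, 6, 5], [0, 1, 2, 3, 4], 10)
print(terms)       # [2, 30, 400, 6000, 50000]
print(sum(terms))  # 56432
```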

Code 3: (loops: scan)

Explanation: scan takes the arguments fn, sequences, outputs_info and non_sequences. At each step it executes:

`outputs_info = fn(*sequences, *outputs_info, *non_sequences)`
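Written as a recurrence for a single sequence $s$, a single non-sequence $c$ and a single recurrent output $o$ (symbols introduced here for illustration), the rule above reads:

$$
o_t = \mathrm{fn}(s_t,\; o_{t-1},\; c), \qquad t = 1, \dots, T,
$$

where $o_0$ is the initial value passed as outputs_info, and scan returns the stacked outputs $[o_1, \dots, o_T]$, which do not include $o_0$.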

Suppose sequences is [1, 2, 3], non_sequences is 10000, outputs_info is 9, and fn is:

```python
def fun(sequence_in, output_in, non_sequence_in):
    return sequence_in + output_in + non_sequence_in
```

scan runs for 3 steps in total:

Step 1:

return 1 + 9 + 10000, i.e. 10010

Step 2:

return 2 + 10010 + 10000, i.e. 20012

Step 3:

return 3 + 20012 + 10000, i.e. 30015
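The three steps above can be reproduced with a minimal plain-Python model of scan's loop, assuming one sequence, one recurrent output and one non-sequence. `scan_sketch` is a hypothetical helper for illustration only; it does not model Theano's taps, multiple outputs or `updates`.

```python
def fun(sequence_in, output_in, non_sequence_in):
    # Same step function as in the example above
    return sequence_in + output_in + non_sequence_in


def scan_sketch(fn, sequence, outputs_info, non_sequence):
    """Minimal model of scan: one sequence, one recurrent output, one non-sequence."""
    results = []
    prev = outputs_info  # o_0: the initial value, not included in the result
    for s_t in sequence:
        # Argument order: sequence element, previous output, non-sequence
        prev = fn(s_t, prev, non_sequence)
        results.append(prev)
    return results


print(scan_sketch(fun, [1, 2, 3], 9, 10000))  # [10010, 20012, 30015]
```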

The code:

```python
import theano
import theano.tensor as tensor
# import theano.scan as scan
from theano.scan_module import scan
import numpy as np

# Single-step function: its parameter order must match the order
# sequences, outputs_info, non_sequences in the scan call below.
def fun(sequence_in, output_in, non_sequence_in):
    return sequence_in + output_in + non_sequence_in

inputs = tensor.ivector()   # sequences: one element consumed per step
outputs = tensor.iscalar()  # outputs_info: initial value of the recurrent output
non_seq = tensor.iscalar()  # non_sequences: same value at every step

result, updates = scan(fn=fun,
                       sequences=inputs,
                       outputs_info=outputs,
                       non_sequences=non_seq)

# Function from the inputs to the output of every step
f = theano.function([inputs, outputs, non_seq], result, allow_input_downcast=True)
print(f([1, 2, 3], 9, 10000))
```

Output:

Code 4: (loops: scan, implementing a simple RNN layer)

```python
import theano
import numpy as np
import theano.tensor as tensor
from theano import scan

# Activation helpers (defined here but not applied in _step below,
# so the recurrence as written is linear)
Identity = lambda x: x
ReLU = lambda x: tensor.maximum(x, 0.0)
Sigmoid = lambda x: tensor.nnet.sigmoid(x)
Tanh = lambda x: tensor.tanh(x)


class SimpleRecurrentLayer():
    def __init__(self, rng, nin, nout, return_sequences):
        self.nin = nin
        self.nout = nout
        self.return_sequences = return_sequences
        # Weights drawn uniformly from [-1, 1]
        w_init = np.asarray(rng.uniform(low=-1, high=1, size=(nin, nout)), dtype='float32')
        u_init = np.asarray(rng.uniform(low=-1, high=1, size=(nout, nout)), dtype='float32')
        b_init = np.asarray(rng.uniform(low=-1, high=1, size=(nout,)), dtype='float32')
        self.w = theano.shared(w_init, borrow=True)  # input -> hidden
        self.u = theano.shared(u_init, borrow=True)  # hidden -> hidden
        self.b = theano.shared(b_init, borrow=True)  # bias

    def _step(self, x_t, h_t, w, u, b):
        # Argument order: sequence element, previous output, non_sequences
        h_next = x_t.dot(w) + h_t.dot(u) + b
        return h_next

    def feedforward(self, x):
        # assert len(x.shape) == 2
        # x.shape: (time, nin)
        init_h = np.zeros(self.nout)
        results, updates = scan(fn=self._step,
                                sequences=x,
                                outputs_info=init_h,
                                non_sequences=[self.w, self.u, self.b])
        # results.shape: (time, nout)
        return results if self.return_sequences else results[-1]


class BatchRecurrentLayer():
    def __init__(self, rng, nin, nout, batchsize, return_sequences):
        self.nin = nin
        self.nout = nout
        self.batchsize = batchsize
        self.return_sequences = return_sequences
        w_init = np.asarray(rng.uniform(low=-1, high=1, size=(nin, nout)), dtype='float32')
        u_init = np.asarray(rng.uniform(low=-1, high=1, size=(nout, nout)), dtype='float32')
        b_init = np.asarray(rng.uniform(low=-1, high=1, size=(nout,)), dtype='float32')
        self.w = theano.shared(w_init, borrow=True)
        self.u = theano.shared(u_init, borrow=True)
        self.b = theano.shared(b_init, borrow=True)

    def _step(self, x_t, h_t, w, u, b):
        h_next = x_t.dot(w) + h_t.dot(u) + b
        return h_next

    def feedforward(self, x):
        # assert len(x.shape) == 3
        # before shuffle, x.shape: (batchsize, time, nin)
        x = x.dimshuffle(1, 0, 2)
        # after shuffle, x.shape: (time, batchsize, nin), so scan iterates over time
        init_h = np.zeros((self.batchsize, self.nout))
        results, updates = scan(fn=self._step,
                                sequences=x,
                                outputs_info=init_h,
                                non_sequences=[self.w, self.u, self.b])
        if self.return_sequences:
            return results.dimshuffle(1, 0, 2)  # (batchsize, time, nout)
        else:
            return results[-1]  # (batchsize, nout)


if __name__ == '__main__':
    rng = np.random.RandomState(seed=42)
    nin = 3
    nout = 4

    # test SimpleRecurrentLayer
    x = tensor.fmatrix('x')
    srnn = SimpleRecurrentLayer(rng, nin, nout, True)
    sout = srnn.feedforward(x)
    fs = theano.function([x], sout)
    xv = np.ones((8, 3), dtype='float32')  # time 8, nin 3
    print(fs(xv))
    print(fs(xv).shape)  # (8, 4)

    # test BatchRecurrentLayer
    batchsize = 4
    y = tensor.tensor3('y')  # 3-dimensional tensor
    brnn = BatchRecurrentLayer(rng, nin, nout, batchsize, True)
    bout = brnn.feedforward(y)
    fb = theano.function([y], bout)
    yv = np.ones((batchsize, 8, 3), dtype='float32')
    print(fb(yv))
    print(fb(yv).shape)  # (4, 8, 4)
```

Output:
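The following plain-Python sketch (no Theano or NumPy) mirrors the forward pass of Code 4 and makes the output shapes easy to check. It assumes the linear update from `_step`, `h_next = x_t·W + h_t·U + b`, starting from an all-zero state; `swap_axes01` stands in for `dimshuffle(1, 0, 2)`. All helper names are hypothetical, and the random weights come from Python's `random` module, so the numbers will not match those produced with `np.random.RandomState(42)`.

```python
import random


def row_times_matrix(x, m):
    # Row vector x (length n) times matrix m (n x k) -> row vector of length k
    return [sum(x[i] * m[i][j] for i in range(len(x))) for j in range(len(m[0]))]


def step(x_t, h_t, w, u, b):
    # Same linear update as _step: h_next = x_t.dot(w) + h_t.dot(u) + b
    xw = row_times_matrix(x_t, w)
    hu = row_times_matrix(h_t, u)
    return [xw[j] + hu[j] + b[j] for j in range(len(b))]


def swap_axes01(t):
    # Analogue of dimshuffle(1, 0, 2): shape (A, B, C) -> (B, A, C)
    return [[t[i][j] for i in range(len(t))] for j in range(len(t[0]))]


def simple_rnn(xs, w, u, b, return_sequences=True):
    # xs: (time, nin); returns (time, nout), or only the last state (nout,)
    h = [0.0] * len(b)  # like init_h = np.zeros(nout)
    hs = []
    for x_t in xs:
        h = step(x_t, h, w, u, b)
        hs.append(h)
    return hs if return_sequences else hs[-1]


def batch_rnn(batch, w, u, b, return_sequences=True):
    # batch: (batchsize, time, nin)
    xs = swap_axes01(batch)              # -> (time, batchsize, nin)
    h = [[0.0] * len(b) for _ in batch]  # like init_h = np.zeros((batchsize, nout))
    hs = []
    for x_t in xs:                       # x_t: (batchsize, nin)
        h = [step(x_row, h_row, w, u, b) for x_row, h_row in zip(x_t, h)]
        hs.append(h)                     # hs: (time, batchsize, nout)
    return swap_axes01(hs) if return_sequences else hs[-1]


def uniform_matrix(rng, rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(42)
nin, nout, time, batchsize = 3, 4, 8, 4
w = uniform_matrix(rng, nin, nout)
u = uniform_matrix(rng, nout, nout)
b = [rng.uniform(-1, 1) for _ in range(nout)]

out = simple_rnn([[1.0] * nin for _ in range(time)], w, u, b)
print(len(out), len(out[0]))                     # 8 4
bout = batch_rnn([[[1.0] * nin for _ in range(time)] for _ in range(batchsize)], w, u, b)
print(len(bout), len(bout[0]), len(bout[0][0]))  # 4 8 4
```

Because the rows of a batch never interact inside `step`, the batched loop gives exactly the same result as running `simple_rnn` on each sequence separately; the `dimshuffle` in BatchRecurrentLayer only serves to make time the leading axis, which is the axis scan iterates over.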

References:

https://zhuanlan.zhihu.com/p/24282760

Posted on 2024-02-12 10:44 by Angry_Panda

