Crawling desktop wallpaper images with the Scrapy framework

Target data: every image in every album across the 19 pages of the [Scenery] [1920*1080] category of ZOL desktop wallpapers (desk.zol.com.cn).

items.py

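```python
import scrapy


class Zol2Item(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()

    # URL of the full-size image (the spider stores a single string here)
    image_urls = scrapy.Field()
    # Filled in by ImagesPipeline with the download results
    images = scrapy.Field()

    # Used by the pipeline as the saved file name
    image_title = scrapy.Field()
```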

pipelines.py

```python
from scrapy import Request
from scrapy.pipelines.images import ImagesPipeline


class Zol2Pipeline(ImagesPipeline):
    # The class name must match the entry in ITEM_PIPELINES
    # ('zol2.pipelines.Zol2Pipeline' in settings.py).

    def get_media_requests(self, item, info):
        # image_urls holds a single URL string (see get_img in the spider),
        # so issue one download request and carry the item along in meta.
        image_url = item["image_urls"]
        if image_url:
            yield Request(url=image_url, meta={"item": item})

    def file_path(self, request, response=None, info=None, *, item=None):
        # Save as <IMAGES_STORE>/desk/<image_title>.jpg instead of the
        # default full/<sha1 of URL>.jpg. Newer Scrapy versions also pass
        # `item` as a keyword argument; the title is read from meta either way.
        return 'desk/{}.jpg'.format(request.meta["item"]["image_title"])
```
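To show why `file_path` is overridden: by default ImagesPipeline names each file after the SHA1 hash of the image URL and stores it under `full/`. The stdlib-only sketch below mimics both naming schemes side by side. `default_image_path` and `custom_image_path` are hypothetical helpers written for illustration, not Scrapy functions, and the sample URL is made up.

```python
import hashlib
import os


def default_image_path(url: str) -> str:
    # Mimics ImagesPipeline's default naming: full/<sha1 hex of the URL>.jpg
    guid = hashlib.sha1(url.encode("utf-8")).hexdigest()
    return "full/{}.jpg".format(guid)


def custom_image_path(image_title: str) -> str:
    # Mimics the overridden file_path in pipelines.py: desk/<image_title>.jpg
    return "desk/{}.jpg".format(image_title)


if __name__ == "__main__":
    images_store = "/home/pyvip/env_spider/zol2/zol2/images"  # IMAGES_STORE from settings.py
    url = "https://example.com/wallpaper.jpg"  # hypothetical image URL
    print(os.path.join(images_store, default_image_path(url)))
    print(os.path.join(images_store, custom_image_path("1")))
```

The hash-based default gives stable names across runs because the same URL always maps to the same file. The counter-based title is only unique within a single crawl, since it restarts at 1 every time the spider starts.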

middlewares.py

```python
# Unmodified `scrapy startproject` template; neither class is enabled in settings.py.
from scrapy import signals
# from zol2.useragents import agents  # module not shown in this post; `agents` is unused here


class Zol2SpiderMiddleware(object):
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the spider middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_spider_input(self, response, spider):
        # Called for each response that goes through the spider
        # middleware and into the spider.

        # Should return None or raise an exception.
        return None

    def process_spider_output(self, response, result, spider):
        # Called with the results returned from the Spider, after
        # it has processed the response.

        # Must return an iterable of Request, dict or Item objects.
        for i in result:
            yield i

    def process_spider_exception(self, response, exception, spider):
        # Called when a spider or process_spider_input() method
        # (from other spider middleware) raises an exception.

        # Should return either None or an iterable of Response, dict
        # or Item objects.
        pass

    def process_start_requests(self, start_requests, spider):
        # Called with the start requests of the spider, and works
        # similarly to the process_spider_output() method, except
        # that it doesn’t have a response associated.

        # Must return only requests (not items).
        for r in start_requests:
            yield r

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)


class Zol2DownloaderMiddleware(object):
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the downloader middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_request(self, request, spider):
        # Called for each request that goes through the downloader
        # middleware.

        # Must either:
        # - return None: continue processing this request
        # - or return a Response object
        # - or return a Request object
        # - or raise IgnoreRequest: process_exception() methods of
        #   installed downloader middleware will be called
        return None

    def process_response(self, request, response, spider):
        # Called with the response returned from the downloader.

        # Must either:
        # - return a Response object
        # - return a Request object
        # - or raise IgnoreRequest
        return response

    def process_exception(self, request, exception, spider):
        # Called when a download handler or a process_request()
        # (from other downloader middleware) raises an exception.

        # Must either:
        # - return None: continue processing this exception
        # - return a Response object: stops process_exception() chain
        # - return a Request object: stops process_exception() chain
        pass

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)
```
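middlewares.py refers to `agents` from a `zol2.useragents` module that is not shown, and nothing in the file uses it. If the intent was to rotate User-Agent headers, a minimal downloader middleware might look like the sketch below. It is hypothetical: the class name `RandomUserAgentMiddleware`, the sample UA strings and the settings entry are assumptions, not part of the original project. Scrapy downloader middlewares are plain classes built with `cls()` when they have no `from_crawler`, and `request.headers` supports item assignment, so no Scrapy import is needed.

```python
import random


class RandomUserAgentMiddleware:
    """Hypothetical downloader middleware: sets a random User-Agent on each request."""

    # Assumed stand-in for the `agents` list in zol2/useragents.py (not shown in the post)
    AGENTS = [
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/73.0.3683.75 Safari/537.36",
        "Mozilla/5.0 (X11; Linux x86_64; rv:66.0) Gecko/20100101 Firefox/66.0",
    ]

    def __init__(self, agents=None):
        # Use the supplied list if given, otherwise the sample list above
        self.agents = list(agents) if agents else list(self.AGENTS)

    def process_request(self, request, spider):
        # Overwrite the header unconditionally before the request is downloaded
        request.headers["User-Agent"] = random.choice(self.agents)
        # Returning None lets Scrapy keep processing the request normally
        return None


# To enable it (assuming the class is placed in zol2/middlewares.py), settings.py would need:
# DOWNLOADER_MIDDLEWARES = {'zol2.middlewares.RandomUserAgentMiddleware': 543}
# Priority 543 runs after the built-in UserAgentMiddleware (500), so this
# assignment replaces the USER_AGENT value that middleware sets.
```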

settings.py

```python
# -*- coding: utf-8 -*-

# Scrapy settings for zol2 project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://doc.scrapy.org/en/latest/topics/settings.html
#     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'zol2'

SPIDER_MODULES = ['zol2.spiders']
NEWSPIDER_MODULE = 'zol2.spiders'


# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.75 Safari/537.36'

# Obey robots.txt rules
# (commented out here, so Scrapy's built-in default of False applies)
# ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
DOWNLOAD_DELAY = 0.5
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
#DEFAULT_REQUEST_HEADERS = {
#   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#   'Accept-Language': 'en',
#}

# Enable or disable spider middlewares
# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'zol2.middlewares.Zol2SpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'zol2.middlewares.Zol2DownloaderMiddleware': 543,
#}

# Enable or disable extensions
# See https://doc.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'zol2.pipelines.Zol2Pipeline': 300,
}
# Root folder for ImagesPipeline output (ImagesPipeline requires Pillow)
IMAGES_STORE = "/home/pyvip/env_spider/zol2/zol2/images"

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
```
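DOWNLOAD_DELAY is set to 0.5. Scrapy's default RANDOMIZE_DOWNLOAD_DELAY = True means the actual wait between requests to the same site is drawn uniformly from 0.5× to 1.5× that value. The stdlib sketch below reproduces that calculation. `effective_delay_range` and `sample_delay` are illustrative helpers, not Scrapy APIs.

```python
import random


def effective_delay_range(download_delay: float) -> tuple:
    # RANDOMIZE_DOWNLOAD_DELAY (default True) scales the delay by a factor in [0.5, 1.5]
    return (0.5 * download_delay, 1.5 * download_delay)


def sample_delay(download_delay: float, rng: random.Random) -> float:
    # Draw one randomized delay, the way each request's wait is picked
    low, high = effective_delay_range(download_delay)
    return rng.uniform(low, high)


if __name__ == "__main__":
    print(effective_delay_range(0.5))  # (0.25, 0.75)
    rng = random.Random(0)
    print([round(sample_delay(0.5, rng), 3) for _ in range(5)])
```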

pazol2.py

```python
# -*- coding: utf-8 -*-
import scrapy
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule
from zol2.items import Zol2Item


class Pazol2Spider(CrawlSpider):
    name = 'pazol2'
    # allowed_domains = ['desk.zol.com.cn']
    start_urls = ['http://desk.zol.com.cn/fengjing/1920x1080/']
    front_url = "http://desk.zol.com.cn"
    num = 1  # running counter used as the image file name

    rules = (
        # 1. Handle pagination (list pages up to 19); no callback needed, just follow
        Rule(LinkExtractor(allow=r'/fengjing/1920x1080/[0-1]?[0-9]?\.html'), follow=True),
        # 2. Enter every image page of each album
        Rule(
            LinkExtractor(
                allow=r'/bizhi/\d+_\d+_\d+\.html',
                restrict_xpaths=(
                    "//div[@class='main']/ul[@class='pic-list2 clearfix']/li",
                    "//div[@class='photo-list-box']",
                ),
            ),
            follow=True,
        ),
        # 3. Follow each image's 1920*1080 button to reach the page holding the full-size image
        Rule(LinkExtractor(allow=r'/showpic/1920x1080_\d+_\d+\.html'), callback='get_img', follow=True),
    )

    def get_img(self, response):
        item = Zol2Item()
        # The 1920x1080 page contains just the image as the first <img> in <body>
        item['image_urls'] = response.xpath("//body/img[1]/@src").extract_first()
        item['image_title'] = str(self.num)
        self.num += 1
        yield item
```
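You can try the three `allow` patterns with Python's `re` module to see which URLs each Rule picks up. LinkExtractor keeps a link when an `allow` regex is found anywhere in its absolute URL (a search, not a full match), and CrawlSpider hands a link to the first rule that extracts it. The sketch checks the patterns in rule order. It does not model `restrict_xpaths`, which further limits where on the page links are looked for. The sample URLs are made up to fit the patterns and are not taken from the site.

```python
import re

# allow patterns from the three Rules in pazol2.py (dots escaped)
PAGE_RE = re.compile(r'/fengjing/1920x1080/[0-1]?[0-9]?\.html')
ALBUM_IMAGE_RE = re.compile(r'/bizhi/\d+_\d+_\d+\.html')
SHOWPIC_RE = re.compile(r'/showpic/1920x1080_\d+_\d+\.html')


def classify(url: str) -> str:
    # Check the patterns in rule order, using re.search like LinkExtractor does
    if PAGE_RE.search(url):
        return "list page"
    if ALBUM_IMAGE_RE.search(url):
        return "album image page"
    if SHOWPIC_RE.search(url):
        return "full-size image page"
    return "ignored"


if __name__ == "__main__":
    samples = [  # hypothetical URLs shaped like the patterns
        "http://desk.zol.com.cn/fengjing/1920x1080/2.html",
        "http://desk.zol.com.cn/fengjing/1920x1080/20.html",
        "http://desk.zol.com.cn/bizhi/1234_56789_2.html",
        "http://desk.zol.com.cn/showpic/1920x1080_56789_2.html",
    ]
    for u in samples:
        print(classify(u), u)
```

The page pattern accepts at most two digits with a leading 0 or 1, so a link like `20.html` is ignored. This is how the pagination rule stays within the first 19 pages.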

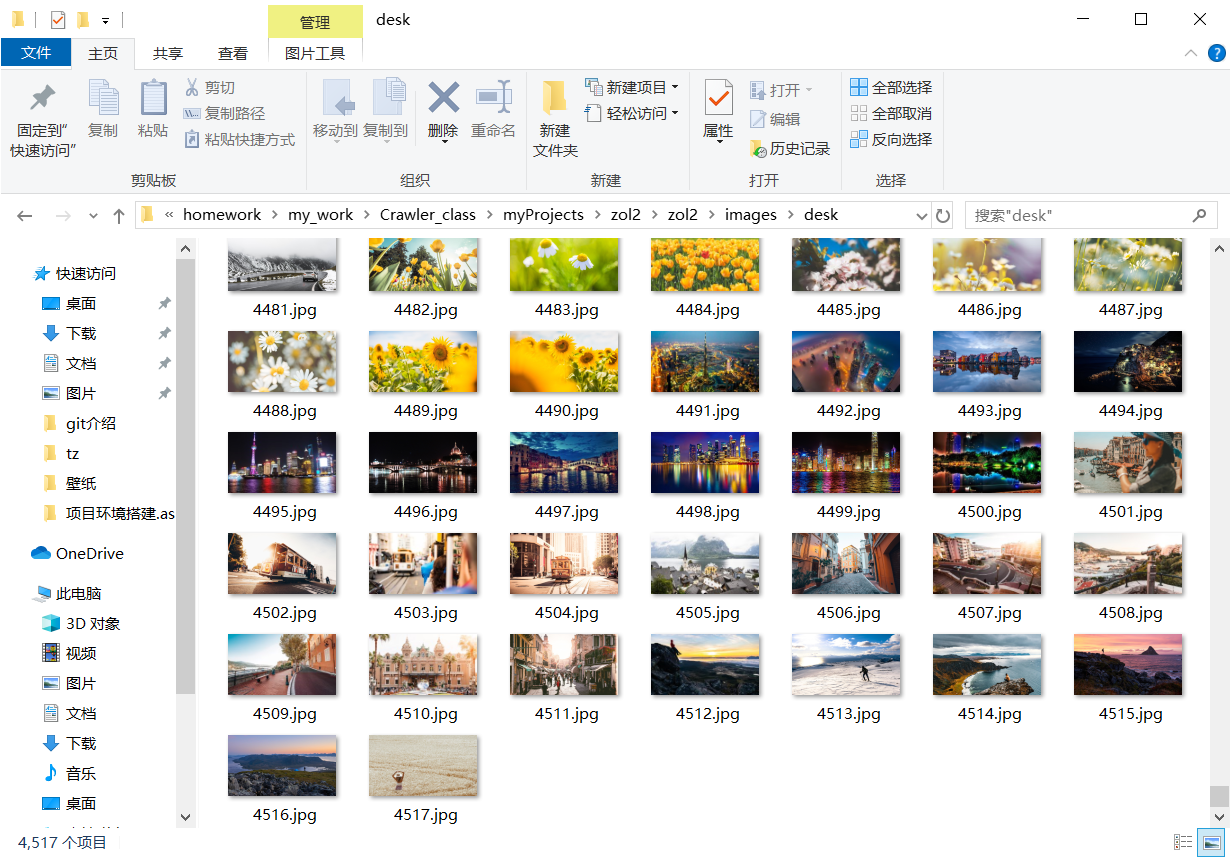

Crawl results

In total, 4,517 images were crawled in 108 minutes.
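As a rough check on that figure, 108 minutes for 4,517 images is about 1.4 seconds per image, or roughly 42 images per minute. Both inputs come from the post. Each image also requires fetching its album page and its 1920x1080 page, so this is not a per-request rate.

```python
def crawl_rate(images: int, minutes: float) -> tuple:
    # Returns (average seconds per image, images per minute)
    seconds = minutes * 60
    return seconds / images, images / minutes


if __name__ == "__main__":
    per_image, per_minute = crawl_rate(4517, 108)
    print("{:.2f} s per image, {:.1f} images per minute".format(per_image, per_minute))
    # prints "1.43 s per image, 41.8 images per minute"
```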

I put them in my desktop wallpaper folder, set to switch to a new one every half hour. Very satisfying.

浙公网安备 33010602011771号
