第五次作业

-

作业①:

-

要求:

- 熟练掌握 Selenium 查找HTML元素、爬取Ajax网页数据、等待HTML元素等内容。

- 使用Selenium框架爬取京东商城某类商品信息及图片。

-

候选网站:http://www.jd.com/

-

关键词:学生自由选择

-

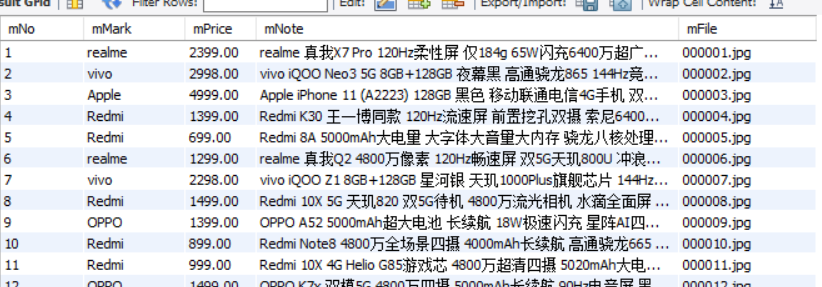

输出信息:MYSQL的输出信息如下

mNo mMark mPrice mNote mFile 000001 三星Galaxy 9199.00 三星Galaxy Note20 Ultra 5G... 000001.jpg 000002......

-

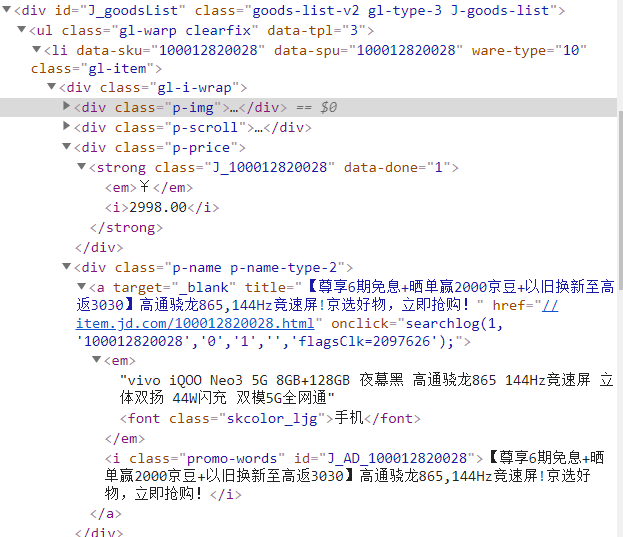

网页代码块:

代码复现:

from selenium import webdriver from selenium.webdriver.chrome.options import Options import urllib.request import threading import sqlite3 import os import datetime from selenium.webdriver.common.keys import Keys import time class MySpider: header = { "User-Agent": "Mozilla/5.0(Windows;U;Windows NT 6.0 x64;en-US;rv:1.9pre)Gecko/2008072531 Minefield/3.0.2pre" } imagepath = "download" def startUp(self, url, key): # 驱动chrome爬取下载数据 chrome_options = Options() chrome_options.add_argument("——headless") chrome_options.add_argument("——disable-gpu") self.driver = webdriver.Chrome(chrome_options=chrome_options) # self.driver = webdriver.Chrome(r'D:\Download\chromedriver.exe') self.threads = [] self.No = 0 self.imgNo = 0 # 连接数据库 try: self.con = sqlite3.connect("phones.db") self.cursor = self.con.cursor() try: self.cursor.execute("drop table phones") #删除同名表 except: pass try: sql = "create table phones(mNo varchar(32) primary key,mMark varchar(256),mPrice varchar(32),mNote varchar(1024),mFile varchar(256))" self.cursor.execute(sql) #创建表用于存储数据 except: pass except Exception as err: print(err) try: if not os.path.exists(MySpider.imagepath): os.mkdir(MySpider.imagepath) #创建文件夹保存下载的图片 images = os.listdir(MySpider.imagepath) for image in images: s = os.path.join(MySpider.imagepath, image) os.remove(s) except Exception as err: print(err) self.driver.get(url) keyInput = self.driver.find_element_by_id("key") #获取搜索点,输入关键词 keyInput.send_keys(key) keyInput.send_keys(Keys.ENTER) def closeUp(self): try: self.con.commit() self.con.close() self.driver.close() except Exception as err: print(err) def insertDB(self, mNo, mMark, mPrice, mNote, mFile): #数据插入 try: sql = "insert into phones (mNo,mMark,mPrice,mNote,mFile) values (?,?,?,?,?)" self.cursor.execute(sql, (mNo, mMark, mPrice, mNote, mFile)) except Exception as err: print(err) def showDB(self): #数据显示 try: con = sqlite3.connect("phones.db") cursor = con.cursor() print("%-8s%-16s%-8s%-16s%s" % ("No", "Mark", "Price", "Image", "Note")) cursor.execute("select mNO,mMark,mPrice,mFile,mNote from phones order by mNo") rows = cursor.fetchall() for row in rows: print("%-8s%-16s%-8s%-16s%s" % (row[0], row[1], row[2], row[3], row[4])) con.close() except Exception as err: print(err) def downloadDB(self, src1, src2, mFile): data = None #获取下载保存图片 if src1: try: req = urllib.request.Request(src1, headers=MySpider.header) resp = urllib.request.urlopen(req, timeout=10) data = resp.read() except: pass if not data and src2: try: req = urllib.request.Request(src2, headers=MySpider.header) resp = urllib.request.urlopen(req, timeout=10) data = resp.read() except: pass if data: print("download begin!", mFile) fobj = open(MySpider.imagepath + "\\" + mFile, "wb") fobj.write(data) fobj.close() print("download finish!", mFile) def processSpider(self): time.sleep(1) try: print(self.driver.current_url) lis = self.driver.find_elements_by_xpath("//div[@id='J_goodsList']//li[@class='gl-item']") for li in lis: try: src1 = li.find_element_by_xpath(".//div[@class='p-img']//a//img").get_attribute("src") except: src1 = "" try: src2 = li.find_element_by_xpath(".//div[@class='p-img']//a//img").get_attribute("data-lazy-img") except: src2 = "" try: price = li.find_element_by_xpath(".//div[@class='p-price']//i").text except: price = "0" note = li.find_element_by_xpath(".//div[@class='p-name p-name-type-2']//em").text mark = note.split(" ")[0] mark = mark.replace("爱心东东\n", "") mark = mark.replace(",", "") note = note.replace("爱心东东\n", "") note = note.replace(",", "") time.sleep(1) self.No = self.No + 1 no = str(self.No) #图片编号 while len(no) < 6: no = "0" + no print(no, mark, price) if src1: src1 = urllib.request.urljoin(self.driver.current_url, src1) p = src1.rfind(".") mFile = no + src1[p:] elif src2: src2 = urllib.request.urljoin(self.driver.current_url, src2) p = src2.rfind(".") mFile = no + src2[p:] if src1 or src2: T = threading.Thread(target=self.downloadDB, args=(src1, src2, mFile)) T.setDaemon(False) T.start() self.threads.append(T) else: mFile = "" self.insertDB(no, mark, price, note, mFile) try: self.driver.find_element_by_xpath("//span[@class='p-num']//a[@class='pn-next disabled']") except: nextPage = self.driver.find_element_by_xpath("//span[@class='p-num']//a[@class='pn-next']") time.sleep(10) nextPage.click() self.processSpider() except Exception as err: print(err) def executeSpider(self, url,key): #进程提示 starttime = datetime.datetime.now() print("Spider starting......") self.startUp(url, key) print("Spider processing......") self.processSpider() print("Spider closing......") self.closeUp() for t in self.threads: t.join() print("Spider complete......") endtime = datetime.datetime.now() elapsed = (endtime - starttime).seconds print("Total", elapsed, "seconds elasped") url = "https://www.jd.com" spider = MySpider() while True: print("1.爬取") print("2.显示") print("3.退出") s = input("请选择(1,2,3);") if s == "1": MySpider().executeSpider(url,'手机') continue elif s == "2": MySpider().showDB() continue elif s == "3": break

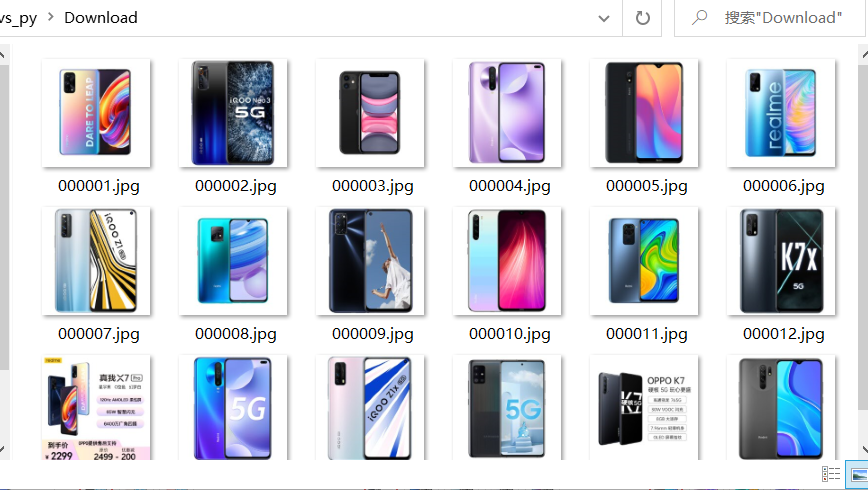

实验结果:

图片下载:

数据库记录:

心得体会:

复现ppt上的代码,初次使用selenium框架,在理解的前提下进行修改。

虽然只是复现,但出现了许多问题,最终也逐一而解。

-

作业②

-

要求:

- 熟练掌握 Selenium 查找HTML元素、爬取Ajax网页数据、等待HTML元素等内容。

- 使用Selenium框架+ MySQL数据库存储技术路线爬取“沪深A股”、“上证A股”、“深证A股”3个板块的股票数据信息。

-

候选网站:东方财富网:http://quote.eastmoney.com/center/gridlist.html#hs_a_board

-

输出信息:MYSQL数据库存储和输出格式如下,表头应是英文命名例如:序号id,股票代码:bStockNo……,由同学们自行定义设计表头:

序号 股票代码 股票名称 最新报价 涨跌幅 涨跌额 成交量 成交额 振幅 最高 最低 今开 昨收 1 688093 N世华 28.47 62.22% 10.92 26.13万 7.6亿 22.34 32.0 28.08 30.2 17.55 2......

-

网页代码块:

代码实现:

from selenium import webdriver from selenium.webdriver.chrome.options import Options import urllib.request import threading import sqlite3 import os import datetime from selenium.webdriver.common.keys import Keys import time class MySpider: header = { "User-Agent": "Mozilla/5.0(Windows;U;Windows NT 6.0 x64;en-US;rv:1.9pre)Gecko/2008072531 Minefield/3.0.2pre" } def startUp(self, url, key): # 驱动chrome爬取下载数据 chrome_options = Options() chrome_options.add_argument("——headless") chrome_options.add_argument("——disable-gpu") self.driver = webdriver.Chrome(chrome_options=chrome_options) self.count = 0 # 连接数据库 try: self.con = sqlite3.connect("xy669.db") self.cursor = self.con.cursor() try: self.cursor.execute("drop table stocks") #删除同名表 except: pass try: sql = "create table stocks(number varchar(32),code varchar(32),name varchar(32),new varchar(32),udr varchar(32),udp varchar(32),deal_h varchar(32),deal_num varchar(32),today varchar(32),yest varchar(32))" self.cursor.execute(sql) #创建表用于存储数据 except: pass except Exception as err: print(err) def closeUp(self): try: self.con.commit() self.con.close() self.driver.close() except Exception as err: print(err) def insertDB(self, number,code,name,new,udr,udp,deal_h,deal_num,today,yest): #数据插入 try: sql = "insert into phones (number,code,name,new,udr,udp,deal_h,deal_num,today,yest) values (?,?,?,?,?,?,?,?,?,?)" self.cursor.execute(sql, (number,code,name,new,udr,udp,deal_h,deal_num,today,yest)) except Exception as err: print(err) # def showDB(self): # #数据显示 # try: # con = sqlite3.connect("xy669.db") # cursor = con.cursor() # print("%-8s%-16s%-8s%-16s%-8s%-16s%-8s%-16s%-8s%-16s%s" % ("number","code","name","new","udr","udp","deal_h","deal_num","today","yest")) # cursor.execute("select number,code,name,new,udr,udp,deal_h,deal_num,today,yest from stocks order by number") # rows = cursor.fetchall() # for row in rows: # print("%-8s%-16s%-8s%-16s%-8s%-16s%-8s%-16s%-8s%-16s%s" % (row[0], row[1], row[2], row[3], row[4], row[5], row[6], row[7], row[8], row[9])) # con.close() # except Exception as err: # print(err) def processSpider(self): time.sleep(1) try: print(self.driver.current_url) lis = self.driver.find_elements_by_xpath("//div[@id='J_goodsList']/table[@id='table_wrapper-table']/tbody/tr") for li in lis: # try: # number = li.find_element_by_xpath(".//td[position()=1]").text # except: # number = "" try: code = li.find_element_by_xpath(".//td[position()=2]/a").text except: code = "" try: name = li.find_element_by_xpath(".//td[@class='mywidth']/a").text except: name = "" try: new = li.find_element_by_xpath(".//td[position()=5]/span").text except: new = "" try: udr = li.find_element_by_xpath(".//td[position()=6]/span").text except: udr = "" try: udp = li.find_element_by_xpath(".//td[position()=7]/span").text except: udp = "" try: deal_h = li.find_element_by_xpath(".//td[position()=8]").text except: deal_h = "" try: deal_num = li.find_element_by_xpath(".//td[position()=9]").text except: deal_num = "" try: today = li.find_element_by_xpath(".//td[position()=13]/span").text except: today = "" try: yest = li.find_element_by_xpath(".//td[position()=14]").text except: yest = "" self.count = self.count + 1 number = str(self.count) self.insertDB(number,code,name,new,udr,udp,deal_h,deal_num,today,yest) except Exception as err: print(err) def executeSpider(self, url): starttime = datetime.datetime.now() print("Spider starting......") self.startUp(url) print("Spider processing......") self.processSpider() print("Spider closing......") self.closeUp() print("Spider completed......") endtime = datetime.datetime.now() elapsed = (endtime - starttime).seconds print("Total ", elapsed, " seconds elapsed") url = "http://quote.eastmoney.com/center/gridlist.html#sh_a_board" spider = MySpider() while True: print("1.爬取") print("2.显示") print("3.退出") s = input("请选择(1,2,3);") if s == "1": MySpider().executeSpider(url) continue # elif s == "2": # MySpider().showDB() # continue # elif s == "3": # break else: break

实验结果:

心得体会:

这个实验是运用框架进行的,修改参数和爬取内容。

在理解了第一题的基础上,相对顺利。

-

作业③:

-

要求:

- 熟练掌握 Selenium 查找HTML元素、实现用户模拟登录、爬取Ajax网页数据、等待HTML元素等内容。

- 使用Selenium框架+MySQL爬取中国mooc网课程资源信息(课程号、课程名称、学校名称、主讲教师、团队成员、参加人数、课程进度、课程简介)

-

候选网站:中国mooc网:https://www.icourse163.org

-

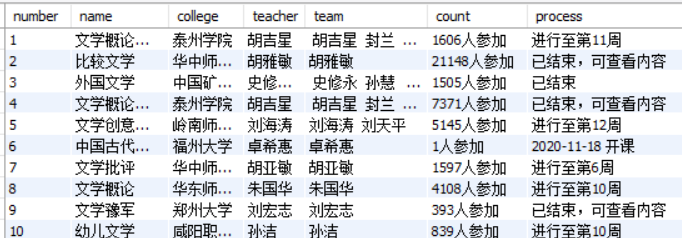

输出信息:MYSQL数据库存储和输出格式

Id cCourse cCollege cTeacher cTeam cCount cProcess cBrief 1 Python数据分析与展示 北京理工大学 嵩天 嵩天 470 2020年11月17日 ~ 2020年12月29日 “我们正步入一个数据或许比软件更重要的新时代。——Tim O'Reilly” 运用数据是精准刻画事物、呈现发展规律的主要手段,分析数据展示规律,把思想变得更精细! ——“弹指之间·享受创新”,通过8周学习,你将掌握利用Python语言表示、清洗、统计和展示数据的能力。 2......

-

网页代码块:

代码实现:

from selenium import webdriver from selenium.webdriver.chrome.options import Options import urllib.request import threading import sqlite3 import os import datetime from selenium.webdriver.common.keys import Keys import time class MySpider: header = { "User-Agent": "Mozilla/5.0(Windows;U;Windows NT 6.0 x64;en-US;rv:1.9pre)Gecko/2008072531 Minefield/3.0.2pre" } def startUp(self, url, key): # 驱动chrome爬取下载数据 chrome_options = Options() chrome_options.add_argument("——headless") chrome_options.add_argument("——disable-gpu") self.driver = webdriver.Chrome(chrome_options=chrome_options) self.num = 0 # 连接数据库 try: self.con = sqlite3.connect("xy669.db") self.cursor = self.con.cursor() try: self.cursor.execute("drop table courses") #删除同名表 except: pass try: sql = "create table courses(number varchar(64),name varchar(64),college varchar(64),teacher varchar(64),team varchar(64),count varchar(64),process varchar(64),brief varchar(512))" self.cursor.execute(sql) #创建表用于存储数据 except: pass except Exception as err: print(err) def closeUp(self): try: self.con.commit() self.con.close() self.driver.close() except Exception as err: print(err) def insertDB(self, ): #数据插入 try: sql = "insert into courses (number,name,college,teacher,team,count,process,brief) values (?,?,?,?,?,?,?,?)" self.cursor.execute(sql, (number,name,college,teacher,team,count,process,brief)) except Exception as err: print(err) # def showDB(self): # #数据显示 # try: # con = sqlite3.connect("xy669.db") # cursor = con.cursor() # print("%-8s%-16s%-8s%-16s%-8s%-16s%-8s%-16s%s" % (number,name,college,teacher,team,count,process,brief)) # cursor.execute("select number,name,college,teacher,team,count,process from stocks order by number") # rows = cursor.fetchall() # for row in rows: # print("%-8s%-16s%-8s%-16s%-8s%-16s%-8s%-16s%s" % (row[0], row[1], row[2], row[3], row[4], row[5], row[6], row[7])) # con.close() # except Exception as err: # print(err) def processSpider(self): time.sleep(1) try: print(self.driver.current_url) divs = self.driver.find_elements_by_xpath("//div[@class='m-course-list']/div/div") #不同的课程块 for div in divs: lis = div.find_element_by_xpath(".//div[@class='cnt f-pr']/a") #课程的文本信息块 # imgs = div.find_element_by_xpath(".//div[@class='u-img f-fl']") try: name= li.find_element_by_xpath(".//span[@class='u-course-name f-thide']").text except: name = "" try: # college = li.find_element_by_xpath(".//a[@class='t21 f-fc9']").text college = li.find_element_by_xpath(".//div[@class='t2 f-fc3 f-nowrp f-f0']/a[position()=1]").text except: colloge = "" try: teacher = li.find_element_by_xpath(".//div[@class='t2 f-fc3 f-nowrp f-f0']/a[position()=2]").text except: teacher = "" try: team = li.find_element_by_xpath(".//span[@class='f-fc9']/a").text # team = teacher + team except: team = "" try: count = li.find_element_by_xpath(".//span[@class='hot']").text except: count = "" try: process = li.find_element_by_xpath(".//span[@class='txt']").text except: process = "" try: brief = li.find_element_by_xpath(".//span[@class='p5 brief f-ib f-f0 f-cb']").text except: brief = "" self.num = self.num + 1 number = str(self.num) self.insertDB(number,name,college,teacher,team,count,process,brief) except Exception as err: print(err) def executeSpider(self, url): starttime = datetime.datetime.now() print("Spider starting......") self.startUp(url) print("Spider processing......") self.processSpider() print("Spider closing......") self.closeUp() print("Spider completed......") endtime = datetime.datetime.now() elapsed = (endtime - starttime).seconds print("Total ", elapsed, " seconds elapsed") url = "https://www.icourse163.org/search.htm?search=%E6%96%87%E5%AD%A6#/" spider = MySpider() while True: print("1.爬取") print("2.显示") print("3.退出") s = input("请选择(1,2,3);") if s == "1": MySpider().executeSpider(url) continue # elif s == "2": # MySpider().showDB() # continue # elif s == "3": # break else: break

实验结果:

心得体会:

过程与第二题相似,但有些标签的内容同类但结构不同,这点要注意一下。