Hadoop Cluster Deployment

Environment preparation for the Hadoop cluster

3 cloud hosts running CentOS 6.9 (64-bit)

hadoop-2.6.0-cdh5.7.0.tar.gz

jdk-8u45-linux-x64.gz

zookeeper-3.4.6.tar.gz

For local virtual machines, we use NAT (internal network) mode.

hadoop01 172.16.202.238

hadoop02 172.16.202.239

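hadoop03 172.16.202.239 (this repeats hadoop02's address; every host needs its own unique IP, so use hadoop03's actual address)

(Unless otherwise noted, run each step on all three machines. The hostnames appear both as hadoop01/02/03 and as hadoop001/002/003 in this post; whichever form you choose must be used consistently in /etc/hosts and in every configuration file, and the configuration files below use hadoop001, hadoop002 and hadoop003.)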

Step 1: Configure SSH mutual trust and the hosts file

1. Create the hadoop user

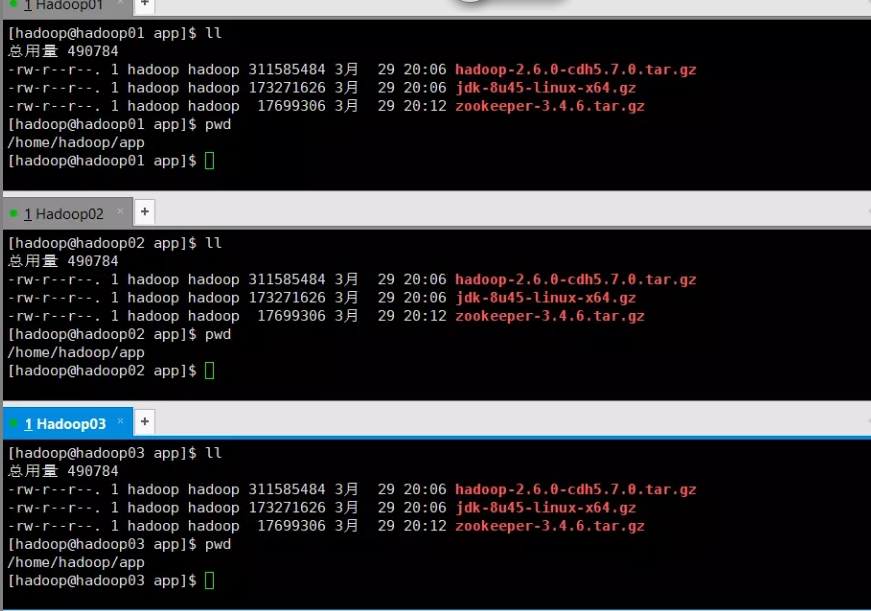

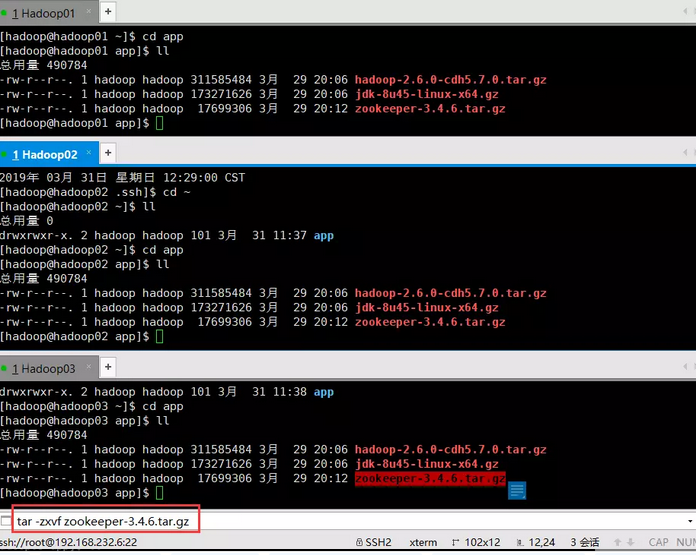

2. Create an app directory in the hadoop user's home directory to hold the installation packages, and upload

hadoop-2.6.0-cdh5.7.0.tar.gz

jdk-8u45-linux-x64.gz

zookeeper-3.4.6.tar.gz

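these three packages with the rz command. On a new machine rz may not be installed; in that case install it first:

run yum -y install lrzsz (as root, from any directory)

Then cd into the app directory and use rz to upload the Hadoop, JDK and ZooKeeper packages.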

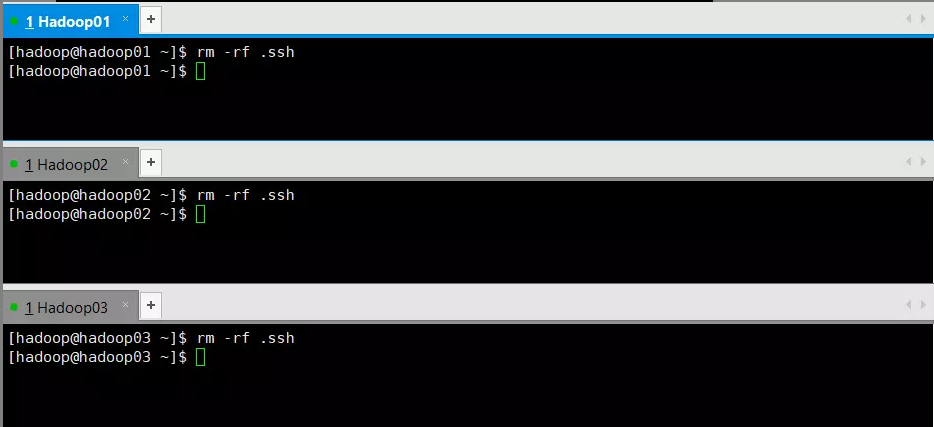

3. First delete the existing .ssh directory in the hadoop user's home directory (skip this if the user was just created).

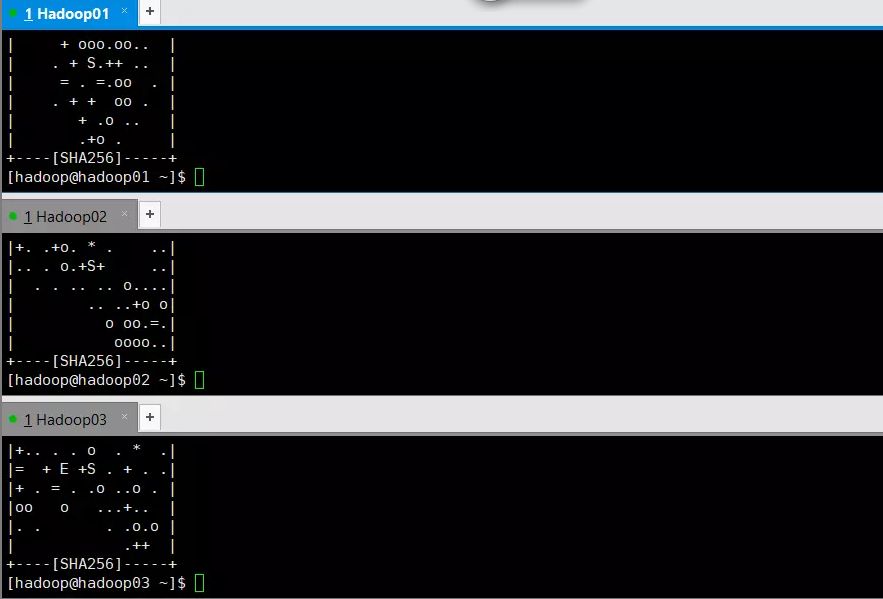

4. Run ssh-keygen and press Enter three times to accept the defaults (no passphrase).

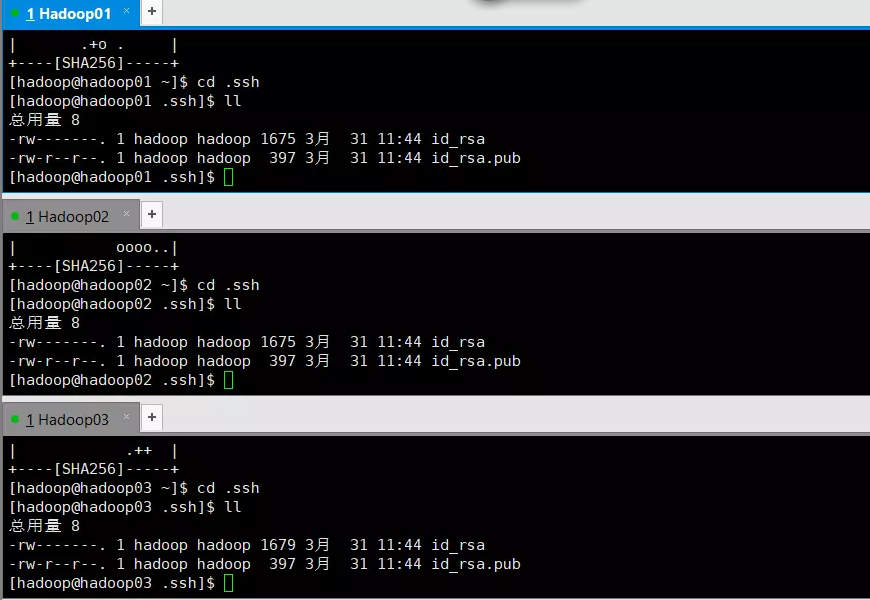

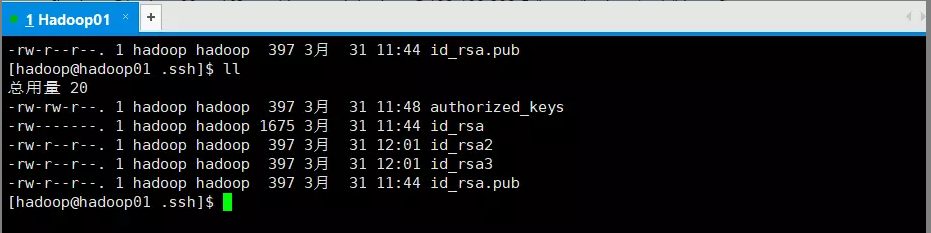

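5. The .ssh directory we just deleted now exists again. cd into it and list its files with ll.

6. Collect the public keys (id_rsa.pub) on one machine: on hadoop01, append its own key to authorized_keys (a file you create in the .ssh directory); on hadoop02 and hadoop03, copy the key to hadoop01 under a new name: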

[hadoop@hadoop01 .ssh]$ cat id_rsa.pub >> authorized_keys

[hadoop@hadoop02 .ssh]$ scp id_rsa.pub root@192.168.232.5:/home/hadoop/.ssh/id_rsa2   # you will be prompted for the cloud host's password; enter it (same for the next command)

[hadoop@hadoop03 .ssh]$ scp id_rsa.pub root@192.168.232.5:/home/hadoop/.ssh/id_rsa3

After these steps, the public keys of hadoop001, hadoop002 and hadoop003 are all in hadoop001's .ssh directory.

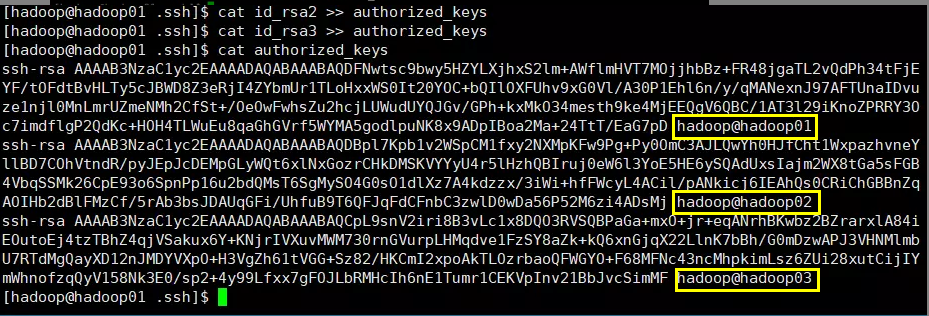

7. On hadoop001, append the newly received id_rsa2 and id_rsa3 to the authorized_keys file:

[hadoop@hadoop01 .ssh]$ cat id_rsa2 >> authorized_keys

[hadoop@hadoop01 .ssh]$ cat id_rsa3 >> authorized_keys

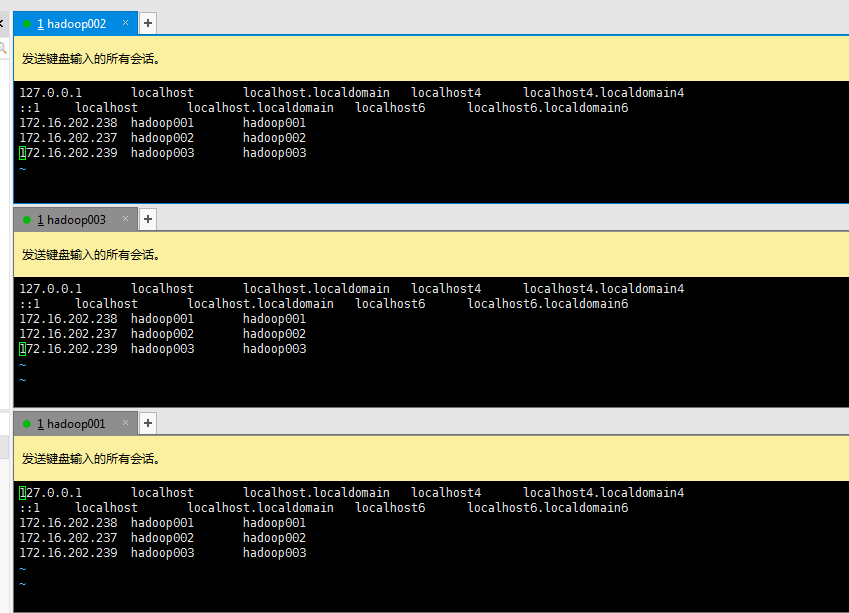
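Steps 6 and 7 boil down to merging public keys into one file. A minimal bash sketch, assuming the collected key files (id_rsa.pub, id_rsa2, id_rsa3) already sit in the given .ssh directory; merge_pubkeys is a hypothetical helper, not part of any tool. Unlike a plain cat >>, it skips keys that are already present, so running it twice does not create duplicates, and it applies the 700/600 modes that sshd expects.

```shell
# merge_pubkeys (hypothetical helper): append public keys to authorized_keys
# without duplicating lines, then apply the permissions sshd requires.
# Usage: merge_pubkeys <ssh_dir> <key_file>...
merge_pubkeys() {
  local ssh_dir=$1; shift
  local auth="$ssh_dir/authorized_keys" key line
  touch "$auth" || return 1
  for key in "$@"; do
    # the extra -n test keeps a last line that lacks a trailing newline
    while IFS= read -r line || [ -n "$line" ]; do
      [ -z "$line" ] && continue
      # -x whole-line match, -F fixed string, -q quiet
      grep -qxF -- "$line" "$auth" || printf '%s\n' "$line" >> "$auth"
    done < "$ssh_dir/$key"
  done
  chmod 700 "$ssh_dir"
  chmod 600 "$auth"
}
```

For example, on hadoop001: merge_pubkeys ~/.ssh id_rsa.pub id_rsa2 id_rsa3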

8. Configure the /etc/hosts file (as root): add the internal IP addresses of hadoop001, hadoop002 and hadoop003.
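A sketch of step 8, assuming the "IP hostname" pairs are passed in explicitly (hadoop03 still needs its real, unique address); add_hosts is a hypothetical helper. It takes the target file as an argument so you can try it on a scratch copy first, and it skips any hostname that already has an entry, which makes it safe to re-run.

```shell
# add_hosts (hypothetical helper): append "IP hostname" entries to a hosts
# file, skipping any hostname that already has an entry.
# Usage: add_hosts <hosts_file> <ip> <name> [<ip> <name>]...
add_hosts() {
  local file=$1; shift
  while [ $# -ge 2 ]; do
    local ip=$1 name=$2
    shift 2
    # match the hostname as a whole whitespace-separated field (not the IP)
    if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                           END { exit !found }' "$file"; then
      printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
  done
}
```

As root, for example: add_hosts /etc/hosts 172.16.202.238 hadoop001 172.16.202.239 hadoop002, plus hadoop003 with its own address.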

9. Copy the authorized_keys file assembled on hadoop001 in step 7 to the .ssh directory on hadoop002 and hadoop003.

On hadoop001, first change into the ~/.ssh directory:

[hadoop@hadoop001 ~]$ cd ~/.ssh

[hadoop@hadoop01 .ssh]$ scp authorized_keys hadoop@hadoop02:/home/hadoop/.ssh/

[hadoop@hadoop01 .ssh]$ scp authorized_keys hadoop@hadoop03:/home/hadoop/.ssh/

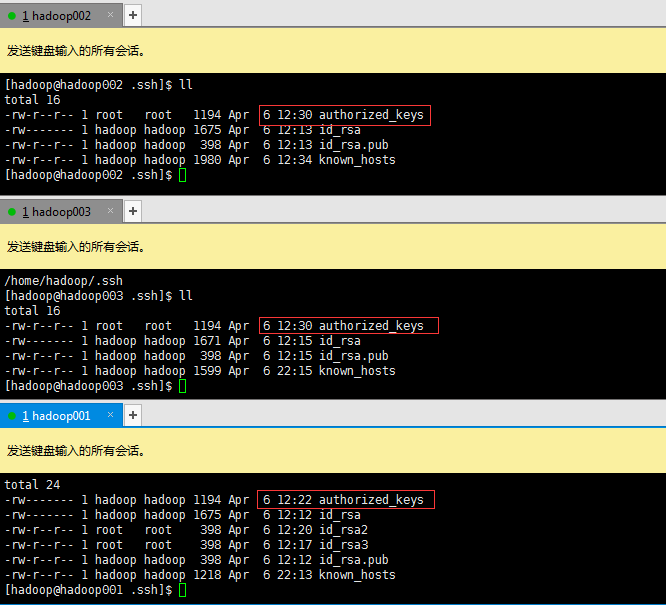

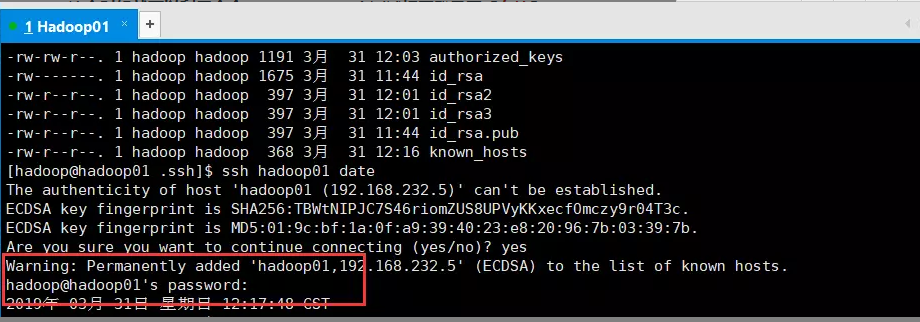

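At this point passwordless login is basically configured, but there may be a 【pitfall】 here. Either way, let's verify it first.

Sure enough, there is a pitfall: ssh asks for a password. The key was generated without a passphrase, so this prompt means key-based login failed. How do we fix it?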

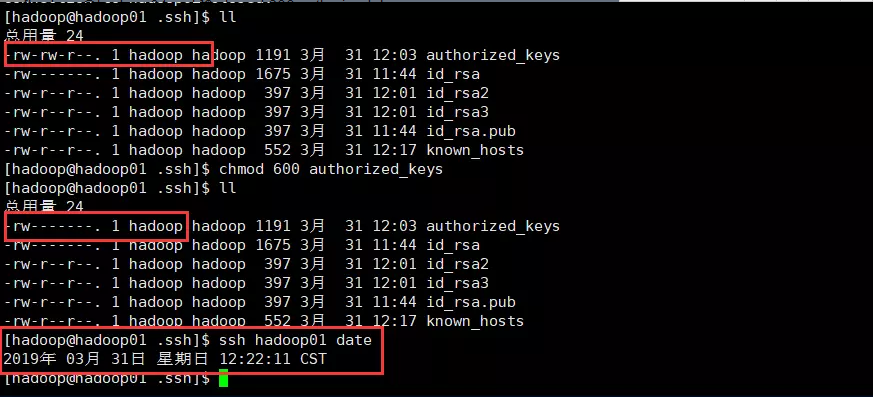

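Our instructor walked us through the single-node setup guide on the Hadoop website; its passwordless SSH section says the permissions of authorized_keys must be 600: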

[hadoop@hadoop01 .ssh]$ chmod 600 authorized_keys

[hadoop@hadoop02 .ssh]$ chmod 600 authorized_keys

[hadoop@hadoop03 .ssh]$ chmod 600 authorized_keys
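Why 600 matters: with its default StrictModes yes setting, sshd ignores authorized_keys when that file, the .ssh directory or the home directory is writable by group or others. On CentOS the default umask for regular users is usually 002, so a freshly created authorized_keys tends to come out as 664, which is enough to trigger the password prompt. A small diagnostic sketch (check_ssh_perms is a hypothetical helper; it assumes GNU stat) that lists the offending paths:

```shell
# check_ssh_perms (hypothetical helper): print every path that sshd's
# StrictModes check would reject because it is writable by group or others.
# Prints nothing when all is well.
# Usage: check_ssh_perms [home_dir]   (defaults to $HOME; needs GNU stat)
check_ssh_perms() {
  local home=${1:-$HOME} p mode
  for p in "$home" "$home/.ssh" "$home/.ssh/authorized_keys"; do
    [ -e "$p" ] || { echo "missing: $p"; continue; }
    mode=$(stat -c %a "$p")
    # octal 022 masks the group-write and other-write bits
    if (( (8#$mode & 8#022) != 0 )); then
      echo "group/other-writable ($mode): $p"
    fi
  done
}
```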

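Then try again.

It works!

Important: once this is configured, every machine must connect once to itself and to each of the other two machines, because the first connection asks you to type yes to accept the host key. If you skip this, the later deployment runs into trouble, since the start scripts connect over ssh and stop at that prompt.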

ssh hadoop001 date

ssh hadoop002 date

ssh hadoop003 date

Again: run all three commands above on every machine.
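The three commands can also be run as a loop. This is a sketch assuming the hadoop001 to hadoop003 names; ssh_all is a hypothetical helper. Run it once on each machine and answer yes at every first-time prompt; it reports any host that could not be reached. BatchMode is deliberately not used here, because in batch mode ssh refuses unknown host keys instead of asking.

```shell
# ssh_all (hypothetical helper): connect once to every node, including this
# one, so each host key is recorded in ~/.ssh/known_hosts.
HOSTS="hadoop001 hadoop002 hadoop003"
ssh_all() {
  local h failed=0
  for h in $HOSTS; do
    if ssh "$h" date; then
      echo "ok: $h"
    else
      echo "FAILED: $h" >&2
      failed=1
    fi
  done
  return "$failed"
}
```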

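Extension: if a host key changes, never empty the whole known_hosts file. Every host would then ask for yes again and the non-interactive ssh connections the cluster relies on would fail, paralyzing the whole distributed system. Instead, find the entry for the changed key in known_hosts and delete only that entry. If you leave it, ssh, which reads known_hosts from top to bottom, keeps matching the stale record, so the old record has to be removed.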

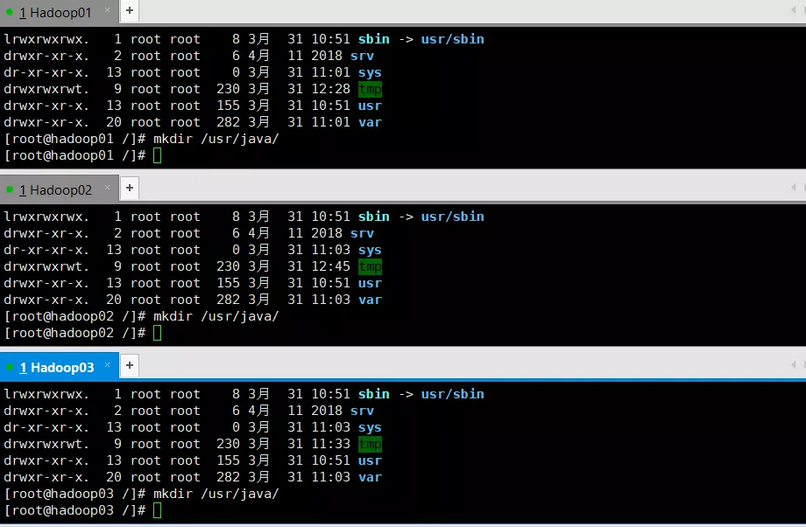

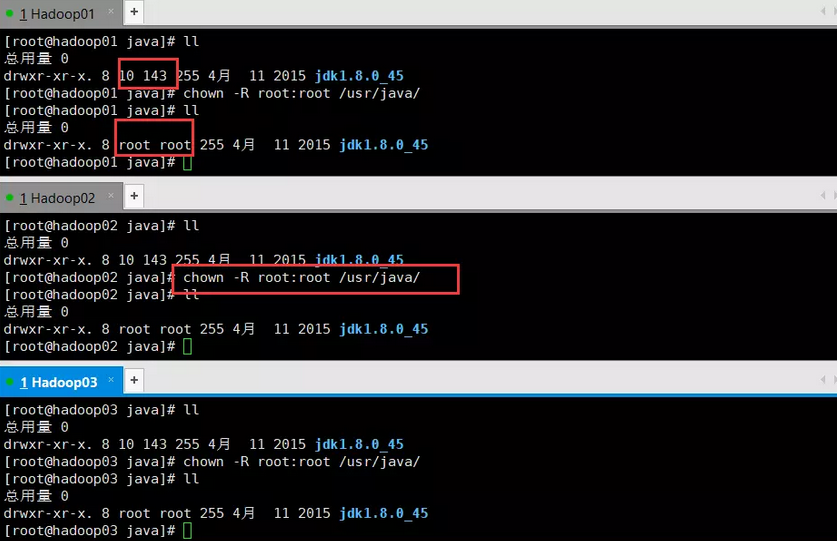
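OpenSSH already has a command for exactly this: ssh-keygen -R <hostname> deletes only that host's entries from ~/.ssh/known_hosts (hashed ones included) and keeps the previous file as known_hosts.old. To show what it does, here is a plain-text equivalent for unhashed entries; forget_host is a hypothetical helper.

```shell
# forget_host (hypothetical helper): drop the known_hosts lines for one host,
# leaving every other entry untouched. Handles plain (unhashed) entries only;
# for hashed entries use: ssh-keygen -R <host>
# Usage: forget_host <host> [known_hosts_file]
forget_host() {
  local host=$1 file=${2:-$HOME/.ssh/known_hosts} tmp
  tmp=$(mktemp) || return 1
  # field 1 is a comma-separated list of names/addresses,
  # e.g. "hadoop002,172.16.202.239"
  awk -v h="$host" '{
      n = split($1, names, ",")
      for (i = 1; i <= n; i++) if (names[i] == h) next
      print
    }' "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}
```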

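Step 2: Configure the JDK (the JDK is used by the whole environment, so deploy it as root)

1. Create the directory /usr/java/ 【the JDK must be placed in this directory. Treat this as a hard requirement to avoid pitfalls later, because CDH's shell scripts look for Java under /usr/java/ by default】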

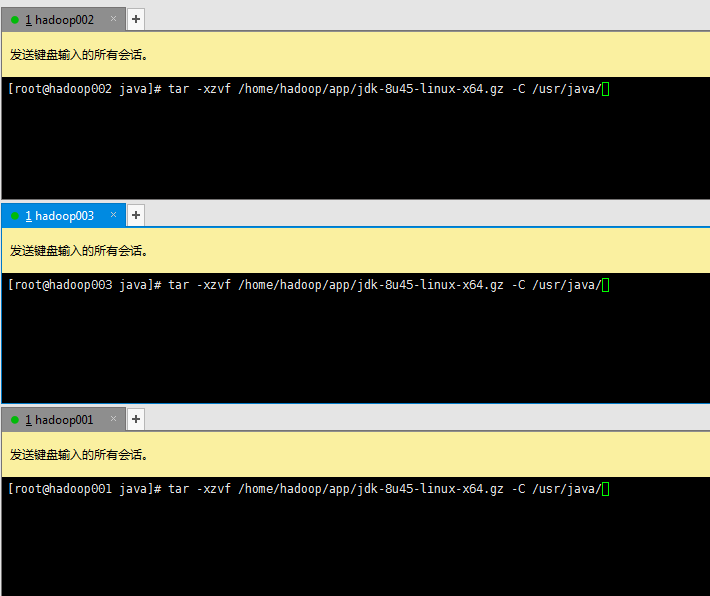

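2. Extract the JDK into /usr/java/

After extraction there is another 【pitfall】: the extracted JDK is not owned by root (when root runs tar, it restores the owner and group recorded in the archive), so we must reset the owner and group of the extracted JDK to root:root.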
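Steps 1 and 2 together, as a sketch: install_jdk is a hypothetical helper that must run as root, and it assumes the tarball unpacks into a single top-level directory (jdk1.8.0_45 for jdk-8u45; check with tar -tzf). The owner fix is a recursive chown; GNU tar's --no-same-owner option is an alternative that avoids the problem at extraction time.

```shell
# install_jdk (hypothetical helper): unpack a JDK tarball into a target
# directory (default /usr/java) and reset ownership of the result.
# Prints the path of the installed JDK.
# Usage: install_jdk <tarball> [dest_dir] [owner:group]
install_jdk() {
  local tarball=$1 dest=${2:-/usr/java} owner=${3:-root:root} top
  mkdir -p "$dest" || return 1
  # first path component of the archive, e.g. jdk1.8.0_45
  top=$(tar -tzf "$tarball" | awk -F/ 'NR == 1 { print $1 }')
  tar -xzf "$tarball" -C "$dest" || return 1
  chown -R "$owner" "$dest/$top" || return 1
  echo "$dest/$top"
}
```

For example, as root: install_jdk /home/hadoop/app/jdk-8u45-linux-x64.gz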

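Step 3: 【Firewall】

The firewall deserves attention in a Hadoop cluster deployment. It is not a big topic in itself, but if you leave it running, things break, because the daemons cannot reach each other's ports.

Turning off the firewall on virtual machines:

CentOS 7 uses firewalld to open and close the firewall and ports

1. Basic firewalld usage

Start: systemctl start firewalld

Check status: systemctl status firewalld

Stop: systemctl stop firewalld

Disable at boot: systemctl disable firewalld

2. CentOS 6 has no systemctl; use service iptables instead: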

service iptables stop

service iptables status

chkconfig iptables off (keeps it off after a reboot; the iptables service script has no disable action)
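A sketch that picks the right commands for either release (disable_firewall is a hypothetical helper; run it as root). It stops the firewall immediately and also keeps it from starting again at boot, which stop alone does not do.

```shell
# disable_firewall (hypothetical helper): stop the host firewall now and
# keep it from starting at boot. Run as root.
disable_firewall() {
  if command -v systemctl >/dev/null 2>&1; then
    # CentOS 7: firewalld managed by systemd
    systemctl stop firewalld && systemctl disable firewalld
  else
    # CentOS 6: SysV init script plus chkconfig
    service iptables stop && chkconfig iptables off
  fi
}
```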

Firewall on cloud hosts:

If you use cloud hosts, you only need to add the required port numbers to the security group.

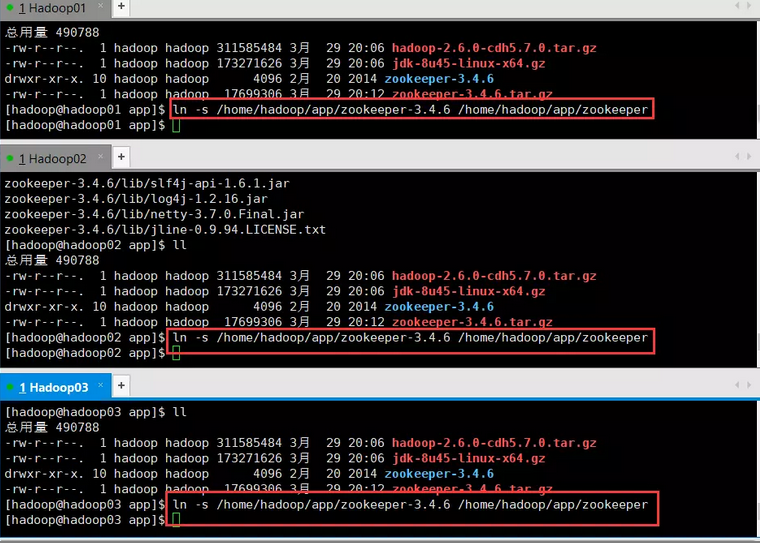

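Step 4: 【Deploying ZooKeeper and locating problems】

1. Extract ZooKeeper

2. Create a symbolic link (the commands below use /home/hadoop/app/zookeeper)

3. Configure ZooKeeper's zoo.cfg on hadoop001: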

[hadoop@hadoop001 ~]$ mkdir -p /home/hadoop/app/zookeeper/data

[hadoop@hadoop001 ~]$ cd /home/hadoop/app/zookeeper/conf

[hadoop@hadoop001 conf]$ cp zoo_sample.cfg zoo.cfg   # copy the template zoo_sample.cfg to zoo.cfg

[hadoop@hadoop001 conf]$ vi zoo.cfg   # set dataDir (replacing the template's /tmp/zookeeper) and add the server lines:

dataDir=/home/hadoop/app/zookeeper/data

server.1=hadoop001:2888:3888

server.2=hadoop002:2888:3888

server.3=hadoop003:2888:3888
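The server lines follow the pattern server.N=host:2888:3888, where 2888 is the port followers use to connect to the leader, 3888 is the leader-election port, and N must equal the number in that host's myid file. A sketch that writes them for any host list (append_zk_servers is a hypothetical helper); it assumes zoo.cfg was copied from zoo_sample.cfg and replaces the template's own dataDir line so the key does not appear twice:

```shell
# append_zk_servers (hypothetical helper): point dataDir at the given
# directory and append one server.N line per host, numbering from 1.
# Existing dataDir / server.N lines are dropped first, so re-runs stay clean.
# Usage: append_zk_servers <zoo.cfg> <data_dir> <host>...
append_zk_servers() {
  local cfg=$1 data_dir=$2; shift 2
  local id=1 host tmp
  tmp=$(mktemp) || return 1
  grep -v -e '^dataDir=' -e '^server\.[0-9]*=' "$cfg" > "$tmp"
  echo "dataDir=$data_dir" >> "$tmp"
  for host in "$@"; do
    # 2888: follower-to-leader port, 3888: leader-election port
    echo "server.$id=$host:2888:3888" >> "$tmp"
    id=$((id + 1))
  done
  cat "$tmp" > "$cfg"
  rm -f "$tmp"
}
```

For example: append_zk_servers /home/hadoop/app/zookeeper/conf/zoo.cfg /home/hadoop/app/zookeeper/data hadoop001 hadoop002 hadoop003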

[hadoop@hadoop001 data]$ pwd

/home/hadoop/app/zookeeper/data

[hadoop@hadoop001 data]$ touch myid

[hadoop@hadoop001 data]$ echo 1 > myid

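【Pitfall】 myid must be exactly two bytes: a single digit followed by the newline that echo adds, with no spaces. (To check, open it in vi: the cursor sits on the 1 and cannot be moved.)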
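The two bytes are the digit plus the newline that echo appends. Rather than checking in vi, the size can be verified directly; write_myid is a hypothetical helper that accepts only a single digit, which is enough for this three-node cluster:

```shell
# write_myid (hypothetical helper): write a ZooKeeper myid file and verify
# it holds exactly one digit plus a trailing newline (2 bytes).
# Usage: write_myid <data_dir> <id 1-9>
write_myid() {
  local dir=$1 id=$2 size
  case $id in
    [1-9]) ;;
    *) echo "myid must be a single digit 1-9, got '$id'" >&2; return 1 ;;
  esac
  mkdir -p "$dir" && echo "$id" > "$dir/myid" || return 1
  size=$(wc -c < "$dir/myid")
  # arithmetic evaluation ignores any padding spaces from wc
  if (( size != 2 )); then
    echo "unexpected myid size: $size bytes" >&2
    return 1
  fi
}
```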

【Copy zoo.cfg to hadoop002 and hadoop003】

[hadoop@hadoop01 zookeeper]$ scp conf/zoo.cfg hadoop02:/home/hadoop/app/zookeeper/conf/

zoo.cfg 100% 1023 130.5KB/s 00:00

[hadoop@hadoop01 zookeeper]$ scp conf/zoo.cfg hadoop03:/home/hadoop/app/zookeeper/conf/

zoo.cfg 100% 1023 613.4KB/s 00:00

【Copy the data directory to hadoop002 and hadoop003】

[hadoop@hadoop01 zookeeper]$ scp -r data hadoop03:/home/hadoop/app/zookeeper/

myid 100% 2 1.6KB/s 00:00

[hadoop@hadoop01 zookeeper]$ scp -r data hadoop02:/home/hadoop/app/zookeeper/

myid 100% 2 0.9KB/s 00:00

【Change the myid file on hadoop002 and hadoop003】

[hadoop@hadoop002 zookeeper]$ echo 2 > data/myid   # a single > overwrites the existing content; >> appends to it

[hadoop@hadoop003 zookeeper]$ echo 3 > data/myid

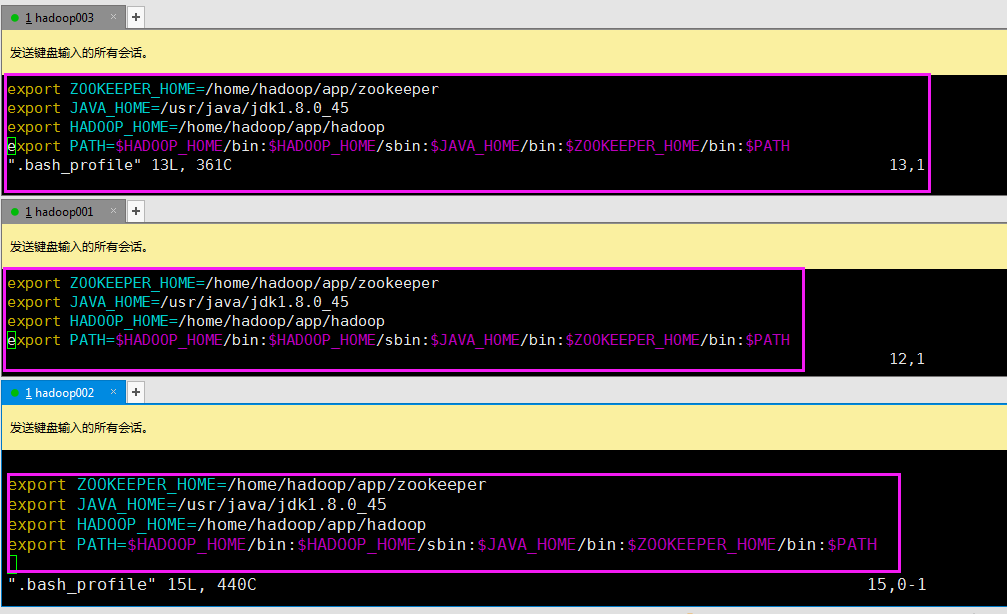

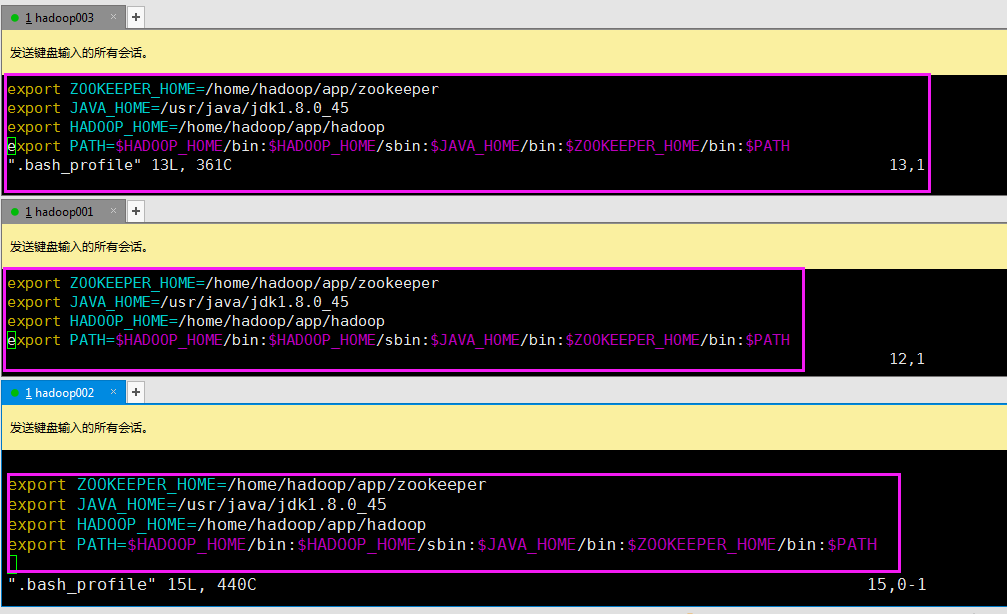

Step 5 【Configure environment variables】

[hadoop@hadoop001 ~]$ vi .bash_profile

[hadoop@hadoop002 ~]$ vi .bash_profile

[hadoop@hadoop003 ~]$ vi .bash_profile

[hadoop@hadoop003 ~]$ source ~/.bash_profile   # 【do not forget this; run it on every machine】
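The exact lines are only visible in the screenshots. As an assumption, a typical set of entries for this layout (JDK unpacked to /usr/java/jdk1.8.0_45, ZooKeeper symlinked to /home/hadoop/app/zookeeper) would be:

```shell
# ~/.bash_profile additions (assumed paths; adjust to your own layout)
export JAVA_HOME=/usr/java/jdk1.8.0_45
export ZOOKEEPER_HOME=/home/hadoop/app/zookeeper
export PATH=$JAVA_HOME/bin:$ZOOKEEPER_HOME/bin:$PATH
```

The Hadoop entries in Step 7 follow the same pattern, with HADOOP_HOME=/home/hadoop/app/hadoop and both $HADOOP_HOME/bin and $HADOOP_HOME/sbin added to PATH.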

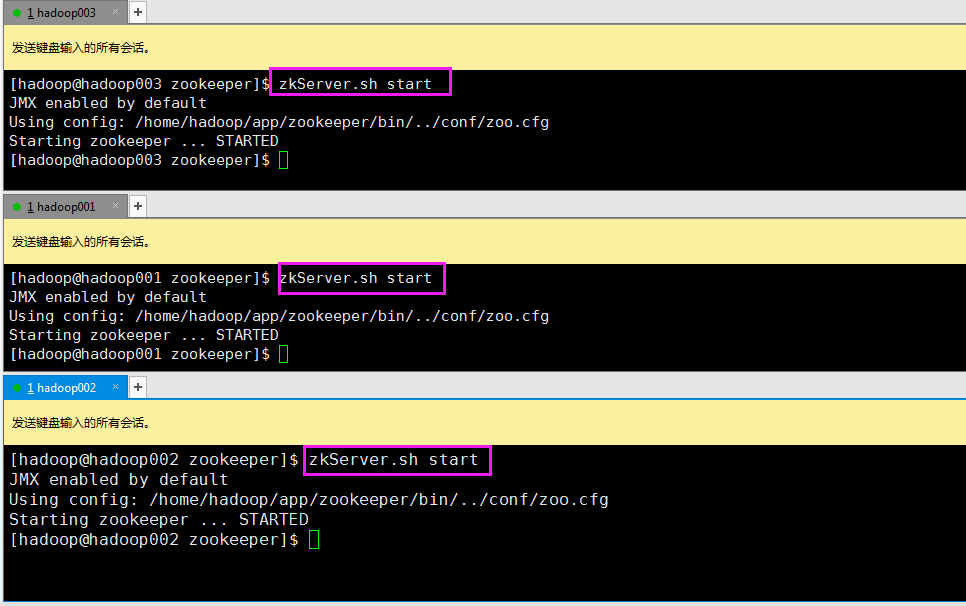

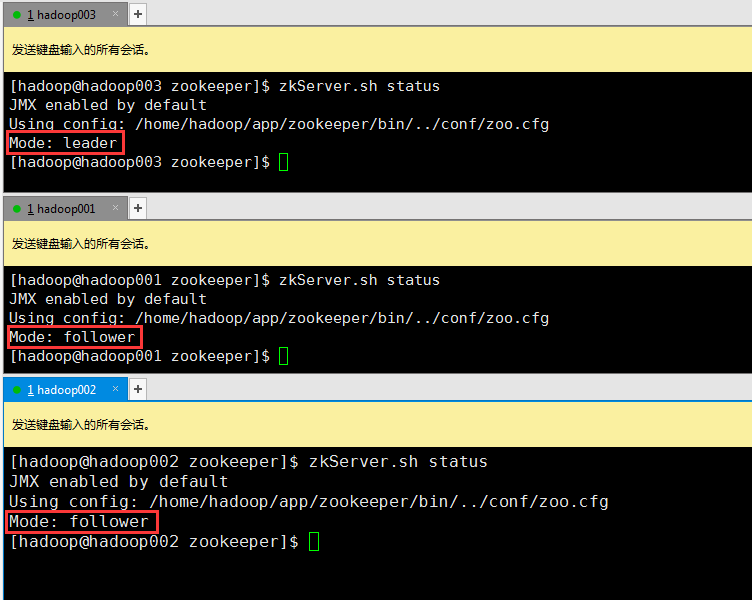

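Step 6 【Start ZooKeeper and check that its status is normal】

1. Start ZooKeeper on every machine: zkServer.sh start

2. Check the status with zkServer.sh status 【there must be exactly one leader; all the others are followers】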
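zkServer.sh status prints a line such as Mode: leader or Mode: follower on a healthy node. A sketch that collects that line from all three nodes over ssh and succeeds only for one leader plus two followers; zk_quorum_ok is a hypothetical helper. It sources ~/.bash_profile explicitly because a non-interactive ssh command does not read it, so zkServer.sh would otherwise not be on the PATH.

```shell
# zk_quorum_ok (hypothetical helper): ask each node for its ZooKeeper mode
# and succeed only if exactly one is the leader and the other two follow.
zk_quorum_ok() {
  local h mode leaders=0 followers=0
  for h in hadoop001 hadoop002 hadoop003; do
    mode=$(ssh "$h" 'source ~/.bash_profile; zkServer.sh status 2>&1' |
           awk -F': ' '/^Mode:/ { print $2 }')
    echo "$h: ${mode:-not running}"
    case $mode in
      leader)   leaders=$((leaders + 1)) ;;
      follower) followers=$((followers + 1)) ;;
    esac
  done
  (( leaders == 1 && followers == 2 ))
}
```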

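【Lesson learned: in zoo.cfg the server lines must be

server.1=hadoop001:2888:3888

server.2=hadoop002:2888:3888

server.3=hadoop003:2888:3888

but I had written hadoop001 as the host on all three lines, so no leader or follower ever appeared. I no longer remember the exact error; I think it said not running.】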

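【Note: if something goes wrong, trace the startup script in debug mode. Appending -x to the script's first line (#!/usr/bin/env bash -x) does not work on Linux, because the kernel passes "bash -x" to env as a single argument. Instead, add the following line right after the first line of the script:

set -x

or run the script as bash -x bin/zkServer.sh start from the ZooKeeper directory. Every command is then printed before it runs, which shows where the problem is.】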

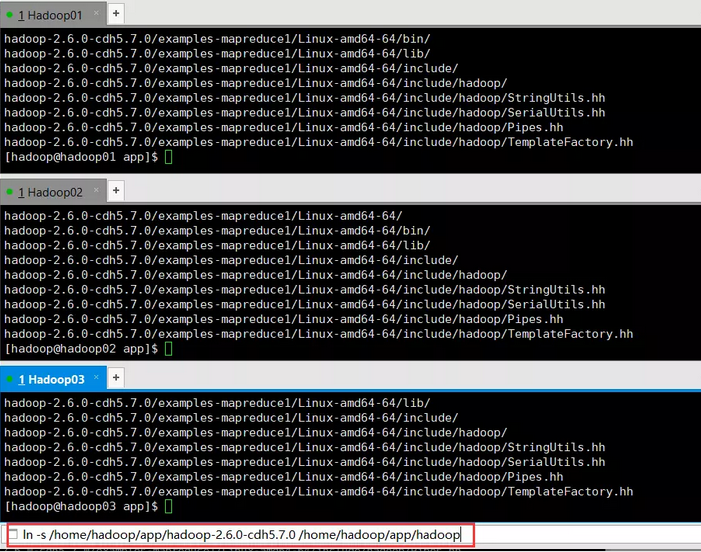

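Step 7 【Deploy Hadoop】

1. Extract the Hadoop tarball and create a symbolic link (the paths below use /home/hadoop/app/hadoop)

2. Configure environment variables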

[hadoop@hadoop001 ~]$ vi .bash_profile

[hadoop@hadoop002 ~]$ vi .bash_profile

[hadoop@hadoop003 ~]$ vi .bash_profile

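[hadoop@hadoop003 ~]$ source ~/.bash_profile   # 【do not forget this; run it on every machine】

3. Create the directories 【on all three machines: hadoop001, hadoop002 and hadoop003】; the paths come from our configuration files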

mkdir -p /home/hadoop/app/hadoop-2.6.0-cdh5.7.0/tmp

mkdir -p /home/hadoop/app/hadoop-2.6.0-cdh5.7.0/data/dfs/name

mkdir -p /home/hadoop/app/hadoop-2.6.0-cdh5.7.0/data/dfs/data

mkdir -p /home/hadoop/app/hadoop-2.6.0-cdh5.7.0/data/dfs/jn
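These four paths are the ones referenced by hadoop.tmp.dir, dfs.namenode.name.dir, dfs.datanode.data.dir and dfs.journalnode.edits.dir in the configuration files below. With the passwordless ssh from Step 1 in place, they can be created on all nodes from hadoop001; make_hadoop_dirs is a hypothetical helper:

```shell
# make_hadoop_dirs (hypothetical helper): create the Hadoop working
# directories on all three nodes via ssh.
HADOOP_BASE=/home/hadoop/app/hadoop-2.6.0-cdh5.7.0
make_hadoop_dirs() {
  local h
  for h in hadoop001 hadoop002 hadoop003; do
    # brace expansion happens locally, so ssh receives explicit paths
    ssh "$h" mkdir -p "$HADOOP_BASE/tmp" \
      "$HADOOP_BASE"/data/dfs/{name,data,jn} || return 1
  done
}
```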

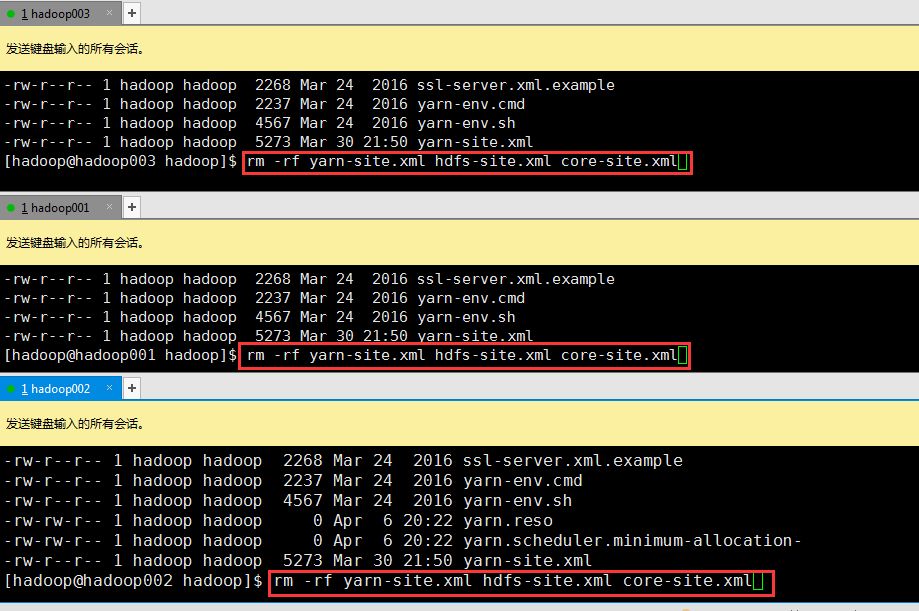

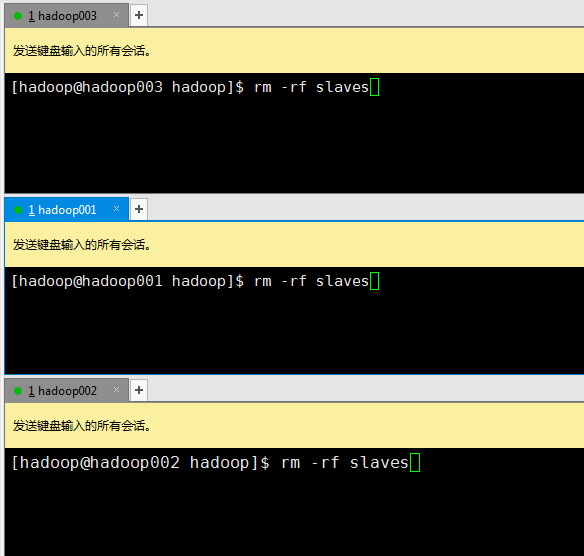

4. Delete the original configuration files

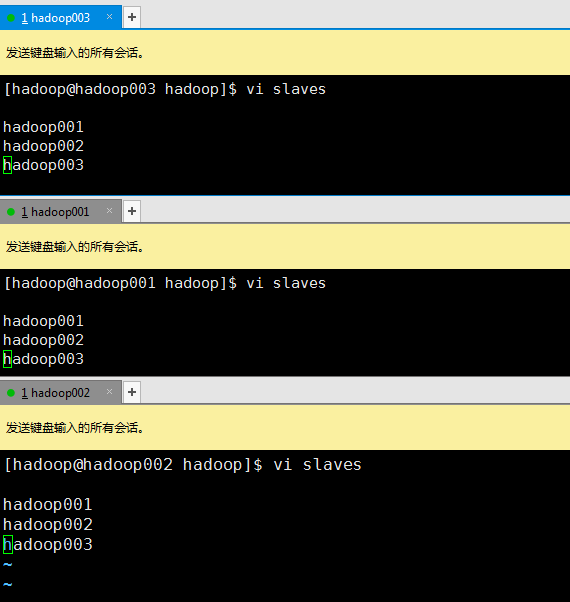

5. 【Upload three files, write slaves separately with vi】 Upload the prepared yarn-site.xml, core-site.xml and hdfs-site.xml to this directory:

[hadoop@hadoop002 hadoop]$ pwd

/home/hadoop/app/hadoop/etc/hadoop

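The slaves file, however, must be typed in vi yourself, otherwise you hit a big pitfall: 【Name or service not knownstname hadoop01, meaning the hosts listed in slaves cannot be resolved】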
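The garbled message is the typical symptom of Windows (CRLF) line endings: each hostname read from slaves keeps a trailing carriage return, ssh fails to resolve "hadoop01\r", and when its Could not resolve hostname ... Name or service not known error is printed, the \r moves the cursor back to the start of the line so the end of the message overwrites the beginning. Typing the file in vi avoids this; alternatively, a sketch that writes the file cleanly or strips carriage returns from an uploaded copy (both helpers are hypothetical; strip_cr assumes GNU sed):

```shell
# write_slaves (hypothetical helper): write one hostname per line with
# Unix (LF) line endings.
# Usage: write_slaves <file> <host>...
write_slaves() {
  local file=$1; shift
  printf '%s\n' "$@" > "$file"
}

# strip_cr (hypothetical helper): remove Windows carriage returns in place,
# the same effect dos2unix has on this file. Needs GNU sed for -i and \r.
strip_cr() {
  sed -i 's/\r$//' "$1"
}
```

For example: write_slaves /home/hadoop/app/hadoop/etc/hadoop/slaves hadoop001 hadoop002 hadoop003. cat -A slaves shows any remaining carriage returns as ^M.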

【core-site.xml】

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- fs.defaultFS gives the NameNode URI (YARN needs it as well); here it points to the HA nameservice -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://ruozeclusterg6</value>

</property>

<!--==============================Trash mechanism======================================= -->

<property>

<!-- Minutes between trash checkpoints: the checkpointer running on the NameNode creates a checkpoint from the Current folder. Default 0 means the value of fs.trash.interval is used -->

<name>fs.trash.checkpoint.interval</name>

<value>0</value>

</property>

<property>

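<!-- Minutes after which checkpoint directories under .Trash are deleted; the server-side setting takes precedence over the client's. Default 0 disables the trash feature; 1440 keeps deleted files for one day -->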

<name>fs.trash.interval</name>

<value>1440</value>

</property>

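<!-- Hadoop temporary directory. hadoop.tmp.dir is the base setting that many other paths depend on; if hdfs-site.xml does not set the NameNode and DataNode storage locations, they default to paths under this directory -->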

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/app/hadoop-2.6.0-cdh5.7.0/tmp</value>

</property>

<!-- ZooKeeper quorum addresses -->

<property>

<name>ha.zookeeper.quorum</name>

<value>hadoop001:2181,hadoop002:2181,hadoop003:2181</value>

</property>

<!-- ZooKeeper session timeout, in milliseconds -->

<property>

<name>ha.zookeeper.session-timeout.ms</name>

<value>2000</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

<property>

<name>io.compression.codecs</name>

<value>org.apache.hadoop.io.compress.GzipCodec,

org.apache.hadoop.io.compress.DefaultCodec,

org.apache.hadoop.io.compress.BZip2Codec,

org.apache.hadoop.io.compress.SnappyCodec

</value>

</property>

</configuration>

【hdfs-site.xml】

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- HDFS superuser group -->

<property>

<name>dfs.permissions.superusergroup</name>

<value>hadoop</value>

</property>

<!-- Enable WebHDFS -->

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/hadoop/app/hadoop-2.6.0-cdh5.7.0/data/dfs/name</value>

<description>Local directory where the NameNode stores the name table (fsimage); change as needed</description>

</property>

<property>

<name>dfs.namenode.edits.dir</name>

<value>${dfs.namenode.name.dir}</value>

<description>Local directory where the NameNode stores the transaction files (edits); change as needed</description>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/home/hadoop/app/hadoop-2.6.0-cdh5.7.0/data/dfs/data</value>

<description>Local directory where the DataNode stores blocks; change as needed</description>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- Block size 256 MB (default 128 MB) -->

<property>

<name>dfs.blocksize</name>

<value>268435456</value>

</property>

<!--======================================================================= -->

<!-- HDFS high availability -->

<!-- The HDFS nameservice is ruozeclusterg6; it must match the value in core-site.xml -->

<property>

<name>dfs.nameservices</name>

<value>ruozeclusterg6</value>

</property>

<property>

<!-- NameNode IDs; this version supports at most two NameNodes -->

<name>dfs.ha.namenodes.ruozeclusterg6</name>

<value>nn1,nn2</value>

</property>

<!-- HDFS HA: dfs.namenode.rpc-address.[nameservice ID].[NameNode ID] is the RPC address -->

<property>

<name>dfs.namenode.rpc-address.ruozeclusterg6.nn1</name>

<value>hadoop001:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ruozeclusterg6.nn2</name>

<value>hadoop002:8020</value>

</property>

<!-- HDFS HA: dfs.namenode.http-address.[nameservice ID].[NameNode ID] is the HTTP address -->

<property>

<name>dfs.namenode.http-address.ruozeclusterg6.nn1</name>

<value>hadoop001:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.ruozeclusterg6.nn2</name>

<value>hadoop002:50070</value>

</property>

<!--================== NameNode edit log synchronization ============================================ -->

<!-- Ensures the edits can be recovered -->

<property>

<name>dfs.journalnode.http-address</name>

<value>0.0.0.0:8480</value>

</property>

<property>

<name>dfs.journalnode.rpc-address</name>

<value>0.0.0.0:8485</value>

</property>

<property>

<!-- JournalNode addresses; the QuorumJournalManager stores the edit log on them -->

<!-- Format: qjournal://<host1:port1>;<host2:port2>;<host3:port3>/<journalId>, with the same port as dfs.journalnode.rpc-address -->

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hadoop001:8485;hadoop002:8485;hadoop003:8485/ruozeclusterg6</value>

</property>

<property>

<!-- Local directory where the JournalNode stores its data -->

<name>dfs.journalnode.edits.dir</name>

<value>/home/hadoop/app/hadoop-2.6.0-cdh5.7.0/data/dfs/jn</value>

</property>

<!--================== Client failover ============================================ -->

<property>

<!-- How clients determine which NameNode is currently active -->

<!-- Implementation class used for automatic failover on the client side -->

<name>dfs.client.failover.proxy.provider.ruozeclusterg6</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!--==================Namenode fencing:=============================================== -->

<!-- After a failover, fence the old NameNode so that two NameNodes are never active at once -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

<property>

<!-- Milliseconds after which SSH fencing is considered failed -->

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

<!--==================NameNode auto failover base ZKFC and Zookeeper====================== -->

<!-- Enable ZooKeeper-based automatic failover -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

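<!-- Hadoop temporary directory. hadoop.tmp.dir is the base setting that many other paths depend on; if hdfs-site.xml does not set the NameNode and DataNode storage locations, they default to paths under this directory -->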

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/app/hadoop-2.6.0-cdh5.7.0/tmp</value>

</property>

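<!-- File listing the DataNodes allowed to connect to the NameNode; it can be re-read at runtime with hdfs dfsadmin -refreshNodes -->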

<property>

<name>dfs.hosts</name>

<value>/home/hadoop/app/hadoop-2.6.0-cdh5.7.0/etc/hadoop/slaves</value>

</property>

</configuration>

【yarn-site.xml】

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- NodeManager configuration ================================================= -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.nodemanager.localizer.address</name>

<value>0.0.0.0:23344</value>

<description>Address where the localizer IPC is.</description>

</property>

<property>

<name>yarn.nodemanager.webapp.address</name>

<value>0.0.0.0:23999</value>

<description>NM Webapp address.</description>

</property>

<!-- HA configuration =============================================================== -->

<!-- Resource Manager Configs -->

<property>

<name>yarn.resourcemanager.connect.retry-interval.ms</name>

<value>2000</value>

</property>

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Use the failover elector embedded in the ResourceManager; in an HA setup it works with ZKRMStateStore to handle fencing -->

<property>

<name>yarn.resourcemanager.ha.automatic-failover.embedded</name>

<value>true</value>

</property>

<!-- Cluster name, so that HA leader election is scoped to this cluster -->

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>yarn-cluster</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<!-- yarn.resourcemanager.ha.id identifies the local RM and, if used, must be set differently on each RM node (optional)

<property>

<name>yarn.resourcemanager.ha.id</name>

<value>rm2</value>

</property>

-->

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

</property>

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.app.mapreduce.am.scheduler.connection.wait.interval-ms</name>

<value>5000</value>

</property>

<!-- ZKRMStateStore configuration -->

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>hadoop001:2181,hadoop002:2181,hadoop003:2181</value>

</property>

<property>

<name>yarn.resourcemanager.zk.state-store.address</name>

<value>hadoop001:2181,hadoop002:2181,hadoop003:2181</value>

</property>

<!-- RPC address clients use to reach the RM (applications manager interface) -->

<property>

<name>yarn.resourcemanager.address.rm1</name>

<value>hadoop001:23140</value>

</property>

<property>

<name>yarn.resourcemanager.address.rm2</name>

<value>hadoop002:23140</value>

</property>

<!-- RPC address ApplicationMasters use to reach the RM (scheduler interface) -->

<property>

<name>yarn.resourcemanager.scheduler.address.rm1</name>

<value>hadoop001:23130</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.rm2</name>

<value>hadoop002:23130</value>

</property>

<!-- RM admin interface -->

<property>

<name>yarn.resourcemanager.admin.address.rm1</name>

<value>hadoop001:23141</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.rm2</name>

<value>hadoop002:23141</value>

</property>

<!-- RPC address NodeManagers use to reach the RM (resource tracker) -->

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm1</name>

<value>hadoop001:23125</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm2</name>

<value>hadoop002:23125</value>

</property>

<!-- RM web application address -->

<property>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>hadoop001:8088</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>hadoop002:8088</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address.rm1</name>

<value>hadoop001:23189</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address.rm2</name>

<value>hadoop002:23189</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.log.server.url</name>

<value>http://hadoop001:19888/jobhistory/logs</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2048</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

<description>Minimum memory a single container can request; default 1024 MB</description>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>2048</value>

<description>Maximum memory a single container can request; default 8192 MB</description>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>2</value>

</property>

</configuration>

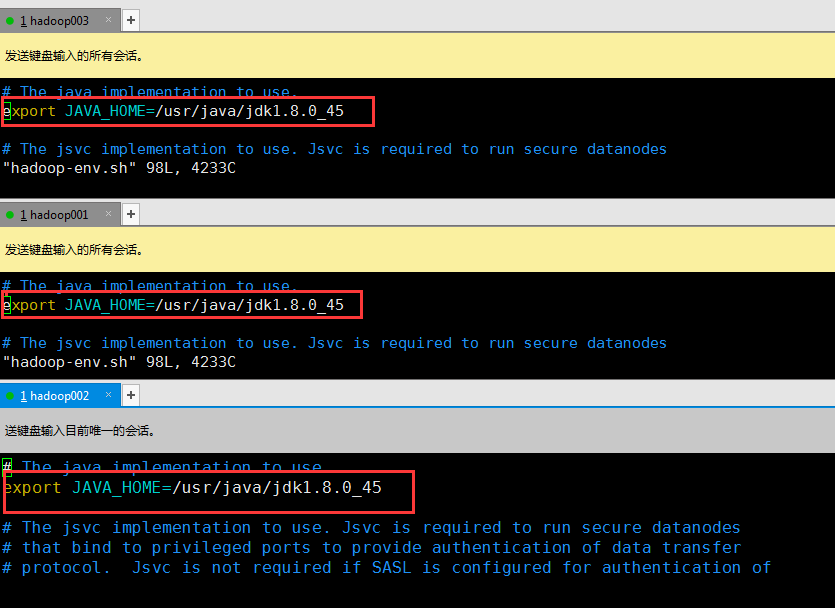

6. Set the Java home directory in hadoop-env.sh (on all three machines)
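The reason for this step: the default hadoop-env.sh contains export JAVA_HOME=${JAVA_HOME}, and daemons that start-dfs.sh launches over ssh do not read ~/.bash_profile, so the variable is empty there and startup fails with Error: JAVA_HOME is not set and could not be found. A sketch that writes a literal path, assuming the JDK location from Step 2 (set_java_home is a hypothetical helper; it needs GNU sed for -i):

```shell
# set_java_home (hypothetical helper): replace the JAVA_HOME export in
# hadoop-env.sh with a literal path, or append one if none exists.
# Usage: set_java_home <hadoop-env.sh> [jdk_dir]
set_java_home() {
  local env_file=$1 jdk=${2:-/usr/java/jdk1.8.0_45}
  if grep -q '^export JAVA_HOME=' "$env_file"; then
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$env_file"
  else
    echo "export JAVA_HOME=$jdk" >> "$env_file"
  fi
}
```

For example: set_java_home /home/hadoop/app/hadoop/etc/hadoop/hadoop-env.sh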

7. 【Steps for starting the cluster for the first time】

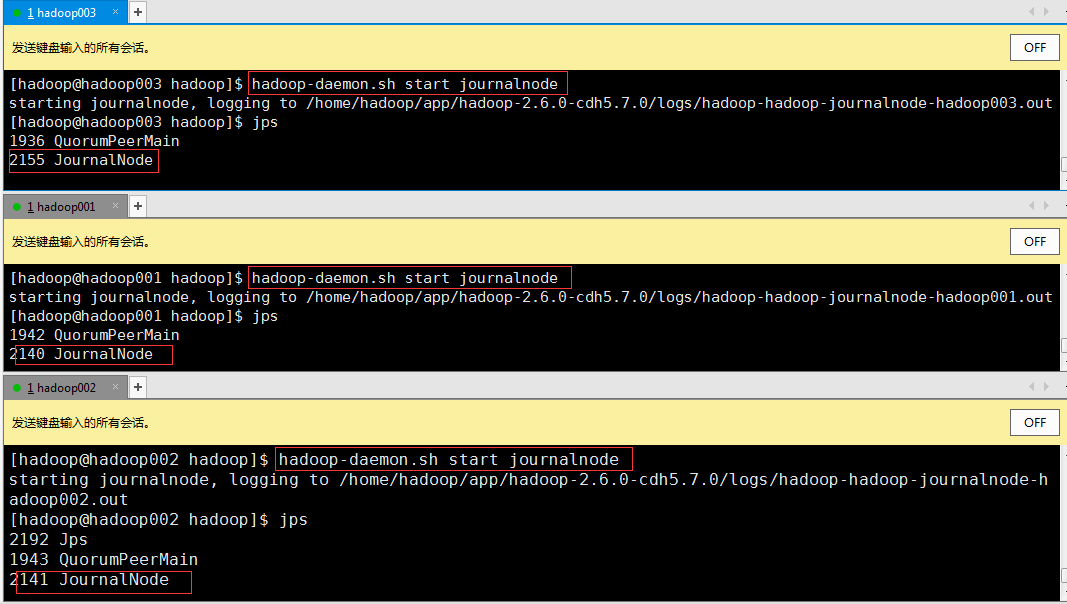

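1. Start the JournalNodes on all three machines: hadoop-daemon.sh start journalnode

2. Format the NameNode 【on hadoop001, then copy hadoop001's data directory to hadoop002】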

[hadoop@hadoop001 hadoop]$ pwd

/home/hadoop/app/hadoop

[hadoop@hadoop001 hadoop]$ hadoop namenode -format

[hadoop@hadoop001 hadoop]$ scp -r data/ hadoop02:/home/hadoop/app/hadoop

3. 【Initialize ZKFC】

[hadoop@hadoop001 hadoop]$ hdfs zkfc -formatZK


4. 【Start the HDFS cluster on hadoop001】

[hadoop@hadoop001 hadoop]$ start-dfs.sh

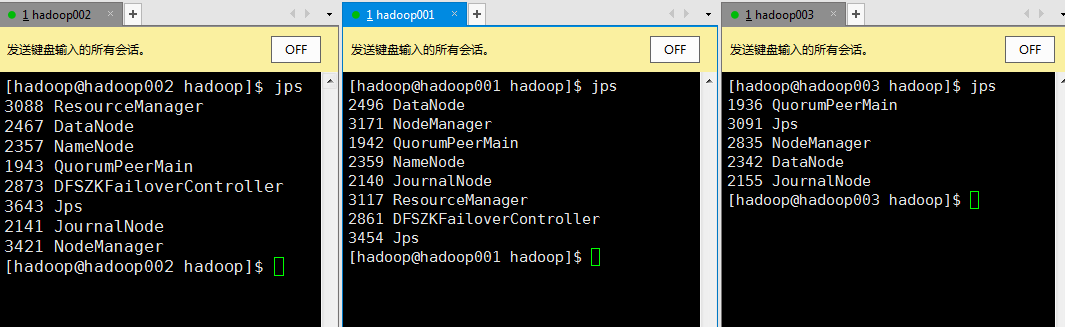

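DataNode, ZKFC, JournalNode and ZooKeeper run on all three machines;

the two NameNodes run on hadoop001 and hadoop002.

5. 【Start the YARN cluster, then start the second ResourceManager manually on hadoop002 (start-yarn.sh starts a ResourceManager only on the node where it runs)】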

[hadoop@hadoop001 hadoop]$ start-yarn.sh

[hadoop@hadoop002 hadoop]$ yarn-daemon.sh start resourcemanager

6. 【Start the JobHistory server】

[hadoop@hadoop01 hadoop]$ mr-jobhistory-daemon.sh start historyserver
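The one-time sequence above, collected into a sketch to run on hadoop001 after ZooKeeper is up on all three nodes. first_start and run_on are hypothetical helpers; they assume the hadoop001 to hadoop003 names and that the Hadoop scripts are on the PATH once ~/.bash_profile is sourced. It uses hdfs namenode -format, the non-deprecated form of hadoop namenode -format. Run it only once: formatting again erases the NameNode metadata.

```shell
# run_on (hypothetical helper): run a command on a node with the login
# environment loaded (non-interactive ssh does not read .bash_profile).
run_on() {
  ssh "$1" "source ~/.bash_profile; $2"
}

# first_start (hypothetical helper): one-time initialization of the HA
# cluster, in the order used above. Stops at the first failing step.
first_start() {
  local h
  # 1. JournalNodes must be up before the NameNode can be formatted
  for h in hadoop001 hadoop002 hadoop003; do
    run_on "$h" "hadoop-daemon.sh start journalnode" || return 1
  done
  # 2. format nn1 and copy its metadata to the standby host (nn2)
  run_on hadoop001 "hdfs namenode -format" || return 1
  run_on hadoop001 "scp -r /home/hadoop/app/hadoop/data hadoop002:/home/hadoop/app/hadoop/" || return 1
  # 3. create the HA znode in ZooKeeper that the ZKFCs use
  run_on hadoop001 "hdfs zkfc -formatZK" || return 1
  # 4. start HDFS and YARN, the second RM, and the history server
  run_on hadoop001 "start-dfs.sh && start-yarn.sh" || return 1
  run_on hadoop002 "yarn-daemon.sh start resourcemanager" || return 1
  run_on hadoop001 "mr-jobhistory-daemon.sh start historyserver"
}
```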

Stop the cluster, then start it a second time

[hadoop@hadoop01 hadoop]$ stop-all.sh

[hadoop@hadoop01 hadoop]$ zkServer.sh stop

[hadoop@hadoop02 hadoop]$ zkServer.sh stop

[hadoop@hadoop03 hadoop]$ zkServer.sh stop

Start the cluster

[hadoop@hadoop01 hadoop]$ zkServer.sh start

[hadoop@hadoop02 hadoop]$ zkServer.sh start

[hadoop@hadoop03 hadoop]$ zkServer.sh start

[hadoop@hadoop01 hadoop]$ start-dfs.sh

[hadoop@hadoop01 hadoop]$ start-yarn.sh

[hadoop@hadoop02 hadoop]$ yarn-daemon.sh start resourcemanager

[hadoop@hadoop01 hadoop]$ mr-jobhistory-daemon.sh start historyserver

7. Cluster monitoring

HDFS: http://hadoop01:50070/

HDFS: http://hadoop02:50070/

ResourceManager (active): http://hadoop01:8088

ResourceManager (standby): http://hadoop02:8088/cluster/cluster

JobHistory: http://hadoop01:19888/jobhistory
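The same HA information is available from the command line. A sketch, assuming the IDs nn1/nn2 and rm1/rm2 from hdfs-site.xml and yarn-site.xml (ha_status is a hypothetical helper); hdfs haadmin -getServiceState and yarn rmadmin -getServiceState each print active or standby:

```shell
# ha_status (hypothetical helper): show which NameNode and which
# ResourceManager is active. Run on any node with the Hadoop env loaded.
ha_status() {
  local id
  for id in nn1 nn2; do
    echo "NameNode $id: $(hdfs haadmin -getServiceState "$id" 2>&1)"
  done
  for id in rm1 rm2; do
    echo "ResourceManager $id: $(yarn rmadmin -getServiceState "$id" 2>&1)"
  done
}
```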
