Installing Kubernetes 1.18.5 with kubeadm

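Preface

A trial installation of Helm 3, Kubernetes 1.18 and Istio 1.6, to check whether the existing cluster can be migrated to them smoothly.

Versions

CentOS 7.6, with the kernel upgraded to 4.x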

kubernetes:v1.18.5

helm:v3.2.4

istio:v1.6.4

Installation

Add the Kubernetes repository

cat /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-$basearch
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
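One way to create this file is a quoted heredoc, which keeps `$basearch` literal so that yum expands it later. The sketch below is illustrative only: the function name `write_k8s_repo` and its path argument are not from the original setup, and the contents simply mirror the repo file shown above.

```bash
#!/bin/bash
# Hypothetical helper (not part of the original setup): write the repo
# definition to the path given as the first argument.
write_k8s_repo() {
    local target="$1"
    # The quoted 'EOF' delimiter stops the shell from expanding $basearch,
    # so yum sees the variable and substitutes the architecture itself.
    cat > "$target" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-$basearch
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
EOF
}

# Usage (as root): write_k8s_repo /etc/yum.repos.d/kubernetes.repo
```

Because of the `exclude=` line, yum ignores the three packages from this repository unless a command passes `--disableexcludes=kubernetes`.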

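List the available versions

# yum list --showduplicates kubeadm --disableexcludes=kubernetes | grep 1.18

Download the RPM packages locally (--disableexcludes is needed because the repo file excludes kubelet, kubeadm and kubectl):

# yum install kubectl-1.18.5-0.x86_64 kubeadm-1.18.5-0.x86_64 kubelet-1.18.5-0.x86_64 --disableexcludes=kubernetes --downloadonly --downloaddir=./rpmKubeadm

List the images required by Kubernetes 1.18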

# kubeadm config images list --kubernetes-version=v1.18.5
W0709 15:55:40.303088 7778 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]
k8s.gcr.io/kube-apiserver:v1.18.5
k8s.gcr.io/kube-controller-manager:v1.18.5
k8s.gcr.io/kube-scheduler:v1.18.5
k8s.gcr.io/kube-proxy:v1.18.5
k8s.gcr.io/pause:3.2
k8s.gcr.io/etcd:3.4.3-0
k8s.gcr.io/coredns:1.6.7

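Pull the images and export them

Use a script:

#!/bin/bash
# Pull every image kubeadm needs for the given version, then save them all into one tarball.
url=k8s.gcr.io
version=v1.18.5

# Image names with the registry prefix stripped, e.g. kube-apiserver:v1.18.5
images=($(kubeadm config images list --kubernetes-version="$version" | awk -F '/' '{print $2}'))
for imagename in "${images[@]}"; do
    echo "docker pull $url/$imagename"
    docker pull "$url/$imagename"
done

# Full image references exactly as kubeadm reports them
images=($(kubeadm config images list --kubernetes-version="$version"))
echo "docker save ${images[*]} -o kubeDockerImage$version.tar"
docker save "${images[@]}" -o "kubeDockerImage$version.tar"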
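Before pulling several hundred megabytes it can help to preview what the script will do. The following is a minimal dry-run sketch, not part of the original script: `plan_image_commands` is a made-up name, it reads the image list on stdin, and it only prints the docker commands instead of running them.

```bash
#!/bin/bash
# Hypothetical dry-run helper: read `kubeadm config images list` output on
# stdin and print the docker commands that would be run, without running them.
plan_image_commands() {
    local version="$1" line
    local images=()
    # A line counts as an image reference when it has no whitespace and
    # looks like registry/name:tag.
    local re='^[^[:space:]]+/[^[:space:]]+:[^[:space:]]+$'
    while IFS= read -r line; do
        # Skips blank lines and any warning text merged into the stream.
        if [[ "$line" =~ $re ]]; then
            images+=("$line")
            echo "docker pull $line"
        fi
    done
    # Nothing to save if no image line was recognised.
    [ "${#images[@]}" -gt 0 ] || return 1
    echo "docker save ${images[*]} -o kubeDockerImage$version.tar"
}

# Usage:
#   kubeadm config images list --kubernetes-version=v1.18.5 | plan_image_commands v1.18.5
```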

The resulting directory layout:

[root@dev-k8s-master-1-105 v1-18]# tree ./
./
├── images.sh
├── kubeDockerImagev1.18.5.tar
└── rpmKubeadm
    ├── cri-tools-1.13.0-0.x86_64.rpm
    ├── kubeadm-1.18.5-0.x86_64.rpm
    ├── kubectl-1.18.5-0.x86_64.rpm
    ├── kubelet-1.18.5-0.x86_64.rpm
    └── kubernetes-cni-0.8.6-0.x86_64.rpm

1 directory, 7 files
[root@dev-k8s-master-1-105 v1-18]#

System settings before installation

Add the following parameters to /etc/sysctl.conf:

net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1

Apply them: sysctl -p

Disable the firewall and SELinux.

Disable swap.
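The settings above can be sketched as two small helpers plus a handful of root commands. This is an illustration under assumptions, not the exact procedure used here: the function names are made up, and the commented commands are the usual CentOS 7 ones for firewalld, SELinux and swap.

```bash
#!/bin/bash
# Hypothetical helpers; names and arguments are illustrative only.

# Append each kernel parameter to the given sysctl file unless the key
# already has an entry there.
add_k8s_sysctl() {
    local conf="$1" key
    for key in net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables \
               net.ipv4.ip_forward; do
        grep -Eq "^[[:space:]]*${key}[[:space:]]*=" "$conf" 2>/dev/null \
            || echo "$key = 1" >> "$conf"
    done
}

# Comment out active swap entries in an fstab-style file so that swap
# stays off after a reboot.
comment_out_swap() {
    local fstab="$1"
    sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# On a real node, as root (assumed CentOS 7 commands):
#   modprobe br_netfilter      # the net.bridge.* keys only exist once this module is loaded
#   add_k8s_sysctl /etc/sysctl.conf && sysctl -p
#   systemctl stop firewalld && systemctl disable firewalld
#   setenforce 0               # set SELINUX=disabled in /etc/selinux/config to persist
#   swapoff -a && comment_out_swap /etc/fstab
```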

Upgrade the kernel

1. Check the current kernel

[root@dev-k8s-master-1-105 ~]# uname -a

Linux dev-k8s-master-1-105 3.10.0-957.1.3.el7.x86_64 #1 SMP Thu Nov 29 14:49:43 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

[root@dev-k8s-master-1-105 ~]#

2. Import the ELRepo repository's public key

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

3. Install the ELRepo yum repository

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

4. List the available kernel packages

yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

# kernel-lt is the long-term (stable) branch; install this one

5. Install the kernel

yum --enablerepo=elrepo-kernel install kernel-lt.x86_64 -y

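The --enablerepo option enables the named repository for this yum command. Only elrepo is enabled by default, so elrepo-kernel is enabled explicitly here.

6. List the GRUB boot menu entries

awk -F \' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

7.

8.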

9. Check the current default boot entry

grub2-editenv list

10. Set the default boot entry

grub2-set-default 0

grub2-editenv list

11. Reboot

reboot

12. Remove the old kernel version
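Step 10 passes index 0 to grub2-set-default, which is only right if the new kernel is the first menu entry. A small sketch that looks the index up instead is shown below; `grub_entry_index` is a made-up helper built on the same awk idea as step 6, and the search pattern is whatever substring identifies the wanted kernel.

```bash
#!/bin/bash
# Hypothetical helper: print the zero-based index of the first GRUB menu
# entry whose title contains the given substring; return 1 if none matches.
grub_entry_index() {
    local cfg="$1" pattern="$2"
    awk -F "'" -v pat="$pattern" '
        # Splitting on single quotes puts the entry title in field 2.
        $1 == "menuentry " {
            if (index($2, pat)) { print n + 0; found = 1; exit }
            n++
        }
        END { if (!found) exit 1 }
    ' "$cfg"
}

# Usage (as root), assuming the new kernel title contains "elrepo":
#   idx=$(grub_entry_index /etc/grub2.cfg elrepo) && grub2-set-default "$idx"
```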

Install the RPMs and load the images

yum install -y ./rpmKubeadm/*.rpm
docker load -i kubeDockerImagev1.18.5.tar

Initialize the cluster

kubeadm init --kubernetes-version=1.18.5 --apiserver-advertise-address=192.168.1.105 --pod-network-cidr=10.81.0.0/16
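kubeadm can also read these settings from a file passed with --config, which is easier to keep under version control than a long flag list. The sketch below writes such a file; the helper name is made up, and the field names follow the kubeadm v1beta2 config API as I understand it for 1.18, so check them against your kubeadm version before relying on them.

```bash
#!/bin/bash
# Hypothetical helper: write a kubeadm config file that mirrors the three
# flags used in the init command. The file name is chosen by the caller.
write_kubeadm_config() {
    local target="$1"
    cat > "$target" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.105
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.5
networking:
  podSubnet: 10.81.0.0/16
EOF
}

# Usage:
#   write_kubeadm_config kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml
```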

Part of the output

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.105:6443 --token xnlao7.5qsgih5vft0n2li6 \

--discovery-token-ca-cert-hash sha256:1d341b955245da64a5e28791866e0580a5e223a20ffaefdc6b2729d9fb1739b4

Install the network plugin

wget https://docs.projectcalico.org/v3.14/manifests/calico.yaml
kubectl apply -f calico.yaml

Installation complete

[root@dev-k8s-master-1-105 v1-18]# kubectl get pods -n kube-system
NAME                                           READY   STATUS    RESTARTS   AGE
calico-kube-controllers-76d4774d89-kp8nz       1/1     Running   0          46h
calico-node-k5m4b                              1/1     Running   0          46h
calico-node-l6hq7                              1/1     Running   0          46h
coredns-66bff467f8-hgbvw                       1/1     Running   0          47h
coredns-66bff467f8-npmxl                       1/1     Running   0          47h
etcd-dev-k8s-master-1-105                      1/1     Running   0          47h
kube-apiserver-dev-k8s-master-1-105            1/1     Running   0          47h
kube-controller-manager-dev-k8s-master-1-105   1/1     Running   0          47h
kube-proxy-6dx95                               1/1     Running   0          46h
kube-proxy-926mq                               1/1     Running   0          47h
kube-scheduler-dev-k8s-master-1-105            1/1     Running   0          47h
[root@dev-k8s-master-1-105 v1-18]#

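Installing Helm 3

Main changes: releases are no longer global but scoped to a namespace, a release name must be specified, and there is no Tiller.

Installation

wget https://get.helm.sh/helm-v3.2.4-linux-amd64.tar.gz
tar -zxvf helm-v3.2.4-linux-amd64.tar.gz
cp linux-amd64/helm /usr/local/bin/

Helm 3 needs no initialization step: helm init was removed together with Tiller.

Add the public repository:
helm repo add stable https://kubernetes-charts.storage.googleapis.com

List the repositories:
helm repo list

Search:
helm search repo nginx

Check the version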

[root@dev-k8s-master-1-105 ~]# which helm

/usr/local/bin/helm

[root@dev-k8s-master-1-105 ~]# helm version

version.BuildInfo{Version:"v3.2.4", GitCommit:"0ad800ef43d3b826f31a5ad8dfbb4fe05d143688", GitTreeState:"clean", GoVersion:"go1.13.12"}

[root@dev-k8s-master-1-105 ~]#

Check the configuration

[root@dev-k8s-master-1-105 ~]# helm env
HELM_BIN="helm"
HELM_DEBUG="false"
HELM_KUBEAPISERVER=""
HELM_KUBECONTEXT=""
HELM_KUBETOKEN=""
HELM_NAMESPACE="default"
HELM_PLUGINS="/root/.local/share/helm/plugins"
HELM_REGISTRY_CONFIG="/root/.config/helm/registry.json"
HELM_REPOSITORY_CACHE="/root/.cache/helm/repository"
HELM_REPOSITORY_CONFIG="/root/.config/helm/repositories.yaml"
[root@dev-k8s-master-1-105 ~]#

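Select the Kubernetes cluster

Helm v3 no longer needs Tiller; it talks to Kubernetes directly through the API server. Set the KUBECONFIG environment variable to point at the config file that holds the API server address and token. The default is ~/.kube/config.

export KUBECONFIG=/root/.kube/config # can be added to /etc/profile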
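To see which file a helm or kubectl call in the current shell would pick up, the lookup rule can be written out in one line. This is only a sketch of the rule described above; `effective_kubeconfig` is a made-up name.

```bash
#!/bin/bash
# Hypothetical helper: print the kubeconfig path that applies in this shell.
effective_kubeconfig() {
    # KUBECONFIG wins when it is set and non-empty; otherwise the default
    # location under the home directory is used.
    echo "${KUBECONFIG:-$HOME/.kube/config}"
}

# Usage:
#   effective_kubeconfig
#   helm --kubeconfig "$(effective_kubeconfig)" repo list   # per-command override
```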

Install the GitLab plugin

[root@dev-k8s-master-1-105 ~]# helm plugin install https://github.com/diwakar-s-maurya/helm-git
[root@dev-k8s-master-1-105 ~]# helm plugin ls
NAME       VERSION   DESCRIPTION
helm-git   1.0.0     Let's you use private gitlab repositories easily
[root@dev-k8s-master-1-105 ~]#

Add the GitLab repository

helm repo add myhelmrepo gitlab://username/project:master/kubernetes/helm-chart
helm repo list

Installing Istio 1.6.4 with istioctl

Download

wget https://github.com/istio/istio/releases/download/1.6.4/istioctl-1.6.4-linux-amd64.tar.gz
tar -zxvf istioctl-1.6.4-linux-amd64.tar.gz
cp istioctl /usr/local/bin/

List the available configuration profiles

[root@dev-k8s-master-1-105 ~]# istioctl profile list

Istio configuration profiles:

demo

empty

minimal

preview

remote

default

Install with the default profile

Any one of the following:

istioctl install
istioctl install --set profile=default
istioctl install --set addonComponents.grafana.enabled=true --set components.policy.enabled=true

Generate the manifest before installing

Any one of the following, depending on the components wanted:

istioctl manifest generate > default-generated-manifest.yaml
istioctl manifest generate --set components.policy.enabled=true > default-generated-manifest.yaml
istioctl manifest generate --set components.telemetry.enabled=true --set components.citadel.enabled=true --set components.pilot.enabled=true --set components.policy.enabled=true > default-generated-manifest.yaml

This is mainly for inspecting the resources that will be installed. The manifest can also be installed with kubectl apply -f, but that was not tested.

Verify the installation

[root@dev-k8s-master-1-105 ~]# istioctl manifest generate > default-generated-manifest.yaml
[root@dev-k8s-master-1-105 ~]# istioctl verify-install -f default-generated-manifest.yaml
ClusterRole: prometheus-istio-system.default checked successfully
ClusterRoleBinding: prometheus-istio-system.default checked successfully
ConfigMap: prometheus.istio-system checked successfully
Deployment: prometheus.istio-system checked successfully
Service: prometheus.istio-system checked successfully
ServiceAccount: prometheus.istio-system checked successfully
ClusterRole: istiod-istio-system.default checked successfully
ClusterRole: istio-reader-istio-system.default checked successfully
ClusterRoleBinding: istio-reader-istio-system.default checked successfully
ClusterRoleBinding: istiod-pilot-istio-system.default checked successfully
ServiceAccount: istio-reader-service-account.istio-system checked successfully
ServiceAccount: istiod-service-account.istio-system checked successfully
ValidatingWebhookConfiguration: istiod-istio-system.default checked successfully
CustomResourceDefinition: httpapispecs.config.istio.io.default checked successfully
CustomResourceDefinition: httpapispecbindings.config.istio.io.default checked successfully
CustomResourceDefinition: quotaspecs.config.istio.io.default checked successfully
CustomResourceDefinition: quotaspecbindings.config.istio.io.default checked successfully
CustomResourceDefinition: destinationrules.networking.istio.io.default checked successfully
CustomResourceDefinition: envoyfilters.networking.istio.io.default checked successfully
CustomResourceDefinition: gateways.networking.istio.io.default checked successfully
CustomResourceDefinition: serviceentries.networking.istio.io.default checked successfully
CustomResourceDefinition: sidecars.networking.istio.io.default checked successfully
CustomResourceDefinition: virtualservices.networking.istio.io.default checked successfully
CustomResourceDefinition: workloadentries.networking.istio.io.default checked successfully
CustomResourceDefinition: attributemanifests.config.istio.io.default checked successfully
CustomResourceDefinition: handlers.config.istio.io.default checked successfully
CustomResourceDefinition: instances.config.istio.io.default checked successfully
CustomResourceDefinition: rules.config.istio.io.default checked successfully
CustomResourceDefinition: clusterrbacconfigs.rbac.istio.io.default checked successfully
CustomResourceDefinition: rbacconfigs.rbac.istio.io.default checked successfully
CustomResourceDefinition: serviceroles.rbac.istio.io.default checked successfully
CustomResourceDefinition: servicerolebindings.rbac.istio.io.default checked successfully
CustomResourceDefinition: authorizationpolicies.security.istio.io.default checked successfully
CustomResourceDefinition: peerauthentications.security.istio.io.default checked successfully
CustomResourceDefinition: requestauthentications.security.istio.io.default checked successfully
CustomResourceDefinition: adapters.config.istio.io.default checked successfully
CustomResourceDefinition: templates.config.istio.io.default checked successfully
CustomResourceDefinition: istiooperators.install.istio.io.default checked successfully
HorizontalPodAutoscaler: istio-ingressgateway.istio-system checked successfully
Deployment: istio-ingressgateway.istio-system checked successfully
PodDisruptionBudget: istio-ingressgateway.istio-system checked successfully
Role: istio-ingressgateway-sds.istio-system checked successfully
RoleBinding: istio-ingressgateway-sds.istio-system checked successfully
Service: istio-ingressgateway.istio-system checked successfully
ServiceAccount: istio-ingressgateway-service-account.istio-system checked successfully
HorizontalPodAutoscaler: istiod.istio-system checked successfully
ConfigMap: istio.istio-system checked successfully
Deployment: istiod.istio-system checked successfully
ConfigMap: istio-sidecar-injector.istio-system checked successfully
MutatingWebhookConfiguration: istio-sidecar-injector.default checked successfully
PodDisruptionBudget: istiod.istio-system checked successfully
Service: istiod.istio-system checked successfully
EnvoyFilter: metadata-exchange-1.4.istio-system checked successfully
EnvoyFilter: stats-filter-1.4.istio-system checked successfully
EnvoyFilter: metadata-exchange-1.5.istio-system checked successfully
EnvoyFilter: tcp-metadata-exchange-1.5.istio-system checked successfully
EnvoyFilter: stats-filter-1.5.istio-system checked successfully
EnvoyFilter: tcp-stats-filter-1.5.istio-system checked successfully
EnvoyFilter: metadata-exchange-1.6.istio-system checked successfully
EnvoyFilter: tcp-metadata-exchange-1.6.istio-system checked successfully
EnvoyFilter: stats-filter-1.6.istio-system checked successfully
EnvoyFilter: tcp-stats-filter-1.6.istio-system checked successfully
Checked 25 custom resource definitions
Checked 1 Istio Deployments
Istio is installed successfully
[root@dev-k8s-master-1-105 ~]#

Enable Envoy access logs

Generate the manifest first to inspect it before installing:

[root@dev-k8s-master-1-105 istio-1.6.4]# istioctl manifest generate --set meshConfig.accessLogFile="/dev/stdout" --set meshConfig.accessLogEncoding="JSON" > default-generated-manifest.yaml

At install time:

istioctl install --set meshConfig.accessLogFile="/dev/stdout" --set meshConfig.accessLogEncoding="JSON"
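The same two settings can be kept in an IstioOperator file instead of --set flags, since each --set path maps onto a field under spec. The sketch below writes such a file; the helper name and the output file name are made up, and it assumes the default profile.

```bash
#!/bin/bash
# Hypothetical helper: write an IstioOperator file equivalent to the two
# --set meshConfig.* flags, on top of the default profile.
write_istio_operator() {
    local target="$1"
    cat > "$target" <<'EOF'
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: default
  meshConfig:
    accessLogFile: /dev/stdout
    accessLogEncoding: JSON
EOF
}

# Usage:
#   write_istio_operator access-log.yaml
#   istioctl install -f access-log.yaml
```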

Or edit the ConfigMap of an existing installation

kubectl edit cm -n istio-system istio

Change:

accessLogEncoding: JSON

accessLogFile: "/dev/stdout"

accessLogFormat: ""

Installation complete

[root@dev-k8s-master-1-105 ~]# kubectl get pods -n istio-system
NAME                                    READY   STATUS    RESTARTS   AGE
istio-ingressgateway-66c994c45c-msmgz   1/1     Running   0          3h5m
istiod-6cf8d4f9cb-w8cr2                 1/1     Running   0          3h5m
prometheus-7bdc59c94d-ktf8n             2/2     Running   0          3h5m
[root@dev-k8s-master-1-105 ~]#

Uninstall Istio

Regenerate the manifest with the same options used at install time and pipe it to kubectl delete:

istioctl manifest generate | kubectl delete -f -
istioctl manifest generate --set components.telemetry.enabled=true --set components.citadel.enabled=true --set components.pilot.enabled=true --set components.policy.enabled=true | kubectl delete -f -

Install the Bookinfo application

Enable automatic sidecar injection for the default namespace

kubectl label namespace default istio-injection=enabled
kubectl get namespace -L istio-injection

Install

kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml
kubectl apply -f samples/bookinfo/networking/bookinfo-gateway.yaml

Check the ports exposed by istio-ingressgateway, then forward to them with HAProxy

[root@dev-k8s-master-1-105 ~]# kubectl get svc -n istio-system
NAME                   TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)                                                      AGE
istio-ingressgateway   LoadBalancer   10.102.28.198    <pending>     15021:30962/TCP,80:30046/TCP,443:31320/TCP,15443:32185/TCP   3h17m
istiod                 ClusterIP      10.104.67.53     <none>        15010/TCP,15012/TCP,443/TCP,15014/TCP,53/UDP,853/TCP         3h17m
prometheus             ClusterIP      10.104.115.121   <none>        9090/TCP                                                     3h17m
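In the PORT(S) column each entry reads servicePort:nodePort/protocol, and the node ports are what an external proxy has to target. A minimal sketch for picking one out of that string follows; `node_port_for` is a made-up helper, and it works purely on the text shown in the listing.

```bash
#!/bin/bash
# Hypothetical helper: given a PORT(S) string from `kubectl get svc` and a
# service port, print the matching node port; return 1 if there is none.
node_port_for() {
    local ports="$1" want="$2" entry port rest
    local IFS=','
    # Unquoted on purpose: split the list on commas.
    for entry in $ports; do
        port="${entry%%:*}"   # text before the first colon, e.g. 80
        rest="${entry#*:}"    # text after it, e.g. 30046/TCP
        if [ "$port" = "$want" ]; then
            echo "${rest%%/*}"
            return 0
        fi
    done
    return 1
}

# Usage:
#   node_port_for "15021:30962/TCP,80:30046/TCP,443:31320/TCP,15443:32185/TCP" 80
```

Entries without a node port, such as 9090/TCP on a ClusterIP service, never match and make the function return 1.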

Install HAProxy

For example, install it on the local machine, 192.168.11.33.

Main HAProxy configuration

## HTTP
frontend http_front
    bind *:80
    default_backend http_backend
backend http_backend
    server k8s-master 192.168.1.105:30046 check
## HTTPS
frontend https_front
    bind *:443
    default_backend https_backend
backend https_backend
    server k8s-master 192.168.1.105:31320 check

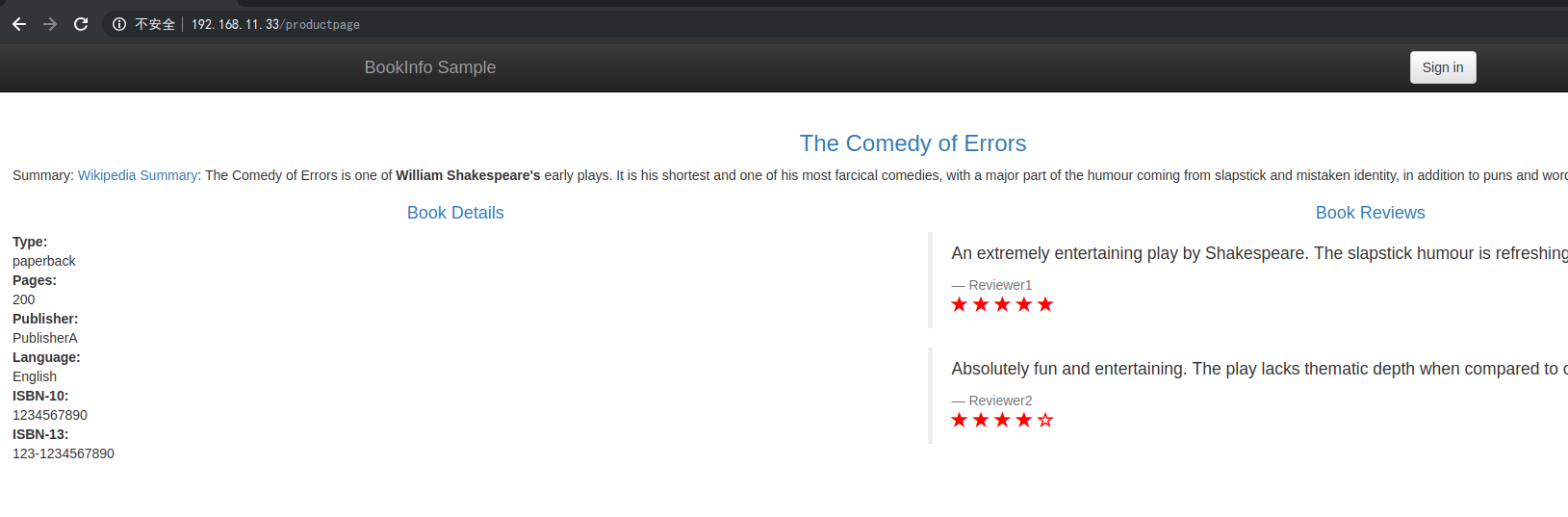

Access it through the local machine's IP: http://192.168.11.33/productpage

Installing the dashboard

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml

https://github.com/kubernetes/dashboard/blob/master/aio/deploy/recommended.yaml

Installing metrics-server

https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml

Modify the container spec as follows:

- name: metrics-server
  image: k8s.gcr.io/metrics-server-amd64:v0.3.6
  imagePullPolicy: IfNotPresent
  command:
  - /metrics-server
  - --metric-resolution=30s
  - --cert-dir=/tmp
  - --secure-port=4443
  - --kubelet-insecure-tls
  - --kubelet-preferred-address-types=InternalDNS,InternalIP,ExternalDNS,ExternalIP,Hostname