Kubernetes cluster setup: binary installation

Host information

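As an exercise, only one master and one node are prepared, both running CentOS 7.9; adding more nodes just repeats the same steps. In the commands below the master is binary-master (172.31.93.210) and the node is binary-node (172.31.93.211).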

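As in the previous article on the kubeadm installation, first do the same initialization: disable the firewall, disable SELinux, disable swap, set the hostnames, add hosts entries on the master, pass bridged IPv4 traffic to the iptables chains, and synchronize time.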

Deploying the etcd cluster

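etcd is a distributed key-value store, and Kubernetes stores its data in etcd, so an etcd database is prepared first. To avoid a single point of failure, etcd should be deployed as a cluster. Here only 2 machines form the cluster, which is enough for practice but cannot survive the loss of either member: a 2-member cluster needs both members for quorum.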

A 3-member cluster can tolerate 1 machine failure; a 5-member cluster can tolerate 2.
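Both numbers follow from the quorum rule: a cluster of n members needs floor(n/2)+1 members to agree, so it survives n minus that many failures. A small bash sketch of the arithmetic (`etcd_fault_tolerance` is a hypothetical helper name, not an etcd command):

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints how many member failures an etcd cluster
# of size n can survive, using quorum = floor(n/2) + 1.
etcd_fault_tolerance() {
  local n=$1
  local quorum=$(( n / 2 + 1 ))
  echo $(( n - quorum ))
}

# Print the table for small cluster sizes.
for n in 1 2 3 4 5; do
  echo "members=$n quorum=$(( n / 2 + 1 )) tolerates=$(etcd_fault_tolerance "$n")"
done
```

For the 2 members used here it prints tolerates=0, and 4 members tolerate no more failures than 3, which is why odd member counts are the usual choice.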

Note: to save machines, etcd is co-located with the K8s nodes here. It can also be deployed outside the k8s cluster, as long as the apiserver can reach it.

1. Install the cfssl certificate tool

cfssl is an open-source certificate management tool that generates certificates from JSON files and is more convenient to use than openssl.

Run this on any server; the master node is used here:

```bash
wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
mv cfssl_linux-amd64 /usr/local/bin/cfssl
mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo
```

The three commands are now available:

```
[root@binary-master bin]# pwd
/usr/local/bin
[root@binary-master bin]# ll
total 18808
-rwxr-xr-x 1 root root 10376657 Mar 30 2016 cfssl
-rwxr-xr-x 1 root root 6595195 Mar 30 2016 cfssl-certinfo
-rwxr-xr-x 1 root root 2277873 Mar 30 2016 cfssljson
```

2. Generate the etcd certificates

(1) Self-signed certificate authority (CA)

Create the working directories:

```bash
mkdir -p ~/TLS/{etcd,k8s}
cd ~/TLS/etcd
```

Self-sign the CA using two JSON files:

```bash
cat > ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

cat > ca-csr.json << EOF
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF
```

Generate the CA certificate (initialization):

```bash
cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
```

```
[root@binary-master etcd]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
2021/03/22 17:36:34 [INFO] generating a new CA key and certificate from CSR
2021/03/22 17:36:34 [INFO] generate received request
2021/03/22 17:36:34 [INFO] received CSR
2021/03/22 17:36:34 [INFO] generating key: rsa-2048
2021/03/22 17:36:35 [INFO] encoded CSR
2021/03/22 17:36:35 [INFO] signed certificate with serial number 558179141153695072425640815117027228526462947382
[root@binary-master etcd]# ls *pem
ca-key.pem ca.pem
```

(2) Use the self-signed CA to issue the etcd HTTPS certificate

Create the certificate signing request file:

```bash
cat > server-csr.json << EOF
{
  "CN": "etcd",
  "hosts": [
    "172.31.93.210",
    "172.31.93.211"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}
EOF
```

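Note: the IPs in the hosts field above are the internal cluster-communication IPs of all etcd nodes, and none may be left out. To make later scaling easier, you can add a few reserved IPs.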

Generate the certificate:

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
```

```
[root@binary-master etcd]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
2021/03/22 17:50:56 [INFO] generate received request
2021/03/22 17:50:56 [INFO] received CSR
2021/03/22 17:50:56 [INFO] generating key: rsa-2048
2021/03/22 17:50:56 [INFO] encoded CSR
2021/03/22 17:50:56 [INFO] signed certificate with serial number 487201065380785180427832079165743752767773807902
2021/03/22 17:50:56 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements").
[root@binary-master etcd]# ls *.pem
ca-key.pem ca.pem server-key.pem server.pem
```

server-key.pem and server.pem are the etcd certificates needed later.

3. Deploy the etcd cluster

etcd download URL: https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz

The following steps are done on the master. To keep things simple, all files generated on the master will be copied to the node afterwards.

(1) Create the working directories and extract the binary package

```bash
mkdir -p /opt/etcd/{bin,cfg,ssl}
tar zxvf etcd-v3.4.9-linux-amd64.tar.gz
mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/
```

(2) Create the etcd configuration file

```bash
cat > /opt/etcd/cfg/etcd.conf << EOF
#[Member]
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://172.31.93.210:2380"
ETCD_LISTEN_CLIENT_URLS="https://172.31.93.210:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.31.93.210:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://172.31.93.210:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://172.31.93.210:2380,etcd-2=https://172.31.93.211:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
```

Explanation:
- ETCD_NAME: node name, unique within the cluster
- ETCD_DATA_DIR: data directory
- ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) communication
- ETCD_LISTEN_CLIENT_URLS: listen address for client access
- ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster
- ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients
- ETCD_INITIAL_CLUSTER: addresses of all cluster members
- ETCD_INITIAL_CLUSTER_TOKEN: cluster token
- ETCD_INITIAL_CLUSTER_STATE: state when joining; new for a new cluster, existing to join an existing cluster

(3) Manage etcd with systemd

```bash
cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/opt/etcd/cfg/etcd.conf
ExecStart=/opt/etcd/bin/etcd \
--cert-file=/opt/etcd/ssl/server.pem \
--key-file=/opt/etcd/ssl/server-key.pem \
--peer-cert-file=/opt/etcd/ssl/server.pem \
--peer-key-file=/opt/etcd/ssl/server-key.pem \
--trusted-ca-file=/opt/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
--logger=zap
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

(4) Copy the generated certificates

Copy the certificates generated earlier to the paths set in etcd.service:

```bash
cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/
```

That is, four .pem files:

```
[root@binary-master ssl]# pwd
/opt/etcd/ssl
[root@binary-master ssl]# ll
total 16
-rw------- 1 root root 1679 Mar 22 22:03 ca-key.pem
-rw-r--r-- 1 root root 1265 Mar 22 22:03 ca.pem
-rw------- 1 root root 1679 Mar 22 22:03 server-key.pem
-rw-r--r-- 1 root root 1330 Mar 22 22:03 server.pem
```

At this point, etcd is set up on the master.

(5) Copy all files generated on the master to the node

```bash
scp -r /opt/etcd/ root@172.31.93.211:/opt/
scp /usr/lib/systemd/system/etcd.service root@172.31.93.211:/usr/lib/systemd/system/
```

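(6) On the node, edit etcd.conf: change the node name (ETCD_NAME, e.g. etcd-2) and replace the master's IP with this server's IP in the listen and advertise URLs. ETCD_INITIAL_CLUSTER stays the same on every member.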
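The edit in step (6) can be scripted. A minimal sketch, assuming GNU sed (as on CentOS 7) and the etcd.conf layout above; `adapt_etcd_conf` is a hypothetical helper name. It renames ETCD_NAME and swaps the IP only in the `*_URLS` lines, leaving ETCD_INITIAL_CLUSTER alone because that line must list every member with its own IP.

```bash
#!/usr/bin/env bash
# Hypothetical helper: adapt a copied etcd.conf for another member.
# Usage: adapt_etcd_conf <file> <new-name> <old-ip> <new-ip>
adapt_etcd_conf() {
  local file=$1 name=$2 old_ip=$3 new_ip=$4
  local old_re
  # Escape the dots so sed matches the old IP literally.
  old_re=$(printf '%s' "$old_ip" | sed 's/\./\\./g')
  # 1) rename the member; 2) rewrite the IP only on ETCD_*_URLS lines,
  #    so ETCD_INITIAL_CLUSTER (no "_URLS=") is left untouched.
  sed -i \
    -e "s/^ETCD_NAME=.*/ETCD_NAME=\"${name}\"/" \
    -e "/^ETCD_.*_URLS=/s/${old_re}/${new_ip}/g" \
    "$file"
}
```

On the node this would be run as `adapt_etcd_conf /opt/etcd/cfg/etcd.conf etcd-2 172.31.93.210 172.31.93.211`.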

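(7) Start etcd and enable it at boot on both the master and the node. When the first member starts, `systemctl start etcd` may appear to hang until the second member comes up, because a new 2-member cluster cannot reach quorum on its own.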

```bash
systemctl daemon-reload
systemctl start etcd
systemctl enable etcd
```

(8) Check the cluster status with etcdctl

```bash
/opt/etcd/bin/etcdctl \
  --cacert=/opt/etcd/ssl/ca.pem \
  --cert=/opt/etcd/ssl/server.pem \
  --key=/opt/etcd/ssl/server-key.pem \
  --endpoints="https://172.31.93.210:2379,https://172.31.93.211:2379" \
  endpoint health
```

```
[root@binary-master ssl]# /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://172.31.93.210:2379,https://172.31.93.211:2379" endpoint health
https://172.31.93.210:2379 is healthy: successfully committed proposal: took = 11.468435ms
https://172.31.93.211:2379 is healthy: successfully committed proposal: took = 12.058593ms
```

If you see output like the above, the etcd cluster has been deployed successfully. If something is wrong, check the logs first: /var/log/messages or `journalctl -u etcd`.
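When this check is part of a script, "looks like the above" can be made explicit. A minimal sketch, assuming the `endpoint health` output format shown above; `all_endpoints_healthy` is a hypothetical helper that reads that output on stdin and succeeds only if every line reports "is healthy".

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads `etcdctl ... endpoint health` output on stdin
# and returns 0 only if every non-empty line reports "is healthy".
all_endpoints_healthy() {
  local total=0 healthy=0 line
  while IFS= read -r line; do
    [ -z "$line" ] && continue
    total=$(( total + 1 ))
    case "$line" in
      *" is healthy:"*) healthy=$(( healthy + 1 )) ;;
    esac
  done
  echo "${healthy}/${total} endpoints healthy"
  [ "$total" -gt 0 ] && [ "$healthy" -eq "$total" ]
}
```

Usage: pipe the etcdctl command above into it with `2>&1 | all_endpoints_healthy`, so that error lines etcdctl writes to stderr for unhealthy endpoints are counted as failures too.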

Installing Docker from binaries on all nodes

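When the cluster was installed with kubeadm earlier, Docker was installed with yum. Since this cluster is installed from binaries, Docker is installed from binaries as well. yum works too; it is just a different installation method. Without internet access, uploading the binary package is the only option.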

Download URL: https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz

1. Extract the binary package

```bash
tar zxvf docker-19.03.9.tgz
mv docker/* /usr/bin
```

2. Manage docker with systemd

```bash
cat > /usr/lib/systemd/system/docker.service << EOF
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP \$MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF
```

Note the `\$MAINPID`: inside an unquoted here-document, an unescaped `$MAINPID` would be expanded by the shell (to an empty string) before systemd ever sees it.

3. Create the configuration file and set up the Alibaba Cloud registry mirror

```bash
mkdir -p /etc/docker
cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]
}
EOF
```

4. Start docker and enable it at boot

```bash
systemctl daemon-reload
systemctl start docker
systemctl enable docker
```

5. Copy docker-19.03.9.tgz to the other nodes with scp and repeat the steps above on each of them.

Deploying the master node

The master node runs three components: apiserver, controller-manager and scheduler.

The deployment steps are the same for each:

- Create the configuration file
- Manage the component service with systemd
- Start it and enable it at boot

In addition, the apiserver needs its own certificate generated and configured (as with etcd), and the TLS Bootstrapping mechanism enabled.

1. Generate the kube-apiserver certificate

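The process is the same as generating the etcd certificates above. Two folders, etcd and k8s, were created under the TLS directory earlier; the apiserver certificate is generated in the k8s folder: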

```
[root@binary-master TLS]# pwd
/root/TLS
[root@binary-master TLS]# ls
etcd k8s
[root@binary-master TLS]# cd k8s/
```

(1) Self-signed certificate authority (CA)

```bash
cat > ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

cat > ca-csr.json << EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
```

(2) Generate the CA certificate (initialization)

```bash
cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
```

```
[root@binary-master k8s]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
2021/03/23 16:48:08 [INFO] generating a new CA key and certificate from CSR
2021/03/23 16:48:08 [INFO] generate received request
2021/03/23 16:48:08 [INFO] received CSR
2021/03/23 16:48:08 [INFO] generating key: rsa-2048
2021/03/23 16:48:08 [INFO] encoded CSR
2021/03/23 16:48:08 [INFO] signed certificate with serial number 674155349167693061708935668878485858330936601612
[root@binary-master k8s]# ls *pem
ca-key.pem ca.pem
```

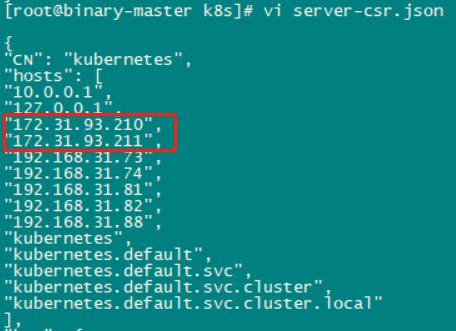

(3) Use the self-signed CA to issue the kube-apiserver HTTPS certificate

Create the certificate signing request file:

```bash
cat > server-csr.json << EOF
{
  "CN": "kubernetes",
  "hosts": [
    "10.0.0.1",
    "127.0.0.1",
    "172.31.93.210",
    "172.31.93.211",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
```

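Note: the hosts field must list every IP and name that clients use to reach the apiserver: here the first address of the Service range (10.0.0.1, the ClusterIP of the kubernetes Service), 127.0.0.1, the cluster's node IPs, and the kubernetes.default.* names. Any trusted IP that is missing will cause x509 errors later.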
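Because a missing IP only shows up later as an x509 error from the kubelet, it can be worth checking the request file before signing it. A rough sketch: `missing_csr_hosts` is a hypothetical helper, and it does a plain text search for each quoted value rather than real JSON parsing (so it would also accept a match in the CN field).

```bash
#!/usr/bin/env bash
# Hypothetical helper: print which of the given IPs/names do not appear
# (as a quoted string) in a cfssl CSR JSON file; returns 1 if any is missing.
missing_csr_hosts() {
  local csr=$1; shift
  local h missing=0
  for h in "$@"; do
    # -F: fixed string, so the dots in an IP are not regex wildcards.
    if ! grep -qF "\"$h\"" "$csr"; then
      echo "missing: $h"
      missing=1
    fi
  done
  return "$missing"
}
```

For this cluster: `missing_csr_hosts server-csr.json 10.0.0.1 127.0.0.1 172.31.93.210 172.31.93.211` prints nothing and returns 0 when every address is present.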

(4) Generate the certificate

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
```

```
[root@binary-master k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
2021/03/23 16:54:41 [INFO] generate received request
2021/03/23 16:54:41 [INFO] received CSR
2021/03/23 16:54:41 [INFO] generating key: rsa-2048
2021/03/23 16:54:41 [INFO] encoded CSR
2021/03/23 16:54:41 [INFO] signed certificate with serial number 321029219572130619553803174282705384482149958458
2021/03/23 16:54:41 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements").
[root@binary-master k8s]# ls server*pem
server-key.pem server.pem
```

server-key.pem and server.pem are the self-signed apiserver certificates needed later.

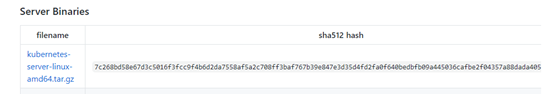

2. Download the kubernetes-server-linux-amd64.tar.gz package from GitHub

Download page: https://github.com/kubernetes/kubernetes/tree/master/CHANGELOG

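Choose the version you want; each version page lists many packages. Make sure to download the server package (Server Binaries), which already contains both the master and the node binaries.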

3. Extract the binary package, create the working directories, and copy the required tools

```bash
tar zxvf kubernetes-server-linux-amd64.tar.gz
```

Directory layout:

```
[root@binary-master kubernetes]# ll
total 32792
drwxr-xr-x 2 root root 6 Aug 26 2020 addons
-rw-r--r-- 1 root root 33576969 Aug 26 2020 kubernetes-src.tar.gz
drwxr-xr-x 3 root root 49 Aug 26 2020 LICENSES
drwxr-xr-x 3 root root 17 Aug 26 2020 server
```

Then run:

```bash
# Create the working directories
mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}
# Copy the required binaries
cd kubernetes/server/bin
cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin
cp kubectl /usr/bin/
```

4. Deploy kube-apiserver

(1) Create the configuration file kube-apiserver.conf

```bash
cat > /opt/kubernetes/cfg/kube-apiserver.conf << EOF
KUBE_APISERVER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--etcd-servers=https://172.31.93.210:2379,https://172.31.93.211:2379 \\
--bind-address=172.31.93.210 \\
--secure-port=6443 \\
--advertise-address=172.31.93.210 \\
--allow-privileged=true \\
--service-cluster-ip-range=10.0.0.0/24 \\
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
--authorization-mode=RBAC,Node \\
--enable-bootstrap-token-auth=true \\
--token-auth-file=/opt/kubernetes/cfg/token.csv \\
--service-node-port-range=30000-32767 \\
--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\
--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\
--tls-cert-file=/opt/kubernetes/ssl/server.pem \\
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
--client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--etcd-cafile=/opt/etcd/ssl/ca.pem \\
--etcd-certfile=/opt/etcd/ssl/server.pem \\
--etcd-keyfile=/opt/etcd/ssl/server-key.pem \\
--audit-log-maxage=30 \\
--audit-log-maxbackup=3 \\
--audit-log-maxsize=100 \\
--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"
EOF
```

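Explanation: in each `\\` above, the first backslash is an escape character and the second is the line-continuation backslash. The escape is needed because the here-document delimited by an unquoted EOF would otherwise consume a lone `\` at the end of a line; with `\\`, a literal `\` plus newline is kept in the generated file.

- --logtostderr: false writes logs to files instead of stderr
- --v: log level
- --log-dir: log directory
- --etcd-servers: etcd cluster addresses
- --bind-address: listen address
- --secure-port: HTTPS secure port
- --advertise-address: address advertised to the cluster
- --allow-privileged: allow privileged containers
- --service-cluster-ip-range: virtual IP range for Services
- --enable-admission-plugins: admission control plugins
- --authorization-mode: authorization modes; enables RBAC and Node authorization (node self-management)
- --enable-bootstrap-token-auth: enable bootstrap token authentication for TLS bootstrapping
- --token-auth-file: bootstrap token file
- --service-node-port-range: default port range allocated to NodePort Services
- --kubelet-client-xxx: client certificate the apiserver uses to access kubelets
- --tls-xxx-file: apiserver HTTPS certificate
- --etcd-xxxfile: certificates for connecting to the etcd cluster
- --audit-log-xxx: audit log settings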
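The escaping rules can be checked with a throwaway here-document. A minimal sketch (`render_demo` is a hypothetical name) that also shows why the systemd unit files in this article write `\$KUBE_APISERVER_OPTS`: an unescaped `$` inside an unquoted here-document would be expanded by the shell.

```bash
#!/usr/bin/env bash
# Prints a small file body through an unquoted here-document, the same way
# kube-apiserver.conf is written, to show how "\\", "\" and "\$" behave.
render_demo() {
cat << EOF
DOUBLE="--v=2 \\
--log-dir=/opt/kubernetes/logs"
SINGLE="--v=2 \
--log-dir=/opt/kubernetes/logs"
LITERAL=\$KUBE_APISERVER_OPTS
EOF
}

# DOUBLE keeps "\" + newline (two lines in the output),
# SINGLE is joined into one line, LITERAL keeps the "$" unexpanded.
render_demo
```

The doubled form keeps one option per line in the generated file, while a single `\` simply produces one long line.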

Usually only the IP addresses in this file need to be changed.

(2) Enable the TLS Bootstrapping mechanism

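TLS Bootstrapping: once the master's apiserver enables TLS authentication, the kubelet and kube-proxy on each node must present a valid certificate signed by the CA to talk to kube-apiserver. With many nodes, issuing these client certificates by hand is a lot of work and makes scaling the cluster more complex. To simplify this, Kubernetes introduced TLS bootstrapping to issue client certificates automatically: the kubelet requests a certificate from the apiserver as a low-privilege user, and the certificate is signed dynamically on the master side (by kube-controller-manager, using the --cluster-signing-* CA configured below). This approach is strongly recommended on nodes. It is currently used mainly for the kubelet; kube-proxy still uses a certificate we issue ourselves.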

That is, these lines in the configuration file above:

- `--enable-bootstrap-token-auth=true`: turns the mechanism on
- `--token-auth-file=/opt/kubernetes/cfg/token.csv`: specifies the bootstrap token file

So create the token file referenced in that configuration:

```bash
cat > /opt/kubernetes/cfg/token.csv << EOF
c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"
EOF
```

File format: token,username,UID,group
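The token value above is simply a random 32-character hex string. If you want your own, one common way is to read random bytes from /dev/urandom (a sketch; `gen_bootstrap_token` is a hypothetical helper). Whatever value you pick must be used both in token.csv and later in bootstrap.kubeconfig.

```bash
#!/usr/bin/env bash
# Generate a random 32-hex-character bootstrap token:
# 16 random bytes, printed as hex by od, with spaces/newlines removed.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

TOKEN=$(gen_bootstrap_token)
# Print a token.csv line in the format token,username,UID,group
echo "${TOKEN},kubelet-bootstrap,10001,\"system:node-bootstrapper\""
```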

(3) Copy the apiserver certificates just generated to the path specified in the configuration file:

```bash
cp ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem /opt/kubernetes/ssl/
```

```
[root@binary-master ssl]# pwd
/opt/kubernetes/ssl
[root@binary-master ssl]# ll
total 16
-rw------- 1 root root 1675 Mar 24 16:02 ca-key.pem
-rw-r--r-- 1 root root 1359 Mar 24 16:02 ca.pem
-rw------- 1 root root 1675 Mar 24 16:02 server-key.pem
-rw-r--r-- 1 root root 1659 Mar 24 16:02 server.pem
```

(4) Manage the apiserver with systemd

```bash
cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf
ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
```

(5) Start it and enable it at boot

```bash
systemctl daemon-reload
systemctl start kube-apiserver
systemctl enable kube-apiserver
```

(6) Authorize the kubelet-bootstrap user to request certificates

```bash
kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap
```

Output:

```
[root@binary-master ssl]# kubectl create clusterrolebinding kubelet-bootstrap \
> --clusterrole=system:node-bootstrapper \
> --user=kubelet-bootstrap
clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created
```

5. Deploy kube-controller-manager

(1) Create the configuration file

```bash
cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--leader-elect=true \\
--master=127.0.0.1:8080 \\
--bind-address=127.0.0.1 \\
--allocate-node-cidrs=true \\
--cluster-cidr=10.244.0.0/16 \\
--service-cluster-ip-range=10.0.0.0/24 \\
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--root-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--experimental-cluster-signing-duration=87600h0m0s"
EOF
```

Explanation:
- --master: connect to the apiserver through the local insecure port 8080
- --leader-elect: elect a leader automatically when several instances run (HA)
- --cluster-signing-cert-file / --cluster-signing-key-file: the CA used to issue kubelet certificates automatically; must be the same CA as the apiserver's

These settings generally don't need to be changed; they are all used for internal communication.

(2) Manage controller-manager with systemd

```bash
cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf
ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
```

(3) Start it and enable it at boot

```bash
systemctl daemon-reload
systemctl start kube-controller-manager
systemctl enable kube-controller-manager
```

6. Deploy kube-scheduler

(1) Create the configuration file

```bash
cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF
KUBE_SCHEDULER_OPTS="--logtostderr=false \
--v=2 \
--log-dir=/opt/kubernetes/logs \
--leader-elect \
--master=127.0.0.1:8080 \
--bind-address=127.0.0.1"
EOF
```

Explanation:
- --master: connect to the apiserver through the local insecure port 8080
- --leader-elect: elect a leader automatically when several instances run (HA)

(2) Manage the scheduler with systemd

```bash
cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf
ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
```

(3) Start it and enable it at boot

```bash
systemctl daemon-reload
systemctl start kube-scheduler
systemctl enable kube-scheduler
```

7. Check the cluster status

All three components have now started. Check the current status of the cluster components with kubectl:

```
[root@binary-master cfg]# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-1               Healthy   {"health":"true"}
etcd-0               Healthy   {"health":"true"}
```

This output shows that all master components are running normally, so the master deployment is complete.

Deploying the worker node

1. Create the working directories and copy the binaries

On the node, create the working directories:

```bash
mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}
```

From the master, copy the binaries from the downloaded package to the node with scp:

```
[root@binary-master bin]# pwd
/root/kubernetes/server/bin
[root@binary-master bin]# ls
apiextensions-apiserver kube-apiserver kube-controller-manager kubectl kube-proxy.docker_tag kube-scheduler.docker_tag kubeadm kube-apiserver.docker_tag kube-controller-manager.docker_tag kubelet kube-proxy.tar kube-scheduler.tar kube-aggregator kube-apiserver.tar kube-controller-manager.tar kube-proxy kube-scheduler mounter
[root@binary-master bin]# scp kubelet kube-proxy root@172.31.93.211:/opt/kubernetes/bin
```

The worker node now has the kubelet and kube-proxy binaries:

```
[root@binary-node bin]# pwd
/opt/kubernetes/bin
[root@binary-node bin]# ll
total 145296
-rwxr-xr-x 1 root root 109974472 Mar 30 14:41 kubelet
-rwxr-xr-x 1 root root 38805504 Mar 30 14:41 kube-proxy
```

2. Deploy kubelet

(1) Create the configuration file

```bash
cat > /opt/kubernetes/cfg/kubelet.conf << EOF
KUBELET_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--hostname-override=binary-node \\
--network-plugin=cni \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--config=/opt/kubernetes/cfg/kubelet-config.yml \\
--cert-dir=/opt/kubernetes/ssl \\
--pod-infra-container-image=lizhenliang/pause-amd64:3.0"
EOF
```

Explanation:
- --hostname-override: display name, unique within the cluster
- --network-plugin: enable CNI
- --kubeconfig: initially a nonexistent path; the file is generated automatically and later used to connect to the apiserver
- --bootstrap-kubeconfig: used on first start to request a certificate from the apiserver
- --config: configuration parameter file
- --cert-dir: directory where the kubelet certificates are generated
- --pod-infra-container-image: image of the container that manages the Pod network

Usually only --hostname-override needs to be changed (it must be unique for each node).

(2) Create the parameter file

```bash
cat > /opt/kubernetes/cfg/kubelet-config.yml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
EOF
```

(3) Generate the bootstrap.kubeconfig file on the master, then copy it to the node

This needs the kubectl command, so run it on the master:

```bash
# apiserver IP:PORT
KUBE_APISERVER="https://172.31.93.210:6443"
# Must match the token in token.csv
TOKEN="c47ffb939f5ca36231d9e3121a252940"

# Generate the kubelet bootstrap kubeconfig file
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig
kubectl config set-credentials "kubelet-bootstrap" \
  --token=${TOKEN} \
  --kubeconfig=bootstrap.kubeconfig
kubectl config set-context default \
  --cluster=kubernetes \
  --user="kubelet-bootstrap" \
  --kubeconfig=bootstrap.kubeconfig
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig
```

Run the commands one by one; they generate a bootstrap.kubeconfig file:

```
[root@binary-master ~]# KUBE_APISERVER="https://172.31.93.210:6443"
[root@binary-master ~]# TOKEN="c47ffb939f5ca36231d9e3121a252940"
[root@binary-master ~]# kubectl config set-cluster kubernetes \
> --certificate-authority=/opt/kubernetes/ssl/ca.pem \
> --embed-certs=true \
> --server=${KUBE_APISERVER} \
> --kubeconfig=bootstrap.kubeconfig
Cluster "kubernetes" set.
[root@binary-master ~]# kubectl config set-credentials "kubelet-bootstrap" \
> --token=${TOKEN} \
> --kubeconfig=bootstrap.kubeconfig
User "kubelet-bootstrap" set.
[root@binary-master ~]# kubectl config set-context default \
> --cluster=kubernetes \
> --user="kubelet-bootstrap" \
> --kubeconfig=bootstrap.kubeconfig
Context "default" created.
[root@binary-master ~]# kubectl config use-context default --kubeconfig=bootstrap.kubeconfig
Switched to context "default".
[root@binary-master ~]# cat bootstrap.kubeconfig
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUR2akNDQXFhZ0F3SUJBZ0lVZGhZcjMwN2hSRVJQc3lJbGZYMXBPTlFiN0F3d0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pURUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeEREQUtCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUk13RVFZRFZRUURFd3ByCmRXSmxjbTVsZEdWek1CNFhEVEl4TURNeU16QTRORE13TUZvWERUSTJNRE15TWpBNE5ETXdNRm93WlRFTE1Ba0cKQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbGFXcHBibWN4RERBSwpCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUk13RVFZRFZRUURFd3ByZFdKbGNtNWxkR1Z6Ck1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBd2RvYzcyOWNkd2dEODBGUzdZb1EKRjlScEdHb1BqMEJaTzdpL3dNaGRkRi9XVXZSem81ZHF0N1pzM25VS2VPU3dGUzg5SlNMOWZndVhuQVZ5RXZNcQpwQzBxMk8zZlAvbG5ua203bmlzeDJXYStHYVZuYUphRkRQMmkrbG1CWnhVeXVibTlHMFdydXJ3aEcydGxuVHMxClpwaG9JVWJoMngxRHJub2VldFI3Rk11UXpDZzJrUmhQOTFFY0xITVR6YUhwVnNjYnk1ZTh3NXZCbjU4YWRselEKZThKVkFSemFHUVZMREZ0OUpZQlNoaUYwSmF6RHJCMmlBdzE5VklFY3MrSUdjdjlFWHVMTjdkKytGbWwyZWJoego1WmNoWEIxZHhvUUhadFZvcDRTVzUwQ005YzRuQkdCbFVJVTN4VTBvVTlnVkNSQnI2MDlGTkZzUmJ1RjZMc2VZCmVRSURBUUFCbzJZd1pEQU9CZ05WSFE4QkFmOEVCQU1DQVFZd0VnWURWUjBUQVFIL0JBZ3dCZ0VCL3dJQkFqQWQKQmdOVkhRNEVGZ1FVK3BKL0dPczRrL3hub2N2L3FGajZyaW5Uc244d0h3WURWUjBqQkJnd0ZvQVUrcEovR09zNAprL3hub2N2L3FGajZyaW5Uc244d0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFCTW5LV1lUeXQxQmJ1Q0h0SDRFCjNhVDB4S0RNNUZKcVR6bU1vV1ZWWExtZjlTV0V5UU13QlErK0dvOUo3NlBqOVYwV0tSSk0rNFdOam9WWEViZnMKaUdDSlhubE5kZk5aSFplSzN6U1dhNFl2M1kzTmlaUVpxaGtHUHFTdXJuempjd0FKVlU4dzlZUWxXaTBFaFdjVApXanBBSitWRVlWWjh6aWF2RWZ3OXhjaTZ0UGhSYlpxazU2MlBBUkNvWUx5RC9DQmpnSFljejQ3YVcvSWNINHNECmFJODBuUWpzbldOSEFvR0JBQmJ2TnJRNzRSanF0M3pQcnlRYWhxVGp6OUczVmRSS2REYnJKVjN2VG8zN3dZQ3AKRkpVTTgvdlc0dGhxUTBwd3dTRXdHWUNOYXVaRnczWWg5SzA5SDg3T2NTUWxlTEdvYWc4YzdqelAyUk0xcFZyNQo0c2M9Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K
    server: https://172.31.93.210:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubelet-bootstrap
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kubelet-bootstrap
  user:
    token: c47ffb939f5ca36231d9e3121a252940
```

Copy this file to the node's configuration directory:

```bash
scp bootstrap.kubeconfig root@172.31.93.211:/opt/kubernetes/cfg
```

(4) Manage kubelet with systemd

```bash
cat > /usr/lib/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet
After=docker.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf
ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

(5) Start it and enable it at boot

```bash
systemctl daemon-reload
systemctl start kubelet
systemctl enable kubelet
```

3. Approve the kubelet certificate request on the master and join the node to the cluster

Because TLS Bootstrapping was enabled on the apiserver, the kubelet sends a TLS bootstrapping request to kube-apiserver when it starts.

On the master, `kubectl get csr` shows the node's request that has not been approved yet:

```
[root@binary-master k8s]# kubectl get csr
NAME AGE SIGNERNAME REQUESTOR CONDITION
node-csr-UdCmaoVbWhqqdxWo7gkP3pH5vzoqliQPtvvCzJ54DBM 7s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending
```

Then approve the request on the master with `kubectl certificate approve <CSR name>`, using the name from the NAME column:

```
[root@binary-master k8s]# kubectl certificate approve node-csr-UdCmaoVbWhqqdxWo7gkP3pH5vzoqliQPtvvCzJ54DBM
certificatesigningrequest.certificates.k8s.io/node-csr-UdCmaoVbWhqqdxWo7gkP3pH5vzoqliQPtvvCzJ54DBM approved
```

Running `kubectl get csr` again shows that the condition is now Approved,Issued:

```
[root@binary-master k8s]# kubectl get csr
NAME AGE SIGNERNAME REQUESTOR CONDITION
node-csr-UdCmaoVbWhqqdxWo7gkP3pH5vzoqliQPtvvCzJ54DBM 10m kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued
```
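With several nodes bootstrapping at once, copying CSR names by hand gets tedious. A minimal sketch, assuming the default `kubectl get csr` column layout shown above (CONDITION is the last column); `pending_csrs` is a hypothetical helper that only filters the text.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get csr` output on stdin and print
# the names of requests whose last column (CONDITION) is Pending.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

Usage: `kubectl get csr | pending_csrs` lists the candidates; after checking that they all come from your own nodes, they can be approved with `kubectl get csr | pending_csrs | xargs -r kubectl certificate approve`. Approving every pending request blindly would defeat the purpose of the approval step.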

Check the nodes:

```
[root@binary-master k8s]# kubectl get node
NAME          STATUS     ROLES    AGE   VERSION
binary-node   NotReady   <none>   13m   v1.19.0
```

Because no network plugin has been deployed yet, the node is not ready, which is why it shows NotReady.

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

PS: I ran into a problem here: `kubectl get csr` reported No resources found, i.e. the apiserver had not received any certificate request from the kubelet.

```
[root@binary-master k8s]# kubectl get csr
No resources found
```

When this happens, the usual checks are whether the user in the bootstrap.kubeconfig used by the kubelet is kubelet-bootstrap, and whether its token matches the token in the token.csv file used by kube-apiserver.

Both were correct. The kubelet log then showed an error: it could not create the certificate signing request.

```
F0330 17:59:28.096982 2887 server.go:265] failed to run Kubelet: cannot create certificate signing request: Post "https://172.31.93.210:6443/apis/certificates.k8s.io/v1/certificatesigningrequests": x509: certificate is valid for 10.0.0.1, 127.0.0.1, 192.168.31.71, 192.168.31.72, 192.168.31.73, 192.168.31.74, 192.168.31.81, 192.168.31.82, 192.168.31.88, not 172.31.93.210
goroutine 1 [running]:
```

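The cause: when the self-signed CA issued the apiserver certificate, the request file server-csr.json did not list all trusted IPs of the cluster, so the certificate was valid only for the 192.168.31.x addresses and not for 172.31.93.210.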

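Fixing that file, regenerating the certificate, putting the new certificate into the apiserver's certificate directory, and finally restarting the apiserver solved the problem.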

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

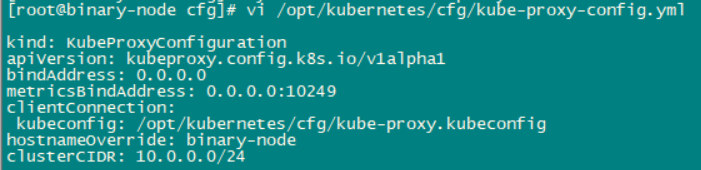

4. Deploy kube-proxy

(1) Create the configuration file

```bash
cat > /opt/kubernetes/cfg/kube-proxy.conf << EOF
KUBE_PROXY_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--config=/opt/kubernetes/cfg/kube-proxy-config.yml"
EOF
```

(2) Create the parameter file kube-proxy-config.yml

```bash
cat > /opt/kubernetes/cfg/kube-proxy-config.yml << EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: binary-node
clusterCIDR: 10.0.0.0/24
EOF
```

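Usually only hostnameOverride needs to be changed. Note that the kube-proxy configuration reference describes clusterCIDR as the CIDR range of the Pods in the cluster; 10.0.0.0/24 is the Service range here, so 10.244.0.0/16 (the --cluster-cidr given to kube-controller-manager) would be the consistent value.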

(3) Generate the kube-proxy.kubeconfig file

First generate the kube-proxy certificate on the master, because generating the .kubeconfig file requires it:

```
# Change to the working directory
[root@binary-master k8s]# cd ~/TLS/k8s
# Create the certificate signing request file
[root@binary-master k8s]# cat > kube-proxy-csr.json << EOF
> {
> "CN": "system:kube-proxy",
> "hosts": [],
> "key": {
> "algo": "rsa",
> "size": 2048
> },
> "names": [
> {
> "C": "CN",
> "L": "BeiJing",
> "ST": "BeiJing",
> "O": "k8s",
> "OU": "System"
> }
> ]
> }
> EOF
# Generate the certificate
[root@binary-master k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
2021/03/31 15:04:11 [INFO] generate received request
2021/03/31 15:04:11 [INFO] received CSR
2021/03/31 15:04:11 [INFO] generating key: rsa-2048
2021/03/31 15:04:11 [INFO] encoded CSR
2021/03/31 15:04:11 [INFO] signed certificate with serial number 223055766142513681177047308544368105041301659941
2021/03/31 15:04:11 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements").
# Check the certificates
[root@binary-master k8s]# ls kube-proxy*pem
kube-proxy-key.pem kube-proxy.pem
```

Then generate the kube-proxy.kubeconfig file on the master and copy it to the node.

This also needs the kubectl command, so it is done on the master as well:

```bash
# apiserver IP:PORT
KUBE_APISERVER="https://172.31.93.210:6443"

# Generate the kube-proxy.kubeconfig file
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig
kubectl config set-credentials kube-proxy \
  --client-certificate=/root/TLS/k8s/kube-proxy.pem \
  --client-key=/root/TLS/k8s/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig
kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
```

Run the commands one by one; they generate a kube-proxy.kubeconfig file:

```
[root@binary-master ~]# KUBE_APISERVER="https://172.31.93.210:6443"
[root@binary-master ~]# kubectl config set-cluster kubernetes \
> --certificate-authority=/opt/kubernetes/ssl/ca.pem \
> --embed-certs=true \
> --server=${KUBE_APISERVER} \
> --kubeconfig=kube-proxy.kubeconfig
Cluster "kubernetes" set.
[root@binary-master ~]# kubectl config set-credentials kube-proxy --client-certificate=/root/TLS/k8s/kube-proxy.pem --client-key=/root/TLS/k8s/kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig
User "kube-proxy" set.
[root@binary-master ~]# kubectl config set-context default \
> --cluster=kubernetes \
> --user=kube-proxy \
> --kubeconfig=kube-proxy.kubeconfig
Context "default" created.
[root@binary-master ~]# kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
Switched to context "default".
```

Copy this file to the node's configuration directory:

```bash
scp kube-proxy.kubeconfig root@172.31.93.211:/opt/kubernetes/cfg
```

(4) Manage kube-proxy with systemd

```bash
cat > /usr/lib/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf
ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

(5) Start it and enable it at boot

```bash
systemctl daemon-reload
systemctl start kube-proxy
systemctl enable kube-proxy
```

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

PS: I ran into a problem here: kube-proxy failed to start, and the error log said: unable to load in-cluster configuration, KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT must be defined

```
[root@binary-node logs]# cat kube-proxy.binary-node.root.log.FATAL.20210402-181634.77665
Log file created at: 2021/04/02 18:16:34
Running on machine: binary-node
Binary: Built with gc go1.15 for linux/amd64
Log line format: [IWEF]mmdd hh:mm:ss.uuuuuu threadid file:line] msg
F0402 18:16:34.526797 77665 server.go:495] unable to load in-cluster configuration, KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT must be defined
```

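The cause was the line `kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig` in kube-proxy-config.yml: it must be indented (at least one leading space) so that it is nested under `clientConnection:`.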

After fixing the indentation, restart kube-proxy and it starts normally.
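This indentation mistake can be caught before restarting. When `kubeconfig:` starts at column 0, YAML treats it as a top-level key and `clientConnection` ends up empty, so kube-proxy has no kubeconfig path and presumably falls back to in-cluster configuration, which matches the error above. A minimal sketch; `check_kubeproxy_kubeconfig_indent` is a hypothetical helper that only looks at this one key and is not a YAML validator.

```bash
#!/usr/bin/env bash
# Hypothetical helper: flag kube-proxy-config.yml files where "kubeconfig:"
# starts at column 0 instead of being indented under "clientConnection:".
check_kubeproxy_kubeconfig_indent() {
  local file=$1
  if grep -q '^kubeconfig:' "$file"; then
    echo "kubeconfig is not nested under clientConnection in $file"
    return 1
  fi
  if ! grep -q '^[[:space:]][[:space:]]*kubeconfig:' "$file"; then
    echo "no indented kubeconfig entry found in $file"
    return 1
  fi
  echo "ok"
}
```

On the node: `check_kubeproxy_kubeconfig_indent /opt/kubernetes/cfg/kube-proxy-config.yml`.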

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

5. Deploy the CNI network

(1) Prepare the CNI binaries on the node

Download URL: https://github.com/containernetworking/plugins/releases/download/v0.8.6/cni-plugins-linux-amd64-v0.8.6.tgz

Extract the archive into the default working directory:

mkdir -p /opt/cni/bin
tar zxvf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin

All the binaries are now in /opt/cni/bin/:

[root@binary-node ~]# ll
total 95336
-rw-------. 1 root root 1260 Mar 19 01:44 anaconda-ks.cfg
-rw-r--r-- 1 root root 36878412 Mar 31 18:20 cni-plugins-linux-amd64-v0.8.6.tgz
drwxrwxr-x 2 1000 1000 6 Mar 23 14:35 docker
-rw-r--r-- 1 root root 60730088 Mar 23 14:35 docker-19.03.9.tgz
-rw-r--r-- 1 root root 4821 Mar 31 17:43 kube-flannel.yml
[root@binary-node ~]# tar zxvf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin
./
./flannel
./ptp
./host-local
./firewall
./portmap
./tuning
./vlan
./host-device
./bandwidth
./sbr
./static
./dhcp
./ipvlan
./macvlan
./loopback
./bridge
[root@binary-node ~]# ls /opt/cni/bin/
bandwidth bridge dhcp firewall flannel host-device host-local ipvlan loopback macvlan portmap ptp sbr static tuning vlan
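To confirm the extraction left behind the plugins this setup actually needs, a small check can be run on each node. This is a sketch under an assumption: the plugin list (flannel and portmap, which the stock kube-flannel.yml CNI config chains; bridge and host-local, which the flannel plugin delegates to by default; loopback, used for the pod's lo interface) is my reading of a typical flannel setup, not something stated in the original. The function name check_cni_plugins is hypothetical.

```shell
# Hypothetical helper: report any required CNI plugin binary that is missing
# or not executable. The plugin list below is an assumption for a default
# flannel deployment.
check_cni_plugins() {
  local dir="${1:-/opt/cni/bin}" p missing=0
  for p in flannel portmap bridge host-local loopback; do
    if [ ! -x "$dir/$p" ]; then
      echo "missing CNI plugin: $dir/$p" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage on the node:
#   check_cni_plugins && echo "CNI plugins present"
```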

(2) On the master, deploy the CNI network with kubectl apply; this step is the same as in the previous post's kubeadm installation

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
kubectl apply -f kube-flannel.yml

The output looks like this:

[root@binary-master ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
--2021-04-01 10:40:48-- https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.110.133, 185.199.111.133, 185.199.108.133, ...
Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.110.133|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 4821 (4.7K) [text/plain]
Saving to: ‘kube-flannel.yml’

100%[===================================================================================================================================================>] 4,821 --.-K/s in 0s

2021-04-01 10:40:48 (24.9 MB/s) - ‘kube-flannel.yml’ saved [4821/4821]

[root@binary-master ~]# kubectl apply -f kube-flannel.yml
podsecuritypolicy.policy/psp.flannel.unprivileged created
clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
daemonset.apps/kube-flannel-ds created
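The pod network flannel hands out comes from the "Network" field of net-conf.json inside kube-flannel.yml (the stock manifest ships with 10.244.0.0/16). Assuming, as is usual for a binary install, that kube-controller-manager was given a --cluster-cidr earlier, the two should agree, so it is worth printing the manifest's value before applying it. The function name flannel_network below is hypothetical, and the sed pattern assumes the "Network": "<cidr>" pair sits on one line, as in the stock file.

```shell
# Hypothetical helper: print the first "Network" CIDR found in kube-flannel.yml.
flannel_network() {
  local manifest="${1:-kube-flannel.yml}"
  sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$manifest" | head -n 1
}

# Usage on the master, before kubectl apply:
#   flannel_network kube-flannel.yml
```

If the printed CIDR differs from the cluster CIDR used elsewhere in the setup, edit net-conf.json in the manifest before running kubectl apply.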

Once the network plugin is deployed, the node becomes Ready:

[root@binary-master ~]# kubectl get pods -n kube-system
NAME                    READY   STATUS    RESTARTS   AGE
kube-flannel-ds-8zjwr   1/1     Running   6          9m33s
[root@binary-master ~]# kubectl get node
NAME          STATUS   ROLES    AGE   VERSION
binary-node   Ready    <none>   40h   v1.19.0

6. Adding another worker node

Suppose a new worker node, 172.31.93.212, needs to be added.

(1) Copy the node files from an existing node to the new node

On the already deployed worker node (172.31.93.211), copy the relevant files to the new worker node (172.31.93.212):

# Kubernetes working directory
scp -r /opt/kubernetes root@172.31.93.212:/opt/
# systemd service units
scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@172.31.93.212:/usr/lib/systemd/system
# CNI network plugins
scp -r /opt/cni/ root@172.31.93.212:/opt/
# CA certificate
scp /opt/kubernetes/ssl/ca.pem root@172.31.93.212:/opt/kubernetes/ssl

(2) After copying, delete the kubelet certificates and kubeconfig file on the new node

rm /opt/kubernetes/cfg/kubelet.kubeconfig
rm -f /opt/kubernetes/ssl/kubelet*

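These files are generated automatically once the node's certificate signing request is approved. They are different for every node, so they must be deleted and regenerated.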

(3) Change the hostname in the configuration

# Change the hostname in these two files to the new node's name

vi /opt/kubernetes/cfg/kubelet.conf           # --hostname-override=
vi /opt/kubernetes/cfg/kube-proxy-config.yml  # hostnameOverride:
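The same two edits can be scripted with sed, which helps when several nodes are added. A minimal sketch, assuming the option appears as --hostname-override=<name> in kubelet.conf and as a top-level hostnameOverride: <name> line in kube-proxy-config.yml; the function name set_node_name and the example name binary-node2 are hypothetical.

```shell
# Hypothetical helper: replace the node name in kubelet.conf and
# kube-proxy-config.yml (default directory /opt/kubernetes/cfg).
set_node_name() {
  local new="$1" dir="${2:-/opt/kubernetes/cfg}"
  # Replace the value up to the next whitespace or closing quote.
  sed -i "s/--hostname-override=[^[:space:]\"]*/--hostname-override=${new}/" "$dir/kubelet.conf"
  sed -i "s/^hostnameOverride:.*/hostnameOverride: ${new}/" "$dir/kube-proxy-config.yml"
}

# Usage on the new node, e.g. with its own hostname:
#   set_node_name "$(hostname)"
```

Check both files afterwards; the node registers under whatever name ends up in --hostname-override.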

(4) Start the services and enable them at boot

systemctl daemon-reload
systemctl start kubelet
systemctl enable kubelet
systemctl start kube-proxy
systemctl enable kube-proxy

(5) On the master, approve the new node's kubelet certificate request

kubectl get csr

kubectl certificate approve xxxxxxx
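When several nodes join at once, the pending request names can be filtered out of kubectl get csr instead of being copied by hand. This is a sketch under the assumption that CONDITION is the last column of kubectl get csr --no-headers (true for the v1.19 layout NAME AGE SIGNERNAME REQUESTOR CONDITION); the function name pending_csrs is hypothetical. Only approve requests whose REQUESTOR you recognize.

```shell
# Hypothetical helper: read "kubectl get csr --no-headers" output on stdin
# and print the names of requests whose last column is "Pending".
pending_csrs() {
  awk '$NF == "Pending" { print $1 }'
}

# Usage on the master (approves every pending request in one go):
#   kubectl get csr --no-headers | pending_csrs | xargs -r kubectl certificate approve
```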

(6) Check the node status to verify

kubectl get node

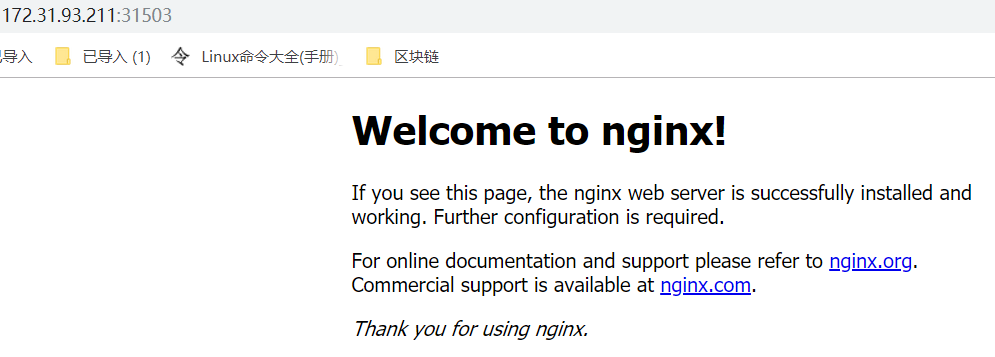

Testing the Kubernetes cluster

Create a pod in the Kubernetes cluster (nginx, as an example) and verify that it runs normally: kubectl create deployment nginx --image=nginx

[root@binary-master ~]# kubectl create deployment nginx --image=nginx
deployment.apps/nginx created
[root@binary-master ~]# kubectl get pod
NAME                     READY   STATUS              RESTARTS   AGE
nginx-6799fc88d8-2tf8z   0/1     ContainerCreating   0          15s

Expose the container's port 80 through a NodePort service:

kubectl expose deployment nginx --port=80 --type=NodePort

Check the pod and the service: kubectl get pod,svc

[root@binary-master flannel]# kubectl get pod,svc
NAME                         READY   STATUS    RESTARTS   AGE
pod/nginx-6799fc88d8-6g9q2   1/1     Running   0          12m

NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
service/kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP        10d
service/nginx        NodePort    10.0.0.149   <none>        80:31503/TCP   38m

The externally exposed port is 31503. To test, open the IP of any worker node with port 31503 in a browser, for example http://172.31.93.211:31503.
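The node port is assigned at random, so scripts should look it up rather than hard-code 31503. kubectl can return it directly with a JSONPath query on .spec.ports[0].nodePort; the sketch below additionally shows how to pull it out of a PORT(S) value such as 80:31503/TCP. The function name nodeport_from_ports is hypothetical.

```shell
# Hypothetical helper: extract the node port from a PORT(S) column value
# like "80:31503/TCP".
nodeport_from_ports() {
  printf '%s\n' "$1" | sed -n 's#^[0-9]*:\([0-9]*\)/.*#\1#p'
}

# Usage on the master:
#   port=$(kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}')
#   curl -I "http://172.31.93.211:${port}"
```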

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

P.S. I ran into another problem here: after the nginx pod was created, it stayed in the ContainerCreating state:

[root@binary-master flannel]# kubectl get pod
NAME                     READY   STATUS              RESTARTS   AGE
nginx-6799fc88d8-6g9q2   0/1     ContainerCreating   0          5s

Use kubectl describe pod nginx to view the pod's details:

[root@binary-master ~]# kubectl describe pod nginx
Name:         nginx-6799fc88d8-mlc22
Namespace:    default
Priority:     0
Node:         binary-node/172.31.93.211
Start Time:   Sat, 03 Apr 2021 20:59:57 +0800
Labels:       app=nginx
              pod-template-hash=6799fc88d8
Annotations:  <none>
Status:       Pending
IP:
IPs:          <none>
Controlled By:  ReplicaSet/nginx-6799fc88d8
Containers:
  nginx:
    Container ID:
    Image:          nginx
    Image ID:
    Port:           <none>
    Host Port:      <none>
    State:          Waiting
      Reason:       ContainerCreating
    Ready:          False
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-74sf7 (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             False
  ContainersReady   False
  PodScheduled      True
Volumes:
  default-token-74sf7:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-74sf7
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason                  Age                From                  Message
  ----     ------                  ----               ----                  -------
  Normal   Scheduled               95s                                      Successfully assigned default/nginx-6799fc88d8-mlc22 to binary-node
  Warning  FailedCreatePodSandBox  95s                kubelet, binary-node  Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "5240a4b7f8d25d59542c8ffb75937999c7d09c58056e7e7d7b9131d99635333b" network for pod "nginx-6799fc88d8-mlc22": networkPlugin cni failed to set up pod "nginx-6799fc88d8-mlc22_default" network: open /run/flannel/subnet.env: no such file or directory
  Warning  FailedCreatePodSandBox  94s                kubelet, binary-node  Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "09f9728baca36797f2efcc111fd8bd63749e8e40dd0a84780a6ddae447c10ffd" network for pod "nginx-6799fc88d8-mlc22": networkPlugin cni failed to set up pod "nginx-6799fc88d8-mlc22_default" network: open /run/flannel/subnet.env: no such file or directory
  Warning  FailedCreatePodSandBox  93s                kubelet, binary-node  Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "3453f4e978941bd442bcbaece321c79b48dff8633c87f33f7ef86c2d5de3ada4" network for pod "nginx-6799fc88d8-mlc22": networkPlugin cni failed to set up pod "nginx-6799fc88d8-mlc22_default" network: open /run/flannel/subnet.env: no such file or directory
  Warning  FailedCreatePodSandBox  92s                kubelet, binary-node  Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "25b8a4368c66c23b4ed5ab55b8f40b7020ff2fe5d1be25b0c605cd187be88ec4" network for pod "nginx-6799fc88d8-mlc22": networkPlugin cni failed to set up pod "nginx-6799fc88d8-mlc22_default" network: open /run/flannel/subnet.env: no such file or directory
  Warning  FailedCreatePodSandBox  91s                kubelet, binary-node  Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "eb2dcd49db7e1366df8cb2842b37b5dc86b7b07b9a87cb8572aa5907262631d3" network for pod "nginx-6799fc88d8-mlc22": networkPlugin cni failed to set up pod "nginx-6799fc88d8-mlc22_default" network: open /run/flannel/subnet.env: no such file or directory
  Warning  FailedCreatePodSandBox  90s                kubelet, binary-node  Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "8e65cc2af4f387b0dcd307d22513e074900f2879c323608a3986c960bcf3f4dd" network for pod "nginx-6799fc88d8-mlc22": networkPlugin cni failed to set up pod "nginx-6799fc88d8-mlc22_default" network: open /run/flannel/subnet.env: no such file or directory
  Warning  FailedCreatePodSandBox  89s                kubelet, binary-node  Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "67af2477a1e0aeac6f5254922ac83518a9cb54af6e667a9dc7e8643095fc2430" network for pod "nginx-6799fc88d8-mlc22": networkPlugin cni failed to set up pod "nginx-6799fc88d8-mlc22_default" network: open /run/flannel/subnet.env: no such file or directory
  Warning  FailedCreatePodSandBox  87s                kubelet, binary-node  Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "09d99848e555b858c0f76f2b55d3c55e73552f464e916ccc56547aa0d24995f1" network for pod "nginx-6799fc88d8-mlc22": networkPlugin cni failed to set up pod "nginx-6799fc88d8-mlc22_default" network: open /run/flannel/subnet.env: no such file or directory
  Warning  FailedCreatePodSandBox  86s                kubelet, binary-node  Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "5d1c24620fe5620f7f4abc098b011a3df99d0c9391e5724d6f8dcdc75568d7d2" network for pod "nginx-6799fc88d8-mlc22": networkPlugin cni failed to set up pod "nginx-6799fc88d8-mlc22_default" network: open /run/flannel/subnet.env: no such file or directory
  Normal   SandboxChanged          83s (x12 over 94s)  kubelet, binary-node  Pod sandbox changed, it will be killed and re-created.
  Warning  FailedCreatePodSandBox  82s (x4 over 85s)   kubelet, binary-node  (combined from similar events): Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "662c7162eb2922ba76cd4fd3eafd917e3d7e986331896fcee3abae2130e12613" network for pod "nginx-6799fc88d8-mlc22": networkPlugin cni failed to set up pod "nginx-6799fc88d8-mlc22_default" network: open /run/flannel/subnet.env: no such file or directory

The key message is the error reported by kubelet on binary-node: networkPlugin cni failed to set up pod "nginx-6799fc88d8-mlc22_default" network: open /run/flannel/subnet.env: no such file or directory

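So the problem lies with the CNI network plugin. /run/flannel/subnet.env is written by flannel (the kube-flannel-ds pod) once it is running on the node, and the flannel CNI plugin reads it when kubelet sets up a pod's network; a missing file means flannel had not come up properly on binary-node. My guess was that this traced back to kube-proxy failing to start on binary-node earlier.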

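I went through a lot of material online without finding a fix. In the end, simply restarting Docker on binary-node (systemctl restart docker) solved the problem.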
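When a pod is stuck like this, a quick first check on the worker node is whether flannel has written its subnet file at all. The sketch below is not part of the original troubleshooting; the function name check_flannel_subnet_env is hypothetical, and it relies only on the file being a list of shell-style KEY=VALUE lines (FLANNEL_NETWORK, FLANNEL_SUBNET, and so on).

```shell
# Hypothetical helper: succeed and print FLANNEL_SUBNET if flannel has written
# its subnet file on this node; fail otherwise.
check_flannel_subnet_env() {
  local f="${1:-/run/flannel/subnet.env}"
  if [ ! -f "$f" ]; then
    echo "$f not found: flannel is not running correctly on this node" >&2
    return 1
  fi
  # Source the KEY=VALUE lines in a subshell so nothing leaks into the caller.
  ( . "$f" && [ -n "${FLANNEL_SUBNET:-}" ] && echo "FLANNEL_SUBNET=${FLANNEL_SUBNET}" )
}

# Usage on the worker node:
#   check_flannel_subnet_env || kubectl -n kube-system get pods -o wide
```

If the file is missing, the flannel pod scheduled on that node (and its logs via kubectl -n kube-system logs) is the next place to look.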

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------