Deploying a Kubernetes Cluster (1.20.7) with kubeadm (Tested and Working)

Environment requirements

OS: CentOS 7.9

Hardware: CPU >= 2 cores, memory >= 4 GB

Node roles

| IP | Hostname | Role |
| --- | --- | --- |
| 192.167.100.11 | k8smaster | master |
| 192.167.100.12 | k8snode1 | node1 |
| 192.167.100.13 | k8snode2 | node2 |

Environment initialization (run on all three nodes)

Disable the firewall and SELinux

# systemctl stop firewalld && systemctl disable firewalld

# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config && setenforce 0

Disable swap

# swapoff -a    # temporary, until the next reboot

# sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab    # permanent

Set the hostname and configure hosts on each of the three hosts

# hostnamectl set-hostname k8smaster    # run on 192.167.100.11

# hostnamectl set-hostname k8snode1    # run on 192.167.100.12

# hostnamectl set-hostname k8snode2    # run on 192.167.100.13

Configure the hosts file on all hosts

# cat >> /etc/hosts << EOF
192.167.100.11 k8smaster
192.167.100.12 k8snode1
192.167.100.13 k8snode2
EOF
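Because `cat >>` appends unconditionally, running the step twice leaves duplicate entries in /etc/hosts. The following is a minimal sketch of an idempotent alternative; the helper name ensure_host_entry is hypothetical and not part of any standard tool.

```shell
#!/bin/sh
# Append "IP NAME" to a hosts file only if NAME is not already mapped there.
# ensure_host_entry is a hypothetical helper introduced for illustration.
ensure_host_entry() (
    file=$1
    ip=$2
    name=$3
    # Look for NAME as a whole field on any non-comment line.
    if ! awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
)
# On each host (as root), for example:
#   ensure_host_entry /etc/hosts 192.167.100.11 k8smaster
#   ensure_host_entry /etc/hosts 192.167.100.12 k8snode1
#   ensure_host_entry /etc/hosts 192.167.100.13 k8snode2
```

Running the helper again with the same arguments leaves the file unchanged.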

Synchronize the system clock with a time server

# yum install -y ntpdate

# ntpdate time.windows.com

Enable IPVS support in the kernel

# yum install ipset    # install ipset; it is usually present by default

Create the file /etc/sysconfig/modules/ipvs.modules with the following content:

#!/bin/bash
# Load every IPVS-related kernel module that is available for this kernel.
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"
for kernel_module in ${ipvs_modules}; do
    # Only try to load modules that modinfo can find.
    if /sbin/modinfo -F filename "${kernel_module}" > /dev/null 2>&1; then
        /sbin/modprobe "${kernel_module}"
    fi
done

Run the following commands to apply the configuration:

# chmod 755 /etc/sysconfig/modules/ipvs.modules

# sh /etc/sysconfig/modules/ipvs.modules

# yum install ipvsadm -y    # optional: tool for inspecting IPVS rules

# lsmod | grep ip_vs    # verify that the IPVS modules are loaded

ip_vs_sh 16384 0
ip_vs_wrr 16384 0
ip_vs_rr 16384 15
ip_vs 155648 21 ip_vs_rr,ip_vs_sh,ip_vs_wrr
nf_conntrack 147456 5 xt_conntrack,nf_nat,nf_conntrack_netlink,xt_MASQUERADE,ip_vs
nf_defrag_ipv6 24576 2 nf_conntrack,ip_vs
libcrc32c 16384 4 nf_conntrack,nf_nat,xfs,ip_vs
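The lsmod check can also be scripted. The sketch below reads lsmod-style text on standard input and prints every required module that is absent; the helper name missing_modules and the choice of required modules are assumptions for illustration.

```shell
#!/bin/sh
# Print each required module that does not appear in lsmod-style text on stdin.
# missing_modules is a hypothetical helper; pass the required names as arguments.
missing_modules() {
    awk -v want="$*" '
        { loaded[$1] = 1 }    # the first column of lsmod is the module name
        END {
            n = split(want, w, " ")
            for (i = 1; i <= n; i++) if (!(w[i] in loaded)) print w[i]
        }'
}
# Typical use on a node:
#   lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
```

Empty output means that all listed modules are loaded.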

Configure kernel parameters so that bridged IPv4 traffic is passed to the iptables chains

Add the following to /etc/sysctl.conf:

vm.swappiness = 0

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

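Run the following command to apply the settings. The net.bridge.* keys exist only while the br_netfilter module is loaded, so run modprobe br_netfilter first if sysctl reports them as missing: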

# sysctl -p
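As an alternative to editing /etc/sysctl.conf directly, the same four settings can live in a drop-in file. This is a minimal sketch; the path /etc/sysctl.d/k8s.conf and the helper name write_k8s_sysctl are illustrative assumptions.

```shell
#!/bin/sh
# Write the four kernel settings from this guide to the file given as $1.
# write_k8s_sysctl is a hypothetical helper introduced for illustration.
write_k8s_sysctl() {
    cat > "$1" <<'EOF'
vm.swappiness = 0
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF
}
# On a node (as root):
#   modprobe br_netfilter                      # provides the net.bridge.* keys
#   write_k8s_sysctl /etc/sysctl.d/k8s.conf
#   sysctl --system                            # reload all sysctl configuration files
```

Keeping the settings in their own file makes them easy to find and to remove later.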

Install Docker and enable it at boot

# yum install -y yum-utils device-mapper-persistent-data lvm2

# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# yum makecache fast

# yum install docker-ce -y

# systemctl restart docker

# systemctl enable docker

# docker version
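The kubelet configuration later in this guide sets cgroupDriver to systemd, and the kubelet expects Docker to use the same driver. Assuming Docker on your hosts still uses cgroupfs (check with docker info), a sketch like the following switches it; the helper name write_docker_daemon_json is hypothetical, and an existing daemon.json should be merged by hand rather than overwritten.

```shell
#!/bin/sh
# Write a Docker daemon configuration that selects the systemd cgroup driver.
# write_docker_daemon_json is a hypothetical helper; it overwrites the target file.
write_docker_daemon_json() {
    cat > "$1" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
# On each node (as root):
#   write_docker_daemon_json /etc/docker/daemon.json
#   systemctl restart docker
#   docker info | grep -i 'cgroup driver'
```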

Install kubeadm, kubelet, and kubectl

Configure the Aliyun repository, then install kubeadm, kubelet, and kubectl with yum:

# cat <<EOF > /etc/yum.repos.d/k8s.repo
[k8s]
name=k8s
enabled=1
gpgcheck=0
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
EOF

First list the versions available for installation, then install a specific version.

# yum list kubelet --showduplicates | sort -r

kubelet.x86_64 1.21.1-0 k8s

kubelet.x86_64 1.20.7-0 k8s

kubelet.x86_64 1.20.6-0 k8s

kubelet.x86_64 1.20.5-0 k8s

Install the chosen version:

# yum install -y kubelet-1.20.7-0 kubeadm-1.20.7-0 kubectl-1.20.7-0

Then enable kubelet at boot:

# systemctl daemon-reload && systemctl enable kubelet
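Picking the version by eye works, but the selection can also be scripted. The sketch below reads the yum listing on standard input and prints the newest package version for a given major.minor; the helper name latest_patch is hypothetical, and sort -V assumes GNU coreutils.

```shell
#!/bin/sh
# From "yum list kubelet --showduplicates" text on stdin, print the newest
# version whose major.minor matches $1 (for example 1.20).
# latest_patch is a hypothetical helper introduced for illustration.
latest_patch() {
    awk -v mm="$1" '$1 ~ /^kubelet\./ && index($2, mm ".") == 1 { print $2 }' |
        sort -V | tail -n 1
}
# Typical use:
#   ver=$(yum list kubelet --showduplicates | latest_patch 1.20)
#   yum install -y "kubelet-$ver" "kubeadm-$ver" "kubectl-$ver"
```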

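Initialize the Kubernetes cluster

You can export the default initialization configuration with the following command and then customize it as needed: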

# kubeadm config print init-defaults > kubeadm.yaml

# ll kubeadm.yaml

-rw-r--r-- 1 root root 765 Jun 9 14:53 kubeadm.yaml

The main changes are the values of advertiseAddress, imageRepository, and kubernetesVersion. In addition, the kube-proxy mode is set to ipvs and the kubelet cgroupDriver is set to systemd.

# cat kubeadm.yaml
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.167.100.11
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8smaster
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.7
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
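The kubernetesVersion value has to fit the installed kubeadm: to my understanding, kubeadm refuses to deploy a control plane whose minor version is newer than its own. The following is a simplified sketch of that check, comparing minor versions only; the helper names minor_of and version_ok are hypothetical.

```shell
#!/bin/sh
# Succeed only if the control-plane minor version does not exceed kubeadm's.
# minor_of and version_ok are hypothetical helpers; this simplified check
# ignores the major version and accepts versions such as v1.20.7 or 1.20.7.
minor_of() {
    v=${1#v}                              # drop a leading "v" if present
    printf '%s\n' "$v" | cut -d. -f2
}
version_ok() {
    [ "$(minor_of "$1")" -le "$(minor_of "$2")" ]
}
# Typical use on the master before running kubeadm init:
#   version_ok v1.20.7 "$(kubeadm version -o short)" || echo "kubernetesVersion is too new"
```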

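Note: the default image registry (k8s.gcr.io) is hosted by Google and may be unreachable. As an alternative to setting imageRepository, you can pull the required images from a mirror yourself and retag them under the default names, for example with the following script: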

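# cat images.sh
#!/bin/bash
# Pull the control-plane images from the Aliyun mirror and retag them as k8s.gcr.io.
url=registry.cn-hangzhou.aliyuncs.com/google_containers
version=v1.20.7
# List the required images and strip the registry prefix (k8s.gcr.io/).
images=($(kubeadm config images list --kubernetes-version="$version" | awk -F '/' '{print $2}'))
for imagename in "${images[@]}"; do
    docker pull "$url/$imagename"
    docker tag "$url/$imagename" "k8s.gcr.io/$imagename"
    docker rmi -f "$url/$imagename"
done

# bash images.sh

Once all images have been downloaded, you can list them with docker images.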

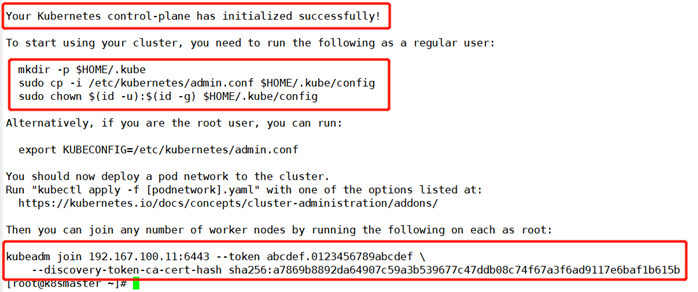

Initialize the master node from the configuration file

# kubeadm init --config=kubeadm.yaml

After the cluster has been installed, set up the kubeconfig file as instructed in the output:

# mkdir -p $HOME/.kube

# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# chown $(id -u):$(id -g) $HOME/.kube/config

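Join the worker nodes to the cluster

Every worker node joins the cluster with the kubeadm join command. The exact command is printed at the end of the initialization output on the master node. If you did not record it, you can generate a new one with kubeadm token create --ttl 0 --print-join-command. The token created during initialization is valid for 24 hours, whereas --ttl 0 creates a token that never expires.

Generate the join command on the master node: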

# kubeadm token create --ttl 0 --print-join-command

kubeadm join 192.167.100.11:6443 --token 67liat.xdtttfwb0p61sqeu --discovery-token-ca-cert-hash sha256:a7869b8892da64907c59a3b539677c47ddb08c74f67a3f6ad9117e6baf1b615b

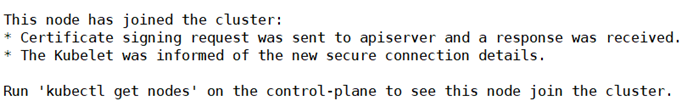
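When the join command is copied by hand between machines, a truncated token or hash is an easy mistake. The sketch below checks only the shape of the two values (a bootstrap token of the form 6 characters, a dot, 16 characters, and a sha256 hash of 64 hex digits); it does not check that they are valid for your cluster. The helper name valid_join_args is hypothetical.

```shell
#!/bin/sh
# Check that a bootstrap token and a CA certificate hash have the expected shape.
# valid_join_args is a hypothetical helper; it validates the format only.
valid_join_args() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' &&
        printf '%s\n' "$2" | grep -Eq '^sha256:[0-9a-f]{64}$'
}
# Typical use on a worker node before joining:
#   valid_join_args "$token" "$hash" &&
#       kubeadm join 192.167.100.11:6443 --token "$token" --discovery-token-ca-cert-hash "$hash"
```

On the master node, kubeadm token list shows the existing tokens and when they expire.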

Run the kubeadm join command on each worker node to join the cluster:

# kubeadm join 192.167.100.11:6443 --token 67liat.xdtttfwb0p61sqeu --discovery-token-ca-cert-hash sha256:a7869b8892da64907c59a3b539677c47ddb08c74f67a3f6ad9117e6baf1b615b

The output indicates that the node has joined the cluster.

After all nodes have joined, check the cluster status on the master node:

# kubectl get node

NAME STATUS ROLES AGE VERSION

k8smaster NotReady control-plane,master 6m3s v1.20.7

k8snode1 NotReady <none> 4m51s v1.20.7

k8snode2 NotReady <none> 4m46s v1.20.7

All nodes are in the NotReady state at this point because no network plugin has been installed yet.

Install the Calico network plugin

Download the manifest:

# wget https://docs.projectcalico.org/manifests/calico.yaml

On the master node, run kubectl apply to install the Calico network plugin:

# kubectl apply -f calico.yaml
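The nodes take a short while to become Ready once the plugin is applied. A minimal sketch for spotting the ones that are still not ready, reading kubectl get node text on standard input; the helper name not_ready_nodes is hypothetical, and any status other than exactly Ready (including Ready,SchedulingDisabled) is reported.

```shell
#!/bin/sh
# Print the names of nodes whose STATUS column is not exactly "Ready",
# reading "kubectl get node" text from stdin.
# not_ready_nodes is a hypothetical helper introduced for illustration.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
# Typical use on the master node:
#   kubectl get node | not_ready_nodes
# kubectl can also block until every node is Ready:
#   kubectl wait --for=condition=Ready node --all --timeout=300s
```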

docker images now lists several additional Calico images:

# docker images | grep calico

calico/node v3.19.1 c4d75af7e098 3 weeks ago 168MB

calico/pod2daemon-flexvol v3.19.1 5660150975fb 3 weeks ago 21.7MB

calico/cni v3.19.1 5749e8b276f9 3 weeks ago 146MB

calico/kube-controllers v3.19.1 5d3d5ddc8605 3 weeks ago 60.6MB

The cluster now reports as healthy:

# kubectl get node

NAME STATUS ROLES AGE VERSION

k8smaster Ready control-plane,master 4m37s v1.20.7

k8snode1 Ready <none> 2m52s v1.20.7

k8snode2 Ready <none> 2m47s v1.20.7

# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-7f4f5bf95d-g2clg 1/1 Running 0 2m51s

calico-node-6hp8w 1/1 Running 0 2m51s

calico-node-cx5xm 1/1 Running 0 2m51s

calico-node-vcmzn 1/1 Running 0 2m51s

coredns-74ff55c5b-tnzgk 1/1 Running 0 6m6s

coredns-74ff55c5b-wd29t 1/1 Running 0 6m6s

etcd-k8smaster 1/1 Running 0 6m9s

kube-apiserver-k8smaster 1/1 Running 0 6m9s

kube-controller-manager-k8smaster 1/1 Running 0 6m9s

kube-proxy-bvh5n 1/1 Running 0 4m24s

kube-proxy-hhqfl 1/1 Running 0 4m29s

kube-proxy-pfmbx 1/1 Running 0 6m6s

kube-scheduler-k8smaster 1/1 Running 0 6m9s

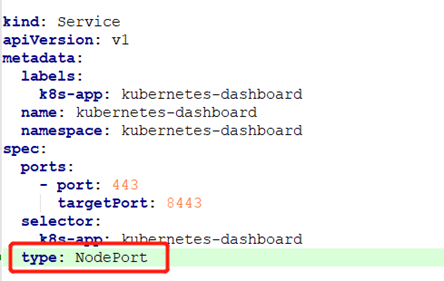

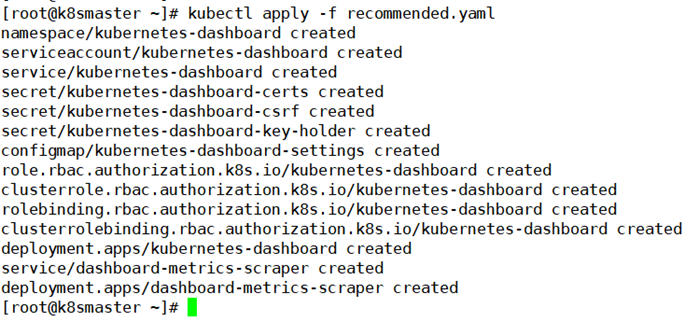

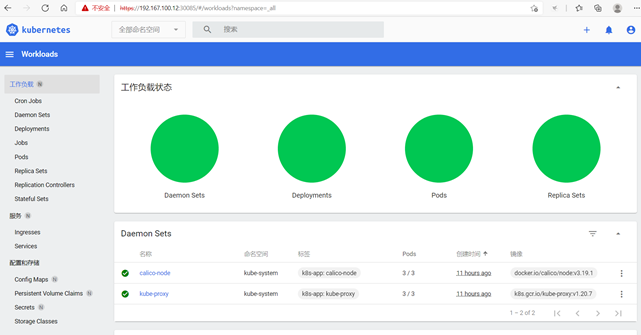

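Install kubernetes-dashboard

Dashboard is the official web UI for Kubernetes. Download the YAML file (recommended.yaml) of the v2.3.0 release, the latest at the time of writing, from https://github.com/kubernetes/dashboard/releases/tag/v2.3.0. You may change the image addresses in the file (not required). Then add "type: NodePort" to the spec of the kubernetes-dashboard Service so that a random node port is allocated. To pin the port instead, also add "nodePort: 30001" below "targetPort".

Then install it with the following command:

# kubectl apply -f recommended.yaml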
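If you prefer not to edit recommended.yaml, the Service can also be switched to NodePort after it has been applied. The sketch below only builds the patch document and checks the port against the default NodePort range (30000-32767); the helper name dashboard_nodeport_patch is hypothetical, and the patch assumes that the dashboard Service exposes port 443, as it does in this guide.

```shell
#!/bin/sh
# Print a patch that turns the dashboard Service into a NodePort Service on a fixed port.
# dashboard_nodeport_patch is a hypothetical helper; 30000-32767 is the default range.
dashboard_nodeport_patch() {
    if [ "$1" -lt 30000 ] || [ "$1" -gt 32767 ]; then
        echo "nodePort must be between 30000 and 32767" >&2
        return 1
    fi
    printf '{"spec":{"type":"NodePort","ports":[{"port":443,"nodePort":%d}]}}\n' "$1"
}
# Typical use against a running cluster:
#   kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard \
#       -p "$(dashboard_nodeport_patch 30001)"
```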

After installation, check which port was assigned to kubernetes-dashboard:

# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.103.231.96 <none> 8000/TCP 16s

kubernetes-dashboard NodePort 10.102.32.163 <none> 443:30085/TCP 16s

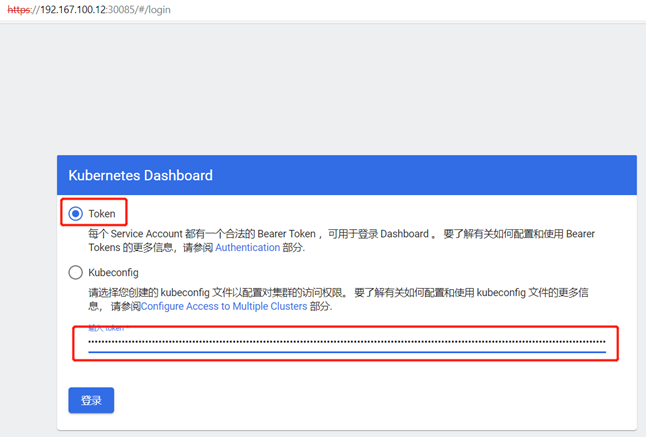

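You can reach kubernetes-dashboard over HTTPS at the IP of any cluster node on port 30085, for example https://192.167.100.11:30085.

Create dashboard login credentials

Accessing the dashboard also requires a ServiceAccount. Create one named dashboard-admin: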

# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

Bind dashboard-admin to the cluster-admin role:

# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

Use the following command to obtain the token, then log in with it:

# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Name: dashboard-admin-token-8856f

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 4ae39dd0-8bf6-4ada-b8d6-2922a427a1f7

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IjdfYWlKOE1GVWNEaGV5RHR5eXJHcDdzaktUcHpZUjU3ZDNoeXdiNVZ4ckEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tODg1NmYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNGFlMzlkZDAtOGJmNi00YWRhLWI4ZDYtMjkyMmE0MjdhMWY3Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.GIsk6yk7z7tsNL6O-KPw1PFKyWZHGAXfIIw2IctzptvTbHGkDc7aXvQ41R_iRQoSCbO8qdUImVaAEROSQVnRnNQZfmyi-z_oO3T4v1Ad019Z1J7P8bGiSyA72xjwuKLLxgqrrb0zVS3zP7GsO2DHG6RHycRrKSG_kpyDLk4KhL52ja71wQWD42X9LbIgPl-GuNLYKCZ92MtILgEELtIBKvi551HnDnTQtOkA2ETjzqKtgj4TPkpf5kzjxaN1iW4Hsg_76eWXE3lotPweBD6jz5swEVw1O1cY_ZiKLwxGeHD7cFT-JUleSBRZJq5i0q6XydkT6UzPFNnznosk3v8jIg

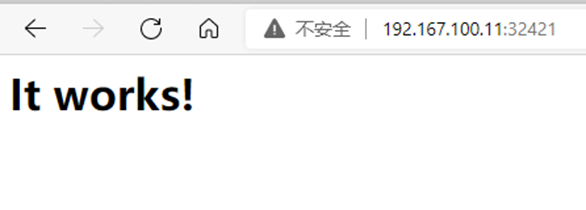
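The token is a JSON Web Token, so its middle segment can be decoded to confirm which ServiceAccount it belongs to before pasting it into the login form. This is a minimal sketch that assumes GNU base64; the helper name jwt_payload is hypothetical, and the signature is not verified.

```shell
#!/bin/sh
# Decode the payload (second dot-separated segment) of a JWT such as a ServiceAccount token.
# jwt_payload is a hypothetical helper; it does not verify the signature.
jwt_payload() (
    # Convert the base64url alphabet back to the standard base64 alphabet.
    seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
    # base64url omits padding; restore it so that base64 -d accepts the input.
    while [ $(( ${#seg} % 4 )) -ne 0 ]; do
        seg="${seg}="
    done
    printf '%s' "$seg" | base64 -d
)
# Typical use on the master node, with the token stored in a shell variable:
#   jwt_payload "$token"
```

For the token shown above, the decoded payload should name the kube-system namespace and the dashboard-admin ServiceAccount.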

Deploy an httpd container on the Kubernetes cluster and expose it externally

Create a YAML file for the HTTP deployment

# cat http.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: http-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: http_server
  template:
    metadata:
      labels:
        app: http_server
    spec:
      containers:
      - name: http-web
        image: httpd

Resource kind: Deployment

Replicas: 3

Image: httpd

Create it with the following command:

# kubectl apply -f http.yaml

deployment.apps/http-deployment created

kubectl get pod shows that the Pods have been created and that the three replicas are running across the two worker nodes:

# kubectl get pod

NAME READY STATUS RESTARTS AGE

http-deployment-6784589985-2ckzt 1/1 Running 0 70s

http-deployment-6784589985-8frwl 1/1 Running 0 70s

http-deployment-6784589985-tq2jm 1/1 Running 0 70s

# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

http-deployment-6784589985-2ckzt 1/1 Running 0 3m 192.168.249.15 k8snode1 <none> <none>

http-deployment-6784589985-8frwl 1/1 Running 0 3m 192.168.249.14 k8snode1 <none> <none>

http-deployment-6784589985-tq2jm 1/1 Running 0 3m 192.168.185.194 k8snode2 <none> <none>
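To see the distribution at a glance, the wide output can be summarized per node. A minimal sketch, reading kubectl get pod -o wide text on standard input; the helper name pods_per_node is hypothetical, and it relies on NODE being the seventh column, as in the output above.

```shell
#!/bin/sh
# Count Pods per node from "kubectl get pod -o wide" text on stdin.
# pods_per_node is a hypothetical helper; NODE is the seventh column of that output.
pods_per_node() {
    awk 'NR > 1 { count[$7]++ } END { for (n in count) print n, count[n] }' | sort
}
# Typical use on the master node:
#   kubectl get pod -o wide | pods_per_node
```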

Create a Service YAML to expose the deployment so that it can be reached from outside the cluster:

# cat service.yaml

apiVersion: v1
kind: Service
metadata:
  name: service-httpd
spec:
  type: NodePort
  selector:
    app: http_server
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 80

Resource kind: Service

Exposure type: NodePort

Selector: app: http_server (matches the label on the Pods of the Deployment)

Create the Service with the following command:

# kubectl apply -f service.yaml

service/service-httpd created

Use the following command to check whether the Service has been created and which port it is exposed on:

# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14h

service-httpd NodePort 10.109.238.197 <none> 8080:32421/TCP 84s

#
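In the PORT(S) column, the number after the colon is the node port that is opened on every node. A minimal sketch that extracts it from kubectl get svc text on standard input; the helper name node_port_of is hypothetical.

```shell
#!/bin/sh
# Extract the node port of a Service from "kubectl get svc" text on stdin.
# node_port_of is a hypothetical helper; PORT(S) looks like 8080:32421/TCP.
node_port_of() {
    awk -v svc="$1" '$1 == svc && $5 ~ /:/ { split($5, a, "[:/]"); print a[2] }'
}
# Typical use from a machine that can reach the nodes:
#   port=$(kubectl get svc | node_port_of service-httpd)
#   curl -I "http://192.167.100.12:$port/"
# kubectl can also print the value directly:
#   kubectl get svc service-httpd -o jsonpath='{.spec.ports[0].nodePort}'
```

With the output above, the httpd welcome page would be served on port 32421 of any node.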