Proj CDeepFuzz Paper Reading: Muffin: Testing Deep Learning Libraries via Neural Architecture Fuzzing

Abstract

Background: existing approaches only reuse already-existing models and detect bugs only in the model inference phase

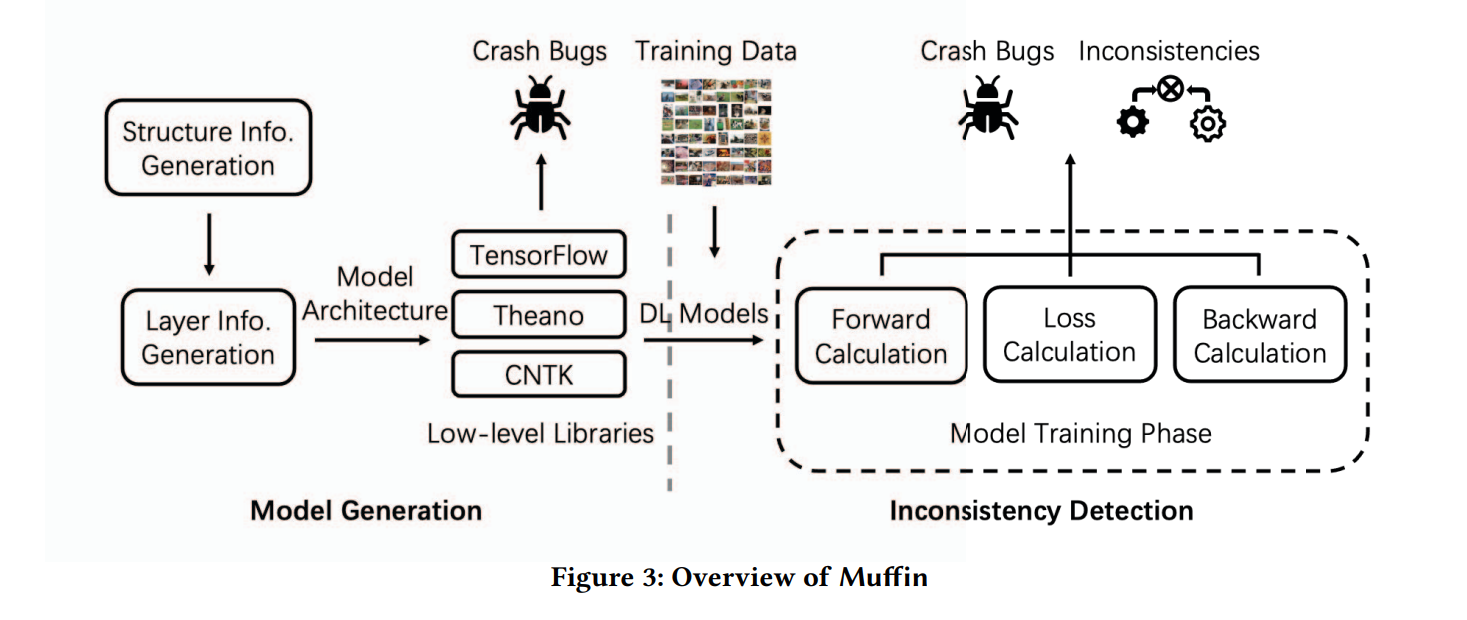

本文: Muffin

方法:specifically-designed model fuzzing approach + 定制一组指标来衡量不同DL库之间的不一致differential testing

实验:

对象:Tensorflow, CNTK, Theano

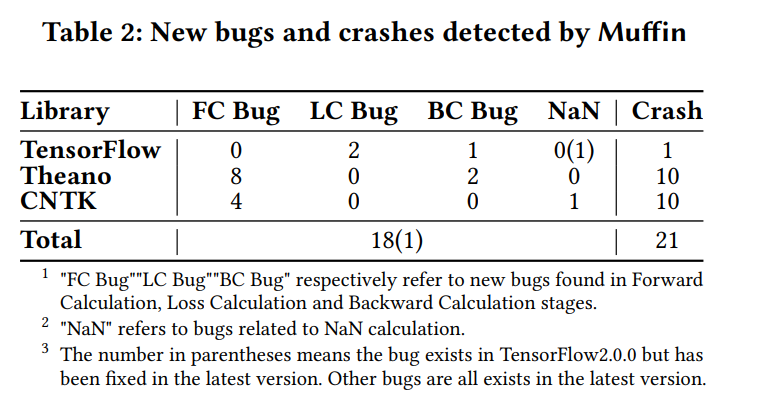

效果:+39 new bugs

1. Intro

P1-P3: the importance of robustness in deep learning systems

P4:

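CRADLE, LEMON: differential testing across different DL libraries

Drawbacks:

- They focus only on the inference phase, so they can test only a small fraction of library functions

- The training phase has no output that can be used for differential comparison across DL libraries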

P5: Muffin,

model generation algo: DAG,

differential testing: data trace analysis. The model is divided into forward calculation, loss calculation, and gradient calculation, and different metrics are designed for each stage to measure inconsistencies.

实验1:

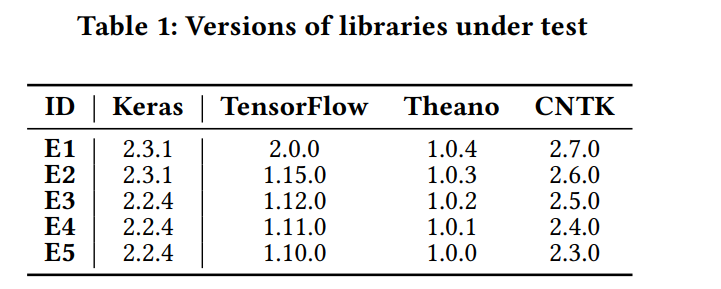

对象:15 release versions of 3 DL libraries, TensorFlow, CNTK, Theano

效果:+39 new bugs(21 crash)

实验2:

数据集:6 datasets

目的:比较

效果:在同等时间内能够发现的inconsistencies更高

2. Background

DL model, Forward Calculation, Loss Calculation, Backward Calculation, CPU, GPU, TPU, the different implementations of DL libraries, loading the data, defining the model architecture, training the model with the data, high-level library function, low-level library function, auxiliary codes,

Challenges: 1. It is hard to obtain DL models that cover most library APIs 2. test oracles: the weight values during training

3. Approach

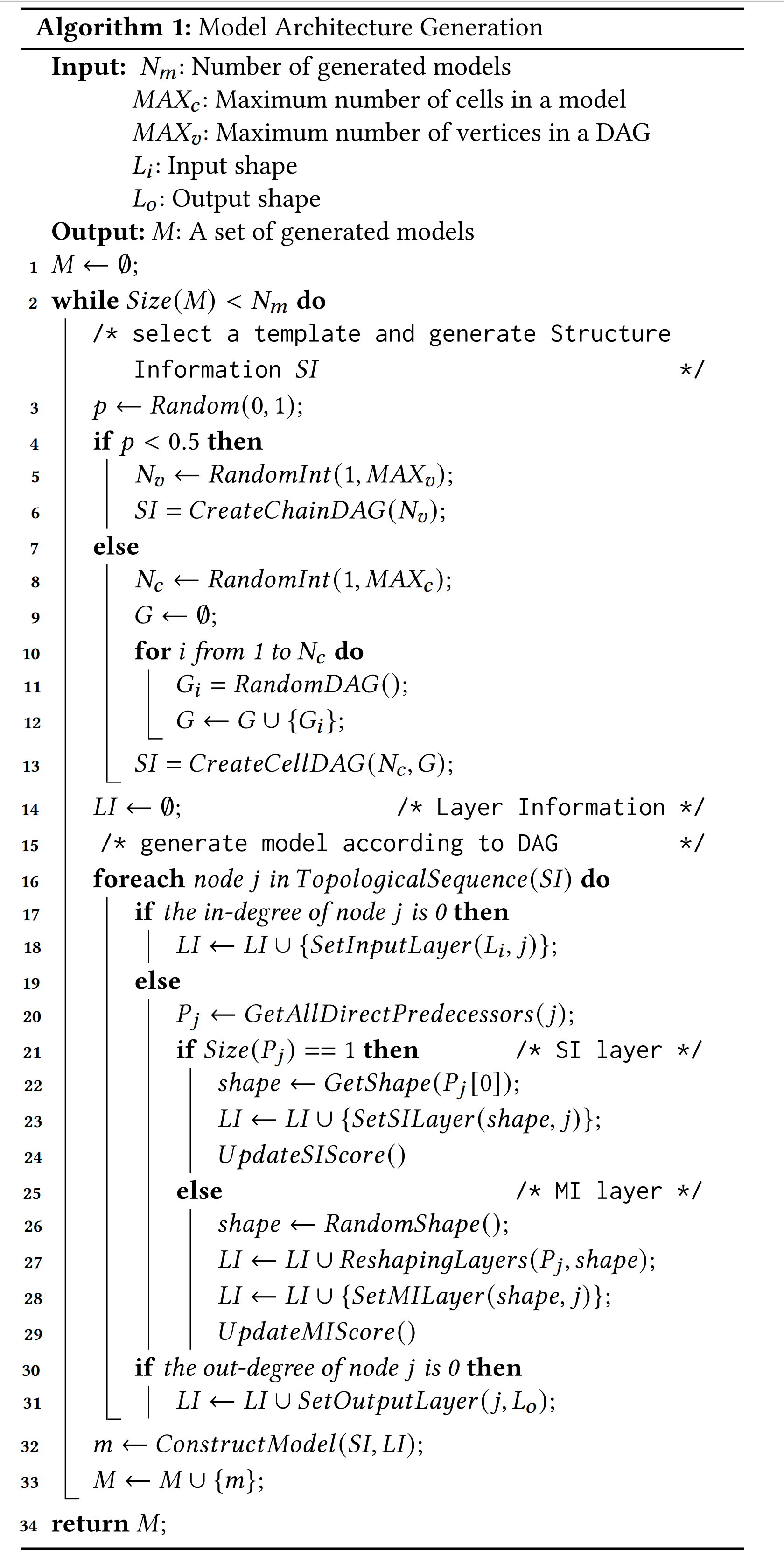

3.2 Model Generation

Top-down: first generate the structure information, then the concrete layer types.

- Structure Information Generation

- DAG抽象:顶点代表Layer, 边代表层之间的链接

- 生成DAG:

- 要点:避免模型结构过于简单或复杂

- 启发:Neural Architecture Search

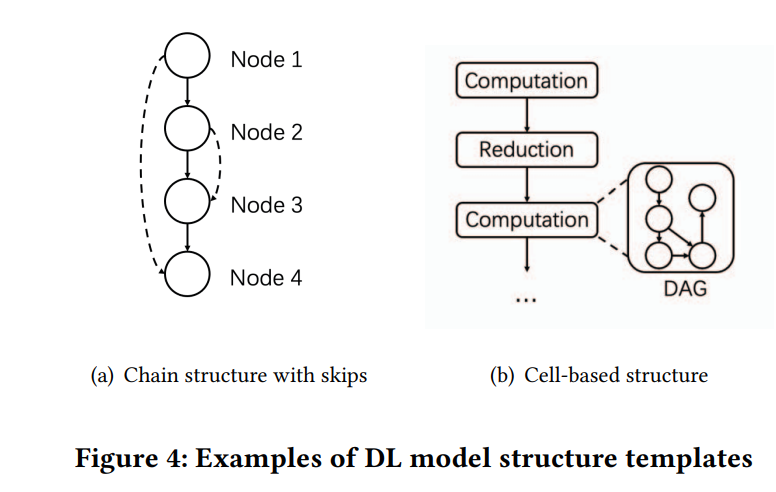

- 方法:两种模板:1. Chain-based Structure 2. Cell-based Structure

- Chain-based Structure: 用于模仿全局结构,允许arbitrary skip connections between nodes

- Cell-based Structure: 用于捕捉类似ResNet这种带有重复性结构的网络

- 其他约束:只有1个入度为0,出度为0的

- Layer Information Generation

- input number restriction:the in-degree of the vertex

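- Single Input: e.g., Convolution

- Multiple Input: e.g., Concatenation

- input/output shape restriction: use reshape to adjust the output shape

- layer selection procedure: Fitness Proportionate Selection, giving more weight to layers that have been used less often

- Goal: increase the diversity of the generated models

- Score definition: for a given layer l, c is the number of times it has been selected, s is its score, p is its selection probability, and r is the total number of layers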

$s = \frac{1}{c+1}, \quad p = \frac{s}{\sum_{k=1}^{r} s_k}$

- Entire Algo: This algorithm takes 5 parameters, where 𝑁𝑚 is the total number of models to generate, serving as the terminating condition; 𝑀𝐴𝑋𝑐 and 𝑀𝐴𝑋𝑣 are parameters to control the size of DAG; 𝐿𝑖 and 𝐿𝑜 should be manually set according to the input data and target task.
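The generation steps in this section can be sketched roughly as follows. This is only an illustrative sketch, not the authors' implementation: the function names, the `skip_prob` parameter, and the layer pools (`Conv2D`, `Dense`, `ReLU`, `Concatenate`, `Add`) are assumptions made for the example, only the chain-based template is shown, and the 𝑀𝐴𝑋𝑐 / 𝑀𝐴𝑋𝑣 / 𝐿𝑖 / 𝐿𝑜 parameters are not modeled.

```python
import random


def layer_probabilities(counts):
    """Fitness proportionate selection: s = 1 / (c + 1), p = s / sum of all s_k."""
    scores = {layer: 1.0 / (c + 1) for layer, c in counts.items()}
    total = sum(scores.values())
    return {layer: s / total for layer, s in scores.items()}


def select_layer(counts, rng):
    """Pick one layer type, favoring rarely used ones, and update its count."""
    probs = layer_probabilities(counts)
    layers = list(probs)
    chosen = rng.choices(layers, weights=[probs[l] for l in layers], k=1)[0]
    counts[chosen] += 1
    return chosen


def chain_dag(num_vertices, skip_prob, rng):
    """Chain 0 -> 1 -> ... -> n-1 plus random forward skip connections.

    Every edge goes from a lower to a higher index, so the graph is acyclic,
    vertex 0 is the only source and vertex n-1 is the only sink.
    """
    edges = {(i, i + 1) for i in range(num_vertices - 1)}
    for src in range(num_vertices):
        for dst in range(src + 2, num_vertices):
            if rng.random() < skip_prob:  # skip_prob is a hypothetical knob
                edges.add((src, dst))
    return sorted(edges)


def assign_layers(num_vertices, edges, single_counts, multi_counts, rng):
    """Choose a layer type per vertex according to its in-degree."""
    in_degree = [0] * num_vertices
    for _, dst in edges:
        in_degree[dst] += 1
    layers = {}
    for v in range(num_vertices):
        if in_degree[v] == 0:
            layers[v] = "Input"
        elif in_degree[v] == 1:
            layers[v] = select_layer(single_counts, rng)  # single-input pool
        else:
            layers[v] = select_layer(multi_counts, rng)   # multiple-input pool
    return layers


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical layer pools; the counts persist across generated models.
    single_counts = {"Conv2D": 0, "Dense": 0, "ReLU": 0}
    multi_counts = {"Concatenate": 0, "Add": 0}
    edges = chain_dag(6, 0.3, rng)
    print(edges)
    print(assign_layers(6, edges, single_counts, multi_counts, rng))
```

Keeping the selection counts across models is what pushes later models toward layer types that earlier models rarely used.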

3.3 Inconsistency Detection

Existing metrics: for already-trained models: model outputs, ground-truth labels

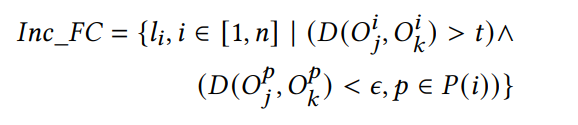

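This paper: a metric based on the variance of outputs in consecutive layers

Challenge: because of floating-point error and similar effects, it is impossible to tell whether a value difference comes from numerical error or from a potential bug, and the error keeps being amplified in subsequent computations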

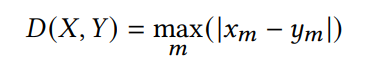

Solution: 1. Only consider difference-changes between two consecutive layers 2. Chebyshev Distance

Steps:

- Use Keras to trace the output of every layer in FC, LC, and BC

- Define the inconsistency of each layer:

- FC:

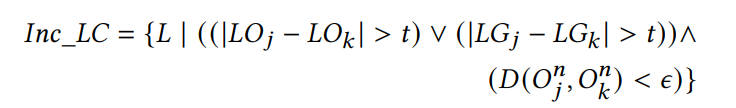

- LC:

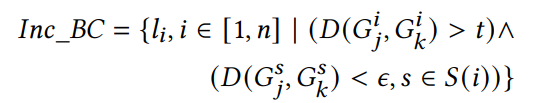

- BC:

where:

𝑋 and 𝑌 are two tensors (i.e., output of a layer is typically a high-dimensional tensor), while 𝑥𝑝 and 𝑦𝑝 are elements in 𝑋 and 𝑌, respectively

In FC stage, Muffin compares the differences of the output tensors from 𝑙𝑖 and its predecessors 𝑙𝑝. If the difference of 𝑙𝑝 is smaller than 𝜖, while the difference of 𝑙𝑖 is larger than a user-defined threshold 𝑡, then Muffin determines that an inconsistency is detected in 𝑙𝑖.

LC: 𝐿 denotes the loss function, 𝐿𝑂𝑗 and 𝐿𝑂𝑘 are the output results of 𝐿, 𝐿𝐺𝑗 and 𝐿𝐺𝑘 are the gradient results of 𝐿, 𝑂𝑛𝑗 and 𝑂𝑛𝑘 are the model outputs, using library 𝑗 and k

BC: 𝑆(𝑖) denotes the set of layers that are direct successors of 𝑙𝑖; 𝐺𝑖𝑗 and 𝐺𝑖𝑘 denote the gradient results of 𝑙𝑖 using different libraries.
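The FC rule described above can be sketched as follows. This is a rough sketch under stated assumptions, not the paper's exact formula: tensors are represented as flat lists of floats, the function names are made up for illustration, a layer with several predecessors is flagged only if all of them are below 𝜖, and the values chosen for `eps` and `t` are hypothetical.

```python
def chebyshev(x, y):
    """Chebyshev distance: the largest element-wise absolute difference."""
    if len(x) != len(y):
        raise ValueError("tensors must have the same number of elements")
    return max((abs(a - b) for a, b in zip(x, y)), default=0.0)


def fc_inconsistent_layers(outputs_j, outputs_k, predecessors, eps, t):
    """Flag layers whose own difference exceeds t while every predecessor's
    difference stays below eps.

    outputs_j / outputs_k map layer name -> flattened output under library j / k.
    predecessors maps layer name -> list of direct predecessor layer names.
    """
    diff = {
        layer: chebyshev(outputs_j[layer], outputs_k[layer])
        for layer in outputs_j
    }
    flagged = []
    for layer, d in diff.items():
        preds = predecessors.get(layer, [])
        if d > t and all(diff[p] < eps for p in preds):
            flagged.append(layer)
    return flagged


if __name__ == "__main__":
    # Toy traces: the two libraries agree on "in" but diverge at "conv".
    outputs_j = {"in": [1.0, 2.0], "conv": [0.5, 0.5], "dense": [3.0]}
    outputs_k = {"in": [1.0, 2.0], "conv": [0.5, 0.9], "dense": [3.5]}
    predecessors = {"conv": ["in"], "dense": ["conv"]}
    print(fc_inconsistent_layers(outputs_j, outputs_k, predecessors,
                                 eps=1e-4, t=0.15))
```

In the toy trace, `dense` also differs by more than `t`, but its predecessor `conv` already differs, so the divergence is attributed to `conv` rather than reported again downstream.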

4. Evaluation Setup

RQ1: How does Muffin perform in detecting bugs in DL libraries?

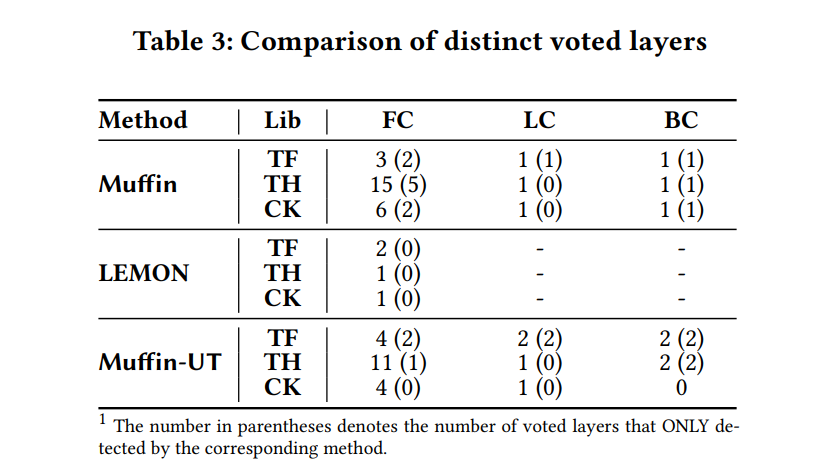

RQ2: Can Muffin achieve better performance compared to other methods?

RQ3: How do the different parameter settings affect the performance of Muffin?

4.1 Libraries and Datasets

Datasets: MNIST, F-MNIST, CIFAR-10, ImageNet, Sine-Wave and Stock-Price.

4.2 Competitors

Competitors: LEMON

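Seed models: AlexNet, LeNet5, ResNet50, MobileNetV1, InceptionV3, DenseNet121, VGG16, VGG19, Xception, LSTM-1, LSTM-2

metrics: 1. number of bugs and inconsistencies 2. functionality coverage

Internal baseline: Muffin-UT, a simplified, unit-test-based version of Muffin. The models it creates contain only one functional layer, plus simple reshape operations that handle the input/output, i.e., dimension transformations that match the input/output requirements of the layer under test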

4.3 Measurements

- Save the outputs of all intermediate layers

- Inspection procedure: When an inconsistency is reported, two authors check the corresponding source codes in different libraries and compare the results. If their identical layer produces different results and their implementation ideas (Q?) are different, the third author will join manual inspection so as to conclude whether the report is a true or false positive.

Metrics: 1. Number of inconsistencies 2. Number of detected bugs 3. Number of NaN/Crash bugs, counted only when at least one DL library executes normally

5. Results and Analysis

6. Discussion

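Inconsistencies in many layers can only be triggered by specific inputs, e.g., when multiple elements of the input tensor share the same maximum value; the DL library specifications are unclear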
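A tiny illustration of why tied maxima can trigger such inconsistencies: both functions below are defensible "argmax" implementations, yet they disagree as soon as the maximum appears more than once. The function names are hypothetical and the sketch does not claim that any particular DL library uses either rule.

```python
def argmax_first(values):
    """Index of the first occurrence of the maximum."""
    best = 0
    for i, v in enumerate(values):
        if v > values[best]:
            best = i
    return best


def argmax_last(values):
    """Index of the last occurrence of the maximum."""
    best = 0
    for i, v in enumerate(values):
        if v >= values[best]:
            best = i
    return best


if __name__ == "__main__":
    tied = [1.0, 3.0, 3.0, 2.0]
    unique = [1.0, 2.0, 3.0]
    print(argmax_first(tied), argmax_last(tied))      # differ on ties
    print(argmax_first(unique), argmax_last(unique))  # agree otherwise
```

A randomly generated input rarely contains exact ties, which is why this kind of inconsistency only shows up for specific inputs.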

TensorFlow, Theano, and CNTK can all be invoked through the same front-end library (i.e., Keras). Muffin currently does not support libraries that do not support Keras