Proj CDeepFuzz Paper Reading: SkipFuzz: Active Learning-based Input Selection for Fuzzing Deep Learning Libraries

Abstract

Background:

Challenges:

- Requires knowing the valid input domain of each API function

- Hard to trigger new behaviors

This paper: SkipFuzz

Task: fuzz machine learning libraries, using active learning to infer the input constraints of API functions.

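Method:

- Use active learning to infer the input constraints of API functions, in order to generate semantically valid inputs

- An active learner queries the test executor, which acts as the oracle

- Construct hypotheses of the input constraints, then refine those hypotheses using feedback

- Feedback: whether the library accepts or rejects an input, i.e., whether the input satisfies the input constraints

- Inputs from different categories are used to check whether a set of inputs satisfies the function's input constraints

- Category: inputs in one category differ from those in other categories in the input constraints they may satisfy, e.g., being a tensor of a certain shape

- Eliminate some candidate constraints

- Only issue queries that can provide new information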

Results:

- Produces more crashing inputs: 43 crashes, 28 confirmed bugs, 13 CVEs

1. Intro

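P1: Deep learning is popular

P2: Why testing ML libraries matters

P3: Challenges:

- The input space is large

- Inputs are redundant

The input constraints are unknown, which makes it hard to generate structured inputs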

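Existing approaches:

- DocTer: infers constraints from API documentation

- FreeFuzz: mines inputs from open-source code, documentation, and other resources (models in the wild)

- DeepRel: builds on FreeFuzz and exploits relational APIs (APIs with similar behavior)

SkipFuzz: reduces input redundancy using the input constraints on the structure, shape, and values of inputs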

Introduction to active learning:

The active learner aims to identify a hypothesis of the input constraints that is consistent with the observed outcome.

A consistent hypothesis is one where the behavior of the program under the hypothesis matches that of the actual program.

An ideal hypothesis is a set of categories that contain the valid inputs but exclude the invalid inputs.

The paper uses precision and recall to measure the consistency between a hypothesis and the observed test outcomes.

Experiments:

Targets: TensorFlow 2.7.0, PyTorch 1.10

Results:

- Crashes in 108 functions in TensorFlow and 58 functions in PyTorch

- 23 bugs confirmed or fixed in TensorFlow, 6 in PyTorch

- Can trigger 65% of the crashes found by previous approaches

- Generates valid inputs for 37% of the API functions in TensorFlow and PyTorch

- SKIPFUZZ generates valid inputs over 70% of the time

2. Background

Architecture: kernels in C/C++, input validation in Python

Input domain of deep learning libraries (per DocTer): structure, type, shape, and values (e.g., non-negative integers)

Bugs in deep learning libraries (from an empirical study of bugs inside TensorFlow): type confusion, dimension mismatches, unhandled corner cases

Testing deep learning libraries: FreeFuzz, DocTer, DeepRel

3. Preliminaries

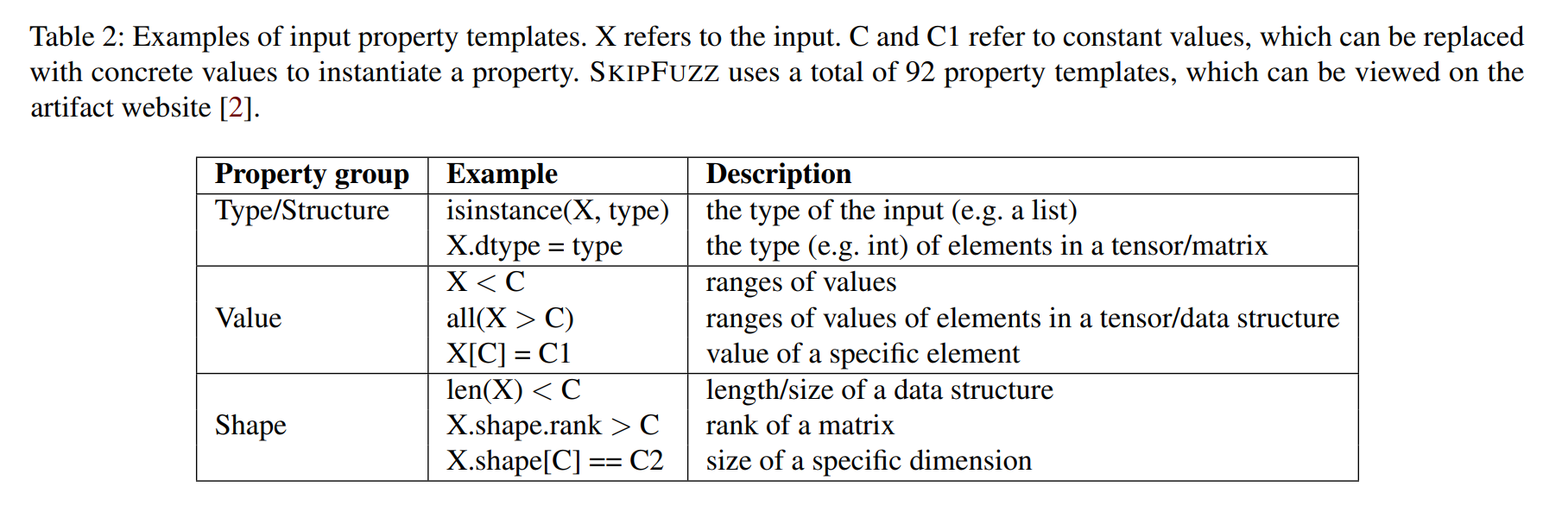

Table 1: A glossary of terms used in the Active Learning literature and this paper.

| Term | Definition |
|---|---|
| Active Learning | An algorithm that learns by interactively querying an oracle. |
| Consistency | The extent to which executions under the inferred hypothesis match the actual program. |
| Input constraints | The validation checks performed by the library on its inputs. |
| Input properties | Predicates which describe inputs. |
| Input categories | Conjunction of input properties. |
| Hypothesis | A model of the input constraints as inferred by SKIPFUZZ: a disjunction of properties associated with a set of input categories. |

Input properties:

* float(X) < !C!,

* float(X) > !C!,

* float(X) == !C!,

* 0 <= float(X) <= 1,

* isinstance(X, bool),

* isinstance(X, int),

* isinstance(X, float),

* isinstance(X, list),

* isinstance(X, dict),

* isinstance(X, str),

* isinstance(X, tuple),

* isinstance(X, numpy.ndarray),

* type(X).__name__ == 'Tensor',

* type(X).__name__ == 'EagerTensor', / isinstance(x, torch.sparse_coo_tensor)

* type(X).__name__ == 'RaggedTensor', / isinstance(x, torch.sparse_csr_tensor)

* type(X).__name__ == 'SparseTensor',

* len(X) == !C!,

* len(X) < !C!,

* len(X) > !C!,

* X.dtype == D, for each of the following dtypes D:

  * TensorFlow: tf.float64, tf.float32, tf.float16, tf.int64, tf.int32, tf.int8, tf.int16, tf.uint16, tf.uint8, tf.string, tf.bool, tf.complex64, tf.complex128, tf.qint8, tf.quint8, tf.qint16, tf.quint16, tf.qint32, tf.bfloat16, tf.resource, tf.half, tf.variant

  * PyTorch: torch.float64, torch.float32, torch.float, torch.double, torch.complex64, torch.cfloat, torch.complex128, torch.cdouble, torch.float16, torch.half, torch.bfloat16, torch.uint8, torch.int8, torch.int16, torch.short, torch.int32, torch.int, torch.int64, torch.long, torch.qint8, torch.quint8, torch.qint32, torch.quint4x2, torch.bool

* X.shape.rank == 0, / torch.linalg.matrix_rank(X) == 0

* X.shape.rank > !C!, / torch.linalg.matrix_rank(X) > !C!

* X.shape.rank < !C!, / torch.linalg.matrix_rank(X) < !C!

* X.shape[0] == int(!C!),

* X.shape[1] == int(!C!),

* X.shape[2] == int(!C!),

* X.shape[0] > int(!C!),

* X.shape[1] > int(!C!),

* X.shape[2] > int(!C!),

* X.shape[0] < int(!C!),

* X.shape[1] < int(!C!),

* X.shape[2] < int(!C!),

* tf.experimental.numpy.all(X > !C!).numpy(),

* tf.experimental.numpy.all(X < !C!).numpy(),

* tf.experimental.numpy.any(X == !C!).numpy(),

* tf.experimental.numpy.all(X == !C!).numpy(),

* isinstance(X, sparse_tensor.SparseTensor),

* isinstance(X, ragged_tensor.RaggedTensor),

* all(i > !C! for i in X),

* all(i == !C! for i in X),

* all(i < !C! for i in X),

* all(i != 0 for i in X),

* all(i is not None for i in X),

* all(i > !C! for i in X.shape),

* all(i == !C! for i in X.shape),

* all(i < !C! for i in X.shape),

* all(type(X) == !T!),

* any(type(X) == !T!),

* all([type(x) == !T! for x in X]),

* all([type(x) == !T! for x in X.values()]),

* any([type(x) == !T! for x in X]),

* any([type(x) == !T! for x in X.values()]),

* all([x.dtype == !T! for x in X]),

* any([x.dtype == !T! for x in X]),

* X.isupper(),

* X[0].isupper(),

* X.islower(),

* X[0].islower(),

* X[0] == !C!,

* X[1] == !C!,

* X[-1] == !C!,

* X[-2] == !C!,

* X is None,

* X is not None,
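The properties above are templates: `!C!` stands for a constant and `!T!` for a type or dtype, and each input is described by the set of instantiated properties it satisfies. A minimal sketch of that check in plain Python follows. It assumes a small hypothetical subset of templates and a fixed list of candidate constants (the constants SkipFuzz actually uses are not given in these notes). It also assumes that a predicate which raises (e.g. `len()` on an `int`) simply counts as "not satisfied".

```python
from typing import Any, Callable, Dict, Set

# Hypothetical subset of the property templates; c is the constant substituted for !C!.
TEMPLATES: Dict[str, Callable[[Any, Any], bool]] = {
    "isinstance(X, int)": lambda x, c: isinstance(x, int),
    "isinstance(X, list)": lambda x, c: isinstance(x, list),
    "len(X) == !C!": lambda x, c: len(x) == c,
    "len(X) > !C!": lambda x, c: len(x) > c,
    "float(X) > !C!": lambda x, c: float(x) > c,
    "all(i > !C! for i in X)": lambda x, c: all(i > c for i in x),
    "X is None": lambda x, c: x is None,
}

# Assumed candidate constants for !C!.
CONSTANTS = [0, 1]


def satisfied_properties(x: Any) -> Set[str]:
    """Return every instantiated property that input x satisfies."""
    result: Set[str] = set()
    for template, pred in TEMPLATES.items():
        # Templates without a !C! placeholder are checked only once.
        constants = CONSTANTS if "!C!" in template else [None]
        for c in constants:
            try:
                ok = bool(pred(x, c))
            except Exception:
                # Assumption: a predicate that raises is treated as unsatisfied.
                ok = False
            if ok:
                result.add(template.replace("!C!", str(c)))
    return result
```

For example, `satisfied_properties([1, 2])` yields `isinstance(X, list)`, `len(X) > 0`, `len(X) > 1`, and `all(i > 0 for i in X)`.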

Input Categories

Definition 1. Two inputs, x and y, belong to the same input category, C, if every property that x satisfies matches a property that y satisfies, and vice versa.

Definition 2. An input category, C1, is weaker than an input category, C2, if the set of inputs associated with C1 is a superset of the set of inputs associated with C2.

Definition 3. A hypothesis is a disjunction of properties associated with a set of input categories.
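Definitions 1-3 can be read set-theoretically. The minimal sketch below assumes each input has already been mapped to the set of properties it satisfies. It models a category as a frozenset of property names, i.e. their conjunction. Under that model:

- Inputs with identical property sets share a category (Definition 1).
- A conjunction of fewer properties admits more inputs, so a category whose properties are a subset of another's is weaker. This is a sufficient condition for Definition 2.
- A hypothesis, being a disjunction over categories, admits an input that satisfies every property of at least one of its categories (Definition 3).

The function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, Iterable, List, Set

Category = FrozenSet[str]  # a conjunction of property names


def group_into_categories(profiles: Dict[str, Set[str]]) -> Dict[Category, List[str]]:
    """Definition 1: inputs satisfying exactly the same properties share a category."""
    groups: Dict[Category, List[str]] = defaultdict(list)
    for input_id, props in profiles.items():
        groups[frozenset(props)].append(input_id)
    return dict(groups)


def is_weaker(c1: Category, c2: Category) -> bool:
    """Sufficient condition for Definition 2: fewer conjuncts admit a superset of inputs."""
    return c1 <= c2


def hypothesis_admits(hypothesis: Iterable[Category], props: Set[str]) -> bool:
    """Definition 3: the hypothesis is a disjunction over its categories."""
    return any(cat <= props for cat in hypothesis)
```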

3.4 Motivating Example

```python
import tensorflow as tf

# generate inputs
input1 = tf.constant([1, 2, 3])
shape = [4]

# invoke the target API function with the generated inputs
# (TF 2.x exposes this function as tf.compat.v1.placeholder_with_default)
tf.compat.v1.placeholder_with_default(input1, shape)
```

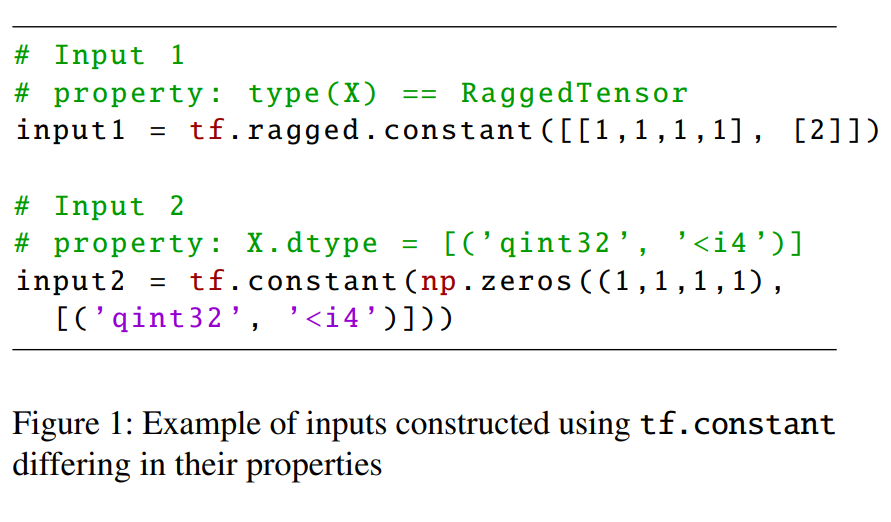

Figure 2: Example of a test input generated for placeholder_with_default. Inputs are generated for each argument (e.g., shape). A valid input for shape can be a tf.TensorShape or a list of ints.
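The caption's statement about `shape` is itself a small hypothesis: a disjunction of two categories. The minimal sketch below writes it with properties from the list above. The `TensorShape` check by type name is an assumed property in the style of `type(X).__name__ == 'Tensor'`, chosen so the sketch runs without TensorFlow.

```python
from typing import Any


def shape_is_valid(x: Any) -> bool:
    """Hypothesis for the `shape` argument: a TensorShape OR a list of ints."""
    # Category 1 (assumed property): type(X).__name__ == 'TensorShape'
    if type(x).__name__ == "TensorShape":
        return True
    # Category 2: isinstance(X, list) and all([type(x) == int for x in X])
    return isinstance(x, list) and all([type(e) == int for e in x])
```

`type(e) == int` rather than `isinstance` keeps `bool` elements out of the second category, matching the exact-type form of the listed properties.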

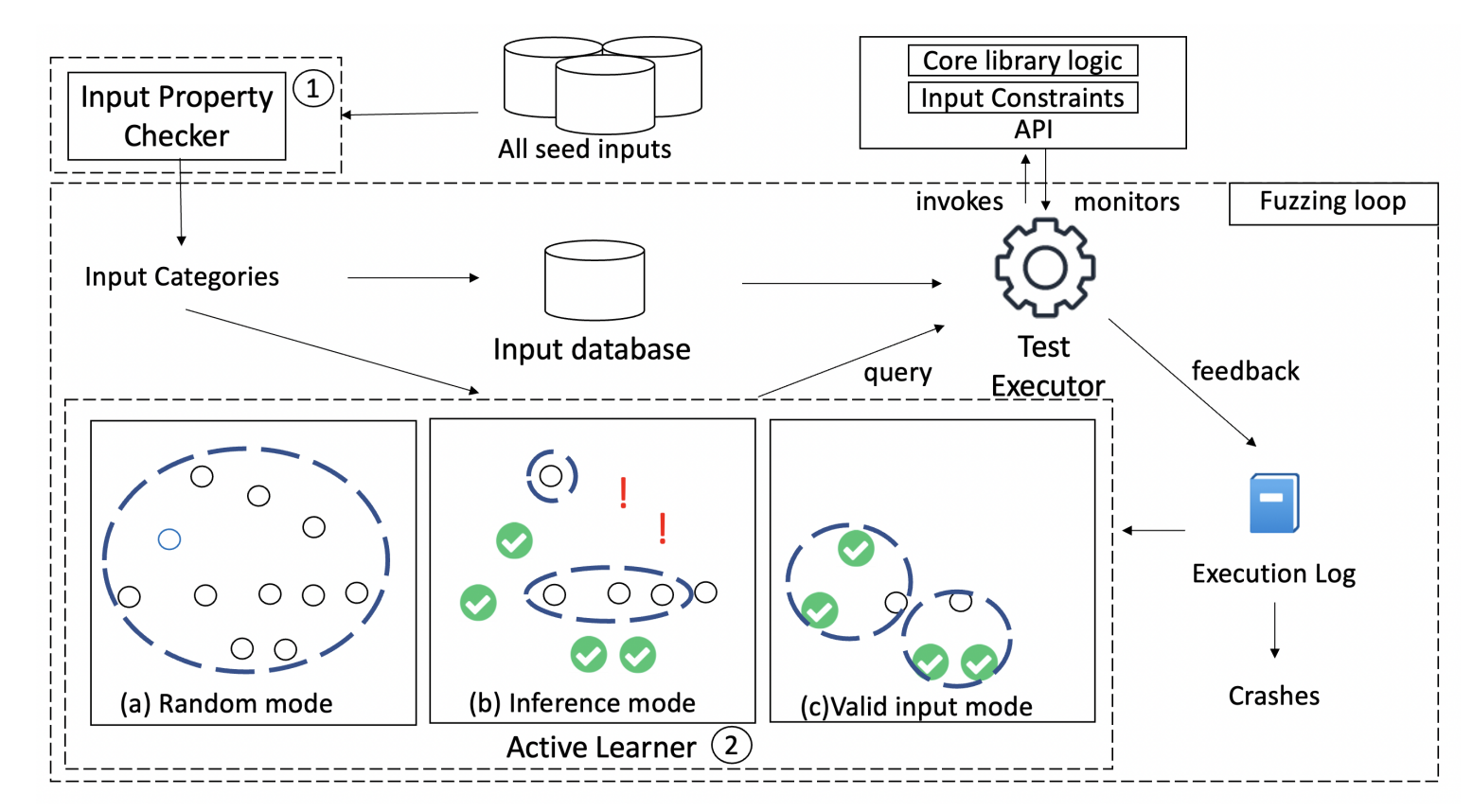

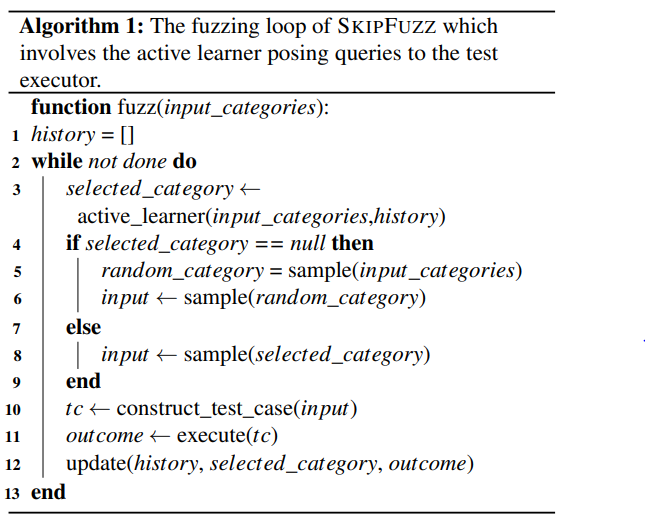

4 SKIPFUZZ

4.1 Overview

The test executor plays the role of the oracle.

Each query is one input category.

On receiving the query, the test executor samples an input that satisfies the input category and constructs a Python program that invokes a function from the library’s API.
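The following minimal sketch shows how the test executor could turn a query into a test program. It makes several assumptions that these notes do not specify:

- The input database maps each category to Python source expressions that construct inputs of that category.
- A query supplies one category per argument of the target function.
- Sampling is uniformly random.

`INPUT_DB` and `build_test_program` are hypothetical names.

```python
import random
from typing import Dict, FrozenSet, List

Category = FrozenSet[str]

# Hypothetical input database: category -> source expressions that build such inputs.
INPUT_DB: Dict[Category, List[str]] = {
    frozenset({"isinstance(X, list)", "all([type(x) == int for x in X])"}): ["[4]", "[2, 3]"],
    frozenset({"type(X).__name__ == 'EagerTensor'"}): ["tf.constant([1, 2, 3])"],
}


def build_test_program(api: str, arg_categories: List[Category], rng: random.Random) -> str:
    """Sample one input per argument from its queried category and emit a Python program."""
    lines = ["import tensorflow as tf"]
    arg_names = []
    for i, cat in enumerate(arg_categories):
        expr = rng.choice(INPUT_DB[cat])  # an input that satisfies the category
        lines.append(f"arg{i} = {expr}")
        arg_names.append(f"arg{i}")
    lines.append(f"{api}({', '.join(arg_names)})")
    return "\n".join(lines)
```

The returned string is only source text; running it (and judging the outcome) is a separate step.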

Fuzzing a function:

- random generation mode

- inference mode

- valid input generation mode

4.2 Step 1: Input property checking and input category construction

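Seeds: the developer test suite

Q: Once the satisfied properties have been associated with each input and the inputs grouped, the input categories are already fixed at this point; that is, the input categories depend only on the developer test suite and are independent of the active learner.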

4.3 Step 2: Active learning-driven fuzzing
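These notes do not detail the learner itself. The sketch below illustrates two ideas from the abstract: "eliminate candidate constraints" and "only issue queries that provide new information". It swaps in a simple, classic scheme, Find-S-style elimination for conjunctive concepts. It assumes the valid domain is a single conjunction of known properties. SkipFuzz's hypotheses are disjunctions over categories, so this is a simplification, not its algorithm. All names are hypothetical.

```python
from typing import Callable, FrozenSet, Iterable, List, Optional, Set

Category = FrozenSet[str]


class ConjunctionLearner:
    """Assumes the input constraints are one conjunction of properties from `universe`."""

    def __init__(self, universe: Set[str]) -> None:
        # Most specific conjunction consistent with all accepted inputs (Find-S).
        self.candidates: Set[str] = set(universe)
        self.rejected: List[Category] = []

    def observe(self, cat: Category, accepted: bool) -> None:
        if accepted:
            # An accepted input eliminates every candidate constraint it does not satisfy.
            self.candidates &= cat
        else:
            self.rejected.append(cat)

    def predict(self, cat: Category) -> Optional[bool]:
        """True/False if the outcome is already implied, None if it is unknown."""
        if self.candidates <= cat:
            return True  # satisfies all remaining candidates, hence the true constraints
        if any(cat <= r for r in self.rejected):
            return False  # has no more properties than an already-rejected category
        return None


def learn(categories: Iterable[Category], oracle: Callable[[Category], bool],
          learner: ConjunctionLearner) -> int:
    """Query only categories whose outcome is still unknown; return the number of queries."""
    queries = 0
    for cat in categories:
        if learner.predict(cat) is None:
            learner.observe(cat, oracle(cat))
            queries += 1
    return queries
```

A category whose outcome is already implied is skipped. This is the sense in which a query "provides new information".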

4.4 Input constraint inference

A perfectly consistent hypothesis cannot be inferred, so consistency is measured with precision and recall.
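A minimal sketch of the two scores follows. It assumes the usual definitions with "valid" as the positive class:

- Precision is the fraction of observed inputs admitted by the hypothesis that the library actually accepted.
- Recall is the fraction of accepted inputs that the hypothesis admits.

The 0.25 threshold is the value given in the Implementation section. How SkipFuzz combines the two scores is not stated in these notes, so `good_enough` requiring both to reach the threshold is an assumption.

```python
from typing import FrozenSet, Iterable, List, Set, Tuple

Category = FrozenSet[str]
Outcome = Tuple[Set[str], bool]  # (properties the input satisfies, accepted by the library?)


def admits(hypothesis: Iterable[Category], props: Set[str]) -> bool:
    # Disjunction over categories; each category is a conjunction of properties.
    return any(cat <= props for cat in hypothesis)


def precision_recall(hypothesis: List[Category], outcomes: List[Outcome]) -> Tuple[float, float]:
    tp = fp = fn = 0
    for props, accepted in outcomes:
        predicted = admits(hypothesis, props)
        if predicted and accepted:
            tp += 1
        elif predicted:
            fp += 1  # admitted by the hypothesis but rejected by the library
        elif accepted:
            fn += 1  # accepted by the library but missed by the hypothesis
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def good_enough(hypothesis: List[Category], outcomes: List[Outcome],
                threshold: float = 0.25) -> bool:
    p, r = precision_recall(hypothesis, outcomes)
    return p >= threshold and r >= threshold
```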

5 Implementation

Building the input database: the Python code is instrumented, with the developer test suite as the seed. The functions used to construct the inputs, the values returned by their invocations, and the input properties satisfied by the inputs are stored in the database.
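The minimal sketch below records one database entry per constructed input. It assumes instrumentation via a decorator and an in-memory SQLite table. SkipFuzz's actual instrumentation mechanism and schema are not described in these notes. `record_input`, the `inputs` table, `toy_properties`, and `make_shape` are hypothetical.

```python
import functools
import json
import sqlite3
from typing import Any, Callable, List

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE inputs (func TEXT, value TEXT, props TEXT)")


def toy_properties(x: Any) -> List[str]:
    """Stand-in for the full property check; returns satisfied property names."""
    props = []
    if isinstance(x, list):
        props.append("isinstance(X, list)")
        if all(type(e) == int for e in x):
            props.append("all([type(x) == int for x in X])")
    if x is None:
        props.append("X is None")
    return props


def record_input(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Instrument an input-constructing function: store its name, result, and properties."""
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        value = fn(*args, **kwargs)
        conn.execute(
            "INSERT INTO inputs VALUES (?, ?, ?)",
            (fn.__qualname__, repr(value), json.dumps(toy_properties(value))),
        )
        return value
    return wrapper


@record_input
def make_shape() -> List[int]:
    # e.g. a value built inside a developer test
    return [4]
```

Calling `make_shape()` returns `[4]` as before and, as a side effect, stores a row with the function name, the value's `repr`, and its properties.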

Crash oracle: crashes that can be exploited for denial-of-service (DoS) attacks
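The minimal sketch below implements such a crash oracle. It assumes each generated test program runs in a separate Python process on a POSIX system. Outcomes are distinguished as follows:

- An ordinary Python exception (positive exit code) is read as the library rejecting the input.
- Termination by a signal such as SIGSEGV or SIGABRT shows up in `subprocess` as a negative return code. This is the kind of process crash that can be exploited for denial of service.

The outcome labels and the timeout value are assumptions.

```python
import subprocess
import sys


def run_test_program(source: str, timeout: float = 60.0) -> str:
    """Classify one generated program as 'accepted', 'rejected', 'crash', or 'timeout'."""
    try:
        proc = subprocess.run(
            [sys.executable, "-c", source],
            capture_output=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return "timeout"
    if proc.returncode == 0:
        return "accepted"  # the library accepted the input
    if proc.returncode < 0:
        return "crash"  # killed by a signal (POSIX), e.g. a segfault or abort
    return "rejected"  # a Python exception, e.g. a validation error
```

Running each program in its own process keeps a crash in the library's C/C++ kernel from taking down the fuzzer itself.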

Active learning: the threshold for precision and recall is 0.25, because allowing the fuzzer to focus on a broad region of inputs that includes the valid input domain of the functions is more beneficial than precisely identifying that domain.

Interleaving of target functions: clingo is used to execute the logic programs.

6 Evaluation

RQ1. Does SKIPFUZZ produce crashing inputs?

RQ2. Does SKIPFUZZ sample diverse inputs?

RQ3. Does SKIPFUZZ sample valid inputs?

RQ4. Which components of SKIPFUZZ contribute to its ability to find crashing inputs?

6.2 Experimental Setup

Targets: TensorFlow 2.7.0, PyTorch 1.10

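Each run lasts 48 hours, allowing 1,000 or 2,000 test cases per function.

Q: Does this mean the generation-based tools are only allowed to run for 48 hours, or that inputs are generated first and then 48 hours' worth of them are selected?

Metrics: number of crashes, input property coverage, API coverage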

6.3 Experimental Results

6.3.1 RQ1. Vulnerabilities detected

We do not perform this analysis for the crashing inputs to PyTorch as its developers do not assign CVEs to potential security weaknesses.

6.3.2 RQ2. Reducing input redundancy

SKIPFUZZ achieves a distribution with diverse test outcomes, whereas DeepRel has a larger proportion of successful executions on TensorFlow.

6.3.3 RQ3. Generating valid input

6.3.4 RQ4. Ablation analysis

7 Discussion and Limitations

The observed behavior does not closely match the program's actual behavior; some properties of real inputs may not be captured.