Proj CDeepFuzz Paper Reading: Fuzzing Automatic Differentiation in Deep-Learning Libraries

Abstract

Background: each DL library API can be abstracted into a function processing tensors/vectors, which makes it a natural target for differential testing

This paper: ∇Fuzz

Task: API-level fuzzer targeting AD in DL Libraries using differential testing

Method: differential testing under different execution scenarios to test first-order and high-order gradients, plus filtering strategies that remove false-positive reports caused by numerical instability

Experiments:

Libraries under test: PyTorch, TensorFlow, JAX, OneFlow

Results:

- ∇Fuzz achieves better code coverage and bug detection than the compared fuzzers

- Found 173 bugs, 144 of them confirmed; 107 are both confirmed and related to AD.

- ∇Fuzz contributed 58.3% (7/12) of all high-priority AD bugs for PyTorch and JAX during a two-month period.

- None of the confirmed AD bugs were detected by existing fuzzers

I. Intro

DoS and DDoS in Named Data Networking: could automatic differentiation open the door to potential DDoS attacks?

Differences from Muffin:

- Muffin requires manually annotating the input constraints of the considered DL APIs and uses reshaping operations to keep the generated models valid, so it can only cover a small set of APIs

- whole model testing is inefficient

- it has no numerical-instability filter, which leads to false positives

- requires the same API interfaces across different DL libraries

- only covers part of reverse mode AD and ignores forward mode AD

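Assumptions:

1. Each API in a DL library can be abstracted as a function processing tensors/vectors, which can be differentially tested under various execution scenarios (to compute outputs/gradients with different implementations). For example, the same DL API can be executed without AD or under different AD modes, but its outputs and gradients should stay consistent across all execution scenarios.

2. Each API can be further transformed into its gradient function, in order to test the correctness of high-order gradient computation.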

BaseTool: FreeFuzz

II. Background

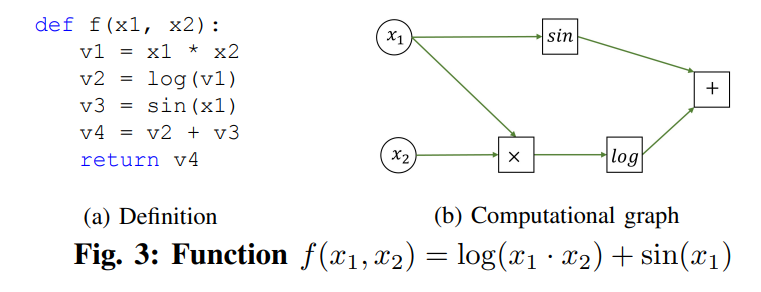

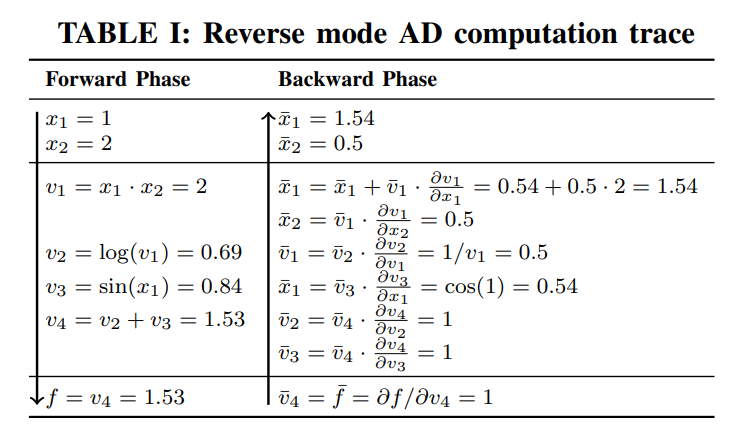

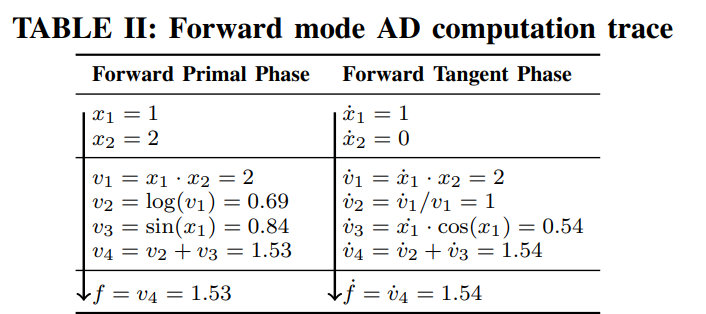

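This section defines the concepts below, uses an example to show how forward mode and reverse (backward) mode are alike and how they differ, and compares their pros and cons: forward mode does not need to store many intermediate variables; for a function R^n -> R^m, forward mode needs n passes and is advantageous when n is small, while reverse mode needs m passes and is advantageous when m is small. It also lists some common APIs.

DL APIs, DL model, training phase, inference phase, automatic differentiation, reverse mode, forward phase, backward phase, forward mode, forward primal phase, forward tangent phase, gradient, Jacobian, Vector-Jacobian Product, Jacobian-Vector Product, Numerical Differentiation, Hessian, Differentiability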
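To make the "n passes" cost of forward mode concrete, here is a minimal sketch of forward-mode AD using dual numbers in plain Python. It is an illustration only, not how any of the tested libraries implement AD; the `Dual` class, `jvp`, `jacobian_forward`, and the toy function `f` are all hypothetical names introduced here.

```python
import math

class Dual:
    """A primal value paired with its tangent (forward-mode carrier)."""
    def __init__(self, primal, tangent=0.0):
        self.primal = primal
        self.tangent = tangent

    def __add__(self, other):
        other = _lift(other)
        return Dual(self.primal + other.primal, self.tangent + other.tangent)
    __radd__ = __add__

    def __mul__(self, other):
        other = _lift(other)
        # Product rule: (uv)' = u v' + u' v
        return Dual(self.primal * other.primal,
                    self.primal * other.tangent + self.tangent * other.primal)
    __rmul__ = __mul__

def _lift(value):
    """Promote a plain number to a Dual with zero tangent."""
    return value if isinstance(value, Dual) else Dual(float(value))

def dsin(x):
    """sin with its tangent propagated by the chain rule."""
    return Dual(math.sin(x.primal), math.cos(x.primal) * x.tangent)

def f(x0, x1):
    """Toy function R^2 -> R^2 used only for this illustration."""
    return [x0 * x1, dsin(x0) + x1]

def jvp(fn, point, direction):
    """One forward pass: Jacobian-Vector Product J(point) @ direction."""
    duals = [Dual(p, t) for p, t in zip(point, direction)]
    return [out.tangent for out in fn(*duals)]

def jacobian_forward(fn, point):
    """Full Jacobian from n forward passes, one per input basis vector."""
    n = len(point)
    columns = []
    for i in range(n):
        basis = [1.0 if j == i else 0.0 for j in range(n)]
        columns.append(jvp(fn, point, basis))
    # columns[i][k] is d out_k / d x_i, so transpose into rows.
    return [list(row) for row in zip(*columns)]

J = jacobian_forward(f, [2.0, 3.0])
# J is [[3.0, 2.0], [cos(2.0), 1.0]]
```

Each call to `jvp` yields one column of the Jacobian, which is why forward mode is cheap when n is small. Reverse mode yields one row per pass through a Vector-Jacobian Product instead, at the cost of storing intermediates from the forward phase.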

IV. Approach

A. API-Level Fuzzer

- FreeFuzz can generate inputs for both input tensors and configuration arguments.

- ∇Fuzz would automatically create a wrapper function to transform the API invocation into a function mapping from the input to the output tensor(s).
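The wrapper idea can be sketched as follows: configuration arguments are fixed up front, so what remains is a function from input tensor(s) to output tensor(s). This is a simplified illustration in plain Python; `make_wrapper` and the toy `clamp` API are hypothetical and do not reflect the actual ∇Fuzz or FreeFuzz code.

```python
def clamp(values, lo, hi):
    """Toy stand-in for a DL API: one tensor-like input plus two config args."""
    return [max(lo, min(hi, v)) for v in values]

def make_wrapper(api, config_kwargs):
    """Freeze the configuration arguments so the API becomes tensors -> output."""
    def fn(*tensors):
        return api(*tensors, **config_kwargs)
    return fn

# The wrapped function only takes the tensor input, so the same `fn` can be
# run directly, under reverse-mode AD, or under forward-mode AD.
fn = make_wrapper(clamp, {"lo": 0.0, "hi": 1.0})
result = fn([-1.0, 0.5, 2.0])  # [0.0, 0.5, 1.0]
```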

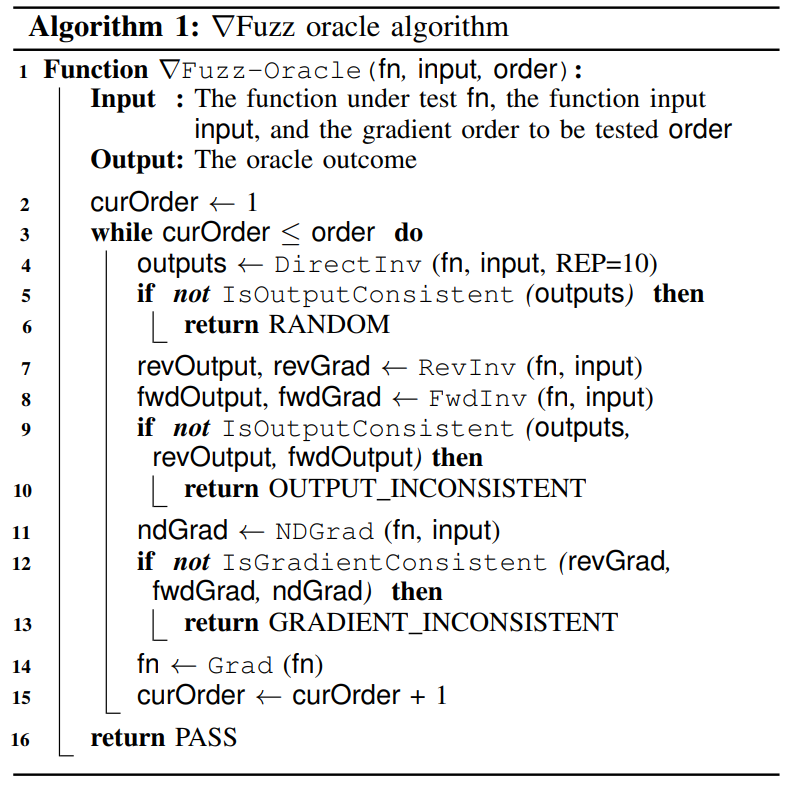

B. Test Oracles

1. Output Check

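Q: When gradients are computed in reverse or forward AD mode, some extra operations are always performed, such as tracing or shape checking. As a result, an invocation with AD may produce a different output than a direct invocation.

Assumption: outputs should not differ across execution scenarios, so any inconsistency is likely a bug.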

    def fn(input):
        return dynamic_index_in_dim(input, index=-7, axis=1)

    input = array([[1., 2., 3., 4., 5.]])
    DirectValue(fn, input)  # [[1.0]]
    RevValue(fn, input)     # [[4.0]]

Fig. 5: Inconsistent outputs w/ and w/o AD
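The output check can be sketched as a comparison of one function's result across execution scenarios. The two indexing helpers below are toy stand-ins chosen only because they reproduce the values in Fig. 5 (clamping an out-of-range index versus wrapping it); these notes do not describe the actual root cause in JAX, so treat that part as an assumption. All names are hypothetical.

```python
import math

def index_clamped(row, index):
    """Toy 'direct' scenario: out-of-range indices are clamped into range."""
    if index < 0:
        index += len(row)
    return row[max(0, min(len(row) - 1, index))]

def index_wrapped(row, index):
    """Toy 'reverse-mode' scenario: indices wrap around modulo the length."""
    return row[index % len(row)]

def output_check(scenarios, *args, rel_tol=1e-6, abs_tol=1e-9):
    """Compare every scenario against the first one; return the mismatches."""
    names = list(scenarios)
    reference = scenarios[names[0]](*args)
    mismatches = []
    for name in names[1:]:
        value = scenarios[name](*args)
        if not math.isclose(value, reference, rel_tol=rel_tol, abs_tol=abs_tol):
            mismatches.append((names[0], reference, name, value))
    return mismatches

row = [1.0, 2.0, 3.0, 4.0, 5.0]
scenarios = {
    "direct": lambda r: index_clamped(r, -7),
    "reverse": lambda r: index_wrapped(r, -7),
}
report = output_check(scenarios, row)
# report is [("direct", 1.0, "reverse", 4.0)]
```

An in-range index such as 2 gives the same value in both toy scenarios, so the check only reports inputs on which the execution paths actually diverge.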

2. Gradient Check

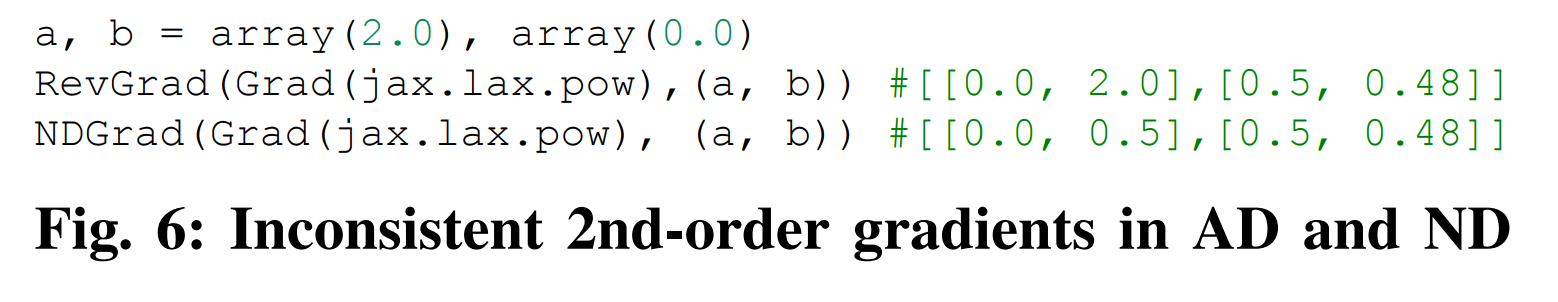

∇Fuzz compares gradients computed by reverse mode AD, forward mode AD, and ND to detect bugs.

For example, the PyTorch API torch.trace [46] returns the sum of the diagonal elements of the input 2-D matrix. An input with shape (4,2) has two elements on its diagonal, so this API returns the sum of these two elements. However, three elements of the gradient computed in reverse mode are 1, compared to only two elements in forward mode. This inconsistency is caused by the wrong formula used in reverse mode AD for torch.trace. This bug found by ∇Fuzz has been confirmed by the developers.

Here is another example showing the value of further leveraging ND. The PyTorch API hardshrink(x,lambd) [47] returns x when |x|>lambd; otherwise, it returns 0. Thus, when lambd is 0, this API is equivalent to the linear function y = x. However, for input 0 with lambd=0 it yields different gradients in AD and ND: both reverse and forward mode AD return 0 as the gradient, while ND returns 1. The gradient should be 1. This bug detected by ∇Fuzz has also been confirmed in PyTorch.
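The hardshrink case can be reproduced without PyTorch. The sketch below compares a central-difference ND gradient against a gradient rule that models the AD behaviour reported above (0 wherever |x| <= lambd). The function names, the step size `h`, and the tolerance `tol` are assumptions made for this illustration, not values from the paper.

```python
def hardshrink(x, lambd):
    """Scalar version of the API as described: x if |x| > lambd, else 0."""
    return x if abs(x) > lambd else 0.0

def ad_style_grad(x, lambd):
    """Models the reported AD behaviour: gradient 1 if |x| > lambd, else 0."""
    return 1.0 if abs(x) > lambd else 0.0

def numerical_grad(fn, x, h=1e-6):
    """Central-difference numerical differentiation (ND)."""
    return (fn(x + h) - fn(x - h)) / (2.0 * h)

def gradient_check(x, lambd, tol=1e-4):
    """Return (consistent?, AD-style gradient, ND gradient)."""
    ad = ad_style_grad(x, lambd)
    nd = numerical_grad(lambda v: hardshrink(v, lambd), x)
    return abs(ad - nd) <= tol, ad, nd

flagged = gradient_check(0.0, 0.0)   # (False, 0.0, 1.0): AD and ND disagree
agreeing = gradient_check(2.0, 0.5)  # consistent: both gradients are about 1
```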

3. High-order Gradients

Existing DL libraries rarely provide APIs for computing gradients beyond third order.

∂²f/∂a∂b != ∂²f/∂b∂a
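One way to picture the high-order check from the assumptions in the Intro: treat the gradient function itself as the function under test and run the same gradient check again. The sketch below does this for a scalar toy function with hand-written derivatives; the names, the step size, and the tolerance are assumptions for illustration and are not taken from ∇Fuzz.

```python
def numerical_grad_fn(fn, h=1e-5):
    """Turn fn into its central-difference gradient function."""
    def grad(x):
        return (fn(x + h) - fn(x - h)) / (2.0 * h)
    return grad

def gradient_check(fn, claimed_grad_fn, x, tol=1e-4):
    """True when the claimed gradient of fn agrees with ND at point x."""
    return abs(claimed_grad_fn(x) - numerical_grad_fn(fn)(x)) <= tol

def f(x):
    return x ** 3

def df(x):          # correct first-order gradient of f
    return 3.0 * x ** 2

def d2f(x):         # correct second-order gradient of f
    return 6.0 * x

def d2f_buggy(x):   # deliberately wrong second-order gradient
    return 3.0 * x

first_order_ok = gradient_check(f, df, 1.5)
# Second order: the gradient function df becomes the function under test.
second_order_ok = gradient_check(df, d2f, 1.5)
second_order_bug = gradient_check(df, d2f_buggy, 1.5)
```

The first-order implementation passes here, and only the second application of the check exposes the wrong second-order formula.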

C. Filtering Strategies

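- Non-differentiable points: ∇Fuzz samples N (default 5) random neighbors to check differentiability. Specifically, a random neighbor is defined as random(x) = x + uniform(−δ, +δ), where the sampling distance δ is a hyperparameter (default 10^-4). If the output or gradient of any neighbor differs from that at point x, ∇Fuzz considers f non-differentiable at x. Going back to the absolute-value function example: at point 0, the left neighbors have gradient -1 and the right neighbors have gradient 1. Both differ from 0, which is the gradient ND computes at point 0. ∇Fuzz therefore filters out this false positive.

- Precision conversion: all cases where the API input and output have different precisions are excluded.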
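The neighbor-sampling filter for non-differentiable points can be sketched in a few lines. N = 5 and δ = 10^-4 follow the defaults quoted above; the comparison tolerance `tol`, the ND step `h`, the fixed seed, and all function names are assumptions added for this illustration, since the notes only say that a neighbor "differs".

```python
import random

def numerical_grad(fn, x, h=1e-6):
    """Central-difference numerical differentiation (ND)."""
    return (fn(x + h) - fn(x - h)) / (2.0 * h)

def is_differentiable_at(fn, x, n_neighbors=5, delta=1e-4, tol=1e-2, seed=0):
    """Heuristic filter: False if any sampled neighbor disagrees with x."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    base_out = fn(x)
    base_grad = numerical_grad(fn, x)
    for _ in range(n_neighbors):
        neighbor = x + rng.uniform(-delta, delta)
        if abs(fn(neighbor) - base_out) > tol:
            return False  # output jumps near x
        if abs(numerical_grad(fn, neighbor) - base_grad) > tol:
            return False  # gradient jumps near x
    return True

abs_at_zero = is_differentiable_at(abs, 0.0)                   # False
square_at_one = is_differentiable_at(lambda v: v * v, 1.0)     # True
```

For abs at 0, ND yields gradient 0 at the point itself while the neighbors yield gradients close to -1 or +1, so the report is filtered. A smooth function such as v * v passes because neighboring outputs and gradients stay within the tolerance.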

V. Experimental setup

RQ1: Is ∇Fuzz effective in detecting real-world bugs and improving code coverage?

RQ2: How do different components of ∇Fuzz oracle affect its performance?

RQ3: How do the filter strategies contribute to the reduction of the false positive rate of ∇Fuzz?

Test subjects: PyTorch, TensorFlow, JAX, and OneFlow

Competitors: Muffin, FreeFuzz

VI. Result

∇Fuzz contributed 7.7% of the high-priority bugs and 55.5% of the high-priority AD bugs