LevelDB的源码阅读(三) Get操作

在Linux上leveldb的安装和使用中我们写了这么一段测试代码,内容以及输出结果如下:

#include <iostream>

#include <string>

#include <assert.h>

#include "leveldb/db.h"

using namespace std;

int main(void)

{

leveldb::DB *db;

leveldb::Options options;

options.create_if_missing = true;

// open

leveldb::Status status = leveldb::DB::Open(options,"/tmp/testdb", &db);

assert(status.ok());

string key = "name";

string value = "chenqi";

// write

status = db->Put(leveldb::WriteOptions(), key, value);

assert(status.ok());

// read

status = db->Get(leveldb::ReadOptions(), key, &value);

assert(status.ok());

cout<<value<<endl;

// delete

status = db->Delete(leveldb::WriteOptions(), key);

assert(status.ok());

status = db->Get(leveldb::ReadOptions(),key, &value);

if(!status.ok()) {

cerr<<key<<" "<<status.ToString()<<endl;

} else {

cout<<key<<"==="<<value<<endl;

}

// close

delete db;

return 0;

}

chenqi name NotFound:

Leveldb的读数据入口为db文件夹下db_impl.cc文件中的DBImpl::Get函数,首先获取当前的版本号,然后依次在三个数据源memtable,immutable table,和sst表中进行查找,返回之前再判断一下是否需要启动Compact任务.

Status DBImpl::Get(const ReadOptions& options, const Slice& key, std::string* value) { Status s; MutexLock l(&mutex_); SequenceNumber snapshot; if (options.snapshot != NULL) { snapshot = reinterpret_cast<const SnapshotImpl*>(options.snapshot)->number_; } else { //获取版本号 snapshot = versions_->LastSequence(); } //三个查找源,memtable,immutable,sstable MemTable* mem = mem_; MemTable* imm = imm_; Version* current = versions_->current(); mem->Ref(); if (imm != NULL) imm->Ref(); current->Ref(); bool have_stat_update = false; Version::GetStats stats; // Unlock while reading from files and memtables { mutex_.Unlock(); // First look in the memtable, then in the immutable memtable (if any). LookupKey lkey(key, snapshot); if (mem->Get(lkey, value, &s)) { //在memtable中查找 // Done } else if (imm != NULL && imm->Get(lkey, value, &s)) {//在imutable中查找 // Done } else { s = current->Get(options, lkey, value, &stats); //在磁盘文件中查找,当前Version have_stat_update = true; } mutex_.Lock(); } //判断是否需要调度Compact if (have_stat_update && current->UpdateStats(stats)) { MaybeScheduleCompaction(); } mem->Unref(); if (imm != NULL) imm->Unref(); current->Unref(); return s; }

首先,按照leveldb代码的惯例线上锁,然后生成一个SequenceNumber作为标记, 后续不管线程会不会被切出去, 结果都要相当于在这个时间点瞬间完成,memtable、immemtable以及Version都由于采用了引用计数, 因此要Ref().快照建立完了, 接下来的操作只会有单纯的读, 可以把锁暂时释放.查询的顺序是先找memtable, 再immemtable, 最后是SSTable.这里调用了db文件夹下dbformat.cc中的LookupKey::LookupKey, 源码内容如下:

LookupKey::LookupKey(const Slice& user_key, SequenceNumber s) { size_t usize = user_key.size(); size_t needed = usize + 13; // A conservative estimate char* dst; if (needed <= sizeof(space_)) { dst = space_; } else { dst = new char[needed]; } start_ = dst; dst = EncodeVarint32(dst, usize + 8); kstart_ = dst; memcpy(dst, user_key.data(), usize); dst += usize; EncodeFixed64(dst, PackSequenceAndType(s, kValueTypeForSeek)); dst += 8; end_ = dst; }

这个类主要的功能是把输入的key转换成用于查询的key. 比如key是"Sherry", 实际在数据库中的表达可能会是"6Sherry", 6是长度. 这样比对key是否相等时速度会更快.LookupKey格式 = 长度 + key + SequenceNumber + type,这里用了两个tricks:

1.在栈上分配一个200长度的数组, 如果运行时发现长度不够用再从堆上new一个, 可以极大避免内存分配

2.黑科技函数"EncodeVarint32", 一般key的长度不可能用满32bit. 大量很短的Key却要用32bit来描述长度无疑是很浪费的. 这个函数让小数值用更少的空间, 代价是最糟要多花一字节(8bit).EncodeVarint32的代码出现在util文件夹下的coding.cc文件里,源码内容如下:

char* EncodeVarint32(char* dst, uint32_t v) { // Operate on characters as unsigneds unsigned char* ptr = reinterpret_cast<unsigned char*>(dst); static const int B = 128; if (v < (1<<7)) { *(ptr++) = v; } else if (v < (1<<14)) { *(ptr++) = v | B; *(ptr++) = v>>7; } else if (v < (1<<21)) { *(ptr++) = v | B; *(ptr++) = (v>>7) | B; *(ptr++) = v>>14; } else if (v < (1<<28)) { *(ptr++) = v | B; *(ptr++) = (v>>7) | B; *(ptr++) = (v>>14) | B; *(ptr++) = v>>21; } else { *(ptr++) = v | B; *(ptr++) = (v>>7) | B; *(ptr++) = (v>>14) | B; *(ptr++) = (v>>21) | B; *(ptr++) = v>>28; } return reinterpret_cast<char*>(ptr); }

现在回到DBImpl::Get函数,memtable和immutable table都是通过内存中的skiplist进行的,磁盘文件的查找是通过db文件夹下version_set.cc中Version::Get来进行的.源码内容如下:

Status Version::Get(const ReadOptions& options, const LookupKey& k, std::string* value, GetStats* stats) { Slice ikey = k.internal_key(); Slice user_key = k.user_key(); const Comparator* ucmp = vset_->icmp_.user_comparator(); Status s; stats->seek_file = NULL; stats->seek_file_level = -1; FileMetaData* last_file_read = NULL; int last_file_read_level = -1; // We can search level-by-level since entries never hop across // levels. Therefore we are guaranteed that if we find data // in an smaller level, later levels are irrelevant. //查找用户提供的key可能在的文件,通过各个level的文件的最小值,最大值来判断 //按层查找 std::vector<FileMetaData*> tmp; FileMetaData* tmp2; for (int level = 0; level < config::kNumLevels; level++) { size_t num_files = files_[level].size(); if (num_files == 0) continue; // Get the list of files to search in this level FileMetaData* const* files = &files_[level][0]; if (level == 0) { // Level-0 files may overlap each other. Find all files that // overlap user_key and process them in order from newest to oldest. tmp.reserve(num_files); for (uint32_t i = 0; i < num_files; i++) { FileMetaData* f = files[i]; if (ucmp->Compare(user_key, f->smallest.user_key()) >= 0 && ucmp->Compare(user_key, f->largest.user_key()) <= 0) { tmp.push_back(f); } } if (tmp.empty()) continue; std::sort(tmp.begin(), tmp.end(), NewestFirst); files = &tmp[0]; num_files = tmp.size(); } else { // Binary search to find earliest index whose largest key >= ikey. //查找用户提供的key可能在的文件 uint32_t index = FindFile(vset_->icmp_, files_[level], ikey); if (index >= num_files) { files = NULL; num_files = 0; } else { tmp2 = files[index]; if (ucmp->Compare(user_key, tmp2->smallest.user_key()) < 0) { // All of "tmp2" is past any data for user_key files = NULL; num_files = 0; } else { files = &tmp2; num_files = 1; } } } for (uint32_t i = 0; i < num_files; ++i) { if (last_file_read != NULL && stats->seek_file == NULL) { // We have had more than one seek for this read. Charge the 1st file. stats->seek_file = last_file_read; stats->seek_file_level = last_file_read_level; } FileMetaData* f = files[i]; last_file_read = f; last_file_read_level = level; Saver saver; saver.state = kNotFound; saver.ucmp = ucmp; saver.user_key = user_key; saver.value = value; //在table_cache中查找key对应的value s = vset_->table_cache_->Get(options, f->number, f->file_size, ikey, &saver, SaveValue); if (!s.ok()) { return s; } switch (saver.state) { case kNotFound: break; // Keep searching in other files case kFound: return s; case kDeleted: s = Status::NotFound(Slice()); // Use empty error message for speed return s; case kCorrupt: s = Status::Corruption("corrupted key for ", user_key); return s; } } } return Status::NotFound(Slice()); // Use an empty error message for speed }

FindFile源码内容如下:

int FindFile(const InternalKeyComparator& icmp, const std::vector<FileMetaData*>& files, const Slice& key) { uint32_t left = 0; uint32_t right = files.size(); while (left < right) { uint32_t mid = (left + right) / 2; const FileMetaData* f = files[mid]; if (icmp.InternalKeyComparator::Compare(f->largest.Encode(), key) < 0) { // Key at "mid.largest" is < "target". Therefore all // files at or before "mid" are uninteresting. left = mid + 1; } else { // Key at "mid.largest" is >= "target". Therefore all files // after "mid" are uninteresting. right = mid; } } return right; }

Version::Get函数首先查找key可能存在的sst表,然后调用table_cache->Get进行查找。即对SSTable的查询就是对table_cache_的查询, 这个cache是不可取消的, 解决了什么问题呢?

LevelDB的数据库"文件"是一个文件夹, 里面包含大量的文件. 这是把复杂度甩锅给操作系统的做法, 但很多系统资源是有限的. 比如, file handle(文件句柄). 一个程序如果开了1W个file handle会浪费大量资源. 这里做个LRU cache, 只有常用的SSTable才会开一个活跃的file handle.

另外就是索引的问题. LSMT是没有主索引的, 只有在各个SSTable内有微缩版索引. 所以, 最最优的情况下也需要2次硬盘读写. 第一张SSTable就存着key, 先读微型索引, 然后二分法找到具体位置, 再读value.

TableCache把热点SSTable的微型索引预先放在内存里, 这样只要1次硬盘读取就能取到key. 这个优化对于LSMT的数据库来说尤为重要, 因为很可能会不止查询一张SSTable. 情况会劣化非常快.

总结, TableCache既承担管理资源(file handle)的作用, 又加速索引的读取.

TableCache的实现在db文件夹下table_cache.cc中,源码内容如下:

Status TableCache::Get(const ReadOptions& options, uint64_t file_number, uint64_t file_size, const Slice& k, void* arg, void (*saver)(void*, const Slice&, const Slice&)) { Cache::Handle* handle = NULL; Status s = FindTable(file_number, file_size, &handle);//查找table,没有则新建table结构并插入table_cache if (s.ok()) { Table* t = reinterpret_cast<TableAndFile*>(cache_->Value(handle))->table; s = t->InternalGet(options, k, arg, saver); //在table中查找 cache_->Release(handle); } return s; }

该函数流程很简单,先从table_cache中获取Table结构,没有则新建Table结构加入table_cache,然后调用Table::InternalGet在具体的sst表中查找.需要注意的是,在util文件夹下查看cache.cc可以看到

virtual Handle* Insert(const Slice& key, void* value, size_t charge, void (*deleter)(const Slice& key, void* value)) { const uint32_t hash = HashSlice(key); return shard_[Shard(hash)].Insert(key, hash, value, charge, deleter); } virtual Handle* Lookup(const Slice& key) { const uint32_t hash = HashSlice(key); return shard_[Shard(hash)].Lookup(key, hash); }

这个hash table做了两遍hash, 先把key分片一遍, 然后再扔给真正的hash table cache(有锁)去lookup.这么做的逻辑是可以减少锁的使用率和提升并发.

进一步查看table_cache.cc中的Table::InternalGet函数:

Status Table::InternalGet(const ReadOptions& options, const Slice& k, void* arg, void (*saver)(void*, const Slice&, const Slice&)) { Status s; Iterator* iiter = rep_->index_block->NewIterator(rep_->options.comparator); iiter->Seek(k);//在索引中找,是否存在某个块可能包含这个key if (iiter->Valid()) { Slice handle_value = iiter->value(); FilterBlockReader* filter = rep_->filter; BlockHandle handle; if (filter != NULL && handle.DecodeFrom(&handle_value).ok() && !filter->KeyMayMatch(handle.offset(), k)) { // Not found } else { //在具体的block中找 Iterator* block_iter = BlockReader(this, options, iiter->value()); block_iter->Seek(k); if (block_iter->Valid()) { (*saver)(arg, block_iter->key(), block_iter->value()); } s = block_iter->status(); delete block_iter; } } if (s.ok()) { s = iiter->status(); } delete iiter; return s; }

Table::Get函数先在table的indexblock中查找该key所处的block,然后利用Bloom Filter来过滤,最后在具体的block中查找。在查找过程中使用了Iterator机制。

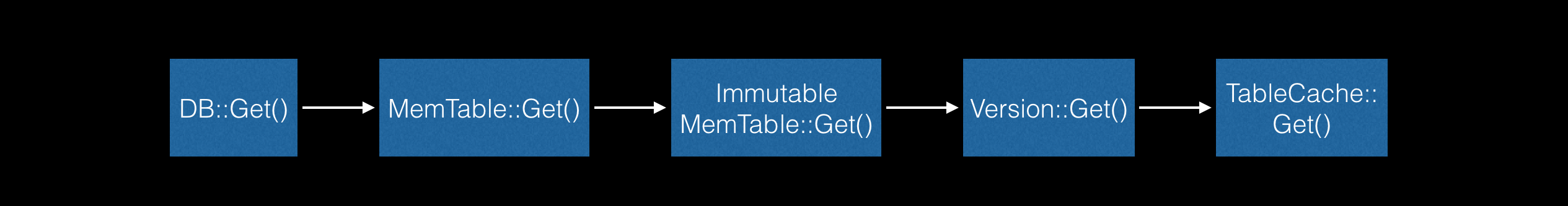

总体来说,leveldb中Get操作的流程可以用下图来说明:

参考文献:

1.http://blog.csdn.net/joeyon1985/article/details/47154249

2.http://masutangu.com/2017/06/leveldb_1/

3.https://zhuanlan.zhihu.com/jimderestaurant?topic=LevelDB

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 10年+ .NET Coder 心语,封装的思维:从隐藏、稳定开始理解其本质意义

· .NET Core 中如何实现缓存的预热?

· 从 HTTP 原因短语缺失研究 HTTP/2 和 HTTP/3 的设计差异

· AI与.NET技术实操系列:向量存储与相似性搜索在 .NET 中的实现

· 基于Microsoft.Extensions.AI核心库实现RAG应用

· 10年+ .NET Coder 心语 ── 封装的思维:从隐藏、稳定开始理解其本质意义

· 地球OL攻略 —— 某应届生求职总结

· 提示词工程——AI应用必不可少的技术

· Open-Sora 2.0 重磅开源!

· 周边上新:园子的第一款马克杯温暖上架