Tsinghua GLM

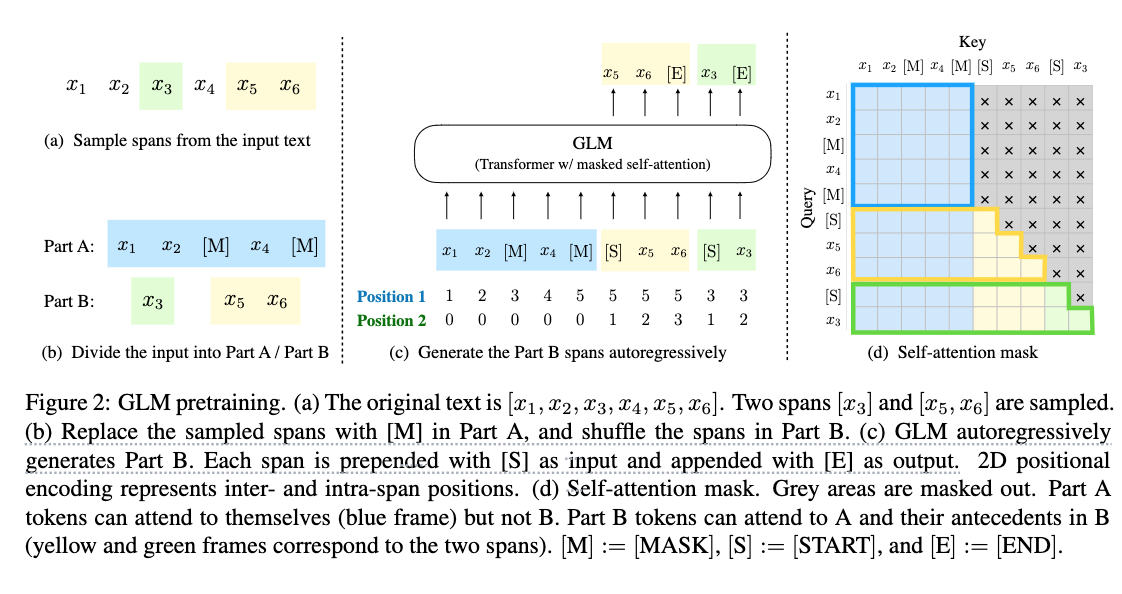

GLM: General Language Model Pretraining with Autoregressive Blank Infilling

https://arxiv.org/abs/2103.10360

https://readpaper.com/paper/661863844583419904

https://github.com/THUDM/GLM

GLM-130B: an open-source bilingual pre-trained language model

Paper: "GLM-130B: An Open Bilingual Pre-trained Model"

https://chatglm.cn/blog

https://arxiv.org/pdf/2210.02414.pdf

https://readpaper.com/paper/4675577550479572993

https://models.aminer.cn/glm-130b/

https://github.com/THUDM/ChatGLM-130B

https://huggingface.co/spaces/THUDM/GLM-130B

Open source. Apply for the model weights at: https://models.aminer.cn/glm/zh-CN/download/GLM-130B

Resources:

https://www.zhihu.com/question/554665350/answer/2853326322

OneFlow + GLM: https://github.com/Oneflow-Inc/one-glm

https://zhuanlan.zhihu.com/p/614508046

https://zhuanlan.zhihu.com/p/550220516

https://blog.csdn.net/bqw18744018044/article/details/129132457 (Chinese translation of the original paper)