025 - Cinder service --> Install and configure a local storage node (iSCSI)

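I. Cinder provides block-level storage. The Block Storage service provides the infrastructure for managing volumes and interacts with the OpenStack Compute service to provide volumes to instances. It also enables management of volume snapshots and volume types. The Block Storage service typically consists of the following components:

cinder-api: Accepts API requests and routes them to cinder-volume for execution. Any request to Cinder goes through this public API first.

cinder-volume: Interacts directly with the Block Storage service and with processes such as cinder-scheduler. It can also interact with these processes through a message queue. The cinder-volume service responds to read and write requests sent to the Block Storage service in order to maintain state. It can also interact with a variety of storage providers through a driver architecture.

cinder-scheduler daemon: Selects the optimal storage provider node on which to create a volume. It is similar to the nova-scheduler component.

cinder-backup daemon: Backs up volumes of any type to a backup storage provider. Like cinder-volume, it interacts with a variety of storage providers through a driver architecture.

Message queue: Routes information between the Block Storage processes.

This post shows how to install and configure a storage node for the Block Storage service. For simplicity, it configures one storage node with one empty local block storage device. The guide uses /dev/sdb. Here linux-node1 is used as the storage node, so a disk has to be added to it in VMware.

Cinder itself is not storage software; it manages the storage back ends.

Configure the controller node: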

mysql -u root -p

MariaDB [(none)]> CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'cinder';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'cinder';
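The three SQL statements above can also be generated from a small shell function, so the password is typed only once. This is a minimal sketch, not part of the original walkthrough: the function name `cinder_db_sql` is made up for illustration, and `IF NOT EXISTS` is added so that re-running it does not fail on an existing database.

```shell
#!/bin/sh
# Print the SQL that creates the cinder database and grants access to it.
# The first argument is the database password; it defaults to "cinder",
# the password used in this walkthrough.
cinder_db_sql() {
    pass="${1:-cinder}"
    cat <<EOF
CREATE DATABASE IF NOT EXISTS cinder;
GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY '${pass}';
EOF
}

# Usage (prompts for the MariaDB root password):
#   cinder_db_sql cinder | mysql -u root -p
```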

openstack user create --domain default --password-prompt cinder

openstack role add --project service --user cinder admin

openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2

openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

openstack endpoint create --region RegionOne volumev2 public http://192.168.1.230:8776/v2/%\(project_id\)s

openstack endpoint create --region RegionOne volumev2 internal http://192.168.1.230:8776/v2/%\(project_id\)s

openstack endpoint create --region RegionOne volumev2 admin http://192.168.1.230:8776/v2/%\(project_id\)s

openstack endpoint create --region RegionOne volumev3 public http://192.168.1.230:8776/v3/%\(project_id\)s

openstack endpoint create --region RegionOne volumev3 internal http://192.168.1.230:8776/v3/%\(project_id\)s

openstack endpoint create --region RegionOne volumev3 admin http://192.168.1.230:8776/v3/%\(project_id\)s
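The six endpoint commands differ only in API version and interface, so they can be produced by two nested loops. The sketch below only prints the commands so they can be reviewed before running; the function name `cinder_endpoint_cmds` is an assumption for illustration. Wrapping the URL in single quotes keeps `%(project_id)s` literal without the backslashes used above.

```shell
#!/bin/sh
# Print the six "openstack endpoint create" commands for a given API host.
cinder_endpoint_cmds() {
    host="$1"
    for ver in 2 3; do
        for iface in public internal admin; do
            # %% in the printf format yields a literal % in the URL.
            printf "openstack endpoint create --region RegionOne volumev%s %s 'http://%s:8776/v%s/%%(project_id)s'\n" \
                "$ver" "$iface" "$host" "$ver"
        done
    done
}

# Review the output first, then run it:
#   cinder_endpoint_cmds 192.168.1.230
#   cinder_endpoint_cmds 192.168.1.230 | sh
```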

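If you get the error: Multiple service matches found for 'volumev2', use an ID to be more specific.

the service was registered more than once. List the services and delete the duplicated cinder entries by ID as shown below, then run the service and endpoint creation commands above again: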

[root@linux-node1 ~]# openstack service list

+----------------------------------+-----------+-----------+

| ID | Name | Type |

+----------------------------------+-----------+-----------+

| 02180476df5840809d26655ba09b76e3 | cinderv2 | volumev2 |

| 1ae8fbe0cd164f6ab72ab1a9ec954631 | cinderv2 | volumev2 |

| 44bee342cbb341cca16840ff6f9bff85 | cinderv3 | volumev3 |

| 6ac06360d9b0464fbc811ea12d4ae848 | glance | image |

| 6b840f69965e43ad94813be38b56a6de | neutron | network |

| 929cbc7c7b264ad08d098409ad8dbab7 | placement | placement |

| 94b62aab1fde462583ec57c0becacb6f | keystone | identity |

| ab62662d8f734cadaf96f86b6dad4a8b | nova | compute |

| d2ab86cdf41347a2bc635036ead19204 | cinderv3 | volumev3 |

+----------------------------------+-----------+-----------+

[root@linux-node1 ~]# openstack service delete 02180476df5840809d26655ba09b76e3

[root@linux-node1 ~]# openstack service delete 1ae8fbe0cd164f6ab72ab1a9ec954631

[root@linux-node1 ~]# openstack service delete 44bee342cbb341cca16840ff6f9bff85

[root@linux-node1 ~]# openstack service delete d2ab86cdf41347a2bc635036ead19204

[root@linux-node1 ~]# openstack service list

+----------------------------------+-----------+-----------+

| ID | Name | Type |

+----------------------------------+-----------+-----------+

| 6ac06360d9b0464fbc811ea12d4ae848 | glance | image |

| 6b840f69965e43ad94813be38b56a6de | neutron | network |

| 929cbc7c7b264ad08d098409ad8dbab7 | placement | placement |

| 94b62aab1fde462583ec57c0becacb6f | keystone | identity |

| ab62662d8f734cadaf96f86b6dad4a8b | nova | compute |

+----------------------------------+-----------+-----------+
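Picking the duplicate IDs out of the table by eye is easy to get wrong. The sketch below reads `ID Type` pairs and prints the ID of every repeated service type, keeping the first one seen. Note that this is a variation: above, all four cinder entries were deleted and then recreated. The function name `duplicate_service_ids` is made up for illustration, and which copy is safe to keep depends on which service your endpoints reference, so check the list before deleting anything.

```shell
#!/bin/sh
# Read "ID Type" pairs on stdin and print the ID of every service whose
# type has already been seen, i.e. the second and later registrations.
duplicate_service_ids() {
    awk 'seen[$2]++ { print $1 }'
}

# Usage:
#   openstack service list -f value -c ID -c Type | duplicate_service_ids
#   # after checking the list: openstack service delete <ID>
```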

yum install openstack-cinder

[root@linux-node1 ~]# cat /etc/cinder/cinder.conf | grep -v "#" | grep -v "^$"

[DEFAULT]

transport_url = rabbit://openstack:openstack@192.168.1.230

auth_strategy = keystone

[backend]

[backend_defaults]

[barbican]

[brcd_fabric_example]

[cisco_fabric_example]

[coordination]

[cors]

[database]

connection = mysql+pymysql://cinder:cinder@192.168.1.230/cinder

[fc-zone-manager]

[healthcheck]

[key_manager]

[keystone_authtoken]

auth_uri = http://192.168.1.230:5000

auth_url = http://192.168.1.230:35357

memcached_servers = 192.168.1.230:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = cinder

[matchmaker_redis]

[nova]

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[profiler]

[ssl]
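One caveat about the `grep -v "#"` filter used for this listing: it drops every line that contains `#` anywhere, including a setting whose value happens to contain that character. A slightly stricter sketch removes only blank lines and lines whose first non-blank character is `#`. The function name `effective_config` is an assumption for illustration.

```shell
#!/bin/sh
# Print only the effective lines of a config file: drop blank lines and
# lines whose first non-blank character is "#".
effective_config() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Usage:
#   effective_config /etc/cinder/cinder.conf
```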

su -s /bin/sh -c "cinder-manage db sync" cinder

Configure the Compute service to use Cinder

vim /etc/nova/nova.conf

[cinder]

os_region_name = RegionOne

systemctl restart openstack-nova-api.service

systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

[root@linux-node1 ~]# tail -f /var/log/cinder/api.log

2019-07-11 09:59:17.727 20055 INFO cinder.api.extensions [req-10d244f1-d517-4c00-89f5-b6b9bd4dba9c - - - - -] Loaded extension: os-volume-transfer

2019-07-11 09:59:17.727 20055 INFO cinder.api.extensions [req-10d244f1-d517-4c00-89f5-b6b9bd4dba9c - - - - -] Loaded extension: os-volume-type-access

2019-07-11 09:59:17.728 20055 INFO cinder.api.extensions [req-10d244f1-d517-4c00-89f5-b6b9bd4dba9c - - - - -] Loaded extension: encryption

2019-07-11 09:59:17.730 20055 INFO cinder.api.extensions [req-10d244f1-d517-4c00-89f5-b6b9bd4dba9c - - - - -] Loaded extension: os-volume-unmanage

2019-07-11 09:59:17.907 20055 INFO oslo.service.wsgi [req-10d244f1-d517-4c00-89f5-b6b9bd4dba9c - - - - -] osapi_volume listening on 0.0.0.0:8776

2019-07-11 09:59:17.908 20055 INFO oslo_service.service [req-10d244f1-d517-4c00-89f5-b6b9bd4dba9c - - - - -] Starting 4 workers

2019-07-11 09:59:18.124 20088 INFO eventlet.wsgi.server [-] (20088) wsgi starting up on http://0.0.0.0:8776

2019-07-11 09:59:18.165 20089 INFO eventlet.wsgi.server [-] (20089) wsgi starting up on http://0.0.0.0:8776

2019-07-11 09:59:18.344 20090 INFO eventlet.wsgi.server [-] (20090) wsgi starting up on http://0.0.0.0:8776

2019-07-11 09:59:18.377 20091 INFO eventlet.wsgi.server [-] (20091) wsgi starting up on http://0.0.0.0:8776

The API is listening on port 8776.
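Besides reading the log, the listening socket can be checked directly. The sketch below parses the output of `ss -lnt`, whose fourth column is the local address and port, and succeeds if anything listens on the given port. The function name `listening_on` is made up for illustration.

```shell
#!/bin/sh
# Read "ss -lnt" output on stdin; succeed if any socket listens on the
# given port. Column 4 of "ss -lnt" is Local Address:Port.
listening_on() {
    awk -v port="$1" '
        { n = split($4, parts, ":"); if (parts[n] == port) found = 1 }
        END { exit (found ? 0 : 1) }
    '
}

# Usage:
#   ss -lnt | listening_on 8776 && echo "cinder-api is listening"
```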

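Configure the storage node:

Any machine that has cinder-volume installed is a storage node.

Without Cinder, instance disks are stored on the local disk under the path shown below. The advantage of local disk storage is performance, because data stored on another machine first has to cross the network. The disadvantage is inflexibility, for example when migrating instances.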

[root@linux-node2 ~]# cd /var/lib/nova/

[root@linux-node2 nova]# ls

buckets instances keys networks tmp

[root@linux-node2 nova]# cd instances/

[root@linux-node2 instances]# ls

_base compute_nodes locks

[root@linux-node2 instances]# tree

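The official default is iSCSI, which encapsulates data and transfers it over the network. It differs from NFS: NFS transfers files, while iSCSI transfers blocks.

The service provisions logical volumes on this device using the LVM driver and provides them to instances via iSCSI transport. You can follow these instructions with minor modifications to scale your environment horizontally with additional storage nodes.

In this example the storage node is installed on the controller node: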

yum install lvm2 device-mapper-persistent-data

Note: cinder-volume, LVM, and iSCSI must be installed on the same machine, because cinder-volume manages both LVM and iSCSI.

systemctl enable lvm2-lvmetad.service

systemctl start lvm2-lvmetad.service

Create the LVM physical volume /dev/sdb as follows.

After adding the disk, verify that sdb is present:

[root@linux-node1 ~]# fdisk -l

磁盘 /dev/sdb:26.8 GB, 26843545600 字节,52428800 个扇区

Units = 扇区 of 1 * 512 = 512 bytes

扇区大小(逻辑/物理):512 字节 / 512 字节

I/O 大小(最小/最佳):512 字节 / 512 字节

磁盘 /dev/sda:21.5 GB, 21474836480 字节,41943040 个扇区

Units = 扇区 of 1 * 512 = 512 bytes

扇区大小(逻辑/物理):512 字节 / 512 字节

I/O 大小(最小/最佳):512 字节 / 512 字节

磁盘标签类型:dos

磁盘标识符:0x000c3d86

设备 Boot Start End Blocks Id System

/dev/sda1 * 2048 2099199 1048576 83 Linux

/dev/sda2 2099200 41943039 19921920 8e Linux LVM

磁盘 /dev/mapper/centos-root:18.2 GB, 18249416704 字节,35643392 个扇区

Units = 扇区 of 1 * 512 = 512 bytes

扇区大小(逻辑/物理):512 字节 / 512 字节

I/O 大小(最小/最佳):512 字节 / 512 字节

磁盘 /dev/mapper/centos-swap:2147 MB, 2147483648 字节,4194304 个扇区

Units = 扇区 of 1 * 512 = 512 bytes

扇区大小(逻辑/物理):512 字节 / 512 字节

I/O 大小(最小/最佳):512 字节 / 512 字节

[root@linux-node1 ~]# pvcreate /dev/sdb

Physical volume "/dev/sdb" successfully created.

[root@linux-node1 ~]# vgcreate cinder-volumes /dev/sdb

Volume group "cinder-volumes" successfully created

[root@linux-node1 ~]# vgdisplay # verify that the volume group was created

--- Volume group ---

VG Name cinder-volumes

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 1

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 0

Open LV 0

Max PV 0

Cur PV 1

Act PV 1

VG Size <25.00 GiB

PE Size 4.00 MiB

Total PE 6399

Alloc PE / Size 0 / 0

Free PE / Size 6399 / <25.00 GiB

VG UUID yNfh0o-3HwS-tUnt-KOfv-CjSf-Bisa-bZuN2f

--- Volume group ---

VG Name centos

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 3

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 2

Open LV 2

Max PV 0

Cur PV 1

Act PV 1

VG Size <19.00 GiB

PE Size 4.00 MiB

Total PE 4863

Alloc PE / Size 4863 / <19.00 GiB

Free PE / Size 0 / 0

VG UUID chtJNI-2SnD-3lRy-YJR2-lC4B-kwdn-xOmThi
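The "<25.00 GiB" reported for cinder-volumes can be checked with a little arithmetic on the figures from the fdisk and vgdisplay output above. The disk is exactly 25 GiB, but the volume group only counts whole 4 MiB extents. The few MiB that are missing are presumably the space LVM sets aside for its metadata plus rounding down to whole extents; that explanation is an assumption, not something the output states.

```shell
#!/bin/sh
# Figures taken from the fdisk and vgdisplay output above.
disk_bytes=26843545600   # size of /dev/sdb reported by fdisk
pe_mib=4                 # PE Size in MiB
total_pe=6399            # Total PE of cinder-volumes

disk_mib=$(( disk_bytes / 1024 / 1024 ))
vg_mib=$(( total_pe * pe_mib ))

echo "disk: ${disk_mib} MiB"                  # 25600 MiB, exactly 25 GiB
echo "vg:   ${vg_mib} MiB"                    # 25596 MiB, just under 25 GiB
echo "diff: $(( disk_mib - vg_mib )) MiB"     # 4 MiB
```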

vim /etc/lvm/lvm.conf

devices {

filter = [ "a/sdb/", "r/.*/"]

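If the storage node uses LVM on the operating system disk, the associated device must also be added to the filter. For example, if the /dev/sda device contains the operating system, which is the case when the storage node and the controller node are the same machine, replace the filter above with the following: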

filter = [ "a/sda/", "a/sdb/", "r/.*/"]

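Similarly, if the compute nodes use LVM on the operating system disk, the filter in /etc/lvm/lvm.conf on those nodes must also be modified so that it includes only the operating system disk. For example, if the /dev/sda device contains the operating system: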

filter = [ "a/sda/", "r/.*/"]
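All three filter lines follow the same pattern: one accept rule (`a/.../`) per device, then a final rule that rejects everything else. A small sketch that builds the line from a list of device names makes the pattern explicit. The function name `lvm_filter` is made up for illustration, and the output still has to be placed in the `devices` section of /etc/lvm/lvm.conf by hand.

```shell
#!/bin/sh
# Build an lvm.conf filter line that accepts the named devices and
# rejects every other device.
lvm_filter() {
    line='filter = ['
    for dev in "$@"; do
        line="${line} \"a/${dev}/\","
    done
    printf '%s "r/.*/"]\n' "$line"
}

# Usage:
#   lvm_filter sdb        # storage node, OS disk not on LVM
#   lvm_filter sda sdb    # storage node with the OS on LVM on /dev/sda
#   lvm_filter sda        # compute node with the OS on LVM on /dev/sda
```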

yum install openstack-cinder targetcli python-keystone

Note: targetcli is the tool that manages iSCSI.

vim /etc/cinder/cinder.conf

[database]

connection = mysql+pymysql://cinder:cinder@192.168.1.230/cinder

[DEFAULT]

transport_url = rabbit://openstack:openstack@192.168.1.230

auth_strategy = keystone

# Enable the lvm back end. The name is arbitrary, but it must match the name of the [lvm] section below: whatever is written inside the [ ] goes here.
enabled_backends = lvm

glance_api_servers = http://192.168.1.230:9292

[keystone_authtoken]

auth_uri = http://192.168.1.230:5000

auth_url = http://192.168.1.230:35357

memcached_servers = 192.168.1.230:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = cinder

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

iscsi_protocol = iscsi

# lioadm is used as the iSCSI management tool.
iscsi_helper = lioadm

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp
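Because every name in enabled_backends must have a section of the same name, a mismatch is worth checking before starting the service. The following sketch prints each back-end name that has no matching section. The function name `missing_backend_sections` is an assumption for illustration, and it expects the setting on a single line with no inline comment.

```shell
#!/bin/sh
# Print every name listed in enabled_backends that has no matching
# [section] in the file; exit non-zero if any name is missing.
missing_backend_sections() {
    awk '
        /^\[.*\]$/ { sections[substr($0, 2, length($0) - 2)] = 1 }
        /^enabled_backends[ \t]*=/ {
            value = $0
            sub(/^[^=]*=[ \t]*/, "", value)
            n = split(value, names, /[ \t]*,[ \t]*/)
        }
        END {
            for (i = 1; i <= n; i++)
                if (!(names[i] in sections)) { print names[i]; bad = 1 }
            exit (bad ? 1 : 0)
        }
    ' "$1"
}

# Usage:
#   missing_backend_sections /etc/cinder/cinder.conf && echo "back ends OK"
```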

[root@linux-node1 ~]# cat /etc/cinder/cinder.conf | grep -v "#" | grep -v "^$"

[DEFAULT]

transport_url = rabbit://openstack:openstack@192.168.1.230

auth_strategy = keystone

enabled_backends = lvm

glance_api_servers = http://192.168.1.230:9292

iscsi_ip_address = 192.168.1.230

[backend]

[backend_defaults]

[barbican]

[brcd_fabric_example]

[cisco_fabric_example]

[coordination]

[cors]

[database]

connection = mysql+pymysql://cinder:cinder@192.168.1.230/cinder

[fc-zone-manager]

[healthcheck]

[key_manager]

[keystone_authtoken]

auth_uri = http://192.168.1.230:5000

auth_url = http://192.168.1.230:35357

memcached_servers = 192.168.1.230:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = cinder

[matchmaker_redis]

[nova]

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[profiler]

[ssl]

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

iscsi_protocol = iscsi

iscsi_helper = lioadm

systemctl enable openstack-cinder-volume.service target.service

systemctl start openstack-cinder-volume.service target.service

ps aux | grep cinder

[root@linux-node1 ~]# openstack volume service list

+------------------+-----------------+------+---------+-------+----------------------------+

| Binary | Host | Zone | Status | State | Updated At |

+------------------+-----------------+------+---------+-------+----------------------------+

| cinder-scheduler | linux-node1 | nova | enabled | up | 2019-07-11T15:23:03.000000 |

| cinder-volume | linux-node1@lvm | nova | enabled | up | 2019-07-11T15:23:03.000000 |

+------------------+-----------------+------+---------+-------+----------------------------+
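The same check can be scripted by asking the client for plain values and flagging any service whose State is not `up`. This is a sketch that assumes the column names shown in the table above; the function name `down_volume_services` is made up for illustration.

```shell
#!/bin/sh
# Read "Binary Host State" rows on stdin, print every service that is not
# "up", and exit non-zero if there is at least one.
down_volume_services() {
    awk '$3 != "up" { print $1 " on " $2; bad = 1 } END { exit (bad ? 1 : 0) }'
}

# Usage:
#   openstack volume service list -f value -c Binary -c Host -c State | down_volume_services
```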

Install and configure the backup service


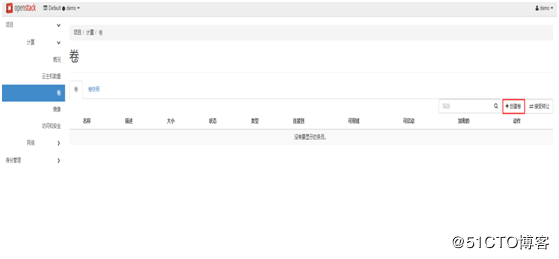

6. Create a volume (cloud disk) and attach it

(1) Open the dashboard, go to Volumes, and click "Create Volume"

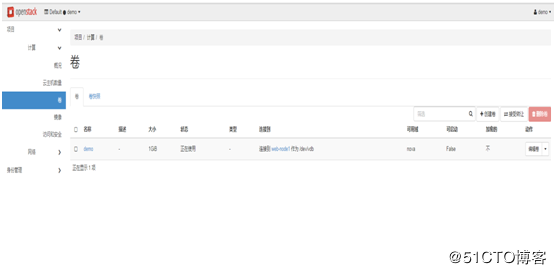

(2) Attach the volume to an instance: "Edit Volume" --> "Manage Attachments" --> "Attach to Instance"

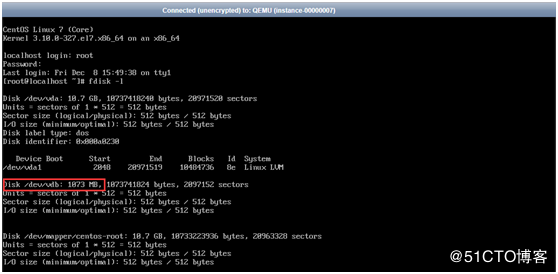

(3) Log in to the instance and check that the disk is attached

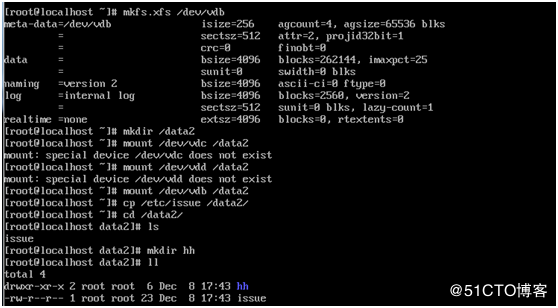

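fdisk -l shows that the 1 GB volume was attached successfully. Format the disk and write some data to it.

Tip: when several instances need to use this volume in turn, first umount the disk inside the instance, then go to "Manage Attachments" --> "Detach Volume". Detaching the volume does not lose the data on it. When the volume is attached to another instance it does not need to be formatted again and can be mounted directly.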
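The dashboard steps above have a command-line equivalent: `openstack volume create` and `openstack server add volume`. The sketch below only prints the two commands so they can be reviewed before running. The function name `volume_attach_cmds` and the names `demo-vm` and `data-vol` are placeholders, not names from this environment.

```shell
#!/bin/sh
# Print the CLI equivalent of the dashboard steps: create a volume of the
# given size in GB and attach it to the given instance.
# Arguments: instance name, volume name, size in GB (all placeholders).
volume_attach_cmds() {
    server="$1"; volume="$2"; size_gb="$3"
    printf 'openstack volume create --size %s %s\n' "$size_gb" "$volume"
    printf 'openstack server add volume %s %s\n' "$server" "$volume"
}

# Usage:
#   volume_attach_cmds demo-vm data-vol 1
#   volume_attach_cmds demo-vm data-vol 1 | sh
# To detach later: openstack server remove volume demo-vm data-vol
```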

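Storage types available to a storage node

1. Use the local disk only.

2. Use the local disk for the system plus a volume (data disk) backed by iSCSI, NFS, GlusterFS, or Ceph. Production environments use the last two.

Create a volume --> edit the volume --> assign it to an instance --> check inside the instance that the disk has appeared --> mkfs.xfs /dev/vdc --> mount /dev/vdc /data2/

Note: a volume cannot be deleted while it is in use. To move a volume to another instance, first umount it in the original instance, then detach the volume, and then attach it to the new instance. The new instance can use the data on the volume directly.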
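Since a volume that was formatted once must not be formatted again after it is moved, it helps to let the instance decide. `blkid -o value -s TYPE` prints the filesystem type of a device and prints nothing when the device has no filesystem yet. The sketch below turns that into a format-or-mount decision. The function name `disk_action` is made up for illustration, and the device name inside a new instance may differ from /dev/vdc.

```shell
#!/bin/sh
# Decide what to do with a newly attached disk, given the filesystem type
# that blkid reports for it (empty when the disk has no filesystem yet).
disk_action() {
    if [ -z "$1" ]; then
        echo format
    else
        echo mount
    fi
}

# Usage inside the instance (device and mount point as in the text):
#   case "$(disk_action "$(blkid -o value -s TYPE /dev/vdc)")" in
#       format) mkfs.xfs /dev/vdc && mount /dev/vdc /data2/ ;;
#       mount)  mount /dev/vdc /data2/ ;;
#   esac
```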

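In the setup above, the data files are all stored locally on the server. The following will show how to use an NFS share as the OpenStack back-end storage.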
