16. Running a ZooKeeper cluster on k8s

Building the ZooKeeper base image

Dockerfile

root@k8s-deploy-01:/opt/Dockerfile/apps/zookeeper# cat Dockerfile

FROM xmtx.harbor.com/baseimages/slim_java:8

ENV ZK_VERSION=3.4.14
ADD repositories /etc/apk/repositories

# Copy the ZooKeeper tarball, its signature, and the KEYS file from the build context
COPY zookeeper-3.4.14.tar.gz /tmp/zk.tgz
COPY zookeeper-3.4.14.tar.gz.asc /tmp/zk.tgz.asc
COPY KEYS /tmp/KEYS

RUN apk add --no-cache --virtual .build-deps \
      ca-certificates \
      gnupg \
      tar \
      wget && \
    #
    # Install dependencies
    apk add --no-cache \
      bash && \
    #
    # Verify the signature
    export GNUPGHOME="$(mktemp -d)" && \
    gpg -q --batch --import /tmp/KEYS && \
    gpg -q --batch --no-auto-key-retrieve --verify /tmp/zk.tgz.asc /tmp/zk.tgz && \
    #
    # Set up directories
    mkdir -p /zookeeper/data /zookeeper/wal /zookeeper/log && \
    #
    # Install
    tar -x -C /zookeeper --strip-components=1 --no-same-owner -f /tmp/zk.tgz && \
    #
    # Slim down
    cd /zookeeper && \
    cp dist-maven/zookeeper-${ZK_VERSION}.jar . && \
    rm -rf \
      *.txt \
      *.xml \
      bin/README.txt \
      bin/*.cmd \
      conf/* \
      contrib \
      dist-maven \
      docs \
      lib/*.txt \
      lib/cobertura \
      lib/jdiff \
      recipes \
      src \
      zookeeper-*.asc \
      zookeeper-*.md5 \
      zookeeper-*.sha1 && \
    #
    # Clean up
    apk del .build-deps && \
    rm -rf /tmp/* "$GNUPGHOME"

COPY conf /zookeeper/conf/
COPY bin/zkReady.sh /zookeeper/bin/
COPY entrypoint.sh /

ENV PATH=/zookeeper/bin:${PATH} \
    ZOO_LOG_DIR=/zookeeper/log \
    ZOO_LOG4J_PROP="INFO, CONSOLE, ROLLINGFILE" \
    JMXPORT=9010

ENTRYPOINT [ "/entrypoint.sh" ]
CMD [ "zkServer.sh", "start-foreground" ]
EXPOSE 2181 2888 3888 9010

Cluster configuration script

root@k8s-deploy-01:/opt/Dockerfile/apps/zookeeper# cat entrypoint.sh

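#!/bin/bash

# Write this node's ID (defaults to 1 when MYID is unset)
echo "${MYID:-1}" > /zookeeper/data/myid

# Append one server.N line to zoo.cfg per comma-separated host in SERVERS
if [ -n "$SERVERS" ]; then
  IFS=\, read -a servers <<<"$SERVERS"
  for i in "${!servers[@]}"; do
    printf "\nserver.%i=%s:2888:3888" "$((1 + $i))" "${servers[$i]}" >> /zookeeper/conf/zoo.cfg
  done
fi

cd /zookeeper
exec "$@"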
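To preview what the entrypoint appends to zoo.cfg, the same loop can be run against a sample SERVERS value and printed to stdout instead of being written to the file. This is a minimal sketch: `render_servers` is a hypothetical helper name, not part of the image.

```shell
#!/bin/bash
# render_servers (hypothetical helper): print the server.N lines that
# entrypoint.sh would append to zoo.cfg for a comma-separated host list.
render_servers() {
  local servers i
  IFS=, read -r -a servers <<<"$1"
  for i in "${!servers[@]}"; do
    # Server IDs start at 1; 2888 is the follower port, 3888 the election port.
    printf 'server.%d=%s:2888:3888\n' "$((i + 1))" "${servers[$i]}"
  done
}

render_servers "zookeeper1,zookeeper2,zookeeper3"
# prints:
# server.1=zookeeper1:2888:3888
# server.2=zookeeper2:2888:3888
# server.3=zookeeper3:2888:3888
```

The position of each host in SERVERS determines its server ID, so the MYID passed to each pod has to match that host's position in the list.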

Configuration file

root@k8s-deploy-01:/opt/Dockerfile/apps/zookeeper/conf# cat zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/zookeeper/data

dataLogDir=/zookeeper/wal

#snapCount=100000

autopurge.purgeInterval=1

clientPort=2181

Readiness check script

root@k8s-deploy-01:/opt/Dockerfile/apps/zookeeper/bin# cat zkReady.sh

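#!/bin/bash

# zkServer.sh status prints the role as e.g. "Mode: leader" or "Mode: follower"
/zookeeper/bin/zkServer.sh status | grep -E 'Mode: (standalone|leader|follower|observer|leading|following|observing)'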

Build the image

root@k8s-deploy-01:/opt/Dockerfile/apps/zookeeper# docker build -t xmtx.harbor.com/k8s-base/zookeeper:v1 .

root@k8s-deploy-01:/opt/Dockerfile/apps/zookeeper# docker push xmtx.harbor.com/k8s-base/zookeeper:v1

Creating the k8s resources

PV

root@k8s-master-01:/opt/yaml/project/zookeeper# cat zookeeper-persistentvolume.yaml

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-pv-1
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 172.31.3.140
    path: /opt/nfs/zookeeper/zookeeper1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-pv-2
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 172.31.3.140
    path: /opt/nfs/zookeeper/zookeeper2
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-pv-3
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 172.31.3.140
    path: /opt/nfs/zookeeper/zookeeper3
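The three PersistentVolume objects differ only in their index, so they can also be generated from one template. The following is a sketch under that assumption: `gen_zookeeper_pvs` is a hypothetical helper name, and `NFS_SERVER` and `NFS_ROOT` simply mirror the values in the manifest above. The exported directories are assumed to already exist on the NFS server.

```shell
#!/bin/bash
# Sketch: print the three PersistentVolume manifests from one template.
# NFS_SERVER and NFS_ROOT mirror the manifest values; adjust for your environment.
NFS_SERVER="172.31.3.140"
NFS_ROOT="/opt/nfs/zookeeper"

# gen_zookeeper_pvs is a hypothetical helper, not part of kubectl.
gen_zookeeper_pvs() {
  local i
  for i in 1 2 3; do
    cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-pv-$i
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: $NFS_SERVER
    path: $NFS_ROOT/zookeeper$i
EOF
  done
}

gen_zookeeper_pvs
```

The output can be written to a file or piped straight into the cluster with `gen_zookeeper_pvs | kubectl apply -f -`.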

PVC

root@k8s-master-01:/opt/yaml/project/zookeeper# cat zookeeper-persistentvolumeclaim.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-pvc-1
  namespace: myserver
spec:
  accessModes:
    - ReadWriteOnce
  volumeName: zookeeper-pv-1
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-pvc-2
  namespace: myserver
spec:
  accessModes:
    - ReadWriteOnce
  volumeName: zookeeper-pv-2
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-pvc-3
  namespace: myserver
spec:
  accessModes:
    - ReadWriteOnce
  volumeName: zookeeper-pv-3
  resources:
    requests:
      storage: 10Gi

Services and Deployments

root@k8s-master-01:/opt/yaml/project/zookeeper# cat zookeeper.yaml

apiVersion: v1
kind: Service
metadata:
  name: zookeeper
  namespace: myserver
spec:
  ports:
    - name: client
      port: 2181
  selector:
    app: zookeeper
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper1
  namespace: myserver
spec:
  type: NodePort
  ports:
    - name: client
      port: 2181
      nodePort: 32181
    - name: followers
      port: 2888
    - name: election
      port: 3888
  selector:
    app: zookeeper
    server-id: "1"
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper2
  namespace: myserver
spec:
  type: NodePort
  ports:
    - name: client
      port: 2181
      nodePort: 32182
    - name: followers
      port: 2888
    - name: election
      port: 3888
  selector:
    app: zookeeper
    server-id: "2"
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper3
  namespace: myserver
spec:
  type: NodePort
  ports:
    - name: client
      port: 2181
      nodePort: 32183
    - name: followers
      port: 2888
    - name: election
      port: 3888
  selector:
    app: zookeeper
    server-id: "3"

---

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper1
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "1"
    spec:
      # A single volumes key: the PVC that backs /zookeeper/data
      volumes:
        - name: zookeeper-pvc-1
          persistentVolumeClaim:
            claimName: zookeeper-pvc-1
      containers:
        - name: server
          image: xmtx.harbor.com/k8s-base/zookeeper:v1
          imagePullPolicy: Always
          env:
            - name: MYID
              value: "1"
            - name: SERVERS
              value: "zookeeper1,zookeeper2,zookeeper3"
            - name: JVMFLAGS
              value: "-Xmx2G"
          ports:
            - containerPort: 2181
            - containerPort: 2888
            - containerPort: 3888
          volumeMounts:
            - mountPath: "/zookeeper/data"
              name: zookeeper-pvc-1

---

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper2
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "2"
    spec:
      # A single volumes key: the PVC that backs /zookeeper/data
      volumes:
        - name: zookeeper-pvc-2
          persistentVolumeClaim:
            claimName: zookeeper-pvc-2
      containers:
        - name: server
          image: xmtx.harbor.com/k8s-base/zookeeper:v1
          imagePullPolicy: Always
          env:
            - name: MYID
              value: "2"
            - name: SERVERS
              value: "zookeeper1,zookeeper2,zookeeper3"
            - name: JVMFLAGS
              value: "-Xmx2G"
          ports:
            - containerPort: 2181
            - containerPort: 2888
            - containerPort: 3888
          volumeMounts:
            - mountPath: "/zookeeper/data"
              name: zookeeper-pvc-2

---

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper3
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "3"
    spec:
      # A single volumes key: the PVC that backs /zookeeper/data
      volumes:
        - name: zookeeper-pvc-3
          persistentVolumeClaim:
            claimName: zookeeper-pvc-3
      containers:
        - name: server
          image: xmtx.harbor.com/k8s-base/zookeeper:v1
          imagePullPolicy: Always
          env:
            - name: MYID
              value: "3"
            - name: SERVERS
              value: "zookeeper1,zookeeper2,zookeeper3"
            - name: JVMFLAGS
              value: "-Xmx2G"
          ports:
            - containerPort: 2181
            - containerPort: 2888
            - containerPort: 3888
          volumeMounts:
            - mountPath: "/zookeeper/data"
              name: zookeeper-pvc-3

Create the resources

root@k8s-master-01:/opt/yaml/project/zookeeper# kubectl apply -f zookeeper-persistentvolume.yaml

persistentvolume/zookeeper-pv-1 created

persistentvolume/zookeeper-pv-2 created

persistentvolume/zookeeper-pv-3 created

root@k8s-master-01:/opt/yaml/project/zookeeper# kubectl apply -f zookeeper-persistentvolumeclaim.yaml

persistentvolumeclaim/zookeeper-pvc-1 created

persistentvolumeclaim/zookeeper-pvc-2 created

persistentvolumeclaim/zookeeper-pvc-3 created

root@k8s-master-01:/opt/yaml/project/zookeeper# kubectl apply -f zookeeper.yaml

service/zookeeper created

service/zookeeper1 created

service/zookeeper2 created

service/zookeeper3 created

deployment.apps/zookeeper1 created

deployment.apps/zookeeper2 created

deployment.apps/zookeeper3 created

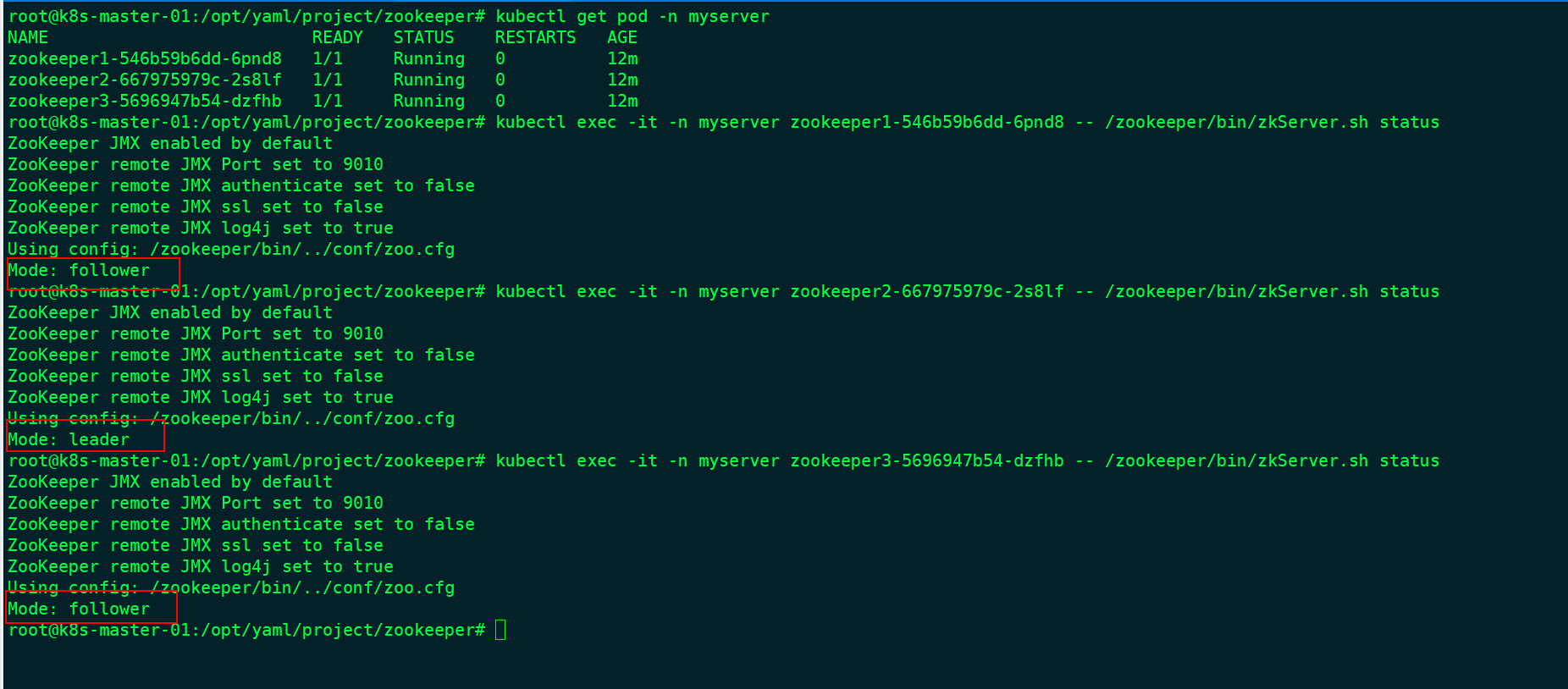
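The pods may need a moment to become ready after `kubectl apply`. The sketch below waits for each of the three Deployments with `kubectl rollout status`. The helper name `wait_for_zookeeper`, the `KUBECTL` override variable, and the 120-second timeout are assumptions for illustration, not part of the original setup.

```shell
#!/bin/bash
# KUBECTL can be overridden (e.g. KUBECTL=echo) to dry-run the commands.
KUBECTL="${KUBECTL:-kubectl}"

# wait_for_zookeeper (hypothetical helper): block until zookeeper1..3
# have finished rolling out in the given namespace, or fail on timeout.
wait_for_zookeeper() {
  local ns="$1" i
  for i in 1 2 3; do
    "$KUBECTL" rollout status "deployment/zookeeper$i" -n "$ns" --timeout=120s || return 1
  done
}

# Usage against a live cluster:
#   wait_for_zookeeper myserver
```

Setting `KUBECTL=echo` before calling the function prints the commands instead of running them, which is a convenient way to check them first.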

Check

root@k8s-master-01:/opt/yaml/project/zookeeper# kubectl get pod -n myserver

NAME                          READY   STATUS    RESTARTS   AGE
zookeeper1-546b59b6dd-6pnd8   1/1     Running   0          17s
zookeeper2-667975979c-2s8lf   1/1     Running   0          17s
zookeeper3-5696947b54-dzfhb   1/1     Running   0          17s

root@k8s-master-01:/opt/yaml/project/zookeeper# kubectl get pvc -n myserver

NAME              STATUS   VOLUME           CAPACITY   ACCESS MODES   STORAGECLASS   AGE
zookeeper-pvc-1   Bound    zookeeper-pv-1   20Gi       RWO                           5m9s
zookeeper-pvc-2   Bound    zookeeper-pv-2   20Gi       RWO                           5m9s
zookeeper-pvc-3   Bound    zookeeper-pv-3   20Gi       RWO                           5m9s

root@k8s-master-01:/opt/yaml/project/zookeeper# kubectl get pv

NAME             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                      STORAGECLASS   REASON   AGE
zookeeper-pv-1   20Gi       RWO            Retain           Bound    myserver/zookeeper-pvc-1                           5m16s
zookeeper-pv-2   20Gi       RWO            Retain           Bound    myserver/zookeeper-pvc-2                           5m16s
zookeeper-pv-3   20Gi       RWO            Retain           Bound    myserver/zookeeper-pvc-3                           5m16s
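The Bound check above can also be scripted. This is a small sketch, assuming the column layout of `kubectl get pvc --no-headers`, where the second column is STATUS. `count_unbound` is a hypothetical helper name.

```shell
#!/bin/bash
# count_unbound (hypothetical helper): read `kubectl get pvc --no-headers`
# style lines on stdin and print how many claims are not in the Bound state.
count_unbound() {
  awk '$2 != "Bound" { n++ } END { print n + 0 }'
}

# Usage against a live cluster:
#   kubectl get pvc -n myserver --no-headers | count_unbound
```

A result of 0 means every claim in the namespace is bound to a volume.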

Verify cluster mode