PyTorch Sequence Models and LSTM

SEQUENCE MODELS AND LONG SHORT-TERM MEMORY NETWORKS

FROM https://pytorch.org/tutorials/beginner/nlp/sequence_models_tutorial.html#lstms-in-pytorch

LSTMs in PyTorch

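Before we start, a few points need to be clear:

1) PyTorch's LSTM expects all of its inputs to be 3D tensors;

2) The order of the tensor's axes matters: axis 1 is the sequence itself; axis 2 indexes instances in the mini-batch; axis 3 indexes the elements of the input;

We have not discussed mini-batching yet, so ignore it for now and assume the second axis always has size 1.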
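The axis convention can be checked directly by inspecting tensor shapes. The following is a minimal sketch; the sizes (sequence length 5, hidden size 4) are arbitrary values chosen for illustration and are not taken from the tutorial.

```python
import torch
import torch.nn as nn

# Illustrative sizes only (assumptions, not values from the tutorial).
seq_len, batch_size, input_size, hidden_size = 5, 1, 3, 4

lstm = nn.LSTM(input_size, hidden_size)  # batch_first is False by default

# Axis 1: position in the sequence; axis 2: batch; axis 3: input features.
x = torch.randn(seq_len, batch_size, input_size)
out, (h_n, c_n) = lstm(x)  # initial states default to zeros when omitted

print(x.shape)    # torch.Size([5, 1, 3])
print(out.shape)  # torch.Size([5, 1, 4])
print(h_n.shape)  # torch.Size([1, 1, 4])
print(c_n.shape)  # torch.Size([1, 1, 4])
```

The output keeps the sequence and batch axes of the input, while the last axis becomes the hidden size.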

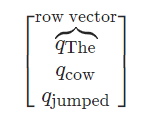

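If we want to run the sequence model over the sentence "The cow jumped",

our input should look like a matrix with one row vector per word, in sentence order.

Remember, though, that there is an additional 2nd dimension of size 1.

In addition, you can go through the sequence one element at a time, in which case the first axis will have size 1 as well.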
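As a rough sketch of how such an input could be assembled: the word vectors below are random placeholders and `embedding_dim = 4` is a hypothetical size, since the text does not say where the vectors come from.

```python
import torch

embedding_dim = 4  # hypothetical size, chosen only for illustration
sentence = "The cow jumped".split()

# Placeholder word vectors: one random row vector per word (an assumption).
word_vectors = {word: torch.randn(embedding_dim) for word in sentence}

# Stack the rows in sentence order: shape (3, embedding_dim).
matrix = torch.stack([word_vectors[w] for w in sentence])

# Insert the size-1 batch axis as the second axis: shape (3, 1, embedding_dim).
inputs = matrix.unsqueeze(1)
print(inputs.shape)  # torch.Size([3, 1, 4])

# Feeding one element at a time makes the first axis size 1 as well.
first_step = inputs[0:1]
print(first_step.shape)  # torch.Size([1, 1, 4])
```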

Example:

1) Import the packages

```python
# Author: Robert Guthrie

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

torch.manual_seed(1)
```

2) Create the LSTM and an input sequence

```python
lstm = nn.LSTM(3, 3)  # Input dim is 3, output dim is 3
inputs = [torch.randn(1, 3) for _ in range(5)]  # make a sequence of length 5
```

inputs (each element has 3 features):

```
[tensor([[0.8516, 1.4533, 0.5523]]), tensor([[ 0.7652,  0.0448, -0.6949]]),
 tensor([[-1.0071, -0.8920,  0.5949]]), tensor([[0.5701, 0.2354, 2.3964]]),
 tensor([[-0.2667,  1.1217,  1.7860]])]
```

```python
# initialize the hidden state (a tuple of hidden state and cell state).
hidden = (torch.randn(1, 1, 3),
          torch.randn(1, 1, 3))
for i in inputs:
    # Step through the sequence one element at a time.
    # after each step, hidden contains the hidden state.
    out, hidden = lstm(i.view(1, 1, -1), hidden)
    # out: tensor([[[-0.3600, 0.0893, 0.0215]]], grad_fn=<StackBackward>)

# alternatively, we can do the entire sequence all at once.
# the first value returned by LSTM is all of the hidden states throughout
# the sequence. the second is just the most recent hidden state
# (compare the last slice of "out" with "hidden" below, they are the same)
# The reason for this is that:
# "out" will give you access to all hidden states in the sequence
# "hidden" will allow you to continue the sequence and backpropagate,
# by passing it as an argument to the lstm at a later time
# Add the extra 2nd dimension
inputs = torch.cat(inputs).view(len(inputs), 1, -1)
hidden = (torch.randn(1, 1, 3), torch.randn(1, 1, 3))  # clean out hidden state
out, hidden = lstm(inputs, hidden)  # inputs has shape (5, 1, 3)
print(out)
print(hidden)
```

out:

```
tensor([[[-0.0187,  0.1713, -0.2944]],

        [[-0.3521,  0.1026, -0.2971]],

        [[-0.3191,  0.0781, -0.1957]],

        [[-0.1634,  0.0941, -0.1637]],

        [[-0.3368,  0.0959, -0.0538]]], grad_fn=<StackBackward>)
(tensor([[[-0.3368,  0.0959, -0.0538]]], grad_fn=<StackBackward>), tensor([[[-0.9825,  0.4715, -0.0633]]], grad_fn=<StackBackward>))
```
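The claim in the code comments, that the last slice of `out` equals the returned hidden state, can be checked numerically. The sketch below also compares the step-by-step loop with the single call when both start from the same initial state; the seed and the tolerance `atol=1e-5` are arbitrary choices, not part of the tutorial.

```python
import torch
import torch.nn as nn

torch.manual_seed(1)
lstm = nn.LSTM(3, 3)
inputs = torch.randn(5, 1, 3)  # sequence length 5, batch 1, 3 features
h0 = torch.randn(1, 1, 3)
c0 = torch.randn(1, 1, 3)

# Whole sequence in one call.
out_all, (h_all, c_all) = lstm(inputs, (h0, c0))

# One element at a time, carrying the state forward.
hidden = (h0, c0)
step_outputs = []
for x in inputs:  # each x has shape (1, 3)
    out, hidden = lstm(x.view(1, 1, -1), hidden)
    step_outputs.append(out)
out_steps = torch.cat(step_outputs)  # shape (5, 1, 3)

# Last slice of "out" versus the final hidden state.
last_matches_hidden = torch.allclose(out_all[-1], h_all[0])
# All-at-once outputs versus step-by-step outputs.
same_outputs = torch.allclose(out_all, out_steps, atol=1e-5)
print(last_matches_hidden, same_outputs)
```

Both comparisons are expected to agree for a single-layer, unidirectional LSTM like this one; with several layers or a bidirectional LSTM, the relationship between `out` and the hidden state is less direct.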

The sequence length can vary;

you can feed in a sequence of length 1, or feed in a sequence of length 5 all at once;
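A small sketch of this flexibility, using arbitrary lengths (1, 5, and 8) and random data as assumptions: the same `nn.LSTM` module handles each length, and a sequence can be split into chunks as long as the returned state is passed into the next call.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
lstm = nn.LSTM(3, 3)

# The same module accepts sequences of different lengths.
shapes = []
for seq_len in (1, 5, 8):
    out, _ = lstm(torch.randn(seq_len, 1, 3))
    shapes.append(tuple(out.shape))
print(shapes)  # [(1, 1, 3), (5, 1, 3), (8, 1, 3)]

# A sequence can also be split: process 2 steps, then continue with
# the remaining 3 by passing the returned state back in.
seq = torch.randn(5, 1, 3)
out_full, _ = lstm(seq)
out_a, state = lstm(seq[:2])
out_b, _ = lstm(seq[2:], state)
chunks_match = torch.allclose(out_full, torch.cat([out_a, out_b]), atol=1e-5)
print(chunks_match)
```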

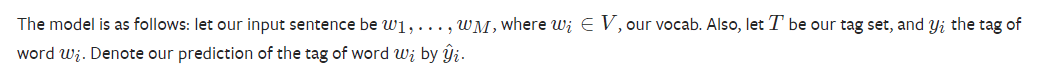

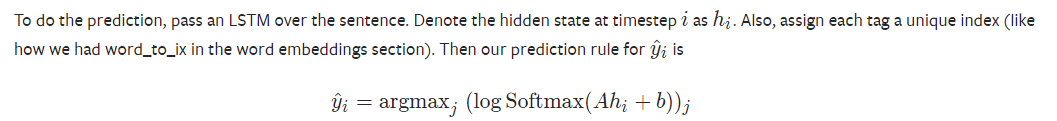

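The model's input is a sequence of words w, and T is the set of target tags; for each word w, the model predicts its label y;

This is a structured prediction model: the output is a sequence y.

The predicted tag for a word is the one with the highest score, that is, the index of the maximum score, so the score vector for each word must have |T| dimensions;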
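To illustrate the prediction rule, here is a minimal sketch with hand-written scores for a three-word sentence. The score values are made up for illustration; the tag set DET/NN/V is the one used in this post's toy data.

```python
import torch

tag_to_ix = {"DET": 0, "NN": 1, "V": 2}
ix_to_tag = {ix: tag for tag, ix in tag_to_ix.items()}

# Made-up scores: one row per word, |T| = 3 columns (one per tag).
scores = torch.tensor([[2.0, 0.1, -1.0],
                       [0.3, 1.5, 0.2],
                       [-0.5, 0.0, 3.0]])
log_probs = torch.log_softmax(scores, dim=1)

# The predicted tag for each word is the index of the row maximum.
predicted_ix = log_probs.argmax(dim=1)
predicted_tags = [ix_to_tag[i] for i in predicted_ix.tolist()]
print(predicted_ix)    # tensor([0, 1, 2])
print(predicted_tags)  # ['DET', 'NN', 'V']
```

Because `log_softmax` is monotonic within each row, taking the argmax of the raw scores would give the same tags.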

Data preparation:

Convert both the words and the labels to numbers;

```python
def prepare_sequence(seq, to_ix):
    idxs = [to_ix[w] for w in seq]
    return torch.tensor(idxs, dtype=torch.long)


training_data = [
    # Tags are: DET - determiner; NN - noun; V - verb
    # For example, the word "The" is a determiner
    ("The dog ate the apple".split(), ["DET", "NN", "V", "DET", "NN"]),
    ("Everybody read that book".split(), ["NN", "V", "DET", "NN"])
]
word_to_ix = {}
# For each words-list (sentence) and tags-list in each tuple of training_data
for sent, tags in training_data:
    for word in sent:
        if word not in word_to_ix:  # word has not been assigned an index yet
            word_to_ix[word] = len(word_to_ix)  # Assign each word with a unique index
print(word_to_ix)
tag_to_ix = {"DET": 0, "NN": 1, "V": 2}  # Assign each tag with a unique index

# These will usually be more like 32 or 64 dimensional.
# We will keep them small, so we can see how the weights change as we train.
EMBEDDING_DIM = 6
HIDDEN_DIM = 6
```
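For concreteness, this is what the conversion produces on the first training sentence. The sketch repeats the helper and the toy data so that it runs on its own; `dict.setdefault` is used here only as a compact equivalent of the `if word not in word_to_ix` check.

```python
import torch

def prepare_sequence(seq, to_ix):
    return torch.tensor([to_ix[w] for w in seq], dtype=torch.long)

training_data = [
    ("The dog ate the apple".split(), ["DET", "NN", "V", "DET", "NN"]),
    ("Everybody read that book".split(), ["NN", "V", "DET", "NN"]),
]
word_to_ix = {}
for sent, _ in training_data:
    for word in sent:
        # Assign the next free index only if the word is new.
        word_to_ix.setdefault(word, len(word_to_ix))
tag_to_ix = {"DET": 0, "NN": 1, "V": 2}

sentence, tags = training_data[0]
print(prepare_sequence(sentence, word_to_ix))  # tensor([0, 1, 2, 3, 4])
print(prepare_sequence(tags, tag_to_ix))       # tensor([0, 1, 2, 0, 1])
print(len(word_to_ix))                         # 9
```

Note that the lookup is case-sensitive, so "The" and "the" receive different indices, and a word that is not in `word_to_ix` raises a `KeyError`.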

```python
class LSTMTagger(nn.Module):

    def __init__(self, embedding_dim, hidden_dim, vocab_size, tagset_size):
        super(LSTMTagger, self).__init__()
        self.hidden_dim = hidden_dim

        self.word_embeddings = nn.Embedding(vocab_size, embedding_dim)

        # The LSTM takes word embeddings as inputs, and outputs hidden states
        # with dimensionality hidden_dim.
        self.lstm = nn.LSTM(embedding_dim, hidden_dim)

        # The linear layer that maps from hidden state space to tag space
        self.hidden2tag = nn.Linear(hidden_dim, tagset_size)

    def forward(self, sentence):
        embeds = self.word_embeddings(sentence)
        lstm_out, _ = self.lstm(embeds.view(len(sentence), 1, -1))
        tag_space = self.hidden2tag(lstm_out.view(len(sentence), -1))
        tag_scores = F.log_softmax(tag_space, dim=1)
        return tag_scores
```
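It can help to trace the tensor shapes through the forward pass. The sketch below rebuilds the three layers outside the class with the toy sizes used in this post (9 words, 3 tags, dimension 6) and untrained random weights; it is meant only to show the shapes, not the learned behaviour.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

vocab_size, tagset_size = 9, 3   # sizes of the toy data in this post
embedding_dim, hidden_dim = 6, 6

word_embeddings = nn.Embedding(vocab_size, embedding_dim)
lstm = nn.LSTM(embedding_dim, hidden_dim)
hidden2tag = nn.Linear(hidden_dim, tagset_size)

sentence = torch.tensor([0, 1, 2, 3, 4], dtype=torch.long)  # 5 word indices

embeds = word_embeddings(sentence)                        # (5, 6)
lstm_out, _ = lstm(embeds.view(len(sentence), 1, -1))     # (5, 1, 6)
tag_space = hidden2tag(lstm_out.view(len(sentence), -1))  # (5, 3)
tag_scores = F.log_softmax(tag_space, dim=1)              # (5, 3)

for name, t in [("embeds", embeds), ("lstm_out", lstm_out),
                ("tag_space", tag_space), ("tag_scores", tag_scores)]:
    print(name, tuple(t.shape))

# Each row holds log-probabilities, so exp() of a row sums to 1.
rows_sum_to_one = torch.allclose(tag_scores.exp().sum(dim=1), torch.ones(5))
print(rows_sum_to_one)
```

The two `view` calls add the size-1 batch axis before the LSTM and remove it again before the linear layer.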

Model training:

```python
model = LSTMTagger(EMBEDDING_DIM, HIDDEN_DIM, len(word_to_ix), len(tag_to_ix))
loss_function = nn.NLLLoss()
optimizer = optim.SGD(model.parameters(), lr=0.1)

# See what the scores are before training
# Note that element i,j of the output is the score for tag j for word i.
# Here we don't need to train, so the code is wrapped in torch.no_grad()
with torch.no_grad():
    inputs = prepare_sequence(training_data[0][0], word_to_ix)
    tag_scores = model(inputs)
    print(tag_scores)

for epoch in range(300):  # again, normally you would NOT do 300 epochs, it is toy data
    for sentence, tags in training_data:
        # Step 1. Remember that Pytorch accumulates gradients.
        # We need to clear them out before each instance
        model.zero_grad()

        # Step 2. Get our inputs ready for the network, that is, turn them into
        # Tensors of word indices.
        sentence_in = prepare_sequence(sentence, word_to_ix)
        targets = prepare_sequence(tags, tag_to_ix)

        # Step 3. Run our forward pass.
        tag_scores = model(sentence_in)

        # Step 4. Compute the loss, gradients, and update the parameters by
        # calling optimizer.step()
        loss = loss_function(tag_scores, targets)
        loss.backward()
        optimizer.step()

# See what the scores are after training
with torch.no_grad():
    inputs = prepare_sequence(training_data[0][0], word_to_ix)
    tag_scores = model(inputs)

    # The sentence is "the dog ate the apple". i,j corresponds to score for tag j
    # for word i. The predicted tag is the maximum scoring tag.
    # Here, we can see the predicted sequence below is 0 1 2 0 1
    # since 0 is index of the maximum value of row 1,
    # 1 is the index of maximum value of row 2, etc.
    # Which is DET NOUN VERB DET NOUN, the correct sequence!
    print(tag_scores)
```
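Step 1 of the training loop clears the gradients because PyTorch adds each new gradient to the one already stored. The sketch below shows this on a single tensor; the tensor `w` and the quadratic `loss_fn` are hypothetical stand-ins for the model parameters and the loss, and `w.grad.zero_()` plays the role that `model.zero_grad()` plays for the model.

```python
import torch

w = torch.tensor([1.0, 2.0], requires_grad=True)

def loss_fn(w):
    return (w ** 2).sum()  # d(loss)/dw = 2 * w

loss_fn(w).backward()
first = w.grad.clone()
print(first)  # tensor([2., 4.])

# A second backward pass adds to the stored gradient instead of replacing it.
loss_fn(w).backward()
accumulated = w.grad.clone()
print(accumulated)  # tensor([4., 8.])

# Clearing the gradient first gives the gradient of the current step only.
w.grad.zero_()
loss_fn(w).backward()
after_reset = w.grad.clone()
print(after_reset)  # tensor([2., 4.])
```

Without the reset, each `optimizer.step()` would use the sum of the gradients from all previous sentences rather than the gradient of the current one.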