Linear Algebra

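Abstract Algebra

Algebra no longer studies only numbers (real or complex) but more general symbols, which may stand for real numbers, complex numbers, functions, or other objects. The ordinary notion of number is generalized to that of a symbol.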

\(\sigma\)-algebra (\(\sigma\)-field)

[Def] A collection \(\Sigma\) of subsets of a set \(A\) (that is, \(\Sigma\subseteq \mathrm{power}(A)\), where \(\mathrm{power}(A)\) is the power set of \(A\)) is called a **\(\sigma\)-algebra** (or **\(\sigma\)-field**) on \(A\) if it satisfies the following properties:

- Contains the whole set. \(A\in\Sigma\).

- Closure under complement. \(W\in \Sigma\implies (A-W)\in\Sigma\), where \((A-W)\) denotes the complement of \(W\) in \(A\).

- Closure under countable unions and intersections. If \(W_i\in \Sigma\) for \(i=1,2,\dots\), then \(\bigcup_i W_i \in \Sigma\) and \(\bigcap_i W_i \in \Sigma\).

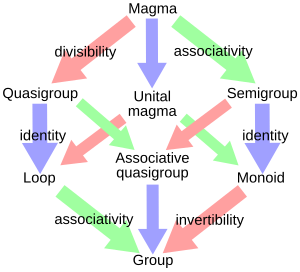

Group

A group is a set together with an operation that combines any two elements into a third. Let \(G\) be a set and \(*\) a binary operation that takes any two elements \(x,y\) to an element denoted \(x*y\). To qualify as a group, the pair \((G, *)\) must satisfy four requirements known as the group axioms:

- Closure. \(\forall x,y: x,y\in G \implies x*y\in G\)

- Identity element (unique). \(\exists e\in G\ \forall x\in G: e*x=x \wedge x*e=x\)

- Inverse element (unique with respect to corresponding element). \(\forall x\in G \exists z\in G: x*z=e \wedge z*x=e\) where \(e\) is the identity element.

- Associativity. \(\forall x,y,z\in G: x*(y*z)=(x*y)*z\)
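For a finite set the four axioms above can be checked by brute force. The following is a minimal sketch in Python; the helper name `is_group` and the modular-arithmetic examples are illustrative assumptions, not part of the original notes.

```python
from itertools import product

def is_group(elements, op):
    """Check the four group axioms by brute force on a finite set."""
    elements = list(elements)
    # Closure: x*y must stay inside the set.
    if any(op(x, y) not in elements for x, y in product(elements, repeat=2)):
        return False
    # Associativity: x*(y*z) == (x*y)*z for all triples.
    if any(op(x, op(y, z)) != op(op(x, y), z)
           for x, y, z in product(elements, repeat=3)):
        return False
    # Identity: some e with e*x == x == x*e for every x.
    identities = [e for e in elements
                  if all(op(e, x) == x and op(x, e) == x for x in elements)]
    if not identities:
        return False
    e = identities[0]
    # Inverse: every x has some z with x*z == e == z*x.
    return all(any(op(x, z) == e and op(z, x) == e for z in elements)
               for x in elements)

n = 5
add_mod_n = lambda x, y: (x + y) % n
mul_mod_n = lambda x, y: (x * y) % n

print(is_group(range(n), add_mod_n))      # integers mod 5 under addition
print(is_group(range(n), mul_mod_n))      # fails: 0 has no multiplicative inverse
print(is_group(range(1, n), mul_mod_n))   # nonzero residues mod the prime 5
```

The second call fails only at the inverse axiom, which is the same reason a field excludes the additive identity when it asks for multiplicative inverses.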

Commutative Group (Abelian Group)

A group \((G, *)\) is abelian (commutative) if it also satisfies commutativity:

- Commutativity. \(\forall x,y\in G: x*y = y*x\)

Semigroup

Axioms:

- Closure.

- Associativity.

A semigroup is an associative magma.

Monoid

A monoid is a set equipped with an associative binary operation and an identity element and the set is closed under the operation.

Formally, let \(M\) be a set, \(*: x,y\mapsto x*y\) be a binary operation, then to qualify as a monoid, \((M, *)\) must satisfy 3 requirements known as the monoid axioms:

- Closure. \(\forall x,y\in M: x*y\in M\)

- Associativity. \(\forall x,y,z\in M: x*(y*z)=(x*y)*z\)

- Identity element (unique). \(\exists e\in M\ \forall x\in M: e*x=x\wedge x*e=x\)

A monoid is a semigroup with a (unique) identity element.

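Magma

A magma is a set \(M\) together with a binary operation \(*: x,y \mapsto x*y\). To qualify as a magma, \((M, *)\) must satisfy the closure axiom:

- Closure. \(\forall x,y: x,y\in M \implies x*y\in M\)

Magmas and groups: an associative magma is a semigroup, a semigroup with an identity element is a monoid, and a monoid in which every element has an inverse is a group.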

Ring

A ring is a set \(R\) together with two operations \(+, \cdot\) (commonly called addition and multiplication), satisfying 3 requirements called ring axioms:

- \(R\) is a commutative group (abelian group) under \(+\) (addition), meaning that:

  - \(\forall x,y\in R: x+y\in R\)

  - \(\forall x,y,z\in R: (x+y)+z=x+(y+z)\)

  - \(\forall x,y\in R: x+y=y+x\)

  - \(\exists 0\in R\ \forall x\in R: x+0=x\) (the additive identity element)

  - \(\forall x\in R\ \exists (-x)\in R: x+ (-x)=0\) (the additive inverse element)

- \(R\) is a monoid under \(\cdot\) (multiplication), meaning that:

  - \(\forall x,y,z\in R: (x\cdot y)\cdot z = x\cdot (y\cdot z)\)

  - \(\exists 1\in R\ \forall x\in R: 1 \cdot x= x \wedge x\cdot 1= x\) (the multiplicative identity element)

- \(\cdot\) (multiplication) is distributive with respect to \(+\) (addition), meaning that:

  - \(\forall x,y,z\in R: x\cdot(y+z)=x\cdot y+ x\cdot z\) (left distributivity)

  - \(\forall x,y,z\in R: (x+y)\cdot z=x\cdot z + y\cdot z\) (right distributivity)

The additive identity and the multiplicative identity are commonly denoted \(0\) and \(1\) respectively.

(In any ring other than the zero ring \(\{0\}\), \(0\neq 1\): the additive identity and the multiplicative identity are distinct.)

Some authors do not require a ring to have a multiplicative identity.

Field

A field \((F, +, \cdot)\) is a set \(F\) equipped with two binary operations \(+: x,y \mapsto x+y\) and \(\cdot: x,y\mapsto x\cdot y\) (one is called addition and the other multiplication; the difference between them shows up in the axioms for inverses and distributivity), satisfying the following axioms:

- Closure under the two operations(addition and multiplication).

- Associativity of the two operations.

- Commutativity of the two operations.

- Identity of addition.

- Identity of multiplication.

- Inverse of addition.

- Inverse of multiplication. (undefined for the additive identity)

- Distributivity of multiplication with respect to addition.

Or, a field is a commutative ring in which \(0\neq 1\) (here \(0,1\) denote the additive identity and the multiplicative identity respectively) and all elements are invertible (additive inverses are defined for all elements, and multiplicative inverses are defined for all elements except the additive identity).

Or an alternative definition: \((F, +, \cdot)\) is a field if

- \(F\) is a commutative group under \(+\) .

- \(F\backslash\{0\}\) (the set without the additive identity element) is a commutative group under \(\cdot\) .

- Distributivity of \(\cdot\) with respect to \(+\) .

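Both operations of a field are abstract binary functions of the same type, \(F\times F\to F\) (which already implies closure). They differ only in the axioms for inverses and for distributivity. Under one of the two functions, the inverse is left undefined for one special element of the set, namely the identity element of the other function. Distributivity is that of the former function (the one with an element lacking an inverse) with respect to the latter.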

[Theorem]:

Proof:

Algebraically closed field

A field \(F\) is algebraically closed if every non-constant polynomial in \(F[x]\) has a root in \(F\) .

No finite field is algebraically closed.

Examples:

- The field of real numbers is not algebraically closed, because \(x^2+1=0\) has no root in the real numbers.

- No subfield of real numbers is algebraically closed.

- The field of complex numbers is algebraically closed.
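The claim that no finite field is algebraically closed can be illustrated for the prime fields \(F_p\) (integers modulo a prime \(p\)): the polynomial \(f(x)=\prod_{a\in F_p}(x-a)+1\) takes the value \(1\) at every element, so it has no root. The sketch below assumes this standard construction; the function name is hypothetical.

```python
def values_of_rootless_poly(p):
    """Evaluate f(x) = prod_{a in F_p} (x - a) + 1 at every x in F_p = {0, ..., p-1}."""
    values = []
    for x in range(p):
        prod = 1
        for a in range(p):
            prod = (prod * (x - a)) % p   # the factor with a == x is zero
        values.append((prod + 1) % p)
    return values

for p in (2, 3, 5, 7):
    # Every value is 1, so f has no root in F_p.
    print(p, values_of_rootless_poly(p))

# Over the reals x^2 + 1 >= 1 > 0, but over the complex numbers x = i is a root.
print(1j * 1j + 1 == 0)
```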

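Linear Algebra

Vectors

The magnitude of a vector \(\bm a\in\R^n\), also called its length or modulus, is the L-2 norm of the vector, commonly denoted \(\|\bm a\|\):

\[\|\bm a\| = \sqrt{a_1^2+a_2^2+\dots+a_n^2}\]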

bilinear map

Let \(U,V,W\) be three vector spaces over the same field \(F\). A mapping \(f(\cdot,\cdot): U\times V \to W\), taking one argument from \(U\) and one from \(V\) into \(W\), is bilinear if

\[f(a\bm u_1+b\bm u_2, \bm v)=a f(\bm u_1,\bm v)+b f(\bm u_2,\bm v)\]

\[f(\bm u, a\bm v_1+b\bm v_2)=a f(\bm u,\bm v_1)+b f(\bm u,\bm v_2)\]

for any vectors \(\bm u,\bm u_1,\bm u_2\in U\), \(\bm v,\bm v_1,\bm v_2\in V\) and scalars \(a,b\in F\).

The top equation asserts that the mapping is linear in the first argument, and the bottom equation asserts that the mapping is linear in the second argument.

In other words, a bilinear map is a map \(U\times V \to W\) that is linear in each of its arguments when the other argument is held fixed.

bilinear form

Let \(V\) be a vector space over a field \(F\). A bilinear form on \(V\) is a bilinear map whose two arguments both come from the same vector space \(V\) and whose values lie in the field \(F\) (a field is naturally a vector space over itself).

In other words, a bilinear form is a function \(V\times V\to F\) that is linear in each of its arguments.

symmetric & positive definite

Let \(V\) be a vector space over \(\R\) and \(\Omega: V\times V\to \R\) be a bilinear mapping, then

- \(\Omega\) is called symmetric if \(\Omega(\bm x,\bm y)=\Omega(\bm y, \bm x), \forall \bm x,\bm y\in V\).

- \(\Omega\) is called positive definite if

\[\Omega(\bm x,\bm x)>0,\quad \forall \bm x\in V\backslash\{\bm 0\},\]

where \(V\backslash\{\bm 0\}\) is the set \(V\) without \(\bm 0\).

Inner Product (over the real numbers): A positive definite, symmetric bilinear form on a real vector space is called an inner product on that vector space. It is usually denoted by \(\langle\bm x,\bm y \rangle\).

Inner Product

( for the complex numbers)

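Let \(V\) be a vector space over \(\cnums\). A function \(\langle \cdot, \cdot\rangle : V \times V \to \cnums\) qualifies as an inner product if it satisfies the following conditions:

- linearity in the first argument.

\[\langle a\bm x+b\bm y, \bm z\rangle = a\langle \bm x,\bm z\rangle + b\langle\bm y,\bm z\rangle,\quad \forall a,b\in\cnums,\ \bm x,\bm y,\bm z\in V\]

It is also acceptable to require linearity in the second argument instead of the first.

- conjugate symmetry.

\[\langle \bm x,\bm y\rangle = \overline{\langle \bm y,\bm x\rangle},\quad\forall \bm x,\bm y\in V\]

where \(\overline{\langle \bm y, \bm x\rangle}\) denotes the complex conjugate of \({\langle \bm y, \bm x\rangle}\).

- positive definiteness.

\[\langle \bm x,\bm x\rangle \ge 0,\ \forall\bm x\in V,\quad\text{and}\quad \langle\bm x,\bm x\rangle=0\iff \bm x=\bm 0\]

For an inner product over the real numbers, conjugate symmetry reduces to symmetry.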

[Theorem]:

Proof:

Matrix representation of an inner product

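Let \(V\) be an \(n\)-dimensional vector space over a field \(F\) (either \(\R\) or \(\cnums\)) with an inner product \(\langle\cdot,\cdot \rangle\), and let \(\mathcal{B}=[\bm b_1, \bm b_2, \dots, \bm b_n]\) be a basis of \(V\). Any given vectors \(\bm u, \bm v\in V\) can be written uniquely as linear combinations, \(\bm u = u_1 \bm b_1 + u_2 \bm b_2+\dots+ u_n \bm b_n=\sum_{i=1}^n u_i\bm b_i, u_i\in F;\; \bm v = v_1 \bm b_1 + v_2 \bm b_2+\dots+ v_n \bm b_n=\sum_{i=1}^n v_i\bm b_i, v_i\in F\). Linearity of the inner product in the first argument, together with conjugate symmetry, implies that

\[\langle \bm u,\bm v\rangle = \left\langle \sum_{i=1}^n u_i\bm b_i, \sum_{j=1}^n v_j\bm b_j\right\rangle = \sum_{i=1}^n\sum_{j=1}^n u_i\,\overline{v_j}\,\langle\bm b_i,\bm b_j\rangle .\]

We call the matrix \(\left[\langle\cdot,\cdot\rangle\right]_{\mathcal{B}}=[\langle \bm b_i, \bm b_j \rangle]\in F^{n\times n}\) the matrix representation of the inner product w.r.t. the basis \(\mathcal{B}\), and we have

\[\langle\bm u,\bm v\rangle = [\bm u]_{\mathcal B}^T\,\left[\langle\cdot,\cdot\rangle\right]_{\mathcal B}\,\overline{[\bm v]_{\mathcal B}},\]

where \([\bm u]_{\mathcal B}=[u_1,\dots,u_n]^T\) and \([\bm v]_{\mathcal B}=[v_1,\dots,v_n]^T\) are the coordinate vectors. Over \(\R\) the conjugation has no effect.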
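The matrix representation can be checked numerically. The sketch below assumes the standard inner product on \(\cnums^2\) (linear in the first argument) and a hypothetical basis chosen only for illustration. It compares the inner product computed directly with the double sum over the matrix of \(\langle \bm b_i,\bm b_j\rangle\).

```python
def inner(x, y):
    """Standard inner product on C^n, linear in the first argument."""
    return sum(xi * yi.conjugate() for xi, yi in zip(x, y))

def combine(coords, basis):
    """Return sum_i coords[i] * basis[i] as a list of complex entries."""
    n = len(basis[0])
    return [sum(c * b[k] for c, b in zip(coords, basis)) for k in range(n)]

# Hypothetical basis b_1, b_2 of C^2 (linearly independent).
basis = [[1 + 0j, 1j], [1 + 0j, -1 + 0j]]
# Matrix representation: gram[i][j] = <b_i, b_j>.
gram = [[inner(bi, bj) for bj in basis] for bi in basis]

u_coords = [2 + 1j, -1 + 0j]    # coordinates of u in the basis
v_coords = [0 + 3j, 1 - 2j]     # coordinates of v in the basis
u, v = combine(u_coords, basis), combine(v_coords, basis)

direct = inner(u, v)
via_gram = sum(u_coords[i] * gram[i][j] * v_coords[j].conjugate()
               for i in range(2) for j in range(2))
print(direct, via_gram)
```

All entries are Gaussian integers, so the floating-point arithmetic is exact here and the two values can be compared with `==`.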

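Cauchy-Schwarz Inequality

Let \(V\) be an inner product space (over the real or complex numbers). The Cauchy-Schwarz inequality asserts that, for all \(\bm u,\bm v\in V\),

\[|\langle\bm u,\bm v\rangle|^2\le\langle\bm u,\bm u\rangle\,\langle\bm v,\bm v\rangle,\]

or, equivalently,

\[|\langle\bm u,\bm v\rangle|\le\|\bm u\|\,\|\bm v\|,\]

where \(\|\bm u\|=\sqrt{\langle\bm u,\bm u\rangle}\).

Proof: see Wikipedia.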

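Dot Product:

For vectors \(\bm a, \bm b\in\R^n\), the dot product, written \(\bm a\cdot \bm b \in \R\), is a scalar (the dot is read as "dot"):

\[\bm a\cdot\bm b=\sum_{i=1}^n a_ib_i=a_1b_1+a_2b_2+\dots+a_nb_n\]

Geometrically, the dot product characterizes the angle between the two vectors.

Let \(\theta\) denote the angle between the vectors \(\bm a,\bm b\), then:

\[\bm a\cdot\bm b=\|\bm a\|\,\|\bm b\|\cos\theta,\qquad \cos\theta=\frac{\bm a\cdot\bm b}{\|\bm a\|\,\|\bm b\|}\]

An inner product is not necessarily the dot product: the dot product is one special case of an inner product, and in everyday usage "inner product" usually refers to this special case.

An example of an inner product that is not the dot product: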
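One standard example (an assumption here, since the original example is not shown) is the weighted inner product \(\langle\bm x,\bm y\rangle_A=\bm x^TA\bm y\) on \(\R^2\) with a symmetric positive definite matrix \(A\). The Python sketch below checks symmetry, positive definiteness and the Cauchy-Schwarz inequality on a small grid of integer vectors; the matrix `A` is a hypothetical choice.

```python
from itertools import product

A = [[2, 1], [1, 3]]   # symmetric; positive definite since 2 > 0 and det = 5 > 0

def dot(x, y):
    """Ordinary dot product."""
    return sum(xi * yi for xi, yi in zip(x, y))

def inner_A(x, y):
    """Weighted inner product <x, y>_A = x^T A y."""
    Ay = [dot(row, y) for row in A]
    return dot(x, Ay)

grid = list(product(range(-3, 4), repeat=2))   # integer test vectors in R^2
symmetric = all(inner_A(x, y) == inner_A(y, x) for x in grid for y in grid)
positive = all(inner_A(x, x) > 0 for x in grid if x != (0, 0))
cauchy_schwarz = all(inner_A(x, y) ** 2 <= inner_A(x, x) * inner_A(y, y)
                     for x in grid for y in grid)
print(symmetric, positive, cauchy_schwarz)

# The two products disagree on the standard basis vectors.
print(dot((1, 0), (0, 1)), inner_A((1, 0), (0, 1)))
```

A finite grid only samples the axioms, so this illustrates the definition rather than proving it.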

The cardinality of a finite set is the number of its (necessarily) distinct elements .

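Cross Product:

For vectors \(\bm a, \bm b\in \R^3\), the cross product (also called the vector product; related to, but not the same as, the exterior or wedge product), written \(\bm a \times \bm b \in \R^3\), is a vector.

Geometrically, the cross product is a normal vector to the "plane" spanned by its two factors. For example, the cross product \(\bm a\times\bm b\) of three-dimensional vectors \(\bm a, \bm b\) is normal to the plane spanned by \(\bm a\) and \(\bm b\).

Outer Product:

For vectors \(\bm u\in\R^m, \bm v\in\R^n\), the outer product, written \(\bm u\bm v^T\in\R^{m\times n}\), is the matrix with entries \(u_iv_j\). It is the tensor product of the two vectors.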
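The three products can be compared side by side in a few lines of Python. This is a minimal sketch with hypothetical helper names: the cross product of two vectors in \(\R^3\) is orthogonal to both factors, and the outer product of an \(m\)-vector and an \(n\)-vector is an \(m\times n\) matrix.

```python
def dot(a, b):
    """Dot product of two vectors of equal length."""
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    """Cross product of two vectors in R^3."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def outer(u, v):
    """Outer product u v^T: an m-by-n matrix with entries u_i * v_j."""
    return [[ui * vj for vj in v] for ui in u]

a, b = [1, 2, 3], [4, 5, 6]
normal = cross(a, b)
print(normal, dot(normal, a), dot(normal, b))   # both dot products are 0
print(outer([1, 2], [3, 4, 5]))                 # a 2-by-3 matrix
```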

Linear Space (Vector Space)

A linear space (or vector space) over a field \(F\) is a set \(V\) that is closed under two operations satisfying the 8 axioms (properties) below. The elements of the field \(F\) are called scalars, and the elements of the set \(V\) are called vectors. The structure is written \((V,F, +, \cdot)\).

Operations:

- Vector Addition (or simply Addition). \(+: V\times V \to V, (\bm u, \bm v)\mapsto \bm u+\bm v\), takes any two vectors in the space to a third vector, which is again in the space.

- Scalar Multiplication. \(\cdot: F\times V \to V, (a,\bm v)\mapsto a\cdot \bm v\) (or simply \((a,\bm v)\mapsto a\bm v\)), takes any scalar in the field and any vector in the space to a vector, which is again in the space.

Operation axioms(properties):

- Associativity of vector addition. \(\forall \bm u\in V,\forall \bm v\in V, \forall \bm w \in V: \bm u+(\bm v+\bm w)=(\bm u+\bm v)+\bm w\)

- Commutativity of vector addition. \(\forall \bm u\in V,\forall\bm v\in V: \bm u+\bm v=\bm v +\bm u\)

- Identity element of vector addition. \(\exists \bm 0\in V, \forall \bm v\in V: \bm v+\bm 0=\bm v\)

- Inverse element of vector addition. \(\forall \bm v \in V, \exists {-\bm v}\in V: \bm v + ({-\bm v})=\bm 0\), where \({-\bm v}\) is called the additive inverse of \(\bm v\). Note that here \(-\bm v\) is a single symbol for an element, not an operation \(-\) applied to the element \(\bm v\), so for clarity it could be denoted by \(\bm v'\) or by any other symbol such as \(\bm z\); \(\bm 0\) is the symbol for the identity element of addition.

- Compatibility of scalar multiplication with field multiplication. \(\forall a,b\in F, \forall \bm v\in V: a(b\bm v)=(ab)\bm v\) .

- Identity element of scalar multiplication. \(\exists 1\in F \forall \bm v \in V: 1\bm v=\bm v\) where \(1\) denotes the scalar multiplicative identity in \(F\) .

- Distributivity of scalar multiplication with respect to vector addition. \(\forall a\in F\ \forall \bm u\in V\ \forall \bm v\in V: a(\bm u+\bm v)=a\bm u+ a\bm v\).

- Distributivity of scalar multiplication with respect to field addition. \(\forall a,b\in F, \forall \bm v \in V: (a+b)\bm v=a\bm v+ b \bm v\)

In vector spaces, the additive identity element is commonly denoted as \(\bm 0\) , the multiplicative identity element as \(1\) , the additive inverse for a given element \(\bm v\) as \(-\bm v\) .

The first four axioms indicate that \((V, +)\) (\(V\) under vector addition) is a commutative group.

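[Theorem]: For every \(\vec{\bm v}\in V\),

\[0\,\vec{\bm v}=\vec{\bm 0},\qquad (-1)\,\vec{\bm v}=-\vec{\bm v},\]

where \(0\) is the identity of scalar addition (so \(0\in F\)), \(\vec{\bm 0}\) is the identity of vector addition (so \(\vec{\bm 0}\in V\)), \(-1\) is the additive inverse of the scalar \(1\) (so \(-1\in F\)), and \(-\vec{\bm v}\) is the inverse of \(\vec{\bm v}\) under vector addition, that is \((-\vec{\bm v})+\vec{\bm v}=\vec{\bm 0}\).

Proof: By distributivity with respect to field addition, \(0\vec{\bm v}=(0+0)\vec{\bm v}=0\vec{\bm v}+0\vec{\bm v}\); adding the additive inverse of \(0\vec{\bm v}\) to both sides gives \(\vec{\bm 0}=0\vec{\bm v}\). Then \((-1)\vec{\bm v}+\vec{\bm v}=(-1)\vec{\bm v}+1\vec{\bm v}=(-1+1)\vec{\bm v}=0\vec{\bm v}=\vec{\bm 0}\), so \((-1)\vec{\bm v}\) is the additive inverse of \(\vec{\bm v}\).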

A field is a linear space:

A field \(F\) is a vector space over itself, equipped with the operations of \(F\). More generally, if \(F, K\) are fields and \(F\subseteq K\), then \(K\) is a vector space over \(F\).

\(\bm F^n\) : n-tuple vector space

For a given field \(\bm F\) and a positive integer \(n\) , the set \(\bm F^n\) of n-tuples with entries from \(\bm F\) , forms a vector space over \(\bm F\) under entry-wise addition and scalar-distributed-to-each-entry multiplication. More generally, if \(F, K\) are fields and \(F\subseteq K\) , then \(K^n\) is a vector space over \(F\) (under operations ...).

Proof:

Given: fields \(F\subseteq K\) equipped with addition \(+\) and multiplication \(\cdot\); the set of n-tuples \(K^n=\{[v_1,v_2,\dots, v_n]: v_i\in K\}\) (writing \(\vec v=[v_1,v_2,\dots, v_n]\) for short); the binary addition on \(K^n\), \(\oplus: K^n\times K^n\to K^n\), \(\vec u\oplus \vec v=[u_1,u_2,\dots, u_n]\oplus[v_1, v_2,\dots, v_n]=[u_1+v_1, u_2+v_2,\dots, u_n+v_n]\); and the multiplication between \(F\) and \(K^n\), \(*: F\times K^n\to K^n\), \(a*[v_1,v_2,\dots, v_n] = [a\cdot v_1, a\cdot v_2, \dots, a\cdot v_n]\).

To prove: \(K^n\) is a vector space over \(F\) under the above defined \(\oplus\) and \(*\).

The proof is as follows:

- Closure. \(u_i,v_i\in K \implies u_i+v_i\in K \implies \vec u\oplus\vec v=[u_1+v_1, u_2+v_2,\dots, u_n+v_n]\in K^n\); \(a\in F\subseteq K, v_i\in K \implies a\cdot v_i\in K \implies a*\vec v=[a\cdot v_1, a\cdot v_2, \dots, a\cdot v_n]\in K^n\)

- Associativity of vector addition. \((\vec u \oplus \vec v)\oplus \vec w=[\dots, (u_i+v_i)+w_i, \dots]=[\dots, u_i+(v_i+w_i),\dots]=\vec u\oplus (\vec v\oplus \vec w)\) (by associativity of scalar addition \(+\) in the field \(K\) )

- Commutativity of vector addition. \(\vec u\oplus \vec v=[\dots,u_i+v_i,\dots]=[\dots, v_i+u_i,\dots]=\vec v\oplus \vec u\) (by commutativity of scalar addition \(+\) in the field \(K\) )

- Identity of vector addition. \(\vec 0 = [0, \dots, 0]\in K^n\implies \forall \vec v\in K^n: \vec v\oplus \vec 0=\vec v\) . (by identity of scalar addition \(+\) in the field \(K\) )

- Inverse of vector addition. \(\forall \vec v=[v_1,v_2,\dots, v_n]\in K^n: \exists \vec {v'}=[-v_1,-v_2,\dots, -v_n]\in K^n \implies \vec v\oplus \vec{v'}=\vec 0\) . (by inverse of scalar addition in the field \(K\) )

- Identity of scalar with respect to multiplication \(*\) in the vector space. \(1\in F\subseteq K \implies \forall \vec v\in K^n: 1*\vec v=[1\cdot v_1,1\cdot v_2,\dots, 1\cdot v_n]=[v_1,v_2,\dots, v_n]=\vec v\) . (by identity of scalar multiplication \(\cdot\) in the field \(K\) )

- Compatibility of multiplication in the field and in the vector space. \(\forall a,b\in F\subseteq K, \vec v \in K^n: a*(b*\vec v)=a*[b\cdot v_1,\dots, b\cdot v_n]=[a\cdot (b\cdot v_1),\dots, a\cdot (b\cdot v_n)]=[(a\cdot b)\cdot v_1,\dots, (a\cdot b)\cdot v_n]=(a\cdot b)* \vec v\). (by associativity of scalar multiplication \(\cdot\) in the field \(K\))

- Distributivity of scalar multiplication w.r.t. vector addition. \(\forall a\in F, \vec u,\vec v\in K^n: a*(\vec u\oplus \vec v)=[a\cdot (u_1+v_1),\dots, a\cdot (u_n+v_n)]=[au_1+av_1,\dots, au_n+av_n]=(a*\vec u)\oplus (a*\vec v)\). (by distributivity of scalar multiplication \(\cdot\) w.r.t. scalar addition \(+\) in the field \(K\))

- Distributivity of scalar multiplication w.r.t. scalar addition. \(\forall a,b\in F, \vec v\in K^n: (a+b)*\vec v=[(a+b)\cdot v_1, \dots, (a+b)\cdot v_n]=[a\cdot v_1 + b\cdot v_1, \dots, a\cdot v_n + b\cdot v_n]=a*\vec v\oplus b* \vec v\) . (by distributivity of scalar multiplication \(\cdot\) w.r.t. scalar addition \(+\) in the field \(K\) )

Hence the result.

Examples of vector spaces:

- the real numbers \(\R\) is a vector space; the complex numbers \(\cnums\) is a vector space.

- the set of n-tuple of real numbers, \(\R^n\) is a vector space.

- the set of n-tuple of complex numbers, \(\cnums^n\) can be a vector space over \(\cnums\) or over \(\R\) .

- the set of polynomials with real or complex coefficients (of arbitrary degree).

Subspace

(also known as linear subspace, or linear vector subspace)

A (linear) subspace is a vector space which is a subset of a larger vector space. \(\{\bm 0\}\) and \(V\) are always subspaces of \(V\) , so they are often called trivial subspaces, and a subspace of \(V\) is said to be non-trivial if it is different from both \(\{\bm 0\}\) and \(V\) . A subspace of \(V\) is said to be a proper subspace if it is not equal to \(V\) . A subspace cannot be empty.

subspace examples:

- \(\left\{\begin{bmatrix}a \\ b \\ 0\end{bmatrix}: a,b\in\R\right\}\) is a subspace of \(\R^3\) .

Linear Span

A linear span or just span of a set of vectors is the smallest linear subspace that contains the set. (is also the intersection of all subspaces containing the set)

Let \(K\) be a field, \(V\) a vector space over \(K\), and \(\{\bm v_1,\bm v_2,...,\bm v_n\}\) a set of vectors in \(V\); then the linear span of the set is

\[\operatorname{span}(\bm v_1,\dots,\bm v_n)=\{a_1\bm v_1+a_2\bm v_2+\dots+a_n\bm v_n : a_1,\dots,a_n\in K\},\]

where \(+\) is the addition operation associated with \(V\), and \(a_i\bm v_i\) is short for \(a_i\cdot \bm v_i\), in which \(\cdot\) is the multiplication (of a scalar and a vector) associated with \(V\).

Or, the linear span of a set \(S\) of vectors from a vector space over a field \(K\) is the set of all linear combinations of elements of \(S\) with arbitrary coefficients in \(K\).

linearly dependent: A list of vectors \(\bm v_1,\bm v_2,...,\bm v_n\) in a vector space \(V\) over a field \(K\) is said to be linearly dependent if and only if some nontrivial linear combination (one whose coefficients are not all zero) is the zero vector, or \(\exists a_1,a_2,\dots,a_n\in K\wedge [a_1,a_2,\dots,a_n]\ne [0,0,\dots, 0]: a_1 \bm v_1 + a_2 \bm v_2+\dots +a_n \bm v_n = \bm 0\).

linearly independent: A list of vectors is linearly independent if it is not linearly dependent, or

\[a_1\bm v_1+a_2\bm v_2+\dots+a_n\bm v_n=\bm 0\implies a_1=a_2=\dots=a_n=0,\]

where \(\bm v_i\in V, a_i\in K\), \(K\) is a field, and \(V\) is a vector space over \(K\).

- A list of two or more vectors is linearly dependent if one of the vectors is a linear combination of (some of) the others.

- A list containing the zero vector is linearly dependent. In particular, the singleton list consisting of only the zero vector is linearly dependent.

- A singleton list consisting of a non-zero vector is linearly independent.
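For vectors with rational entries, linear independence can be tested by Gaussian elimination: a list is independent exactly when its rank equals its length. The sketch below uses exact `Fraction` arithmetic; the function names are illustrative assumptions.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of a list of vectors (rows) over the rationals, by Gaussian elimination."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0                                    # number of pivot rows found so far
    n_cols = len(rows[0]) if rows else 0
    for col in range(n_cols):
        # Find a row at or below position r with a nonzero entry in this column.
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate the entries below the pivot.
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r

def linearly_independent(vectors):
    """A list is independent exactly when its rank equals its length."""
    return rank(vectors) == len(vectors)

print(linearly_independent([[1, 0, 0], [0, 1, 0]]))   # two standard basis vectors
print(linearly_independent([[1, 2, 3], [2, 4, 6]]))   # second is twice the first
print(linearly_independent([[0, 0, 0]]))              # singleton zero vector
print(linearly_independent([[0, 5, 0]]))              # singleton non-zero vector
```

The last three calls correspond to the three bullet points above.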

Basis

A set \(B\) of vectors in a vector space \(V\) is called a basis of \(V\) if every element of \(V\) can be written as a unique finite linear combination of elements of \(B\) (existence means that \(B\) spans \(V\); uniqueness means that \(B\) is linearly independent). The coefficients of this linear combination are called the coordinates of the vector with respect to the basis \(B\).

Equivalently, a basis of a vector space \(V\) is a linearly independent set of vectors in \(V\) whose span is \(V\) .

change of basis:

Let \(V\) be an \(n\)-dimensional vector space over a field \(F\), and let \(\mathcal B_1=\{ v_1, v_2,\dots, v_n\}\) and \(\mathcal B_2=\{ u_1, u_2,\dots, u_n\}\) be bases of \(V\). Let \(T: V\to V\) be a linear transformation. Any given vector \(x \in V\) can be uniquely represented as \(x=a_1v_1+a_2v_2+\dots+a_nv_n\), where \(a_i\in F\). The coordinate vector of \(x\) with respect to \(\mathcal B_1\) is

\[[x]_{\mathcal B_1}=[a_1,a_2,\dots,a_n]^T,\]

and \(x\mapsto[x]_{\mathcal B_1}\) is a map \(V \to F^n\).

The matrix representation of a linear transformation \(T\) w.r.t. the \(\mathcal{B}_1\)-\(\mathcal{B}_2\) bases is defined as:

\[[T]_{B_1\to B_2}=\begin{bmatrix}[Tv_1]_{B_2} & [Tv_2]_{B_2} & \dots & [Tv_n]_{B_2}\end{bmatrix},\]

where \(v_i\in B_1\) are the vectors of the basis \(B_1\); \([T]_{B_1\to B_2}\) is also denoted \(_{\mathcal B_2}[T]_{\mathcal B_1}\).

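Remember that here \(T\) is a function (more specifically, a linear transformation on \(V\)), whereas \([T]_{B_1\to B_2}\) is a matrix in \(F^{n\times n}\). For every \(x\in V\),

\[[Tx]_{B_2}=[T]_{B_1\to B_2}\,[x]_{B_1}.\]

In particular,

\[I_n=[I]_{B_1\to B_1}=[I]_{B_2\to B_1}\,[I]_{B_1\to B_2},\qquad [T]_{B_2\to B_2}=[I]_{B_1\to B_2}\,[T]_{B_1\to B_1}\,[I]_{B_2\to B_1},\]

where \(I_n\) is the \(n\times n\) identity matrix and \(I\) is the identity transformation \(I(x)=x\).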

We denote \([T]{_{B_1\to B_1}}\) simply as \([T]{_{{B_1}}}\) .

This identity shows how the \(B_1\) basis representation of \(T\) changes if the basis is changed to \(B_2\) . For this reason, the matrix \([I]_{B_1\to B_2}\) is called the \(B_1\) − \(B_2\) change of basis matrix. Every invertible matrix is a change of basis matrix, and every change-of-basis matrix is invertible.

Any matrix \(M\in F^{n\times n}\) is a basis representation of some linear transformation \(T : V \to V\), for if \(B\) is any basis of \(V\), we can determine \(Tx\) by \([Tx]_B = M[x]_B\). For this \(T\), a computation reveals that \([T]_B=[T]_{B\to B} = M\).
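A small numeric check of the change-of-basis identity, as a sketch in Python with exact rational arithmetic. The transformation, the two bases of \(\R^2\) and the helper names are hypothetical choices: \(B_1\) is the standard basis, and \(B_2=\{(1,0),(1,1)\}\) happens to consist of eigenvectors of \(T\), so the new representation is diagonal.

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def inverse_2x2(M):
    """Inverse of an invertible 2-by-2 matrix."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]

T_B1 = [[2, 1], [0, 3]]        # [T]_{B1}: T in the standard basis B1 = {e1, e2}
P = [[1, 1], [0, 1]]           # [I]_{B2 -> B1}: columns are u1 = (1, 0), u2 = (1, 1)
P_inv = inverse_2x2(P)         # [I]_{B1 -> B2}

# [T]_{B2} = [I]_{B1 -> B2} [T]_{B1} [I]_{B2 -> B1}
T_B2 = matmul(matmul(P_inv, T_B1), P)

x_B1 = [[5], [2]]                        # coordinates of x in B1 (a column)
x_B2 = matmul(P_inv, x_B1)               # coordinates of the same x in B2
lhs = matmul(P_inv, matmul(T_B1, x_B1))  # [Tx]_{B2}, computed through B1
rhs = matmul(T_B2, x_B2)                 # [T]_{B2} [x]_{B2}
print(T_B2, lhs == rhs)
```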

Matrix Similarity

Two square matrices \(A,B\in F^{n\times n}\) are called similar if there exists an invertible matrix \(P\in F^{n\times n}\) such that

\[B=P^{-1}AP.\]

A transformation \(A\mapsto P^{-1}A P\) for a square matrix \(A\) with nonsingular (that is, invertible) \(P\) is called a similarity transformation.

Similar matrices represent the same linear transformation under different bases, with \(P\) being the change of basis matrix.

Let \(T\) be a given linear transformation on a vector space \(V\) over a field \(F\), and \(B\) be a basis of \(V\). If the representation of \(T\) under \(B\) is the matrix \(A\), that is \(A=[T]_B\), then the set of representation matrices of \(T\) under all possible bases is

\[\{P^{-1}AP : P\in F^{n\times n}\text{ is invertible}\}.\]

[Theorem]

Matrix similarity is an equivalence relation (reflexive, symmetric, transitive).

[Theorem]

Similar matrices have equal eigenvalues.

Let \(A\) and \(B\) be similar matrices. Then the characteristic polynomials of \(A\) and \(B\) are equal, that is, \(p_A(x)=p_B(x)\) .

(The converse is false, that is, two matrices with equal eigenvalues are not necessarily similar.)

If \(B\) is similar to \(A\) by \(B=S^{-1}AS\) , \(\lambda_i\) is an eigenvalue of \(A\) , \(\bm v_i\) is the eigenvector corresponding to the eigenvalue \(\lambda_i\) . Then \(B\) shares eigenvalues of \(A\) , and the eigenvector of \(B\) corresponding to the eigenvalue \(\lambda_i\) is \(S^{-1}\bm v_i\) .

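Proof (characteristic polynomial): If \(B=S^{-1}AS\), then \(p_B(x)=\det(xI-S^{-1}AS)=\det\left(S^{-1}(xI-A)S\right)=\det(S^{-1})\det(xI-A)\det(S)=\det(xI-A)=p_A(x)\). The eigenvalues are the roots of the characteristic polynomial, so they coincide.

Proof (eigenvectors): From \(A\bm v_i=\lambda_i\bm v_i\) we get \(B(S^{-1}\bm v_i)=S^{-1}AS\,S^{-1}\bm v_i=S^{-1}A\bm v_i=\lambda_i (S^{-1}\bm v_i)\), and \(S^{-1}\bm v_i\neq\bm 0\) because \(S^{-1}\) is invertible.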

trace and determinant:

For any nonsingular \(S\in F^{n\times n}\),

\[\mathrm{trace}(S^{-1}AS)=\mathrm{trace}(A),\qquad \det(S^{-1}AS)=\det(A).\]

(simple proof: \(\mathrm{trace}(S^{-1}AS)=\mathrm{trace}(ASS^{-1})=\mathrm{trace}(A); \det(S^{-1}AS)=\det(S^{-1})\det(A)\det(S)=\det(A)\))
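The invariants of similarity can be verified on a concrete pair of \(2\times 2\) matrices. The sketch below uses exact rational arithmetic; the matrices `A` and `S` are hypothetical examples. It also checks that an eigenvector \(\bm v\) of \(A\) is carried to the eigenvector \(S^{-1}\bm v\) of \(B=S^{-1}AS\).

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def inverse_2x2(M):
    """Inverse of an invertible 2-by-2 matrix."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]

def trace(M):
    return M[0][0] + M[1][1]

def det(M):
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

A = [[4, 1], [2, 3]]          # eigenvalues 2 and 5
S = [[1, 2], [1, 3]]          # det(S) = 1, so S is nonsingular
S_inv = inverse_2x2(S)
B = matmul(matmul(S_inv, A), S)

print(trace(A), trace(B), det(A), det(B))

v = [[1], [1]]                # eigenvector of A for the eigenvalue 5
w = matmul(S_inv, v)          # S^{-1} v, expected eigenvector of B
print(matmul(B, w), [[5 * w[0][0]], [5 * w[1][0]]])
```

For \(2\times 2\) matrices the characteristic polynomial is \(x^2-\mathrm{trace}(A)\,x+\det(A)\), so equal trace and determinant already give equal characteristic polynomials.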

conjugate congruence

The transformation \(A\mapsto S^HAS\), where \(S\) is nonsingular but not necessarily unitary, is called conjugate congruence (*congruence).

Unitary Equivalence

The transformation \(A\mapsto UAV\) for \(A\in\cnums^{m\times n}\) , where \(U\in\cnums^{m\times m}, V\in\cnums^{n\times n}\) are both unitary, is called unitary equivalence.

Dimension

The dimension of a vector space \(V\) over a field \(K\) is the cardinality of a basis of \(V\) over \(K\) , denoted as \(\dim(V)\) . Every basis of a vector space has equal cardinality.

Examples of bases and dimensions:

- \(\cnums\) over \(\cnums\): the dimension is 1. The basis (1) can be \(\{1\}\), because \(\forall a\in\cnums: a=a*1\) (every 'vector' in the vector space \(\cnums\) is a unique linear combination of the basis vector \(1\) with coefficient \(a\) from the field \(\cnums\)); (2) can also consist of any single nonzero complex number \(b\), because \(\forall a\in\cnums: a=(ab^{-1})*b\).

- The basis of \(\cnums\) over \(\R\) can be \(\{1, i\}\), and the dimension is 2.

- The basis of \(\cnums^n\) over \(\cnums\) can be \(\{[1,0,\dots,0]^T, [0,1,\dots,0]^T,...,[0,\dots,0,1]^T\}\), and the dimension is \(n\).

- A basis of \(\cnums^n\) over \(\R\) is \(\{[1, 0,\dots,0]^T, [i,0,\dots,0]^T, [0, 1,\dots,0]^T, [0, i,\dots,0]^T,\dots\}\), and the dimension is \(2n\).

Isomorphism

Let \(U, V\) be vector spaces over the same field \(F\). If \(f: U\to V\) is an invertible function such that \(\forall x,y\in U, a,b\in F: f(ax+by)=af(x)+bf(y)\), then \(f\) is said to be an isomorphism (a structure-preserving mapping), and \(U\) and \(V\) are said to be isomorphic.

Two finite-dimensional vector spaces over the same field are isomorphic if and only if they have the same dimension. Any \(n\)-dimensional vector space over a field \(F\) is isomorphic to \(F^n\). In particular, any \(n\)-dimensional real vector space (over \(\R\)) is isomorphic to \(\R^n\), and any \(n\)-dimensional complex vector space (over \(\cnums\)) is isomorphic to \(\cnums^n\).

If \(V\) is an \(n\)-dimensional vector space over \(F\) with a specified basis \(B=[b_1,b_2,\dots, b_n]\), then any \(x\in V\) may be written uniquely as \(x=a_1 b_1 + a_2 b_2+\dots + a_n b_n\), where \(a_i\in F\), and we may identify \(x\) with the \(n\)-vector \([x]_B = [a_1, a_2, \dots, a_n]^T\) (where \(x\in V, [x]_B\in F^n\)) with respect to the basis \(B\). For any basis \(B\), the mapping \(x \mapsto [x]_B\) is an isomorphism between \(V\) and \(F^n\).

Linear Transformation

Let \(U\) be an \(n\)-dimensional vector space and \(V\) be an \(m\)-dimensional vector space, both over the same field \(F\); then a linear transformation is a function \(f:U\to V\) such that

\[f(ax+by)=af(x)+bf(y),\quad\forall x,y\in U,\ a,b\in F.\]

Examples of linear transformations:

- scaling (dilations and contractions). \(T(x)=cx\).

- reflection about an axis. \(T(x)=[\dots, x_{i-1}, -x_i, x_{i+1},\dots]^T\)

- rotation. counterclockwise angle \(\theta\) , \(T(x)=Ax, A=\begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}\) .

A translation \(f(x)=x+ b\), where \(b\neq 0\) is a constant vector, is NOT a linear transformation (for instance, \(f(0)=b\neq 0\)). But it can be represented by a linear transformation in a homogeneous coordinate system.

Homogeneous coordinate system

For an n-vector \(\bm x= [x_1,x_2,\dots, x_n]^T\), the homogeneous coordinates of \(\bm x\) form the \((n+1)\)-vector constructed from \(\bm x\) by appending a component with value \(1\), that is, \([x_1,x_2,\dots,x_n, 1]^T\).

A translation can be represented as a linear transformation in homogeneous coordinates. For \(\bm f(\bm x)=\bm x+\bm b\) with \(\bm b=[b_1,b_2,\dots,b_n]^T\), in homogeneous coordinates

\[\begin{bmatrix}\bm f(\bm x)\\ 1\end{bmatrix}=\begin{bmatrix} 1 & 0 & \dots &0& b_1 \\ 0&1&\dots&0&b_2\\ \vdots&\vdots&\ddots&\vdots&\vdots \\ 0&0&\dots&1& b_n \\0&0&\dots&0&1 \end{bmatrix}\begin{bmatrix}x_1\\x_2\\ \vdots \\ x_n \\ 1\end{bmatrix}=\left(\bm I_{n+1} + \begin{bmatrix} 0 & \dots &0 & b_1 \\ 0 & \dots & 0 &b_2 \\ \vdots& &\vdots & \vdots \\ 0& \dots &0 & b_n \\ 0&\dots&0 &0 \end{bmatrix}\right)\begin{bmatrix}x_1\\x_2\\ \vdots \\ x_n \\ 1\end{bmatrix}\]
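The construction can be sketched in Python as follows. The helper names are hypothetical: `translation_matrix` builds the \((n+1)\times(n+1)\) matrix with the translation vector in its last column, and `apply` appends the trailing \(1\), multiplies, and drops the \(1\) again.

```python
def translation_matrix(b):
    """(n+1)-by-(n+1) matrix that maps [x, 1] to [x + b, 1]."""
    n = len(b)
    # Start from the identity matrix I_{n+1}.
    M = [[1 if i == j else 0 for j in range(n + 1)] for i in range(n + 1)]
    # Put the translation vector into the last column (first n rows).
    for i in range(n):
        M[i][n] = b[i]
    return M

def apply(M, x):
    """Apply M to x in homogeneous coordinates and drop the trailing 1."""
    xh = list(x) + [1]
    yh = [sum(mij * xj for mij, xj in zip(row, xh)) for row in M]
    return yh[:-1]

b = [10, -2, 7]
M = translation_matrix(b)
print(apply(M, [1, 2, 3]))   # the translated point x + b
```

The last row of the matrix keeps the appended coordinate equal to \(1\), so translations can be composed by multiplying their matrices.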

Affine Space

The elements of the affine space \(A\) are called points. The vector space is said to be associated to the affine space, and its elements are called vectors, free vectors, or translations.

An affine space is nothing more than a vector space whose origin we try to forget about, by adding translations to the linear maps. - by Marcel Berger (a French mathematician).

Normed Vector Space

A vector space on which a norm is defined.

norm: A norm is a function from a vector space to the non-negative real numbers that satisfies the norm axioms.

Formally, given a vector space \(V\) over \(\R\), consider a function \(\|\cdot\|: V\to \R, \bm x\mapsto \|\bm x\|\). To qualify as a norm, the function \(\|\cdot\|\) must satisfy the following axioms, \(\forall \bm x,\bm y\in V, a\in \R\):

- \(\|\bm x\| \ge 0\) and \(\|\bm x\|=0 \iff \bm x=\bm 0\). Positive definiteness.

- \(\|\bm x+\bm y\| \le \|\bm x\|+\|\bm y\|\), for all vectors \(\bm x,\bm y\). Triangle inequality, or subadditivity.

- \(\|a\bm x\|=|a|\|\bm x\|\), for every scalar \(a\) and every vector \(\bm x\). Absolute homogeneity (absolute scalability).

The norm of a vector can be considered as the distance from the origin (of that vector space) to the vector.

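p-norm, **\(L^p\)-norm**, Lp norm, L-p norm:

Given a vector \(\bm x\in\R^n\) with Cartesian coordinates \(\bm x=(x_1,...,x_n)\) and a real number \(p\ge 1\), the L-p norm is

\[\|\bm x\|_p=\left(\sum_{i=1}^n|x_i|^p\right)^{1/p}\]

maximum norm (infinity norm):

\[\|\bm x\|_\infty=\max_{1\le i\le n}|x_i|\]

L0 "norm" (which is actually NOT a norm, since \(\|\alpha \bm x\|_0 \neq |\alpha|\|\bm x\|_0\) in general): the number of non-zero components of the vector.

[Theorem] Minkowski's inequality:

for any p-norm with \(p\ge 1\):

\[\|\bm x+\bm y\|_p\le\|\bm x\|_p+\|\bm y\|_p\]

Manhattan distance:

\[d_1(\bm x,\bm y)=\sum_{i=1}^n|x_i-y_i|\]

equivalent to the L-1 norm of the difference of the two points:

\[d_1(\bm x,\bm y)=\|\bm x-\bm y\|_1\]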
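The norms above translate directly into code. This is a minimal Python sketch with hypothetical function names. It evaluates the L-1, L-2 and maximum norms, the L0 count, and the Manhattan distance, and spot-checks Minkowski's inequality for a few values of \(p\) (with a small tolerance for floating-point rounding).

```python
def p_norm(x, p):
    """L-p norm (sum |x_i|^p)^(1/p), for p >= 1."""
    return sum(abs(xi) ** p for xi in x) ** (1.0 / p)

def max_norm(x):
    """Maximum (infinity) norm."""
    return max(abs(xi) for xi in x)

def l0(x):
    """Number of non-zero components (not a norm)."""
    return sum(1 for xi in x if xi != 0)

def manhattan(x, y):
    """Manhattan distance: the L-1 norm of the difference."""
    return p_norm([a - b for a, b in zip(x, y)], 1)

x, y = [3, -4, 0], [1, 2, -2]
print(p_norm(x, 1), p_norm(x, 2), max_norm(x), l0(x))
print(manhattan(x, y))

# Minkowski's inequality ||x + y||_p <= ||x||_p + ||y||_p for a few p >= 1.
s = [a + b for a, b in zip(x, y)]
print(all(p_norm(s, p) <= p_norm(x, p) + p_norm(y, p) + 1e-12 for p in (1, 2, 3)))
```

Scaling `x` by 2 leaves `l0(x)` unchanged, which is why the L0 count fails absolute homogeneity.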

seminorm: a function satisfying the norm axioms except the positive definiteness.

seminormed vector space: a vector space on which a seminorm is defined.

unit vector: a vector in a normed vector space is called a unit vector if its norm is 1.

the normalized vector of a vector: normalizing a non-zero vector means scaling it to a unit vector, that is, the normalized vector of a vector \(\bm u\) is \(\hat{\bm u}=\frac{\bm u}{\|\bm u\|}\).

Linear Combination

Let \(K\) be a field and \(V\) a vector space over \(K\); then a linear combination has the form

\[a_1\bm v_1+a_2\bm v_2+\dots+a_n\bm v_n\]

where \(a_i\in K, \bm v_i\in V\) (that is, \(a_i\) is a scalar and \(\bm v_i\) is a vector), \(+\) is the addition operation associated with \(V\), and \(\cdot\) is the scalar multiplication associated with \(V\). The scalars in a linear combination are known as coefficients.

Let us denote \(a_1\bm v_1+...+a_n\bm v_n\) as \(\sum_{i=1}^n a_i\bm v_i\), which in essence is the result of folding all elements \(\{a_i\bm v_i\}\) with the binary operation 'vector addition'. The case \(n=1\) (only one term in the linear combination) is allowed in \(\sum_{i=1}^n a_i\bm v_i\), because \(\sum_{i=1}^1 a_i\bm v_i= \bm 0+a_1\bm v_1\) (meaning that the other argument of the binary operation, addition, is \(\bm 0\)).

Affine Combination

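A linear combination whose coefficients sum to 1:

\[\sum_{i=1}^n a_i\bm v_i,\qquad \sum_{i=1}^n a_i=1,\]

where \(1\) is the multiplicative identity in the field.

Convex Combination

An affine combination whose coefficients are also non-negative:

\[\sum_{i=1}^n a_i\bm v_i,\qquad \sum_{i=1}^n a_i=1,\quad a_i\ge 0.\]

This is non-rigorous for a general field, because a field need not carry an order; the definition makes sense over an ordered field such as \(\R\).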

Euclidean Space

Originally, the Euclidean space was 3-dimensional space of Euclidean geometry, but in modern mathematics there are n-dimensional Euclidean spaces.

A Euclidean space is a normed vector space with Euclidean norm.

Euclidean norm (or L-2 norm):

For \(\bm x=(x_1,x_2,...,x_n)\),

\[\|\bm x\|_2=\sqrt{x_1^2+x_2^2+\dots+x_n^2}\]

Euclidean distance:

defined by the Euclidean norm: the Euclidean distance between two vectors \(\bm x, \bm y\) is the Euclidean norm of their difference, \(d(\bm x,\bm y)=\|\bm x-\bm y\|\)

defined by dot product: \(d(\bm x,\bm y)=\sqrt{(\bm x-\bm y)\cdot(\bm x-\bm y)}\)

defined using Cartesian coordinates: \(d(\bm x,\bm y)=\sqrt{(x_1-y_1)^2+...+(x_n-y_n)^2}\)

Inner Product Space

An inner product space is a vector space equipped with an inner product.

Let \(V\) be a vector space over a field \(F\) (\(F\) is either \(\R\) or \(\cnums\)). A function taking two arguments from \(V\) into \(F\), \(\langle\cdot,\cdot \rangle: V\times V\to F\), qualifies as an inner product if it satisfies the following conditions:

- linearity in the first argument. \(\langle a\bm u+b \bm v, \bm w\rangle = a\langle \bm u, \bm w\rangle + b\langle \bm v, \bm w\rangle, \forall a,b\in F, \bm u,\bm v,\bm w\in V\).

- positive definiteness. \(\langle \bm u, \bm u\rangle \ge 0, \forall \bm u\in V\) and \(\langle \bm u, \bm u\rangle = 0 \iff \bm u=\bm 0\).

- conjugate symmetry. \(\langle \bm u, \bm v\rangle = \overline{\langle \bm v, \bm u\rangle}, \forall \bm u,\bm v\in V\).

Matrix

A matrix is a rectangular array of scalars from a field. The set of all \(m\)-by-\(n\) matrices over a field \(F\) is denoted \(M_{m,n}(F)\) or \(F^{m\times n}\). \(M_{n,n}(F)\) may be written simply as \(M_n(F)\).

The set of all \(m\times n\) matrices over a field \(F\) , \(F^{m\times n}\) , is a vector space over \(F\) . The vector addition is the matrix addition (element-wise addition), and the scalar multiplication is defined by multiplying each entry with the same scalar. The identity of vector addition (zero vector) is the zero matrix (the matrix with all entries being 0). The dimension is \(mn\) .

Calculus on matrices/vectors

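The partial derivative of a scalar-valued function with respect to a vector is a vector: \(\frac{\partial f}{\partial\bm x}=\left(\frac{\partial f}{\partial x_1}, \frac{\partial f}{\partial x_2},\dots,\frac{\partial f}{\partial x_n}\right)\)

Derivative of a row vector with respect to a column vector: the derivative (gradient) of a \(1\times m\) row-vector-valued function \(\bm f(\bm x)=\bm y=[y_1, y_2, ...,y_m]\in\R^{1\times m}\) with respect to an \(n\times 1\) column vector \(\bm x=[x_1, x_2,...,x_n]^T\in\R^{n\times 1}\) is an \(n\times m\) matrix:

\[\frac{\partial\bm y}{\partial\bm x}=\begin{bmatrix}\frac{\partial y_1}{\partial x_1} & \frac{\partial y_2}{\partial x_1} & \cdots & \frac{\partial y_m}{\partial x_1}\\ \frac{\partial y_1}{\partial x_2} & \frac{\partial y_2}{\partial x_2} & \cdots & \frac{\partial y_m}{\partial x_2}\\ \vdots & \vdots & \ddots & \vdots \\ \frac{\partial y_1}{\partial x_n} & \frac{\partial y_2}{\partial x_n} & \cdots & \frac{\partial y_m}{\partial x_n}\end{bmatrix}\in\R^{n\times m}\]

Vectors are usually taken to be column vectors. Restating the definition above for the case where \(\bm f(\boldsymbol x)\) is a column-vector-valued function, the derivative takes the form

\[\frac{\partial\bm f^T}{\partial\bm x}=\begin{bmatrix}\frac{\partial f_1}{\partial\bm x} & \frac{\partial f_2}{\partial\bm x} & \cdots & \frac{\partial f_m}{\partial\bm x}\end{bmatrix}\in\R^{n\times m},\]

where each \(\frac{\partial f_j}{\partial\bm x}\) is an \(n\times 1\) column.

Derivative of a column vector with respect to a row vector: the derivative \(\frac{\partial\boldsymbol y}{\partial{{\boldsymbol x}^T}}\) of an \(m\times1\) column-vector-valued function with respect to a \(1 \times n\) row vector (the transpose \({\boldsymbol x}^T\) of an \(n\times1\) column vector) is an \(m\times n\) matrix:

\[\frac{\partial\bm y}{\partial\bm x^T}=\begin{bmatrix}\frac{\partial y_1}{\partial x_1} & \frac{\partial y_1}{\partial x_2} & \cdots & \frac{\partial y_1}{\partial x_n}\\ \frac{\partial y_2}{\partial x_1} & \frac{\partial y_2}{\partial x_2} & \cdots & \frac{\partial y_2}{\partial x_n}\\ \vdots & \vdots & \ddots & \vdots \\ \frac{\partial y_m}{\partial x_1} & \frac{\partial y_m}{\partial x_2} & \cdots & \frac{\partial y_m}{\partial x_n}\end{bmatrix}\in\R^{m\times n}\]

Vectors are usually taken to be column vectors. Restating the definition above for the case where \(\bm x\) is a column vector, the derivative takes the form

\[\frac{\partial\bm y}{\partial\bm x^T}=\begin{bmatrix}\frac{\partial y_1}{\partial\bm x^T}\\ \frac{\partial y_2}{\partial\bm x^T}\\ \vdots\\ \frac{\partial y_m}{\partial\bm x^T}\end{bmatrix}\in\R^{m\times n},\]

where each \(\frac{\partial y_i}{\partial\bm x^T}\) is a \(1\times n\) row.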

由 \(\frac{\partial\boldsymbol y^T}{\partial \boldsymbol x}\) 及 \(\frac{\partial\boldsymbol y}{\partial{{\boldsymbol x}^T}}\) 的矩阵排列方式可看出,对列向量的偏导矩阵的列向排列是从 \(x_1\) 到 \(x_n\) ,对行向量( \({\boldsymbol x}^T\) )的偏导矩阵的行向排列是是从 \(x_1\) 到 \(x_n\) 。

(对列向量 \(\boldsymbol x\) 的偏导矩阵于 \(\boldsymbol x\) 列向排,对行向量 \({\boldsymbol x}^T\) 的偏导矩阵于 \({\boldsymbol x}^T\) 横向排)

对 \(m\times1\) 的列向量值函数 \(\bm y(\bm x)\in\mathbb R^m\) , \(n\times 1\) 的列向量 \(\bm x\in\mathbb R^n\) ,有 \(\frac{\partial \bm y(\bm x)}{\partial\bm x^T}=[\frac{\partial\bm y^T}{\partial\bm x}]^T\) 。

向量对向量的导数,不论是行对行、列对列、行对列、列对行,共同的特征是,导数是矩阵,其排列形状是沿函数值轴向展开分别计算每个标量函数值对向量的导数向量最终形成矩阵。

雅克比矩阵(Jacobian Matrix):向量对向量的一阶导数形成的矩阵

向量自身的转置(自身的行向量形式)对自身的偏导是单位矩阵 \(\frac{\partial{{\boldsymbol x}^T}}{\partial\boldsymbol x}=I\) 。

++列(行)向量值函数++对++列(行)向量++的偏导:这时将标量值函数对列(行)向量的导数定义为行(列)向量,此时得到的导数矩阵形状纵(横)向同函数值列(行)向量,横(纵)向同标量值对列(行)向量的梯度行(列)向量。

矩阵对矩阵的导数:矩阵 \(\bm A\in\R^{m\times n}, \bm B\in\R^{p \times q}\) ,则矩阵对矩阵的导数(此时为张量)

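Standard results for derivatives with respect to a vector (with constant \(\bm a\) and \(\bm A\) ):

\[ \frac{\partial\, \bm a^T\bm x}{\partial \bm x}=\bm a,\qquad \frac{\partial\, \bm x^T\bm x}{\partial \bm x}=2\bm x,\qquad \frac{\partial\, \bm x^T\bm A\bm x}{\partial \bm x}=(\bm A+\bm A^T)\bm x \]

Derivatives of matrices, vectors and scalars:

Derivative (differential) of a product:

\[ \mathrm d(\bm A\bm B)=(\mathrm d\bm A)\bm B+\bm A(\mathrm d\bm B) \]

Derivative of the inverse:

\[ \mathrm d(\bm A^{-1})=-\bm A^{-1}(\mathrm d\bm A)\bm A^{-1} \]

Derivative of an eigenvalue (for symmetric \(\bm A\) with a simple eigenvalue \(\lambda\) and unit eigenvector \(\bm v\) ):

\[ \mathrm d\lambda=\bm v^T(\mathrm d\bm A)\bm v \]

Derivative (differential) of the determinant (for invertible \(\bm A\) ):

\[ \mathrm d\det(\bm A)=\det(\bm A)\,\mathrm{tr}(\bm A^{-1}\mathrm d\bm A) \]

Derivative (differential) of the trace:

\[ \mathrm d\,\mathrm{tr}(\bm A)=\mathrm{tr}(\mathrm d\bm A) \]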

Column Space, Row Space

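The column space (also called the image or range) of a matrix \(\bm A\) is the span of the column vectors of \(\bm A\) .

The row space (or coimage) of a matrix \(\bm A\) is the span of the row vectors of \(\bm A\) .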

Null Space

In linear algebra, the null space (also written nullspace, or called the kernel) of a linear mapping is the set of vectors in the domain of the mapping that are mapped to the zero vector.

That is, for a linear mapping represented by a matrix \(\bm A\in F^{m\times n}\) , the null space of \(\bm A\) is

\[ \mathrm N(\bm A)=\{\bm x\in F^n : \bm A\bm x=\bm 0\}. \]

The nullity of a matrix is the dimension of the null space of the matrix.

Properties:

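\(\bm A\bm x\) can be written in terms of dot products with the row vectors of \(\bm A\) :

\[
\bm A\bm x=\begin{bmatrix}\bm a_1\cdot\bm x\\ \bm a_2\cdot\bm x\\ \vdots\\ \bm a_m\cdot\bm x\end{bmatrix}
\]

where \(\bm a_i\) is the \(i\) -th row vector of \(\bm A\) .

So

\[ \bm A\bm x=\bm 0 \iff \bm a_i\cdot\bm x=0,\ \forall i=1,\dots,m, \]

which illustrates that a vector in the null space of \(\bm A\) is orthogonal to each of the row vectors of \(\bm A\) .

The left null space, or cokernel, of a matrix \(\bm A\in F^{m\times n}\) is the null space of its transpose:

\[ \mathrm N(\bm A^T)=\{\bm x\in F^m : \bm x^T\bm A=\bm 0^T\}. \]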

Trace 迹

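The trace of a square matrix \(\bm A\in\R^{n\times n}\) is defined as the sum of the diagonal elements of \(\bm A\) : \(\mathrm{tr}\bm A=\sum_i A_{i,i}\) .

Properties:

\[ \mathrm{tr}(\bm A+\bm B)=\mathrm{tr}\bm A+\mathrm{tr}\bm B,\qquad \mathrm{tr}(c\bm A)=c\,\mathrm{tr}\bm A,\qquad \mathrm{tr}(\bm A^T)=\mathrm{tr}\bm A,\qquad \mathrm{tr}(\bm A^T\bm B)=\sum_{i,j}(\bm A\odot\bm B)_{i,j} \]

where \(\odot\) denotes the element-wise (Hadamard) product.

The trace is invariant under cyclic permutations, i.e.,

\[ \mathrm{tr}(\bm A\bm B\bm C)=\mathrm{tr}(\bm B\bm C\bm A)=\mathrm{tr}(\bm C\bm A\bm B). \]

This property generalizes to products of an arbitrary number of matrices.

As a special case, for vectors \(\bm x, \bm y \in \R^n\) ,

\[ \mathrm{tr}(\bm x\bm y^T)=\mathrm{tr}(\bm y^T\bm x)=\bm y^T\bm x. \]

In the above equation, \(\bm x\bm y^T \in \R^{n\times n}\) , \(\bm y^T \bm x \in\R^{1\times 1}\) .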
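The cyclic property of the trace, and the fact that a non-cyclic reordering generally changes the value, can be seen on small integer matrices. This is a plain-Python sketch; `matmul` and `trace` are helper names chosen here for illustration.

```python
def matmul(A, B):
    """Matrix product for matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def trace(A):
    """Sum of the diagonal entries of a square matrix."""
    return sum(A[i][i] for i in range(len(A)))

A = [[1, 2], [3, 4]]
B = [[0, 1], [5, -2]]
C = [[2, 0], [1, 3]]

t_abc = trace(matmul(matmul(A, B), C))
t_bca = trace(matmul(matmul(B, C), A))   # cyclic shift of ABC
t_cab = trace(matmul(matmul(C, A), B))   # cyclic shift of ABC
t_acb = trace(matmul(matmul(A, C), B))   # NOT a cyclic shift of ABC

# Special case for vectors: tr(x y^T) equals the scalar y^T x.
x = [1, 2, 3]
y = [4, 5, 6]
outer = [[xi * yj for yj in y] for xi in x]        # x y^T, a 3x3 matrix
inner = sum(xi * yi for xi, yi in zip(x, y))       # y^T x, a scalar
```

Here the three cyclic orderings give the same trace, while `t_acb` differs, which is why the property is stated for cyclic permutations only.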

Rank 秩

The maximal number of linearly independent columns of a matrix A equals the maximal number of linearly independent rows, and is called the rank of A.

The following quantities are equal:

- the rank of \(A\) .

- the dimension of row space of \(A\) (spanned space of row vectors).

- the dimension of column space of \(A\) (spanned space of column vectors).

- the maximal number of linearly independent column vectors of \(A\) .

- the maximal number of linearly independent row vectors of \(A\) .

- the dimension of the image of transformation \(A\) .

The following are equivalent for a square matrix \(A\in M_n(F)\) ( \(F\) is \(\R\) or \(\cnums\) ):

(a) A is nonsingular.

(b) \(A^{-1}\) exists.

(c) rank A = n.

(d) The rows of A are linearly independent.

(e) The columns of A are linearly independent.

(f) det A ≠ 0.

(g) The dimension of the range of A is n.

(h) The dimension of the null space of A is 0.

(i) Ax = b is consistent for each b ∈ F^n.

(j) If Ax = b is consistent, then the solution is unique.

(k) Ax = b has a unique solution for each b ∈ F^n.

(l) The only solution to Ax = 0 is x = 0.

(m) 0 is not an eigenvalue of A. (0 is not in the spectrum of A)

(n) A is a change-of-basis matrix.

Properties:

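For any matrix \(\bm A\in F^{m\times n}\) ,

\[ \mathrm{rank}(\bm A)\le\min(m,n),\qquad \mathrm{rank}(\bm A)=\mathrm{rank}(\bm A^T),\qquad \mathrm{rank}(\bm A)+\mathrm{nullity}(\bm A)=n. \]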

Positive Definite Matrix 正定矩阵

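Narrow definition (defined only for symmetric matrices):

A real symmetric matrix A is called positive definite if all of its eigenvalues are positive, and positive semidefinite if all of its eigenvalues are greater than or equal to 0.

More generally, a real square matrix is positive definite \(\iff\) its symmetric (Hermitian) part has all positive eigenvalues.

General definition:

A square matrix \(\bm A\in \R^{n\times n}\) is positive definite if its quadratic form satisfies

\[ \bm x^T\bm A\bm x>0,\ \forall \bm x\in\R^n\backslash\{\bm 0\}. \]

It is positive semi-definite if

\[ \bm x^T\bm A\bm x\ge 0,\ \forall \bm x\in\R^n. \]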

A positive (semi-)definite matrix does not have to be symmetric. However a symmetric positive definite matrix has many nice properties.

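The quadratic form of \(\bm x\) is defined as \(f(\bm x):=\bm x^TA\bm x, \;s.t.\; \|\bm x\|_2=1\) . In particular, when \(\bm x\) is a (unit) eigenvector of A, \(f(\bm x)\) is the corresponding eigenvalue of A. For symmetric A, under the constraint \(\|\bm x\|_2=1\) , the maximum of \(f(\bm x)\) is the largest eigenvalue of A and the minimum is the smallest eigenvalue of A.

Positive definiteness guarantees \(\bm x^TA\bm x=0 \Rightarrow \bm x=\bm 0\)

positive definiteness & eigenvalues & symmetry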

For a Hermitian matrix \(A\in M_n(F)\) ( \(F\in\{\reals,\cnums \}\) ), it is positive definite if and only if it has all positive eigenvalues.

orthogonal vectors

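A list of vectors \(\bm v_1, \bm v_2, \dots, \bm v_m\) is orthogonal if \(\bm v_i\cdot \bm v_j=0, \forall i\ne j, i,j\in\{1,2,\dots, m\}\) , where \(\bm v_i\cdot \bm v_j\) denotes the dot product of two vectors (for complex vectors, \(\bm v_i^H\bm v_j\) ).

A list of vectors \(\bm v_1, \bm v_2, \dots, \bm v_m\) is orthonormal if it is orthogonal and consists of unit vectors, \(\bm v_i\cdot \bm v_j=0, \forall i\ne j \wedge \bm v_i\cdot \bm v_i = 1, \forall i\) .

More generally, let \(V\) be an inner product space. Two vectors \(\bm u, \bm v\in V\) are said to be orthogonal if

\[ \langle \bm u,\bm v\rangle=0. \]

[Theorem] Nonzero orthogonal vectors are linearly independent.

Proof:

Given: Let \(\bm v_1, \bm v_2,\dots, \bm v_n\) be nonzero orthogonal vectors in an inner product space \((V, \langle\cdot,\cdot\rangle)\) .

Prove: \(a_1 \bm v_1 + a_2\bm v_2 +\dots + a_n \bm v_n = \bm 0 \implies a_1=a_2=\dots=a_n=0\) .

Proving: take the inner product of both sides of \(a_1 \bm v_1 +\dots + a_n \bm v_n = \bm 0\) with \(\bm v_k\) , for an arbitrary \(k\) . By linearity in the first argument and orthogonality,

\[ 0=\langle \bm 0,\bm v_k\rangle=\Big\langle \sum_{i=1}^n a_i\bm v_i,\bm v_k\Big\rangle=\sum_{i=1}^n a_i\langle \bm v_i,\bm v_k\rangle=a_k\langle \bm v_k,\bm v_k\rangle. \]

Since \(\bm v_k\ne\bm 0\) , positive definiteness gives \(\langle \bm v_k,\bm v_k\rangle>0\) , hence \(a_k=0\) . As \(k\) was arbitrary, \(a_1=a_2=\dots=a_n=0\) .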

Orthogonal Matrix 正交矩阵

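Orthogonal matrix (also known as orthonormal matrix): a real square matrix whose row vectors are unit vectors and mutually orthogonal, and whose column vectors are also unit vectors and mutually orthogonal.

Equivalently, an orthogonal matrix is a square matrix whose columns and rows each form an orthonormal set, i.e. a square matrix with \(\bm A \bm A^T=\bm A^T\bm A=\bm I\) .

If A is orthogonal, then \(A^T=A^{-1}\) .

A non-square matrix \(\bm A_{m\times n}\) whose column vectors are mutually orthogonal (or orthonormal) is not called an orthogonal matrix.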

Unitary Matrix 酉矩阵

Unitary matrix: the generalization of an orthogonal matrix to the complex field. A complex square matrix \(U_{n\times n}\in \mathbb C^{n\times n}\) satisfying \(U^H U=UU^H =I\) , where \(U^H\) is the conjugate transpose of \(U\) , is a unitary matrix.

The conjugate transpose is much easier to compute than the inverse.

The similarity via a unitary matrix \(U\) , \(A \sim U^H AU\) , is not only conceptually but also computationally simpler than general similarity.

[Theorem]

The following statements are equivalent for a square matrix \(U\in\cnums^{n\times n}\) :

- \(U\) is unitary.

- \(U^H\) is unitary.

- \(U\) is nonsingular and \(U^H=U^{-1}\) .

- \(UU^H=I\) .

- \(U^HU=I\) .

- The columns of \(U\) are orthonormal.

- The rows of \(U\) are orthonormal.

- \(\|U\bm x\|_2 = \|\bm x\|_2, \forall \bm x\in\cnums^n\) , where \(\|\bm x\|_2=(\bm x^H\bm x)^{1/2}\) denotes the Euclidean norm.

- \(\langle U\bm x, U\bm y \rangle = \langle \bm x, \bm y \rangle, \forall \bm x,\bm y\in\cnums^n\) (inner product)

[Theorem]

If \(U\in\cnums^{n\times n}\) is a unitary matrix with eigenvalues \(\lambda_1, \lambda_2, \dots, \lambda_n\) , then

- \(|\lambda_i|=1, \forall i\)

- there exists a unitary matrix \(Q\in\cnums^{n\times n}\) such that \(U=Q\,\mathrm{diag}(\lambda_1,\lambda_2,\dots,\lambda_n)\,Q^H\) .

[Theorem] Let \(A\in\cnums^{n\times n}\) be nonsingular. Then \(A^{-1}\) is similar to \(A^H\) if and only if there exists a nonsingular \(B\in\cnums^{n\times n}\) such that \(A=B^{-1} B^H\) .

Normal Matrix

A complex square matrix \(A\) is said to be normal if it commutes with its conjugate transpose, \(AA^H=A^HA\) .

[Theorem] Let \(A\in\cnums^{n\times n}\) , the following statements are equivalent:

- \(A\) is normal.

- \(A\) is diagonalizable by a unitary matrix.

- \(A^H=AU\) for some unitary matrix \(U\) .

- \(A\) has \(n\) orthonormal eigenvectors.

- \(\sum_{i,j}|a_{ij}|^2=\sum_i |\lambda_i|^2\) , where \([a_{ij}]=A\) , \(\{\lambda_i\}\) are the eigenvalues of \(A\) .

[Theorem] Let \(A\in\cnums^{n\times n}, B\in\cnums^{m\times m}\) be normal, and \(X\in\cnums^{n\times m}\) be given. Then \(AX=XB\) if and only if \(A^H X= XB^H\) .

[Theorem] Let \(U\in\cnums^{n\times n}\) be unitary.

- If \(U\) is symmetric, then there is a real orthogonal \(Q\in\R^{n\times n}\) and real \(\theta_1,\dots, \theta_n\in[0, 2\pi)\) such that \(U=Q\,\mathrm{diag}(e^{i\theta_1},\dots,e^{i\theta_n})\,Q^T\) .

[Theorem] Let \(A,B\in\R^{n\times n}\) . There are real orthogonal \(Q,P\in\R^{n\times n}\) such that \(A=QT_A P^{-1}, B=QT_B P^{-1}\) , \(T_A\) is real and upper quasi-triangular and \(T_B\) is real and upper triangular.

Diagonal Matrix 对角矩阵

A diagonal matrix is a matrix in which off-diagonal entries are all 0. (no requirements on diagonal entries)

The identity matrix, zero matrix are diagonal. A \(1\times 1\) matrix is always diagonal.

Properties:

- If \(A, B\) are diagonal, then \(AB\) is diagonal.

- Multiplication of diagonal matrices is commutative: if \(A,B\) are diagonal (and of the same size), then \(AB=BA\) .

- \(DA\) scales the rows of \(A\) by the scaling matrix \(D\) , and \(AD\) scales the columns of \(A\) by the scaling matrix \(D\) . In particular, if \(A\) is a column/row vector, the scaling matrix scales the elements of the vector.

In detail, if \(D\) is diagonal and \(A\) is general, then \(DA\) is the matrix whose i-th row vector is the i-th diagonal entry of \(D\) times the i-th row vector of \(A\) , and \(AD\) is the matrix whose j-th column vector is the j-th diagonal entry of \(D\) times the j-th column vector of \(A\) .
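The row and column scaling effect of a diagonal matrix can be made concrete with a small example. A minimal plain-Python sketch follows; `diag` and `matmul` are illustrative helper names, not from the notes.

```python
def matmul(A, B):
    """Matrix product for matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def diag(entries):
    """Square diagonal matrix with the given diagonal entries."""
    n = len(entries)
    return [[entries[i] if i == j else 0 for j in range(n)] for i in range(n)]

A = [[1, 2, 3],
     [4, 5, 6]]

D_left = diag([10, 100])        # 2x2, applied from the left
D_right = diag([1, 10, 100])    # 3x3, applied from the right

row_scaled = matmul(D_left, A)    # row i of A multiplied by D_left[i][i]
col_scaled = matmul(A, D_right)   # column j of A multiplied by D_right[j][j]
```

Note that the left factor must match the number of rows of \(A\) and the right factor the number of columns.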

Diagonally Dominant Matrix

A square matrix is said to be diagonally dominant if, for every row, the magnitude (absolute value) of the diagonal entry is greater than or equal to the sum of the magnitudes of all other (off-diagonal) entries in that row.

Formally, a square matrix \(A=[A_{ij}]\in\cnums^{n\times n}\) is said to be diagonally dominant if

\[ |A_{ii}|\ge\sum_{j\ne i}|A_{ij}|,\ \forall i=1,\dots,n. \]

It is said to be strictly diagonally dominant if a strict inequality(>) holds in the above formula.

Properties:

- A strictly diagonally dominant matrix is non-singular (invertible). The result is known as the Levy–Desplanques theorem.

- A Hermitian diagonally dominant matrix with real non-negative diagonal entries is positive semi-definite. (If it is strictly diagonally dominant, then it is positive definite.)

rectangular diagonal matrix:

Sometimes an m-by-n matrix \(A\in F^{m\times n}\) (not necessarily square) is said to be rectangular diagonal if all off-diagonal entries are zero, or \(A_{ij}=0, \forall i\ne j, i=1,2,\dots,m, j=1,2,\dots, n\) .

e.g.

\[
\begin{bmatrix}1&0&0\\0&2&0\end{bmatrix},\qquad \begin{bmatrix}1&0\\0&2\\0&0\end{bmatrix}
\]

Permutation Matrix

(置换矩阵)

A permutation matrix is a square binary matrix of which each row vector and column vector has exactly one entry of 1 and all other entries of 0 (row/column vectors are one-hot vectors).

When a permutation matrix \(P\) is multiplied from the left with a matrix \(M\) to make \(PM\) , it will permute the row vectors of \(M\) .

When a permutation matrix \(P\) is multiplied from the right with a matrix \(M\) to make \(MP\) , it will permute the column vectors of \(M\) .

Interchange of rows i and j of a matrix \(\bm A\) results from left multiplication of \(\bm A\) by the following permutation matrix \(P\) :

\[ P=I-\bm e_i\bm e_i^T-\bm e_j\bm e_j^T+\bm e_i\bm e_j^T+\bm e_j\bm e_i^T, \]

that is, the identity matrix modified so that the two off-diagonal 1s are in the \([i,j]\) and \([j,i]\) positions and the two diagonal 0s are in the \([i,i]\) and \([j,j]\) positions (here \(\bm e_k\) denotes the \(k\) -th standard basis column vector).

In other words, \(IA\) , with \(I\) being the identity matrix, selects every row of \(A\) and puts it in its place. If we want to interchange rows \(i\) and \(j\) , we interchange the corresponding rows of \(I\) to form the permutation matrix. Similarly, the permutation matrix \(P\) obtained by interchanging columns \(i\) and \(j\) of the identity matrix \(I\) , multiplied from the right with a matrix \(A\) , interchanges columns \(i\) and \(j\) of \(A\) .

This can also be seen from the perspective of vector-by-matrix multiplication. Left-multiplying a matrix by a row vector gives a linear combination of the rows of the matrix, with coefficients being the elements of the row vector. So, for a one-hot row vector \(\bm z_{(i)}\in\{0,1\}^{1\times m}\) with the 1 in position \(i\) and 0s in the other positions, \(\bm z_{(i)}\bm A\) selects the \(i\) -th row vector of \(\bm A\) .
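The row and column interchange by a permutation matrix can be tried on a small matrix. This is a sketch in plain Python with 0-based indices; `swap_permutation` is a hypothetical helper that builds the matrix by swapping two rows of the identity.

```python
def matmul(A, B):
    """Matrix product for matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def swap_permutation(n, i, j):
    """Identity matrix with rows i and j interchanged (0-based indices)."""
    P = identity(n)
    P[i], P[j] = P[j], P[i]
    return P

A = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]

P = swap_permutation(3, 0, 2)
rows_swapped = matmul(P, A)   # left multiplication permutes rows
cols_swapped = matmul(A, P)   # right multiplication permutes columns

# A one-hot row vector selects a single row of A.
z = [[0, 1, 0]]
selected_row = matmul(z, A)
```

For a single interchange the matrix obtained by swapping two rows of the identity is the same as the one obtained by swapping the same two columns, so one `P` serves both products.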

矩阵转置(transpose)

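Transposition is an operation that flips a matrix over its main diagonal (the line from the top-left corner to the bottom-right corner). The transpose of a real matrix A is denoted \(A^T\) , and sometimes \(A'\) . That is, the transpose B of a matrix A satisfies \(B_{i,j}=A_{j,i}\) .

Properties:

\[ (A^T)^T=A,\qquad (A+B)^T=A^T+B^T,\qquad (cA)^T=cA^T,\qquad (AB)^T=B^TA^T \]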

symmetric matrix: For a square real matrix \(\bm A\in\R^{n\times n}\) , \(\bm A\) is called symmetric if \(\bm A^T=\bm A\) . (undefined for non-square matrix)

For any matrix \(\bm A\in\R^{m\times n}\) , \(\bm A \bm A^T\) is symmetric, and so is \(\bm A^T\bm A\) .

Proof: \((AA^T)^T=(A^T)^TA^T=AA^T\) ; \((A^TA)^T=A^T(A^T)^T=A^TA\) .

skew-symmetric: A square real matrix is said to be skew-symmetric if its transpose equals its negative, \(\bm A^T=-\bm A\) .

symmetric part: The symmetric part of a square matrix \(\bm A\) is defined as

\[ \frac12(\bm A+\bm A^T). \]

skew-symmetric part: The skew-symmetric part of a square matrix \(\bm A\) is given by

\[ \frac12(\bm A-\bm A^T). \]
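Every real square matrix is the sum of its symmetric part \(\frac12(\bm A+\bm A^T)\) and its skew-symmetric part \(\frac12(\bm A-\bm A^T)\) . The sketch below checks this in plain Python, using `fractions.Fraction` to keep the halves exact; the helper names are illustrative.

```python
from fractions import Fraction

def transpose(A):
    return [list(col) for col in zip(*A)]

def symmetric_part(A):
    """(A + A^T) / 2 for a square matrix A."""
    At = transpose(A)
    n = len(A)
    return [[Fraction(A[i][j] + At[i][j], 2) for j in range(n)] for i in range(n)]

def skew_symmetric_part(A):
    """(A - A^T) / 2 for a square matrix A."""
    At = transpose(A)
    n = len(A)
    return [[Fraction(A[i][j] - At[i][j], 2) for j in range(n)] for i in range(n)]

A = [[1, 2],
     [4, 3]]
S = symmetric_part(A)
K = skew_symmetric_part(A)
recombined = [[S[i][j] + K[i][j] for j in range(2)] for i in range(2)]
```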

共轭转置(conjugate transpose)

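Take the conjugate of each entry of the matrix and then transpose; this is also called the Hermitian transpose. The conjugate transpose of a complex matrix \(A\) is denoted \(A^H\) , and sometimes \(A^*\) . The real transpose \(A^T\) is the special case of the conjugate transpose \(A^H\) when all entries of the matrix are real.

That is, \(A^H=\bar A^T\) where \(\bar A\) denotes the elementwise conjugate of \(A\) .

For real matrices, the conjugate transpose is equal to the transpose, \(\forall A\in\R^{m\times n}: A^T=A^H\) . But for complex matrices, the conjugate transpose is in general not equal to the transpose, \(A\in\cnums^{m\times n}: A^T\not\equiv A^H\) .

Conjugate:

Conjugation is a notion on the complex numbers: two complex numbers are conjugates of each other if their real parts are equal and their imaginary parts are negatives of each other. Taking the conjugate of a complex number means computing the complex number that is conjugate to it. The conjugate of a complex number \(c\) is denoted \(\bar c\) . The conjugate of a real number is the number itself.

Self-adjoint (Hermitian) matrix: the generalization of a real symmetric matrix to the complex field, a matrix whose conjugate transpose equals itself, i.e. \(A^H=A\) . Such a matrix may be called ++conjugate symmetric++ ; equivalently, every entry \(A_{i,j}\) is the conjugate of the entry \(A_{j,i}\) .

A Hermitian matrix is a self-adjoint matrix (over the reals, a symmetric matrix).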

[Theorem] If \(A\in\cnums^{n\times n}\) is a Hermitian matrix with eigenvalues \(\lambda_1, \lambda_2, \dots, \lambda_n\) , then

- \(\overline{\lambda_i}=\lambda_i, \forall i\) , in other words, the eigenvalues are real numbers.

- there exists a unitary matrix \(U\in\cnums^{n\times n}\) such that

\[ A=U\,\mathrm{diag}(\lambda_1,\lambda_2,\dots,\lambda_n)\,U^H. \]

skew-Hermitian: A square complex matrix is said to be skew-Hermitian (or anti-Hermitian) if its conjugate transpose equals its negative, \(A^H=-A\) .

For any given complex square matrix \(A\in M_n(\cnums)\) , these are true:

- \(A+A^H, AA^H, A^H A\) are Hermitian.

- If \(A\) is Hermitian, then \(A^k\) is Hermitian for any \(k=1,2,\dots\) . If \(A\) is Hermitian and invertible, then \(A^{-1}\) is Hermitian.

Comparison of some concepts between the real and the complex case:

on Reals | on Complexes
--|--
Orthogonal matrix | Unitary matrix
Symmetric | Hermitian (self-adjoint)
Transpose of a real matrix (notation \(X^T\) ) | Conjugate transpose (notation \(X^H\) )

Hermitian part: the Hermitian part of a square matrix \(\bm A\) is defined as

\[ \frac12(\bm A+\bm A^H). \]

skew-Hermitian part: the skew-Hermitian part of a square matrix \(\bm A\) is given by

\[ \frac12(\bm A-\bm A^H). \]

向量范数(norm of vectors):

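A norm is a real-valued function \(f(\bm x)\) satisfying the following properties:

- \(f(\bm x)\ge 0\)

- \(f(\bm x)=0 \Rightarrow \bm x=\bm 0\)

- \(f(\bm x + \bm y)\le f(\bm x)+f(\bm y)\) , the triangle inequality

- \(\forall \alpha\in\mathbb R, f(\alpha\bm x)=|\alpha|f(\bm x)\)

\(L^p\) norm:

The \(L^p\) norm (for \(p\ge 1\) ) is defined as

\[ \|\bm x\|_p=\Big(\sum_i |x_i|^p\Big)^{1/p}. \]

The \(L^2\) norm (or L2 norm) \(\|\bm x\|_2\) is also called the Euclidean norm (it is the Euclidean distance from the origin to \(\bm x\) ). \(\|\bm x\|\) usually denotes the L2 norm. In statistics, the (squared) L2 norm is the penalty used in ridge regression.

The \(L^1\) norm (or L1 norm) corresponds to the Manhattan distance. In statistics, the L1 norm is the penalty used in LASSO (least absolute shrinkage and selection operator).

The \(L^\infty\) norm is also called the ++max norm++ ; it is the largest absolute value among the elements of the vector, \(\|\bm x\|_\infty=\max_i|x_i|\) .

Matrix norm (norm of matrices): the Frobenius norm (F-norm) is

\[ \|\bm A\|_F=\sqrt{\sum_{i,j}|A_{i,j}|^2}=\sqrt{\mathrm{tr}(\bm A^H\bm A)}. \]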
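The vector norms \(\|\bm x\|_p=(\sum_i|x_i|^p)^{1/p}\) , the max norm, and the Frobenius norm are short to compute directly. A minimal plain-Python sketch, with illustrative function names:

```python
import math

def lp_norm(x, p):
    """L^p norm of a vector, for p >= 1."""
    return sum(abs(xi) ** p for xi in x) ** (1.0 / p)

def max_norm(x):
    """L^infinity norm: the largest absolute entry."""
    return max(abs(xi) for xi in x)

def frobenius_norm(A):
    """Square root of the sum of squared absolute entries of a matrix."""
    return math.sqrt(sum(abs(a) ** 2 for row in A for a in row))

x = [3.0, -4.0]
l1 = lp_norm(x, 1)
l2 = lp_norm(x, 2)
linf = max_norm(x)
l50 = lp_norm(x, 50)          # large p: already very close to the max norm
fro = frobenius_norm([[1.0, 2.0], [2.0, 4.0]])
```

The value for \(p=50\) illustrates why the max norm is written \(L^\infty\) : as \(p\) grows, the largest entry dominates the sum.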

矩阵的逆 Inverse of a matrix: For a square matrix \(A\in\cnums^{n\times n}\) , the inverse of \(A\) is \(A^{-1}\in\cnums^{n\times n}\) satisfying \(AA^{-1}=A^{-1}A=I\) .

矩阵伪逆 M-P逆(Moore-Penrose inverse, MP inverse, pseudo-inverse):

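The inverse is not defined for non-square matrices; the pseudo-inverse extends the properties of the inverse to non-square matrices.

For a matrix \(A\in\cnums^{m\times n}\) , the pseudo-inverse of \(A\) is the matrix \(A^+\in\cnums^{n\times m}\) satisfying all of the following four criteria: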

- \(AA^+ A=A\)

- \(A^+ A A^+ = A^+\)

- \((AA^+)^H = AA^+\)

- \((A^+ A)^H= A^+A\)

Pseudo-inverse exists for any matrix, and is unique.

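Relation to limits:

\[ A^+=\lim_{\delta\to 0^+}(A^HA+\delta I)^{-1}A^H=\lim_{\delta\to 0^+}A^H(AA^H+\delta I)^{-1} \]

It can be computed from the SVD: if \(A=U\Sigma V^H\) , then

\[ A^+=V\Sigma^+U^H, \]

where \(\Sigma^+\) is obtained from \(\Sigma\) by taking the reciprocal of each nonzero singular value and transposing.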

Properties:

- If A is invertible, its pseudo-inverse is its inverse, i.e. \(\det(\bm A)\ne 0 \implies \bm A^+=\bm A^{-1}\) .

- The pseudo-inverse of a zero matrix is its transpose.

- The pseudo-inverse of the pseudo-inverse is the original matrix: \((\bm A^+)^+ = \bm A\) .

- \((\alpha \bm A)^+ = \alpha^{-1}\bm A^+, \alpha\in\R\backslash\{0\}\)

- The pseudo-inverse of a (rectangular) diagonal matrix is obtained by taking the reciprocal of each nonzero entry and then transposing.
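For a rectangular diagonal matrix, the rule "reciprocal of the nonzero entries, then transpose" can be checked against the four Moore-Penrose criteria. The sketch below uses plain Python with exact fractions; the helper names are illustrative, and since the example is real, the conjugate transpose reduces to the transpose.

```python
from fractions import Fraction

def matmul(A, B):
    """Matrix product for matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def pinv_rect_diag(D):
    """Pseudo-inverse of a rectangular diagonal matrix (hypothetical helper)."""
    reciprocal = [[1 / x if x != 0 else x for x in row] for row in D]
    return transpose(reciprocal)

def penrose_conditions_hold(A, A_plus):
    """Check the four criteria for a real matrix A and a candidate A_plus."""
    AAp = matmul(A, A_plus)
    ApA = matmul(A_plus, A)
    return (matmul(AAp, A) == A
            and matmul(ApA, A_plus) == A_plus
            and transpose(AAp) == AAp
            and transpose(ApA) == ApA)

# A 2x3 rectangular diagonal matrix of rank 1.
D = [[Fraction(2), Fraction(0), Fraction(0)],
     [Fraction(0), Fraction(0), Fraction(0)]]
D_plus = pinv_rect_diag(D)    # a 3x2 matrix
ok = penrose_conditions_hold(D, D_plus)
```

The zero diagonal entry stays zero rather than being inverted, which is what distinguishes the pseudo-inverse from an ordinary inverse.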

矩阵乘法 Matrix Multiplication:

Matrices A and B can be multiplied when the number of columns of A equals the number of rows of B. Suppose \(A\in \mathbb R^{m\times k}\) , \(B\in \mathbb R^{k\times n}\) ; then the matrix product is \(AB\in\mathbb R^{m\times n}\) .

Inner-product view of matrix multiplication: \(AB[i,j]=\overrightharpoon{A[i,:]} \cdot \overrightharpoon{B[:,j]}\)

Row-vector view of matrix multiplication:

The i-th row vector of \(AB\) is a linear combination of all row vectors of \(B\) with coefficients being the elements of the i-th row vector of \(A\) .

Column-vector view of matrix multiplication:

The j-th column vector of \(AB\) is a linear combination of all column vectors of \(A\) with coefficients being the elements of the j-th column vector of \(B\) .
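The row view and the column view of a matrix product can be compared with the ordinary entry-by-entry product. This is a plain-Python sketch with 0-based indices; `row_view` and `column_view` are names chosen here for illustration.

```python
def matmul(A, B):
    """Inner-product view: entry (i, j) is row i of A dotted with column j of B."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def row_view(A, B, i):
    """Row i of AB as a combination of the rows of B, weighted by row i of A."""
    result = [0] * len(B[0])
    for k, coeff in enumerate(A[i]):
        result = [r + coeff * b for r, b in zip(result, B[k])]
    return result

def column_view(A, B, j):
    """Column j of AB as a combination of the columns of A, weighted by column j of B."""
    result = [0] * len(A)
    for k in range(len(B)):
        column_k_of_A = [row[k] for row in A]
        result = [r + B[k][j] * a for r, a in zip(result, column_k_of_A)]
    return result

A = [[1, 2],
     [3, 4],
     [5, 6]]          # 3x2
B = [[1, 0, 2],
     [0, 1, 3]]       # 2x3
AB = matmul(A, B)     # 3x3
```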

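Matrix-vector and vector-matrix products:

Given a row vector \(\bm v\) and an \(m\times n\) matrix \(\bm M\) , the product \(\bm v\bm M\) collapses the rows of M from m rows into 1 row: each entry of the resulting row vector is a weighted sum of the m entries in the corresponding column of M. For example, if v is the all-ones row vector, each entry of \(v\bm M\) is the sum of the m entries of the corresponding column of M.

Likewise, for a column vector \(v\) and an \(m\times n\) matrix \(\bm M\) , \(\bm Mv\) collapses the n columns of M into 1 column.

Multiplying a matrix and a vector:

The result of \(\bm v \bm M\) is a linear combination of the row vectors of \(\bm M\) , with coefficients being the elements of \(\bm v\) .

The result of \(\bm Mv\) is a linear combination of the column vectors of \(\bm M\) , with coefficients being the elements of \(\bm v\) .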

Let \(\bm X\in\R^{m\times n}, \bm\theta\in\R^n\) . The matrix-vector product \(\bm X\bm\theta\) is an \(m\) -dimensional column vector whose i-th element \((\bm X\bm\theta)_i\) is a scalar, the dot product of the i-th row vector of X with the vector \(\bm \theta\) , i.e. \((\bm X\bm\theta)_i=\bm x_i \cdot\bm\theta=\bm x_i\bm\theta=\bm\theta^T\bm x_i^T=\bm X_{i:*}\bm\theta\) , where \(\bm x_i\) denotes the i-th row vector of X (n-dimensional), of shape \(\bm x_i\in\R^{1\times n}\) . If the vector is instead written as a column vector, \(\bm x_i\in\R^{n\times 1}\) , then \((\bm X\bm\theta)_i=\bm x_i\cdot \bm\theta=\bm\theta^T\bm x_i=\bm x_i^T\bm\theta=\bm X_{i:*}\bm\theta\) , where \(\bm X_{i:*}\in\R^{1\times n}\) .

Matrix-matrix and matrix-vector products:

Let \(\bm X\in\R^{m\times n}, \bm\Theta\in\R^{n\times p}\) . The matrix product \(\bm X\bm\Theta\) is an \(m\times p\) matrix (i.e. \(\bm X\bm\Theta\in\R^{m\times p}\) ) whose i-th row vector is \((\bm X\bm\Theta)_{i:*}=\bm X_{i:*}\bm\Theta \in\R^{ 1\times p}\) .

The \(i\) -th row vector of \(\bm A\bm B\) is the \(i\) -th row vector of \(\bm A\) times \(\bm B\) . The \(j\) -th column vector of \(\bm A\bm B\) is \(\bm A\) times the \(j\) -th column vector of \(\bm B\) .

Note that matrix multiplication is in general not commutative, i.e. in general

\[ AB\ne BA. \]

Matrix multiplication is associative: \((AB)C= A(BC)\) . It follows that \(ABCD=(AB)(CD)=A(BC)D\) .

Sum of squared scalars as a matrix-vector product:

Example:

With data matrix \(X\in\mathbb R^{m\times n}, \bm y\in\mathbb R^m\) , the sum \(\sum_{i=1}^m(\bm\theta^T\bm x^{(i)}-y^{(i)})^2\) (where \(\bm x^{(i)}\in\R^n\) denotes the i-th sample of X and \(\bm\theta\in\R^n\) ) can be rewritten in matrix form as \((X\bm\theta-\bm y)^T(X\bm\theta-\bm y)\) . Derivation: \(\bm\theta^T\bm x^{(i)}-y^{(i)}\) is a scalar, so \(\sum_{i=1}^m(\bm\theta^T\bm x^{(i)}-y^{(i)})^2 = \sum_{i=1}^m v_i^2 =\bm v^T\bm v\) (squaring the elements of a sequence and summing them equals the transpose of the vector formed by the sequence times that vector), where \(\bm v\in\R^{m\times 1}, v_i=\bm\theta^T\bm x^{(i)}-y^{(i)}\in\R\) . Since \(X\bm\theta\in\R^m\) is a vector whose i-th element is \((X\bm\theta)_i={\bm x^{(i)}}^T\bm\theta=\bm\theta^T\bm x^{(i)}\) , we have \(\bm v=X\bm\theta-\bm y\) , hence \(\sum_{i=1}^m(\bm\theta^T\bm x^{(i)}-y^{(i)})^2=(X\bm\theta-\bm y)^T(X\bm\theta-\bm y)\) .
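The equality between the per-sample sum of squares and the matrix form \((X\bm\theta-\bm y)^T(X\bm\theta-\bm y)\) can be checked on toy data. This is a plain-Python sketch; the data values and function names are made up for illustration.

```python
def sum_of_squares_per_sample(X, theta, y):
    """sum_i (theta^T x^(i) - y^(i))^2, looping over the rows (samples) of X."""
    total = 0
    for x_i, y_i in zip(X, y):
        residual = sum(t * x for t, x in zip(theta, x_i)) - y_i
        total += residual ** 2
    return total

def sum_of_squares_matrix_form(X, theta, y):
    """(X theta - y)^T (X theta - y), computed as v^T v with v = X theta - y."""
    X_theta = [sum(x * t for x, t in zip(row, theta)) for row in X]   # X theta
    v = [a - b for a, b in zip(X_theta, y)]                           # residual vector
    return sum(vi * vi for vi in v)                                   # v^T v

X = [[1, 2],
     [3, 4],
     [5, 6]]          # m = 3 samples, n = 2 features
theta = [1, -1]
y = [0, 1, -1]

loop_value = sum_of_squares_per_sample(X, theta, y)
matrix_value = sum_of_squares_matrix_form(X, theta, y)
```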

Sum of row-vector products with their own transposes as a matrix-matrix product:

Example:

With \(X\in\mathbb R^{m\times n}, \Theta\in\mathbb R^{p\times n}\) (whose j-th row is \((\bm\theta^{(j)})^T\) ) and \(Y\in\mathbb R^{m\times p}\) , the double sum \(\sum_{i=1}^m\sum_{j=1}^p((\bm\theta^{(j)})^T(\bm x^{(i)})-y^{(i,j)})^2\) can be rewritten in matrix form: \(\sum_{i=1}^m\sum_{j=1}^p((\bm\theta^{(j)})^T(\bm x^{(i)})-y^{(i,j)})^2 =\sum_{i=1}^m (\bm X_{i:*}\bm\Theta^T- \bm Y_{i:*})(\bm X_{i:*}\bm\Theta^T- \bm Y_{i:*})^T=\sum_{i,j}^{m,p}[(\bm X\bm \Theta^T-\bm Y)_{i,j}]^2=\|\bm X\bm\Theta^T-\bm Y\|_F^2=\mathrm{trace}[(\bm X\bm\Theta^T-\bm Y)(\bm X\bm\Theta^T-\bm Y)^T]\) 。

Representation from element-wise product of vectors to product of matrices

(1) column vectors. The element-wise product of two column vectors can be rewritten as the product of the diagonal matrix generated by the left column vector with the right column vector.

For two column vectors \(\bm a=[a_1,a_2,\dots, a_n]^T\in F^{n\times 1}, \bm b=[b_1,b_2,\dots,b_n]^T\in F^{n\times 1}\) ,

\[
\bm a\odot\bm b=\begin{bmatrix}a_1 & & \\ & \ddots & \\ & & a_n\end{bmatrix}\begin{bmatrix}b_1\\ \vdots\\ b_n\end{bmatrix}
\]

or

\[ \bm a\odot\bm b=\bm D\bm b, \]

where \(\bm D=\mathrm{diag}(\bm a)\) .

(2) row vectors.

The element-wise product of two row vectors can be rewritten as the product of the left row vector with the diagonal matrix generated by the right row vector.

For two row vectors \(\bm a=[a_1,a_2,\dots, a_n]\in F^{1\times n}, \bm b=[b_1,b_2,\dots,b_n]\in F^{1\times n}\) ,

\[
\bm a\odot\bm b=\begin{bmatrix}a_1 & \cdots & a_n\end{bmatrix}\begin{bmatrix}b_1 & & \\ & \ddots & \\ & & b_n\end{bmatrix}
\]

or

\[ \bm a\odot\bm b=\bm a\bm D, \]

where \(\bm D=\mathrm{diag}(\bm b)\) .

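Identity matrix: the square matrix whose diagonal entries are all 1 and whose other entries are all 0, denoted \(I\) ; its dimension is usually clear from context. The identity matrix has the property that for any matrix \(A\in\mathbb R^{m\times n}\) , \(IA=AI=A\) . Note that in this chain of equalities the first identity matrix I is \(m\times m\) while the second is \(n\times n\) .

Element-wise product (Hadamard product): the product of corresponding entries of matrices of the same shape, denoted \(\bm A\odot \bm B\) .

The direct product, tensor product, or Kronecker product is denoted \(\mathbf X\otimes \mathbf Y\)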

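Matrix computations:

Note that in general \(A^TA\ne AA^T\) .

When a matrix is viewed as an arrangement of (column) vectors, the number of rows of the matrix is the dimension of the vectors.

C=AB means that the number of rows of A determines the number of rows of C, and the number of columns of B determines the number of columns of C.

The product C=AB has as many columns as B. Computing AB amounts to taking each column vector of B and left-multiplying it by A, which gives a column vector with as many rows as A; the result obtained from the k-th column of B becomes the k-th column of C.

AB requires the number of columns of A to equal the number of rows of B.

\(W^TX\) requires W and X to have the same number of rows (their numbers of columns are arbitrary); the number of columns of the product is determined by \(X\) (the same as \(X\) ), and its number of rows equals the number of columns of \(W\) .

The power of a transpose equals the transpose of the power, \((A^T)^n=(A^n)^T\) , which follows from \((XY)^T=Y^TX^T\) .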

行列式 Determinants

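The geometric meaning of the determinant \(\det A\) of an \(n\times n\) square matrix is the "scaling rate" of the transformation: the factor by which the "n-dimensional volume" changes when A transforms n-dimensional vectors. \(\det A=0\) means that there is no "n-dimensional volume" left after the transformation, so no inverse transformation \(A^{-1}\) exists (the transformation cannot be undone). When there is no volume in n dimensions, a volume in lower dimensions (n-1 or lower) may still exist: for example, a three-dimensional transformation A with \(\det A=0\) maps three-dimensional space into a lower-dimensional one, where there is no volume but there may be area.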

Determinants are only defined for square matrices.

The determinant of a triangular matrix \(T\in \cnums^{n\times n}\) , is the product of the diagonal elements.

Determinants may be measures of volume.

For any square matrix \(\bm A\in \cnums^{n\times n}\) it holds that \(A\) is invertible if and only if \(\det(A)\neq 0\) .

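Properties (for \(A,B\in\cnums^{n\times n}\) and a scalar \(c\) ):

\[ \det(AB)=\det(A)\det(B),\qquad \det(A^T)=\det(A),\qquad \det(A^{-1})=\frac{1}{\det(A)},\qquad \det(cA)=c^n\det(A) \]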

Characteristic polynomial of a square matrix

[Def] Let \(A\in F^{n\times n}\) be a square matrix. Then the characteristic polynomial of \(A\) is defined by

\[ p_A(x)=\det(A-xI_n). \]

There sometimes is a definition of characteristic polynomial by \(\det(xI_n-A)\) . It differs from \(\det(A-xI_n)\) by a sign \((-1)^n\) .

Eigenvalues and Eigenvectors 特征值与特征向量

For a square matrix \(\bm A\in\cnums^{n\times n}\) , \(\lambda\in\cnums\) is an eigenvalue of \(\bm A\) and a non-zero vector \(\bm x\in\cnums^n\backslash\{\bm 0\}\) is a corresponding eigenvector of \(\bm A\) w.r.t. \(\lambda\) if

\[ \bm A\bm x=\lambda\bm x. \]

The pair \(\lambda, \bm x\) is called an eigenpair of \(\bm A\) .

The following statements are equivalent:

- \(\lambda\) is an eigenvalue of \(\bm A\in \cnums^{n\times n}\) .

- \(\det(\bm A-\lambda\bm I_n) = 0\) .

- There exists an \(\bm x\in \cnums^n\backslash\{\bm 0\}\) with \(\bm A \bm x = \lambda\bm x\) , or equivalently, \((\bm A-\lambda \bm I_n)\bm x=\bm 0\) can be solved non-trivially, i.e., with \(\bm x\ne \bm 0\) .

- \(\mathrm{rank}(\bm A - \lambda \bm I_n) < n\) .

In particular, \(0\) is an eigenvalue of \(\bm A\) if and only if \(\det(\bm A)=0\) .

How to compute eigenvalues: solve \(\det(\bm A-\lambda \bm I_n)=0\) for \(\lambda\) .

[Theorem] Eigenvectors corresponding to distinct eigenvalues are linearly independent.

The converse is false, that is, eigenvalues corresponding to linearly independent eigenvectors are not necessarily distinct.

e.g. for the matrix \(A=\begin{bmatrix}2 & -1 &0 \\ 0&5&0\\0&-1&2\end{bmatrix}\) , \(\det(\lambda I-A)=(\lambda -5)(\lambda-2)^2\) , so there are two distinct eigenvalues \(\lambda_1=2, \lambda_2=5\) ; for \(\lambda_1=2\) , the matrix \(A\) has two linearly independent eigenvectors \(\bm v_1=[1,0,0]^T, \bm v_2=[0,0,1]^T\) .
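The example matrix can be checked directly: both \(\bm v_1\) and \(\bm v_2\) satisfy \(A\bm v=2\bm v\) , and so does any combination of them. The sketch below is plain Python; the vector `v3` for the eigenvalue 5 is worked out here and is not part of the example above.

```python
def matvec(A, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def scale(c, v):
    return [c * x for x in v]

A = [[2, -1, 0],
     [0, 5, 0],
     [0, -1, 2]]

v1 = [1, 0, 0]
v2 = [0, 0, 1]
combo = [3 * a - 2 * b for a, b in zip(v1, v2)]   # 3*v1 - 2*v2, still in the eigenspace
v3 = [1, -3, 1]                                    # an eigenvector for eigenvalue 5

is_eigen_v1 = matvec(A, v1) == scale(2, v1)
is_eigen_v2 = matvec(A, v2) == scale(2, v2)
is_eigen_combo = matvec(A, combo) == scale(2, combo)
is_eigen_v3 = matvec(A, v3) == scale(5, v3)
```

Two linearly independent eigenvectors sharing the eigenvalue 2 is exactly the situation the converse statement rules out.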

[Theorem] Eigenvalues of a triangular matrix are the diagonal elements.

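For a square matrix A and a vector \(\bm v\in\cnums^n\backslash\{\bm 0\}\) (v must not be the zero vector) with \(A\bm v=\lambda\bm v\) , \(\bm v\) and \(\lambda\) are called an eigenvector and eigenvalue pair of the matrix (a right eigenpair). Left eigenvectors can also be defined, by \(\bm v^T \bm A=\lambda\bm v^T\) (that is, \(\bm A^T \bm v=\lambda \bm v\) ), but the right ones are usually of more interest.

An eigenvector cannot be the \(\bm 0\) vector (by the definition of an eigenvalue). An eigenvalue can be 0. An \(n\times n\) matrix has at most \(n\) distinct eigenvalues (exactly \(n\) over \(\cnums\) when counted with algebraic multiplicity), but infinitely many eigenvectors, and therefore infinitely many eigendecompositions. (By convention the eigenvalues are sorted in decreasing order and the eigenvector matrix is built from linearly independent unit vectors, which removes most of this freedom.)

An eigenvector corresponds to exactly one eigenvalue, but the same eigenvalue may correspond to different eigenvectors, which may even be linearly independent (i.e. two linearly independent eigenvectors may have the same eigenvalue).

Every nonzero vector in the space spanned by eigenvectors with the same eigenvalue is an eigenvector. That is, if \(\bm v_1, \bm v_2\) have eigenvalues \(\lambda_1=\lambda_2=\lambda\) , then any nonzero \(\alpha\bm v_1+\beta\bm v_2\) is an eigenvector with eigenvalue \(\lambda\) .

An eigenvector \(\bm v\) and any nonzero scalar multiple \(\alpha\bm v\) correspond to the same eigenvalue.

Proof: given an eigenvector \(\bm v\) and corresponding eigenvalue \(\lambda\) of a matrix \(A\) , i.e. \(A\bm v=\lambda \bm v\) , then for any nonzero scalar \(\alpha \ne 0\) , \(\alpha (A\bm v)=\alpha (\lambda \bm v) \implies A(\alpha\bm v)=\lambda (\alpha\bm v)\) , implying that the eigenvalue corresponding to the eigenvector \(\alpha\bm v\) is \(\lambda\) .

Algebraic multiplicity: the number of times \(\lambda\) occurs among the eigenvalues of the square matrix A (its multiplicity as a root of the characteristic polynomial) is called the algebraic multiplicity of this eigenvalue.

Geometric multiplicity: the maximal number of linearly independent eigenvectors corresponding to the eigenvalue \(\lambda\) is called the geometric multiplicity of this eigenvalue.

If a vector \(\bm v\) is an eigenvector of the square matrix A, then for \(\forall a\ne 0\) , \(a\bm v\) is also an eigenvector of A, with the same eigenvalue as \(\bm v\) (not \(a\) times it). For this reason one usually focuses on unit eigenvectors.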

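The trace of a square matrix equals the sum of its eigenvalues, and its determinant equals the product of its eigenvalues.

Let \(A=[a_{ij}]\in M_n(\cnums)\) be a square matrix with eigenvalues \(\lambda_1, \dots, \lambda_n\) (counted with algebraic multiplicity). Then

\[ \mathrm{tr}(A)=\sum_{i=1}^n a_{ii}=\sum_{i=1}^n\lambda_i,\qquad \det(A)=\prod_{i=1}^n\lambda_i. \]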

spectrum of a matrix:

The spectrum of a matrix is the set of all eigenvalues of that matrix.

Infinite power of a matrix

If every eigenvalue of a matrix \(\bm A\) is strictly less than \(1\) in absolute value ( \(|\lambda| < 1\) for every eigenvalue \(\lambda\) of \(\bm A\) ), then

\[ \lim_{p\to\infty}\bm A^p=\bm 0, \]

where \(\bm A^p\) denotes the \(p\) -th power of the matrix \(\bm A\) (the product of \(p\) copies of \(\bm A\) ).
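The decay of \(\bm A^p\) when all eigenvalues satisfy \(|\lambda|<1\) can be observed numerically. The sketch below uses plain Python and triangular matrices, whose eigenvalues are their diagonal entries; the matrices and the exponent 50 are arbitrary choices for illustration.

```python
def matmul(A, B):
    """Matrix product for matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def matrix_power(A, p):
    """A multiplied by itself p times (p >= 0), starting from the identity."""
    n = len(A)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(p):
        result = matmul(result, A)
    return result

def max_abs_entry(A):
    return max(abs(a) for row in A for a in row)

# Upper triangular: eigenvalues are 0.5 and 0.5, both below 1 in absolute value.
A = [[0.5, 0.25],
     [0.0, 0.5]]
# Eigenvalues 1.0 and 0.5: the condition fails, so the powers need not vanish.
B = [[1.0, 0.0],
     [0.0, 0.5]]

size_of_A50 = max_abs_entry(matrix_power(A, 50))
size_of_B50 = max_abs_entry(matrix_power(B, 50))
```

The second matrix shows that the strict inequality matters: an eigenvalue equal to 1 keeps one entry of every power at 1.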

Perron-Frobenius theorem

The Perron-Frobenius theorem asserts that a real square matrix with positive entries has a unique largest real eigenvalue and that the corresponding eigenvector can be chosen to have (strictly) positive elements. And also asserts a similar statement to a matrix with non-negative entries.

It's useful in probability theory.

对角化 Diagonalization

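A square matrix \(\bm A\in\R^{n\times n}\) is said to be diagonalizable if there exists an invertible matrix \(\bm V\) such that \(\bm V^{-1}\bm A\bm V\) is a diagonal matrix.

[Def] A square matrix is said to be diagonalizable if it is similar to a diagonal matrix.

Or: there exist a matrix \(V\) and a diagonal matrix \(D\) such that

\[ A=VDV^{-1},\qquad VV^{-1}=I. \]

(The last equation, \(VV^{-1}=I\) , in the above formula implies that \(V\) is invertible and the inverse is denoted as \(V^{-1}\) .)

How to tell:

A square matrix \(\bm A\in \R^{n\times n}\) is diagonalizable when it has \(n\) linearly independent eigenvectors. Let V be the matrix whose columns are these eigenvectors; then \(V^{-1}AV\) is a diagonal matrix whose diagonal entries are the eigenvalues of A.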

[Theorem] Let \(A\in\cnums^{n\times n}\) , then \(A\) is diagonalizable if and only if \(A\) has \(n\) linearly independent eigenvectors.

If \(v_1,v_2,\dots, v_n\) are linearly independent eigenvectors of \(A\in F^{n\times n}\) and let \(V=\begin{bmatrix}v_1 & v_2 & \dots & v_n\end{bmatrix}\) , then \(V^{-1}AV\) is a diagonal matrix.

If a square matrix \(A\) is similar to a diagonal matrix \(D\) , then the diagonal entries of \(D\) are all of the eigenvalues of \(A\) .

[Theorem] Let \(A\in\cnums^{n\times n}\) . If \(A\) has \(n\) distinct eigenvalues, then \(A\) is diagonalizable (or \(A\) is similar to a diagonal matrix).

Matrix Decomposition

Decomposition for symmetric positive (semi-)definite matrix

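Let \(\bm A\in \R^{n\times n}\) be a symmetric square matrix. \(\bm A\) is positive semidefinite if and only if it can be decomposed as a product of a matrix \(\bm B\) with its transpose. \(\bm A\) is positive definite if and only if such a decomposition exists with \(\bm B\) invertible.

That is, given a symmetric square matrix \(\bm A\in\R^{n\times n}\) :

\[ \bm A \text{ is positive semidefinite} \iff \exists \bm B: \bm A=\bm B^T\bm B,\qquad \bm A \text{ is positive definite} \iff \exists \bm B \text{ invertible}: \bm A=\bm B^T\bm B. \]

Proof:

For direction \(A=B^TB \Rightarrow A \text{ is positive semidefinite}\) : for any \(\bm x\) , \(\bm x^TA\bm x=\bm x^TB^TB\bm x=(B\bm x)^T(B\bm x)=\|B\bm x\|_2^2\ge 0\) . If \(B\) is invertible, then \(B\bm x\ne\bm 0\) for \(\bm x\ne\bm 0\) , so the inequality is strict.

For direction \(A=B^TB \Leftarrow A \text{ is positive semidefinite}\) :

A is a symmetric square matrix, hence it has an eigendecomposition \(A=Q^TDQ\) where Q is orthogonal and D is diagonal. A is positive semidefinite, hence the diagonal entries of \(D\) are non-negative, so \(D^{\frac12}\) exists. \(A=Q^TDQ=Q^TD^{\frac12}D^{\frac12}Q=Q^T(D^{\frac12})^TD^{\frac12}Q=(D^{\frac12}Q)^T(D^{\frac12}Q)=B^TB\) where \(B=D^{\frac12}Q\) . If A is positive definite, the diagonal entries of \(D\) are positive, so \(B\) is invertible.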

特征分解 Eigen Decomposition

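Eigendecomposition is sometimes called spectral decomposition.

Eigendecomposition is the process of finding n eigenvectors of a square matrix \(\bm A_{n\times n}\) together with the corresponding eigenvalues; usually only unit eigenvectors are considered, and the eigenvectors are linearly independent. Arrange the n eigenvectors \(\{\bm v^{(1)},...,\bm v^{(n)}\}\) as the columns of a matrix \(\bm V=[\bm v^{(1)},...,\bm v^{(n)}]\) , and place the corresponding eigenvalues on a diagonal matrix \(\bm\Lambda\) of the same shape as \(\bm V\) (usually the eigenvalues are first sorted in decreasing order, then the eigenvectors are placed accordingly), i.e. \(\Lambda_{i,i}=\lambda_i\) ; then

\[ \bm A=\bm V\bm\Lambda\bm V^{-1}. \]

Eigendecomposition applies only to square matrices, and requires \(\bm A\in \R^{n\times n}\) to have \(n\) linearly independent eigenvectors (so that \(\bm V\) is invertible); it does not require \(\det \bm A \ne 0\) .

Orthogonal diagonalization of symmetric matrices (symmetric diagonalization)

A real symmetric matrix \(\bm S\) can be decomposed as:

\[ \bm S=\bm Q\bm\Lambda\bm Q^T, \]

where \(\bm Q\) is the orthogonal matrix formed by eigenvectors of the real symmetric matrix \(\bm S\) (so \(\bm Q\bm Q^T=\bm I\) , i.e. \(\bm Q^{-1}=\bm Q^T\) ), and \(\bm\Lambda\) is a diagonal matrix whose diagonal entries are the corresponding eigenvalues.

projection onto eigenvectors of a symmetric matrix:

\[ \bm S=\sum_{i=1}^n\lambda_i\bm q_i\bm q_i^T,\qquad \bm S\bm x=\sum_{i=1}^n\lambda_i(\bm q_i^T\bm x)\bm q_i, \]

where \(\bm q_i\) is the \(i\) -th column of \(\bm Q\) .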

奇异值分解 SVD(Singular Values Decomposition)

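[Theorem] Let \(A\in\cnums^{m\times n}\) , then there exist unitary matrices \(U\in\cnums^{m\times m}\) and \(V\in\cnums^{n\times n}\) such that

\[
A=U\Sigma V^H,\qquad \Sigma=\begin{bmatrix}\Sigma_1 & 0\\ 0 & 0\end{bmatrix},
\]

where \(\Sigma_1=\rm{diag}[\sigma_1, \sigma_2,\dots, \sigma_k] \in\R^{k\times k}\) is a diagonal matrix with \(\sigma_1 \ge \sigma_2 \ge \dots \ge \sigma_k \gt 0\) , \(k=\rm{rank}(A)\) , and \(\Sigma\in\R^{m\times n}\) is a rectangular diagonal matrix. The \(\sigma_1,\sigma_2,\dots, \sigma_k\) are the positive square roots of the decreasingly ordered nonzero eigenvalues of \(AA^H\) (which has the same nonzero eigenvalues as \(A^H A\) ). If \(A\) is real ( \(A\in \R^{m\times n}\) ), then \(U,V\) can be taken to be real.

[Corollary] Let \(A\in\cnums^{m\times n}\) have the singular value decomposition

\[
A=\begin{bmatrix}U_1 & U_2\end{bmatrix}\begin{bmatrix}\Sigma_1 & 0\\ 0 & 0\end{bmatrix}\begin{bmatrix}V_1 & V_2\end{bmatrix}^H,
\]

where \(U_1\) and \(V_1\) consist of the first \(k=\rm{rank}(A)\) columns of \(U\) and \(V\) ; then

- \(\rm{Img}(U_1)=\rm{Img}(A)\)

- \(\rm{Img}(U_2)=\rm{kernel}(A^H)\)

- \(\rm{Img}(V_1)=\rm{Img}(A^H)\)

- \(\rm{Img}(V_2)=\rm{kernel}(A)\)

As a special case for real matrices,

\[ \bm A=\bm U\bm\Sigma\bm V^T, \]

where \(\bm U \in \mathbb R^{m\times m}, \bm V \in \mathbb R^{n\times n}\) are both orthogonal matrices and \(\bm \Sigma \in \mathbb R^{m\times n}\) is a (rectangular) diagonal matrix. Note that the SVD applies to any matrix (unlike the eigendecomposition, which applies only to square matrices).

The column vectors of \(\bm U\) are called the left singular vectors of A, the column vectors of \(\bm V\) (not of \(\bm V^T\) ) are called the right singular vectors of A, and the diagonal entries of \(\bm\Sigma\) are called the singular values of A.

\(\bm U\) is a matrix of eigenvectors of \(\bm A\bm A^T\) , \(\bm V\) is a matrix of eigenvectors of \(\bm A^T\bm A\) , and the nonzero values in \(\bm \Sigma\) (the nonzero singular values of A) are the square roots of the nonzero eigenvalues of \(\bm A^T\bm A\) and \(\bm A\bm A^T\) . That is: if \(\bm A=\bm U\bm\Sigma\bm V^T\) , then \(\bm A\bm A^T=\bm U\bm\Sigma\bm\Sigma^T\bm U^T\) , \(\bm A^T\bm A=\bm V\bm\Sigma^T\bm\Sigma\bm V^T\) .

The SVD can be used to extend matrix inversion to non-square matrices (via the pseudo-inverse).

For a real symmetric matrix A, the matrix of left singular vectors coincides with the eigenvector matrix from the eigendecomposition of A (up to ordering and signs; exactly so when A is positive semidefinite). One may keep the first K column vectors of the eigenvector (singular vector) matrix.

[Theorem] Let \(A\in\cnums^{n\times m}\) . (For any complex matrix \(A\) ) \(A, \bar A, A^T, A^H\) have the same singular values.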

[Theorem] Let \(A\in M_n(\cnums)\) :

- \(A=A^T\) (symmetric) if and only if there is a unitary \(U\in M_n(\cnums)\) and a nonnegative diagonal matrix \(\Sigma\) such that \(A=U\Sigma U^T\) . The diagonals of \(\Sigma\) are the singular values of \(A\) .

- If \(A= -A^T\) (skew-symmetric), then \(\rm{rank}(A)\) is even, and, letting \(k=\rm{rank}(A)\) , there is a unitary \(U\in M_n(\cnums)\) and positive scalars \(b_1, \dots, b_{k/2}\) , such that

\[
A=U\left(0_{n-k}\oplus\begin{bmatrix}0 & b_1\\ -b_1 & 0\end{bmatrix}\oplus\dots\oplus\begin{bmatrix}0 & b_{k/2}\\ -b_{k/2} & 0\end{bmatrix}\right)U^T,
\]

where \(\oplus\) denotes the direct sum of matrices. The nonzero singular values of \(A\) are \(b_1,b_1, \dots, b_{k/2},b_{k/2}\) .

Cholesky Decomposition

A symmetric, positive definite matrix \(\bm A\) can be factorized into a product

\[ \bm A=\bm L\bm L^T, \]

where \(\bm L\) is a lower-triangular matrix with positive diagonal elements.

\(\bm L\) is called the Cholesky factor of \(\bm A\) , and \(\bm L\) is unique.
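One standard way to compute the Cholesky factor \(\bm L\) with \(\bm A=\bm L\bm L^T\) fills \(\bm L\) row by row, taking a square root on the diagonal and a division below it. The following is a minimal plain-Python sketch of that textbook procedure, not a numerically tuned implementation; the function name and the test matrix are chosen here for illustration.

```python
import math

def cholesky(A):
    """Lower-triangular L with A = L L^T, for a symmetric positive definite A."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            # Part of entry (i, j) already explained by earlier columns of L.
            partial = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                pivot = A[i][i] - partial
                if pivot <= 0:
                    raise ValueError("matrix is not positive definite")
                L[i][j] = math.sqrt(pivot)
            else:
                L[i][j] = (A[i][j] - partial) / L[j][j]
    return L

A = [[4.0, 12.0, -16.0],
     [12.0, 37.0, -43.0],
     [-16.0, -43.0, 98.0]]
L = cholesky(A)

# Rebuild A from the factor to confirm the decomposition.
rebuilt = [[sum(L[i][k] * L[j][k] for k in range(3)) for j in range(3)] for i in range(3)]
```

The positivity check on the pivot is where the positive definiteness assumption is used; a non-positive pivot means no real factor with positive diagonal exists.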

LDL Decomposition

LDL decomposition is a closely related variant of the classical Cholesky decomposition.

A symmetric, positive definite matrix \(\bm A\) can be factorized into a product

\[ \bm A=\bm L\bm D\bm L^T, \]

where \(L\) is a lower unit triangular (unitriangular) matrix, and \(D\) is a diagonal matrix. That is, the diagonal elements of L are required to be \(1\) s at the cost of introducing an additional diagonal matrix D in the decomposition. The main advantage is that the LDL decomposition can be computed and used with essentially the same algorithms, but avoids extracting square roots.

The LDL decomposition is related to the classical Cholesky decomposition of the form \(\bm L\bm L^T\) as follows:

\[ \bm A=\bm L\bm D\bm L^T=(\bm L\bm D^{1/2})(\bm L\bm D^{1/2})^T, \]

so the Cholesky factor is \(\bm L\bm D^{1/2}\) .

Cholesky decomposition and LDL decomposition can be applied on complex numbers by replacing transpose operations with conjugate transpose (Hermitian transpose) operations.

Polar Decomposition

For any real square matrix \(A\) ,

\[A=UP,\]

where \(U\) is orthogonal and \(P\) is symmetric positive semi-definite. For any complex square matrix \(A\) ,

\[A=UP,\]

where \(U\) is unitary and \(P\) is Hermitian positive semi-definite.

If \(A\) is invertible, then the decomposition is unique.

The decomposition can also be defined as \(A=PU\) , where \(P\) is a symmetric positive semi-definite matrix (in general different from the \(P\) above) and \(U\) is an orthogonal matrix.
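Both forms can be read off from a singular value decomposition. As a sketch, write \(A=W\Sigma V^H\) with \(W, V\) unitary (the letter \(W\) is used here only to avoid a clash with the polar factor \(U\) ). Inserting \(V^HV=I\) or \(W^HW=I\) gives

\[A=\left(WV^H\right)\left(V\Sigma V^H\right)=\left(W\Sigma W^H\right)\left(WV^H\right),\]

so one may take \(U=WV^H\) , with \(P=V\Sigma V^H\) for the form \(A=UP\) and \(P=W\Sigma W^H\) for the form \(A=PU\) . Both choices of \(P\) are Hermitian positive semi-definite because \(\Sigma\) is diagonal with nonnegative entries.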

QR Decomposition (QR factorization)

For any given square matrix \(A\in F^{n\times n}\) ( \(F\) is either \(\cnums\) or \(\R\) ),

\[A=QR,\]

where \(Q\in F^{n\times n}\) is unitary ( \(Q^H=Q^{-1}\) ) for \(F=\cnums\) or orthogonal ( \(Q^T=Q^{-1}\) ) for \(F=\R\) , and \(R\in F^{n\times n}\) is upper triangular (right triangular).

If \(A\) is invertible and the diagonal elements of \(R\) are required to be positive, then the factorization is unique.
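For a real invertible matrix, one way to obtain the factorization with positive diagonal in \(R\) is to orthonormalize the columns of \(A\) . The sketch below uses modified Gram-Schmidt in pure Python; it is meant as an illustration (the function name `qr_gram_schmidt` is made up here), not as a numerically robust routine such as Householder-based QR.

```python
import math


def qr_gram_schmidt(a):
    """QR factorization of an invertible real n x n matrix (list of rows).

    Returns (Q, R) with Q orthogonal and R upper triangular
    with positive diagonal entries.
    """
    n = len(a)
    cols = [[a[i][j] for i in range(n)] for j in range(n)]  # columns of a
    q_cols = []
    R = [[0.0] * n for _ in range(n)]
    for j in range(n):
        w = cols[j][:]
        # Remove the components along the previously built orthonormal vectors.
        for i, q in enumerate(q_cols):
            R[i][j] = sum(qk * wk for qk, wk in zip(q, w))
            w = [wk - R[i][j] * qk for qk, wk in zip(q, w)]
        R[j][j] = math.sqrt(sum(wk * wk for wk in w))  # > 0 if a is invertible
        q_cols.append([wk / R[j][j] for wk in w])
    Q = [[q_cols[j][i] for j in range(n)] for i in range(n)]
    return Q, R


A = [[3.0, 1.0],
     [4.0, 2.0]]
Q, R = qr_gram_schmidt(A)
print(Q)
print(R)
```

In this example the first column \((3, 4)\) has norm 5, so \(R_{11}=5\) and the first column of \(Q\) is \((0.6, 0.8)\) .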

Analogously, we can define QL, LQ, RQ decompositions, with L being a lower triangular matrix.

QR decomposition for rectangular (general-shaped) matrices:

For any given matrix \(A\in\cnums^{m\times n}\) ,

...

Schur Decomposition (Schur form)

For any given square complex matrix \(A\in\cnums^{n\times n}\) , it can be factorized as

\[A=URU^H,\]

where \(U\) is a unitary matrix ( \(U^H=U^{-1}\) ) and \(R\) is an upper triangular matrix whose diagonal entries are the eigenvalues of \(A\) .

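For real matrices, that is, in \(M_n(\R)\) (or \(\R^{n\times n}\) ), the statement is false in general if \(U\) and \(R\) are also required to be real: a real matrix may have non-real eigenvalues, and these cannot appear on the diagonal of a real triangular matrix.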
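A small example of the obstruction, given here for illustration: the rotation matrix

\[A=\begin{bmatrix}0 & -1\\ 1 & 0\end{bmatrix}\]

has characteristic polynomial \(x^2+1\) and hence eigenvalues \(\pm\mathrm{i}\) . If \(A=QRQ^T\) held with \(Q\) real orthogonal and \(R\) real upper triangular, the diagonal of \(R\) would consist of real eigenvalues of \(A\) , but \(A\) has none. Over \(\R\) one instead obtains the real Schur form, in which \(R\) is block upper triangular with \(1\times 1\) and \(2\times 2\) diagonal blocks.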

[Theorem] Different upper triangular matrices in \(\cnums^{n\times n}\) with the same diagonal entries can be unitarily similar (so the Schur form of a matrix is not unique).

[Theorem] (Cayley-Hamilton theorem) Let \(F\) be any field, \(A\in F^{n\times n}\) be a square matrix, and \(p_A(x)\in F[x]\) be the characteristic polynomial of \(A\) . Then \(p_A(A)=0_{n\times n}\in F^{n\times n}\) .

Applications:

- Writing powers \(A^k\) of \(A\in F^{n\times n}\) for \(k\ge n\) as a linear combination of \(I, A, A^2, \dots, A^{n-1}\) .
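The reduction of powers can be sketched for the simplest case \(n=2\) , where the characteristic polynomial is \(x^2-\mathrm{tr}(A)\,x+\det(A)\) and the theorem gives \(A^2=\mathrm{tr}(A)\,A-\det(A)\,I\) . The helper names below (`matmul2`, `power_coefficients`) are made up for this illustration, and the code handles \(2\times 2\) matrices only.

```python
def matmul2(x, y):
    """Multiply two 2x2 matrices given as nested lists."""
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def power_coefficients(a, k):
    """Return (c0, c1) such that A^k = c0*I + c1*A for a 2x2 matrix A."""
    t = a[0][0] + a[1][1]                      # trace
    d = a[0][0] * a[1][1] - a[0][1] * a[1][0]  # determinant
    c0, c1 = 1, 0                              # A^0 = I
    for _ in range(k):
        # (c0*I + c1*A) * A = c0*A + c1*A^2, and A^2 = t*A - d*I
        c0, c1 = -d * c1, c0 + t * c1
    return c0, c1


A = [[1, 2], [3, 4]]
c0, c1 = power_coefficients(A, 5)
A5_reduced = [[c0 * (i == j) + c1 * A[i][j] for j in range(2)] for i in range(2)]

# Direct repeated multiplication, for comparison.
A5_direct = [[1, 0], [0, 1]]
for _ in range(5):
    A5_direct = matmul2(A5_direct, A)

print(c0, c1)                    # 290 779
print(A5_reduced == A5_direct)   # True
```

Only two scalar coefficients are updated per step, instead of a full matrix product.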

[Theorem] Let \(A\in F^{n\times n}, B\in F^{m\times m}, X\in F^{n\times m}\) . If \(AX-XB=0\) , then \(g(A)X-X g(B)=0\) for any polynomial \(g(t)\) .

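Proof: From \(AX=XB\) it follows by induction that \(A^kX=A^{k-1}XB=\dots=XB^k\) for every \(k\ge 0\) . Writing \(g(t)=\sum_k c_k t^k\) and taking the corresponding linear combination gives \(g(A)X=\sum_k c_k A^kX=\sum_k c_k XB^k=Xg(B)\) .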

[Theorem] Let \(A\in F^{n\times n}, B\in F^{m\times m}\) be given, \(F\in\{\R, \cnums\}\) . The equation \(AX-XB=C\) has a unique solution \(X\in F^{n\times m}\) for each given \(C\in F^{n\times m}\) if and only if \(\lambda(A) \cap \lambda(B)=\varnothing\) , that is, \(A\) and \(B\) have no eigenvalue in common.

In particular, if \(A\) and \(B\) have no eigenvalue in common, that is, \(\lambda(A) \cap \lambda(B)=\varnothing\) , then the only solution of \(AX-XB=0\) is \(X=0\) .
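One way to see where the eigenvalue condition comes from is to vectorize the equation. This is a sketch that uses the column-stacking operator \(\mathrm{vec}\) and the Kronecker product \(\otimes\) , neither of which is introduced in the text above:

\[\left(I_m\otimes A-B^T\otimes I_n\right)\mathrm{vec}(X)=\mathrm{vec}(C).\]

The eigenvalues of the \(nm\times nm\) coefficient matrix are the differences \(\lambda_i-\mu_j\) with \(\lambda_i\in\lambda(A)\) and \(\mu_j\in\lambda(B)\) , so the linear system has a unique solution for every right-hand side exactly when no such difference is zero.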

CS Decomposition

...

Toeplitz Decomposition

For any given complex square matrix \(A\in M_n(\cnums)\) , it can be uniquely written as

\[A=H_1+\mathrm{i}H_2,\]

where \(H_1, H_2\) are Hermitian.

For any given complex square matrix \(A\in M_n(\cnums)\) , it can be uniquely written as

\[A=H+K,\]

where \(H\) is Hermitian and \(K\) is skew-Hermitian.
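The Hermitian parts can be written explicitly as \(H_1=\frac{1}{2}(A+A^H)\) and \(H_2=\frac{1}{2\mathrm{i}}(A-A^H)\) , with \(H=H_1\) and \(K=\mathrm{i}H_2\) . A minimal pure-Python sketch using built-in complex numbers follows; the function names and the example matrix are illustrative assumptions.

```python
def conj_transpose(a):
    """Return the conjugate transpose A^H of a square matrix (list of rows)."""
    n = len(a)
    return [[a[j][i].conjugate() for j in range(n)] for i in range(n)]


def toeplitz_decomposition(a):
    """Return Hermitian (H1, H2) with a = H1 + 1j * H2."""
    n = len(a)
    ah = conj_transpose(a)
    h1 = [[(a[i][j] + ah[i][j]) / 2 for j in range(n)] for i in range(n)]
    h2 = [[(a[i][j] - ah[i][j]) / 2j for j in range(n)] for i in range(n)]
    return h1, h2


A = [[1 + 2j, 3 + 0j],
     [4j, 5 - 1j]]
H1, H2 = toeplitz_decomposition(A)
print(H1)
print(H2)
```

Here `H1` is the Hermitian part and `1j * H2` is the skew-Hermitian part of `A`.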

Affine transformation

An affine transformation is a composition of two functions: a linear map followed by a translation, for example \(\bm x\mapsto\bm W^T\bm x+\bm c\) . Ordinary vector algebra uses matrix multiplication to represent linear maps, and vector addition to represent translations.
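As a small illustration of the map \(\bm x\mapsto\bm W^T\bm x+\bm c\) , the pure-Python sketch below applies the linear part and then the translation. The function name `affine` and the numbers are chosen here for illustration.

```python
def affine(W, c, x):
    """Return W^T x + c.

    W is an n x m matrix (list of n rows), x has length n, c has length m.
    """
    n, m = len(W), len(W[0])
    linear = [sum(W[i][j] * x[i] for i in range(n)) for j in range(m)]  # W^T x
    return [lj + cj for lj, cj in zip(linear, c)]                       # + c


W = [[1, 2],
     [3, 4]]
c = [10, 20]

print(affine(W, c, [1, 1]))  # [14, 26]
print(affine(W, c, [0, 0]))  # [10, 20]
```

The second call shows why an affine map with \(\bm c\ne\bm 0\) is not linear: it does not send the zero vector to the zero vector.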

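Linear regression, logistic regression, and convex optimization problems in general converge to the global optimum from any initial parameters (in theory; numerical issues may arise in practice). Non-convex optimization based on stochastic gradient descent (SGD) is sensitive to the initial parameters; for feedforward neural networks, the initial weights are usually set to small random values (the biases may be set to 0).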

Logistic sigmoid function:

\[\sigma(x)=\frac{1}{1+e^{-x}}\]
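A minimal sketch of the logistic sigmoid \(\sigma(x)=1/(1+e^{-x})\) in pure Python. The split into two branches is a design choice made here so that `math.exp` is only ever called with a non-positive argument and cannot overflow for large \(|x|\) .

```python
import math


def sigmoid(x):
    """Logistic sigmoid 1 / (1 + exp(-x)), evaluated without overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # x < 0, so z is in (0, 1)
    return z / (1.0 + z)


print(sigmoid(0.0))    # 0.5
print(sigmoid(2.0) + sigmoid(-2.0))
print(sigmoid(-1000.0), sigmoid(1000.0))
```

The second line illustrates the symmetry \(\sigma(x)+\sigma(-x)=1\) , up to rounding.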

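The distance from a vector \(\bm x\) to the hyperplane \(\bm w^T \bm x + b=0\) defined by the affine function \(\bm w^T \bm x + b\) is:

\[d=\frac{\left|\bm w^T\bm x+b\right|}{\lVert\bm w\rVert_2}\]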
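Assuming the usual Euclidean distance \(|\bm w^T\bm x+b|/\lVert\bm w\rVert_2\) from a point to the hyperplane \(\bm w^T\bm x+b=0\) , the computation can be sketched in pure Python as follows (the function name is made up for this example):

```python
import math


def distance_to_hyperplane(w, b, x):
    """Euclidean distance from point x to the hyperplane w^T x + b = 0."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    if norm == 0.0:
        raise ValueError("w must be nonzero to define a hyperplane")
    return abs(sum(wi * xi for wi, xi in zip(w, x)) + b) / norm


w, b = [3.0, 4.0], -5.0
print(distance_to_hyperplane(w, b, [3.0, 4.0]))  # 4.0
print(distance_to_hyperplane(w, b, [0.6, 0.8]))  # 0.0 up to rounding: the point lies on the hyperplane
```

Dropping the absolute value gives a signed distance whose sign indicates on which side of the hyperplane the point lies.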

Tensor Decomposition

higher-order SVD, CP decomposition, Tucker decomposition
