WebRTC Native Development in Practice: Data Capture from the Camera

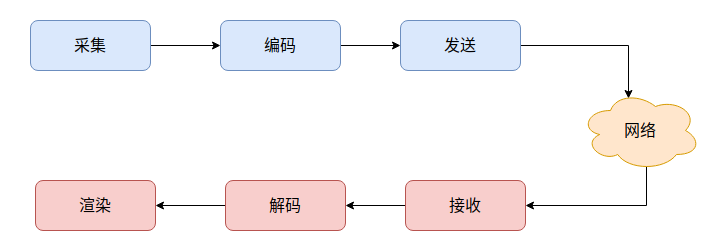

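1. Main steps of real-time audio/video development

2. Data capture

Audio is captured mainly from the microphone.

Video has two main capture sources: 1. the camera; 2. the screen.

This article starts with how to capture camera data.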

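2.1 Environment

I am using Ubuntu here, so a few details differ from Windows, but on either platform the peerconnection example under examples is an easy reference to follow.

My machine has no camera, so I again simulate one on Ubuntu with v4l2loopback and ffmpeg.

2.2 Getting device information

Before capturing from a camera, you first need to know which cameras are available.

In WebRTC, devices can be enumerated with webrtc::VideoCaptureModule::DeviceInfo: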

std::unique_ptr<webrtc::VideoCaptureModule::DeviceInfo> info(

webrtc::VideoCaptureFactory::CreateDeviceInfo());

if (!info) {

RTC_LOG(LERROR) << "CreateDeviceInfo failed";

return -1;

}

int num_devices = info->NumberOfDevices();

for (int i = 0; i < num_devices; ++i) {

// Create a capture object using index i

}

On Linux, devices are enumerated by the NumberOfDevices function:

uint32_t DeviceInfoLinux::NumberOfDevices() {

RTC_LOG(LS_INFO) << __FUNCTION__;

uint32_t count = 0;

char device[20];

int fd = -1;

struct v4l2_capability cap;

/* detect /dev/video [0-63]VideoCaptureModule entries */

for (int n = 0; n < 64; n++) {

sprintf(device, "/dev/video%d", n);

if ((fd = open(device, O_RDONLY)) != -1) {

// query device capabilities and make sure this is a video capture device

if (ioctl(fd, VIDIOC_QUERYCAP, &cap) < 0 ||

!(cap.device_caps & V4L2_CAP_VIDEO_CAPTURE)) {

close(fd);

continue;

}

close(fd);

count++;

}

}

return count;

}

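It is simple: it probes /dev/video0 through /dev/video63 and counts every node that can be opened and reports the V4L2_CAP_VIDEO_CAPTURE capability.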
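The enumeration loop can be sketched on its own, without V4L2, to make the logic easy to test. This is only an illustration: DevicePath and CountCaptureDevices are hypothetical names, not part of WebRTC, and the probe callback stands in for the open() + ioctl(VIDIOC_QUERYCAP) check shown above. The sketch also uses snprintf instead of sprintf so the write into the buffer is bounded.

```cpp
#include <cassert>
#include <cstdio>
#include <functional>
#include <string>

// Hypothetical helper: builds "/dev/videoN" with a bounded write.
std::string DevicePath(int n) {
  assert(n >= 0);
  char buf[32];
  std::snprintf(buf, sizeof(buf), "/dev/video%d", n);
  return std::string(buf);
}

// Counts the nodes /dev/video0 .. /dev/video(max_nodes - 1) for which
// `probe` returns true. In the real code the probe is
// open() followed by ioctl(fd, VIDIOC_QUERYCAP, &cap).
int CountCaptureDevices(const std::function<bool(const std::string&)>& probe,
                        int max_nodes = 64) {
  int count = 0;
  for (int n = 0; n < max_nodes; ++n) {
    if (probe(DevicePath(n))) {
      ++count;
    }
  }
  return count;
}
```

With a probe that accepts only /dev/video0 and /dev/video2, CountCaptureDevices returns 2. Note that the count alone does not say which indices are valid, which is why the capture code later resolves names through GetDeviceName.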

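2.3 Implementing the Sink

First, let's confirm a few concepts again:

- In a streaming media system, the component that produces data is called the Source and the component that receives data is called the Sink. WebRTC abstracts them as VideoSourceInterface and VideoSinkInterface. The two are relative concepts: the same abstraction may be a Sink toward the lower layer and a Source toward the upper layer.

- To provide video data, implement VideoSourceInterface. This interface exposes AddOrUpdateSink, which registers a Sink with the Source.

- To receive video data, implement VideoSinkInterface. This interface exposes OnFrame. Once a Sink has been registered with a Source through AddOrUpdateSink, the Source delivers data to the Sink through OnFrame.

When capturing from a camera, VideoCapture is both a VideoSinkInterface and a VideoSourceInterface.

So we can write rough camera-capture code modeled on the peerconnection client example (examples/peerconnection/client).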
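Before the real code, the Source/Sink relationship can be sketched in plain C++ with no WebRTC dependency. Everything here is a simplified stand-in: Frame, Sink, Source, Capturer and CountingSink are hypothetical names, and the real AddOrUpdateSink also takes an rtc::VideoSinkWants argument that this sketch omits.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical stand-in for webrtc::VideoFrame.
struct Frame {
  int width;
  int height;
};

// Simplified analogue of rtc::VideoSinkInterface: receives frames.
class Sink {
 public:
  virtual ~Sink() = default;
  virtual void OnFrame(const Frame& frame) = 0;
};

// Simplified analogue of rtc::VideoSourceInterface: sinks register here.
class Source {
 public:
  virtual ~Source() = default;
  virtual void AddOrUpdateSink(Sink* sink) = 0;
  virtual void RemoveSink(Sink* sink) = 0;
};

// A capturer is a Sink toward the device and a Source toward upper layers.
class Capturer : public Sink, public Source {
 public:
  void AddOrUpdateSink(Sink* sink) override {
    assert(sink != nullptr);
    // Registering the same sink twice must not duplicate delivery.
    if (std::find(sinks_.begin(), sinks_.end(), sink) == sinks_.end()) {
      sinks_.push_back(sink);
    }
  }

  void RemoveSink(Sink* sink) override {
    sinks_.erase(std::remove(sinks_.begin(), sinks_.end(), sink),
                 sinks_.end());
  }

  // Called by the lower layer; forwards the frame to every registered sink.
  void OnFrame(const Frame& frame) override {
    for (Sink* sink : sinks_) {
      sink->OnFrame(frame);
    }
  }

 private:
  std::vector<Sink*> sinks_;  // Not owned; not thread-safe in this sketch.
};

// Example sink that only counts the frames it receives.
class CountingSink : public Sink {
 public:
  void OnFrame(const Frame&) override { ++frames_; }
  int frames() const { return frames_; }

 private:
  int frames_ = 0;
};
```

Registering a CountingSink on a Capturer and then calling Capturer::OnFrame once delivers exactly one frame to it. The real code below delegates this fan-out to rtc::VideoBroadcaster rather than keeping its own list.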

First, implement VideoSinkInterface:

// vcm_capturer_test.h

#ifndef EXAMPLES_VIDEO_CAPTURE_VCM_CAPTURER_TEST_H_

#define EXAMPLES_VIDEO_CAPTURE_VCM_CAPTURER_TEST_H_

#include <memory>

#include "modules/video_capture/video_capture.h"

#include "examples/video_capture/video_capturer_test.h"

namespace webrtc_demo {

class VcmCapturerTest : public VideoCapturerTest,

public rtc::VideoSinkInterface<webrtc::VideoFrame> {

public:

static VcmCapturerTest* Create(size_t width,

size_t height,

size_t target_fps,

size_t capture_device_index);

virtual ~VcmCapturerTest();

void OnFrame(const webrtc::VideoFrame& frame) override;

private:

VcmCapturerTest();

bool Init(size_t width,

size_t height,

size_t target_fps,

size_t capture_device_index);

void Destroy();

rtc::scoped_refptr<webrtc::VideoCaptureModule> vcm_;

webrtc::VideoCaptureCapability capability_;

};

} // namespace webrtc_demo

#endif // EXAMPLES_VIDEO_CAPTURE_VCM_CAPTURER_TEST_H_

// vcm_capturer_test.cc

#include "examples/video_capture/vcm_capturer_test.h"

#include <chrono>

#include "modules/video_capture/video_capture_factory.h"

#include "rtc_base/logging.h"

namespace webrtc_demo {

VcmCapturerTest::VcmCapturerTest() : vcm_(nullptr) {}

VcmCapturerTest::~VcmCapturerTest() {

Destroy();

}

bool VcmCapturerTest::Init(size_t width,

size_t height,

size_t target_fps,

size_t capture_device_index) {

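std::unique_ptr<webrtc::VideoCaptureModule::DeviceInfo> device_info(

webrtc::VideoCaptureFactory::CreateDeviceInfo());

// CreateDeviceInfo may fail; do not dereference a null pointer.

if (!device_info) {

return false;

}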

char device_name[256];

char unique_name[256];

if (device_info->GetDeviceName(static_cast<uint32_t>(capture_device_index),

device_name, sizeof(device_name), unique_name,

sizeof(unique_name)) != 0) {

Destroy();

return false;

}

vcm_ = webrtc::VideoCaptureFactory::Create(unique_name);

if (!vcm_) {

return false;

}

vcm_->RegisterCaptureDataCallback(this);

device_info->GetCapability(vcm_->CurrentDeviceName(), 0, capability_);

capability_.width = static_cast<int32_t>(width);

capability_.height = static_cast<int32_t>(height);

capability_.maxFPS = static_cast<int32_t>(target_fps);

capability_.videoType = webrtc::VideoType::kI420;

if (vcm_->StartCapture(capability_) != 0) {

Destroy();

return false;

}

RTC_CHECK(vcm_->CaptureStarted());

return true;

}

VcmCapturerTest* VcmCapturerTest::Create(size_t width,

size_t height,

size_t target_fps,

size_t capture_device_index) {

std::unique_ptr<VcmCapturerTest> vcm_capturer(new VcmCapturerTest());

if (!vcm_capturer->Init(width, height, target_fps, capture_device_index)) {

RTC_LOG(LS_WARNING) << "Failed to create VcmCapturer(w = " << width

<< ", h = " << height << ", fps = " << target_fps

<< ")";

return nullptr;

}

return vcm_capturer.release();

}

void VcmCapturerTest::Destroy() {

if (!vcm_)

return;

vcm_->StopCapture();

vcm_->DeRegisterCaptureDataCallback();

// Release reference to VCM.

vcm_ = nullptr;

}

void VcmCapturerTest::OnFrame(const webrtc::VideoFrame& frame) {

static auto timestamp = std::chrono::duration_cast<std::chrono::milliseconds>(

std::chrono::system_clock::now().time_since_epoch()).count();

static size_t cnt = 0;

RTC_LOG(LS_INFO) << "OnFrame";

VideoCapturerTest::OnFrame(frame);

cnt++;

auto timestamp_curr = std::chrono::duration_cast<std::chrono::milliseconds>(

std::chrono::system_clock::now().time_since_epoch()).count();

if(timestamp_curr - timestamp > 1000) {

RTC_LOG(LS_INFO) << "FPS: " << cnt;

cnt = 0;

timestamp = timestamp_curr;

}

}

} // namespace webrtc_demo
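The FPS logging in OnFrame relies on function-local statics and on system_clock, which can jump when the wall clock is adjusted. As an alternative, here is a minimal sketch of a small per-instance counter. FpsCounter is a hypothetical helper, not part of the demo or of WebRTC, and the timestamp is passed in by the caller so the logic can be tested without a real clock.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: counts frames per window of `window_ms` milliseconds.
class FpsCounter {
 public:
  explicit FpsCounter(int64_t window_ms = 1000) : window_ms_(window_ms) {
    assert(window_ms > 0);
  }

  // Records one frame at time `now_ms`. Returns the number of frames counted
  // in the window that just closed, or -1 while the window is still open.
  int OnFrame(int64_t now_ms) {
    if (!started_) {
      started_ = true;
      window_start_ms_ = now_ms;
    }
    ++count_;
    if (now_ms - window_start_ms_ >= window_ms_) {
      const int result = count_;
      count_ = 0;
      window_start_ms_ = now_ms;
      return result;
    }
    return -1;
  }

 private:
  const int64_t window_ms_;
  int64_t window_start_ms_ = 0;
  int count_ = 0;
  bool started_ = false;
};
```

Inside a sink's OnFrame, the timestamp could come from std::chrono::steady_clock converted to milliseconds, and the result would be logged only when it is not -1. Because the state lives in the object, two capturers running at the same time keep separate counts.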

2.4 Implementing the Source

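// video_capturer_test.h

#ifndef EXAMPLES_VIDEO_CAPTURE_VIDEO_CAPTURER_TEST_H_

#define EXAMPLES_VIDEO_CAPTURE_VIDEO_CAPTURER_TEST_H_

#include <memory>

#include <mutex>

#include "api/video/video_frame.h"

#include "api/video/video_source_interface.h"

#include "media/base/video_adapter.h"

#include "media/base/video_broadcaster.h"

namespace webrtc_demo {

class VideoCapturerTest : public rtc::VideoSourceInterface<webrtc::VideoFrame> {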

public:

class FramePreprocessor {

public:

virtual ~FramePreprocessor() = default;

virtual webrtc::VideoFrame Preprocess(const webrtc::VideoFrame& frame) = 0;

};

public:

~VideoCapturerTest() override;

void AddOrUpdateSink(rtc::VideoSinkInterface<webrtc::VideoFrame>* sink,

const rtc::VideoSinkWants& wants) override;

void RemoveSink(rtc::VideoSinkInterface<webrtc::VideoFrame>* sink) override;

void SetFramePreprocessor(std::unique_ptr<FramePreprocessor> preprocessor) {

std::lock_guard<std::mutex> lock(mutex_);

preprocessor_ = std::move(preprocessor);

}

protected:

void OnFrame(const webrtc::VideoFrame& frame);

rtc::VideoSinkWants GetSinkWants();

private:

void UpdateVideoAdapter();

webrtc::VideoFrame MaybePreprocess(const webrtc::VideoFrame& frame);

private:

std::unique_ptr<FramePreprocessor> preprocessor_;

std::mutex mutex_;

rtc::VideoBroadcaster broadcaster_;

cricket::VideoAdapter video_adapter_;

};

} // namespace webrtc_demo

#endif // EXAMPLES_VIDEO_CAPTURE_VIDEO_CAPTURER_TEST_H_

// video_capturer_test.cc

#include "examples/video_capture/video_capturer_test.h"

#include "api/video/i420_buffer.h"

#include "api/video/video_rotation.h"

#include "rtc_base/logging.h"

namespace webrtc_demo {

VideoCapturerTest::~VideoCapturerTest() = default;

void VideoCapturerTest::OnFrame(const webrtc::VideoFrame& original_frame) {

int cropped_width = 0;

int cropped_height = 0;

int out_width = 0;

int out_height = 0;

webrtc::VideoFrame frame = MaybePreprocess(original_frame);

if (!video_adapter_.AdaptFrameResolution(

frame.width(), frame.height(), frame.timestamp_us() * 1000,

&cropped_width, &cropped_height, &out_width, &out_height)) {

// Drop frame in order to respect frame rate constraint.

return;

}

if (out_height != frame.height() || out_width != frame.width()) {

// Video adapter has requested a down-scale. Allocate a new buffer and

// return scaled version.

// For simplicity, only scale here without cropping.

rtc::scoped_refptr<webrtc::I420Buffer> scaled_buffer =

webrtc::I420Buffer::Create(out_width, out_height);

scaled_buffer->ScaleFrom(*frame.video_frame_buffer()->ToI420());

webrtc::VideoFrame::Builder new_frame_builder =

webrtc::VideoFrame::Builder()

.set_video_frame_buffer(scaled_buffer)

.set_rotation(webrtc::kVideoRotation_0)

.set_timestamp_us(frame.timestamp_us())

.set_id(frame.id());

if (frame.has_update_rect()) {

webrtc::VideoFrame::UpdateRect new_rect =

frame.update_rect().ScaleWithFrame(frame.width(), frame.height(), 0,

0, frame.width(), frame.height(),

out_width, out_height);

new_frame_builder.set_update_rect(new_rect);

}

broadcaster_.OnFrame(new_frame_builder.build());

} else {

// No adaptations needed, just return the frame as is.

broadcaster_.OnFrame(frame);

}

}

rtc::VideoSinkWants VideoCapturerTest::GetSinkWants() {

return broadcaster_.wants();

}

void VideoCapturerTest::AddOrUpdateSink(

rtc::VideoSinkInterface<webrtc::VideoFrame>* sink,

const rtc::VideoSinkWants& wants) {

broadcaster_.AddOrUpdateSink(sink, wants);

UpdateVideoAdapter();

}

void VideoCapturerTest::RemoveSink(

rtc::VideoSinkInterface<webrtc::VideoFrame>* sink) {

broadcaster_.RemoveSink(sink);

UpdateVideoAdapter();

}

void VideoCapturerTest::UpdateVideoAdapter() {

video_adapter_.OnSinkWants(broadcaster_.wants());

}

webrtc::VideoFrame VideoCapturerTest::MaybePreprocess(

const webrtc::VideoFrame& frame) {

std::lock_guard<std::mutex> lock(mutex_);

if (preprocessor_ != nullptr) {

return preprocessor_->Preprocess(frame);

} else {

return frame;

}

}

} // namespace webrtc_demo
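The optional preprocessing hook used by MaybePreprocess can also be sketched in isolation. This is a simplified model under stated assumptions: Frame, HalveSize and Pipeline are hypothetical names, and Frame carries only a width and height instead of real pixel data. It shows the two pieces that matter: the mutex guards both installing and using the preprocessor, and a missing preprocessor means the frame passes through unchanged.

```cpp
#include <cassert>
#include <memory>
#include <mutex>
#include <utility>

// Hypothetical stand-in for webrtc::VideoFrame.
struct Frame {
  int width;
  int height;
};

// Same shape as the FramePreprocessor interface in the header above.
class FramePreprocessor {
 public:
  virtual ~FramePreprocessor() = default;
  virtual Frame Preprocess(const Frame& frame) = 0;
};

// Example preprocessor: halves both dimensions.
class HalveSize : public FramePreprocessor {
 public:
  Frame Preprocess(const Frame& frame) override {
    assert(frame.width >= 0 && frame.height >= 0);
    return Frame{frame.width / 2, frame.height / 2};
  }
};

class Pipeline {
 public:
  // Installs, replaces, or (with nullptr) removes the preprocessor.
  void SetFramePreprocessor(std::unique_ptr<FramePreprocessor> preprocessor) {
    std::lock_guard<std::mutex> lock(mutex_);
    preprocessor_ = std::move(preprocessor);
  }

  // Returns the preprocessed frame, or the input frame if no hook is set.
  Frame MaybePreprocess(const Frame& frame) {
    std::lock_guard<std::mutex> lock(mutex_);
    return preprocessor_ ? preprocessor_->Preprocess(frame) : frame;
  }

 private:
  std::mutex mutex_;
  std::unique_ptr<FramePreprocessor> preprocessor_;
};
```

Holding the lock while Preprocess runs keeps the hook from being destroyed mid-call, at the cost of blocking SetFramePreprocessor for the duration of one frame.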

2.5 The main function

The complete main function:

#include "modules/video_capture/video_capture_factory.h"

#include "rtc_base/logging.h"

#include "examples/video_capture/vcm_capturer_test.h"

#include "test/video_renderer.h"

#include <chrono>

#include <memory>

#include <thread>

int main() {

const size_t kWidth = 1920;

const size_t kHeight = 1080;

const size_t kFps = 30;

std::unique_ptr<webrtc_demo::VcmCapturerTest> capturer;

std::unique_ptr<webrtc::VideoCaptureModule::DeviceInfo> info(

webrtc::VideoCaptureFactory::CreateDeviceInfo());

std::unique_ptr<webrtc::test::VideoRenderer> renderer;

if (!info) {

RTC_LOG(LERROR) << "CreateDeviceInfo failed";

return -1;

}

int num_devices = info->NumberOfDevices();

for (int i = 0; i < num_devices; ++i) {

capturer.reset(

webrtc_demo::VcmCapturerTest::Create(kWidth, kHeight, kFps, i));

if (capturer) {

break;

}

}

if (!capturer) {

RTC_LOG(LERROR) << "Cannot find an available video device";

return -1;

}

renderer.reset(webrtc::test::VideoRenderer::Create("Camera", kWidth, kHeight));

capturer->AddOrUpdateSink(renderer.get(), rtc::VideoSinkWants());

std::this_thread::sleep_for(std::chrono::seconds(30));

capturer->RemoveSink(renderer.get());

RTC_LOG(LS_WARNING) << "Demo exit";

return 0;

}

Here the ready-made webrtc::test::VideoRenderer serves as the rendering side. If you read its code, webrtc::test::VideoRenderer implements rtc::VideoSinkInterface, so it can be handed directly to our own VideoCapturerTest class through AddOrUpdateSink, because the VideoCapturerTest we expose to the upper layer is an rtc::VideoSourceInterface implementation.

Demo code branch: https://github.com/243286065/webrtc-cpp-demo/tree/496545116dd6d44c10a0c9b96f1420f54b540abb

Commit diff: https://github.com/243286065/webrtc-cpp-demo/commit/496545116dd6d44c10a0c9b96f1420f54b540abb

3. VideoCaptureFactory

Using webrtc::test::VideoRenderer as the rendering side in the example above is a convenient shortcut. Implementing your own is also straightforward; just model it on src/test/video_renderer.h:

// src/test/video_renderer.h

/*

* Copyright (c) 2013 The WebRTC project authors. All Rights Reserved.

*

* Use of this source code is governed by a BSD-style license

* that can be found in the LICENSE file in the root of the source

* tree. An additional intellectual property rights grant can be found

* in the file PATENTS. All contributing project authors may

* be found in the AUTHORS file in the root of the source tree.

*/

#ifndef TEST_VIDEO_RENDERER_H_

#define TEST_VIDEO_RENDERER_H_

#include <stddef.h>

#include "api/video/video_sink_interface.h"

namespace webrtc {

class VideoFrame;

namespace test {

class VideoRenderer : public rtc::VideoSinkInterface<VideoFrame> {

public:

// Creates a platform-specific renderer if possible, or a null implementation

// if failing.

static VideoRenderer* Create(const char* window_title,

size_t width,

size_t height);

// Returns a renderer rendering to a platform specific window if possible,

// NULL if none can be created.

// Creates a platform-specific renderer if possible, returns NULL if a

// platform renderer could not be created. This occurs, for instance, when

// running without an X environment on Linux.

static VideoRenderer* CreatePlatformRenderer(const char* window_title,

size_t width,

size_t height);

virtual ~VideoRenderer() {}

protected:

VideoRenderer() {}

};

} // namespace test

} // namespace webrtc

#endif // TEST_VIDEO_RENDERER_H_

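CreatePlatformRenderer, however, has to be implemented for each platform. WebRTC's default implementations are in src/test/linux/video_renderer_linux.cc and src/test/win/d3d_renderer.cc; you need to create the window yourself with GLX or D3D.

On the surface, the implementation above looks as if we wrote the capture-side source and sink ourselves. In fact it relies on a convenient set of interfaces that WebRTC itself provides, webrtc::VideoCaptureFactory: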

//video_capture_factory.h

#ifndef MODULES_VIDEO_CAPTURE_VIDEO_CAPTURE_FACTORY_H_

#define MODULES_VIDEO_CAPTURE_VIDEO_CAPTURE_FACTORY_H_

#include "api/scoped_refptr.h"

#include "modules/video_capture/video_capture.h"

#include "modules/video_capture/video_capture_defines.h"

namespace webrtc {

class VideoCaptureFactory {

public:

// Create a video capture module object

// id - unique identifier of this video capture module object.

// deviceUniqueIdUTF8 - name of the device.

// Available names can be found by using GetDeviceName

static rtc::scoped_refptr<VideoCaptureModule> Create(

const char* deviceUniqueIdUTF8);

static VideoCaptureModule::DeviceInfo* CreateDeviceInfo();

private:

~VideoCaptureFactory();

};

} // namespace webrtc

#endif // MODULES_VIDEO_CAPTURE_VIDEO_CAPTURE_FACTORY_H_

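Because WebRTC has already wrapped this set of interfaces, it is concise and cross-platform. Our VcmCapturerTest is just one more wrapper around it. For an even simpler implementation, you can capture with VideoCaptureFactory alone and then use VideoCaptureModule::RegisterCaptureDataCallback to register a webrtc::test::VideoRenderer for playback.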
