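PyTorch Notes | 小土堆 (Xiaotudui) | P16-22 | Basic Neural Network Skeleton, Convolution Layers, Pooling Layers, Non-linear Activation Layers, Normalization, Linear Layers, Sequential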

PyTorch learning resources: http://www.feiguyunai.com/index.php/2019/09/11/pytorch-char03/

————————————————————————————————————

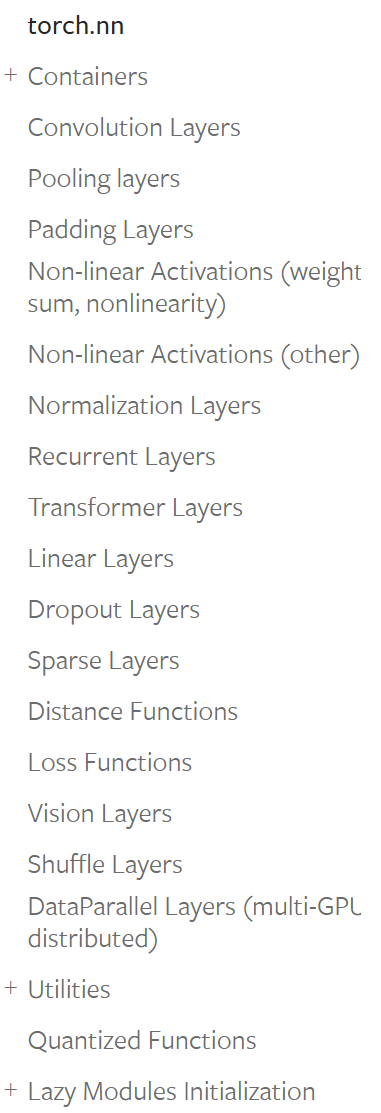

torch.nn

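Containers form the skeleton of a neural network. This group contains 6 classes, and the most commonly used one is Module, the base class for all neural network modules.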

Module

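Every neural network model (a subclass) must inherit from Module (the parent class). Module provides a template for all neural networks, which you can then customize.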

Official example:

```python
import torch.nn as nn
import torch.nn.functional as F

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.conv1 = nn.Conv2d(1, 20, 5)
        self.conv2 = nn.Conv2d(20, 20, 5)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        return F.relu(self.conv2(x))
```
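One concrete thing the Module template gives you is bookkeeping: any layer assigned as an attribute in `__init__` is registered automatically, so its weights appear in `parameters()`. The sketch below redefines the official `Model` so it runs on its own; the 1×28×28 input size is an arbitrary assumption chosen only to check shapes.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 20, 5)   # registered automatically as a submodule
        self.conv2 = nn.Conv2d(20, 20, 5)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        return F.relu(self.conv2(x))

model = Model()

# Module collects the parameters of every submodule assigned in __init__
names = [name for name, _ in model.named_parameters()]
total = sum(p.numel() for p in model.parameters())
print(names)  # ['conv1.weight', 'conv1.bias', 'conv2.weight', 'conv2.bias']
print(total)  # 20*1*5*5 + 20 + 20*20*5*5 + 20 = 10540

# Calling the module runs forward(); each 5x5 conv shrinks 28 -> 24 -> 20
x = torch.randn(1, 1, 28, 28)  # assumed input size
print(model(x).shape)  # torch.Size([1, 20, 20, 20])
```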

Xiaotudui's example:

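```python
import torch
from torch import nn

class tudui(nn.Module):
    # In PyCharm, Alt+Insert opens the Generate menu (e.g., to override methods)
    # tudui inherits from Module: define __init__ and forward, and add or change anything else as needed
    def __init__(self):
        super().__init__()  # the subclass calls the parent class's __init__
        # define here the layers that forward will use

    def forward(self, input):
        # Module.__call__ runs forward(), so the object can be called directly with the input
        output = input + 1
        return output

a = tudui()  # create an instance
input = torch.tensor(1.0)
output = a(input)  # pass the input in and get the result back
print(output)  # tensor(2.)
```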

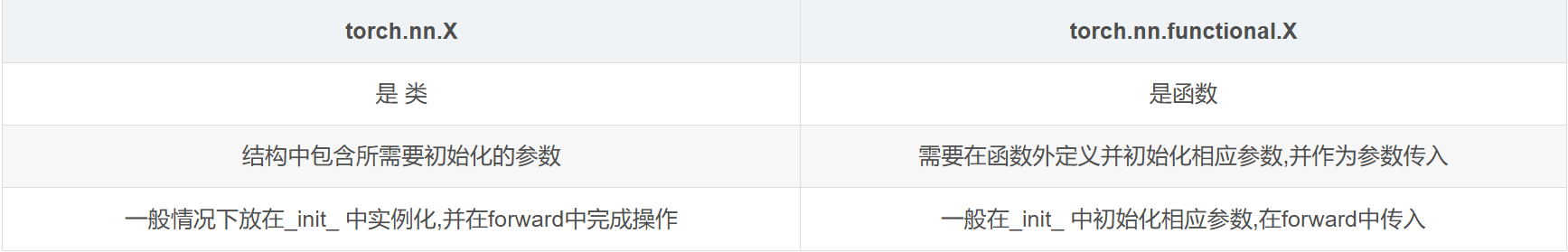

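Difference between torch.nn and torch.nn.functional:

torch.nn contains classes, while torch.nn.functional contains functions. A class such as nn.Conv2d creates and stores its own weights, whereas the corresponding function (F.conv2d) expects you to pass the weights in explicitly.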

Reference: https://blog.csdn.net/wangweiwells/article/details/100531264
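To see the class/function distinction in practice, here is a small sketch (random input and arbitrary layer sizes, chosen only for illustration) showing that `nn.Conv2d` owns its weight and bias, and that passing those same tensors to `F.conv2d` reproduces the module's output:

```python
import torch
from torch import nn
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(1, 3, 8, 8)  # arbitrary input: (minibatch, channels, H, W)

# Class version: the module creates and stores its own weight and bias
conv = nn.Conv2d(3, 6, kernel_size=3)
out_class = conv(x)

# Function version: the same weights must be passed in explicitly
out_func = F.conv2d(x, conv.weight, conv.bias)
print(torch.allclose(out_class, out_func))  # True

# Operations without weights behave the same either way
print(torch.equal(nn.ReLU()(x), F.relu(x)))  # True
```

In a model, the class form is usually preferred for layers with weights, because Module then tracks those weights for you.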

————————————————————————————————

Using conv2d in torch.nn.functional as an example, let's go through some important convolution parameters:

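```python
import torch.nn.functional as F

# input: (minibatch, in_channels, iH, iW); kernel: (out_channels, in_channels/groups, kH, kW)
output = F.conv2d(input, kernel, stride=1, padding=1)
```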

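First, the official documentation:

torch.nn.functional.conv2d(input, weight, bias=None, stride=1, padding=0, dilation=1, groups=1) → Tensor

input: must have shape (minibatch, in_channels, iH, iW)

*If it does not, reshape it first: input = torch.reshape(input, (1, 1, 32, 32))

weight: the convolution kernel, with shape (out_channels, in_channels/groups, kH, kW)

bias: optional, defaults to None

stride: defaults to 1; how many positions the kernel moves at each step

padding: defaults to 0 (no padding); otherwise, the number of rows/columns of zeros added on each side of the input

*If stride or padding is given as a single number, it applies to both H and W; you can also give separate values as (sH, sW) and (padH, padW).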
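To make these parameters concrete, here is a small sketch that convolves a hand-written 5×5 input with a 3×3 kernel (both sets of values are arbitrary, chosen for illustration) and uses `torch.reshape` to add the minibatch and channel dimensions that `F.conv2d` requires:

```python
import torch
import torch.nn.functional as F

# Arbitrary 5x5 input and 3x3 kernel, stored as float32 (convolution is normally done in floating point)
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]], dtype=torch.float32)

# Add the (minibatch, channels) dimensions: (1, 1, H, W)
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))

out1 = F.conv2d(input, kernel, stride=1)             # 3x3 output
out2 = F.conv2d(input, kernel, stride=2)             # 2x2 output: the kernel skips every other position
out3 = F.conv2d(input, kernel, stride=1, padding=1)  # 5x5 output: one ring of zeros keeps the size

print(out1)  # values 10, 12, 12 / 18, 16, 16 / 13, 9, 3
print(out2)  # values 10, 12 / 13, 3
print(out3.shape)  # torch.Size([1, 1, 5, 5])
```

Each output value is the sum of an elementwise product between the kernel and the 3×3 window under it; for the top-left window, 1·1 + 2·2 + 0·1 + 0·0 + 1·1 + 2·0 + 1·2 + 2·1 + 1·0 = 10.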

————————————————————————————————

Building on the above (Module and F.conv2d), let's learn nn.Conv2d.

Example code:

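```python
import torchvision
from torch import nn
from torch.utils.data import DataLoader

# download=True fetches CIFAR-10 into ./cifar10 if it is not there yet; don't forget the () after ToTensor
test_set = torchvision.datasets.CIFAR10(root=r'./cifar10', train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(test_set, batch_size=64)

class tudui(nn.Module):
    # tudui inherits from Module: define __init__ and forward, and add or change anything else as needed
    def __init__(self):
        super().__init__()  # the subclass calls the parent class's __init__
        # define the layers used in the forward pass here, with their parameters set
        self.conv = nn.Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, input):
        # Module.__call__ runs forward(), so the object can be called directly with the input
        output = self.conv(input)  # here the forward pass contains a single convolution layer
        return output

a = tudui()  # create an instance

for data in dataloader:  # take the data out one batch at a time
    imgs, targets = data
    output = a(imgs)  # pass the input in and get the result back
    print(output.shape)  # torch.Size([64, 6, 30, 30]) for full batches
```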

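Parameters of the nn.Conv2d class (F.conv2d, by contrast, is a function):

```python
self.conv = nn.Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)
```

in_channels: the number of channels of the input

out_channels: the number of channels of the output, which equals the number of convolution kernels

For example, if the input is a 3-channel image, each kernel also has 3 channels. One kernel applied to the input produces one feature map (one channel of the output), so the number of kernels determines the number of output channels.

kernel_size: the size of the convolution kernel. You do not set the kernel values yourself, only the size; PyTorch initializes the weights, and they are refined continually during training.

stride, padding: same as above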
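A quick way to confirm that out_channels counts the kernels, and that each kernel spans all input channels, is to inspect the layer's learnable weight. A small sketch using the same layer settings as above, with a random tensor standing in for one 32×32 RGB image:

```python
import torch
from torch import nn

conv = nn.Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

# 6 kernels, each with 3 channels of size 3x3, plus one bias per kernel
print(conv.weight.shape)  # torch.Size([6, 3, 3, 3])
print(conv.bias.shape)    # torch.Size([6])

# The weights are initialized for you and marked as trainable
print(conv.weight.requires_grad)  # True

# Each kernel produces one output channel (feature map)
x = torch.rand(1, 3, 32, 32)  # random stand-in for one 3-channel image
print(conv(x).shape)  # torch.Size([1, 6, 30, 30])
```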

For Conv2d, the output H and W can be computed with the following formula:

Input: \((N, C_{in}, H_{in}, W_{in})\)

Output: \((N, C_{out}, H_{out}, W_{out})\) where

\(H_{out} = \left\lfloor\frac{H_{in} + 2 \times \text{padding}[0] - \text{dilation}[0] \times (\text{kernel\_size}[0] - 1) - 1}{\text{stride}[0]} + 1\right\rfloor\)

\(W_{out} = \left\lfloor\frac{W_{in} + 2 \times \text{padding}[1] - \text{dilation}[1] \times (\text{kernel\_size}[1] - 1) - 1}{\text{stride}[1]} + 1\right\rfloor\)

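To keep the input and output the same size (e.g., both 32×32) with stride=1 and dilation=1, solve the formula for padding: \(H_{out} = H_{in} + 2 \times \text{padding} - \text{kernel\_size} + 1 = H_{in}\) gives padding = (kernel_size - 1)/2, which is an integer for odd kernel sizes.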
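The output-size formula is easy to turn into a helper and check against a real layer. In this sketch `conv2d_out` is a hypothetical helper name (not a PyTorch function), and it handles one spatial dimension at a time:

```python
import math

import torch
from torch import nn

def conv2d_out(size, kernel_size, stride=1, padding=0, dilation=1):
    """Hypothetical helper: H_out (or W_out) from the Conv2d formula, for one dimension."""
    return math.floor((size + 2 * padding - dilation * (kernel_size - 1) - 1) / stride + 1)

print(conv2d_out(32, kernel_size=3))                       # 30, as in the CIFAR-10 example
print(conv2d_out(32, kernel_size=5, padding=2))            # 32: padding = (5 - 1) / 2 keeps the size
print(conv2d_out(32, kernel_size=3, stride=2, padding=1))  # 16

# Cross-check against an actual layer
layer = nn.Conv2d(3, 6, kernel_size=5, padding=2)
print(layer(torch.rand(1, 3, 32, 32)).shape)  # torch.Size([1, 6, 32, 32])
```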

——————————————————————————————

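Convolution layers extract features; pooling layers reduce the amount of feature data and therefore the amount of computation.

Pooling layers:

Operation: subsample the output of a convolution layer. The feature map is divided into groups (windows whose kernel size you choose), and one representative value is taken from each group (by a rule you choose, e.g. the maximum for max pooling or the mean for mean/average pooling). The result is a smaller feature map with the same number of channels.

Advantage: less computation.

Disadvantage: fine details may be lost. Many modern networks are fully convolutional and drop pooling layers altogether; AlphaGo, for example, avoids pooling because arbitrarily removing rows or columns from a Go board would discard essential information.

Properties of pooling layers:

1. No learnable parameters

2. The number of channels does not change

3. Robust to small shifts in position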
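The three properties listed above can each be checked in a few lines. This sketch uses an arbitrary 2×2 max pool and hand-made inputs; the shift example only moves the activation within a single pooling window.

```python
import torch
from torch import nn

pool = nn.MaxPool2d(kernel_size=2)  # stride defaults to kernel_size

# 1. No learnable parameters
print(len(list(pool.parameters())))  # 0

# 2. Channels unchanged, spatial size halved
x = torch.rand(4, 6, 30, 30)
print(pool(x).shape)  # torch.Size([4, 6, 15, 15])

# 3. A small shift inside one 2x2 window gives the same output
a = torch.zeros(1, 1, 4, 4)
b = torch.zeros(1, 1, 4, 4)
a[0, 0, 0, 0] = 1.0  # strong activation at (0, 0)
b[0, 0, 0, 1] = 1.0  # same activation one pixel to the right, still in the same window
print(torch.equal(pool(a), pool(b)))  # True
```

The robustness is only local: a shift that crosses a window boundary would move the maximum into a different output position.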

Now let's look at nn.MaxPool2d:

torch.nn.MaxPool2d(kernel_size, stride=None, padding=0, dilation=1, return_indices=False, ceil_mode=False)

Parameters:

kernel_size – the size of the window to take a max over

stride – the stride of the window. Default value is kernel_size

padding – implicit zero padding to be added on both sides

dilation – a parameter that controls the stride of elements in the window

return_indices – if True, will return the max indices along with the outputs. Useful for torch.nn.MaxUnpool2d later

ceil_mode – when True, will use ceil instead of floor to compute the output shape
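The effect of `ceil_mode` is easiest to see on a small input whose size is not a multiple of the window. In this sketch the 5×5 values are arbitrary; with `kernel_size=3` the stride defaults to 3, so only one full window fits, and `ceil_mode=True` also keeps the partial windows at the edges:

```python
import torch
from torch import nn

# Arbitrary 5x5 input, float32 for the pooling kernel
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
input = torch.reshape(input, (-1, 1, 5, 5))  # (minibatch, channels, H, W)

pool_floor = nn.MaxPool2d(kernel_size=3, ceil_mode=False)  # stride defaults to 3
pool_ceil = nn.MaxPool2d(kernel_size=3, ceil_mode=True)

print(pool_floor(input))  # only the top-left 3x3 window: max is 2
print(pool_ceil(input))   # partial windows kept too: maxima 2, 3 / 5, 1
```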

Example code:

```python
import torchvision
from torch import nn
from torch.utils.data import DataLoader

test_set = torchvision.datasets.CIFAR10(root=r'./cifar10', train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(test_set, batch_size=64)

class tudui(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)
        self.maxpool = nn.MaxPool2d(3)  # 3x3 window, stride defaults to 3

    def forward(self, input):
        output = self.maxpool(self.conv(input))  # convolution first, then pooling
        return output

a = tudui()

for data in dataloader:
    imgs, targets = data
    output = a(imgs)
    print(output.shape)  # torch.Size([64, 6, 10, 10]) for full batches
```

———————————————————————————————————

Non-linear transformations: the Non-linear Activations in torch.nn, such as

nn.ReLU()

nn.Sigmoid()

nn.Softmax()

and so on.
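A minimal sketch of what these three activations do to a small hand-made tensor (values chosen arbitrarily). Note that `nn.Softmax` is given an explicit `dim` here, so it normalizes each row:

```python
import torch
from torch import nn

x = torch.tensor([[1.0, -0.5],
                  [-1.0, 3.0]])

relu = nn.ReLU()
sigmoid = nn.Sigmoid()
softmax = nn.Softmax(dim=1)  # normalize across each row

print(relu(x))                       # negatives clipped to 0: [[1., 0.], [0., 3.]]
print(sigmoid(torch.tensor([0.0])))  # tensor([0.5000]); all outputs lie in (0, 1)
print(softmax(x).sum(dim=1))         # each row sums to 1
```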

———————————————————————————————————

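Regularization helps prevent overfitting and acts on the loss function (for example, by adding a penalty on the weights to the loss).

Normalization speeds up training and acts on the inputs to the activation function. In a convolutional neural network, nn.BatchNorm2d can be added after a convolution layer to normalize its output, so that overly large values do not make the network unstable before the non-linear activation.

nn.BatchNorm2d

For details, see: https://blog.csdn.net/qq_50001789/article/details/120507768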
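A sketch of the conv → BatchNorm → activation order described above. The layer sizes and the deliberately large input scale are arbitrary assumptions; in training mode `nn.BatchNorm2d` normalizes with the current batch's statistics, so each channel's output has a mean close to 0 and a variance close to 1 (its learnable scale and shift start at 1 and 0):

```python
import torch
from torch import nn

torch.manual_seed(0)
conv = nn.Conv2d(3, 6, kernel_size=3)
bn = nn.BatchNorm2d(num_features=6)  # num_features = number of channels coming out of conv
relu = nn.ReLU()

x = torch.rand(8, 3, 32, 32) * 50  # deliberately large input values (arbitrary)
features = conv(x)
normed = bn(features)  # modules start in training mode: uses this batch's statistics
out = relu(normed)

# Per-channel statistics over (N, H, W)
mean = normed.mean(dim=(0, 2, 3))
var = ((normed - mean.view(1, -1, 1, 1)) ** 2).mean(dim=(0, 2, 3))
print(mean)  # all close to 0
print(var)   # all close to 1
```

At inference time you would call `model.eval()`, after which BatchNorm uses its running averages instead of batch statistics.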

———————————————————————————————————

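Linear layers are the fully connected layers used in deep learning networks; they transform the dimension of the features.

torch.nn.Linear(in_features, out_features, bias=True, device=None, dtype=None)

in_features: the size of each input sample, i.e. the input feature dimension (the input must first be flattened into vectors with torch.flatten(imgs) or nn.Flatten())

out_features: the size of each output sample, i.e. the output feature dimension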
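The two flattening options mentioned above do not produce the same shape, which matters when choosing in_features. A sketch with a random tensor standing in for one CIFAR-10 batch of 64 images:

```python
import torch
from torch import nn

imgs = torch.rand(64, 3, 32, 32)  # stand-in for one CIFAR-10 batch

print(torch.flatten(imgs).shape)               # torch.Size([196608]): the batch dimension is flattened too
print(torch.flatten(imgs, start_dim=1).shape)  # torch.Size([64, 3072]): one vector per image
print(nn.Flatten()(imgs).shape)                # torch.Size([64, 3072]): nn.Flatten defaults to start_dim=1
```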

Example code:

```python
import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader

test_set = torchvision.datasets.CIFAR10(root=r'./cifar10', train=False, transform=torchvision.transforms.ToTensor(), download=True)
# drop_last=True discards the final, smaller batch (10000 = 156 * 64 + 16), so every batch flattens to the same length
dataloader = DataLoader(test_set, batch_size=64, drop_last=True)

class tudui(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(196608, 10)  # 196608 = 64 * 3 * 32 * 32

    def forward(self, input):
        output = self.linear(input)
        return output

a = tudui()

for data in dataloader:
    imgs, targets = data
    imgs_f = torch.flatten(imgs)  # flattens the whole batch into one vector of length 196608
    output = a(imgs_f)
    print(output.shape)  # torch.Size([10])
```
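Flattening the whole batch, as in the example above, ties the layer to a batch size of 64 and treats the entire batch as a single sample. A common per-image alternative, sketched here with random tensors standing in for CIFAR-10 batches, flattens from dimension 1 so that `nn.Linear` sees 3 × 32 × 32 = 3072 features per image and accepts any batch size, including the smaller final batch:

```python
import torch
from torch import nn

model = nn.Sequential(
    nn.Flatten(),                # (N, 3, 32, 32) -> (N, 3072)
    nn.Linear(3 * 32 * 32, 10),  # 10 outputs per image
)

for batch_size in (64, 16):  # a full batch and a smaller final batch
    imgs = torch.rand(batch_size, 3, 32, 32)  # stand-in for CIFAR-10 images
    print(model(imgs).shape)  # torch.Size([64, 10]), then torch.Size([16, 10])

print(model[1].weight.shape)  # torch.Size([10, 3072]): (out_features, in_features)
```

With this layout, drop_last=True is no longer needed just to keep shapes consistent.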

———————————————————————————————————————

nn.Sequential

In __init__, wrap all the layers in a Sequential:

```python
self.model1 = nn.Sequential(
    nn.Conv2d(1, 20, 5),
    nn.ReLU(),
    nn.Conv2d(20, 64, 5),
    nn.ReLU()
)
```

Then forward can be simplified to:

```python
output = self.model1(input)
```
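Putting the layers from these notes together, here is a sketch of a complete classifier for 3×32×32 inputs built with nn.Sequential. The class name `Net` and the layer sizes (three 5×5 convolutions with padding=2, each followed by 2×2 max pooling, then two linear layers) are illustrative assumptions; padding=2 keeps each convolution's output the same size as its input, following the (kernel_size - 1)/2 rule.

```python
import torch
from torch import nn

class Net(nn.Module):  # hypothetical example model
    def __init__(self):
        super().__init__()
        self.model1 = nn.Sequential(
            nn.Conv2d(3, 32, 5, padding=2),   # 3x32x32 -> 32x32x32
            nn.MaxPool2d(2),                  # -> 32x16x16
            nn.Conv2d(32, 32, 5, padding=2),  # -> 32x16x16
            nn.MaxPool2d(2),                  # -> 32x8x8
            nn.Conv2d(32, 64, 5, padding=2),  # -> 64x8x8
            nn.MaxPool2d(2),                  # -> 64x4x4
            nn.Flatten(),                     # -> 1024 features per image
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10),
        )

    def forward(self, x):
        return self.model1(x)

net = Net()
x = torch.ones(64, 3, 32, 32)  # a dummy batch, only to check the shapes
print(net(x).shape)  # torch.Size([64, 10])
```

Writing the shape after each layer as a comment, as above, is a cheap way to catch a wrong in_features before running real data.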

This article is from cnblogs (博客园). Author: xjl-ultrasound. Please credit the original link when reposting: https://www.cnblogs.com/xjl-ultrasound/p/18340286