CUDA, cuDNN, zlib: the three must-have pieces for deep learning on a GPU (Windows)

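This guide downloads and installs exactly the versions required by tensorrtx/yolo11 at master · wang-xinyu/tensorrtx (github.com). Jumping across major versions is not recommended: there are pitfalls, pitfalls and more pitfalls~

It assumes VS2022 and the latest NVIDIA graphics driver are already installed.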

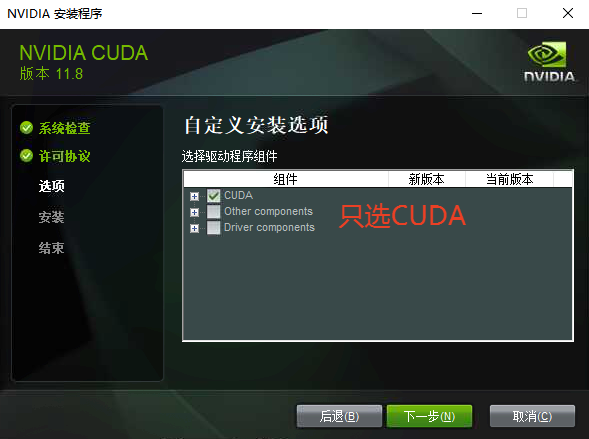

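1. CUDA 11.8

Download: CUDA Toolkit Archive | NVIDIA Developer

By default it installs to C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.8. Pay attention to the two steps marked in the figure below; leave everything else at the defaults.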

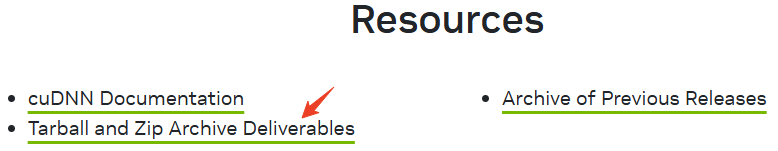

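2. cuDNN 8.9.7

Download: cuDNN Archive | NVIDIA Developer

After extracting the archive:

Copy the .dll files (from its bin folder) into C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.8\bin

Copy the .lib files (from its lib folder) into C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.8\lib\x64

Copy the header files (from its include folder) into C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.8\include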
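If you would rather script the three copies than drag files around, here is a minimal Python sketch. It assumes the extracted cuDNN folder contains bin, include and lib\x64 subfolders (check yours before running); the download folder path in it is hypothetical. Writing into C:\Program Files needs a terminal started as administrator.

```python
from __future__ import annotations

import shutil
from pathlib import Path

# Assumed layout of the extracted cuDNN 8.x Windows zip, mapped to the
# matching destination folder under the CUDA install root.
CUDNN_TO_CUDA = {
    "bin": "bin",          # cudnn*.dll
    "include": "include",  # cudnn*.h
    "lib/x64": "lib/x64",  # cudnn*.lib
}


def copy_cudnn(cudnn_root: Path, cuda_root: Path) -> list[Path]:
    """Copy every file from the cuDNN subfolders into the matching CUDA subfolders.

    Returns the destination paths that were written.
    """
    copied = []
    for src_sub, dst_sub in CUDNN_TO_CUDA.items():
        src_dir = cudnn_root / src_sub
        dst_dir = cuda_root / dst_sub
        if not src_dir.is_dir():
            raise FileNotFoundError(f"missing cuDNN folder: {src_dir}")
        dst_dir.mkdir(parents=True, exist_ok=True)
        for item in src_dir.iterdir():
            if item.is_file():
                target = dst_dir / item.name
                shutil.copy2(item, target)  # copy2 also keeps timestamps
                copied.append(target)
    return copied


if __name__ == "__main__":
    # Hypothetical extraction folder: change it to where you unzipped cuDNN.
    cudnn = Path(r"C:\Downloads\cudnn-windows-x86_64-8.9.7.29_cuda11-archive")
    cuda = Path(r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.8")
    if cudnn.is_dir():
        for p in copy_cudnn(cudnn, cuda):
            print("copied", p)
    else:
        print("cuDNN folder not found:", cudnn)
```

Copying file by file (rather than replacing whole folders) matters here, because the CUDA bin, include and lib\x64 folders already hold CUDA's own files that must stay.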

After that, the extracted cuDNN folder can be deleted.

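About newer versions:

Newer versions also ship an .exe installer, and can still be installed from the zip archive. Releases come out quickly, so check the official documentation:

Version 8: Documentation Archives :: NVIDIA cuDNN Documentation

Version 9: Release Notes — NVIDIA cuDNN v9.5.0 documentation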

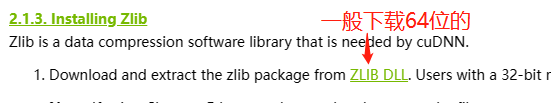

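3. zlib

zlib is a data-compression library that cuDNN depends on. Starting with cuDNN 8.9.4, Windows no longer needs a separate zlib install, because it is already linked into the cuDNN DLLs; with the 8.9.7 used here, this step can therefore be skipped. Linux still needs it; see the official documentation linked in step 2.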

ZLIB version 1.2.13 is statically linked into the cuDNN Windows dynamic libraries.

For older cuDNN versions, the download link is given in the official documentation: Documentation Archives :: NVIDIA cuDNN Documentation

After extracting:

Copy zlibwapi.dll into C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.8\bin

Copy zlibwapi.lib into C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.8\lib\x64
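To check which cuDNN you actually copied in, and therefore whether zlibwapi.dll is needed at all, you can read the version macros from cudnn_version.h in the CUDA include folder. This is a small sketch, assuming the header defines CUDNN_MAJOR, CUDNN_MINOR and CUDNN_PATCHLEVEL (cuDNN 8.x does); the 8.9.4 threshold comes from the release-note statement quoted in this section.

```python
import re
from pathlib import Path

# Matches lines such as "#define CUDNN_MAJOR 8" in cudnn_version.h.
_DEFINE = re.compile(r"#define\s+CUDNN_(MAJOR|MINOR|PATCHLEVEL)\s+(\d+)")

# From cuDNN 8.9.4 on, zlib is statically linked into the Windows DLLs.
ZLIB_BUNDLED_SINCE = (8, 9, 4)


def parse_cudnn_version(header_text: str) -> tuple:
    """Return (major, minor, patch) parsed from the text of cudnn_version.h."""
    fields = dict(_DEFINE.findall(header_text))
    return (int(fields["MAJOR"]), int(fields["MINOR"]), int(fields["PATCHLEVEL"]))


def windows_needs_zlib(version: tuple) -> bool:
    """True if this cuDNN version still expects a separate zlibwapi.dll on Windows."""
    return version < ZLIB_BUNDLED_SINCE


if __name__ == "__main__":
    header = Path(r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.8\include\cudnn_version.h")
    if header.is_file():
        v = parse_cudnn_version(header.read_text())
        print("cuDNN", ".".join(map(str, v)), "| needs zlibwapi.dll:", windows_needs_zlib(v))
    else:
        print("cudnn_version.h not found:", header)
```

Tuples compare element by element in Python, so `(8, 9, 7) < (8, 9, 4)` is False and no zlib is required for 8.9.7.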

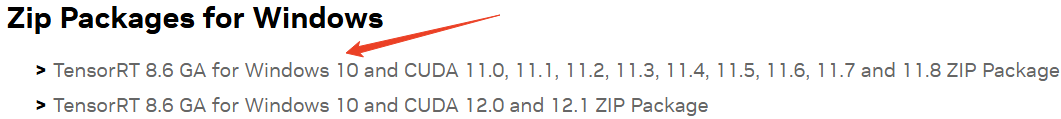

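4. TensorRT 8.6

Download: TensorRT Download | NVIDIA Developer

GA (General Availability) builds are the stable releases.

After extracting, I put it directly in the root of the C: drive, as C:\TensorRT (the path used in the rest of this article).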

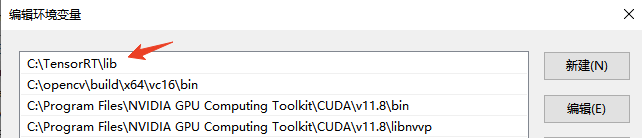

Add its lib directory to the system PATH environment variable, because the DLLs live there.
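After editing PATH (and opening a new terminal so it picks up the change), a quick way to confirm the DLLs are reachable is to look for one of them in every PATH entry. A minimal sketch; nvinfer.dll, the core TensorRT runtime library, is used as the probe file.

```python
import os
from pathlib import Path
from typing import Optional


def find_on_path(filename: str, path_value: Optional[str] = None) -> Optional[Path]:
    """Return the first PATH directory that contains `filename`, or None.

    When a DLL is not next to the executable (or in the system folders),
    Windows falls back to searching the PATH directories in order.
    """
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    for entry in path_value.split(os.pathsep):
        entry = entry.strip().strip('"')  # PATH entries are sometimes quoted
        if entry and (Path(entry) / filename).is_file():
            return Path(entry)
    return None


if __name__ == "__main__":
    hit = find_on_path("nvinfer.dll")
    print("nvinfer.dll found in:", hit if hit else "NOT on PATH")
```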

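5. OpenCV

Download and install it (which really just means extracting it) to the root of the C: drive.

As above, add its bin directory (the one under build\x64\vcXX\bin) to the system PATH, because the DLLs live there.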
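The vcXX part of that folder name changes between OpenCV releases, so instead of guessing it you can list what the extracted package actually contains. A sketch, assuming the standard Windows package layout build\x64\vcXX\bin and the C:\opencv location used by the CMakeLists.txt later in this article.

```python
from pathlib import Path
from typing import List


def opencv_bin_dirs(opencv_root: Path) -> List[Path]:
    """List candidate DLL folders (build/x64/vc*/bin) under an extracted OpenCV package.

    The vcNN part depends on the OpenCV release, so it is globbed, not hard-coded.
    """
    if not opencv_root.is_dir():
        return []
    return sorted(p for p in opencv_root.glob("build/x64/vc*/bin") if p.is_dir())


if __name__ == "__main__":
    root = Path(r"C:\opencv")
    dirs = opencv_bin_dirs(root)
    if dirs:
        # Several toolsets may be present; the newest vcNN sorts last.
        print("Add to PATH:", dirs[-1])
    else:
        print("No build/x64/vc*/bin folder under", root)
```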

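------ The steps above are needed for deploying models; the steps below are needed for training them ------

6. Miniconda3 + Python 3.10 + PyTorch

Training runs in a Python environment; installing it into a conda virtual environment is recommended.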

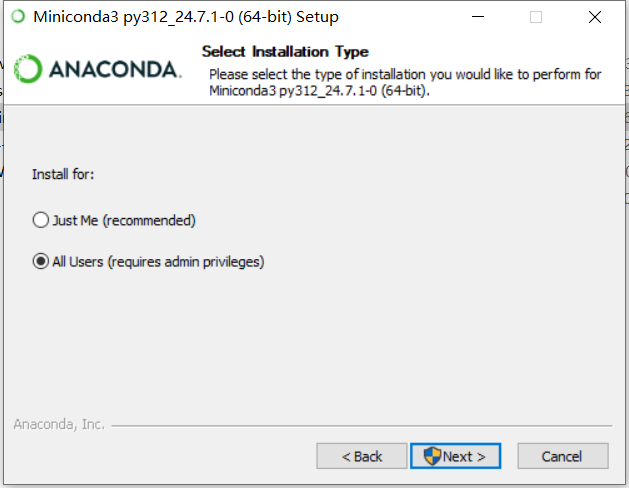

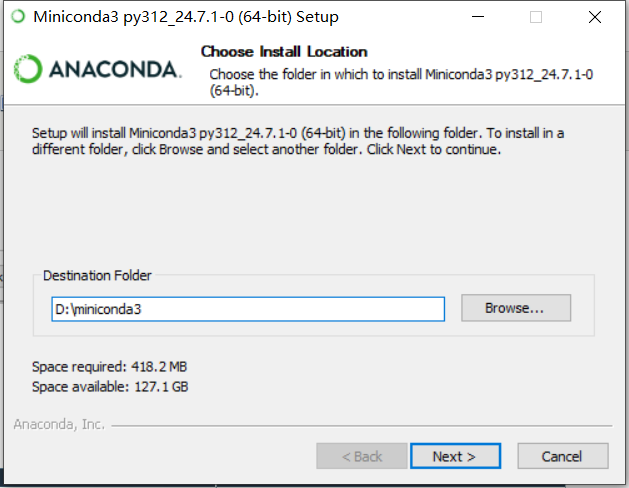

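Download: Download Anaconda Distribution | Anaconda (scroll down the page to find Miniconda). Install it as shown in the screenshots, leaving everything else at the defaults.

I installed Miniconda3 on the D: drive (to avoid constant permission prompts on C:).

Open the conda terminal (Anaconda Prompt) and create an environment pinned to Python 3.10: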

conda create -n yolo11 python=3.10

Activate the yolo11 environment:

conda activate yolo11
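Before installing anything into it, it is worth confirming that the terminal really is inside the new environment. A minimal sketch: it relies on `conda activate` setting the CONDA_DEFAULT_ENV variable to the active environment's name, and takes the environment and version info as arguments so it can be checked without conda.

```python
import os
import sys


def check_env(environ, version_info, expected_env="yolo11", expected_py=(3, 10)):
    """Return a list of problems with the interpreter setup (an empty list means OK)."""
    problems = []
    active = environ.get("CONDA_DEFAULT_ENV")
    if active != expected_env:
        problems.append(f"active conda env is {active!r}, expected {expected_env!r}")
    if tuple(version_info[:2]) != tuple(expected_py):
        problems.append(
            f"Python {version_info[0]}.{version_info[1]}, "
            f"expected {expected_py[0]}.{expected_py[1]}"
        )
    return problems


if __name__ == "__main__":
    print(check_env(os.environ, sys.version_info) or "environment OK")
```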

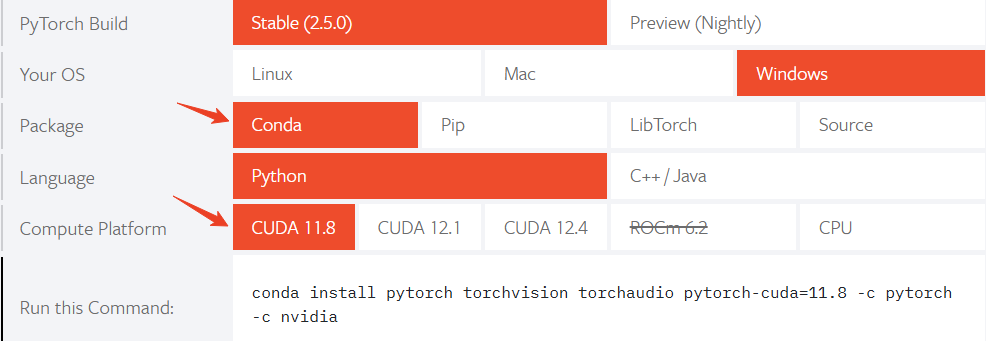

Install PyTorch: copy the install command from the PyTorch website, paste it into the terminal, and press Enter.
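To see whether the install can use the GPU, ask PyTorch about itself. Note that the CUDA build of PyTorch ships its own CUDA runtime and cuDNN, so the versions printed describe that bundle, not necessarily the system CUDA 11.8 installed in step 1. The sketch takes the `torch` module as a parameter purely so the function can be exercised without a GPU.

```python
def gpu_report(torch) -> dict:
    """Collect the CUDA/cuDNN facts PyTorch reports about its own build."""
    report = {
        "torch": torch.__version__,
        "cuda_build": torch.version.cuda,         # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
        "cudnn": torch.backends.cudnn.version(),  # None if cuDNN is unavailable
    }
    if report["cuda_available"]:
        report["device0"] = torch.cuda.get_device_name(0)
    return report


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this environment")
    else:
        print(gpu_report(torch))
```

If `cuda_available` comes back False, the usual cause is that the CPU-only command was copied from the PyTorch website.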

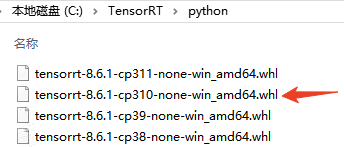

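Install the TensorRT Python package, which really just means installing the wheel file from the python folder of the extracted TensorRT package.

In the conda terminal, run: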

pip install C:\TensorRT\python\tensorrt-8.6.1-cp310-none-win_amd64.whl
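The python folder may hold wheels for several Python versions, and pip refuses one whose tag does not match the interpreter. Wheel file names end in -{python tag}-{abi tag}-{platform tag}.whl, so the tag can be read off the name and compared with the running Python. A small sketch:

```python
import sys


def wheel_python_tag(wheel_name: str) -> str:
    """Return the Python tag (e.g. 'cp310') from a wheel file name."""
    stem = wheel_name[: -len(".whl")] if wheel_name.endswith(".whl") else wheel_name
    # The last three dash-separated fields are python tag, ABI tag, platform tag.
    return stem.split("-")[-3]


def current_python_tag() -> str:
    return f"cp{sys.version_info.major}{sys.version_info.minor}"


if __name__ == "__main__":
    wheel = "tensorrt-8.6.1-cp310-none-win_amd64.whl"
    tag = wheel_python_tag(wheel)
    if tag == current_python_tag():
        print(wheel, "matches this interpreter")
    else:
        print(f"{wheel} needs {tag}, this interpreter is {current_python_tag()}")
```

After installing, `python -c "import tensorrt; print(tensorrt.__version__)"` in the same terminal is a quick confirmation that the package imports.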

7. Training YOLO

In the conda terminal, run:

pip install ultralytics

In PyCharm, create a new Python project, select the yolo11 virtual environment as its interpreter, and enter the following in main.py:

```python
from ultralytics import YOLO

# Load a model
model = YOLO("yolo11n.pt")

# Train the model
train_results = model.train(
    data="coco8.yaml",  # path to dataset YAML
    epochs=10,  # number of training epochs
    imgsz=640,  # training image size
    device=0,  # device to run on, i.e. device=0 or device=0,1,2,3 or device=cpu
    batch=1,  # batch size 1 keeps GPU memory use low
    workers=0,  # load data in the main process (no DataLoader worker processes)
)

# Evaluate model performance on the validation set
metrics = model.val()

# Perform object detection on an image
results = model("https://ultralytics.com/images/bus.jpg")
results[0].show()

# Export the model to ONNX format
# pathOnnx = model.export(format="onnx")  # return path to exported model

# Export the model to a TensorRT engine
pathEngine = model.export(format="engine", device=0)
```

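If it throws errors, just run it a few more times: some of the packages it downloads along the way can fail to download.

Once yolo11n.engine is finally generated, the training environment is working.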
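Re-running by hand is the approach used here; if you want to automate it, a generic retry wrapper does the same thing. This is a minimal sketch that assumes the failures are transient (such as a timed-out download); it simply re-raises the last error once the attempts run out.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(step: Callable[[], T], attempts: int = 3, wait_s: float = 5.0) -> T:
    """Call `step` until it succeeds, re-raising the last error after `attempts` tries."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for i in range(1, attempts + 1):
        try:
            return step()
        except Exception as exc:  # e.g. a dependency download that failed
            last_error = exc
            print(f"attempt {i}/{attempts} failed: {exc}")
            if i < attempts:
                time.sleep(wait_s)
    raise last_error
```

In main.py it would wrap the step that tends to fail, for example `pathEngine = run_with_retries(lambda: model.export(format="engine", device=0))`.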

8. Deploying YOLO

Download tensorrtx/yolo11 at master · wang-xinyu/tensorrtx (github.com)

Use VS Code to generate a VS2022 project from yolo11, with CMakeLists.txt slightly modified as follows:

```cmake
cmake_minimum_required(VERSION 3.10)

project(yolov11)

add_definitions(-std=c++11)
add_definitions(-DAPI_EXPORTS)
set(CMAKE_CXX_STANDARD 11)
set(CMAKE_BUILD_TYPE Debug)

set(CMAKE_CUDA_COMPILER /usr/local/cuda/bin/nvcc)
enable_language(CUDA)

include_directories(${PROJECT_SOURCE_DIR}/include)
include_directories(${PROJECT_SOURCE_DIR}/plugin)

# include and link dirs of cuda and tensorrt, you need adapt them if yours are different
if(CMAKE_SYSTEM_PROCESSOR MATCHES "aarch64")
  message("embed_platform on")
  include_directories(/usr/local/cuda/targets/aarch64-linux/include)
  link_directories(/usr/local/cuda/targets/aarch64-linux/lib)
else()
  message("embed_platform off")
  # cuda
  include_directories("C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v11.8/include")
  link_directories("C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v11.8/lib/x64")
  # tensorrt
  include_directories("C:/TensorRT/include")
  link_directories("C:/TensorRT/lib")
endif()

add_library(myplugins SHARED ${PROJECT_SOURCE_DIR}/plugin/yololayer.cu)
target_link_libraries(myplugins nvinfer cudart)

set(OpenCV_DIR "C:/opencv/build")
find_package(OpenCV)
include_directories(${OpenCV_INCLUDE_DIRS})

file(GLOB_RECURSE SRCS ${PROJECT_SOURCE_DIR}/src/*.cpp ${PROJECT_SOURCE_DIR}/src/*.cu)

add_executable(yolo11_det ${PROJECT_SOURCE_DIR}/yolo11_det.cpp ${SRCS})
target_link_libraries(yolo11_det nvinfer)
target_link_libraries(yolo11_det cudart)
target_link_libraries(yolo11_det myplugins)
target_link_libraries(yolo11_det ${OpenCV_LIBS})

add_executable(yolo11_cls ${PROJECT_SOURCE_DIR}/yolo11_cls.cpp ${SRCS})
target_link_libraries(yolo11_cls nvinfer)
target_link_libraries(yolo11_cls cudart)
target_link_libraries(yolo11_cls myplugins)
target_link_libraries(yolo11_cls ${OpenCV_LIBS})

add_executable(yolo11_seg ${PROJECT_SOURCE_DIR}/yolo11_seg.cpp ${SRCS})
target_link_libraries(yolo11_seg nvinfer)
target_link_libraries(yolo11_seg cudart)
target_link_libraries(yolo11_seg myplugins)
target_link_libraries(yolo11_seg ${OpenCV_LIBS})

add_executable(yolo11_pose ${PROJECT_SOURCE_DIR}/yolo11_pose.cpp ${SRCS})
target_link_libraries(yolo11_pose nvinfer)
target_link_libraries(yolo11_pose cudart)
target_link_libraries(yolo11_pose myplugins)
target_link_libraries(yolo11_pose ${OpenCV_LIBS})
```
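If you prefer the command line to VS Code, the same VS2022 solution can be generated by calling CMake with the Visual Studio 17 2022 generator. A sketch, assuming cmake is on PATH; the checkout path C:\tensorrtx\yolo11 is hypothetical. Visual Studio generators are multi-config, so the Debug/Release choice is made with `--config` at build time rather than through CMAKE_BUILD_TYPE.

```python
import shutil
import subprocess
from pathlib import Path


def cmake_vs2022_commands(source_dir: str, build_dir: str, config: str = "Debug") -> list:
    """Return the configure and build command lines for a Visual Studio 2022 x64 build."""
    configure = ["cmake", "-S", source_dir, "-B", build_dir,
                 "-G", "Visual Studio 17 2022", "-A", "x64"]
    build = ["cmake", "--build", build_dir, "--config", config]
    return [configure, build]


if __name__ == "__main__":
    src = Path(r"C:\tensorrtx\yolo11")  # hypothetical checkout location
    commands = cmake_vs2022_commands(str(src), str(src / "build"))
    for cmd in commands:
        print(subprocess.list2cmdline(cmd))
    # Only run when cmake exists and the project is really there.
    if shutil.which("cmake") and (src / "CMakeLists.txt").is_file():
        for cmd in commands:
            subprocess.run(cmd, check=True)
```

The configure step alone is enough to get the .sln file in the build folder, which can then be opened in VS2022.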

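In the training environment, the .pt model is converted to .onnx, which needs PyTorch.

In the deployment environment, the .onnx model is converted to .engine without PyTorch, using TensorRT's own tool trtexec.exe, located in C:\TensorRT\bin.

For trtexec usage, see the CSDN post "trtexec命令用法_trtexec 执行" (trtexec command usage).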
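A basic conversion only needs two trtexec flags: `--onnx` for the input model and `--saveEngine` for the output file; `--fp16` optionally allows FP16 kernels. The sketch below builds that command and runs it only if trtexec.exe exists at the path used in this article; the file names yolo11n.onnx and yolo11n.engine are just examples.

```python
import subprocess
from pathlib import Path

TRTEXEC = Path(r"C:\TensorRT\bin\trtexec.exe")


def trtexec_command(onnx_path: str, engine_path: str, fp16: bool = False) -> list:
    """Build a trtexec command that parses an ONNX model and saves a serialized engine."""
    cmd = [str(TRTEXEC), f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    if fp16:
        cmd.append("--fp16")  # allow FP16 kernels in addition to FP32
    return cmd


if __name__ == "__main__":
    cmd = trtexec_command("yolo11n.onnx", "yolo11n.engine")
    print(subprocess.list2cmdline(cmd))
    if TRTEXEC.is_file():
        subprocess.run(cmd, check=True)
```

Keep in mind that a serialized engine is tied to the TensorRT version and the GPU it was built on, so build it on the deployment machine itself.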

[Possible issues]

Fixing CUDA compilation errors in MATLAB: error: static assertion failed with "error STL1002 (CSDN blog)