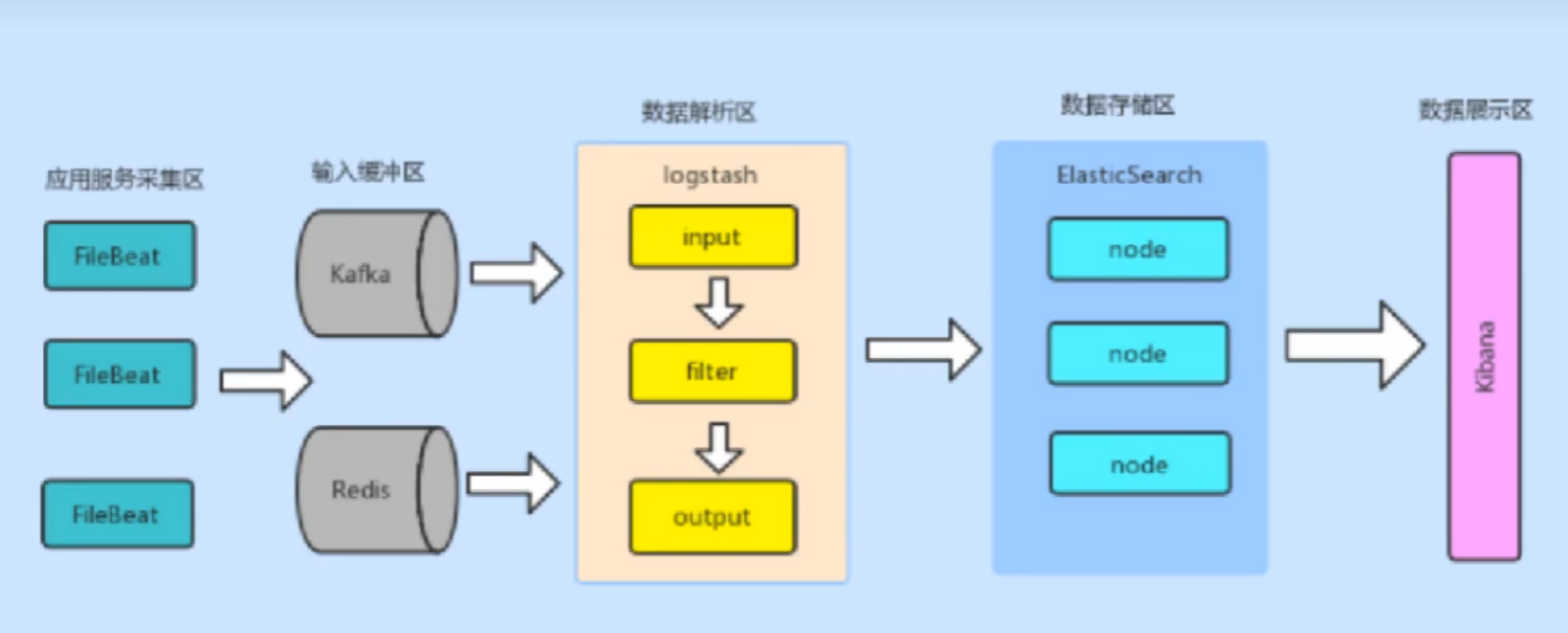

Kafka Log Analysis Platform

Environment Preparation

| Hostname | External IP | Internal IP | Applications | Memory |
|---|---|---|---|---|
| lb01 | 10.0.0.5 | 172.16.1.5 | nginx, logstash 7.9 | 2G |
| lb02 | 10.0.0.6 | 172.16.1.6 | nginx, kibana 7.9 | 2G |
| web01 | 10.0.0.7 | 172.16.1.7 | nginx, filebeat 7.9, kafka 2.2.1 (Scala 2.12) | 3G |
| web02 | 10.0.0.8 | 172.16.1.8 | nginx, filebeat 7.9, kafka 2.2.1 (Scala 2.12) | 3G |
| elk01 | 10.0.0.81 | 172.16.1.81 | elasticsearch 7.9 | 3G |
| elk02 | 10.0.0.82 | 172.16.1.82 | elasticsearch 7.9 | 3G |

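- ELK is short for Elasticsearch, Logstash, and Kibana, which together form a mature open-source platform for real-time log analysis.

- These three are the core components of the log analysis platform, but not the whole of it.

Elasticsearch: a real-time full-text search and analytics engine that collects, analyzes, and stores data. Its features include a distributed design, zero configuration, automatic discovery, automatic index sharding, index replicas, a RESTful interface, multiple data sources, and automatic search load balancing.

Logstash: supports almost any type of log, including system logs, error logs, and custom application logs. It can receive logs from many sources, including syslog, messaging systems (for example RabbitMQ), and JMX, and it can output data in several ways, including email, websockets, and Elasticsearch.

Kibana: a web-based graphical interface for searching, analyzing, and visualizing log data stored in Elasticsearch indices. It uses Elasticsearch's REST interface to retrieve data, lets users build custom dashboard views of their own data, and lets them query and filter that data in ad hoc ways. Kibana provides a friendly web interface for log analysis on top of Logstash and Elasticsearch, and helps you aggregate, analyze, and search important log data.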

Configure the elk01 server

Configure Elasticsearch

Download and install Elasticsearch

yum -y install https://mirrors.tuna.tsinghua.edu.cn/elasticstack/7.x/yum/7.9.1/elasticsearch-7.9.1-x86_64.rpm

Edit the configuration file (comment out every existing line, then append the settings)

sed -i 's/^[^#]/#&/' /etc/elasticsearch/elasticsearch.yml

cat >> /etc/elasticsearch/elasticsearch.yml << 'EOF'

cluster.name: elkstack

node.name: es01

node.data: true

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

bootstrap.memory_lock: true

network.host: 127.0.0.1,10.0.0.81

http.port: 9200

discovery.seed_hosts: ["10.0.0.81","10.0.0.82"]

cluster.initial_master_nodes: ["10.0.0.81","10.0.0.82"]

EOF

Lift the memory-lock limit for the Elasticsearch process

sed -i '/\[Service\]/a LimitMEMLOCK=infinity' /usr/lib/systemd/system/elasticsearch.service

Reload the systemd configuration

systemctl daemon-reload
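The `sed` command above edits the packaged unit file under /usr/lib/systemd/system, which a package upgrade may overwrite. A systemd drop-in is one alternative that survives upgrades. The sketch below writes such a drop-in; the `DROPIN_DIR` variable and its temp-directory default are assumptions added here so the snippet can be tried without touching the system.

```shell
#!/usr/bin/env bash
# Sketch: set LimitMEMLOCK through a systemd drop-in instead of editing the unit file.
# DROPIN_DIR is a hypothetical variable; it defaults to a temp directory for a dry run.
# On a real host use: DROPIN_DIR=/etc/systemd/system/elasticsearch.service.d
DROPIN_DIR="${DROPIN_DIR:-$(mktemp -d)}"
mkdir -p "$DROPIN_DIR"

# Quoted heredoc: the content is written literally.
cat > "$DROPIN_DIR/override.conf" <<'EOF'
[Service]
LimitMEMLOCK=infinity
EOF

echo "wrote $DROPIN_DIR/override.conf"
# Afterwards: systemctl daemon-reload && systemctl restart elasticsearch
```

With the drop-in in place, the packaged unit file can stay unmodified.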

Start the service and enable it at boot

systemctl start elasticsearch

systemctl enable elasticsearch

Open 10.0.0.81:9200 in a browser:

{

"name" : "es01",

"cluster_name" : "elkstack",

"cluster_uuid" : "BokyxBHiSzGckadvzfnVSQ",

"version" : {

"number" : "7.9.1",

"build_flavor" : "default",

"build_type" : "rpm",

"build_hash" : "083627f112ba94dffc1232e8b42b73492789ef91",

"build_date" : "2020-09-01T21:22:21.964974Z",

"build_snapshot" : false,

"lucene_version" : "8.6.2",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}
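Because `bootstrap.memory_lock: true` is set, it can be worth confirming that the lock actually took effect. The nodes info API reports this per node as `process.mlockall`. The helper below is a minimal sketch: the name `mlockall_ok` is hypothetical, and it uses plain text matching rather than a real JSON parser.

```shell
#!/usr/bin/env bash
# mlockall_ok: read a nodes-info JSON response on stdin and succeed only if
# at least one node reports "mlockall":true and none reports false.
# Plain text matching, not a JSON parser.
mlockall_ok() {
  local body
  body="$(tr -d '[:space:]')"
  case "$body" in
    *'"mlockall":false'*) return 1 ;;
    *'"mlockall":true'*)  return 0 ;;
    *)                    return 1 ;;
  esac
}

# Usage against the node configured above (needs a running Elasticsearch):
#   curl -s 'http://10.0.0.81:9200/_nodes?filter_path=**.mlockall' | mlockall_ok && echo locked
```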

Configure the elk02 server

Configure Elasticsearch

Download and install Elasticsearch

yum -y install https://mirrors.tuna.tsinghua.edu.cn/elasticstack/7.x/yum/7.9.1/elasticsearch-7.9.1-x86_64.rpm

Edit the configuration file (comment out every existing line, then append the settings)

sed -i 's/^[^#]/#&/' /etc/elasticsearch/elasticsearch.yml

cat >> /etc/elasticsearch/elasticsearch.yml << 'EOF'

cluster.name: elkstack

node.name: es02

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

bootstrap.memory_lock: true

network.host: 127.0.0.1,10.0.0.82

http.port: 9200

discovery.seed_hosts: ["10.0.0.81","10.0.0.82"]

cluster.initial_master_nodes: ["10.0.0.81","10.0.0.82"]

EOF

Lift the memory-lock limit for the Elasticsearch process

sed -i '/\[Service\]/a LimitMEMLOCK=infinity' /usr/lib/systemd/system/elasticsearch.service

Reload the systemd configuration

systemctl daemon-reload

Start the service and enable it at boot

systemctl start elasticsearch

systemctl enable elasticsearch

Open 10.0.0.82:9200 in a browser:

{

"name" : "es02",

"cluster_name" : "elkstack",

"cluster_uuid" : "BokyxBHiSzGckadvzfnVSQ",

"version" : {

"number" : "7.9.1",

"build_flavor" : "default",

"build_type" : "rpm",

"build_hash" : "083627f112ba94dffc1232e8b42b73492789ef91",

"build_date" : "2020-09-01T21:22:21.964974Z",

"build_snapshot" : false,

"lucene_version" : "8.6.2",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}

Check the cluster status

curl -X GET "localhost:9200/_cat/health?v"
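The health check above can also be scripted so that later steps only run once both nodes have joined. The following is a rough sketch, assuming `curl` is available; the function names `health_ok` and `wait_for_green` are hypothetical, and the retry count and 2-second sleep are arbitrary choices.

```shell
#!/usr/bin/env bash
# health_ok: succeed only when the given status string is "green".
health_ok() {
  [ "$(printf '%s' "$1" | tr -d '[:space:]')" = "green" ]
}

# wait_for_green: poll _cat/health until the cluster is green or attempts run out.
# Arguments: base URL (default http://localhost:9200), attempts (default 30).
wait_for_green() {
  local url="${1:-http://localhost:9200}" tries="${2:-30}" status i
  for ((i = 0; i < tries; i++)); do
    status="$(curl -s "$url/_cat/health?h=status" 2>/dev/null)"
    if health_ok "$status"; then
      echo "cluster is green"
      return 0
    fi
    sleep 2
  done
  echo "cluster not green after $tries attempts (last status: ${status:-none})" >&2
  return 1
}

# Usage on elk01 or elk02:
#   wait_for_green http://10.0.0.81:9200 30
```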

Configure the lb01 server

lb01: nginx proxy and Logstash server

Configure nginx

Configure the official yum repository

cat > /etc/yum.repos.d/nginx.repo <<EOF

[nginx-stable]

name=nginx stable repo

baseurl=http://nginx.org/packages/centos/\$releasever/\$basearch/

gpgcheck=1

enabled=1

gpgkey=https://nginx.org/keys/nginx_signing.key

module_hotfixes=true

EOF

Install nginx and its dependencies

yum -y install gcc gcc-c++ autoconf pcre pcre-devel make automake wget httpd-tools vim tree nginx

Create a user and group with uid=666 and gid=666

groupadd -g 666 www

useradd -u 666 -g 666 www -s /sbin/nologin -M

id www

Change the user in the main nginx configuration file to www

sed -i 's#user nginx;#user www;#g' /etc/nginx/nginx.conf

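Add a JSON log format to the main nginx configuration file

### Edit the main configuration file (add a JSON log format)

vim /etc/nginx/nginx.conf

……………… part of the file omitted ………………

access_log /var/log/nginx/access.log main;

# Add the following below the existing access_log line in the http block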

log_format access_json '{"@timestamp":"$time_iso8601",'

'"host":"$server_addr",'

'"clientip":"$remote_addr",'

'"size":$body_bytes_sent,'

'"responsetime":$request_time,'

'"upstreamtime":"$upstream_response_time",'

'"upstreamhost":"$upstream_addr",'

'"http_host":"$host",'

'"url":"$uri",'

'"domain":"$host",'

'"xff":"$http_x_forwarded_for",'

'"referer":"$http_referer",'

'"status":"$status"}';

access_log /var/log/nginx/access_json.log access_json;

Configure proxy_params

cat > /etc/nginx/proxy_params <<EOF

proxy_set_header Host \$http_host;

proxy_set_header X-Real-IP \$remote_addr;

proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;

proxy_connect_timeout 30;

proxy_send_timeout 60;

proxy_read_timeout 60;

proxy_buffering on;

proxy_buffer_size 32k;

proxy_buffers 4 128k;

EOF

Configure load balancing

cat > /etc/nginx/conf.d/web_proxy.conf <<EOF

upstream www_pools {

server 10.0.0.7;

server 10.0.0.8;

}

server {

listen 80;

server_name blog.xxx.com;

location / {

proxy_pass http://www_pools;

include proxy_params;

}

}

EOF

Start the service and enable it at boot

systemctl start nginx

systemctl enable nginx

Configure keepalived

Install keepalived

yum -y install keepalived

Edit the configuration file

cat > /etc/keepalived/keepalived.conf <<EOF

global_defs {

router_id keepalived01

}

# Run the script every 3 seconds; it must finish within 3 seconds, otherwise it is interrupted and run again

vrrp_script check_web {

script "/etc/keepalived/scripts/check_web.sh"

interval 3

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 50

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.3

}

track_script {

check_web

}

}

EOF

Create the script directory

mkdir -p /etc/keepalived/scripts

Write the check script

cat > /etc/keepalived/scripts/check_web.sh <<EOF

#!/bin/bash

nginx_status=\$(ps -ef|grep [n]ginx|wc -l)

if [ \$nginx_status -eq 0 ];then

systemctl stop keepalived

fi

EOF

Make the check script executable

chmod +x /etc/keepalived/scripts/check_web.sh
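The check script above is written through an unquoted heredoc, so every `$` has to be escaped. As a variation, a quoted heredoc (`<<'EOF'`) writes the body literally, and `pgrep -x nginx` can replace the `ps | grep | wc` pipeline. The sketch below is an illustration of that variation, not the original script; the `TARGET` variable and its temp-file default are assumptions added for a dry run.

```shell
#!/usr/bin/env bash
# Sketch: write the check script with a quoted heredoc, so "$" needs no escaping.
# TARGET is a hypothetical variable; it defaults to a temp file for a dry run.
# On lb01 it would be /etc/keepalived/scripts/check_web.sh
TARGET="${TARGET:-$(mktemp)}"

cat > "$TARGET" <<'EOF'
#!/bin/bash
# Stop keepalived when no nginx process is left, so the VIP can move to the backup.
if ! pgrep -x nginx >/dev/null; then
    systemctl stop keepalived
fi
EOF

chmod +x "$TARGET"
# bash -n only parses the script; it does not run it.
bash -n "$TARGET" && echo "syntax ok: $TARGET"
```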

Start the service and enable it at boot

systemctl start keepalived

systemctl enable keepalived

Configure the Java environment

Install JDK 11

yum -y install java-11-openjdk

Check the Java version

java -version

Configure Logstash

Download and install Logstash

yum -y install https://mirrors.tuna.tsinghua.edu.cn/elasticstack/7.x/yum/7.9.1/logstash-7.9.1.rpm

Give the logstash user ownership of the Logstash directory

chown -R logstash:logstash /usr/share/logstash/

Start the service and enable it at boot

systemctl start logstash

systemctl enable logstash

Add Logstash to the PATH

### Add the environment variable

cat > /etc/profile.d/logstash.sh <<EOF

export PATH="/usr/share/logstash/bin:\$PATH"

EOF

### Apply the configuration

source /etc/profile

Check the process

ps -ef | grep logstash

Edit the pipeline configuration file

cat > /etc/logstash/conf.d/first-pipeline.conf <<EOF

input {

kafka {

type => "nginx_access_log"

codec => "json"

topics => ["nginx"]

decorate_events => true

bootstrap_servers => "10.0.0.7:9092,10.0.0.8:9092"

}

}

filter {

grok {

match => { "message" => "%{COMBINEDAPACHELOG}"}

}

mutate {

remove_field => ["message","input_type","@version","fields"]

}

geoip {

source => "clientip"

}

}

output {

stdout {}

if [type] == "nginx_access_log" {

elasticsearch {

index => "nginx-%{+YYYY.MM.dd}"

codec => "json"

hosts => ["10.0.0.81:9200","10.0.0.82:9200"]

}

}

}

EOF

Start Logstash with this pipeline

nohup logstash -f /etc/logstash/conf.d/first-pipeline.conf >/dev/null 2>&1 &

Configure the lb02 server

lb02: nginx proxy and Kibana server

Configure nginx

Configure the official yum repository

cat > /etc/yum.repos.d/nginx.repo <<EOF

[nginx-stable]

name=nginx stable repo

baseurl=http://nginx.org/packages/centos/\$releasever/\$basearch/

gpgcheck=1

enabled=1

gpgkey=https://nginx.org/keys/nginx_signing.key

module_hotfixes=true

EOF

Install nginx and its dependencies

yum -y install gcc gcc-c++ autoconf pcre pcre-devel make automake wget httpd-tools vim tree nginx

Create a user and group with uid=666 and gid=666

groupadd -g 666 www

useradd -u 666 -g 666 www -s /sbin/nologin -M

id www

Change the user in the main nginx configuration file to www

sed -i 's#user nginx;#user www;#g' /etc/nginx/nginx.conf

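Add a JSON log format to the main nginx configuration file

### Edit the main configuration file (add a JSON log format)

vim /etc/nginx/nginx.conf

……………… part of the file omitted ………………

access_log /var/log/nginx/access.log main;

# Add the following below the existing access_log line in the http block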

log_format access_json '{"@timestamp":"$time_iso8601",'

'"host":"$server_addr",'

'"clientip":"$remote_addr",'

'"size":$body_bytes_sent,'

'"responsetime":$request_time,'

'"upstreamtime":"$upstream_response_time",'

'"upstreamhost":"$upstream_addr",'

'"http_host":"$host",'

'"url":"$uri",'

'"domain":"$host",'

'"xff":"$http_x_forwarded_for",'

'"referer":"$http_referer",'

'"status":"$status"}';

access_log /var/log/nginx/access_json.log access_json;

Configure proxy_params

cat > /etc/nginx/proxy_params <<EOF

proxy_set_header Host \$http_host;

proxy_set_header X-Real-IP \$remote_addr;

proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;

proxy_connect_timeout 30;

proxy_send_timeout 60;

proxy_read_timeout 60;

proxy_buffering on;

proxy_buffer_size 32k;

proxy_buffers 4 128k;

EOF

Configure load balancing

cat > /etc/nginx/conf.d/web_proxy.conf <<EOF

upstream www_pools {

server 10.0.0.7;

server 10.0.0.8;

}

server {

listen 80;

server_name blog.xxx.com;

location / {

proxy_pass http://www_pools;

include proxy_params;

}

}

EOF

Proxy Kibana

cat > /etc/nginx/conf.d/kibana.conf <<EOF

server {

listen 80;

server_name kibana.xxx.com;

location / {

proxy_pass http://10.0.0.6:5601;

include proxy_params;

}

}

EOF

Start the service and enable it at boot

systemctl start nginx

systemctl enable nginx

Configure keepalived

Install keepalived

yum -y install keepalived

Edit the configuration file

cat > /etc/keepalived/keepalived.conf <<EOF

global_defs {

router_id keepalived02

}

vrrp_instance VI_1 {

state BACKUP

nopreempt

interface eth0

virtual_router_id 50

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.3

}

}

EOF

Start the service and enable it at boot

systemctl start keepalived

systemctl enable keepalived
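Once keepalived runs on both load balancers, the virtual address 10.0.0.3 should be bound on exactly one of them (normally lb01, which has the higher priority). A small sketch for checking this follows; the helper name `has_vip` is hypothetical, and it only inspects the text produced by `ip -o -4 addr show`.

```shell
#!/usr/bin/env bash
# has_vip: read `ip -o -4 addr show` output on stdin and succeed if the
# given address is bound. Dots are escaped so they match literally.
has_vip() {
  local vip="${1//./\\.}"
  grep -Eq "inet ${vip}/"
}

# Usage on lb01 or lb02 (10.0.0.3 is the virtual_ipaddress configured above):
#   ip -o -4 addr show dev eth0 | has_vip 10.0.0.3 && echo "this node holds the VIP"
```

Stopping nginx on lb01 and repeating the check on lb02 is one way to exercise the failover path.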

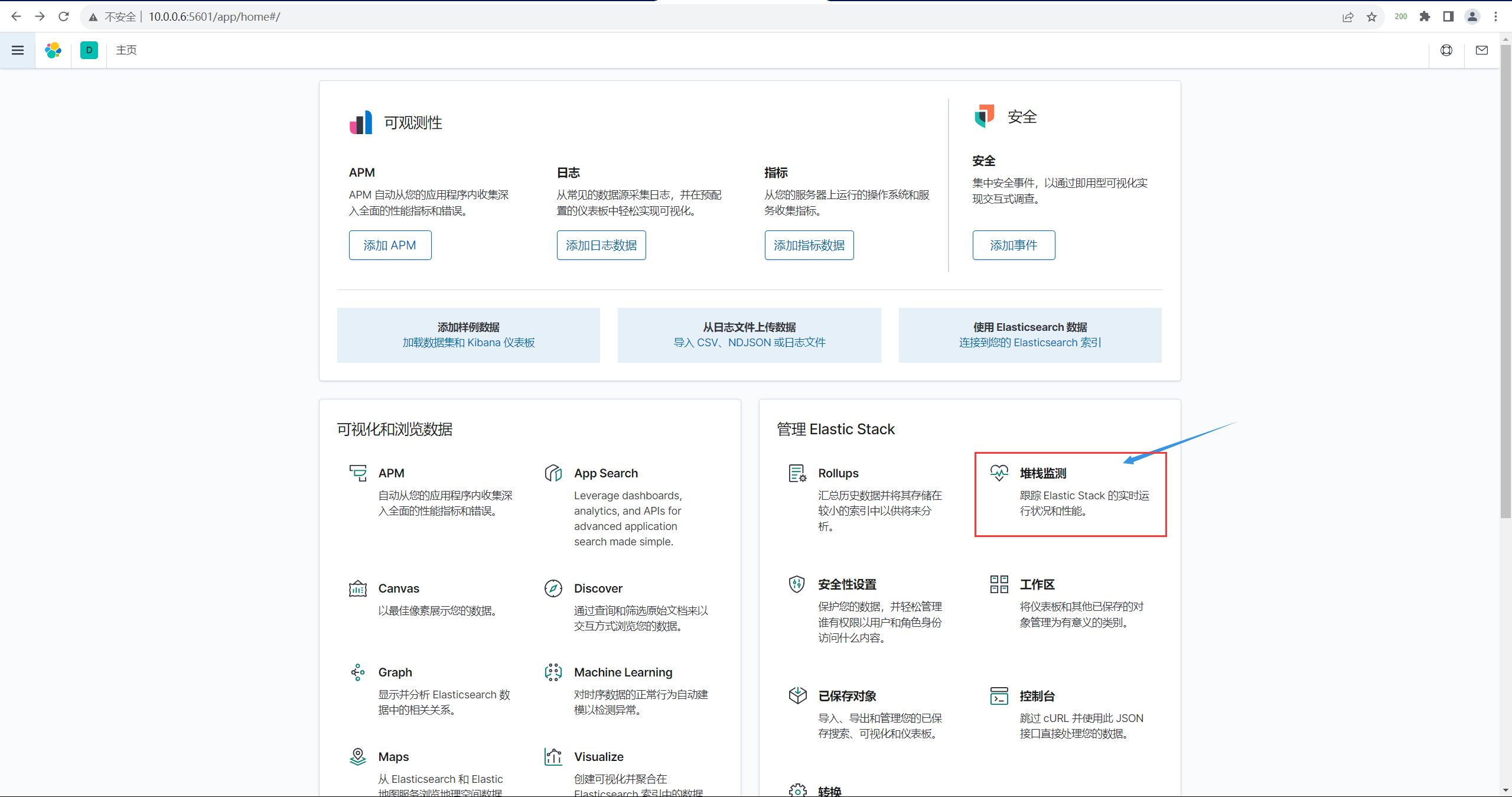

Configure Kibana

Download and install Kibana

yum -y install https://mirrors.tuna.tsinghua.edu.cn/elasticstack/7.x/yum/7.9.1/kibana-7.9.1-x86_64.rpm

Edit the Kibana configuration file

kibana_ip=$(ifconfig eth0 | awk 'NR==2 {print $2}')

es_ip=10.0.0.81

cat >> /etc/kibana/kibana.yml <<EOF

server.port: 5601

server.host: "${kibana_ip}"

elasticsearch.hosts: ["http://${es_ip}:9200"]

kibana.index: ".kibana"

i18n.locale: "zh-CN"

EOF

Start the service and enable it at boot

systemctl start kibana

systemctl enable kibana

Check the process

ps -ef | grep kibana

Configure the web01 server

web01: nginx, Filebeat, and Kafka server

Configure nginx

Configure the official yum repository

cat > /etc/yum.repos.d/nginx.repo <<EOF

[nginx-stable]

name=nginx stable repo

baseurl=http://nginx.org/packages/centos/\$releasever/\$basearch/

gpgcheck=1

enabled=1

gpgkey=https://nginx.org/keys/nginx_signing.key

module_hotfixes=true

EOF

Install nginx and its dependencies

yum -y install gcc gcc-c++ autoconf pcre pcre-devel make automake wget httpd-tools vim tree nginx

Create a user and group with uid=666 and gid=666

groupadd -g 666 www

useradd -u 666 -g 666 www -s /sbin/nologin -M

id www

Change the user in the main nginx configuration file to www

sed -i 's#user nginx;#user www;#g' /etc/nginx/nginx.conf

Configure the third-party PHP repository

cat > /etc/yum.repos.d/php.repo <<EOF

[php-webtatic]

name = PHP Repository

baseurl = http://us-east.repo.webtatic.com/yum/el7/x86_64/

gpgcheck = 0

EOF

Install PHP and its dependencies

yum -y install php71w php71w-cli php71w-common php71w-devel php71w-embedded php71w-gd php71w-mcrypt php71w-mbstring php71w-pdo php71w-xml php71w-fpm php71w-mysqlnd php71w-opcache php71w-pecl-memcached php71w-pecl-redis php71w-pecl-mongodb

Change user and group in the php-fpm configuration file to www

sed -i 's#user = apache#user = www#g' /etc/php-fpm.d/www.conf

sed -i 's#group = apache#group = www#g' /etc/php-fpm.d/www.conf

Edit the site configuration file

cat > /etc/nginx/conf.d/www.conf <<EOF

server {

listen 80;

server_name blog.xxx.com;

location / {

root /code;

index index.html;

}

}

EOF

Create the nginx site directory

mkdir -p /code

Write the site's index page

echo "$HOSTNAME" > /code/index.html

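Add a JSON log format to the main nginx configuration file

### Edit the main configuration file (add a JSON log format)

vim /etc/nginx/nginx.conf

……………… part of the file omitted ………………

access_log /var/log/nginx/access.log main;

# Add the following below the existing access_log line in the http block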

log_format access_json '{"@timestamp":"$time_iso8601",'

'"host":"$server_addr",'

'"clientip":"$remote_addr",'

'"size":$body_bytes_sent,'

'"responsetime":$request_time,'

'"upstreamtime":"$upstream_response_time",'

'"upstreamhost":"$upstream_addr",'

'"http_host":"$host",'

'"url":"$uri",'

'"domain":"$host",'

'"xff":"$http_x_forwarded_for",'

'"referer":"$http_referer",'

'"status":"$status"}';

access_log /var/log/nginx/access_json.log access_json;

Start the services and enable them at boot

systemctl start php-fpm nginx

systemctl enable php-fpm nginx

Check the ports

netstat -lnupt | egrep "9000|80"

Configure Filebeat

Download and install Filebeat 7.9

yum -y install https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.9.1-x86_64.rpm

Back up the original Filebeat configuration file

cp /etc/filebeat/filebeat.yml{,.bak}

Comment out every line of the configuration file

sed -i 's/^[^#]/#&/' /etc/filebeat/filebeat.yml

Verify that the whole file is commented out

egrep -v "^$|^ *#" /etc/filebeat/filebeat.yml

Edit the Filebeat configuration file

cat >> /etc/filebeat/filebeat.yml <<EOF

filebeat.inputs:

- type: log

  enabled: false

  paths:

    - /var/log/*.log

filebeat.config.modules:

  path: \${path.config}/modules.d/*.yml

  reload.enabled: false

setup.template.settings:

  index.number_of_shards: 1

# ------------------------------ kafka Output -------------------------------

output.kafka:

  hosts: ["10.0.0.7:9092","10.0.0.8:9092"]

  enabled: true

  topic: 'nginx'

  partition.round_robin:

    reachable_only: false

  required_acks: 1

  compression: gzip

  max_message_bytes: 1000000

processors:

  - drop_event:

      when:

        regexp:

          message: "^DBG:"

  - drop_fields:

      fields: ['input',"ecs.version"]

  - add_host_metadata:

      when.not.contains.tags: forwarded

  - add_cloud_metadata: ~

  - add_docker_metadata: ~

  - add_kubernetes_metadata: ~

EOF

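Enable the nginx module

filebeat modules enable nginx

Back up the configuration file

cp /etc/filebeat/modules.d/nginx.yml{,.bak}

Comment out every line of the configuration file

sed -i 's/^[^#]/#&/' /etc/filebeat/modules.d/nginx.yml

Verify that the whole file is commented out

egrep -v "^$|^ *#" /etc/filebeat/modules.d/nginx.yml

Edit the nginx module configuration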

cat >> /etc/filebeat/modules.d/nginx.yml <<EOF

- module: nginx

  access:

    enabled: true

    var.paths: ["/var/log/nginx/access_json.log"]

  error:

    enabled: true

  ingress_controller:

    enabled: false

EOF

Start the service and enable it at boot

systemctl start filebeat

systemctl enable filebeat

Check the process

ps -ef | grep filebeat

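Configure the Java environment

Create the /app directory

mkdir /app

Upload the package and extract it

tar xf jdk-12.0.1_linux-x64_bin.tar.gz -C /app

Create a symbolic link

ln -s /app/jdk-12.0.1 /app/jdk

Configure environment variables

### Edit the environment variable file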

cat > /etc/profile.d/jdk12.sh <<EOF

export JAVA_HOME=/app/jdk

export PATH=\$PATH:\$JAVA_HOME/bin

EOF

### Apply the configuration

source /etc/profile

Configure ZooKeeper

Upload the package and extract it

tar xf zookeeper-3.4.14.tar.gz -C /app

Create a symbolic link

ln -s /app/zookeeper-3.4.14 /app/zookeeper

Configure Kafka

Upload the package and extract it

tar xf kafka_2.12-2.2.1.tgz -C /app

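Create a symbolic link

ln -s /app/kafka_2.12-2.2.1 /app/kafka

Back up the configuration file

cp /app/kafka/config/zookeeper.properties{,.bak}

Comment out every line of the configuration file

sed -i 's/^[^#]/#&/' /app/kafka/config/zookeeper.properties

Verify that the whole file is commented out

egrep -v "^$|^ *#" /app/kafka/config/zookeeper.properties

Edit the configuration file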

cat >> /app/kafka/config/zookeeper.properties <<EOF

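# ZooKeeper data directory

dataDir=/app/kafka/data/zookeeper/data

# ZooKeeper transaction log directory

dataLogDir=/app/kafka/data/zookeeper/logs

# Port on which clients connect to ZooKeeper

clientPort=2181

# Heartbeat interval (ms) between ZooKeeper servers and between clients and servers

tickTime=2000

# Time (in ticks) allowed for a follower to connect and sync to the leader initially; beyond this the connection is considered failed

initLimit=20

# Time (in ticks) within which a follower must communicate with the leader; a follower that cannot is dropped

syncLimit=10

# Adjust the IPs below to match the servers in the Kafka cluster

# 2888 is the port followers use to exchange data with the leader; 3888 is the port the servers use for leader election when the leader fails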

server.1=10.0.0.7:2888:3888

server.2=10.0.0.8:2888:3888

EOF

Create the data and logs directories

mkdir -p /app/kafka/data/zookeeper/{data,logs}

Create the myid file that identifies this server instance

echo 1 > /app/kafka/data/zookeeper/data/myid
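The number written to `myid` has to match the `server.N` entry for this host in zookeeper.properties. To avoid hard-coding it per host, the value can be derived from the properties file. The helper below is a sketch under that assumption; the name `myid_for_ip` is hypothetical.

```shell
#!/usr/bin/env bash
# myid_for_ip: print the N from the "server.N=<ip>:..." line matching the given IP.
# Arguments: path to zookeeper.properties, IP address of this host.
# Prints nothing if no server line matches.
myid_for_ip() {
  local file="$1" ip="${2//./\\.}"
  sed -n "s/^server\.\([0-9][0-9]*\)=${ip}:.*/\1/p" "$file"
}

# Usage on either broker (paths as in the steps above):
#   myid_for_ip /app/kafka/config/zookeeper.properties 10.0.0.7 \
#     > /app/kafka/data/zookeeper/data/myid
```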

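Back up the configuration file

cp /app/kafka/config/server.properties{,.bak}

Comment out every line of the configuration file

sed -i 's/^[^#]/#&/' /app/kafka/config/server.properties

Verify that the whole file is commented out

egrep -v "^$|^ *#" /app/kafka/config/server.properties

Edit the configuration file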

cat >> /app/kafka/config/server.properties <<EOF

broker.id=1

listeners=PLAINTEXT://10.0.0.7:9092

num.network.threads=3

num.io.threads=3

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/app/data/kafka/logs

num.partitions=6

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=2

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=10.0.0.7:2181,10.0.0.8:2181

zookeeper.connection.timeout.ms=6000

group.initial.rebalance.delay.ms=0

EOF

Create the log directory

mkdir -p /app/data/kafka/logs

Start the ZooKeeper service

nohup /app/kafka/bin/zookeeper-server-start.sh /app/kafka/config/zookeeper.properties &

Check the port

netstat -lnupt | grep 2181
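A listening port 2181 only shows that the process is up. With two servers in the ensemble, a majority means both, so ZooKeeper on web01 will most likely not serve requests until the instance on web02 is started as well. ZooKeeper's `srvr` four-letter command reports the serving mode; the sketch below checks its reply. The helper name `zk_serving` is hypothetical, and the usage line assumes `nc` is installed, which is not part of the steps above.

```shell
#!/usr/bin/env bash
# zk_serving: read the reply to ZooKeeper's "srvr" command on stdin and succeed
# only if the server reports a serving mode (leader, follower or standalone).
zk_serving() {
  grep -Eq '^Mode: (leader|follower|standalone)$'
}

# Usage (assumes nc is available on the host):
#   echo srvr | nc 10.0.0.7 2181 | zk_serving && echo "ZooKeeper is serving"
```

Starting Kafka only after this check passes on both hosts avoids a broker that exits because it could not reach ZooKeeper within `zookeeper.connection.timeout.ms`.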

Start the Kafka service

nohup /app/kafka/bin/kafka-server-start.sh /app/kafka/config/server.properties &

List the running Java services

jps -l

Configure the web02 server

web02: nginx, Filebeat, and Kafka server

Configure nginx

Configure the official yum repository

cat > /etc/yum.repos.d/nginx.repo <<EOF

[nginx-stable]

name=nginx stable repo

baseurl=http://nginx.org/packages/centos/\$releasever/\$basearch/

gpgcheck=1

enabled=1

gpgkey=https://nginx.org/keys/nginx_signing.key

module_hotfixes=true

EOF

Install nginx and its dependencies

yum -y install gcc gcc-c++ autoconf pcre pcre-devel make automake wget httpd-tools vim tree nginx

Create a user and group with uid=666 and gid=666

groupadd -g 666 www

useradd -u 666 -g 666 www -s /sbin/nologin -M

id www

Change the user in the main nginx configuration file to www

sed -i 's#user nginx;#user www;#g' /etc/nginx/nginx.conf

Configure the third-party PHP repository

cat > /etc/yum.repos.d/php.repo <<EOF

[php-webtatic]

name = PHP Repository

baseurl = http://us-east.repo.webtatic.com/yum/el7/x86_64/

gpgcheck = 0

EOF

Install PHP and its dependencies

yum -y install php71w php71w-cli php71w-common php71w-devel php71w-embedded php71w-gd php71w-mcrypt php71w-mbstring php71w-pdo php71w-xml php71w-fpm php71w-mysqlnd php71w-opcache php71w-pecl-memcached php71w-pecl-redis php71w-pecl-mongodb

Change user and group in the php-fpm configuration file to www

sed -i 's#user = apache#user = www#g' /etc/php-fpm.d/www.conf

sed -i 's#group = apache#group = www#g' /etc/php-fpm.d/www.conf

Edit the site configuration file

cat > /etc/nginx/conf.d/www.conf <<EOF

server {

listen 80;

server_name blog.xxx.com;

location / {

root /code;

index index.html;

}

}

EOF

Start the services and enable them at boot

systemctl start php-fpm nginx

systemctl enable php-fpm nginx

Check the ports

netstat -lnupt | egrep "9000|80"

Create the nginx site directory

mkdir -p /code

Write the site's index page

echo "$HOSTNAME" > /code/index.html

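Add a JSON log format to the main nginx configuration file

### Edit the main configuration file (add a JSON log format)

vim /etc/nginx/nginx.conf

……………… part of the file omitted ………………

access_log /var/log/nginx/access.log main;

# Add the following below the existing access_log line in the http block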

log_format access_json '{"@timestamp":"$time_iso8601",'

'"host":"$server_addr",'

'"clientip":"$remote_addr",'

'"size":$body_bytes_sent,'

'"responsetime":$request_time,'

'"upstreamtime":"$upstream_response_time",'

'"upstreamhost":"$upstream_addr",'

'"http_host":"$host",'

'"url":"$uri",'

'"domain":"$host",'

'"xff":"$http_x_forwarded_for",'

'"referer":"$http_referer",'

'"status":"$status"}';

access_log /var/log/nginx/access_json.log access_json;

Configure Filebeat

Download and install Filebeat 7.9

yum -y install https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.9.1-x86_64.rpm

Back up the original Filebeat configuration file

cp /etc/filebeat/filebeat.yml{,.bak}

Comment out every line of the configuration file

sed -i 's/^[^#]/#&/' /etc/filebeat/filebeat.yml

Verify that the whole file is commented out

egrep -v "^$|^ *#" /etc/filebeat/filebeat.yml

Edit the Filebeat configuration file

cat >> /etc/filebeat/filebeat.yml <<EOF

filebeat.inputs:

- type: log

  enabled: false

  paths:

    - /var/log/*.log

filebeat.config.modules:

  path: \${path.config}/modules.d/*.yml

  reload.enabled: false

setup.template.settings:

  index.number_of_shards: 1

# ------------------------------ kafka Output -------------------------------

output.kafka:

  hosts: ["10.0.0.7:9092","10.0.0.8:9092"]

  enabled: true

  topic: 'nginx'

  partition.round_robin:

    reachable_only: false

  required_acks: 1

  compression: gzip

  max_message_bytes: 1000000

processors:

  - drop_event:

      when:

        regexp:

          message: "^DBG:"

  - drop_fields:

      fields: ['input',"ecs.version"]

  - add_host_metadata:

      when.not.contains.tags: forwarded

  - add_cloud_metadata: ~

  - add_docker_metadata: ~

  - add_kubernetes_metadata: ~

EOF

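Enable the nginx module

filebeat modules enable nginx

Back up the configuration file

cp /etc/filebeat/modules.d/nginx.yml{,.bak}

Comment out every line of the configuration file

sed -i 's/^[^#]/#&/' /etc/filebeat/modules.d/nginx.yml

Verify that the whole file is commented out

egrep -v "^$|^ *#" /etc/filebeat/modules.d/nginx.yml

Edit the nginx module configuration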

cat >> /etc/filebeat/modules.d/nginx.yml <<EOF

- module: nginx

  access:

    enabled: true

    var.paths: ["/var/log/nginx/access_json.log"]

  error:

    enabled: true

  ingress_controller:

    enabled: false

EOF

Start the service and enable it at boot

systemctl start filebeat

systemctl enable filebeat

Check the process

ps -ef | grep filebeat

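Configure the Java environment

Create the /app directory

mkdir /app

Upload the package and extract it

tar xf jdk-12.0.1_linux-x64_bin.tar.gz -C /app

Create a symbolic link

ln -s /app/jdk-12.0.1 /app/jdk

Configure environment variables

### Edit the environment variable file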

cat > /etc/profile.d/jdk12.sh <<EOF

export JAVA_HOME=/app/jdk

export PATH=\$PATH:\$JAVA_HOME/bin

EOF

### Apply the configuration

source /etc/profile

Configure ZooKeeper

Upload the package and extract it

tar xf zookeeper-3.4.14.tar.gz -C /app

Create a symbolic link

ln -s /app/zookeeper-3.4.14 /app/zookeeper

Configure Kafka

Upload the package and extract it

tar xf kafka_2.12-2.2.1.tgz -C /app

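Create a symbolic link

ln -s /app/kafka_2.12-2.2.1 /app/kafka

Back up the configuration file

cp /app/kafka/config/zookeeper.properties{,.bak}

Comment out every line of the configuration file

sed -i 's/^[^#]/#&/' /app/kafka/config/zookeeper.properties

Verify that the whole file is commented out

egrep -v "^$|^ *#" /app/kafka/config/zookeeper.properties

Edit the configuration file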

cat >> /app/kafka/config/zookeeper.properties <<EOF

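# ZooKeeper data directory

dataDir=/app/kafka/data/zookeeper/data

# ZooKeeper transaction log directory

dataLogDir=/app/kafka/data/zookeeper/logs

# Port on which clients connect to ZooKeeper

clientPort=2181

# Heartbeat interval (ms) between ZooKeeper servers and between clients and servers

tickTime=2000

# Time (in ticks) allowed for a follower to connect and sync to the leader initially; beyond this the connection is considered failed

initLimit=20

# Time (in ticks) within which a follower must communicate with the leader; a follower that cannot is dropped

syncLimit=10

# Adjust the IPs below to match the servers in the Kafka cluster

# 2888 is the port followers use to exchange data with the leader; 3888 is the port the servers use for leader election when the leader fails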

server.1=10.0.0.7:2888:3888

server.2=10.0.0.8:2888:3888

EOF

Create the data and logs directories

mkdir -p /app/kafka/data/zookeeper/{data,logs}

Create the myid file that identifies this server instance

echo 2 > /app/kafka/data/zookeeper/data/myid

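Back up the configuration file

cp /app/kafka/config/server.properties{,.bak}

Comment out every line of the configuration file

sed -i 's/^[^#]/#&/' /app/kafka/config/server.properties

Verify that the whole file is commented out

egrep -v "^$|^ *#" /app/kafka/config/server.properties

Edit the configuration file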

cat >> /app/kafka/config/server.properties <<EOF

# Broker ID, unique per broker

broker.id=2

# Listener address of this host

listeners=PLAINTEXT://10.0.0.8:9092

num.network.threads=3

num.io.threads=3

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/app/data/kafka/logs

num.partitions=6

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=2

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=10.0.0.7:2181,10.0.0.8:2181

zookeeper.connection.timeout.ms=6000

group.initial.rebalance.delay.ms=0

EOF

Create the log directory

mkdir -p /app/data/kafka/logs

Start the ZooKeeper service

nohup /app/kafka/bin/zookeeper-server-start.sh /app/kafka/config/zookeeper.properties >/dev/null 2>&1 &

Check the port

netstat -lnupt | grep 2181

Start the Kafka service

nohup /app/kafka/bin/kafka-server-start.sh /app/kafka/config/server.properties >/dev/null 2>&1 &

List the running Java services

jps -l

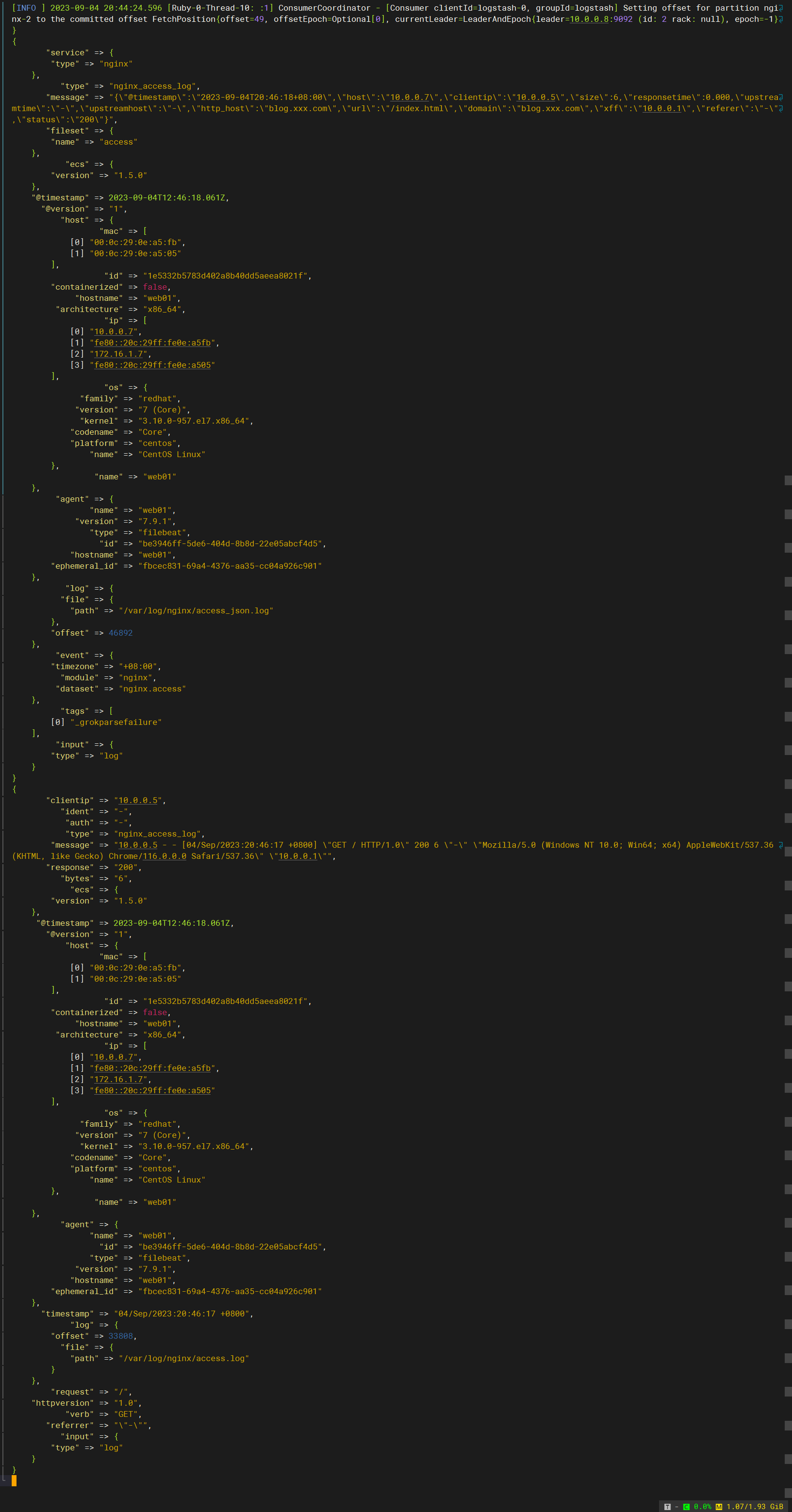

Logstash output check

Kafka content check

# List all topics

[root@web01 ~]# /app/kafka/bin/kafka-topics.sh --zookeeper localhost:2181 --list

__consumer_offsets

nginx

# Describe the nginx topic

[root@web01 ~]# /app/kafka/bin/kafka-topics.sh --zookeeper localhost:2181 --describe --topic nginx

Topic:nginx PartitionCount:6 ReplicationFactor:1 Configs:

Topic: nginx Partition: 0 Leader: 2 Replicas: 2 Isr: 2

Topic: nginx Partition: 1 Leader: 2 Replicas: 2 Isr: 2

Topic: nginx Partition: 2 Leader: 2 Replicas: 2 Isr: 2

Topic: nginx Partition: 3 Leader: 2 Replicas: 2 Isr: 2

Topic: nginx Partition: 4 Leader: 2 Replicas: 2 Isr: 2

Topic: nginx Partition: 5 Leader: 2 Replicas: 2 Isr: 2
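In the output above every partition has broker 2 as leader and `ReplicationFactor:1`, so at that moment broker 1 held none of the topic and the topic had no redundancy. To see at a glance how leadership is spread, the describe output can be summarized. The following is a small sketch; the helper name `leaders_per_broker` is hypothetical.

```shell
#!/usr/bin/env bash
# leaders_per_broker: read `kafka-topics.sh --describe` output on stdin and
# print how many partitions each broker leads, one "broker <id>: <count>" per line.
leaders_per_broker() {
  awk '{
         # Find the "Leader:" token and count the broker ID that follows it.
         for (i = 1; i < NF; i++)
           if ($i == "Leader:") count[$(i + 1)]++
       }
       END { for (b in count) printf "broker %s: %d\n", b, count[b] }' | sort
}

# Usage on web01:
#   /app/kafka/bin/kafka-topics.sh --zookeeper localhost:2181 --describe --topic nginx \
#     | leaders_per_broker
```

For the describe output shown above, this prints `broker 2: 6`.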

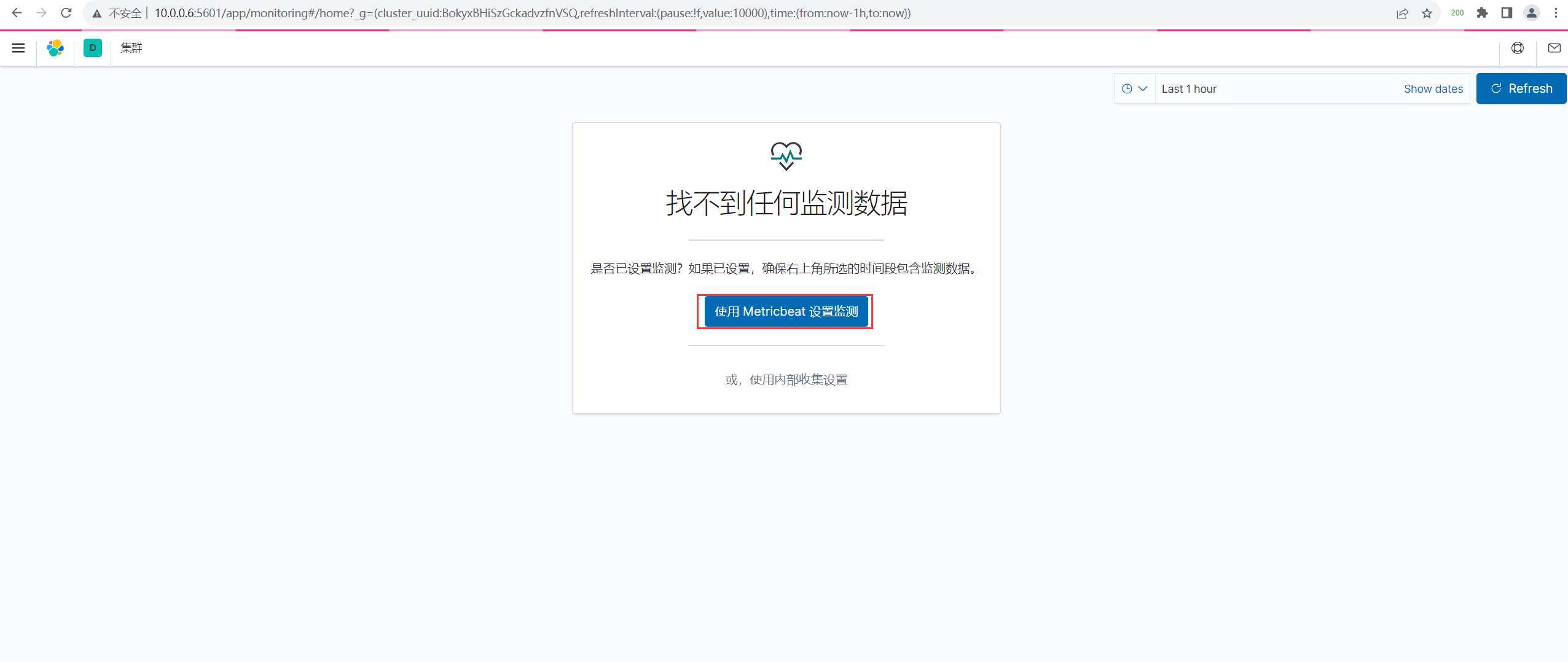

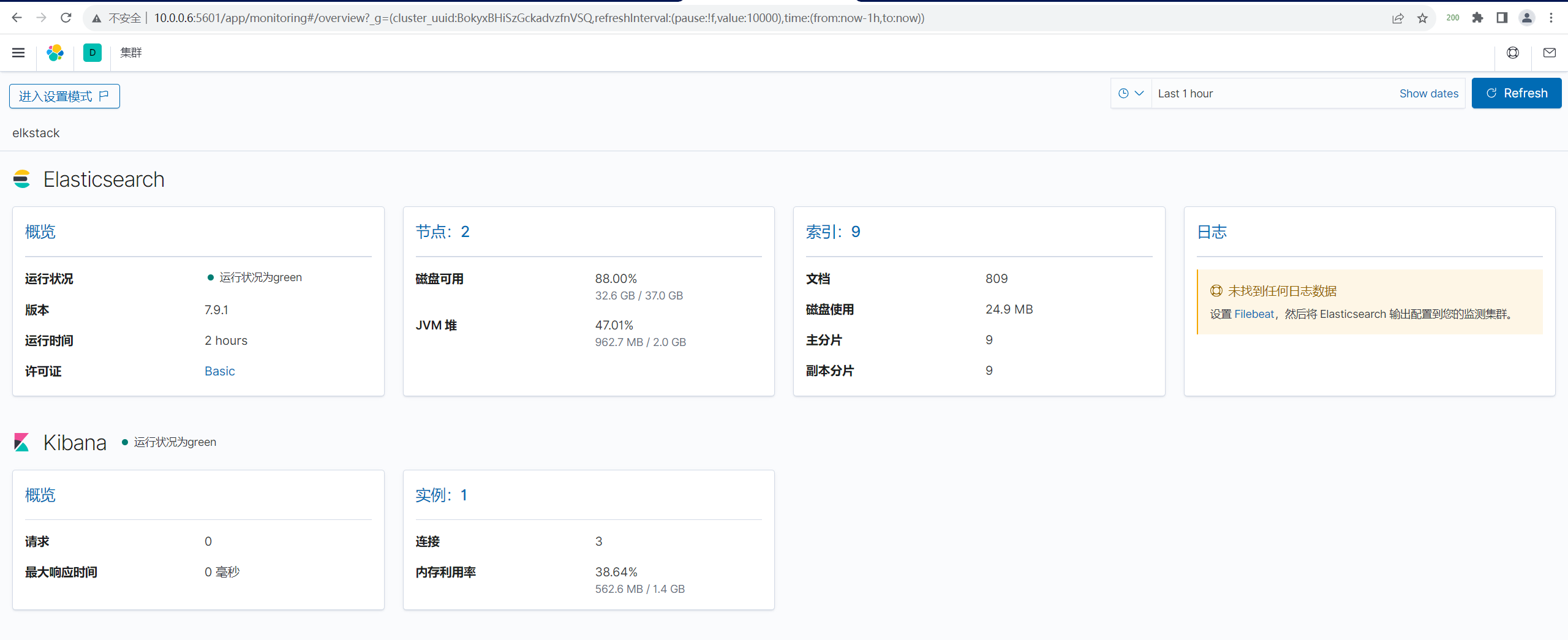

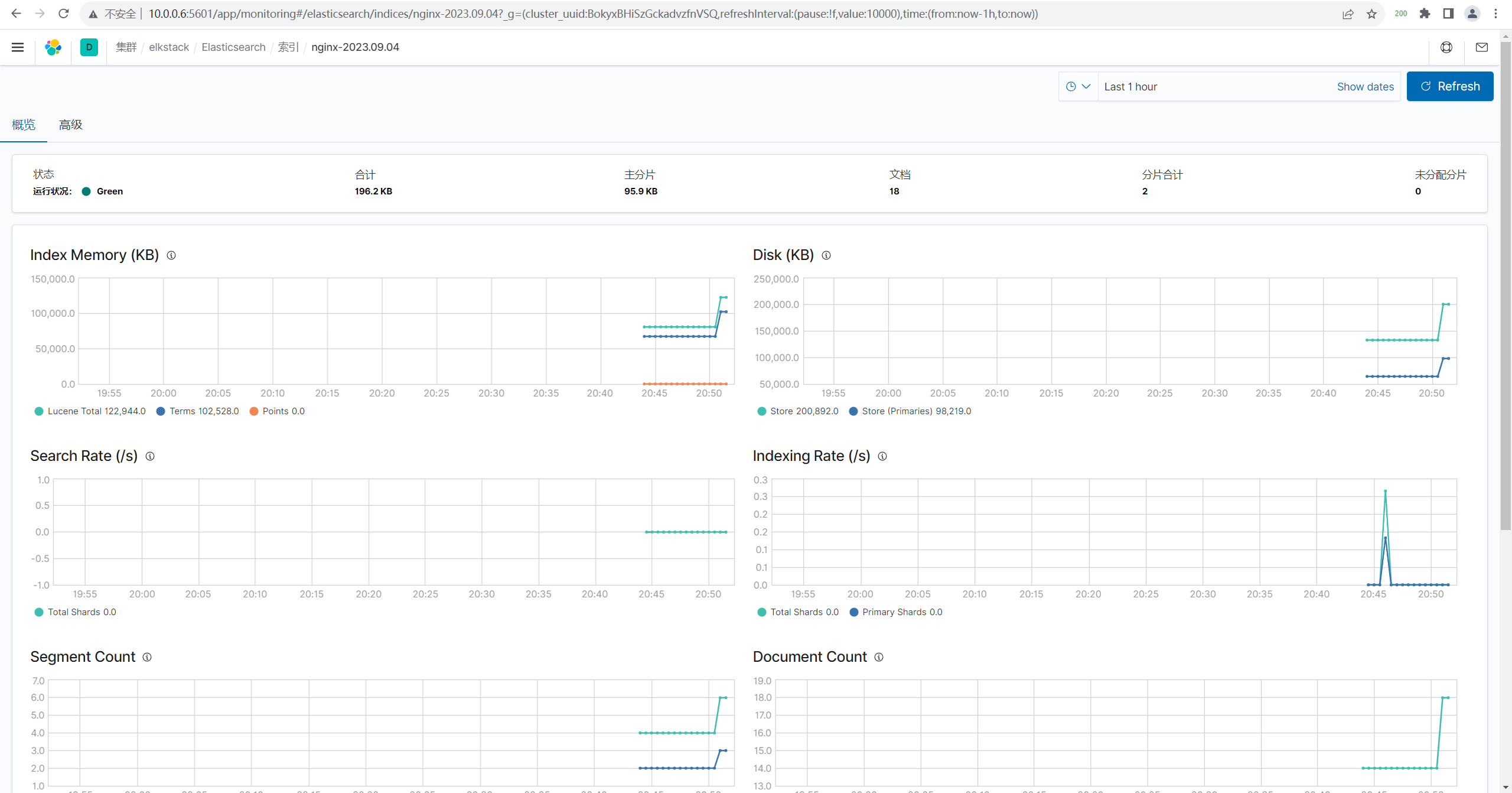

Kibana
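Before creating an index pattern in Kibana, it can help to confirm that Logstash has actually written a daily index. The Logstash output configured earlier names indices `nginx-%{+YYYY.MM.dd}`, and that date comes from the event's `@timestamp` in UTC. The sketch below computes the expected name; the helper name `index_for_day` is hypothetical, and it relies on GNU `date`.

```shell
#!/usr/bin/env bash
# index_for_day: print the index name the Logstash output would use for a day.
# Argument: a date string understood by GNU date (default: now).
# UTC is used because the index date is taken from @timestamp in UTC.
index_for_day() {
  date -u -d "${1:-now}" +nginx-%Y.%m.%d
}

# Usage (needs the Elasticsearch nodes configured above):
#   curl -s "http://10.0.0.81:9200/_cat/indices/$(index_for_day)?v"
```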
