im开发总结:netty的使用

最近公司在做一个im群聊的开发,技术使用得非常多,各种代码封装得也是十分优美,使用到了netty,zookeeper,redis,线程池·,mongdb,lua,等系列的技术

netty是对nio的一种封装,很好从效率上解决了,nio的epoll模型的bug问题,那么netty到底是个什么玩意呢:

这个是官网上的一个截图:

大概的意思就是:

Netty是一种NIO客户端服务器框架,它支持网络应用程序(如协议服务器和客户端)的快速和轻松开发。它大大简化和简化了网络编程,如TCP和UDP套接字服务器。“快速和简单”并不意味着最终的应用程序会受到可维护性或性能问题的影响。Netty是根据许多协议(如FTP、SMTP、HTTP以及各种二进制和基于文本的遗留协议)的实现所获得的经验精心设计的。因此,Netty成功地找到了一种在不妥协的情况下实现轻松开发、性能、稳定和灵活性的方法。

那么到底怎么用呢:

我可以看到,userguid部分:

官方推荐使用netty4,netty5已经废弃了,

所以我们可以netty4,进行开发:

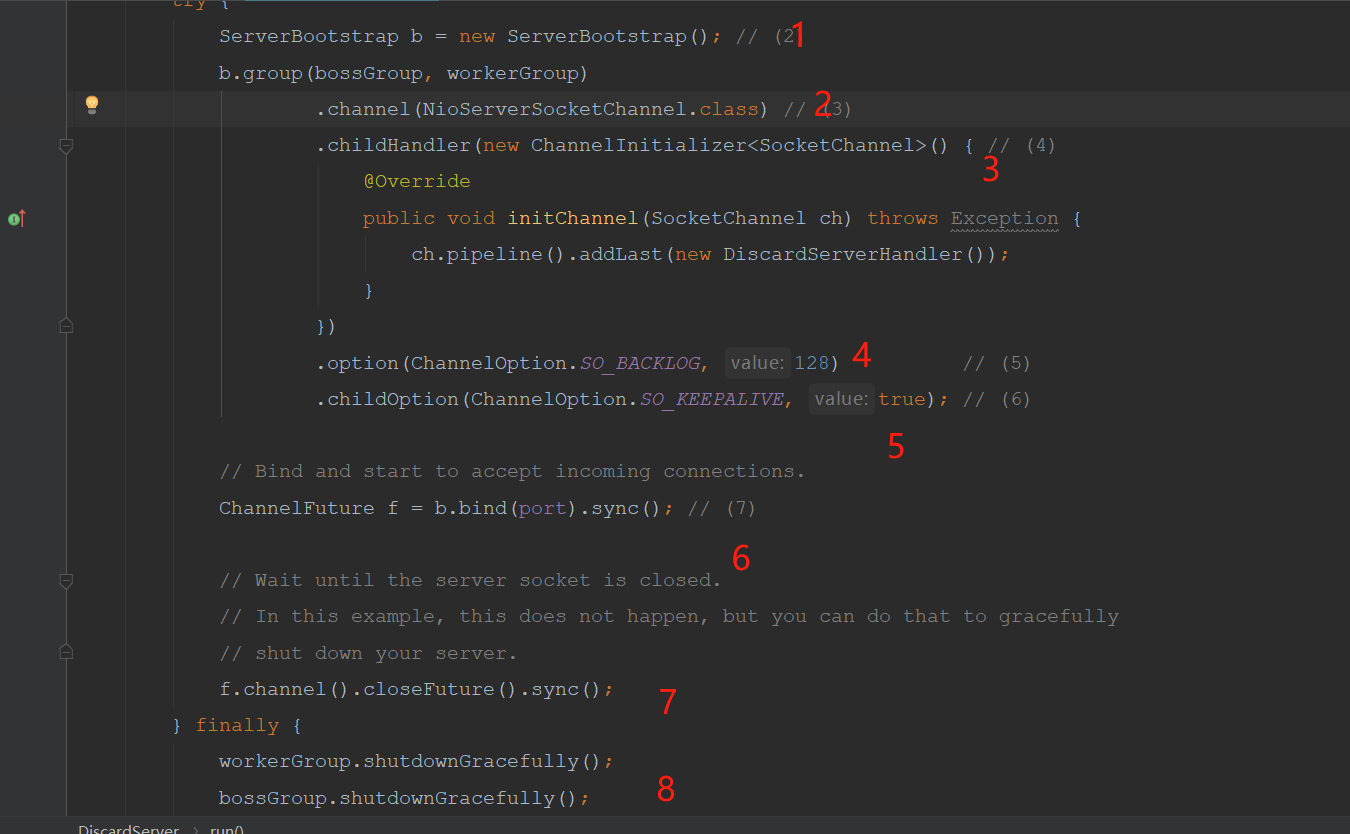

首先服务端:

package com.cxy.sever; import io.netty.bootstrap.ServerBootstrap; import io.netty.channel.ChannelFuture; import io.netty.channel.ChannelInitializer; import io.netty.channel.ChannelOption; import io.netty.channel.EventLoopGroup; import io.netty.channel.nio.NioEventLoopGroup; import io.netty.channel.socket.SocketChannel; import io.netty.channel.socket.nio.NioServerSocketChannel; public class DiscardServer { private int port; public DiscardServer(int port) { this.port = port; } public void run() throws Exception { EventLoopGroup bossGroup = new NioEventLoopGroup(); // (1) EventLoopGroup workerGroup = new NioEventLoopGroup(); try { ServerBootstrap b = new ServerBootstrap(); // (2) b.group(bossGroup, workerGroup) .channel(NioServerSocketChannel.class) // (3) .childHandler(new ChannelInitializer<SocketChannel>() { // (4) @Override public void initChannel(SocketChannel ch) throws Exception { ch.pipeline().addLast(new DiscardServerHandler()); } }) .option(ChannelOption.SO_BACKLOG, 128) // (5) .childOption(ChannelOption.SO_KEEPALIVE, true); // (6) // Bind and start to accept incoming connections. ChannelFuture f = b.bind(port).sync(); // (7) // Wait until the server socket is closed. // In this example, this does not happen, but you can do that to gracefully // shut down your server. f.channel().closeFuture().sync(); } finally { workerGroup.shutdownGracefully(); bossGroup.shutdownGracefully(); } } public static void main(String[] args) throws Exception { int port = 8081; if (args.length > 0) { port = Integer.parseInt(args[0]); } new DiscardServer(port).run(); } }

package com.cxy.sever; import io.netty.buffer.ByteBuf; import io.netty.channel.ChannelFuture; import io.netty.channel.ChannelFutureListener; import io.netty.channel.ChannelHandlerContext; import io.netty.channel.ChannelInboundHandlerAdapter; import io.netty.util.ReferenceCountUtil; public class DiscardServerHandler extends ChannelInboundHandlerAdapter { @Override public void channelActive(final ChannelHandlerContext ctx) { // (1) final ByteBuf time = ctx.alloc().buffer(4); // (2) time.writeInt((int) (System.currentTimeMillis() / 1000L + 2208988800L)); final ChannelFuture f = ctx.writeAndFlush(time); // (3) /*f.addListener(new ChannelFutureListener() { public void operationComplete(ChannelFuture future) { assert f == future; ctx.close(); } }); // (4)*/ } @Override public void channelRead(ChannelHandlerContext ctx, Object msg) { // (2) ByteBuf in = (ByteBuf) msg; try { while (in.isReadable()) { // (1) System.out.print((char) in.readByte()); System.out.flush(); } } finally { ReferenceCountUtil.release(msg); // (2) } } @Override public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) { // (4) // Close the connection when an exception is raised. cause.printStackTrace(); ctx.close(); } }

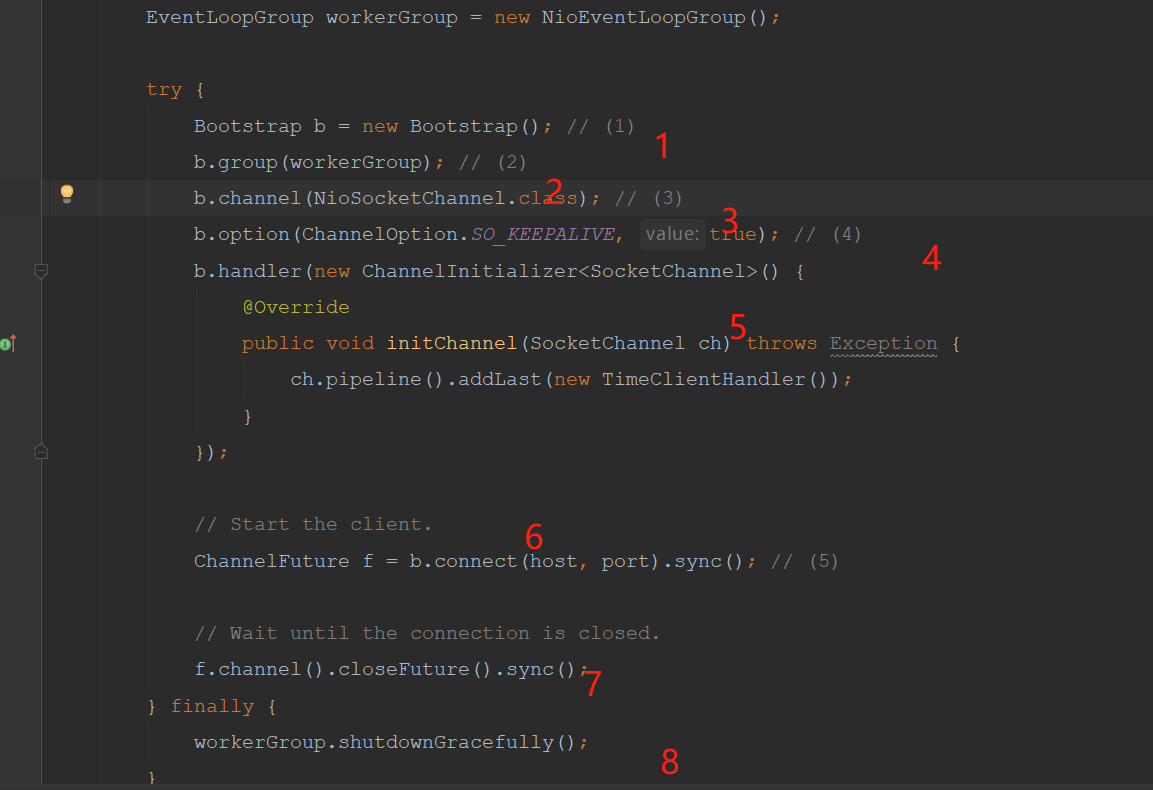

客户端

package com.cxy.client; import io.netty.bootstrap.Bootstrap; import io.netty.channel.ChannelFuture; import io.netty.channel.ChannelInitializer; import io.netty.channel.ChannelOption; import io.netty.channel.EventLoopGroup; import io.netty.channel.nio.NioEventLoopGroup; import io.netty.channel.socket.SocketChannel; import io.netty.channel.socket.nio.NioSocketChannel; public class TimeClient { public static void main(String[] args) throws Exception { String host = "127.0.0.1"; int port = 8081; EventLoopGroup workerGroup = new NioEventLoopGroup(); try { Bootstrap b = new Bootstrap(); // (1) b.group(workerGroup); // (2) b.channel(NioSocketChannel.class); // (3) b.option(ChannelOption.SO_KEEPALIVE, true); // (4) b.handler(new ChannelInitializer<SocketChannel>() { @Override public void initChannel(SocketChannel ch) throws Exception { ch.pipeline().addLast(new TimeClientHandler()); } }); // Start the client. ChannelFuture f = b.connect(host, port).sync(); // (5) // Wait until the connection is closed. f.channel().closeFuture().sync(); } finally { workerGroup.shutdownGracefully(); } } }

package com.cxy.client; import io.netty.buffer.ByteBuf; import io.netty.channel.ChannelHandlerContext; import io.netty.channel.ChannelInboundHandlerAdapter; import java.util.Date; public class TimeClientHandler extends ChannelInboundHandlerAdapter { @Override public void channelRead(ChannelHandlerContext ctx, Object msg) { ByteBuf m = (ByteBuf) msg; // (1) try { long currentTimeMillis = (m.readUnsignedInt() - 2208988800L) * 1000L; System.out.println(new Date(currentTimeMillis)); } finally { m.release(); } } @Override public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) { cause.printStackTrace(); ctx.close(); } }

整个代码都是官网的上的介绍,拿下来的,所以可以看到,整个基础代码就是那么多:

其中1 是构建服务端

2 初始化socket通道

3添加助手类

4 设置option

5 设置长连接

6 绑定端口

7 关闭channel

8 关闭主从线程池模型

1 创建客户端

2 加入线程池

3 设置长连接

4 绑定相关助手类

5 流水线上添加助手类

6 绑定连接的主机和端口

7 关闭channel

8 关闭工作线程池

可以看出,那么整合netty客户端,服务端的基础代码都是一致的,那么我们使用netty开发需要注意哪些问题呢

我们著需要关闭我们的handler的开发,其他的不用关心。