Integrating Kafka with Spring Boot

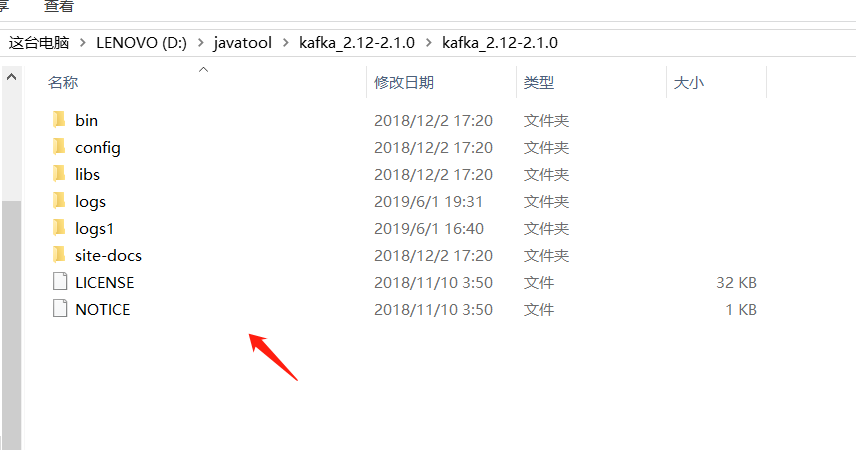

First, start Kafka on Windows, as follows.

Download the Kafka and ZooKeeper packages:

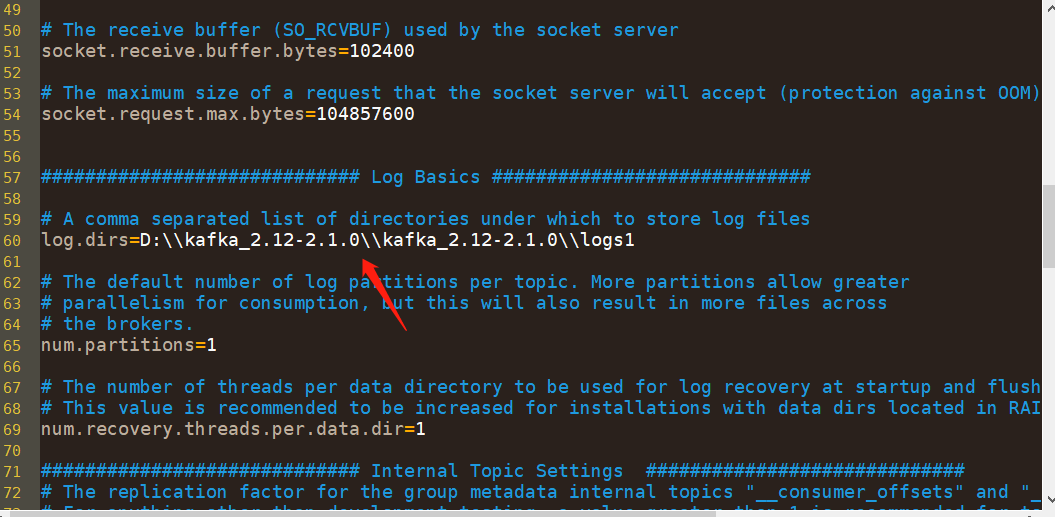

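Change the path setting to the directory you have configured on your machine. The files to edit are shown in the screenshots: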

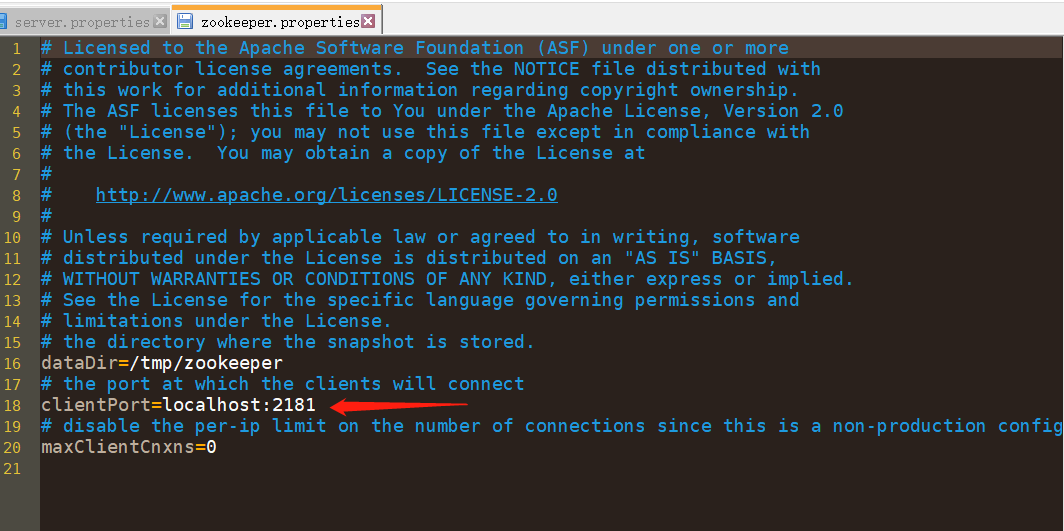

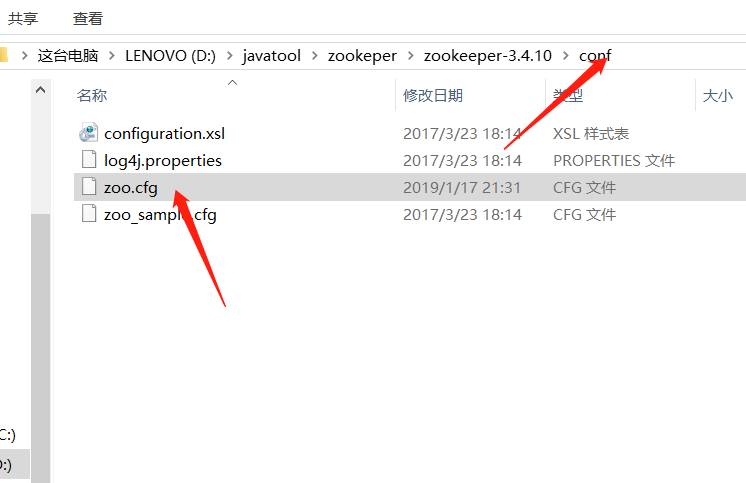

Starting ZooKeeper:

Copy the configuration file shown below, rename the copy to zoo.cfg, and then start ZooKeeper.

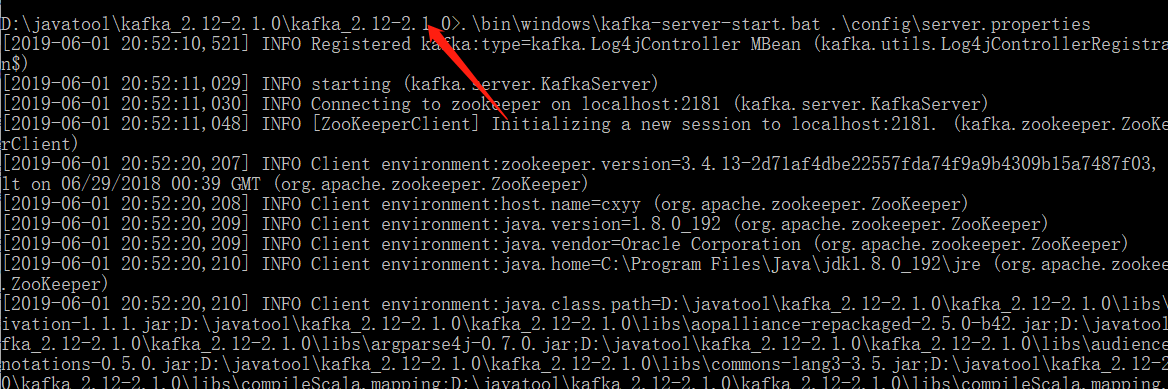

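Next, start Kafka. For reasons I could not determine, double-clicking the startup script never worked on my machine, so I started it from a command prompt instead:

    .\bin\windows\kafka-server-start.bat .\config\server.properties

Run this from the Kafka installation directory; there is no need to change into bin first.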
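Since the startup script can fail silently, it may help to confirm that both services are actually listening before starting the Spring Boot application. The following is a minimal sketch using only the JDK. The class name `BrokerPortCheck` and the helper `isListening` are hypothetical, port 9092 matches the broker address used in the configuration later in this post, and port 2181 is assumed to be ZooKeeper's default client port.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper, not part of the original project.
class BrokerPortCheck {

    // Returns true if a TCP connection to host:port succeeds within the timeout.
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host not resolvable.
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed ports: 2181 for ZooKeeper, 9092 for the Kafka broker.
        System.out.println("ZooKeeper (2181) listening: " + isListening("localhost", 2181, 1000));
        System.out.println("Kafka (9092) listening: " + isListening("localhost", 9092, 1000));
    }
}
```

An open port only shows that something accepted the connection; it does not prove the broker is healthy, so treat this as a quick first check.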

Next, integrate Kafka with Spring Boot:

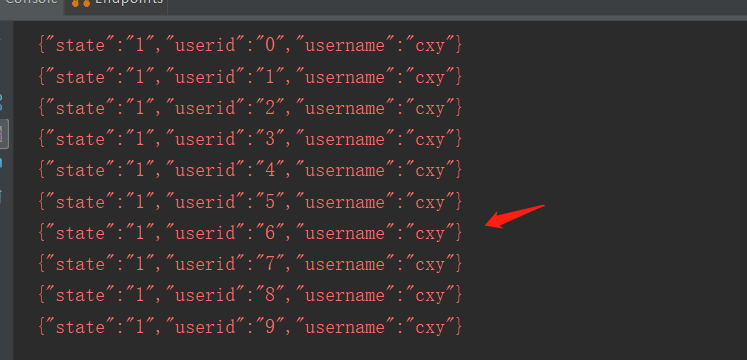

As the screenshot shows, the messages are sent during initialization.

Here is the code in detail:

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <parent>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>2.1.5.RELEASE</version>
            <relativePath/> <!-- lookup parent from repository -->
        </parent>
        <groupId>com.cxy</groupId>
        <artifactId>skafka</artifactId>
        <version>0.0.1-SNAPSHOT</version>
        <name>skafka</name>
        <description>Demo project for Spring Boot</description>

        <properties>
            <java.version>1.8</java.version>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-web</artifactId>
            </dependency>
            <dependency>
                <groupId>org.springframework.kafka</groupId>
                <artifactId>spring-kafka</artifactId>
            </dependency>
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>fastjson</artifactId>
                <version>1.2.56</version>
            </dependency>
            <dependency>
                <groupId>org.projectlombok</groupId>
                <artifactId>lombok</artifactId>
                <optional>true</optional>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-test</artifactId>
                <scope>test</scope>
            </dependency>
            <dependency>
                <groupId>org.springframework.kafka</groupId>
                <artifactId>spring-kafka-test</artifactId>
                <scope>test</scope>
            </dependency>
        </dependencies>

        <build>
            <plugins>
                <plugin>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-maven-plugin</artifactId>
                </plugin>
            </plugins>
        </build>
    </project>

Application class:

    package com.cxy.skafka;

    import com.cxy.skafka.component.UserLogProducer;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;

    import javax.annotation.PostConstruct;

    @SpringBootApplication
    public class SkafkaApplication {

        public static void main(String[] args) {
            SpringApplication.run(SkafkaApplication.class, args);
        }

        @Autowired
        private UserLogProducer userLogProducer;

        // Sends ten test messages (user ids 0 to 9) once this bean is initialized.
        @PostConstruct
        public void init() {
            for (int i = 0; i < 10; i++) {
                userLogProducer.sendlog(String.valueOf(i));
            }
        }
    }

Model:

    package com.cxy.skafka.model;

    import lombok.Data;
    import lombok.experimental.Accessors;

    /**
     * User log record that is sent to Kafka as JSON.
     * Created 2019/6/1 16:47.
     *
     * @author cxy
     * @version V1.0
     */
    @Data
    @Accessors
    public class Userlog {
        private String username;
        private String userid;
        private String state;
    }

Producer:

    package com.cxy.skafka.component;

    import com.alibaba.fastjson.JSON;
    import com.cxy.skafka.model.Userlog;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.kafka.core.KafkaTemplate;
    import org.springframework.stereotype.Component;

    /**
     * Sends user log records to the "userLog" topic.
     * Created 2019/6/1 16:48.
     *
     * @author cxy
     * @version V1.0
     */
    @Component
    public class UserLogProducer {

        // Key and value are both strings, matching the configured StringSerializer.
        @Autowired
        private KafkaTemplate<String, String> kafkaTemplate;

        public void sendlog(String userid) {
            Userlog userlog = new Userlog();
            userlog.setUsername("cxy");
            userlog.setState("1");
            userlog.setUserid(userid);
            // Debug output before sending.
            System.err.println(userlog + "1");
            // Serialize the record to JSON and send it without a key.
            kafkaTemplate.send("userLog", JSON.toJSONString(userlog));
        }
    }
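To make the message format concrete, the sketch below builds by hand the kind of JSON text the producer puts on the topic for each `Userlog`. It deliberately avoids fastjson so that it runs with the JDK alone. The class `UserlogPayloadSketch` and its `toJson` helper are hypothetical, the alphabetical field order is an assumption about the serializer's output rather than something shown in the original, and the helper does no escaping of special characters.

```java
// Hypothetical illustration of the JSON payload; the real project uses fastjson.
class UserlogPayloadSketch {

    // Builds the JSON text for one record. Field order is an assumption,
    // and values are inserted as-is without JSON escaping.
    static String toJson(String username, String userid, String state) {
        return String.format(
                "{\"state\":\"%s\",\"userid\":\"%s\",\"username\":\"%s\"}",
                state, userid, username);
    }

    public static void main(String[] args) {
        // Mirrors the startup loop, which sends user ids as strings.
        for (int i = 0; i < 3; i++) {
            System.out.println(toJson("cxy", String.valueOf(i), "1"));
        }
    }
}
```

Because the value is plain text, the `StringSerializer` and `StringDeserializer` settings are enough on both sides, and the consumer receives the same JSON string.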

Consumer:

    package com.cxy.skafka.component;

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.stereotype.Component;

    import java.util.Optional;

    /**
     * Listens on the "userLog" topic and prints each message.
     * Created 2019/6/1 16:54.
     *
     * @author cxy
     * @version V1.0
     */
    @Component
    public class UserLogConsumer {

        @KafkaListener(topics = {"userLog"})
        public void consumer(ConsumerRecord<String, String> consumerRecord) {
            // A record's value may be null, so guard before using it.
            Optional<String> kafkaMsg = Optional.ofNullable(consumerRecord.value());
            if (kafkaMsg.isPresent()) {
                String msg = kafkaMsg.get();
                System.err.println(msg);
            }
        }
    }
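The consumer wraps the record value in `Optional.ofNullable` because a Kafka record can carry a null value. The sketch below isolates that guard using only the JDK, so its behavior can be checked without a broker. The class `NullValueGuardSketch`, the `describe` helper, and the `<no value>` placeholder are hypothetical names chosen for illustration.

```java
import java.util.Optional;

// Hypothetical illustration of the null guard used in the consumer.
class NullValueGuardSketch {

    // Returns the value's text, or a placeholder when the value is null.
    static String describe(Object value) {
        return Optional.ofNullable(value)
                .map(Object::toString)
                .orElse("<no value>");
    }

    public static void main(String[] args) {
        System.out.println(describe("{\"userid\":\"0\"}"));
        System.out.println(describe(null));
    }
}
```

In the listener itself, skipping null values silently (as the original does) is a reasonable choice for a demo; a production consumer might log them instead.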

Configuration file:

    server.port=8080

    # Kafka broker address
    spring.kafka.bootstrap-servers=localhost:9092

    #=============== producer =======================
    # Number of retries when a send fails
    spring.kafka.producer.retries=0
    # Maximum size of one batch, in bytes
    spring.kafka.producer.batch-size=16384
    # Total memory, in bytes, for buffering records waiting to be sent (32 MB)
    spring.kafka.producer.buffer-memory=33554432
    # Serializers for the message key and value
    spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer
    spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer

    #=============== consumer =======================
    # Default consumer group id
    spring.kafka.consumer.group-id=user-log-group
    spring.kafka.consumer.auto-offset-reset=earliest
    spring.kafka.consumer.enable-auto-commit=true
    spring.kafka.consumer.auto-commit-interval=100
    # Deserializers for the message key and value
    spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer
    spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer
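Both `batch-size` and `buffer-memory` are measured in bytes, not in messages, which is easy to misread. As a rough illustration, the sketch below computes how many full batches fit in the buffer, assuming a 16384-byte batch size and a 32 MB buffer (33554432 bytes, Kafka's default for `buffer.memory`). The class `ProducerBufferSketch` and its helper are hypothetical, and the calculation ignores per-record overhead.

```java
// Hypothetical arithmetic sketch; not part of the original project.
class ProducerBufferSketch {

    // Number of completely filled batches that fit in the producer buffer.
    static long fullBatchesInBuffer(long bufferMemoryBytes, int batchSizeBytes) {
        return bufferMemoryBytes / batchSizeBytes;
    }

    public static void main(String[] args) {
        long bufferMemory = 33_554_432L; // assumed: 32 MB
        int batchSize = 16_384;          // 16 KB, as in the configuration
        // prints 2048
        System.out.println(fullBatchesInBuffer(bufferMemory, batchSize));
    }
}
```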

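After startup, the output matches the screenshot above.

These notes were migrated from Youdao Cloud Notes and were written some time ago. If you spot any mistakes, please point them out.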