Scrapy Crawler Framework in Python, Example 3: Storing Data in MongoDB

Goal: crawl the Douban Movie Top 250 list and store the data in MongoDB.

The items.py file

    # -*- coding: utf-8 -*-
    import scrapy


    class DoubanItem(scrapy.Item):
        # define the fields for your item here like:
        # Movie title
        title = scrapy.Field()
        # Basic information
        bd = scrapy.Field()
        # Rating
        star = scrapy.Field()
        # Short description (quote)
        quote = scrapy.Field()

The spider file

    # -*- coding: utf-8 -*-
    import scrapy
    from douban.items import DoubanItem


    class DoubanmovieSpider(scrapy.Spider):
        name = "doubanmovie"
        allowed_domains = ["movie.douban.com"]
        offset = 0
        url = "https://movie.douban.com/top250?start="
        start_urls = (
            url + str(offset),
        )

        def parse(self, response):
            movies = response.xpath('//div[@class="info"]')
            for each in movies:
                # Create a fresh item per movie so a missing quote
                # does not inherit the previous movie's value
                item = DoubanItem()
                # Movie title
                item['title'] = each.xpath('.//span[@class="title"][1]/text()').extract()[0]
                # Basic information
                item['bd'] = each.xpath('.//div[@class="bd"]/p/text()').extract()[0]
                # Rating
                item['star'] = each.xpath('.//div[@class="star"]/span[@class="rating_num"]/text()').extract()[0]
                # Short description (quote); some movies have none
                quote = each.xpath('.//p[@class="quote"]/span/text()').extract()
                if len(quote) != 0:
                    item['quote'] = quote[0]
                yield item

            # Each page lists 25 movies; request the next page until start=225
            if self.offset < 225:
                self.offset += 25
                yield scrapy.Request(self.url + str(self.offset), callback=self.parse)
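
The paging logic in `parse` can be checked on its own, without running the crawler. The following is a minimal sketch, assuming the list is split into ten pages of 25 entries as the `offset < 225` check implies; `top250_page_urls` is a hypothetical helper and not part of the spider.

```python
BASE_URL = "https://movie.douban.com/top250?start="


def top250_page_urls(page_size=25, last_offset=225):
    """Return the page URLs in the order the spider requests them (hypothetical helper)."""
    return [BASE_URL + str(offset) for offset in range(0, last_offset + 1, page_size)]


if __name__ == "__main__":
    for page_url in top250_page_urls():
        print(page_url)
```

The spider reaches the same URLs one at a time by incrementing `self.offset` after each page, rather than building the full list up front.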

The pipelines.py file

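    # -*- coding: utf-8 -*-
    import pymongo


    class DoubanPipeline(object):
        def __init__(self, host, port, dbname, sheetname):
            # Create the MongoDB connection
            client = pymongo.MongoClient(host=host, port=port)
            # Select the database
            mydb = client[dbname]
            # Collection that stores the data
            self.post = mydb[sheetname]

        @classmethod
        def from_crawler(cls, crawler):
            # scrapy.conf is gone from current Scrapy releases;
            # read the project settings from the crawler instead
            settings = crawler.settings
            return cls(
                host=settings["MONGODB_HOST"],
                port=settings["MONGODB_PORT"],
                dbname=settings["MONGODB_DBNAME"],
                sheetname=settings["MONGODB_SHEETNAME"],
            )

        def process_item(self, item, spider):
            data = dict(item)
            # Collection.insert() was removed in PyMongo 4; use insert_one()
            self.post.insert_one(data)
            return item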
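
To see what `process_item` hands to MongoDB without a running server, the storage step can be exercised against an in-memory stand-in. This is only a sketch: `FakeCollection` and `store_item` are hypothetical names, the sample values are placeholders, and the stand-in exposes `insert_one`, which is PyMongo's single-document insert method. A real run would pass a PyMongo collection instead.

```python
class FakeCollection:
    """In-memory stand-in for a MongoDB collection (hypothetical, for dry runs only)."""

    def __init__(self):
        self.documents = []

    def insert_one(self, document):
        # Record the document instead of sending it to a server
        self.documents.append(document)


def store_item(collection, item):
    """Mirror the pipeline step: copy the item into a plain dict, insert it, return the item."""
    data = dict(item)
    collection.insert_one(data)
    return item


if __name__ == "__main__":
    collection = FakeCollection()
    # Placeholder values, not real crawl output
    store_item(collection, {"title": "Example Movie", "star": "9.0"})
    print(collection.documents)
```

Returning the item unchanged matters in Scrapy, because any later pipeline in `ITEM_PIPELINES` receives whatever `process_item` returns.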

The settings.py file

    # -*- coding: utf-8 -*-
    BOT_NAME = 'douban'

    SPIDER_MODULES = ['douban.spiders']
    NEWSPIDER_MODULE = 'douban.spiders'

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    USER_AGENT = "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"

    ITEM_PIPELINES = {
        'douban.pipelines.DoubanPipeline': 300,
    }

    # MongoDB host
    MONGODB_HOST = "127.0.0.1"
    # MongoDB port
    MONGODB_PORT = 27017
    # Database name
    MONGODB_DBNAME = "Douban"
    # Name of the collection that stores the data
    MONGODB_SHEETNAME = "doubanmovies"
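
The host and port settings correspond to a standard MongoDB connection string, which `pymongo.MongoClient` also accepts in place of separate `host` and `port` arguments. A small sketch of that mapping follows; `mongo_uri` is a hypothetical helper, and the pipeline in this example passes host and port separately rather than building a URI.

```python
def mongo_uri(settings):
    """Build a mongodb:// connection string from the MONGODB_* settings (hypothetical helper)."""
    return "mongodb://{host}:{port}".format(
        host=settings["MONGODB_HOST"],
        port=settings["MONGODB_PORT"],
    )


if __name__ == "__main__":
    # Values copied from settings.py
    print(mongo_uri({"MONGODB_HOST": "127.0.0.1", "MONGODB_PORT": 27017}))
```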

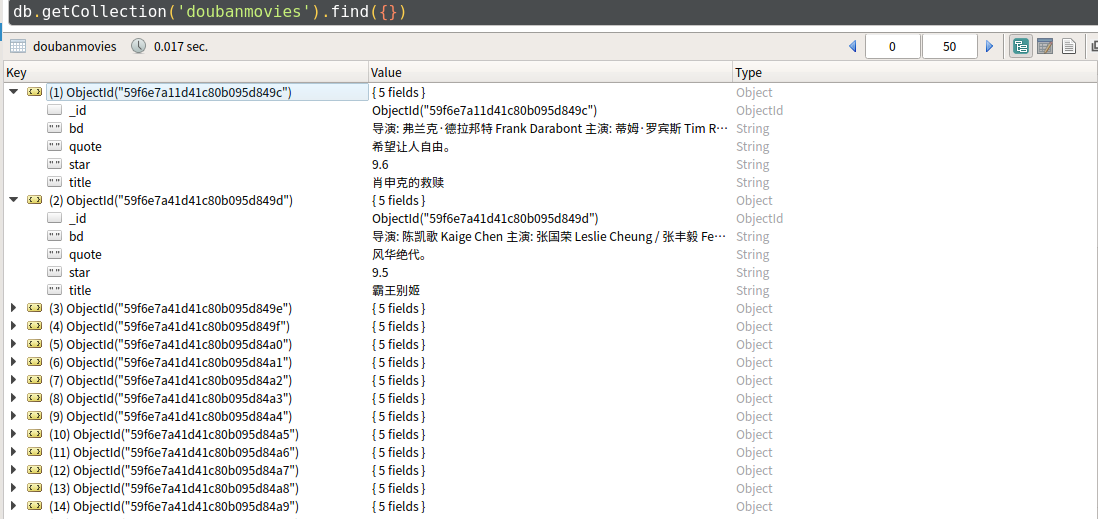

Final result: