Luffy City (路飞学城) Python Web Scraping Bootcamp, Chapter 1

1 Takeaways

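Mr. Peiqi's lectures were genuinely good. After this lesson I can fetch information from a number of websites. Before, I could only scrape static pages and had no idea how to get resources from sites that require a login. Now I can get resources from some of those sites too, and I have become more fluent with the requests module. More importantly, I learned a lot about how to analyze a web page when writing a crawler.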

2 What Is a Web Crawler

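Baidu Baike: a web crawler (also called a web spider or web robot, and in the FOAF community more often a web chaser) is a program or script that automatically fetches information from the World Wide Web according to certain rules.

With Python you can quickly write a crawler that fetches the resource at a given URL. The two modules requests and bs4 are enough to scrape a great many resources.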

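3 requests

The two most commonly used methods of requests are get and post.

Most requests on the web use one of these two HTTP methods (GET to fetch a page, POST to submit a form such as a login), so these two methods are enough to reach most web resources.

The main parameters of both methods are: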

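url: the URL of the resource to fetch.

headers: the request headers. Many sites have anti-scraping measures, so sending realistic headers (especially User-Agent) makes it harder for the site to tell that a program, not a browser, is making the request.

json: a JSON request body, used when the request has to carry JSON.

data: form data for the request body; a site's login username and password are usually sent here.

cookies: cookies identify the user. Static pages do not need them, but sites that require a login do.

params: query-string parameters. A URL ending in ?id=1&user=starry can instead be written with params={"id": 1, "user": "starry"}.

timeout: how many seconds to wait. If no response arrives in time, requests gives up and raises a requests.exceptions.Timeout exception.

allow_redirects: some URLs redirect to another URL. Setting this to False stops requests from following the redirect, so you get the 3xx response itself and can read its Location header.

proxies: the proxy servers to use.

These are the parameters used most often.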
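To see what several of these parameters actually do to the outgoing request, a request can be built and prepared without being sent. This sketch uses `requests.Request(...).prepare()`; every `https://example.com/...` URL and all values are placeholders. `timeout`, `allow_redirects` and `proxies` only take effect when a request is actually sent, so they appear only in the `fetch` helper, which is defined but not called here, and its proxy address is a hypothetical placeholder.

```python
import json

import requests

UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
      "(KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36")

# params: appended to the URL as a query string
get_req = requests.Request(
    "GET",
    "https://example.com/search",            # placeholder URL
    params={"id": 1, "user": "starry"},
    headers={"User-Agent": UA},
).prepare()

# data: sent as a form-encoded body, like a login form
form_req = requests.Request(
    "POST",
    "https://example.com/login",             # placeholder URL
    data={"phone": "xxx", "password": "xxx"},
).prepare()

# json: serialized into a JSON body with Content-Type: application/json
json_req = requests.Request(
    "PUT",
    "https://example.com/api/user",          # placeholder URL
    json={"userName": "Starry"},
).prepare()


def fetch(url, cookies=None):
    """Send a GET. timeout, allow_redirects and proxies only matter at send time."""
    return requests.get(
        url,
        headers={"User-Agent": UA},
        cookies=cookies,                       # e.g. a dict from r.cookies.get_dict()
        timeout=5,                             # seconds before Timeout is raised
        allow_redirects=False,                 # return the 3xx response itself
        proxies={"https": "http://127.0.0.1:8888"},  # hypothetical proxy
    )


print(get_req.url)
print(form_req.headers["Content-Type"], form_req.body)
print(json_req.headers["Content-Type"], json.loads(json_req.body))
```

Preparing a request this way is also a cheap way to debug a scraper: the URL, headers and body can be inspected before anything goes over the network.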

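4 bs4

bs4 (Beautiful Soup) parses the content that requests fetched, which makes finding the parts you need quick and convenient.

When the text returned by requests is HTML, parse it with bs4: soup = BeautifulSoup(html, "html.parser") (imported with from bs4 import BeautifulSoup; the package itself is installed as beautifulsoup4).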

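Commonly used soup methods:

find: returns the first element that matches the arguments; the most common arguments are name and attrs.

find_all: returns all matching elements, as a list.

get: reads an attribute of a tag, for example the href of a link.

A commonly used attribute is children, which iterates over a tag's direct children.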
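A small offline sketch of these methods on an inline HTML string. The markup is invented for illustration and only loosely mimics the repository list scraped in the homework below.

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

# Invented markup standing in for a page fetched with requests
html = """
<div class="listgroup">
  <div class="item"><a class="mr-1" href="/starry/demo">demo</a><small>1 KB</small></div>
  <div class="item"><a class="mr-1" href="/starry/blog">blog</a><small>2 MB</small></div>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

# find: first element matching the tag name and attributes
div = soup.find("div", attrs={"class": "listgroup"})

# find_all: every match, returned as a list
links = div.find_all("a", attrs={"class": "mr-1"})
hrefs = [a.get("href") for a in links]       # get: read an attribute value

# children: direct children, including whitespace strings, so keep only Tags
rows = []
for child in div.children:
    if isinstance(child, Tag):
        rows.append((child.find("a").text, child.find("small").text))

print(hrefs)
print(rows)
```

The isinstance check matters because children also yields the newline strings between tags, which have no find method.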

5 Log in to Chouti and upvote automatically

1. First, send a GET to https://dig.chouti.com to obtain a cookie.

```python
import requests

# Step 1: visit the home page to get the initial cookie
r1 = requests.get(
    url="https://dig.chouti.com/",
    headers={
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36"
    }
)
r1_cookie_dict = r1.cookies.get_dict()
```

2. Post the account, password and that cookie to https://dig.chouti.com/login.

```python
response_login = requests.post(
    url='https://dig.chouti.com/login',
    data={
        "phone": "xxx",
        "password": "xxx",
        "oneMonth": "1"
    },
    headers={
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36"
    },
    cookies=r1_cookie_dict
)
```

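3. Find the URL that upvotes a given news item. Posting to that URL with the cookie from step 1 (the code sends r1_cookie_dict) casts the vote.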

```python
rst = requests.post(
    url="https://dig.chouti.com/link/vote?linksId=20639606",
    headers={
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36"
    },
    cookies=r1_cookie_dict
)
```
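The three steps above pass the cookie dict around by hand. A common alternative, swapped in here and not what the course code does, is `requests.Session`, which stores the cookies from every response and sends them on later requests automatically. This sketch assumes Chouti still accepts the same form fields and vote URL as in 2018; `login_and_vote` needs the network and is not run here, and the `gpsd` cookie in the offline check is a hypothetical placeholder.

```python
import requests

UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
      "(KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36")


def make_session():
    """A Session that sends the same User-Agent on every request."""
    s = requests.Session()
    s.headers.update({"User-Agent": UA})
    return s


def login_and_vote(phone, password, link_id):
    """Hypothetical Session-based version of the three steps (needs network)."""
    s = make_session()
    s.get("https://dig.chouti.com/", timeout=10)            # step 1: initial cookie
    s.post("https://dig.chouti.com/login", timeout=10,       # step 2: log in
           data={"phone": phone, "password": password, "oneMonth": "1"})
    return s.post("https://dig.chouti.com/link/vote",        # step 3: vote
                  params={"linksId": link_id}, timeout=10)


# Offline check: a cookie stored on the session ends up in the Cookie header
s = make_session()
s.cookies.set("gpsd", "abc")                  # hypothetical cookie name/value
prepared = s.prepare_request(requests.Request(
    "POST", "https://dig.chouti.com/link/vote", params={"linksId": 20639606}))
print(prepared.url)
print(prepared.headers["Cookie"])
```

The design trade-off: a Session hides which request produced which cookie, which is convenient once a flow works but less transparent while you are still analyzing the site, which is why stepping through by hand first, as above, is useful.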

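6 Log in to Lagou automatically and edit profile information

1. To guard against automated access, Lagou requires two header values, X-Anit-Forge-Code and X-Anit-Forge-Token. Both can be obtained from the Lagou login page, whose source contains them as X_Anti_Forge_Code and X_Anti_Forge_Token.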

```python
import re
import requests

all_cookies = {}  # cookies collected from every response along the way

r1 = requests.get(
    url='https://passport.lagou.com/login/login.html',
    headers={
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36',
        'Host': 'passport.lagou.com'
    }
)
all_cookies.update(r1.cookies.get_dict())

# Both values are embedded in the login page's JavaScript
X_Anit_Forge_Token = re.findall(r"X_Anti_Forge_Token = '(.*?)';", r1.text, re.S)[0]
X_Anti_Forge_Code = re.findall(r"X_Anti_Forge_Code = '(.*?)';", r1.text, re.S)[0]
```
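The two `re.findall(...)[0]` calls raise a bare `IndexError` if the page layout changes and a pattern stops matching. A slightly more defensive sketch uses `re.search` and fails with a readable message. It is run here against an invented snippet of page source; the token values and the `extract_js_var` helper are hypothetical.

```python
import re

# Invented fragment of the login page's inline script
sample_html = """
<script>
    X_Anti_Forge_Token = 'a1b2c3d4-0000-1111-2222-333344445555';
    X_Anti_Forge_Code = '76395471';
</script>
"""


def extract_js_var(name, text):
    """Return the quoted value in `name = '...';` from the page source."""
    match = re.search(r"%s = '(.*?)';" % re.escape(name), text, re.S)
    if match is None:
        raise ValueError("%s not found; the page layout may have changed" % name)
    return match.group(1)


token = extract_js_var("X_Anti_Forge_Token", sample_html)
code = extract_js_var("X_Anti_Forge_Code", sample_html)
print(token, code)
```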

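2. Next, post to the login URL with the account, the password and the two values above. The headers need to be fairly complete here (Referer, X-Requested-With, Content-Type and so on).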

```python
r2 = requests.post(
    url="https://passport.lagou.com/login/login.json",
    headers={
        'Host': 'passport.lagou.com',
        "Referer": "https://passport.lagou.com/login/login.html",
        "X-Anit-Forge-Code": X_Anti_Forge_Code,
        "X-Anit-Forge-Token": X_Anit_Forge_Token,
        "X-Requested-With": "XMLHttpRequest",
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36',
        "Content-Type": "application/x-www-form-urlencoded; charset=UTF-8"
    },
    data={
        "isValidate": "true",
        "username": "xxx",
        "password": "xxx",
        "request_form_verifyCode": "",
        "submit": ""
    },
    cookies=r1.cookies.get_dict()
)
all_cookies.update(r2.cookies.get_dict())
```

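3. Even with the cookies from a successful login, you may not yet be able to edit your information, because the user also has to be authorized. Requesting https://passport.lagou.com/grantServiceTicket/grant.html obtains that authorization.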

```python
r3 = requests.get(
    url='https://passport.lagou.com/grantServiceTicket/grant.html',
    headers={
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36'
    },
    allow_redirects=False,
    cookies=all_cookies
)
all_cookies.update(r3.cookies.get_dict())
```

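4. The authorization redirects through a series of URLs, and we need the cookies from every page in that chain. Each redirect target is in the Location header of the previous response, so every request is sent with allow_redirects=False and the next URL is read from r.headers['Location'].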

```python
r4 = requests.get(
    url=r3.headers['Location'],
    headers={
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36'
    },
    allow_redirects=False,
    cookies=all_cookies
)
all_cookies.update(r4.cookies.get_dict())

print('r5', r4.headers['Location'])
r5 = requests.get(
    url=r4.headers['Location'],
    headers={
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36'
    },
    allow_redirects=False,
    cookies=all_cookies
)
all_cookies.update(r5.cookies.get_dict())

print('r6', r5.headers['Location'])
r6 = requests.get(
    url=r5.headers['Location'],
    headers={
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36'
    },
    allow_redirects=False,
    cookies=all_cookies
)
all_cookies.update(r6.cookies.get_dict())

print('r7', r6.headers['Location'])
r7 = requests.get(
    url=r6.headers['Location'],
    headers={
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36'
    },
    allow_redirects=False,
    cookies=all_cookies
)
all_cookies.update(r7.cookies.get_dict())
```
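The four hand-written hops (r4 to r7) only work while the chain is exactly that long. A loop that keeps following `Location` with `allow_redirects=False` and merges each hop's cookies is a more general form of the same idea. The `follow_redirects` helper, its `fetch` callback and the `max_hops` limit are assumptions of this sketch; it is exercised with hand-built `requests.Response` objects instead of real network calls, and the example URLs and cookie names are hypothetical.

```python
from urllib.parse import urljoin

import requests


def follow_redirects(fetch, url, cookies, max_hops=10):
    """Follow a redirect chain one hop at a time, collecting cookies.

    fetch(url, cookies) must return a requests.Response obtained with
    allow_redirects=False. Returns the first non-redirect response.
    """
    for _ in range(max_hops):
        resp = fetch(url, cookies)
        cookies.update(resp.cookies.get_dict())   # keep cookies set by this hop
        if not resp.is_redirect:                  # not a 3xx with a Location header
            return resp
        # Location may be relative, so resolve it against the current URL
        url = urljoin(url, resp.headers["Location"])
    raise RuntimeError("too many redirects")

# With a live site, fetch could be (UA = the User-Agent string used above):
# lambda u, c: requests.get(u, headers={"User-Agent": UA}, cookies=c,
#                           allow_redirects=False)


def fake_response(status, location=None, cookie=None):
    """Build a Response by hand so the loop can be tried offline."""
    r = requests.Response()
    r.status_code = status
    if location:
        r.headers["Location"] = location
    if cookie:
        r.cookies.set(*cookie)
    return r


chain = {
    "https://a.example/grant": fake_response(302, "/step2", ("t1", "x")),
    "https://a.example/step2": fake_response(302, "https://b.example/done", ("t2", "y")),
    "https://b.example/done": fake_response(200, cookie=("t3", "z")),
}
all_cookies = {}
final = follow_redirects(lambda u, c: chain[u], "https://a.example/grant", all_cookies)
print(final.status_code, all_cookies)
```

This is roughly what requests does internally with allow_redirects=True; the manual loop just keeps every intermediate cookie visible in one dict, which is what the course code relies on.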

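5. Next comes the actual edit. The request that modifies the profile must carry two values, submitCode and submitToken, which are sent as the X-Anit-Forge-Code and X-Anit-Forge-Token headers. Analyzing the traffic shows that both are returned in the JSON response of https://gate.lagou.com/v1/neirong/account/users/0/.

Get submitCode and submitToken: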

```python
r6 = requests.get(
    url='https://gate.lagou.com/v1/neirong/account/users/0/',
    headers={
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36",
        'X-L-REQ-HEADER': "{deviceType:1}",
        'Origin': 'https://account.lagou.com',
        'Host': 'gate.lagou.com',
    },
    cookies=all_cookies
)
all_cookies.update(r6.cookies.get_dict())
r6_json = r6.json()
```

Edit the information:

```python
r7 = requests.put(
    url='https://gate.lagou.com/v1/neirong/account/users/0/',
    headers={
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36',
        'Origin': 'https://account.lagou.com',
        'Host': 'gate.lagou.com',
        'X-Anit-Forge-Code': r6_json['submitCode'],
        'X-Anit-Forge-Token': r6_json['submitToken'],
        'X-L-REQ-HEADER': "{deviceType:1}",
    },
    cookies=all_cookies,
    json={"userName": "Starry", "sex": "MALE", "portrait": "images/myresume/default_headpic.png",
          "positionName": '...', "introduce": '....'}
)
```
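Step 5 is a two-request pattern: a GET returns one-time values, and the PUT that follows must echo them back in headers. A small helper that turns the parsed GET response into the PUT request makes that dependency explicit. This sketch only prepares the request and does not send it; `build_profile_update` is a hypothetical name, the sample dict is invented, and it assumes, as the code above does, that submitCode and submitToken sit at the top level of the JSON.

```python
import json

import requests

PROFILE_URL = "https://gate.lagou.com/v1/neirong/account/users/0/"


def build_profile_update(info, profile, cookies):
    """Prepare the PUT that saves `profile`, echoing the tokens from `info`.

    `info` is the parsed JSON of the preceding GET (r6.json() above).
    """
    missing = [k for k in ("submitCode", "submitToken") if k not in info]
    if missing:
        raise KeyError("GET response lacks %s; check the login steps" % missing)
    return requests.Request(
        "PUT",
        PROFILE_URL,
        headers={
            "Origin": "https://account.lagou.com",
            "X-Anit-Forge-Code": info["submitCode"],
            "X-Anit-Forge-Token": info["submitToken"],
            "X-L-REQ-HEADER": "{deviceType:1}",
        },
        cookies=cookies,
        json=profile,
    ).prepare()


sample_info = {"submitCode": "12345", "submitToken": "tok-abc"}   # invented values
req = build_profile_update(sample_info, {"userName": "Starry"}, {"sid": "1"})
print(req.method, req.headers["X-Anit-Forge-Token"], json.loads(req.body))
# To actually send it: requests.Session().send(req, timeout=10)
```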

The complete code:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-

'''
@author: Starry
@file: 5.修改个人信息.py
@time: 2018/7/5 11:43
'''

import re
import requests


all_cookies = {}
######################### 1. Load the login page ###################
r1 = requests.get(
    url='https://passport.lagou.com/login/login.html',
    headers={
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36',
        'Host': 'passport.lagou.com'
    }
)
all_cookies.update(r1.cookies.get_dict())

X_Anit_Forge_Token = re.findall(r"X_Anti_Forge_Token = '(.*?)';", r1.text, re.S)[0]
X_Anti_Forge_Code = re.findall(r"X_Anti_Forge_Code = '(.*?)';", r1.text, re.S)[0]
print(X_Anit_Forge_Token, X_Anti_Forge_Code)
################# 2. Log in with user name and password ################################
r2 = requests.post(
    url="https://passport.lagou.com/login/login.json",
    headers={
        'Host': 'passport.lagou.com',
        "Referer": "https://passport.lagou.com/login/login.html",
        "X-Anit-Forge-Code": X_Anti_Forge_Code,
        "X-Anit-Forge-Token": X_Anit_Forge_Token,
        "X-Requested-With": "XMLHttpRequest",
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36',
        "Content-Type": "application/x-www-form-urlencoded; charset=UTF-8"
    },
    data={
        "isValidate": "true",
        "username": "xxx",
        "password": "xxx",
        "request_form_verifyCode": "",
        "submit": ""
    },
    cookies=r1.cookies.get_dict()
)
all_cookies.update(r2.cookies.get_dict())

################### 3. User authorization #########################
r3 = requests.get(
    url='https://passport.lagou.com/grantServiceTicket/grant.html',
    headers={
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36'
    },
    allow_redirects=False,
    cookies=all_cookies
)
all_cookies.update(r3.cookies.get_dict())

################### 4. User authentication (follow each redirect by hand) #########################
print('r4', r3.headers['Location'])
r4 = requests.get(
    url=r3.headers['Location'],
    headers={
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36'
    },
    allow_redirects=False,
    cookies=all_cookies
)
all_cookies.update(r4.cookies.get_dict())

print('r5', r4.headers['Location'])
r5 = requests.get(
    url=r4.headers['Location'],
    headers={
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36'
    },
    allow_redirects=False,
    cookies=all_cookies
)
all_cookies.update(r5.cookies.get_dict())

print('r6', r5.headers['Location'])
r6 = requests.get(
    url=r5.headers['Location'],
    headers={
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36'
    },
    allow_redirects=False,
    cookies=all_cookies
)
all_cookies.update(r6.cookies.get_dict())

print('r7', r6.headers['Location'])
r7 = requests.get(
    url=r6.headers['Location'],
    headers={
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36'
    },
    allow_redirects=False,
    cookies=all_cookies
)
all_cookies.update(r7.cookies.get_dict())

################### 5. View the resume page #########################

r5 = requests.get(
    url='https://www.lagou.com/resume/myresume.html',
    headers={
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36"
    },
    cookies=all_cookies
)

# True if the account owner's name appears on the page, i.e. the login worked
print("李港" in r5.text)


################### 6. View account info (returns submitCode / submitToken) #########################
r6 = requests.get(
    url='https://gate.lagou.com/v1/neirong/account/users/0/',
    headers={
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36",
        'X-L-REQ-HEADER': "{deviceType:1}",
        'Origin': 'https://account.lagou.com',
        'Host': 'gate.lagou.com',
    },
    cookies=all_cookies
)
all_cookies.update(r6.cookies.get_dict())
r6_json = r6.json()
print(r6.json())
################################ 7. Edit the information ##########################
r7 = requests.put(
    url='https://gate.lagou.com/v1/neirong/account/users/0/',
    headers={
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36',
        'Origin': 'https://account.lagou.com',
        'Host': 'gate.lagou.com',
        'X-Anit-Forge-Code': r6_json['submitCode'],
        'X-Anit-Forge-Token': r6_json['submitToken'],
        'X-L-REQ-HEADER': "{deviceType:1}",
    },
    cookies=all_cookies,
    json={"userName": "Starry", "sex": "MALE", "portrait": "images/myresume/default_headpic.png",
          "positionName": '...', "introduce": '....'}
)
print(r7.text)
```

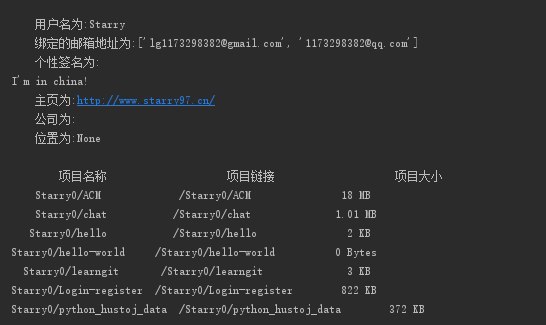

7 Homework: log in to GitHub automatically and fetch account information

Sample run:

Source code:

```python
# -*- coding: utf-8 -*-

'''
@author: Starry
@file: github_login.py
@time: 2018/6/22 16:22
'''

import requests
from bs4 import BeautifulSoup
import bs4


headers = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8",
    # 'br' dropped: requests can only decode Brotli if the brotli package is installed
    "Accept-Encoding": "gzip, deflate",
    "Accept-Language": "zh-CN,zh;q=0.9",
    "Cache-Control": "max-age=0",
    "Connection": "keep-alive",
    "Host": "github.com",
    "Upgrade-Insecure-Requests": "1",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36"
}


def login(user, password):
    '''
    Log in to GitHub with a known account and password and return the account's cookies.
    :param user: login account
    :param password: login password
    :return: the cookies, as a dict
    '''
    r1 = requests.get(
        url="https://github.com/login",
        headers=headers
    )
    cookies_dict = r1.cookies.get_dict()
    soup = BeautifulSoup(r1.text, "html.parser")
    # CSRF token carried as a hidden <input> in the login form
    authenticity_token = soup.find('input', attrs={"name": "authenticity_token"}).get('value')
    # print(authenticity_token)
    res = requests.post(
        url="https://github.com/session",
        data={
            "commit": "Sign in",
            "utf8": "✓",
            "authenticity_token": authenticity_token,
            "login": user,
            "password": password
        },
        headers=headers,
        cookies=cookies_dict
    )
    cookies_dict.update(res.cookies.get_dict())
    return cookies_dict


def View_Information(cookies_dict):
    '''
    Display the GitHub account's profile information.
    :param cookies_dict: the account's cookies
    :return:
    '''
    url_setting_profile = "https://github.com/settings/profile"
    html = requests.get(
        url=url_setting_profile,
        cookies=cookies_dict,
        headers=headers
    )
    soup = BeautifulSoup(html.text, "html.parser")
    user_name = soup.find('input', attrs={'id': 'user_profile_name'}).get('value')
    user_email = []
    select = soup.find('select', attrs={'class': 'form-select select'})
    for index, child in enumerate(select):
        if index == 0:
            continue
        if isinstance(child, bs4.element.Tag):
            user_email.append(child.get('value'))
    Bio = soup.find('textarea', attrs={'id': 'user_profile_bio'}).text
    URL = soup.find('input', attrs={'id': 'user_profile_blog'}).get('value')
    Company = soup.find('input', attrs={'id': 'user_profile_company'}).get('value')
    Location = soup.find('input', attrs={'id': 'user_profile_location'}).get('value')
    print('''
    User name: {0}
    Linked email addresses: {1}
    Bio: {2}
    Homepage: {3}
    Company: {4}
    Location: {5}
    '''.format(user_name, user_email, Bio, URL, Company, Location))
    html = requests.get(
        url="https://github.com/settings/repositories",
        cookies=cookies_dict,
        headers=headers
    )
    html.encoding = html.apparent_encoding
    soup = BeautifulSoup(html.text, "html.parser")
    div = soup.find(name='div', attrs={"class": "listgroup"})
    # print(div)
    tplt = "{0:^20}\t{1:^20}\t{2:^20}"
    print(tplt.format("Repository", "Link", "Size"))
    for child in div.children:
        if isinstance(child, bs4.element.Tag):
            a = child.find('a', attrs={"class": "mr-1"})
            small = child.find('small')
            print(tplt.format(a.text, a.get("href"), small.text))


if __name__ == '__main__':
    user = 'xxx'
    password = 'xxx'
    cookies_dict = login(user, password)
    View_Information(cookies_dict)
```
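The `login` function reads a single hidden field, `authenticity_token`. Login forms often carry several hidden inputs that all have to be posted back, so a slightly more general sketch collects every hidden `<input>` of a form into a dict that can then be merged with the user name and password. The form markup below is invented, the `hidden_fields` helper is hypothetical, and today's GitHub login page may differ from the 2018 version this script was written against.

```python
from bs4 import BeautifulSoup

# Invented login form markup for illustration
login_page = """
<form action="/session" method="post">
  <input type="hidden" name="utf8" value="&#x2713;">
  <input type="hidden" name="authenticity_token" value="abc123==">
  <input type="text" name="login">
  <input type="password" name="password">
  <input type="submit" name="commit" value="Sign in">
</form>
"""


def hidden_fields(html, action):
    """Return {name: value} for the hidden inputs of the form posting to `action`."""
    soup = BeautifulSoup(html, "html.parser")
    form = soup.find("form", attrs={"action": action})
    if form is None:
        raise ValueError("no form with action=%r" % action)
    return {
        inp.get("name"): inp.get("value", "")
        for inp in form.find_all("input", attrs={"type": "hidden"})
        if inp.get("name")
    }


payload = hidden_fields(login_page, "/session")
payload.update({"login": "xxx", "password": "xxx", "commit": "Sign in"})
# payload can now be sent as data= in the POST to https://github.com/session
print(payload)
```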