Official introduction

1. Installing canal-admin

- Download, extract, and create a symbolic link

- Edit the configuration

server:
  port: 8089
spring:
  jackson:
    date-format: yyyy-MM-dd HH:mm:ss
    time-zone: GMT+8

# Connection settings for the metadata database
spring.datasource:
  address: nn1.hadoop:3306
  database: canal_manager
  username: canal
  password: canal
  driver-class-name: com.mysql.jdbc.Driver
  url: jdbc:mysql://${spring.datasource.address}/${spring.datasource.database}?useUnicode=true&characterEncoding=UTF-8&useSSL=false
  hikari:
    maximum-pool-size: 30
    minimum-idle: 1

# Credentials canal-server uses to connect to canal-admin
canal:
  adminUser: admin
  adminPasswd: admin
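
The `url` entry above is built from two placeholders, so it can help to see the JDBC URL that results from the values in this file. The following is a minimal sketch of that substitution. `JdbcUrlPreview` and `resolve` are hypothetical names for illustration only, not part of canal-admin or Spring, and Spring's real placeholder resolution has more features (defaults, nesting) than this.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: previews how ${...} placeholders expand into the final JDBC URL.
class JdbcUrlPreview {
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}]+)\\}");

    static String resolve(String template, Map<String, String> props) {
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String value = props.get(m.group(1));
            // Unknown placeholders are left as they are.
            String replacement = value != null ? value : m.group();
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("spring.datasource.address", "nn1.hadoop:3306");
        props.put("spring.datasource.database", "canal_manager");
        String template = "jdbc:mysql://${spring.datasource.address}/${spring.datasource.database}"
                + "?useUnicode=true&characterEncoding=UTF-8&useSSL=false";
        System.out.println(resolve(template, props));
    }
}
```

If the printed URL does not point at the MySQL host and database that hold `canal_manager`, canal-admin will not be able to reach its metadata.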

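- Initialize the metadata database

  - Log in to the MySQL command line as root.

  - Run the initialization SQL file: source /usr/local/canal-admin-1.1.4/conf/canal_manager.sql

- Start canal-admin

  - Check the log to confirm that it started successfully.

  - Once it is running, open the web UI (port 8089, as configured above). The default login is admin/123456; the account is stored in the canal_user table of the metadata database.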
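
Besides reading the log, a quick way to confirm that canal-admin is up is to check whether its HTTP port accepts connections. The sketch below does only that. `PortProbe` is a hypothetical helper, and the default host and port are simply the values used in this walkthrough (`nn1.hadoop`, `8089`).

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: reports whether a TCP port accepts connections.
class PortProbe {
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Refused, timed out, or the host could not be resolved.
            return false;
        }
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "nn1.hadoop";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 8089;
        System.out.println(host + ":" + port + " listening = " + isListening(host, port, 2000));
    }
}
```

An open port only shows that the process is listening; a failed database connection would still have to be found in the log.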

2. Configuring canal-server

- Install canal-server

- Edit the configuration file

# IP of the canal server; a mapped hostname is preferable
canal.register.ip = nn1.hadoop

# canal admin settings
canal.admin.manager = nn1.hadoop:8089
canal.admin.port = 11110
# Must match canal.adminUser in canal-admin's conf/application.yml.
canal.admin.user = admin
canal.admin.passwd = 4ACFE3202A5FF5CF467898FC58AAB1D615029441

# admin auto register
# Whether to enable auto-registration mode
canal.admin.register.auto = true
# Default cluster name to register with; if left empty, the server registers in standalone mode
canal.admin.register.cluster =
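
The `canal.admin.passwd` value is not the plaintext password. It appears to be the MySQL `PASSWORD()`-style hash (SHA-1 applied twice, upper-case hex, without the leading `*`) of the `adminPasswd` set in canal-admin. A minimal sketch for generating such a value follows. `AdminPasswordHash` is a hypothetical helper, and the assumption that canal expects exactly this format should be checked against your canal version.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper: computes HEX(SHA1(SHA1(password))) in upper case.
class AdminPasswordHash {
    static String mysqlNativeHash(String plain) {
        try {
            MessageDigest sha1 = MessageDigest.getInstance("SHA-1");
            // digest() resets the instance, so it can be reused for the second round.
            byte[] first = sha1.digest(plain.getBytes(StandardCharsets.UTF_8));
            byte[] second = sha1.digest(first);
            StringBuilder hex = new StringBuilder(second.length * 2);
            for (byte b : second) {
                hex.append(String.format("%02X", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-1 is not available", e);
        }
    }

    public static void main(String[] args) {
        String plain = args.length > 0 ? args[0] : "admin";
        System.out.println(mysqlNativeHash(plain));
    }
}
```

When `adminPasswd` is changed in canal-admin, the hash in `canal.properties` has to be regenerated to match.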

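- Start canal-server. There are two ways to do this:

  - Run ./bin/startup.sh local. In this case canal_local.properties is loaded in place of canal.properties.

  - Change the configuration file: replace the settings in canal.properties with those from canal_local.properties, then start with ./bin/startup.sh.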

3. Testing

canal-admin web UI walkthrough

3.1 canal.serverMode = tcp

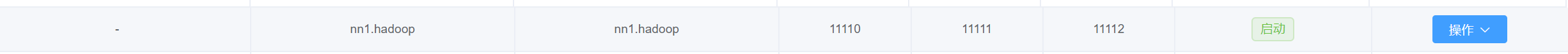

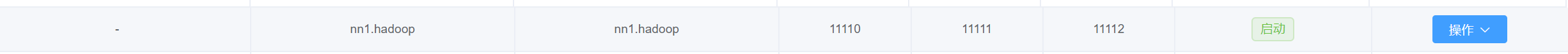

- Create a server whose data delivery mode is tcp.

- canal.properties file

# canal admin config

canal.admin.manager = nn1.hadoop:8089

canal.admin.port = 11110

canal.admin.user = admin

canal.admin.passwd = 4ACFE3202A5FF5CF467898FC58AAB1D615029441

....

# tcp, kafka, RocketMQ

canal.serverMode = tcp

- Create an instance

- instance.properties file

canal.instance.master.address=nn1.hadoop:3306

# username/password

canal.instance.dbUsername=canal

canal.instance.dbPassword=canal

canal.instance.connectionCharset = UTF-8

canal.instance.defaultDatabaseName = canal_test

# enable druid Decrypt database password

canal.instance.enableDruid=false

# table regex

canal.instance.filter.regex=canal_test\\..*
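
The filter value is a regular expression matched against `schema.table` names. In a properties file `\\.` is read as `\.`, so the effective pattern here is `canal_test\..*`, meaning every table in the `canal_test` schema. The sketch below checks a few names against such a filter using plain `java.util.regex`. `FilterRegexCheck` is a hypothetical helper, and canal's own filter implementation may differ in details such as case handling.

```java
import java.util.regex.Pattern;

// Hypothetical helper: tests "schema.table" names against a comma-separated regex filter.
class FilterRegexCheck {
    static boolean matches(String filterRegex, String schema, String table) {
        String fullName = schema + "." + table;
        for (String part : filterRegex.split(",")) {
            if (Pattern.matches(part.trim(), fullName)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Java string literal for the regex canal_test\..*
        String filter = "canal_test\\..*";
        System.out.println(matches(filter, "canal_test", "order"));
        System.out.println(matches(filter, "other_db", "order"));
    }
}
```

With the filter from this section, `canal_test.order` is accepted and tables in any other schema are rejected.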

- Receive data with a canal client

import com.alibaba.otter.canal.client.CanalConnector;
import com.alibaba.otter.canal.protocol.CanalEntry;
import com.alibaba.otter.canal.protocol.Message;
import com.google.protobuf.InvalidProtocolBufferException;
import lombok.extern.slf4j.Slf4j;

import java.util.List;

/**
 * Base class for a canal client: connects, subscribes, and polls for row changes.
 *
 * @author xiandongxie
 */
@Slf4j
public abstract class CanalProcess implements Runnable {

    @Override
    public void run() {
        this.doProcess();
    }

    /** Filter expression passed to CanalConnector#subscribe. */
    public abstract String getSubScribe();

    public abstract CanalConnector getConnector();

    public void doProcess() {
        CanalConnector connector = getConnector();
        connector.connect();
        connector.subscribe(getSubScribe());
        log.info("start {} canal process", this.getClass().getName());
        try {
            while (!Thread.currentThread().isInterrupted()) {
                try {
                    // Fetch up to 100 entries without acknowledging them yet.
                    Message message = connector.getWithoutAck(100);
                    long batchId = message.getId();
                    if (batchId == -1 || message.getEntries().isEmpty()) {
                        // Nothing to consume; back off before polling again.
                        Thread.sleep(3000);
                    } else {
                        handleEntries(message.getEntries());
                    }
                    connector.ack(batchId);
                } catch (InterruptedException e) {
                    // Restore the flag so the loop condition ends the thread.
                    Thread.currentThread().interrupt();
                } catch (Exception e) {
                    log.error("canal process error", e);
                }
            }
        } finally {
            connector.disconnect();
        }
    }

    private void handleEntries(List<CanalEntry.Entry> entryList) {
        for (CanalEntry.Entry entry : entryList) {
            // Transaction begin/end markers carry no row data.
            if (entry.getEntryType() != CanalEntry.EntryType.ROWDATA) {
                continue;
            }
            String tableName = entry.getHeader().getTableName();
            CanalEntry.RowChange rowChange;
            try {
                rowChange = CanalEntry.RowChange.parseFrom(entry.getStoreValue());
            } catch (InvalidProtocolBufferException e) {
                // Skip entries that cannot be parsed.
                log.error("failed to parse row change for table {}", tableName, e);
                continue;
            }
            CanalEntry.EventType eventType = rowChange.getEventType();
            for (CanalEntry.RowData rowData : rowChange.getRowDatasList()) {
                try {
                    log.info("tableName = {}, {}", tableName, eventType);
                    // getStorageManager().update(tableName, eventType, rowData);
                } catch (Exception e) {
                    log.error("handleEntries error", e);
                }
                if (eventType == CanalEntry.EventType.DELETE) {
                    // Deleted rows only carry a "before" image.
                    printColumns(rowData.getBeforeColumnsList());
                } else if (eventType == CanalEntry.EventType.INSERT
                        || eventType == CanalEntry.EventType.UPDATE) {
                    printColumns(rowData.getAfterColumnsList());
                }
            }
        }
    }

    private void printColumns(List<CanalEntry.Column> columns) {
        for (CanalEntry.Column column : columns) {
            String line = column.getName() + "=" + column.getValue();
            log.info("data:{} ", line);
        }
    }
}

----------------------------------------------------------------------------

import com.alibaba.otter.canal.client.CanalConnector;
import com.alibaba.otter.canal.client.CanalConnectors;

import java.net.InetSocketAddress;

/**
 * Consumes the "instance_tcp" destination from a single canal-server.
 *
 * @author xiandongxie
 */
public class CanalTestProcess extends CanalProcess {

    // Only needed by the commented-out cluster connector below.
    private String zookeeper = "nn1.hadoop:2181,nn2.hadoop:2181,s1:2181";
    private String destination = "instance_tcp";
    private String schema = "canal_test";
    // Subscription filter: the order table in canal_test. Built as a field
    // initializer so it is set even when no container calls an init method.
    private final String pattern = schema + "\\.order";

    @Override
    public String getSubScribe() {
        return pattern;
    }

    @Override
    public CanalConnector getConnector() {
        // 11111 is the canal-server client port; user name and password are "canal".
        CanalConnector connector = CanalConnectors.newSingleConnector(
                new InetSocketAddress("nn1.hadoop", 11111), destination, "canal", "canal");
        // CanalConnector connector = CanalConnectors.newClusterConnector(zookeeper, destination, "", "");
        return connector;
    }
}

3.2 canal.serverMode = kafka

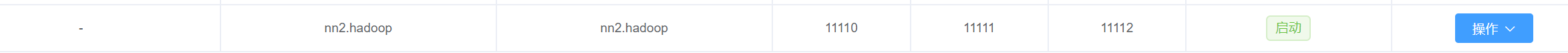

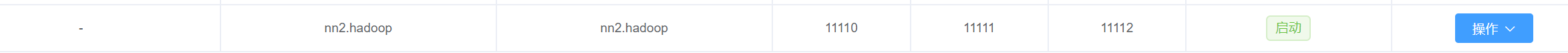

- Create server2, which delivers data to Kafka.

- canal.properties file

# canal admin config

canal.admin.manager = nn1.hadoop:8089

canal.admin.port = 11110

canal.admin.user = admin

canal.admin.passwd = 4ACFE3202A5FF5CF467898FC58AAB1D615029441

....

# tcp, kafka, RocketMQ

canal.serverMode = kafka

canal.mq.servers = nn1.hadoop:9092,nn2.hadoop:9092,s1:9092

- Create an instance

- instance.properties file

canal.instance.master.address=nn1.hadoop:3306

# username/password

canal.instance.dbUsername=canal

canal.instance.dbPassword=canal

canal.instance.connectionCharset = UTF-8

canal.instance.defaultDatabaseName = canal_test

# enable druid Decrypt database password

canal.instance.enableDruid=false

# table regex

canal.instance.filter.regex=canal_test\\..*

# mq config

canal.mq.topic=example

# dynamic topic route by schema or table regex

canal.mq.dynamicTopic=canal_test
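
With both `canal.mq.topic` and `canal.mq.dynamicTopic` set, changes from a schema that matches the dynamic rule go to a topic named after that schema, and everything else falls back to the default topic. That is why the consumer in the next step reads `canal_test` rather than `example`. The sketch below models only this schema-level rule. `DynamicTopicSketch` is a hypothetical, simplified stand-in and not canal's routing code; table-level rules and other rule forms are deliberately left out.

```java
import java.util.regex.Pattern;

// Hypothetical, simplified model of schema-level dynamic topic routing.
class DynamicTopicSketch {
    static String route(String defaultTopic, String dynamicTopic, String schema) {
        if (dynamicTopic != null && !dynamicTopic.trim().isEmpty()) {
            for (String rule : dynamicTopic.split(",")) {
                // A rule that matches the schema name routes to a topic named after the schema.
                if (Pattern.matches(rule.trim(), schema)) {
                    return schema;
                }
            }
        }
        // No rule matched: use canal.mq.topic.
        return defaultTopic;
    }

    public static void main(String[] args) {
        System.out.println(route("example", "canal_test", "canal_test"));
        System.out.println(route("example", "canal_test", "other_db"));
    }
}
```

Under this model, rows changed in `canal_test` land in the `canal_test` topic, while any other schema that passes the instance filter would end up in `example`.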

- Start a Kafka console consumer to test

  - /usr/local/kafka/bin/kafka-console-consumer.sh --bootstrap-server nn1.hadoop:9092,nn2.hadoop:9092,s1:9092 --topic canal_test