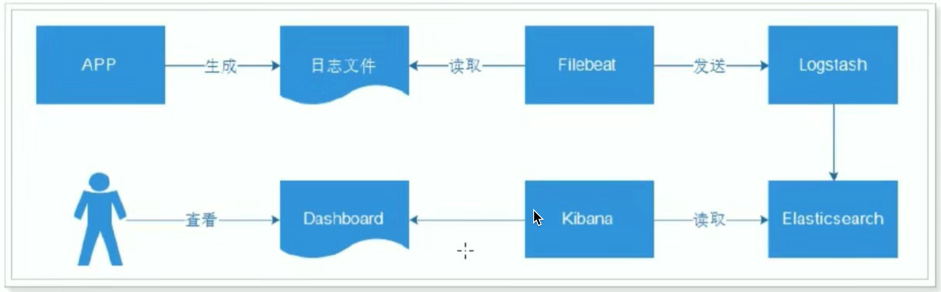

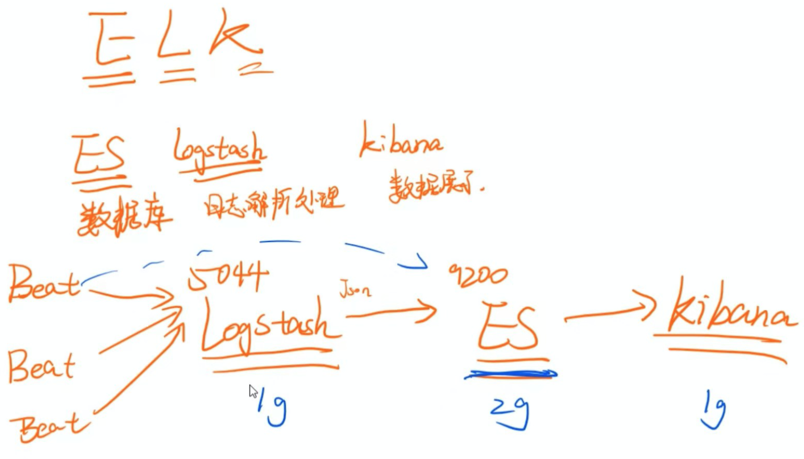

ELK Logging Solution

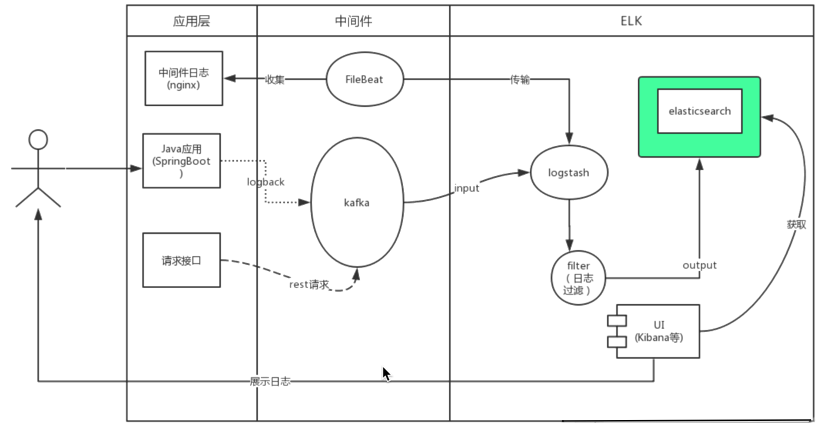

1. Overall Design

Filebeat + Logstash + Elasticsearch + Kibana

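1) Elasticsearch

Abbreviated as ES. It stores the log data, and it can store other kinds of data as well. ES is a powerful full-text search engine for internet applications.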

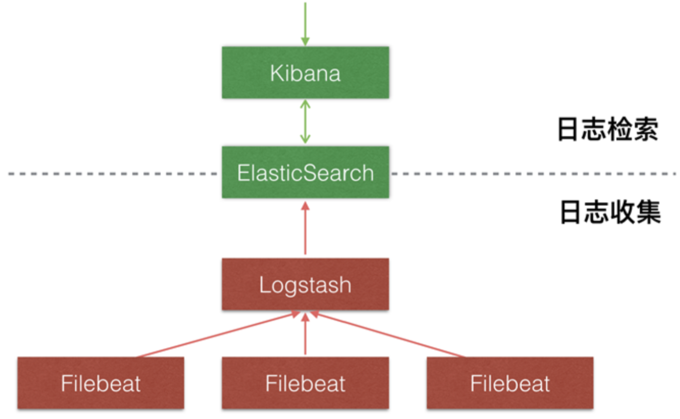

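2) Logstash

Collects, cleans up, and splits logs, and is responsible for writing the data into ES.

Because Logstash itself consumes a fair amount of resources, the official recommendation is to use Filebeat for log collection.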

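3) Kibana

Provides the graphical interface. It presents the data stored in ES visually and supports many features, for example multi-dimensional queries, monitoring dashboards, and statistical reports.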

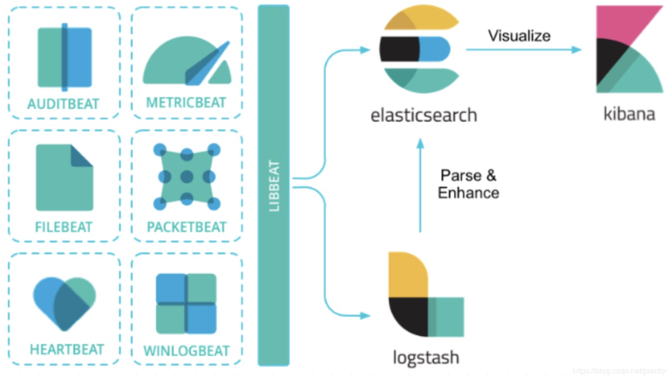

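4) Filebeat

A lightweight log file collector with a very small resource footprint. The collected log data can be sent to ES or to Logstash.

Filebeat only handles file collection. It is one member of the Beats family; see the official website for the other members.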

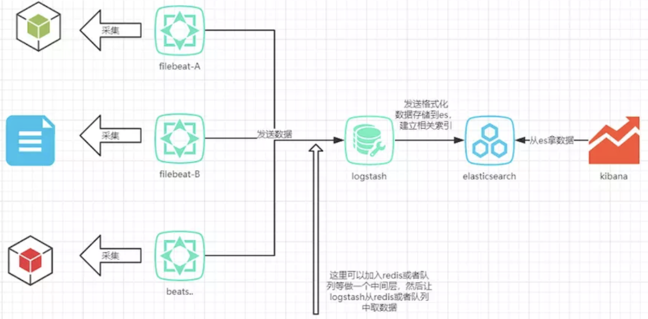

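Of course, there are other ways to handle logs. For example, instead of writing logs to an xxx.log file, the application can send them directly to ES or Logstash, or a message queue can be placed in between to make delivery asynchronous.

This article covers the approach based on xxx.log files.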

Other solutions

2. Elasticsearch Configuration and Startup

1) Master node configuration file (data nodes are similar)

/opt/app/elasticsearch-7.4.1/elasticsearch.yml

cluster.name: escluster

node.name: ip101

#node.name: node-2

#node.name: node-3

node.master: true

node.data: true

network.host: 0.0.0.0

http.port: 9200

transport.tcp.port: 9300

# New in Elasticsearch 7: addresses of the master-eligible nodes used for discovery at startup. Replaces discovery.zen.ping.unicast.hosts.

discovery.seed_hosts: ["ip101","ip102","ip103"]

# New in Elasticsearch 7: names of the master-eligible nodes that take part in the first master election when the cluster is bootstrapped.

cluster.initial_master_nodes: ["ip101"]

# Deprecated since ES 7

#discovery.zen.ping.unicast.hosts: ["192.168.8.101:9300", "192.168.8.102:9300", "192.168.8.103:9300"]

http.cors.enabled: true

http.cors.allow-origin: "*"

2) Startup

bin/elasticsearch

3. Logstash Configuration and Startup

1) Configuration

input {

beats {

type => "log"

port => "5044" # listen on local port 5044

}

}

filter{

mutate{

split=>["message","|"]

add_field => {

"log_date" => "%{[message][0]}"

}

add_field => {

"log_level" => "%{[message][1]}"

}

add_field => {

"log_thread" => "%{[message][2]}"

}

add_field => {

"log_class" => "%{[message][3]}"

}

add_field => {

"log_content" => "%{[message][4]}"

}

remove_field => ["message"]

}

}

output {

stdout { codec => rubydebug }

elasticsearch {

hosts => ["ip101:9200"]

index => "%{type}-%{+YYYY.MM.dd}"

}

}
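To make the filter stage easier to follow, here is a minimal Python sketch of what the `mutate` block above does to each event: split `message` on `|` and copy the first five parts into named fields. The function name `split_log_line` and the choice to fill missing parts with `None` are assumptions made for illustration. They are not part of Logstash.

```python
# Field names in the same order as the add_field entries in the Logstash filter.
FIELD_NAMES = ["log_date", "log_level", "log_thread", "log_class", "log_content"]


def split_log_line(message: str) -> dict:
    """Split a pipe-delimited log line into the five named fields.

    Hypothetical helper: it only mirrors the split + add_field logic
    and, like the filter, does not keep the original message.
    """
    parts = message.split("|")
    event = {}
    for index, name in enumerate(FIELD_NAMES):
        # Assumption of this sketch: a missing part is stored as None.
        event[name] = parts[index] if index < len(parts) else None
    return event


if __name__ == "__main__":
    line = "2019-03-21 09:20:01,104|INFO|restartedMain|com.xxx.xxx.XXXApplication|xx1"
    for key, value in split_log_line(line).items():
        print(f"{key} = {value}")
```

Note that a log message which itself contains `|` is split into more than five parts. Both the filter and this sketch only keep indexes 0 to 4, so the remainder is dropped.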

2) Startup

[root@ip101 config]# ../bin/logstash -f logstash.conf

4. Filebeat Configuration and Startup

1) Configuration

#=========================== Filebeat inputs ==============

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/test.log

#============================== Dashboards ===============

#setup.dashboards.enabled: false

#============================== Kibana ==================

#setup.kibana:

# host: "192.168.101.5:5601"

#-------------------------- Elasticsearch output ---------

#output.elasticsearch:

# hosts: ["192.168.8.101:9200"]

output.logstash:
  hosts: ["192.168.8.101:5044"]

2) Startup

./filebeat -e -c my_filebeat.yml -d "publish"

5. Kibana Configuration and Startup

1) Configuration

vi /opt/app/kibana-7.4.2-linux-x86_64/config/kibana.yml

# Kibana is served by a back end server. This setting specifies the port to use.

server.port: 5601

# Specifies the address to which the Kibana server will bind. IP addresses and host names are both valid values.

# The default is 'localhost', which usually means remote machines will not be able to connect.

# To allow connections from remote users, set this parameter to a non-loopback address.

server.host: "192.168.8.101"

# The URLs of the Elasticsearch instances to use for all your queries.

elasticsearch.hosts: ["http://192.168.8.101:9200"]

2) Startup

bin/kibana

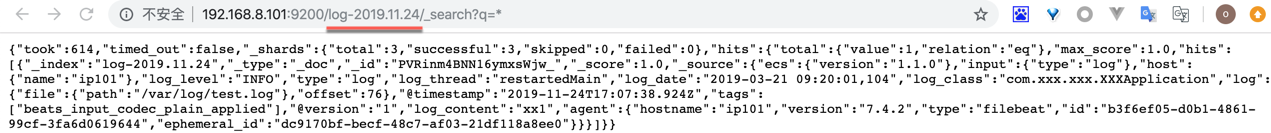

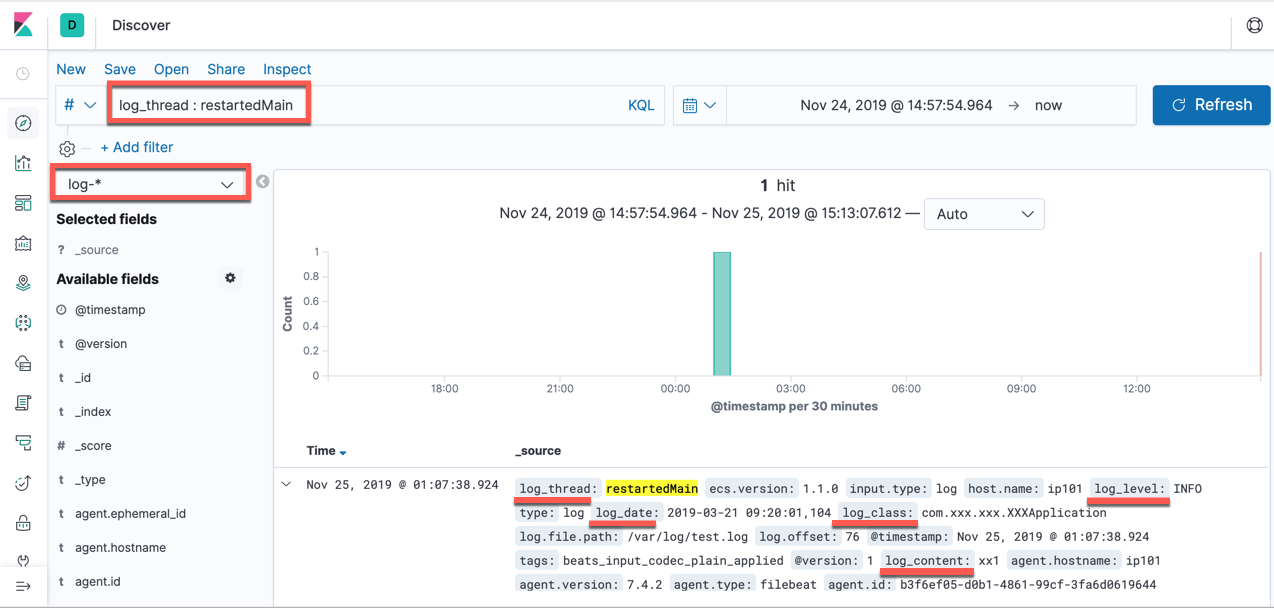

3) Generating a log entry

[root@ip101 ~]# echo "2019-03-21 09:20:01,104|INFO|restartedMain|com.xxx.xxx.XXXApplication|xx1" >> /var/log/test.log
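For repeated testing it can be handy to generate such lines from a script instead of typing `echo` by hand. The following is a minimal sketch under stated assumptions: the helper names `format_log_line` and `append_log_line` are hypothetical, and the format simply reproduces the five pipe-separated fields of the line above.

```python
from datetime import datetime
from pathlib import Path


def format_log_line(when: datetime, level: str, thread: str,
                    logger: str, content: str) -> str:
    """Build one line as date|level|thread|class|content."""
    # Milliseconds follow a comma, e.g. 2019-03-21 09:20:01,104
    stamp = when.strftime("%Y-%m-%d %H:%M:%S") + ",%03d" % (when.microsecond // 1000)
    return "|".join([stamp, level, thread, logger, content])


def append_log_line(path: str, line: str) -> None:
    """Append the line plus a newline, like `echo ... >> file`."""
    with Path(path).open("a", encoding="utf-8") as handle:
        handle.write(line + "\n")


if __name__ == "__main__":
    entry = format_log_line(datetime.now(), "INFO", "restartedMain",
                            "com.xxx.xxx.XXXApplication", "xx1")
    # Printed rather than written, so the sketch runs without root access.
    # To feed Filebeat, call append_log_line("/var/log/test.log", entry).
    print(entry)
```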

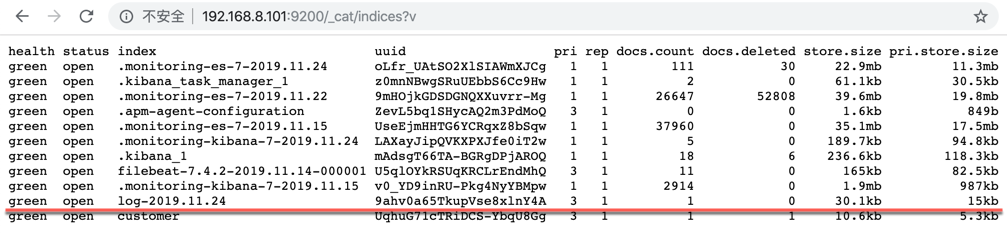

Inspect the index:

{

"took": 614,

"timed_out": false,

"_shards": {

"total": 3,

"successful": 3,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 1,

"relation": "eq"

},

"max_score": 1.0,

"hits": [{

"_index": "log-2019.11.24",

"_type": "_doc",

"_id": "PVRinm4BNN16ymxsWjw_",

"_score": 1.0,

"_source": {

"ecs": {

"version": "1.1.0"

},

"input": {

"type": "log"

},

"host": {

"name": "ip101"

},

"log_level": "INFO",

"type": "log",

"log_thread": "restartedMain",

"log_date": "2019-03-21 09:20:01,104",

"log_class": "com.xxx.xxx.XXXApplication",

"log": {

"file": {

"path": "/var/log/test.log"

},

"offset": 76

},

"@timestamp": "2019-11-24T17:07:38.924Z",

"tags": ["beats_input_codec_plain_applied"],

"@version": "1",

"log_content": "xx1",

"agent": {

"hostname": "ip101",

"version": "7.4.2",

"type": "filebeat",

"id": "b3f6ef05-d0b1-4861-99cf-3fa6d0619644",

"ephemeral_id": "dc9170bf-becf-48c7-af03-21df118a8ee0"

}

}

}]

}

}
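The document lands in `log-2019.11.24` even though its `log_date` is 2019-03-21. This is because the `%{+YYYY.MM.dd}` part of the index pattern is formatted from the event's `@timestamp` (in UTC), not from the parsed log line, while `%{type}` comes from the `type` field. A small Python sketch of that naming rule, where the function name `index_name` is an assumption made for illustration:

```python
from datetime import datetime, timezone


def index_name(event_type: str, timestamp_iso: str) -> str:
    """Mimic the index pattern "%{type}-%{+YYYY.MM.dd}".

    timestamp_iso is the event's @timestamp, e.g. "2019-11-24T17:07:38.924Z".
    """
    # Older Python versions do not accept a trailing "Z", so spell out the offset.
    moment = datetime.fromisoformat(timestamp_iso.replace("Z", "+00:00"))
    # Format the date part in UTC.
    return f"{event_type}-{moment.astimezone(timezone.utc):%Y.%m.%d}"


if __name__ == "__main__":
    print(index_name("log", "2019-11-24T17:07:38.924Z"))
```

If the index should follow the time in the log line instead, `@timestamp` would have to be set from `log_date` in the filter stage. That is not done in the configuration shown in this article.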

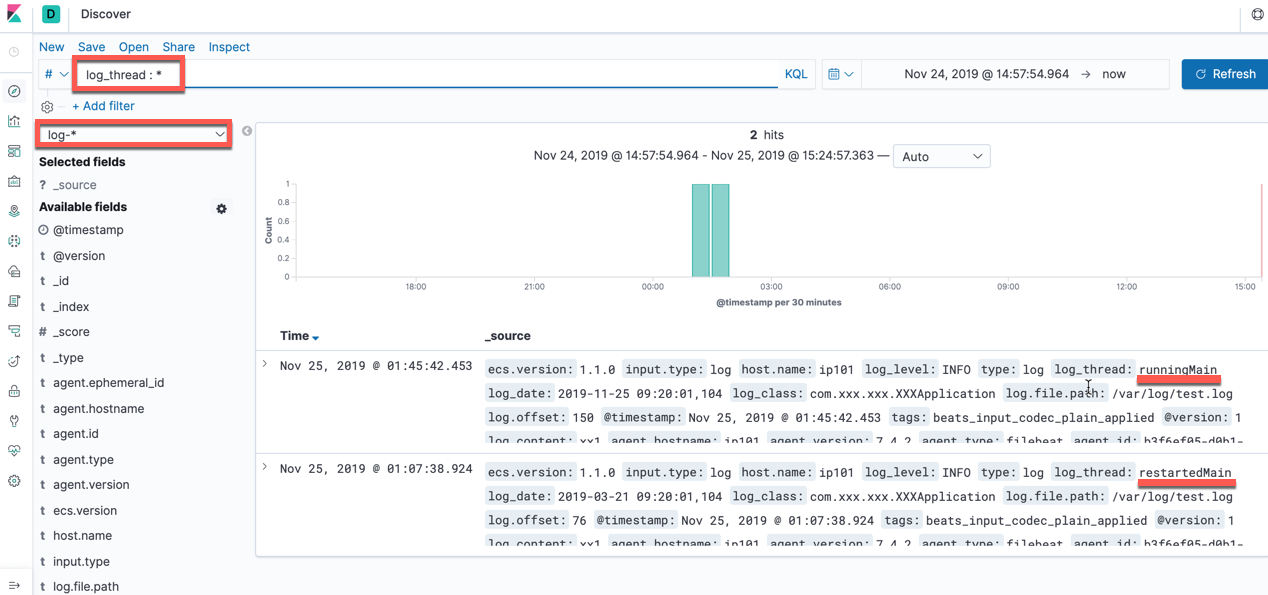

Append another log entry:

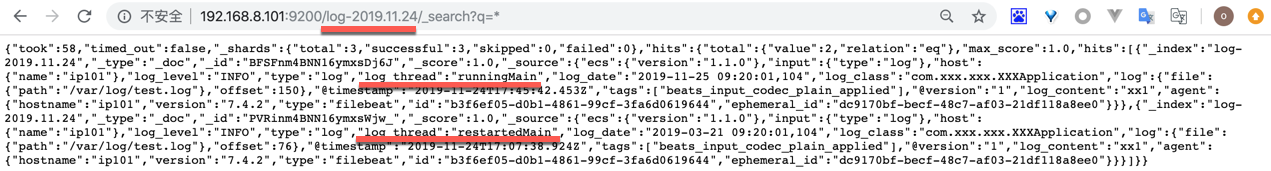

Query through the REST API:

MacBookPro:Chrome_Download zhangxm$ curl -X GET "192.168.8.101:9200/log-2019.11.24/_search" -H 'Content-Type: application/json' -d'

> {

> "query": { "match": {"log_thread": "runningMain"} }

> }

> '

{"took":25,"timed_out":false,"_shards":{"total":3,"successful":3,"skipped":0,"failed":0},"hits":{"total":{"value":1,"relation":"eq"},"max_score":0.2876821,"hits":[{"_index":"log-2019.11.24","_type":"_doc","_id":"BFSFnm4BNN16ymxsDj6J","_score":0.2876821,"_source":{"ecs":{"version":"1.1.0"},"input":{"type":"log"},"host":{"name":"ip101"},"log_level":"INFO","type":"log","log_thread":"runningMain","log_date":"2019-11-25 09:20:01,104","log_class":"com.xxx.xxx.XXXApplication","log":{"file":{"path":"/var/log/test.log"},"offset":150},"@timestamp":"2019-11-24T17:45:42.453Z","tags":["beats_input_codec_plain_applied"],"@version":"1","log_content":"xx1","agent":{"hostname":"ip101","version":"7.4.2","type":"filebeat","id":"b3f6ef05-d0b1-4861-99cf-3fa6d0619644","ephemeral_id":"dc9170bf-becf-48c7-af03-21df118a8ee0"}}}]}}

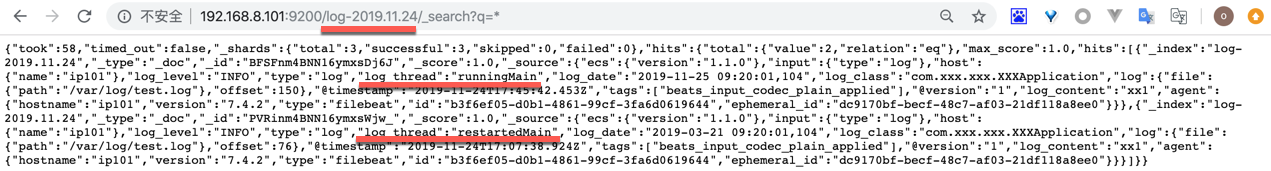

Another search condition:

MacBookPro:Chrome_Download zhangxm$ curl -X GET "192.168.8.101:9200/log-2019.11.24/_search" -H 'Content-Type: application/json' -d'

{

"query": { "match": {"log_thread": "restartedMain"} }

}

'

{"took":15,"timed_out":false,"_shards":{"total":3,"successful":3,"skipped":0,"failed":0},"hits":{"total":{"value":1,"relation":"eq"},"max_score":0.2876821,"hits":[{"_index":"log-2019.11.24","_type":"_doc","_id":"PVRinm4BNN16ymxsWjw_","_score":0.2876821,"_source":{"ecs":{"version":"1.1.0"},"input":{"type":"log"},"host":{"name":"ip101"},"log_level":"INFO","type":"log","log_thread":"restartedMain","log_date":"2019-03-21 09:20:01,104","log_class":"com.xxx.xxx.XXXApplication","log":{"file":{"path":"/var/log/test.log"},"offset":76},"@timestamp":"2019-11-24T17:07:38.924Z","tags":["beats_input_codec_plain_applied"],"@version":"1","log_content":"xx1","agent":{"hostname":"ip101","version":"7.4.2","type":"filebeat","id":"b3f6ef05-d0b1-4861-99cf-3fa6d0619644","ephemeral_id":"dc9170bf-becf-48c7-af03-21df118a8ee0"}}}]}}
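The two curl calls above can also be issued from a script. The following is a minimal sketch using only the Python standard library. The helper names (`build_search_request`, `search`, `hit_sources`) are hypothetical, and the `http://` scheme in front of the host is an assumption that matches the Kibana setting `elasticsearch.hosts` shown earlier.

```python
import json
import urllib.request


def build_search_request(base_url: str, index: str,
                         field: str, value: str) -> urllib.request.Request:
    """Prepare the same match query that the curl command sends."""
    body = json.dumps({"query": {"match": {field: value}}}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/{index}/_search",
        data=body,
        headers={"Content-Type": "application/json"},
        method="GET",  # mirrors `curl -X GET ... -d '...'`
    )


def hit_sources(response: dict) -> list:
    """Return the _source document of every hit in a search response."""
    return [hit["_source"] for hit in response["hits"]["hits"]]


def search(base_url: str, index: str, field: str, value: str) -> list:
    """Send the query and return the matching documents (needs a live cluster)."""
    request = build_search_request(base_url, index, field, value)
    with urllib.request.urlopen(request, timeout=10) as reply:
        return hit_sources(json.loads(reply.read().decode("utf-8")))


if __name__ == "__main__":
    req = build_search_request("http://192.168.8.101:9200", "log-2019.11.24",
                               "log_thread", "restartedMain")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Building the request is kept separate from sending it, so the query body can be checked without a running cluster. Calling `search(...)` with the same arguments would perform the actual lookup.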