I. Test environment preparation

1. MySQL environment

Version: 5.7.34

IP: 192.168.124.44

Tables: company, products, result

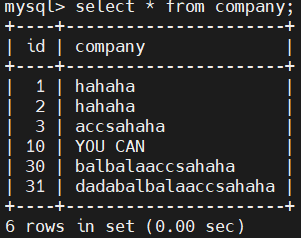

company

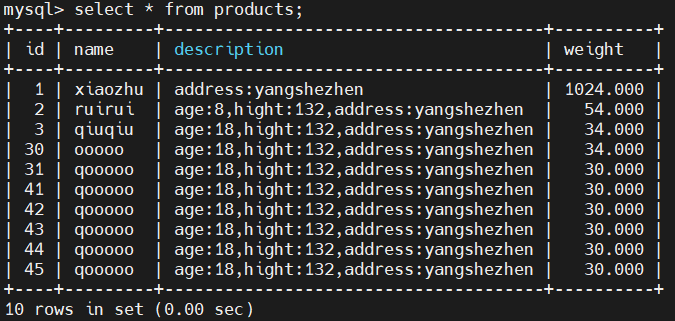

products

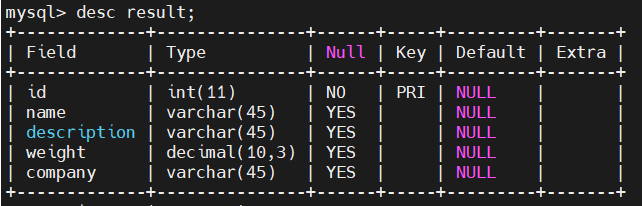

result

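2. Flink

Version: flink-1.13.2

IP: 192.168.124.48

Tables:

Note: each CDC table must declare a primary key when it is created (matching the key of the MySQL table), otherwise an error is raised. Alternatively, a connector parameter can be changed so that no primary key is required. Flink only accepts primary keys declared NOT ENFORCED.

```sql
-- creates a mysql cdc table source
-- sync the products table
CREATE TABLE products (
  id INT NOT NULL PRIMARY KEY NOT ENFORCED,
  name STRING,
  description STRING,
  weight DECIMAL(10,3)
) WITH (
  'connector' = 'mysql-cdc',
  'hostname' = '192.168.124.44',
  'port' = '3306',
  'username' = 'myflink',
  'password' = 'sg123456A@',
  'database-name' = 'inventory',
  'table-name' = 'products'
);
```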

```sql
-- sync the company table
CREATE TABLE company (
  id INT NOT NULL PRIMARY KEY NOT ENFORCED,
  company STRING
) WITH (
  'connector' = 'mysql-cdc',
  'hostname' = '192.168.124.44',
  'port' = '3306',
  'username' = 'myflink',
  'password' = 'sg123456A@',
  'database-name' = 'inventory',
  'table-name' = 'company'
);
```
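The two CDC source tables above differ only in their column list and 'table-name'. As a minimal sketch, the repeated DDL could be generated from one set of shared connection options. The helper `render_cdc_ddl` and the `SHARED_CONN` dictionary are illustrative names, not part of Flink or the connector.

```python
from __future__ import annotations


def render_cdc_ddl(table: str, columns: list[str], conn: dict[str, str]) -> str:
    """Render a Flink SQL CREATE TABLE statement for a mysql-cdc source.

    Hypothetical helper: `conn` holds the options shared by every table
    (hostname, port, credentials, database); only 'table-name' varies.
    """
    options = {"connector": "mysql-cdc", **conn, "table-name": table}
    col_sql = ",\n  ".join(columns)
    opt_sql = ",\n  ".join(f"'{key}' = '{value}'" for key, value in options.items())
    return f"CREATE TABLE {table} (\n  {col_sql}\n) WITH (\n  {opt_sql}\n);"


# Connection options taken from the products/company DDL above.
SHARED_CONN = {
    "hostname": "192.168.124.44",
    "port": "3306",
    "username": "myflink",
    "password": "sg123456A@",
    "database-name": "inventory",
}

if __name__ == "__main__":
    print(render_cdc_ddl(
        "company",
        ["id INT NOT NULL PRIMARY KEY NOT ENFORCED", "company STRING"],
        SHARED_CONN,
    ))
```

Keeping the shared options in one place means a host or credential change is made once instead of in every table definition.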

```sql
-- JDBC sink that writes the joined rows into the MySQL table result
CREATE TABLE mysql_result (
  id INT NOT NULL,
  name STRING,
  description STRING,
  weight DECIMAL(10,3),
  company STRING,
  PRIMARY KEY (id) NOT ENFORCED
) WITH (
  'connector' = 'jdbc',
  'url' = 'jdbc:mysql://192.168.124.44:3306/inventory',
  'username' = 'root',
  'password' = 'sg123456',
  'table-name' = 'result'
);
```

```sql
insert into mysql_result (id,name,description,weight,company)
select
  a.id,
  a.name,
  a.description,
  a.weight,
  b.company
from products a
left join company b
on a.id = b.id;
```
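The LEFT JOIN keeps every row of products; a product with no company row sharing its id gets a NULL company. Because mysql_result declares `PRIMARY KEY (id) NOT ENFORCED`, Flink's JDBC sink writes in upsert mode, so the MySQL table ends up with one row per id. The Python sketch below only models that final state with made-up sample rows (the function and data are illustrative); it is not how Flink computes the streaming join.

```python
from __future__ import annotations


def left_join_upsert(products: list[dict], companies: list[dict]) -> dict[int, dict]:
    """Model the final contents of the `result` table.

    Every product is kept (LEFT JOIN); `company` is None (SQL NULL) when no
    company row has the same id. Rows are keyed by id, so a later row for the
    same id replaces the earlier one, as an upsert on the primary key would.
    """
    company_by_id = {c["id"]: c["company"] for c in companies}
    result: dict[int, dict] = {}
    for p in products:
        result[p["id"]] = {**p, "company": company_by_id.get(p["id"])}
    return result


if __name__ == "__main__":
    # Hypothetical sample rows, not taken from the test database.
    products = [
        {"id": 1, "name": "scooter", "description": "small scooter", "weight": 3.14},
        {"id": 2, "name": "battery", "description": "12V battery", "weight": 8.1},
    ]
    companies = [{"id": 1, "company": "company_a"}]
    for row in left_join_upsert(products, companies).values():
        print(row)
```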

II. Edit the Flink SQL job initialization file and DML file

1. Initialization file

The initialization file can contain the DDL that several jobs need at startup.

```
[root@flinkdb01 flinksqljob]# cat myjob-ddl
-- create the test database
create database inventory;
use inventory;
-- creates a mysql cdc table source
-- sync the products table
CREATE TABLE products (
  id INT NOT NULL PRIMARY KEY NOT ENFORCED,
  name STRING,
  description STRING,
  weight DECIMAL(10,3)
) WITH (
  'connector' = 'mysql-cdc',
  'hostname' = '192.168.2.100',
  'port' = '3306',
  'username' = 'root',
  'password' = 'sg123456',
  'database-name' = 'inventory',
  'table-name' = 'products'
);
-- sync the company table
CREATE TABLE company (
  id INT NOT NULL PRIMARY KEY NOT ENFORCED,
  company STRING
) WITH (
  'connector' = 'mysql-cdc',
  'hostname' = '192.168.2.100',
  'port' = '3306',
  'username' = 'root',
  'password' = 'sg123456',
  'database-name' = 'inventory',
  'table-name' = 'company'
);
```

2. Job DML file

```
[root@flinkdb01 flinksqljob]# cat myjob-dml
-- set sync mode
SET 'table.dml-sync' = 'true';
-- set the job name
SET pipeline.name = SqlJob_mysql_result;
-- set the queue that the job is submitted to
--SET 'yarn.application.queue' = 'root';
-- set the job parallelism
--SET 'parallelism.default' = '100';
-- restore from the specific savepoint path
-- Note: not needed in the initial DML file; this option is only used to restore the job from a checkpoint after it has failed or been stopped
--SET 'execution.savepoint.path' = '/u01/soft/flink/flinkjob/flinksqljob/checkpoint/b2f2b0dda89bfb41df57d939479d7786/chk-407';
-- set the checkpoint interval
--set 'execution.checkpointing.interval' = '2sec';
insert into mysql_result (id,name,description,weight,company)
select
  a.id,
  a.name,
  a.description,
  a.weight,
  b.company
from products a
left join company b
on a.id = b.id;
```

III. Enable checkpoints and savepoints

1. Edit flink-conf.yaml

```yaml
#==============================================================================
# Fault tolerance and checkpointing
#==============================================================================
execution.checkpointing.interval: 5000 ## checkpoint interval, in milliseconds
state.checkpoints.num-retained: 20 ## number of completed checkpoints to retain
execution.checkpointing.mode: EXACTLY_ONCE
execution.checkpointing.externalized-checkpoint-retention: RETAIN_ON_CANCELLATION
#state.backend: rocksdb
# The backend that will be used to store operator state checkpoints if
# checkpointing is enabled.
#
# Supported backends are 'jobmanager', 'filesystem', 'rocksdb', or the
# <class-name-of-factory>.
#
# state.backend: filesystem
state.backend: filesystem ## state backend used to store checkpoints
# Directory for checkpoints filesystem, when using any of the default bundled
# state backends.
#
# state.checkpoints.dir: hdfs://namenode-host:port/flink-checkpoints
state.checkpoints.dir: file:///u01/soft/flink/flinkjob/flinksqljob/checkpoint ## checkpoint directory, must be given as a URI
# Default target directory for savepoints, optional.
#
# state.savepoints.dir: hdfs://namenode-host:port/flink-savepoints
state.savepoints.dir: file:///u01/soft/flink/flinkjob/flinksqljob/checkpoint ## savepoint directory, must be given as a URI; savepoints are only written when triggered manually, so none appeared during the checkpoint test even with this set
# Flag to enable/disable incremental checkpoints for backends that
# support incremental checkpoints (like the RocksDB state backend).
#
# state.backend.incremental: false
state.backend.incremental: true ## has no effect with the filesystem backend
```
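With these settings a checkpoint is triggered every 5000 ms and the 20 most recent completed checkpoints are kept, so the oldest retained checkpoint is roughly (20 - 1) × 5 s = 95 s older than the newest. This assumes each checkpoint completes well within the interval; slow or failed checkpoints stretch the window. A small sketch of the arithmetic (the function name is illustrative):

```python
def retained_span_seconds(interval_ms: int, num_retained: int) -> float:
    """Approximate age gap between the newest and oldest retained checkpoint.

    Assumes checkpoints complete on schedule, one every `interval_ms`.
    """
    if interval_ms <= 0 or num_retained < 1:
        raise ValueError("interval_ms must be > 0 and num_retained >= 1")
    return (num_retained - 1) * interval_ms / 1000


if __name__ == "__main__":
    # Values from flink-conf.yaml above.
    print(retained_span_seconds(5000, 20))
```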

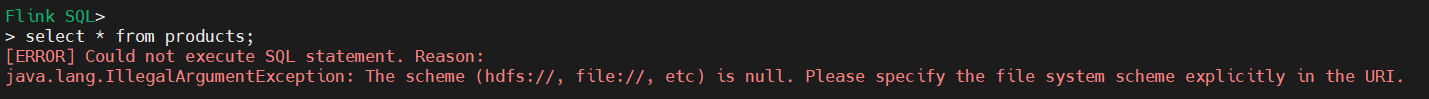

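Note: the checkpoint path must be given as a URI (for example file:///u01/soft/flink/flinkjob/flinksqljob/checkpoint), otherwise the job fails with an error when it is run.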
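As the note says, the directory must carry an explicit scheme such as file:// or hdfs://; a bare absolute path is reported as an error. A minimal pre-flight check (`has_fs_scheme` is a hypothetical helper, not a Flink utility) that can be run on the configured values before restarting Flink:

```python
from urllib.parse import urlparse


def has_fs_scheme(path: str) -> bool:
    """True if `path` names an explicit filesystem scheme (file://, hdfs://, ...).

    A bare local path such as /u01/... parses with an empty scheme.
    """
    return urlparse(path).scheme != ""


if __name__ == "__main__":
    for value in (
        "file:///u01/soft/flink/flinkjob/flinksqljob/checkpoint",
        "/u01/soft/flink/flinkjob/flinksqljob/checkpoint",
    ):
        print(value, "OK" if has_fs_scheme(value) else "missing scheme")
```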

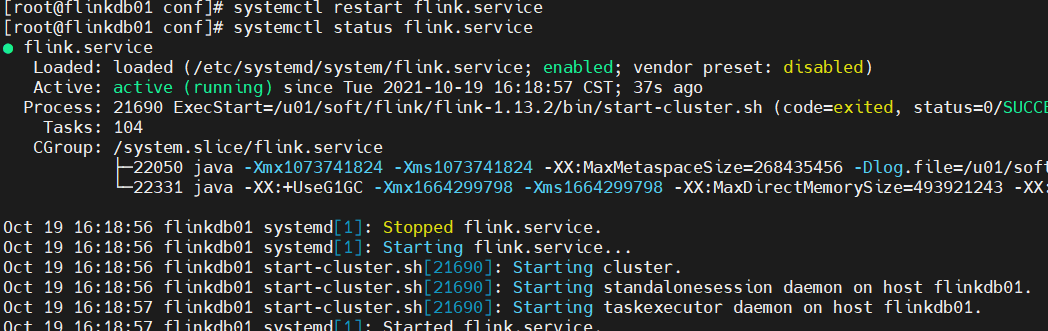

2. Restart Flink so that the configuration takes effect

```
systemctl restart flink.service
systemctl status flink.service
```

IV. Test restoring from a checkpoint

1. Initialize the DDL and submit the DML job

```
[root@flinkdb01 flinksqljob]# flinksql -i myjob-ddl -f myjob-dml
No default environment specified.
Searching for '/u01/soft/flink/flink-1.13.2/conf/sql-client-defaults.yaml'...not found.
Successfully initialized from sql script: file:/u01/soft/flink/flinkjob/flinksqljob/myjob-ddl
[INFO] Executing SQL from file.
Flink SQL>
SET pipeline.name = SqlJob_mysql_result;
[INFO] Session property has been set.
Flink SQL>
insert into mysql_result (id,name,description,weight,company)
select
a.id,
a.name,
a.description,
a.weight,
b.company
from products a
left join company b
on a.id = b.id;
[INFO] Submitting SQL update statement to the cluster...
[INFO] SQL update statement has been successfully submitted to the cluster:
Job ID: f39957c3386e1e943f50ac16d4e3f809
Shutting down the session...
done.
[root@flinkdb01 flinksqljob]#
```

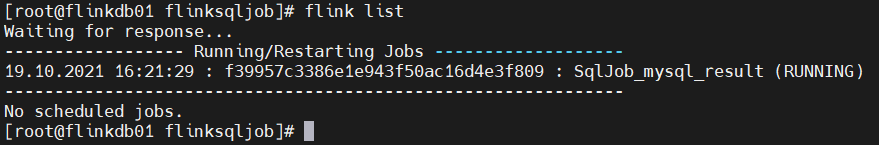

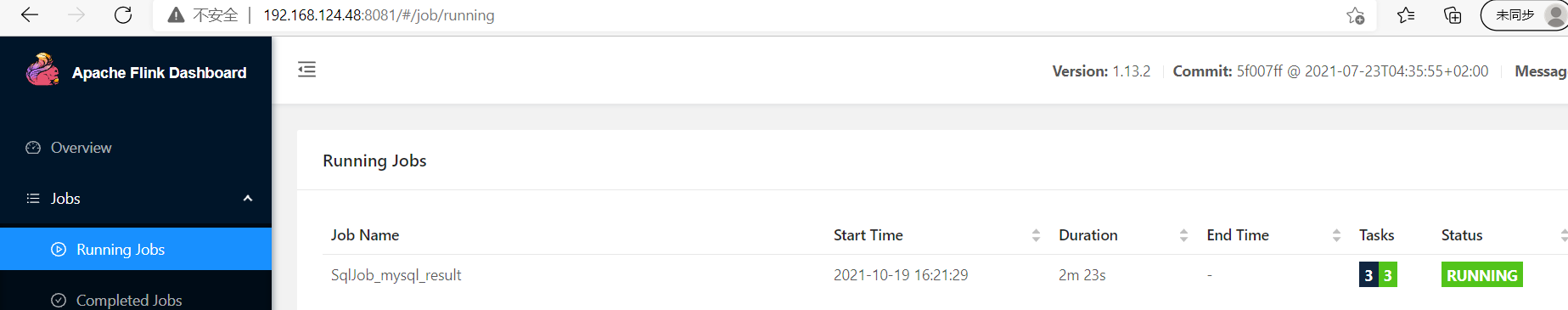

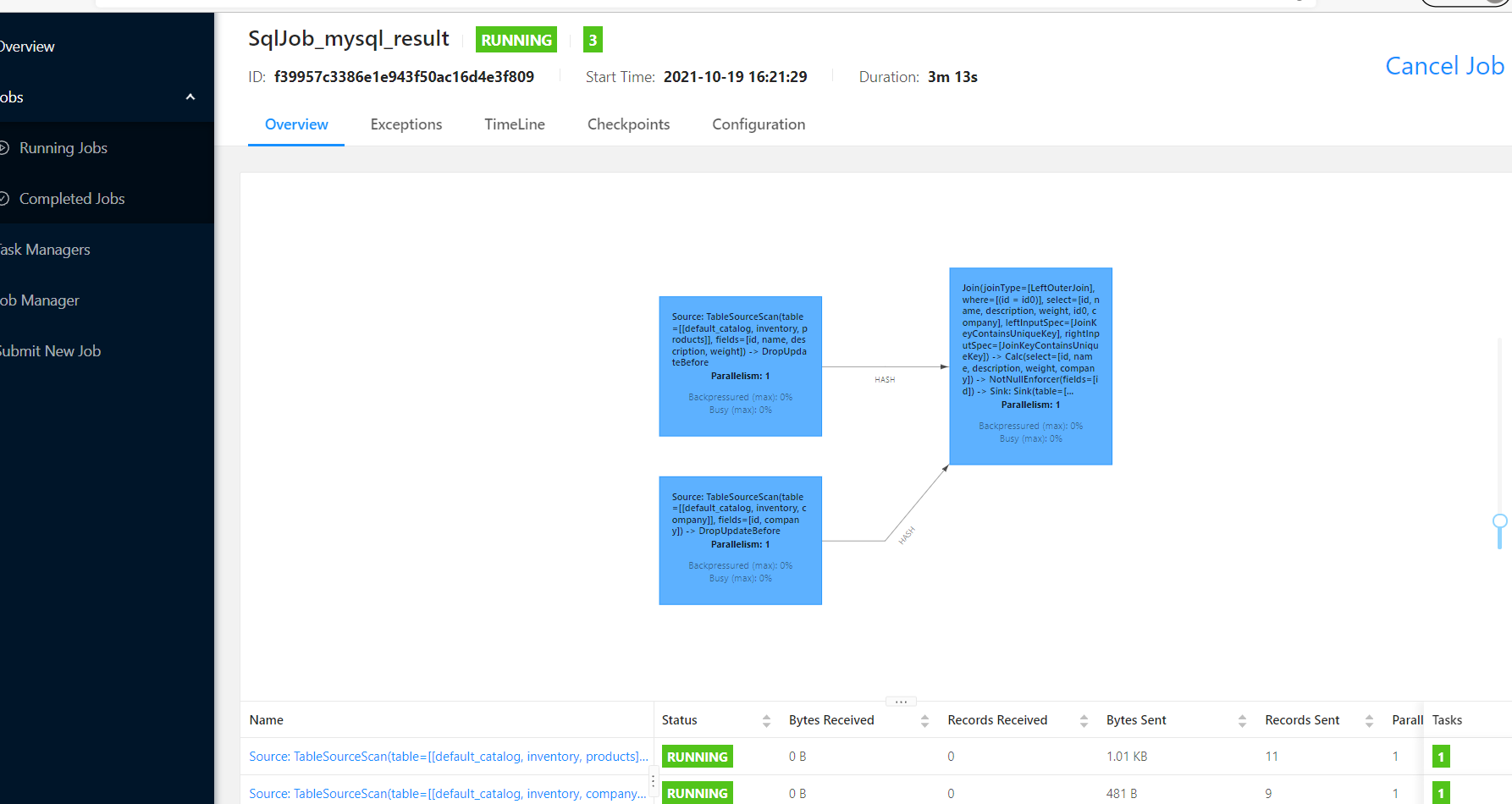
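When the submission is scripted, the job ID printed by the SQL client is needed later, both for cancelling the job and for locating its checkpoint directory, which is named after the job ID. A minimal sketch that pulls the ID out of the captured client output, assuming the `Job ID: <32 hex characters>` line format shown in the log above (`extract_job_id` is a hypothetical helper):

```python
from __future__ import annotations

import re

# Flink job IDs are 32 lowercase hex characters, as in the log above.
JOB_ID_RE = re.compile(r"Job ID:\s*([0-9a-f]{32})\b")


def extract_job_id(client_output: str) -> str | None:
    """Return the first job ID reported by the SQL client, or None if absent."""
    match = JOB_ID_RE.search(client_output)
    return match.group(1) if match else None


if __name__ == "__main__":
    sample = (
        "[INFO] SQL update statement has been successfully submitted to the cluster:\n"
        "Job ID: f39957c3386e1e943f50ac16d4e3f809\n"
        "Shutting down the session...\n"
    )
    print(extract_job_id(sample))
```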

2、查看JOB

1)终端查看job

[root@flinkdb01 flinksqljob]# flink list

2)web查看job

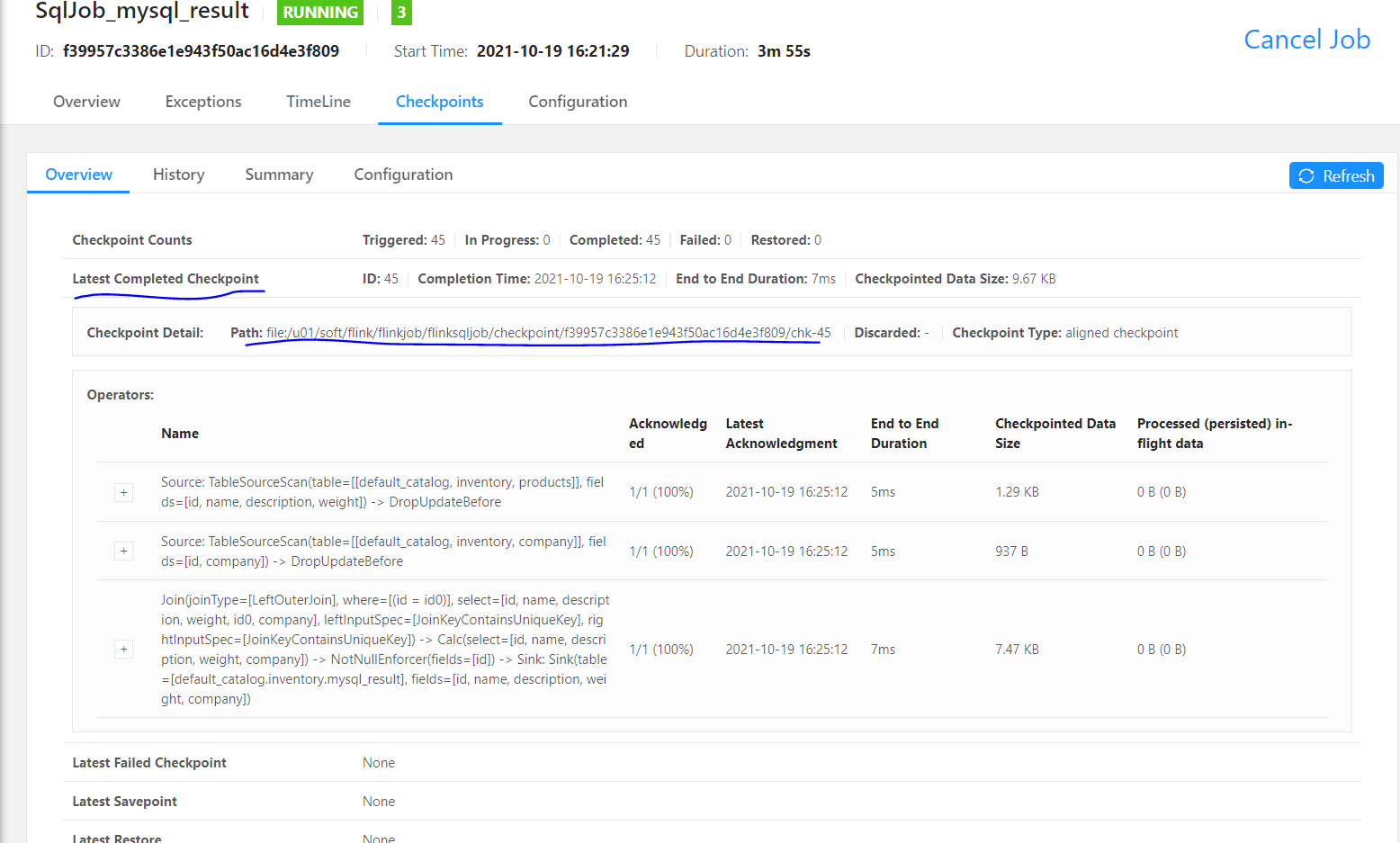

3、查看JOB的checkpoint

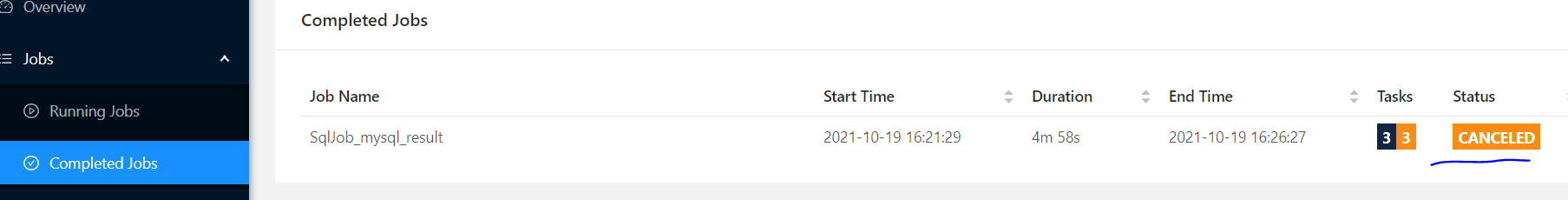

4、cancel DML JOB

5、从checkpoint恢复

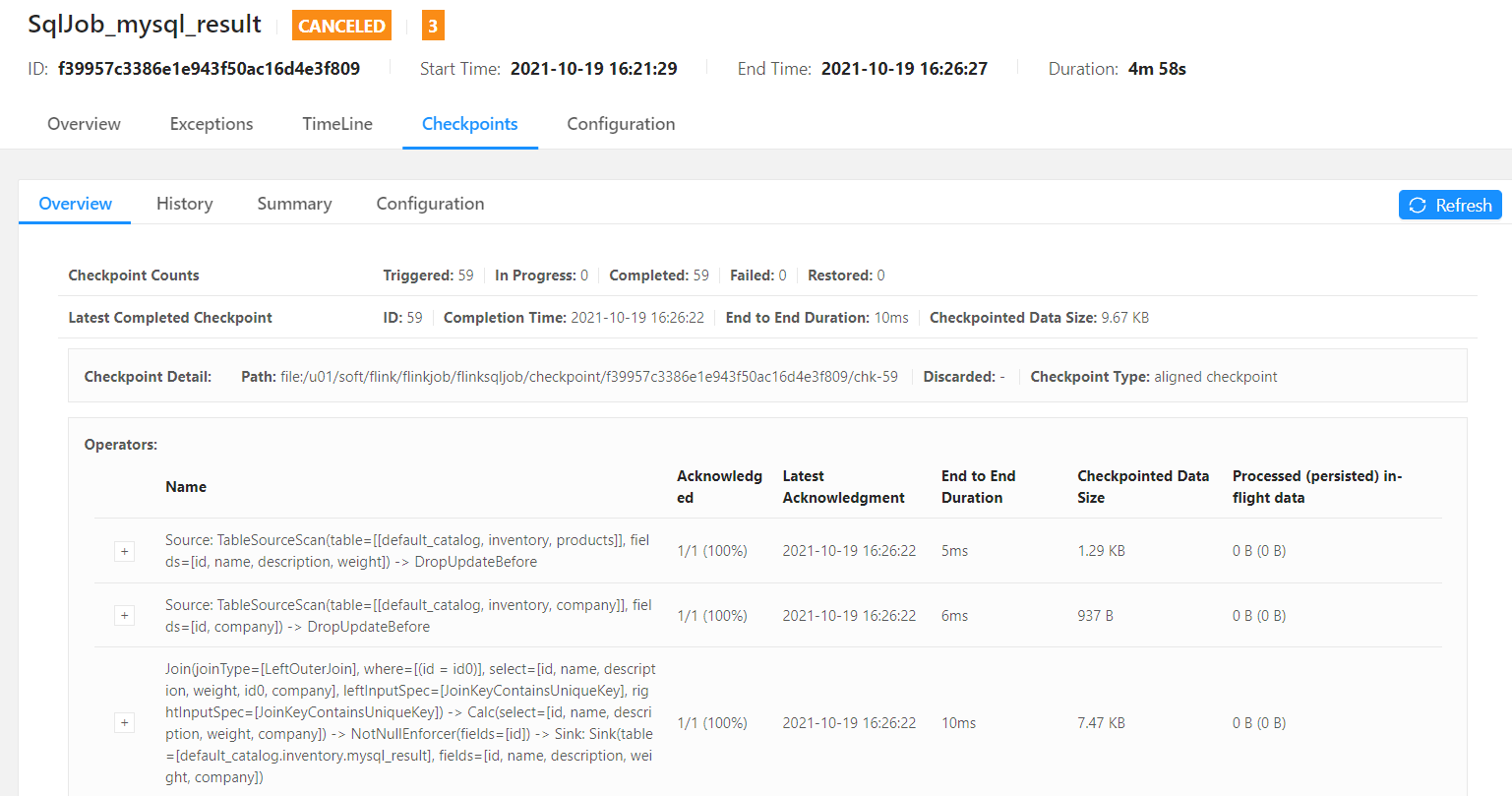

1)查看cancel后的checkpoint

可以看到最后一个checkpoint是:/u01/soft/flink/flinkjob/flinksqljob/checkpoint/f39957c3386e1e943f50ac16d4e3f809/chk-59
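Each job writes its checkpoints to `<state.checkpoints.dir>/<job id>/chk-<n>`, and a completed checkpoint directory contains a `_metadata` file. Picking the latest one by eye is easy to get wrong once n crosses a digit boundary (`chk-100` sorts before `chk-59` as text). A minimal sketch, with the hypothetical helper name `latest_checkpoint`, that picks the completed checkpoint with the highest number:

```python
from __future__ import annotations

import re
from pathlib import Path

CHK_RE = re.compile(r"chk-(\d+)")


def latest_checkpoint(job_dir: str | Path) -> Path | None:
    """Return the chk-N directory with the highest N that contains _metadata.

    Other entries in the job directory (e.g. shared/, taskowned/) and
    incomplete chk-N directories without _metadata are ignored.
    """
    best: tuple[int, Path] | None = None
    for entry in Path(job_dir).iterdir():
        match = CHK_RE.fullmatch(entry.name)
        if match and entry.is_dir() and (entry / "_metadata").is_file():
            n = int(match.group(1))  # compare numerically, not as text
            if best is None or n > best[0]:
                best = (n, entry)
    return best[1] if best else None


if __name__ == "__main__":
    job_dir = "/u01/soft/flink/flinkjob/flinksqljob/checkpoint/f39957c3386e1e943f50ac16d4e3f809"
    print(latest_checkpoint(job_dir))
```

Run against the job directory of this test, it should return the chk-59 directory identified above.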

2) Restore from the latest checkpoint

Prepare the DML file used for the restore:

```
[root@flinkdb01 flinksqljob]# cat myjob-dml-restore
-- set sync mode
--SET 'table.dml-sync' = 'true';
-- set the job name
--SET pipeline.name = SqlJob_mysql_result;
-- set the queue that the job is submitted to
--SET 'yarn.application.queue' = 'root';
-- set the job parallelism
--SET 'parallelism.default' = '100';
-- restore from the specific savepoint path
SET 'execution.savepoint.path' = '/u01/soft/flink/flinkjob/flinksqljob/checkpoint/f39957c3386e1e943f50ac16d4e3f809/chk-59';
-- set the checkpoint interval
--set 'execution.checkpointing.interval' = '2sec';
insert into mysql_result (id,name,description,weight,company)
select
  a.id,
  a.name,
  a.description,
  a.weight,
  b.company
from products a
left join company b
on a.id = b.id;
```
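The restore file differs from the initial DML mainly in its active SET 'execution.savepoint.path' line. A minimal sketch (`make_restore_dml` is a hypothetical helper) that derives such a file from the initial script: it drops every line mentioning execution.savepoint.path, commented out or not, and puts a single SET pointing at the chosen checkpoint ahead of the statements. Other SET lines are kept as they are.

```python
from __future__ import annotations


def make_restore_dml(dml: str, checkpoint_path: str) -> str:
    """Derive a restore script from the initial DML script.

    Removes every line that mentions execution.savepoint.path (active or
    commented out) and prepends one SET that points at `checkpoint_path`,
    so the option is set before the INSERT statement is submitted.
    """
    kept = [line for line in dml.splitlines() if "execution.savepoint.path" not in line]
    set_line = f"SET 'execution.savepoint.path' = '{checkpoint_path}';"
    return "\n".join([set_line, *kept]) + "\n"


if __name__ == "__main__":
    initial = (
        "SET 'table.dml-sync' = 'true';\n"
        "--SET 'execution.savepoint.path' = '/old/chk-407';\n"
        "insert into mysql_result (id,name,description,weight,company)\n"
    )
    chk = "/u01/soft/flink/flinkjob/flinksqljob/checkpoint/f39957c3386e1e943f50ac16d4e3f809/chk-59"
    print(make_restore_dml(initial, chk))
```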

3)恢复job

[root@flinkdb01 flinksqljob]# flinksql -i myjob-ddl -f myjob-dml-restore

No default environment specified.

Searching for '/u01/soft/flink/flink-1.13.2/conf/sql-client-defaults.yaml'...not found.

Successfully initialized from sql script: file:/u01/soft/flink/flinkjob/flinksqljob/myjob-ddl

[INFO] Executing SQL from file.

Flink SQL>

SET 'execution.savepoint.path' = '/u01/soft/flink/flinkjob/flinksqljob/checkpoint/f39957c3386e1e943f50ac16d4e3f809/chk-59';

[INFO] Session property has been set.

Flink SQL>

insert into mysql_result (id,name,description,weight,company)

select

a.id,

a.name,

a.description,

a.weight,

b.company

from products a

left join company b

on a.id = b.id;

[INFO] Submitting SQL update statement to the cluster...

[INFO] SQL update statement has been successfully submitted to the cluster:

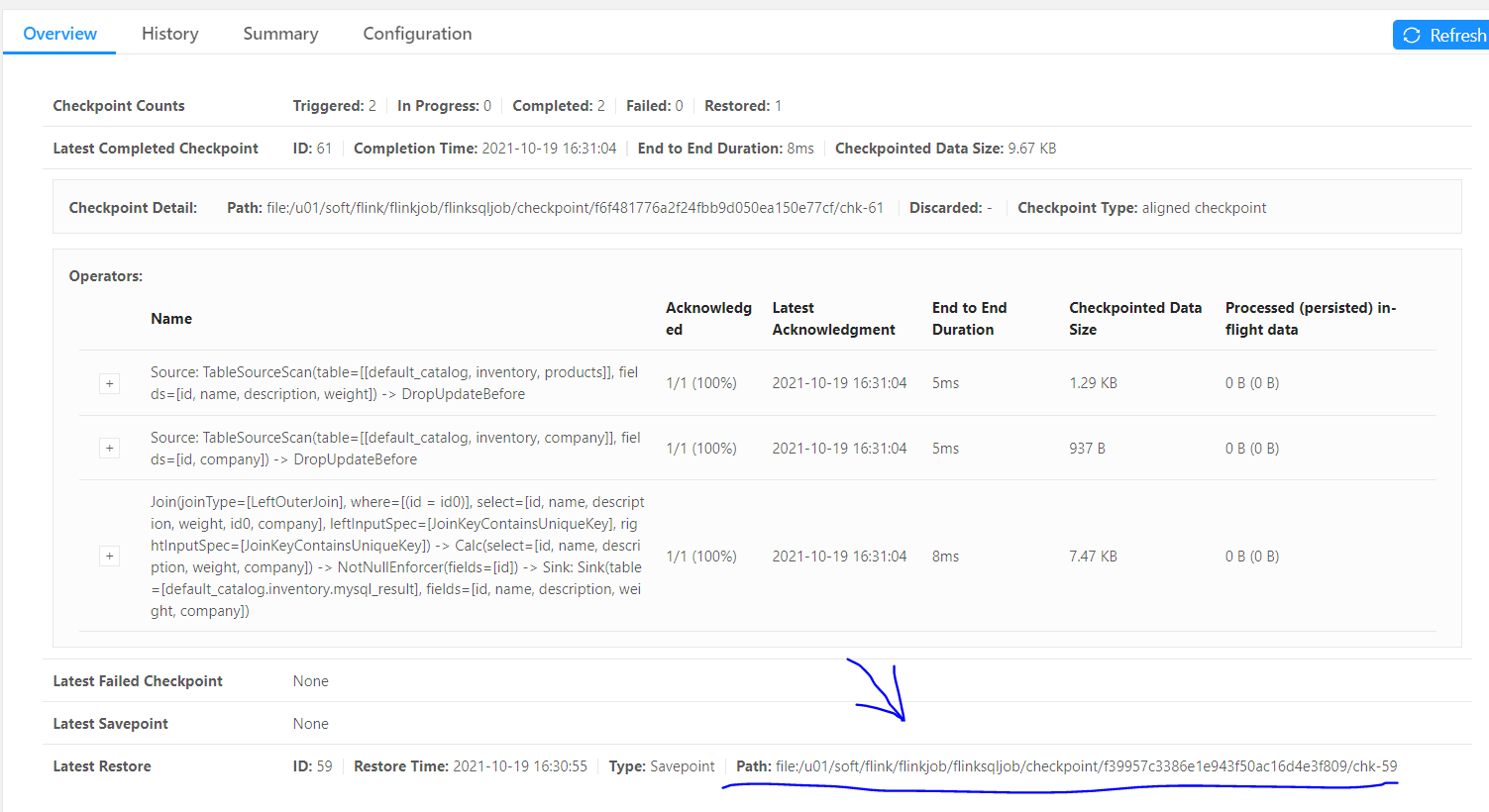

Job ID: f6f481776a2f24fbb9d050ea150e77cf

Shutting down the session...

done.

4) Check that the job has been restored

V. Test restoring from a savepoint

1. Trigger a savepoint manually

2. Cancel the job

3. Restore from the savepoint
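Steps 1 and 2 map onto the Flink CLI: `flink savepoint <jobId> [targetDirectory]` triggers a savepoint and `flink cancel <jobId>` cancels the job. Step 3 then works like the checkpoint restore above, with the savepoint path printed by the CLI placed in SET 'execution.savepoint.path'. A minimal sketch of steps 1 and 2 (`savepoint_then_cancel` is a hypothetical helper; it only builds the commands and does not run them):

```python
from __future__ import annotations


def savepoint_then_cancel(job_id: str, savepoint_dir: str) -> list[list[str]]:
    """Build the CLI calls for steps 1 and 2: trigger a savepoint, then cancel.

    Pass each list to subprocess.run(cmd, check=True) on the Flink host;
    they are only built here so the sequence can be reviewed first.
    """
    return [
        ["flink", "savepoint", job_id, savepoint_dir],
        ["flink", "cancel", job_id],
    ]


if __name__ == "__main__":
    # Job ID of the restored job from section IV; directory from state.savepoints.dir.
    cmds = savepoint_then_cancel(
        "f6f481776a2f24fbb9d050ea150e77cf",
        "file:///u01/soft/flink/flinkjob/flinksqljob/checkpoint",
    )
    for cmd in cmds:
        print(" ".join(cmd))
```

Triggering and cancelling as two calls leaves a short gap in which the job keeps processing after the savepoint was taken; Flink 1.13 also offers `flink stop --savepointPath <dir> <jobId>`, which takes the savepoint and stops the job in one step.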
