Hadoop 2.7.3 Deployment and Installation

Environment: 5 Linux servers, with 2 NameNodes and 5 DataNodes. The two NameNodes run in HA (active/standby) mode, so only one of them is active at any time.

Operating system: CentOS 7.2 or Ubuntu 16.04.2

Regular (non-root) user: hadoop

Cluster directory layout:

#Program directory

/home/hadoop/app

mkdir -p /data/logs/zk_logs/

chown -R media:media /data export

cd /data/zk_data/

Settings on Ubuntu:

vi /etc/security/limits.conf

media soft nproc 102400

media hard nproc 102400

media soft nofile 102400

media hard nofile 102400
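
The limits above only apply to new login sessions, so it helps to check them after logging in again as the affected user. The following is a minimal sketch, not part of the original procedure: the helper name `check_limit` is hypothetical, and the threshold 102400 is simply the value from limits.conf above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when an actual limit meets the expected minimum.
# "unlimited" is treated as satisfying any minimum.
check_limit() {
  local actual="$1" expected="$2"
  if [ "$actual" = "unlimited" ]; then
    return 0
  fi
  [ "$actual" -ge "$expected" ] 2>/dev/null
}

# Soft/hard process limits (-Su/-Hu) and soft/hard open-file limits (-Sn/-Hn)
# of the current shell, compared with the value configured in limits.conf.
for flag in -Su -Hu -Sn -Hn; do
  value="$(ulimit "$flag" 2>/dev/null || echo unknown)"
  if check_limit "$value" 102400; then
    echo "ulimit $flag = $value (ok)"
  else
    echo "ulimit $flag = $value (below 102400)"
  fi
done
```

Run it as the user that will start the daemons; a "below 102400" line usually means the session was opened before limits.conf was edited.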

scp authorized_keys media@node2:/home/media/.ssh

scp authorized_keys media@node3:/home/media/.ssh

scp authorized_keys media@node4:/home/media/.ssh

scp authorized_keys media@node5:/home/media/.ssh
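
The four copies above can also be written as a loop. This is only a sketch: the function name `distribute_keys` and the `DRY_RUN` switch are hypothetical additions, and the user and host names are the ones used above. With `DRY_RUN` left at its default of 1 the commands are printed rather than executed.

```shell
#!/usr/bin/env bash
# Print (dry run) or execute the scp command for each target host.
# DRY_RUN defaults to 1 so the sketch is safe to run anywhere.
distribute_keys() {
  local user="$1"; shift
  local host
  for host in "$@"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "scp authorized_keys ${user}@${host}:/home/${user}/.ssh"
    else
      scp authorized_keys "${user}@${host}:/home/${user}/.ssh"
    fi
  done
}

distribute_keys media node2 node3 node4 node5
```

Setting `DRY_RUN=0` before the call performs the real copies from the directory that contains authorized_keys.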

ZooKeeper JMX enabled by default

Using config: /home/media/app/zookeeper-3.4.8/bin/../conf/zoo.cfg

Mode: follower

Hadoop configuration file directory:

/home/soft/app/hadoop-2.7.3/etc/hadoop

Directories to create for Hadoop (these paths are referenced in the configuration files):

mkdir -p /data/hadoop_data/hdfs

mkdir -p /data/hadoop_data/tmp

mkdir -p /data/hadoop_data/hdfs/name

mkdir -p /data/hadoop_data/hdfs/data/disk1

chown -R soft:soft /data/

Set the Hadoop-specific environment variables and site settings in the following files:

1./hadoop-2.7.3/etc/hadoop/hadoop-env.sh

2./hadoop-2.7.3/etc/hadoop/yarn-env.sh

3./hadoop-2.7.3/etc/hadoop/core-site.xml

4./hadoop-2.7.3/etc/hadoop/hdfs-site.xml

5./hadoop-2.7.3/etc/hadoop/mapred-site.xml

6./hadoop-2.7.3/etc/hadoop/yarn-site.xml

#Configure hadoop-env.sh

# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Set Hadoop-specific environment variables here.

# The only required environment variable is JAVA_HOME. All others are
# optional. When running a distributed configuration it is best to
# set JAVA_HOME in this file, so that it is correctly defined on
# remote nodes.

# The java implementation to use.
export JAVA_HOME=/home/soft/app/jdk1.8.0_101

# The jsvc implementation to use. Jsvc is required to run secure datanodes
# that bind to privileged ports to provide authentication of data transfer
# protocol. Jsvc is not required if SASL is configured for authentication of
# data transfer protocol using non-privileged ports.
#export JSVC_HOME=${JSVC_HOME}

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-"/etc/hadoop"}

# Extra Java CLASSPATH elements. Automatically insert capacity-scheduler.
for f in $HADOOP_HOME/contrib/capacity-scheduler/*.jar; do
  if [ "$HADOOP_CLASSPATH" ]; then
    export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$f
  else
    export HADOOP_CLASSPATH=$f
  fi
done

# The maximum amount of heap to use, in MB. Default is 1000.
#export HADOOP_HEAPSIZE=
#export HADOOP_NAMENODE_INIT_HEAPSIZE=""

# Extra Java runtime options. Empty by default.
export HADOOP_OPTS="$HADOOP_OPTS -Djava.net.preferIPv4Stack=true"

# Command specific options appended to HADOOP_OPTS when specified
export HADOOP_NAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_NAMENODE_OPTS"
export HADOOP_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS $HADOOP_DATANODE_OPTS"

export HADOOP_SECONDARYNAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_SECONDARYNAMENODE_OPTS"

export HADOOP_NFS3_OPTS="$HADOOP_NFS3_OPTS"
export HADOOP_PORTMAP_OPTS="-Xmx512m $HADOOP_PORTMAP_OPTS"

# The following applies to multiple commands (fs, dfs, fsck, distcp etc)
export HADOOP_CLIENT_OPTS="-Xmx512m $HADOOP_CLIENT_OPTS"
#HADOOP_JAVA_PLATFORM_OPTS="-XX:-UsePerfData $HADOOP_JAVA_PLATFORM_OPTS"

# On secure datanodes, user to run the datanode as after dropping privileges.
# This **MUST** be uncommented to enable secure HDFS if using privileged ports
# to provide authentication of data transfer protocol. This **MUST NOT** be
# defined if SASL is configured for authentication of data transfer protocol
# using non-privileged ports.
export HADOOP_SECURE_DN_USER=${HADOOP_SECURE_DN_USER}

# Where log files are stored. $HADOOP_HOME/logs by default.
#export HADOOP_LOG_DIR=${HADOOP_LOG_DIR}/$USER

# Where log files are stored in the secure data environment.
export HADOOP_SECURE_DN_LOG_DIR=${HADOOP_LOG_DIR}/${HADOOP_HDFS_USER}

###
# HDFS Mover specific parameters
###
# Specify the JVM options to be used when starting the HDFS Mover.
# These options will be appended to the options specified as HADOOP_OPTS
# and therefore may override any similar flags set in HADOOP_OPTS
#
# export HADOOP_MOVER_OPTS=""

###
# Advanced Users Only!
###

# The directory where pid files are stored. /tmp by default.
# NOTE: this should be set to a directory that can only be written to by
# the user that will run the hadoop daemons. Otherwise there is the
# potential for a symlink attack.
export HADOOP_PID_DIR=${HADOOP_PID_DIR}
export HADOOP_SECURE_DN_PID_DIR=${HADOOP_PID_DIR}

# A string representing this instance of hadoop. $USER by default.
export HADOOP_IDENT_STRING=$USER
export HADOOP_OPTS="$HADOOP_OPTS -Djava.library.path=/home/soft/app/hadoop-2.7.3/lib/native"

#Edit core-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://ns</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/data/hadoop_data/tmp</value>
  </property>
  <!-- ZooKeeper quorum addresses -->
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>node1:2181,node2:2181,node3:2181,node4:2181</value>
  </property>
  <property>
    <name>fs.hdfs.impl</name>
    <value>org.apache.hadoop.hdfs.DistributedFileSystem</value>
    <description>The FileSystem for hdfs: uris.</description>
  </property>
  <property>
    <name>hadoop.proxyuser.hue.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hue.groups</name>
    <value>*</value>
  </property>
</configuration>

#Edit hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <!-- Number of replicas kept for each block -->
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///data/hadoop_data/hdfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/data/hadoop_data/hdfs/data/disk1</value>
    <final>true</final>
  </property>
  <property>
    <name>dfs.nameservices</name>
    <value>ns</value>
  </property>
  <property>
    <name>dfs.ha.namenodes.ns</name>
    <value>node1,node2</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.ns.node1</name>
    <value>node1:8020</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.ns.node2</name>
    <value>node2:8020</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.ns.node1</name>
    <value>node1:50070</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.ns.node2</name>
    <value>node2:50070</value>
  </property>
  <property>
    <name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://node1:8485;node2:8485;node3:8485;node4:8485/ns</value>
  </property>
  <property>
    <name>dfs.client.failover.proxy.provider.ns</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>sshfence</value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/home/soft/.ssh/id_rsa</value>
  </property>
  <property>
    <name>dfs.journalnode.edits.dir</name>
    <value>/data/hadoop_data/journal</value>
  </property>
  <!-- Enable automatic failover based on ZooKeeper and the ZKFC process, which monitors whether the NameNode process has died -->
  <property>
    <name>dfs.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>node1:2181,node2:2181,node3:2181,node4:2181</value>
  </property>
  <property>
    <!-- ZooKeeper session timeout, in milliseconds -->
    <name>ha.zookeeper.session-timeout.ms</name>
    <value>2000</value>
  </property>
  <property>
    <name>fs.hdfs.impl.disable.cache</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
</configuration>
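
Both the ZooKeeper ensemble and the JournalNode set in this configuration rely on a majority of their members being reachable, so the number of members decides how many failures the cluster survives. The sketch below works that out for the two host lists from hdfs-site.xml; the helper names `count_members` and `tolerated_failures` are hypothetical. It suggests that the four members configured here tolerate one failure, the same as three would.

```shell
#!/usr/bin/env bash
# Number of comma- or semicolon-separated members in a host list.
count_members() {
  echo "$1" | tr ',;' '\n\n' | grep -c .
}

# Failures a majority quorum of N members can tolerate: floor((N - 1) / 2).
tolerated_failures() {
  echo $(( ($1 - 1) / 2 ))
}

# Host lists copied from ha.zookeeper.quorum and dfs.namenode.shared.edits.dir.
zk_quorum="node1:2181,node2:2181,node3:2181,node4:2181"
journal_nodes="node1:8485;node2:8485;node3:8485;node4:8485"

for list in "$zk_quorum" "$journal_nodes"; do
  n="$(count_members "$list")"
  echo "$n members -> tolerates $(tolerated_failures "$n") failure(s)"
done
```

An odd member count (3 or 5) is the usual choice for this reason, although the even count used here still works.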

#Edit mapred-site.xml

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.map.memory.mb</name>
    <value>8000</value>
  </property>
  <property>
    <name>mapreduce.reduce.memory.mb</name>
    <value>8000</value>
  </property>
  <property>
    <name>mapreduce.map.java.opts</name>
    <value>-Xmx8000m</value>
  </property>
  <property>
    <name>mapreduce.reduce.java.opts</name>
    <value>-Xmx8000m</value>
  </property>
  <property>
    <name>mapred.task.timeout</name>
    <value>1800000</value> <!-- 30 minutes -->
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>node1:19888</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>node1:10020</value>
  </property>
</configuration>

#Edit yarn-site.xml

<?xml version="1.0"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<configuration>
  <property>
    <name>yarn.resourcemanager.connect.retry-interval.ms</name>
    <value>2000</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>
  <!-- Enable ResourceManager high availability -->
  <property>
    <name>yarn.resourcemanager.ha.enabled</name>
    <value>true</value>
  </property>
  <!-- Cluster id of the ResourceManagers -->
  <property>
    <name>yarn.resourcemanager.cluster-id</name>
    <value>yrc</value>
  </property>
  <!-- Logical ids of the ResourceManagers -->
  <property>
    <name>yarn.resourcemanager.ha.rm-ids</name>
    <value>rm1,rm2</value>
  </property>
  <!-- Host of each ResourceManager -->
  <property>
    <name>yarn.resourcemanager.hostname.rm1</name>
    <value>node1</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname.rm2</name>
    <value>node2</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.id</name>
    <value>rm1</value>
  </property>
  <!-- ZooKeeper cluster addresses -->
  <property>
    <name>yarn.resourcemanager.zk-address</name>
    <value>node1:2181,node2:2181,node3:2181,node4:2181</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>86400</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-pmem-ratio</name>
    <value>3</value>
  </property>
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>57000</value>
  </property>
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>4000</value>
  </property>
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>10000</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>node1:8088</value>
  </property>
  <property>
    <name>yarn.web-proxy.address</name>
    <value>node1:8888</value>
  </property>
</configuration>
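
The memory values in mapred-site.xml and yarn-site.xml interact, and a little arithmetic shows how many map or reduce containers one NodeManager can hold. The sketch below assumes the scheduler rounds each request up to a multiple of yarn.scheduler.minimum-allocation-mb, which is the usual behaviour of the default CapacityScheduler; the 80% heap figure is a common rule of thumb, not something stated in this guide. The helper name `round_up` is hypothetical.

```shell
#!/usr/bin/env bash
# Values copied from the mapred-site.xml and yarn-site.xml in this guide.
node_mem_mb=57000     # yarn.nodemanager.resource.memory-mb
min_alloc_mb=4000     # yarn.scheduler.minimum-allocation-mb
task_mem_mb=8000      # mapreduce.map.memory.mb / mapreduce.reduce.memory.mb

# Round a request up to the next multiple of the allocation step.
round_up() {
  local request="$1" step="$2"
  echo $(( (request + step - 1) / step * step ))
}

container_mb="$(round_up "$task_mem_mb" "$min_alloc_mb")"
containers_per_node=$(( node_mem_mb / container_mb ))
# Assumption: leave roughly 20% of the container for non-heap JVM memory.
heap_80_percent_mb=$(( task_mem_mb * 80 / 100 ))

echo "container size: ${container_mb} MB"
echo "containers per node: ${containers_per_node}"
echo "80% heap guideline: -Xmx${heap_80_percent_mb}m"
```

With the values above this gives 8000 MB containers and 7 containers per node. Since the configured heap (-Xmx8000m) equals the container size, a task that fills its heap leaves no room for the JVM's other memory and may be killed by the NodeManager's memory check, so a smaller heap such as the guideline value may be worth considering.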

#Edit the slaves file

node1

node2

node3

node4

node5

vi .bashrc


export JAVA_HOME=/home/soft/app/jdk1.8.0_101
export CLASSPATH=.:$JAVA_HOME/lib/
export JRE_HOME=/home/soft/app/jdk1.8.0_101/jre
export ZOOKEEPER=/home/soft/app/zookeeper-3.4.8/
export HADOOP_HOME=/home/soft/app/hadoop-2.7.3
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"
export PATH=:$PATH:$JAVA_HOME/bin:$JRE_HOME/bin:/sbin:$ZOOKEEPER/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:

#scp the hadoop directory (and .bashrc) to the other hosts

Then run source .bashrc on each host.

On node2, edit yarn-site.xml and change yarn.resourcemanager.ha.id to this node's own RM id (rm2); otherwise a "port already in use" error is reported:

<property>
  <name>yarn.resourcemanager.ha.id</name>
  <value>rm2</value>
</property>

./yarn-daemon.sh start resourcemanager

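6. Startup

Start ZooKeeper on every node, then check that each instance reports a healthy status.

zkServer.sh start #start ZooKeeper

zkServer.sh stop #stop ZooKeeper

zkServer.sh status #show ZooKeeper status

Start the JournalNodes (one on every node), then use jps to confirm that they are running.

hadoop-daemons.sh start journalnode #start command

hadoop-daemons.sh stop journalnode #stop command

Format HDFS (if formatting fails, delete the hadoop directories created under /data, recreate them, and run the format command again)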

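#Run on the primary NameNode (node1)

hdfs namenode -format #format the NameNode

hdfs zkfc -formatZK #initialize the HA state in ZooKeeper

sbin/hadoop-daemon.sh start namenode #start the NameNode

#Run on the standby node

hdfs namenode -bootstrapStandby #copy the metadata from the primary NameNode to the standby

Stop all JournalNodes and NameNodes.

Start the HDFS and YARN processes: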

./start-dfs.sh

./start-yarn.sh

Or:

start-all.sh #start everything

stop-all.sh #stop everything

Manually start the ResourceManager on the standby node:

yarn-daemon.sh start resourcemanager
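
Once both NameNodes and both ResourceManagers are up, their HA roles can be queried from the command line. The sketch below uses `hdfs haadmin -getServiceState` and `yarn rmadmin -getServiceState` with the ids configured earlier (node1/node2 and rm1/rm2); the helper `exactly_one_active` is a hypothetical addition that only checks that one member of each pair reports "active". The commands are skipped when hdfs and yarn are not on the PATH.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only when exactly one of the given states is "active".
exactly_one_active() {
  local state count=0
  for state in "$@"; do
    if [ "$state" = "active" ]; then
      count=$((count + 1))
    fi
  done
  [ "$count" -eq 1 ]
}

if command -v hdfs >/dev/null 2>&1 && command -v yarn >/dev/null 2>&1; then
  # NameNode ids come from dfs.ha.namenodes.ns, RM ids from yarn.resourcemanager.ha.rm-ids.
  nn1="$(hdfs haadmin -getServiceState node1 2>/dev/null)"
  nn2="$(hdfs haadmin -getServiceState node2 2>/dev/null)"
  rm1="$(yarn rmadmin -getServiceState rm1 2>/dev/null)"
  rm2="$(yarn rmadmin -getServiceState rm2 2>/dev/null)"
  echo "NameNodes: node1=$nn1 node2=$nn2"
  echo "ResourceManagers: rm1=$rm1 rm2=$rm2"
  exactly_one_active "$nn1" "$nn2" || echo "warning: expected exactly one active NameNode"
  exactly_one_active "$rm1" "$rm2" || echo "warning: expected exactly one active ResourceManager"
fi
```

An empty state for one of the ids generally means that daemon is not running or not reachable yet.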

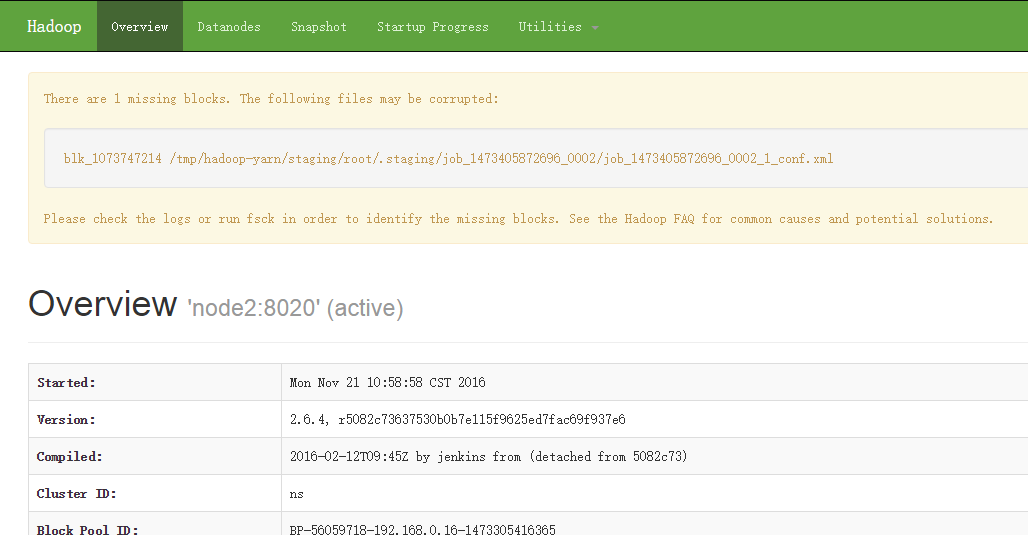

7. Verification

[root@n1 ~]# jps

19856 JournalNode

20049 DFSZKFailoverController

20130 ResourceManager

21060 Jps

20261 NodeManager

19655 DataNode

19532 NameNode

2061 QuorumPeerMain

hadoop@node1:~$ jps|wc -l

8
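
Counting lines with wc -l confirms the number of processes but not which ones they are. As a rough sketch, the check can be made by name instead; the helper `missing_daemons` is hypothetical, and the expected list is simply the set of daemons shown in the jps listing above for a NameNode host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin and print the expected
# daemon names (given as arguments) that do not appear in it.
missing_daemons() {
  local output daemon missing=""
  output="$(cat)"
  for daemon in "$@"; do
    # Column 2 of jps output is the main class name; match it exactly.
    if ! printf '%s\n' "$output" | awk '{print $2}' | grep -qx "$daemon"; then
      missing="$missing $daemon"
    fi
  done
  echo "${missing# }"
}

# Daemons expected on a NameNode host, taken from the listing above.
expected="JournalNode DFSZKFailoverController ResourceManager NodeManager DataNode NameNode QuorumPeerMain"

if command -v jps >/dev/null 2>&1; then
  result="$(jps | missing_daemons $expected)"
  if [ -z "$result" ]; then
    echo "all expected daemons are running"
  else
    echo "missing: $result"
  fi
fi
```

On hosts that are not NameNodes the expected list would be shorter, for example without NameNode and DFSZKFailoverController.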

#Access the NameNode web UI in a browser

http://ip:50070
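
The same port can also be queried from a script. The sketch below assumes the NameNode exposes its JMX servlet at /jmx on the HTTP port and that the NameNodeStatus bean carries a "State" attribute, which is the case in the Hadoop 2.x releases I am aware of but is worth confirming on your build. The helper `extract_state` is hypothetical and uses sed rather than a JSON parser to stay dependency-free.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull the "State" value out of the NameNodeStatus JMX JSON on stdin.
extract_state() {
  sed -n 's/.*"State"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

if command -v curl >/dev/null 2>&1; then
  # Hosts and port follow dfs.namenode.http-address.ns.* in hdfs-site.xml.
  for host in node1 node2; do
    state="$(curl -s --max-time 5 "http://${host}:50070/jmx?qry=Hadoop:service=NameNode,name=NameNodeStatus" | extract_state)"
    echo "${host}: ${state:-unreachable}"
  done
fi
```

One host should report active and the other standby; "unreachable" means no state could be read within the timeout.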