CUDA Learning (7): Timing with the CUDA Built-in API

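Problem: when computing on the GPU, we usually want to know how long a kernel function takes to run. The CUDA runtime's built-in API makes it easy to measure kernel execution time accurately.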

On the CPU, you can time code with the `clock()` function or, on Windows, the `GetTickCount()` function.

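```cpp
// requires <ctime> and <cstdio>
clock_t start, end;
start = clock();
// code to be timed
// ......
end = clock();
// clock() counts ticks, not milliseconds: convert with CLOCKS_PER_SEC and print as a double
printf(" time (CPU) : %f ms \n", (double)(end - start) * 1000.0 / CLOCKS_PER_SEC);
```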

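```cpp
// Windows only: GetTickCount() is declared in <windows.h>
DWORD startTime, endTime;     // GetTickCount() returns a DWORD (milliseconds since system start)
startTime = GetTickCount();   // start time; resolution is limited by the system timer (typically 10 to 16 ms)
// code to be timed
// ......
endTime = GetTickCount();
cout << " Total time: " << (double)(endTime - startTime) << " ms " << endl;
```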
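As a portable alternative for host-side wall-clock timing, here is a minimal sketch assuming C++11 or later, using `std::chrono::steady_clock` from the standard library. Unlike `GetTickCount()` it is not Windows-only, and unlike `clock()` on POSIX systems it measures elapsed time rather than the process's CPU time. The helpers `elapsedMs` and `timedSleepMs` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <chrono>
#include <thread>

// Hypothetical helper: runs f() once and returns the elapsed wall-clock time in ms.
// steady_clock is monotonic, so it is not affected by changes to the system time.
template <typename F>
double elapsedMs(F&& f) {
    const auto start = std::chrono::steady_clock::now();
    f();
    const auto end = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(end - start).count();
}

// Hypothetical usage example: time a sleep of `ms` milliseconds.
double timedSleepMs(int ms) {
    assert(ms >= 0);
    return elapsedMs([ms] { std::this_thread::sleep_for(std::chrono::milliseconds(ms)); });
}
```

For GPU work the same rule applies as with `clock()`: the timed callable must end with a synchronization such as `cudaDeviceSynchronize()`, otherwise only the asynchronous kernel launch is measured.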

Timing CUDA kernels with `clock()` or `GetTickCount()` gives unreliable results. For example, timing the reduction (sum) kernel with `clock()`:

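```cpp
// CPU timing
clock_t start, end;
start = clock();
d_SharedMemoryTest<<<Blocks, ThreadX>>>(S_Para, MX);  // launch the kernel (Blocks blocks of ThreadX threads each)
cudaDeviceSynchronize();                               // wait for the kernel to finish
end = clock();
clock_t time = end - start;
printf(" time (GPU) : %f ms \n", time);                // bug: time is a clock_t (integer), but %f expects a double
```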

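The result is 0.000000 ms, which is clearly wrong. Most likely the 0.000000 comes from the snippet itself: `time` is a `clock_t`, an integer type, but it is printed with `%f`, which is undefined behavior and typically prints garbage such as 0.000000. Printing `(double)time * 1000.0 / CLOCKS_PER_SEC` fixes the output, but host timers are still a poor fit for kernels: the measured interval includes launch and synchronization overhead, `clock()` has coarse resolution (on Windows `CLOCKS_PER_SEC` is 1000, i.e. 1 ms ticks), and on Linux it measures the process's CPU time rather than elapsed time. The measurement only covers the kernel at all because of `cudaDeviceSynchronize()`: kernel launches are asynchronous, so without it `end` would be read before the kernel finishes.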

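With the CUDA built-in event API (`cudaEvent_t`), the events are recorded on the GPU's own timeline and `cudaEventElapsedTime` returns the time between them in milliseconds (the CUDA documentation gives a resolution of around 0.5 microseconds). The main code is: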

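```cpp
// GPU timing with CUDA events
cudaEvent_t startTime, endTime;
cudaEventCreate(&startTime);
cudaEventCreate(&endTime);
cudaEventRecord(startTime, 0);                        // record the start event in the default stream (0)
d_SharedMemoryTest<<<Blocks, ThreadX>>>(S_Para, MX);  // launch the kernel (Blocks blocks of ThreadX threads each)
cudaEventRecord(endTime, 0);                          // record the end event after the kernel, in the same stream
cudaEventSynchronize(endTime);                        // block the host until endTime (and therefore the kernel) has completed
float time;
cudaEventElapsedTime(&time, startTime, endTime);      // elapsed GPU time in milliseconds
printf(" time (GPU) : %f ms \n", time);
cudaEventDestroy(startTime);
cudaEventDestroy(endTime);
```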

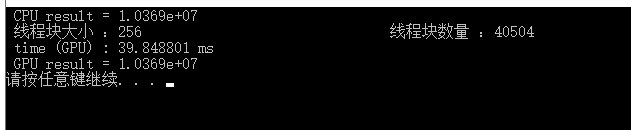

The result is 39.848801 ms.
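A single event measurement can be noisy, and the first launch may also pay one-time costs; since the arrays here come from `cudaMallocManaged` and are filled on the host, the interval may include migrating that data to the GPU. A common practice is to do one or more untimed warm-up runs and then average several timed runs. The sketch below is plain host C++ and assumes a callable `timeOnce` that performs one run and returns its elapsed time in milliseconds; the function name `averageMs` is hypothetical.

```cpp
#include <cassert>

// Hypothetical helper: performs `warmup` untimed runs, then returns the mean
// of `runs` timed runs. timeOnce() must do one run and return its time in ms.
template <typename TimeFn>
double averageMs(TimeFn timeOnce, int warmup, int runs) {
    assert(warmup >= 0 && runs > 0);
    for (int i = 0; i < warmup; ++i) {
        timeOnce();  // result discarded: warm-up only
    }
    double total = 0.0;
    for (int i = 0; i < runs; ++i) {
        total += timeOnce();
    }
    return total / runs;
}
```

With the event code above, `timeOnce` could be a lambda that records `startTime`, launches `d_SharedMemoryTest`, records `endTime`, calls `cudaEventSynchronize(endTime)` and returns the value from `cudaEventElapsedTime`. Keep in mind that `d_SharedMemoryTest` overwrites the first element of each block's slice of `S_Para`, so refill `S_Para` before computing the final GPU sum.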

Finally, here is the complete code:

```cpp
#include "cuda_runtime.h"
#include "device_launch_parameters.h"
#include <cstdio>
#include <cstdlib>
#include <ctime>
#include <iostream>
using namespace std;

const int NX = 10369000;  // array length
const int ThreadX = 256;  // threads per block (must be a power of 4 for the reduction below)

// Reduction using shared memory and multiple thread blocks
__global__ void d_SharedMemoryTest(double *para, int MX)
{
    int i = threadIdx.x;                              // thread index within this block
    int tid = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index into the array
    __shared__ double s_Para[ThreadX];                // fixed-size shared array (one element per thread)

    // Copy this block's slice of global memory into shared memory. Threads past the
    // end contribute 0, so every thread still reaches the __syncthreads() calls below.
    s_Para[i] = (tid < MX) ? para[tid] : 0.0;
    __syncthreads();  // wait until all threads have loaded their element
                      // (VS IntelliSense may underline this in red; it still compiles and runs)

    // 4-way tree reduction: 256 = 4*4*4*4, so 4 steps
    for (int index = 1; index < blockDim.x; index *= 4)
    {
        __syncthreads();  // make sure the previous step has finished
        if (i % (4 * index) == 0)
        {
            s_Para[i] += s_Para[i + index] + s_Para[i + 2 * index] + s_Para[i + 3 * index];
        }
    }

    // The block's sum is now in s_Para[0]; thread 0 writes it to the first element
    // of this block's slice of global memory: para[blockIdx.x * blockDim.x]
    if (i == 0)
        para[blockIdx.x * blockDim.x] = s_Para[0];
}

// Reduction using shared memory and multiple thread blocks
void s_ParallelTest()
{
    double *Para;
    cudaMallocManaged((void **)&Para, sizeof(double) * NX);  // unified memory: usable from both CPU and GPU

    double ParaSum = 0;
    for (int i = 0; i < NX; i++)
    {
        Para[i] = 1;          // fill the array
        ParaSum += Para[i];   // CPU-side sum
    }
    cout << " CPU result = " << ParaSum << endl;  // CPU result

    double d_ParaSum = 0;                         // must be initialized before accumulating
    int Blocks = (NX + ThreadX - 1) / ThreadX;    // round up so every element is covered
    cout << " Block size: " << ThreadX << "  Number of blocks: " << Blocks << endl;

    double *S_Para;
    int MX = ThreadX * Blocks;                    // length padded to a multiple of the block size
    cudaMallocManaged(&S_Para, sizeof(double) * MX);
    for (int i = 0; i < MX; i++)
    {
        S_Para[i] = (i < NX) ? Para[i] : 0.0;     // zero the padding so it does not change the sum
    }

    //// CPU timing (inaccurate, kept for comparison)
    //clock_t start, end;
    //start = clock();
    //d_SharedMemoryTest<<<Blocks, ThreadX>>>(S_Para, MX);  // launch Blocks blocks of ThreadX threads
    //cudaDeviceSynchronize();
    //end = clock();
    //printf(" time (GPU) : %f ms \n", (double)(end - start) * 1000.0 / CLOCKS_PER_SEC);

    // GPU timing with CUDA events
    cudaEvent_t startTime, endTime;
    cudaEventCreate(&startTime);
    cudaEventCreate(&endTime);
    cudaEventRecord(startTime, 0);                        // record in the default stream
    d_SharedMemoryTest<<<Blocks, ThreadX>>>(S_Para, MX);  // launch Blocks blocks of ThreadX threads
    cudaEventRecord(endTime, 0);
    cudaEventSynchronize(endTime);                        // wait until the kernel has completed
    float time;
    cudaEventElapsedTime(&time, startTime, endTime);      // elapsed time in ms
    printf(" time (GPU) : %f ms \n", time);
    cudaEventDestroy(startTime);
    cudaEventDestroy(endTime);

    for (int i = 0; i < Blocks; i++)
    {
        d_ParaSum += S_Para[i * ThreadX];  // add up the per-block sums stored in global memory
    }
    cout << " GPU result = " << d_ParaSum << endl;  // GPU result

    cudaFree(Para);
    cudaFree(S_Para);
}

int main()
{
    s_ParallelTest();
    system("pause");  // Windows-only: keep the console window open
    return 0;
}
```
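To check the indexing of the kernel's 4-way tree reduction without a GPU, here is a minimal host-side C++ model of what one thread block of `d_SharedMemoryTest` does to its shared-memory array. It is an illustrative sketch, not CUDA code: the inner loop over `i` stands in for the block's threads, and each pass of the outer loop corresponds to one `__syncthreads()`-separated step. `reduceBlock4` and `ceilDiv` are hypothetical names. The model also shows why the block size must be a power of 4 (256 = 4⁴): with 128 threads, the last step would read `s[128]` and `s[192]`, beyond the block's data. With `NX = 10369000` and 256 threads per block, the grid has 40504 blocks and the last block holds 232 real elements plus 24 padding slots, which is why the padding must be zero.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: blocks needed to cover n elements, rounded up
// (same as (NX + ThreadX - 1) / ThreadX in the code above).
long long ceilDiv(long long n, long long blockSize) {
    return (n + blockSize - 1) / blockSize;
}

// Host model of one block: s holds the block's shared-memory values;
// returns s[0] after the reduction, i.e. the sum of all values.
double reduceBlock4(std::vector<double> s) {
    const int n = static_cast<int>(s.size());
    int p = 1;
    while (p < n) p *= 4;
    assert(n > 0 && p == n);  // block size must be 1, 4, 16, 64, 256, ...
    for (int index = 1; index < n; index *= 4) {
        // One step: each "thread" i that is a multiple of 4*index adds its three
        // partners. The partners are never written in the same step, so running
        // the "threads" in sequence gives the same result as running them in parallel.
        for (int i = 0; i < n; ++i) {
            if (i % (4 * index) == 0) {
                s[i] += s[i + index] + s[i + 2 * index] + s[i + 3 * index];
            }
        }
    }
    return s[0];
}
```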