Slurm and OpenLDAP Deployment


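Overview

Slurm is an open-source, fault-tolerant and highly scalable cluster management and job scheduling system for both large and small Linux clusters.

Slurm provides three key functions:

- Allocating exclusive and/or non-exclusive access to resources

- Providing a framework for starting, executing and monitoring jobs on the set of allocated nodes

- Arbitrating contention for resources by managing a queue of pending jobs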

1. Build the Topology

- Set up 4 Linux servers

- Configure IP addresses and hostnames

```
[root@localhost ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens33
# Change these NIC parameters; add any that are missing
IPADDR=192.168.100.100
NETMASK=255.255.255.0
GATEWAY=192.168.100.2
DNS1=114.114.114.114
ONBOOT=yes
BOOTPROTO=static
[root@localhost ~]# systemctl restart network
[root@localhost ~]# hostnamectl set-hostname slurm
[root@localhost ~]# bash
[root@slurm ~]#
#-------------------Node01----------------
[root@localhost ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens33
# Change these NIC parameters; add any that are missing
IPADDR=192.168.100.101
NETMASK=255.255.255.0
GATEWAY=192.168.100.2
DNS1=114.114.114.114
ONBOOT=yes
BOOTPROTO=static
[root@localhost ~]# systemctl restart network
[root@localhost ~]# hostnamectl set-hostname node01
[root@localhost ~]# bash
[root@node01 ~]#
#-------------------Node02----------------
[root@localhost ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens33
# Change these NIC parameters; add any that are missing
IPADDR=192.168.100.102
NETMASK=255.255.255.0
GATEWAY=192.168.100.2
DNS1=114.114.114.114
ONBOOT=yes
BOOTPROTO=static
[root@localhost ~]# systemctl restart network
[root@localhost ~]# hostnamectl set-hostname node02
[root@localhost ~]# bash
[root@node02 ~]#
#-------------------Node03----------------
[root@localhost ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens33
# Change these NIC parameters; add any that are missing
IPADDR=192.168.100.103
NETMASK=255.255.255.0
GATEWAY=192.168.100.2
DNS1=114.114.114.114
ONBOOT=yes
BOOTPROTO=static
[root@localhost ~]# systemctl restart network
[root@localhost ~]# hostnamectl set-hostname node03
[root@localhost ~]# bash
[root@node03 ~]#
```
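The four ifcfg edits differ only in IPADDR, so they can be scripted. The sketch below is one possible approach, assuming bash and GNU sed; set_kv and configure_ifcfg are hypothetical helper names, not part of any tool. Like the manual instruction, it changes a key if it already exists and appends it otherwise, so lines such as DEVICE or UUID are left alone.

```shell
#!/usr/bin/env bash
# set_kv FILE KEY VALUE (hypothetical helper):
# replace an existing KEY=... line, or append KEY=VALUE if the key is absent.
set_kv() {
    local file="$1" key="$2" value="$3"
    if grep -q "^${key}=" "$file"; then
        sed -i "s|^${key}=.*|${key}=${value}|" "$file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# configure_ifcfg FILE IP (hypothetical helper):
# apply the static settings used in this article to one ifcfg file.
configure_ifcfg() {
    local file="$1" ip="$2"
    set_kv "$file" BOOTPROTO static
    set_kv "$file" ONBOOT yes
    set_kv "$file" IPADDR "$ip"
    set_kv "$file" NETMASK 255.255.255.0
    set_kv "$file" GATEWAY 192.168.100.2
    set_kv "$file" DNS1 114.114.114.114
}
```

On node01, for example, this would be `configure_ifcfg /etc/sysconfig/network-scripts/ifcfg-ens33 192.168.100.101` followed by `systemctl restart network`.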

- Edit the hosts file (identical on all 4 servers)

```
[root@slurm ~]# vi /etc/hosts
# Add:
192.168.100.100 slurm
192.168.100.101 node01
192.168.100.102 node02
192.168.100.103 node03
# Make the same change on node01-03
```
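Because the same four entries must be present on every machine, an idempotent append avoids duplicate lines when the step is repeated. This is a minimal bash sketch; CLUSTER_HOSTS and add_cluster_hosts are hypothetical names, and the file path is a parameter so the helper can be tried on a scratch copy first.

```shell
#!/usr/bin/env bash
# Hostname/IP pairs taken from the table above.
CLUSTER_HOSTS=(
    "192.168.100.100 slurm"
    "192.168.100.101 node01"
    "192.168.100.102 node02"
    "192.168.100.103 node03"
)

# add_cluster_hosts FILE (hypothetical helper):
# append each "IP hostname" pair unless the hostname is already mapped in FILE.
add_cluster_hosts() {
    local file="$1" entry name
    for entry in "${CLUSTER_HOSTS[@]}"; do
        name="${entry##* }"   # hostname = last word of the entry
        # Match the hostname as a whole word preceded by whitespace
        if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
            echo "$entry" >> "$file"
        fi
    done
}
```

Running `add_cluster_hosts /etc/hosts` on each of the four servers should leave them with identical cluster entries, no matter how often it is run.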

2. Install the Slurm Application

- Upload slurm19-EL7.5GUI-installer-1210.tar.gz to the /root directory on all 4 servers

Note: the installation must be done on all 4 machines.

```
# Any transfer method works; for example, scp from a Windows client:
E:\cmp>scp slurm19-EL7.5GUI-installer-1210.tar.gz root@192.168.100.100:/root
E:\cmp>scp slurm19-EL7.5GUI-installer-1210.tar.gz root@192.168.100.101:/root
E:\cmp>scp slurm19-EL7.5GUI-installer-1210.tar.gz root@192.168.100.102:/root
E:\cmp>scp slurm19-EL7.5GUI-installer-1210.tar.gz root@192.168.100.103:/root
```

- Extract and run the installer, then stop and disable the firewall

```
[root@slurm ~]# yum -y install 'librrd*' 'libhwloc*'
[root@slurm ~]# tar zxvf slurm19-EL7.5GUI-installer-1210.tar.gz
[root@slurm ~]# cd slurm19-EL7.5GUI-installer
[root@slurm slurm19-EL7.5GUI-installer]# ./install_slurm.sh
[root@slurm ~]# systemctl stop firewalld.service
[root@slurm ~]# systemctl disable firewalld.service
```

3. Configure NTP Time Synchronization

- Edit the configuration so that the Slurm scheduler node acts as the local time server

```
[root@slurm etc]# yum -y install ntp
[root@slurm etc]# vi /etc/ntp.conf
#server 0.centos.pool.ntp.org iburst
#server 1.centos.pool.ntp.org iburst
#server 2.centos.pool.ntp.org iburst
#server 3.centos.pool.ntp.org iburst
server 127.127.1.0
fudge 127.127.1.0 stratum 10
[root@slurm etc]# systemctl restart ntpd
```
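The ntp.conf edit above can also be done non-interactively. This is a sketch assuming GNU sed and the stock CentOS 7 ntp.conf; make_local_ntp_server is a hypothetical name. It comments out the four pool servers and adds the local-clock driver (127.127.1.0) at stratum 10, appending each line only if it is missing.

```shell
#!/usr/bin/env bash
# make_local_ntp_server FILE (hypothetical helper):
# disable the CentOS pool servers and serve the local clock at stratum 10.
make_local_ntp_server() {
    local file="$1"
    # Prefix "server N.centos.pool.ntp.org ..." lines with '#'
    sed -i 's/^\(server [0-3]\.centos\.pool\.ntp\.org\)/#\1/' "$file"
    # Add the local clock driver lines only once
    grep -q '^server 127\.127\.1\.0' "$file" || echo 'server 127.127.1.0' >> "$file"
    grep -q '^fudge 127\.127\.1\.0' "$file" || echo 'fudge 127.127.1.0 stratum 10' >> "$file"
}
```

After `make_local_ntp_server /etc/ntp.conf`, ntpd still has to be restarted as shown above.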

- Configure NTP on the 3 Slurm compute nodes (install the ntp package on each node first, as on the master)

```
[root@node01 etc]# vi /etc/ntp.conf
#server 0.centos.pool.ntp.org iburst
#server 1.centos.pool.ntp.org iburst
#server 2.centos.pool.ntp.org iburst
#server 3.centos.pool.ntp.org iburst
server slurm iburst
[root@node01 etc]# ntpdate -u slurm
 3 Jun 14:34:21 ntpdate[1944]: adjust time server 192.168.100.100 offset -0.034998 sec
[root@node01 etc]# systemctl restart ntpd
[root@node01 etc]# ntpq -p
     remote           refid      st t when poll reach   delay   offset  jitter
==============================================================================
*slurm           LOCAL(0)        11 u    2   64    1    0.237  -31.197   3.549
[root@node01 etc]# ntpstat          # output like the following means success
synchronised to NTP server (192.168.100.100) at stratum 12
   time correct to within 983 ms
   polling server every 64 s
[root@node01 etc]#
```
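In the ntpq -p output, the peer marked with `*` is the one the node is currently synchronised to. A small filter can check this on each compute node; ntp_selected_peer is a hypothetical name, and the sketch assumes the standard ntpq -p layout with the tally character glued to the remote name.

```shell
#!/usr/bin/env bash
# ntp_selected_peer (hypothetical helper): read `ntpq -p` output on stdin,
# print the peer marked '*' (current sync source), and fail if there is none.
ntp_selected_peer() {
    awk 'substr($1, 1, 1) == "*" { print substr($1, 2); found = 1 }
         END { exit !found }'
}
```

For example, `ntpq -p | ntp_selected_peer` on node01 should print `slurm` once synchronisation has completed.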

4. Edit the Slurm Configuration File

- All nodes in the Slurm cluster use the same configuration file

```
[root@slurm ~]# vi slurm19-EL7.5GUI-installer/slurm-config/slurm.conf
# Change every occurrence of ctl01 to slurm (lines 12, 34 and 159)
#
# Also edit the NODES and PARTITIONS sections at the end of the file:
################################################
#                    NODES                     #
################################################
NodeName=node[01-03] CPUs=1 Boards=1 SocketsPerBoard=1 CoresPerSocket=1 ThreadsPerCore=1 RealMemory=972
#
################################################
#                  PARTITIONS                  #
################################################
PartitionName=computerPartiton Default=YES MinNodes=0 Nodes=node[01-03] State=UP
#
# NodeName takes the form hostname[range]; get the remaining values by running slurmd -C on a compute node:
[root@node01 slurm-config]# slurmd -C
NodeName=node01 CPUs=1 Boards=1 SocketsPerBoard=1 CoresPerSocket=1 ThreadsPerCore=1 RealMemory=972
```
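Since slurmd -C already prints a line in slurm.conf syntax, the NODES entry can be derived from it by swapping the single hostname for a host range. This sketch assumes all compute nodes have identical hardware, so one node's values apply to the whole range; nodes_line is a hypothetical name.

```shell
#!/usr/bin/env bash
# nodes_line RANGE (hypothetical helper): read `slurmd -C` output on stdin and
# print its NodeName line with the hostname replaced by RANGE, e.g. node[01-03].
# Assumes every node in RANGE has the same CPUs/RealMemory as the node queried.
nodes_line() {
    local range="$1"
    awk -v r="$range" '/^NodeName=/ { sub(/^NodeName=[^ ]+/, "NodeName=" r); print; exit }'
}
```

For example, `slurmd -C | nodes_line 'node[01-03]'` on node01 prints the NodeName line used above; the PartitionName line must then list the same range in Nodes=.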

- Copy slurm.conf to every node, including the master node

```
[root@slurm ~]# cp /root/slurm19-EL7.5GUI-installer/slurm-config/slurm.conf /etc/slurm/
[root@slurm ~]# scp /root/slurm19-EL7.5GUI-installer/slurm-config/slurm.conf root@192.168.100.101:/etc/slurm/
[root@slurm ~]# scp /root/slurm19-EL7.5GUI-installer/slurm-config/slurm.conf root@192.168.100.102:/etc/slurm/
[root@slurm ~]# scp /root/slurm19-EL7.5GUI-installer/slurm-config/slurm.conf root@192.168.100.103:/etc/slurm/
```
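Because every node must run with an identical slurm.conf, it is worth confirming the copies match after distribution. This is a minimal sketch using sha256sum from coreutils; all_identical is a hypothetical name.

```shell
#!/usr/bin/env bash
# all_identical FILE... (hypothetical helper): succeed only if every file has
# the same SHA-256 digest; return 1 on a mismatch, 2 if a file cannot be read.
all_identical() {
    local first="" sum f
    for f in "$@"; do
        sum=$(sha256sum < "$f") || return 2
        if [ -z "$first" ]; then
            first="$sum"
        elif [ "$sum" != "$first" ]; then
            echo "differs: $f" >&2
            return 1
        fi
    done
}
```

One way to use it on the master: copy each node's file back with `scp root@node01:/etc/slurm/slurm.conf /tmp/slurm.conf.node01` (and likewise for node02 and node03), then run `all_identical /etc/slurm/slurm.conf /tmp/slurm.conf.node0*`.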

- Configure the master (control) node

```
[root@slurm slurm19-EL7.5GUI-installer]# ./slurm_init_ctld.sh
# Installs and initializes the database automatically
# user = root
# passwd = 123456a?
[root@slurm slurm19-EL7.5GUI-installer]# cd
[root@slurm ~]# systemctl restart slurmdbd
[root@slurm ~]# systemctl status slurmdbd
● slurmdbd.service - Slurm DBD accounting daemon
   Loaded: loaded (/usr/lib/systemd/system/slurmdbd.service; enabled; vendor preset: disabled)
   Active: active (running) since Thu 2021-06-03 17:00:20 CST; 6s ago
  Process: 13388 ExecStart=/usr/sbin/slurmdbd $SLURMDBD_OPTIONS (code=exited, status=0/SUCCESS)
   CGroup: /system.slice/slurmdbd.service
           └─13391 /usr/sbin/slurmdbd
[root@slurm ~]# systemctl enable slurmctld.service
[root@slurm ~]# systemctl start slurmctld.service
```

- Configure the 3 compute nodes

```
[root@node01 slurm19-EL7.5GUI-installer]# ./cmp_slurm_init.sh
Slurm computer node installed, configuration successfully!.
[root@node01 slurm19-EL7.5GUI-installer]# systemctl restart slurmd.service
[root@node01 slurm19-EL7.5GUI-installer]# systemctl enable slurmd.service
# Repeat on node02 and node03
```

5. Run Jobs Through Slurm

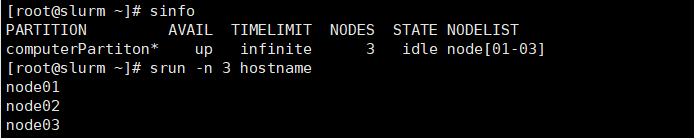

```
[root@slurm ~]# sinfo
PARTITION         AVAIL  TIMELIMIT  NODES  STATE NODELIST
computerPartiton*    up   infinite      3   idle node[01-03]
[root@slurm ~]# srun -n 3 hostname
node01
node02
node03
```
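Before submitting work, the sinfo check above can be automated: every partition row should be in the idle state. This sketch parses the default sinfo column layout shown above (STATE is the fifth column); all_nodes_idle is a hypothetical name. States such as idle* (node not responding) or down are treated as failures.

```shell
#!/usr/bin/env bash
# all_nodes_idle (hypothetical helper): read default `sinfo` output on stdin
# and succeed only if at least one row exists and every row's STATE is "idle".
all_nodes_idle() {
    awk 'NR > 1 { rows++; if ($5 != "idle") bad++ }
         END { exit (rows == 0 || bad > 0) }'
}
```

For example: `sinfo | all_nodes_idle && srun -n 3 hostname`.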

6. Deploy OpenLDAP

- Preparation before installation

```
# Disable SELinux and stop NetworkManager (firewalld was already disabled in section 2)
[root@slurm ~]# vi /etc/sysconfig/selinux
# This file controls the state of SELinux on the system.
# SELINUX= can take one of these three values:
#     enforcing - SELinux security policy is enforced.
#     permissive - SELinux prints warnings instead of enforcing.
#     disabled - No SELinux policy is loaded.
SELINUX=disabled
# SELINUXTYPE= can take one of three values:
#     targeted - Targeted processes are protected,
#     minimum - Modification of targeted policy. Only selected processes are protected.
#     mls - Multi Level Security protection.
SELINUXTYPE=targeted

[root@slurm ~]# systemctl stop NetworkManager
[root@slurm ~]# systemctl disable NetworkManager
Removed symlink /etc/systemd/system/multi-user.target.wants/NetworkManager.service.
Removed symlink /etc/systemd/system/dbus-org.freedesktop.nm-dispatcher.service.
Removed symlink /etc/systemd/system/network-online.target.wants/NetworkManager-wait-online.service.
[root@slurm ~]#
```
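The SELinux edit can also be scripted. One detail matters here: on CentOS 7, /etc/sysconfig/selinux is a symlink to /etc/selinux/config, and a plain `sed -i` would replace the symlink with a regular file, so the sketch passes GNU sed's --follow-symlinks. disable_selinux_config is a hypothetical name. The file change only takes effect after a reboot; `setenforce 0` switches the running system to permissive mode in the meantime.

```shell
#!/usr/bin/env bash
# disable_selinux_config FILE (hypothetical helper): set SELINUX=disabled,
# editing the symlink target rather than replacing the symlink (GNU sed).
disable_selinux_config() {
    sed -i --follow-symlinks 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}
```

Typical use: `disable_selinux_config /etc/sysconfig/selinux; setenforce 0`, then reboot when convenient.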

- Install the OpenLDAP server (on the Slurm master node)

```
[root@slurm ~]# yum -y install openldap openldap-servers openldap-clients migrationtools
[root@slurm ~]# sed -i -e 's/olcSuffix:.*/olcSuffix: dc=xiaowangc,dc=com/g' /etc/openldap/slapd.d/cn\=config/olcDatabase\=\{2\}hdb.ldif
[root@slurm ~]# sed -i -e 's/olcRootDN:.*/olcRootDN: cn=admin,dc=xiaowangc,dc=com/g' /etc/openldap/slapd.d/cn\=config/olcDatabase\=\{2\}hdb.ldif
[root@slurm ~]# echo 'olcRootPw: 123456a?' >> /etc/openldap/slapd.d/cn\=config/olcDatabase\=\{2\}hdb.ldif
[root@slurm ~]# sed -i -e 's/dn.base="cn=.*"/dn.base="cn=admin,dc=xiaowangc,dc=com"/g' /etc/openldap/slapd.d/cn\=config/olcDatabase\=\{1\}monitor.ldif
[root@slurm ~]# cp /usr/share/openldap-servers/DB_CONFIG.example /var/lib/ldap/DB_CONFIG
[root@slurm ~]# chown -R ldap:ldap /var/lib/ldap
# The super administrator (root DN) is cn=admin,dc=xiaowangc,dc=com with password 123456a?
[root@slurm ~]# systemctl restart slapd
[root@slurm ~]# systemctl enable slapd
Created symlink from /etc/systemd/system/multi-user.target.wants/slapd.service to /usr/lib/systemd/system/slapd.service.
[root@slurm ~]# cd /etc/openldap/schema/
[root@slurm schema]# find . -name '*.ldif' -exec ldapadd -Y EXTERNAL -H ldapi:/// -D "cn=config" -f {} \;
[root@slurm schema]# cd /usr/share/migrationtools/
[root@slurm migrationtools]# sed -i -e 's/"ou=Group"/"ou=Groups"/g' migrate_common.ph
[root@slurm migrationtools]# sed -i -e 's/$DEFAULT_MAIL_DOMAIN = .*/$DEFAULT_MAIL_DOMAIN = "hpcce.com";/g' migrate_common.ph
[root@slurm migrationtools]# sed -i -e 's/$DEFAULT_BASE = .*/$DEFAULT_BASE = "dc=xiaowangc,dc=com";/g' migrate_common.ph
[root@slurm migrationtools]# sed -i -e 's/$EXTENDED_SCHEMA = 0;/$EXTENDED_SCHEMA = 1;/g' migrate_common.ph
[root@slurm migrationtools]# ./migrate_base.pl > /root/base.ldif
[root@slurm migrationtools]# ldapadd -x -w '123456a?' -D "cn=admin,dc=xiaowangc,dc=com" -f /root/base.ldif
```
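The test in the last section uses an LDAP account named testuser01 that was created with LDAP Admin. As an alternative, a posixAccount entry can be generated as LDIF and loaded with ldapadd. This sketch assumes migrate_base.pl created ou=People under dc=xiaowangc,dc=com (its default layout); user_ldif is a hypothetical name, and the objectClass set (account, posixAccount, shadowAccount) is one common choice, not the only valid one. No password is set here.

```shell
#!/usr/bin/env bash
# user_ldif UID UIDNUMBER GIDNUMBER (hypothetical helper): print an LDIF entry
# for a POSIX user under ou=People,dc=xiaowangc,dc=com (assumed to exist).
user_ldif() {
    local uid="$1" uidnum="$2" gidnum="$3"
    cat <<EOF
dn: uid=${uid},ou=People,dc=xiaowangc,dc=com
objectClass: top
objectClass: account
objectClass: posixAccount
objectClass: shadowAccount
uid: ${uid}
cn: ${uid}
uidNumber: ${uidnum}
gidNumber: ${gidnum}
homeDirectory: /home/${uid}
loginShell: /bin/bash
EOF
}
```

For example, `user_ldif testuser01 44317 0 | ldapadd -x -D "cn=admin,dc=xiaowangc,dc=com" -w '123456a?'` adds the entry (ldapadd reads LDIF from stdin when -f is omitted), and `ldappasswd -x -D "cn=admin,dc=xiaowangc,dc=com" -w '123456a?' -s NEWPASS "uid=testuser01,ou=People,dc=xiaowangc,dc=com"` sets its password.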

- Deploy the OpenLDAP client (on the 3 compute nodes)

```
[root@node01 ~]# yum -y install nss-pam-ldapd
[root@node01 ~]# authconfig --enableldap --enableldapauth --ldapserver="ldap://192.168.100.100:389" --ldapbasedn="dc=xiaowangc,dc=com" --update
[root@node01 ~]# authconfig --enablemkhomedir --update
[root@node01 ~]# authconfig --updateall
```

Make sure the following three files contain the settings below (the lines marked "# change this"):

```
[root@node01 ~]# vi /etc/nsswitch.conf
# looked up first in the databases
#
# Example:
#passwd:    db files nisplus nis
#shadow:    db files nisplus nis
#group:     db files nisplus nis

passwd:     files sss ldap   # change this
shadow:     files sss ldap   # change this
group:      files sss ldap   # change this
#initgroups: files sss

#hosts:     db files nisplus nis dns
hosts:      files dns myhostname

# Example - obey only what nisplus tells us...
#services:   nisplus [NOTFOUND=return] files
#networks:   nisplus [NOTFOUND=return] files
#protocols:  nisplus [NOTFOUND=return] files
#rpc:        nisplus [NOTFOUND=return] files
#ethers:     nisplus [NOTFOUND=return] files
#netmasks:   nisplus [NOTFOUND=return] files

bootparams: nisplus [NOTFOUND=return] files

ethers:     files
netmasks:   files
networks:   files
protocols:  files
rpc:        files
services:   files sss

netgroup:   files sss ldap   # change this

publickey:  nisplus

automount:  files ldap   # change this
aliases:    files nisplus
```

```
[root@node01 ~]# vi /etc/pam.d/system-auth
#%PAM-1.0
# This file is auto-generated.
# User changes will be destroyed the next time authconfig is run.
auth        required      pam_env.so
auth        required      pam_faildelay.so delay=2000000
auth        sufficient    pam_unix.so nullok try_first_pass
auth        requisite     pam_succeed_if.so uid >= 1000 quiet_success
auth        sufficient    pam_ldap.so use_first_pass   # change this
auth        required      pam_deny.so

account     required      pam_unix.so broken_shadow
account     sufficient    pam_localuser.so
account     sufficient    pam_succeed_if.so uid < 1000 quiet
account     [default=bad success=ok user_unknown=ignore] pam_ldap.so   # change this
account     required      pam_permit.so

password    requisite     pam_pwquality.so try_first_pass local_users_only retry=3 authtok_type=
password    sufficient    pam_unix.so sha512 shadow nullok try_first_pass use_authtok
password    sufficient    pam_ldap.so use_authtok   # change this
password    required      pam_deny.so

session     optional      pam_keyinit.so revoke
session     required      pam_limits.so
-session    optional      pam_systemd.so
session     optional      pam_mkhomedir.so umask=0077   # change this
session     [success=1 default=ignore] pam_succeed_if.so service in crond quiet use_uid
session     required      pam_unix.so
session     optional      pam_ldap.so   # change this
```

```
[root@node01 ~]# vi /etc/pam.d/password-auth
#%PAM-1.0
# This file is auto-generated.
# User changes will be destroyed the next time authconfig is run.
auth        required      pam_env.so
auth        required      pam_faildelay.so delay=2000000
auth        sufficient    pam_unix.so nullok try_first_pass
auth        requisite     pam_succeed_if.so uid >= 1000 quiet_success
auth        sufficient    pam_ldap.so use_first_pass   # change this
auth        required      pam_deny.so

account     required      pam_unix.so broken_shadow
account     sufficient    pam_localuser.so
account     sufficient    pam_succeed_if.so uid < 1000 quiet
account     [default=bad success=ok user_unknown=ignore] pam_ldap.so   # change this
account     required      pam_permit.so

password    requisite     pam_pwquality.so try_first_pass local_users_only retry=3 authtok_type=
password    sufficient    pam_unix.so sha512 shadow nullok try_first_pass use_authtok
password    sufficient    pam_ldap.so use_authtok   # change this
password    required      pam_deny.so

session     optional      pam_keyinit.so revoke
session     required      pam_limits.so
-session    optional      pam_systemd.so
session     optional      pam_mkhomedir.so umask=0077   # change this
session     [success=1 default=ignore] pam_succeed_if.so service in crond quiet use_uid
session     required      pam_unix.so
session     optional      pam_ldap.so   # change this
```

```
[root@node01 ~]# systemctl restart nslcd
[root@node01 ~]# systemctl restart sshd
[root@node01 ~]# systemctl enable nslcd
[root@node01 ~]#
```
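A quick way to confirm the nsswitch.conf part of the checklist on each compute node is to verify that the passwd, shadow and group databases all list the ldap source. This is a sketch; nss_uses_ldap is a hypothetical name, and it only checks nsswitch.conf, not the PAM files.

```shell
#!/usr/bin/env bash
# nss_uses_ldap FILE (hypothetical helper): succeed only if the passwd, shadow
# and group lines of an nsswitch.conf-style file include the "ldap" source.
nss_uses_ldap() {
    local file="$1" db
    for db in passwd shadow group; do
        grep -Eq "^${db}:.*[[:space:]]ldap([[:space:]]|\$)" "$file" || {
            echo "missing ldap in ${db}" >&2
            return 1
        }
    done
}
```

For example, `nss_uses_ldap /etc/nsswitch.conf && echo ok` on node01.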

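7. Test OpenLDAP

- Test with the LDAP Admin tool

- Verify on any compute node. testuser01 resolves with id but does not appear in /etc/passwd, so the account is being served by LDAP:

```
[root@node01 ~]# id testuser01
uid=44317(testuser01) gid=0(root) groups=0(root)
[root@node01 ~]# cat /etc/passwd | grep testuser01
[root@node01 ~]#
```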
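The same check can be wrapped in a function that reports where an account comes from: present in /etc/passwd, resolvable only through NSS (here, LDAP via nslcd), or not found. This is a minimal sketch; user_source is a hypothetical name, and it relies on getent from glibc.

```shell
#!/usr/bin/env bash
# user_source NAME (hypothetical helper): print "local" if NAME is in
# /etc/passwd, "nss" if it resolves only through NSS (e.g. LDAP), else "missing".
user_source() {
    local name="$1"
    if grep -q "^${name}:" /etc/passwd; then
        echo local
    elif getent passwd "$name" > /dev/null; then
        echo nss
    else
        echo missing
        return 1
    fi
}
```

On a correctly configured compute node, `user_source testuser01` should print `nss`, while `user_source root` prints `local`.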

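Some of this content comes from the Internet and is shared for learning purposes only. If anything infringes your rights or is otherwise inappropriate, please contact me and I will remove it promptly.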

Zhejiang Public Security Internet Filing No. 33010602011771
