blktrace分析IO

前言

由于在iostat输出中,只能看到service time + wait time,因为对于评估一个磁盘或者云磁盘而言,service time才是衡量磁盘性能的核心指标和直接指标。很不幸iostat无法提供这个指标,但是blktrace可以。

blktrace是一柄神器,很多工具都是基于该神器的:ioprof,seekwatcher,iowatcher,这个工具基本可以满足我们的对块设备请求的所有了解。

blktrace的原理

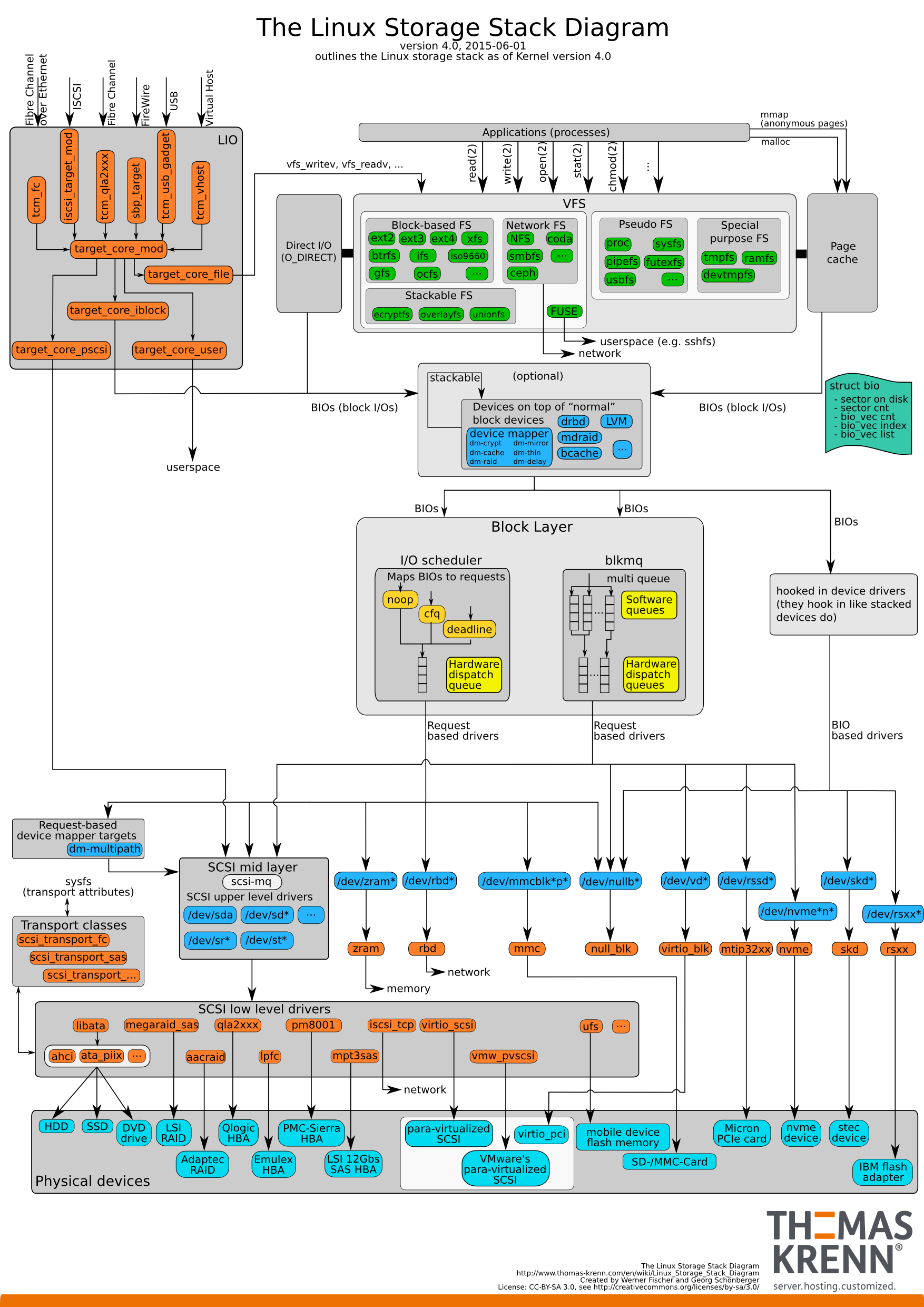

一个I/O请求,从应用层到底层块设备,路径如下图所示:

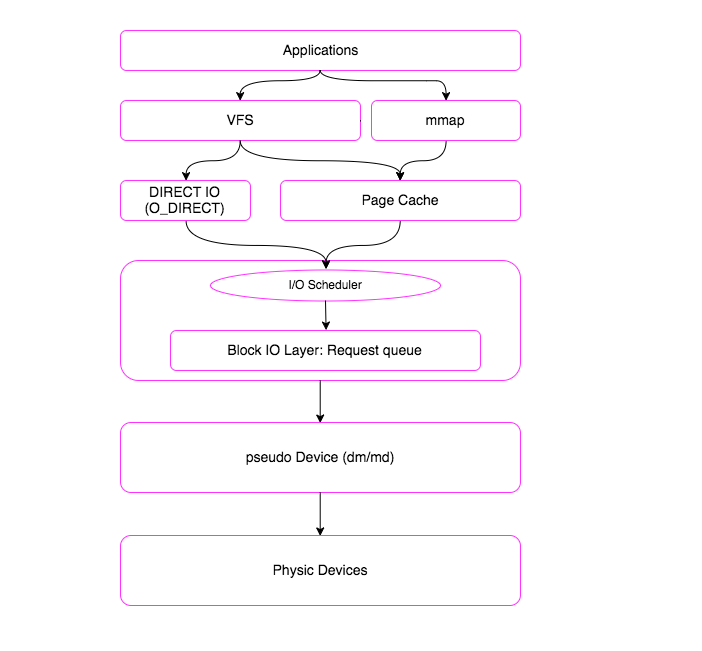

从上图可以看出IO路径是很复杂的。这么复杂的IO路径我们是无法用短短一篇小博文介绍清楚的。我们将IO路径简化一下:

一个I/O请求进入block layer之后,可能会经历下面的过程:

- Remap: 可能被DM(Device Mapper)或MD(Multiple Device, Software RAID) remap到其它设备

- Split: 可能会因为I/O请求与扇区边界未对齐、或者size太大而被分拆(split)成多个物理I/O

- Merge: 可能会因为与其它I/O请求的物理位置相邻而合并(merge)成一个I/O

- 被IO Scheduler依照调度策略发送给driver

- 被driver提交给硬件,经过HBA、电缆(光纤、网线等)、交换机(SAN或网络)、最后到达存储设备,设备完成IO请求之后再把结果发回。

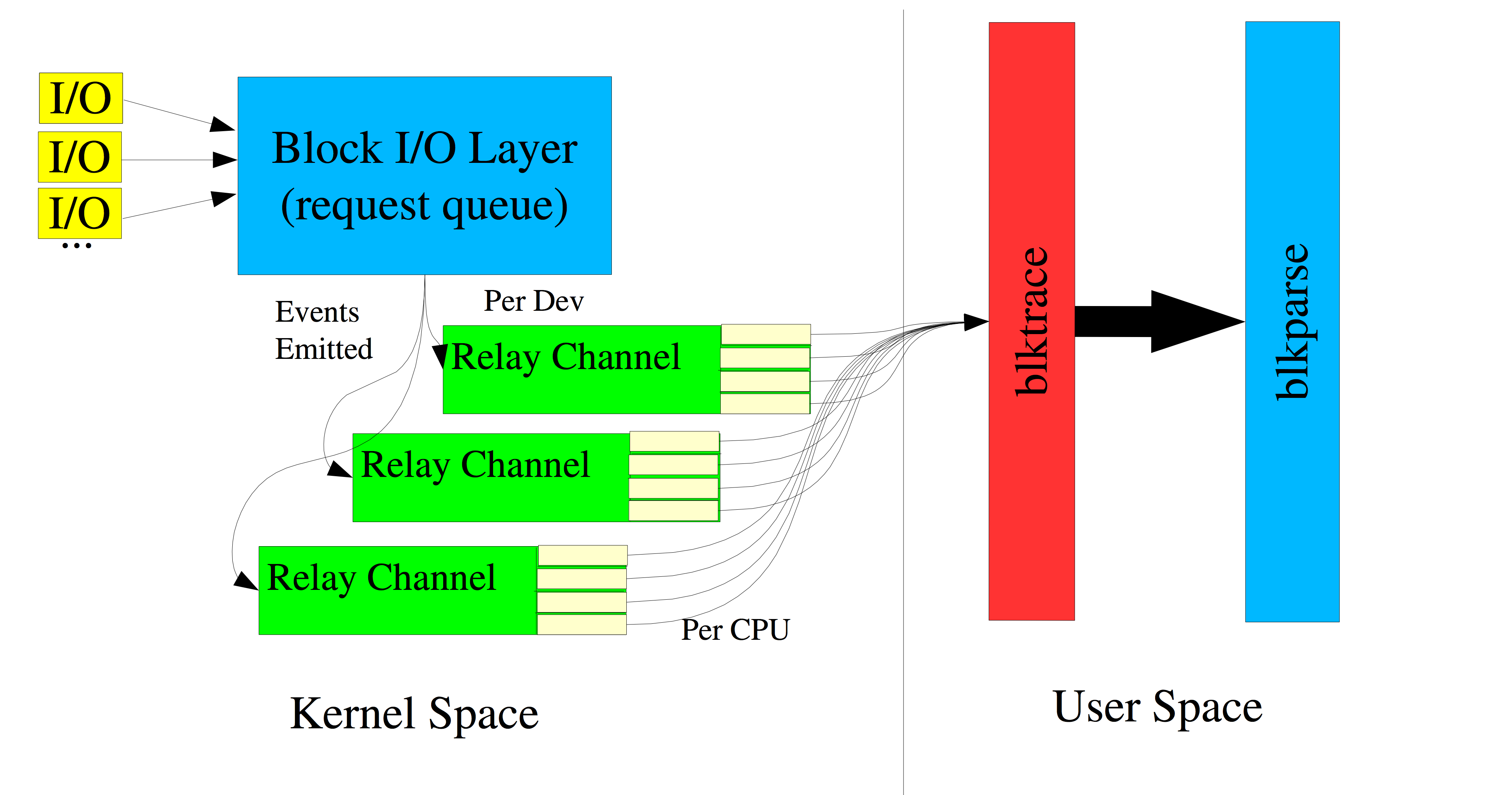

blktrace 能够记录下IO所经历的各个步骤:

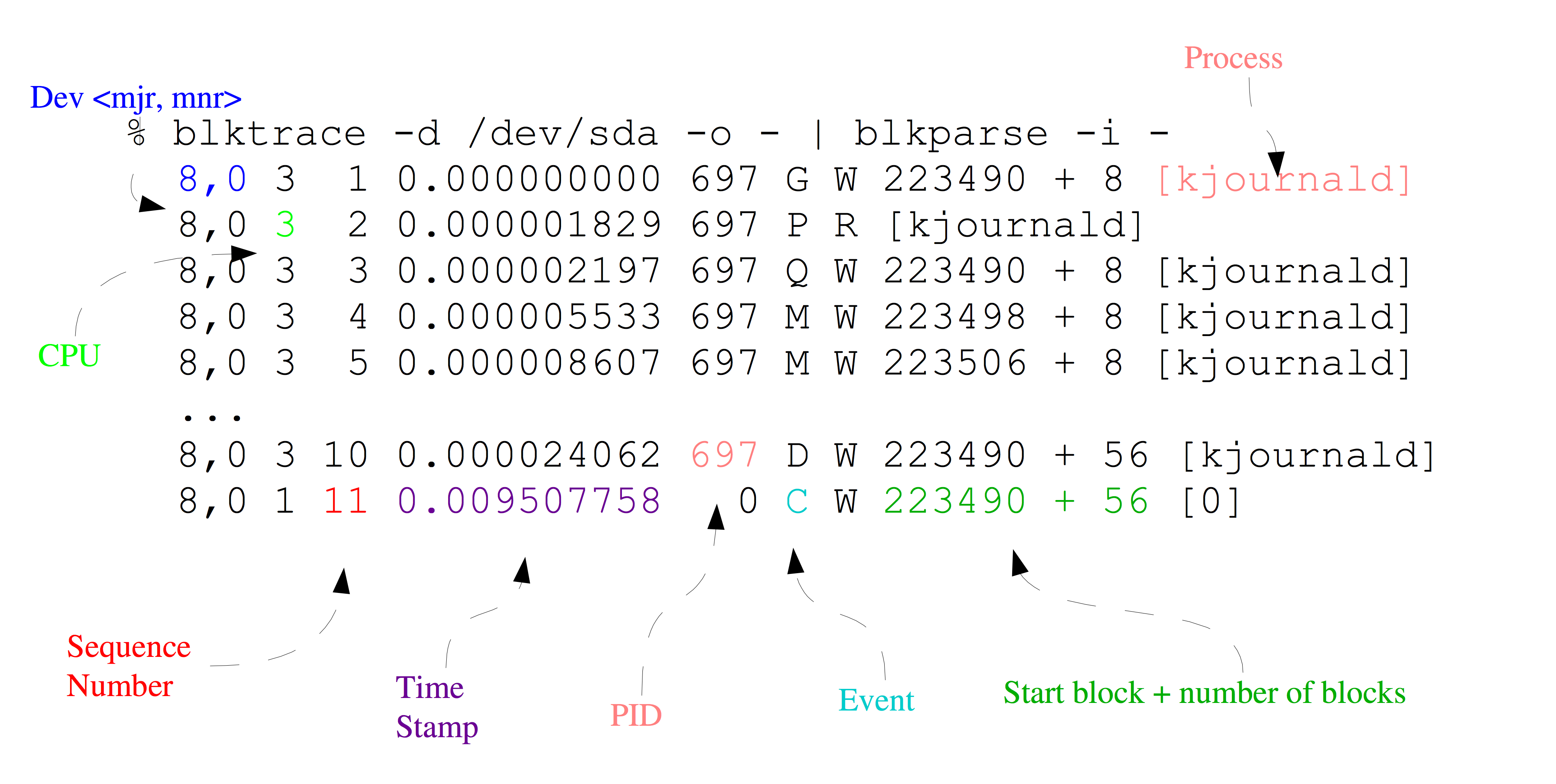

我们一起看下blktrace的输出长什么样子:

- 第一个字段:8,0 这个字段是设备号 major device ID和minor device ID。

- 第二个字段:3 表示CPU

- 第三个字段:11 序列号

- 第四个字段:0.009507758 Time Stamp是时间偏移

- 第五个字段:PID 本次IO对应的进程ID

- 第六个字段:Event,这个字段非常重要,反映了IO进行到了那一步

- 第七个字段:R表示 Read, W是Write,D表示block,B表示Barrier Operation

- 第八个字段:223490+56,表示的是起始block number 和 number of blocks,即我们常说的Offset 和 Size

- 第九个字段: 进程名

其中第六个字段非常有用:每一个字母都代表了IO请求所经历的某个阶段。

Q – 即将生成IO请求 | G – IO请求生成 | I – IO请求进入IO Scheduler队列 | D – IO请求进入driver | C – IO请求执行完毕

根据以上步骤对应的时间戳就可以计算出I/O请求在每个阶段所消耗的时间:

Q2G – 生成IO请求所消耗的时间,包括remap和split的时间;

G2I – IO请求进入IO Scheduler所消耗的时间,包括merge的时间;

I2D – IO请求在IO Scheduler中等待的时间;

D2C – IO请求在driver和硬件上所消耗的时间;

Q2C – 整个IO请求所消耗的时间(Q2I + I2D + D2C = Q2C),相当于iostat的await。

如果I/O性能慢的话,以上指标有助于进一步定位缓慢发生的地方:

D2C可以作为硬件性能的指标;

I2D可以作为IO Scheduler性能的指标。

Q2Q — time between requests sent to the block layer

Q2G — timefrom a block I/O is queued to the time it gets a request allocatedforit

G2I — time from a request is allocated to the time it is Inserted into the device's queue

Q2M — timefrom a block I/O is queued to the time it gets merged with an existing request

I2D — timefrom a request is inserted into the device's queue to the time it is actually issued to the device

M2D — time froma block I/O is merged with an exiting request until the request is issued to the device

D2C — service time of the request by the device

Q2C — total time spent in the block layerfora request

注意,整个IO路径,分成很多段,每一段开始的时候,都会有一个时间戳,根据上一段开始的时间和下一段开始的时间,就可以得到IO 路径各段花费的时间。

注意,我们心心念念的service time,也就是反应块设备处理能力的指标,就是从D到C所花费的时间,简称D2C。

而iostat输出中的await,即整个IO从生成请求到IO请求执行完毕,即从Q到C所花费的时间,我们简称Q2C。

我们知道Linux 有I/O scheduler,调度器的效率如何,I2D是重要的指标。

blktrace、blkparse和btt

我们接下来简单介绍这些工具的使用,其中这三个命令都是属于blktrace这个包的,他们是一家人。

使用blktrace需要挂载debugfs:

$ mount -t debugfs debugfs /sys/kernel/debug

首先通过如下命令,可以查看磁盘上的实时信息:

blktrace -d /dev/sdb -o – | blkparse -i –

这个命令会连绵不绝地出现很多输出,当你输入ctrl+C的时候,会停止。

当然了,你也可以先用如下命令采集信息,待所有信息采集完毕后,统一分析所有采集到的数据。搜集信息的命令如下:

blktrace -d /dev/sdb

注意,这个命令并不是只输出一个文件,他会根据CPU的个数上,每一个CPU都会输出一个文件,你也可以用-o参数指定自己的输出文件名。如下所示:

-rw-r--r-- 1 manu manu 1.3M Jul 6 19:58 sdb.blktrace.0 -rw-r--r-- 1 manu manu 823K Jul 6 19:58 sdb.blktrace.1 -rw-r--r-- 1 manu manu 2.8M Jul 6 19:58 sdb.blktrace.10 -rw-r--r-- 1 manu manu 1.9M Jul 6 19:58 sdb.blktrace.11 -rw-r--r-- 1 manu manu 474K Jul 6 19:58 sdb.blktrace.12 -rw-r--r-- 1 manu manu 271K Jul 6 19:58 sdb.blktrace.13 -rw-r--r-- 1 manu manu 578K Jul 6 19:58 sdb.blktrace.14 -rw-r--r-- 1 manu manu 375K Jul 6 19:58 sdb.blktrace.15 -rw-r--r-- 1 manu manu 382K Jul 6 19:58 sdb.blktrace.16 -rw-r--r-- 1 manu manu 478K Jul 6 19:58 sdb.blktrace.17 -rw-r--r-- 1 manu manu 839K Jul 6 19:58 sdb.blktrace.18 -rw-r--r-- 1 manu manu 848K Jul 6 19:58 sdb.blktrace.19 -rw-r--r-- 1 manu manu 1.6M Jul 6 19:58 sdb.blktrace.2 -rw-r--r-- 1 manu manu 652K Jul 6 19:58 sdb.blktrace.20 -rw-r--r-- 1 manu manu 738K Jul 6 19:58 sdb.blktrace.21 -rw-r--r-- 1 manu manu 594K Jul 6 19:58 sdb.blktrace.22 -rw-r--r-- 1 manu manu 527K Jul 6 19:58 sdb.blktrace.23 -rw-r--r-- 1 manu manu 1005K Jul 6 19:58 sdb.blktrace.3 -rw-r--r-- 1 manu manu 1.2M Jul 6 19:58 sdb.blktrace.4 -rw-r--r-- 1 manu manu 511K Jul 6 19:58 sdb.blktrace.5 -rw-r--r-- 1 manu manu 2.3M Jul 6 19:58 sdb.blktrace.6 -rw-r--r-- 1 manu manu 1.3M Jul 6 19:58 sdb.blktrace.7 -rw-r--r-- 1 manu manu 2.1M Jul 6 19:58 sdb.blktrace.8 -rw-r--r-- 1 manu manu 1.1M Jul 6 19:58 sdb.blktrace.9

有了输出,我们可以通过blkparse -i sdb来分析采集的数据:

$ blktrace -d /dev/sdb $ blkparse -i sdb # 第一个IO开始: 8,16 1 1 0.000000000 18166 Q R 0 + 1 [dd] 8,16 1 0 0.000009827 0 m N cfq18166S /user.slice alloced 8,16 1 2 0.000010451 18166 G R 0 + 1 [dd] 8,16 1 3 0.000011056 18166 P N [dd] 8,16 1 4 0.000012255 18166 I R 0 + 1 [dd] 8,16 1 0 0.000013477 0 m N cfq18166S /user.slice insert_request 8,16 1 0 0.000014526 0 m N cfq18166S /user.slice add_to_rr 8,16 1 5 0.000016643 18166 U N [dd] 1 8,16 1 0 0.000017522 0 m N cfq workload slice:300 8,16 1 0 0.000018880 0 m N cfq18166S /user.slice set_active wl_class:0 wl_type:2 8,16 1 0 0.000020594 0 m N cfq18166S /user.slice fifo= (null) 8,16 1 0 0.000021462 0 m N cfq18166S /user.slice dispatch_insert 8,16 1 0 0.000022898 0 m N cfq18166S /user.slice dispatched a request 8,16 1 0 0.000023786 0 m N cfq18166S /user.slice activate rq, drv=1 8,16 1 6 0.000023977 18166 D R 0 + 1 [dd] 8,16 0 1 0.014270153 0 C R 0 + 1 [0] # 第一个IO结束。 8,16 0 0 0.014278115 0 m N cfq18166S /user.slice complete rqnoidle 0 8,16 0 0 0.014280044 0 m N cfq18166S /user.slice set_slice=100 8,16 0 0 0.014282217 0 m N cfq18166S /user.slice arm_idle: 8 group_idle: 0 8,16 0 0 0.014282728 0 m N cfq schedule dispatch # 第二个IO开始: 8,16 1 7 0.014298247 18166 Q R 1 + 1 [dd] 8,16 1 8 0.014300522 18166 G R 1 + 1 [dd] 8,16 1 9 0.014300984 18166 P N [dd] 8,16 1 10 0.014301996 18166 I R 1 + 1 [dd] 8,16 1 0 0.014303864 0 m N cfq18166S /user.slice insert_request 8,16 1 11 0.014304981 18166 U N [dd] 1 8,16 1 0 0.014306368 0 m N cfq18166S /user.slice dispatch_insert 8,16 1 0 0.014307793 0 m N cfq18166S /user.slice dispatched a request 8,16 1 0 0.014308763 0 m N cfq18166S /user.slice activate rq, drv=1 8,16 1 12 0.014308962 18166 D R 1 + 1 [dd] 8,16 0 2 0.014518615 0 C R 1 + 1 [0] # 第二个IO结束。 8,16 0 0 0.014523548 0 m N cfq18166S /user.slice complete rqnoidle 0 8,16 0 0 0.014525334 0 m N cfq18166S /user.slice arm_idle: 8 group_idle: 0 8,16 0 0 0.014525676 0 m N cfq schedule dispatch # 第三个IO开始: 8,16 1 13 0.014531022 18166 Q R 2 + 1 [dd] ...

注意,blkparse仅仅是将blktrace输出的信息转化成人可以阅读和理解的输出,但是,信息太多,太杂,人完全没法得到关键信息。 这时候btt就横空出世了,这个工具可以将blktrace采集回来的数据,进行分析,得到对人更有用的信息。事实上,btt也是我们的终点。

注:

在以上数据中,有一些记录的event类型是”m”,那是IO Scheduler的调度信息,对研究IO Scheduler问题有意义:

- cfq18166S – cfq是IO Scheduler的名称,18166是进程号,”S”表示Sync(同步IO),如果异步IO则用“A”表示(Async);

- 它们的第三列sequence number都是0;

- 它们表示IO Scheduler内部的关键函数,上例中是cfq,代码参见block/cfq-iosched.c,以下是各关键字所对应的内部函数:

alloced <<< cfq_find_alloc_queue() insert_request <<< cfq_insert_request() add_to_rr <<< cfq_add_cfqq_rr() cfq workload slice:300 <<< choose_wl_class_and_type() set_active wl_class:0 wl_type:2 <<< __cfq_set_active_queue() fifo= (null) <<< cfq_check_fifo() dispatch_insert <<< cfq_dispatch_insert() dispatched a request <<< cfq_dispatch_requests() activate rq, drv=1 <<< cfq_activate_request() complete rqnoidle 0 <<< cfq_completed_request() set_slice=100 <<< cfq_set_prio_slice() arm_idle: 8 group_idle: 0 <<< cfq_arm_slice_timer() cfq schedule dispatch <<< cfq_schedule_dispatch()

利用btt分析blktrace数据

blkparse只是将blktrace数据转成可以人工阅读的格式,由于数据量通常很大,人工分析并不轻松。btt是对blktrace数据进行自动分析的工具。

btt不能分析实时数据,只能对blktrace保存的数据文件进行分析。使用方法:

把原本按CPU分别保存的文件合并成一个,合并后的文件名为sdb.blktrace.bin:

$ blkparse -i sdb -d sdb.blktrace.bin

执行btt对sdb.blktrace.bin进行分析:

$ btt -i sdb.blktrace.bin

下面是一个btt实例:

... ALL MIN AVG MAX N --------------- ------------- ------------- ------------- ----------- Q2Q 0.000138923 0.000154010 0.014298247 94558 Q2G 0.000001154 0.000001661 0.000017313 94559 G2I 0.000000883 0.000001197 0.000012011 94559 I2D 0.000004722 0.000005761 0.000027286 94559 D2C 0.000118840 0.000129201 0.014246176 94558 Q2C 0.000125953 0.000137820 0.014270153 94558 ==================== Device Overhead ==================== DEV | Q2G G2I Q2M I2D D2C ---------- | --------- --------- --------- --------- --------- ( 8, 16) | 1.2050% 0.8688% 0.0000% 4.1801% 93.7461% ---------- | --------- --------- --------- --------- --------- Overall | 1.2050% 0.8688% 0.0000% 4.1801% 93.7461%

我们看到93.7461%的时间消耗在D2C,也就是硬件层,这是正常的,我们说过D2C是衡量硬件性能的指标,这里单个IO平均0.129201毫秒,已经是相当快了,单个IO最慢14.246176 毫秒,不算坏。Q2G和G2I都很小,完全正常。I2D稍微有点大,应该是cfq scheduler的调度策略造成的,你可以试试其它scheduler,比如deadline,比较两者的差异,然后选择最适合你应用特点的那个。

注:

1、一些状态的对应关系

Act Description SetPosition A IO was remapped to a different device blk-core.c/trace_block_remap B IO bounced bounce.c/trace_block_bio_bounce C IO completion blk-core.c/trace_block_rq_complete D IO issued to driver elevator.c/trace_block_rq_issue F IO front merged with request on queue blk-core.c/trace_block_bio_frontmerge G Get request blk-core.c/trace_block_getrq I IO inserted onto request queue elevator.c/trace_block_rq_insert M IO back merged with request on queue blk-core.c/trace_block_bio_backmerge P Plug request blk-core.c/trace_block_plug Q IO handled by request queue code blk-core.c/trace_block_bio_queue S Sleep request blk-core.c/trace_block_sleeprq T Unplug due to timeout blk-core.c/trace_block_unplug_timer U Unplug request blk-core.c/trace_block_unplug_io X Split bio.c/trace_block_split

2、Trace actions:

C – complete A previously issued request has been completed. The output will detail the sector and size of that request, as well as the success or failure of it.

D – issued A request that previously resided on the block layer queue or in the io scheduler has been sent to the driver.

I – inserted A request is being sent to the io scheduler for addition to the internal queue and later service by the driver. The request is fully formed at this time.

Q – queued This notes intent to queue io at the given location. No real re- quests exists yet.

B – bounced The data pages attached to this bio are not reachable by the hardware and must be bounced to a lower memory location. This causes a big slowdown in io performance, since the data must be copied to/from kernel buffers. Usually this can be fixed with using better hardware - either a better io controller, or a platform with an IOMMU.

m – message Text message generated via kernel call to blk add trace msg.

M – back merge A previously inserted request exists that ends on the bound- ary of where this io begins, so the io scheduler can merge them together.

F – front merge Same as the back merge, except this io ends where a previ- ously inserted requests starts.

G – get request To send any type of request to a block device, a struct request container must be allocated first.

S – sleep No available request structures were available, so the issuer has to wait for one to be freed.

P – plug When io is queued to a previously empty block device queue, Linux will plug the queue in anticipation of future ios being added before this data is needed.

U – unplug Some request data already queued in the device, start sending requests to the driver. This may happen automatically if a timeout period has passed (see next entry) or if a number of requests have been added to the queue.

T – unplug due to timer If nobody requests the io that was queued after plugging the queue, Linux will automatically unplug it after a defined period has passed.

X – split On raid or device mapper setups, an incoming io may straddle a device or internal zone and needs to be chopped up into smaller pieces for service. This may indicate a performance problem due to a bad setup of that raid/dm device, but may also just be part of normal boundary conditions. dm is notably bad at this and will clone lots of io.

A – remap For stacked devices, incoming io is remapped to device below it in the io stack. The remap action details what exactly is being remapped to what.

参考:

http://bean-li.github.io/blktrace-to-report/

http://linuxperf.com/?p=161

http://bbs.chinaunix.net/thread-1976867-1-1.html

http://www.cnblogs.com/cobbliu/p/7002933.html

/usr/share/doc/blktrace-1.0.1/blktrace.pdf

/usr/share/doc/blktrace-1.0.1/btt.pdf

http://blog.yufeng.info/archives/751

http://duch.mimuw.edu.pl/~lichota/09-10/Optymalizacja-open-source/Materialy/10%20-%20Dysk/gelato_ICE06apr_blktrace_brunelle_hp.pdf

http://fibrevillage.com/storage/539-blktrace-and-btt-example-to-debug-and-tune-disk-i-o-on-linux

posted on 2017-10-17 15:26 Still water run deep 阅读(1883) 评论(0) 编辑 收藏 举报