[Linux][Hive] Installing Standalone Hive on Top of Hadoop

Prerequisites: this article uses CentOS 7, with Hadoop and MySQL already installed.

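Note: check which Hive versions match your Hadoop version first. In this article the installation failed with Hadoop 3.0.0 and Hive 2.1.0, so Hive was later switched to 2.3.4.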

1. Download the installation package. In this article it is placed in /home/hadoop

Hive 2.3.4: http://archive.apache.org/dist/hive/hive-2.3.4/apache-hive-2.3.4-bin.tar.gz

2. Extract the archive

cd /home/hadoop

tar -zxvf /home/hadoop/apache-hive-2.3.4-bin.tar.gz

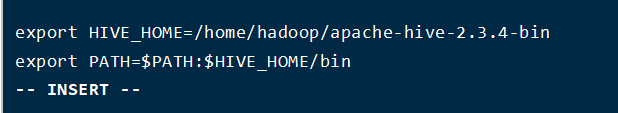
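Steps 1 and 2 can be combined into a small script. The following is only a sketch: the function names `hive_tarball_url` and `fetch_and_extract_hive` are made up for illustration, the URL pattern is inferred from the single download link above, and `wget` is assumed to be installed.

```shell
#!/usr/bin/env bash
# Build the archive URL for a Hive version (pattern inferred from the link in step 1).
hive_tarball_url() {
  local version="$1"
  echo "http://archive.apache.org/dist/hive/hive-${version}/apache-hive-${version}-bin.tar.gz"
}

# Download the tarball into the target directory (if it is not there yet) and extract it.
fetch_and_extract_hive() {
  local version="$1" dest="$2"
  local tarball="apache-hive-${version}-bin.tar.gz"
  (
    # The subshell keeps the caller's working directory unchanged.
    cd "$dest" || exit 1
    [ -f "$tarball" ] || wget "$(hive_tarball_url "$version")" || exit 1
    tar -zxf "$tarball"
  )
}

# Usage with the values from this article:
#   fetch_and_extract_hive 2.3.4 /home/hadoop
```

Keeping the version in one argument makes it easier to switch releases if the first one turns out to be incompatible with your Hadoop version.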

3. Configure the environment variables: open the file with vi /etc/profile, add the lines below, then reload it with source /etc/profile

export HIVE_HOME=/home/hadoop/apache-hive-2.3.4-bin

export PATH=$PATH:$HIVE_HOME/bin
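If you would rather not edit /etc/profile by hand, the two lines can be appended from a script. This is a minimal sketch; `append_once` is a hypothetical helper, not part of Hive or CentOS, and it only adds a line when that exact line is missing, so running it twice does not duplicate entries.

```shell
#!/usr/bin/env bash
# Append a line to a file only if that exact line is not already present.
append_once() {
  local file="$1" line="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage (as root), with the paths from this article:
#   append_once /etc/profile 'export HIVE_HOME=/home/hadoop/apache-hive-2.3.4-bin'
#   append_once /etc/profile 'export PATH=$PATH:$HIVE_HOME/bin'
#   source /etc/profile
```

The single quotes in the usage lines matter: they keep `$PATH` and `$HIVE_HOME` unexpanded so that the literal text ends up in the profile.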

4. Configure hive-site.xml

First copy the template: cp /home/hadoop/apache-hive-2.3.4-bin/conf/hive-default.xml.template /home/hadoop/apache-hive-2.3.4-bin/conf/hive-site.xml

Then change the properties below, adjusting them to your own MySQL setup: vi /home/hadoop/apache-hive-2.3.4-bin/conf/hive-site.xml

<property>
  <name>javax.jdo.option.ConnectionURL</name>
  <value>jdbc:mysql://192.168.8.71:3306/hive?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8&amp;useSSL=false</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionUserName</name>
  <value>root</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionPassword</name>
  <value>123456</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionDriverName</name>
  <value>com.mysql.jdbc.Driver</value>
</property>
<property>
  <name>datanucleus.schema.autoCreateAll</name>
  <value>true</value>
</property>
<property>
  <name>hive.metastore.schema.verification</name>
  <value>false</value>
</property>
<property>
  <name>hive.server2.thrift.client.user</name>
  <value>root</value>
  <description>Username to use against thrift client</description>
</property>
<property>
  <name>hive.server2.thrift.client.password</name>
  <value>1234</value>
  <description>Password to use against thrift client</description>
</property>
<property>
  <name>hive.exec.local.scratchdir</name>
  <value>/tmp/hive</value>
  <description>Local scratch space for Hive jobs</description>
</property>
<property>
  <name>hive.downloaded.resources.dir</name>
  <value>/tmp/hive/resources</value>
  <description>Temporary local directory for added resources in the remote file system.</description>
</property>

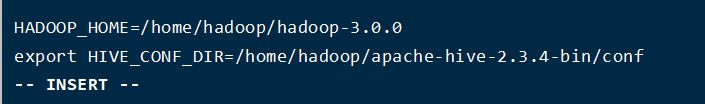
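One detail in the connection URL is easy to get wrong: hive-site.xml is an XML file, so every `&` between JDBC parameters has to be written as `&amp;` inside the `<value>` element, otherwise the file does not parse. The sketch below shows one way to produce the escaped form; `xml_escape_amp` is a hypothetical helper and assumes its input is a raw, not yet escaped URL.

```shell
#!/usr/bin/env bash
# Escape every "&" so the string can be pasted into an XML <value> element.
# Assumes the input is a raw URL that has not been escaped already.
xml_escape_amp() {
  printf '%s\n' "$1" | sed 's/&/\&amp;/g'
}

# Print the escaped form of the MySQL URL used in this article.
xml_escape_amp 'jdbc:mysql://192.168.8.71:3306/hive?createDatabaseIfNotExist=true&characterEncoding=UTF-8&useSSL=false'
```

If `xmllint` (from libxml2) happens to be installed, `xmllint --noout hive-site.xml` is a quick way to confirm that the edited file is still well-formed.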

5. Configure hive-env.sh

First copy the hive-env.sh template: cp /home/hadoop/apache-hive-2.3.4-bin/conf/hive-env.sh.template /home/hadoop/apache-hive-2.3.4-bin/conf/hive-env.sh

Then open the file and add the lines below, adjusting them to your own Hadoop and Hive paths: vi /home/hadoop/apache-hive-2.3.4-bin/conf/hive-env.sh

HADOOP_HOME=/home/hadoop/hadoop-3.0.0

export HIVE_CONF_DIR=/home/hadoop/apache-hive-2.3.4-bin/conf

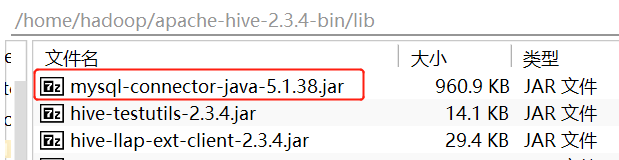

6. Download the MySQL JDBC driver and put the jar in /home/hadoop/apache-hive-2.3.4-bin/lib

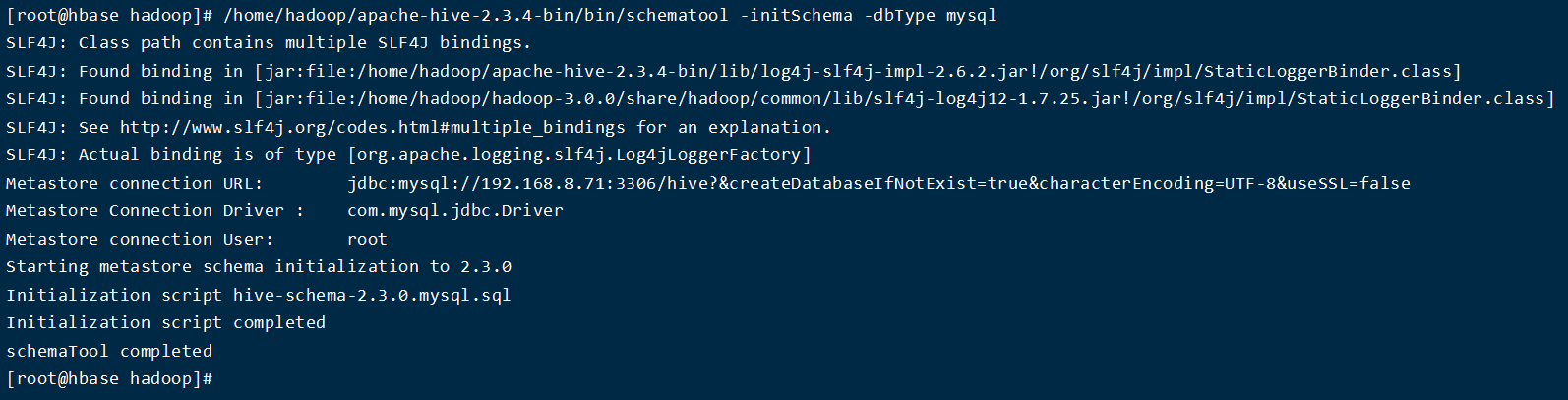

7. Initialize the metastore database: /home/hadoop/apache-hive-2.3.4-bin/bin/schematool -initSchema -dbType mysql

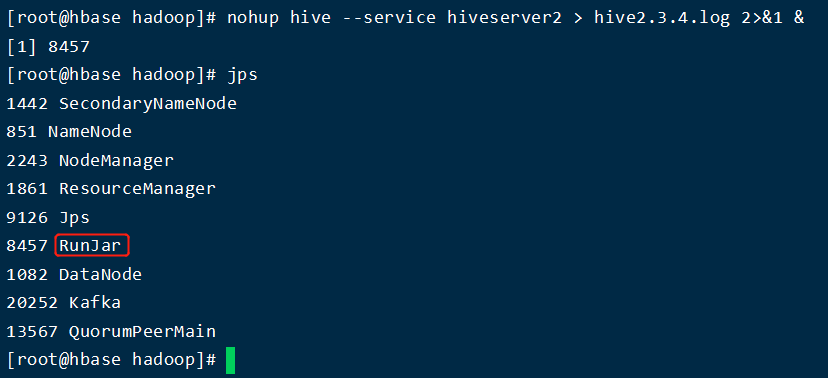

8. Start hiveserver2 in the background: nohup hive --service hiveserver2 > hive2.3.4.log 2>&1 &

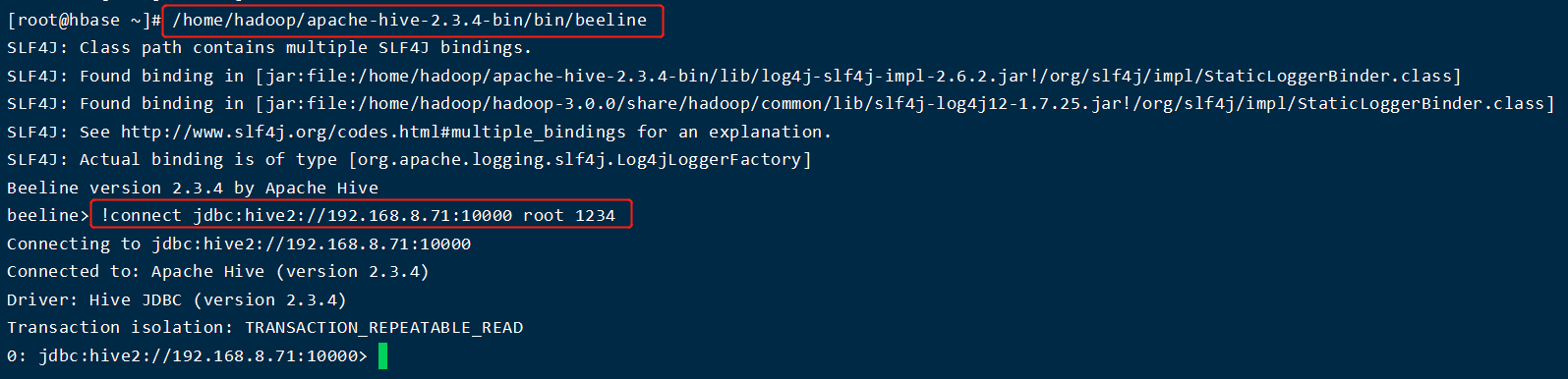

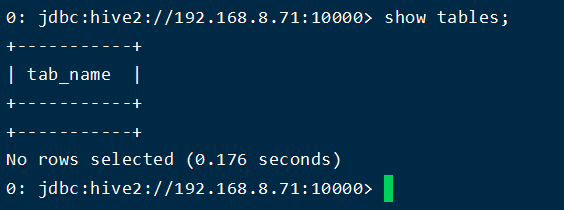
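HiveServer2 may need some time after the `nohup` command returns before it accepts connections, so connecting immediately can fail. A possible way to wait for it is sketched below. `wait_for_port` is a hypothetical helper that relies on bash's `/dev/tcp` feature, and port 10000 is the one used in the beeline URL of this article.

```shell
#!/usr/bin/env bash
# Poll a TCP port once per second until it accepts a connection or the timeout expires.
# Returns 0 as soon as the port is reachable, 1 after roughly `timeout` seconds.
wait_for_port() {
  local host="$1" port="$2" timeout="${3:-60}" waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # Opening /dev/tcp/HOST/PORT in a subshell attempts a TCP connection (bash only).
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 1
}

# Usage after starting hiveserver2:
#   wait_for_port 192.168.8.71 10000 120 && echo "hiveserver2 is listening"
```

If the function times out, the log file written in step 8 (hive2.3.4.log) is the first place to look.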

9. Connect to Hive with beeline

/home/hadoop/apache-hive-2.3.4-bin/bin/beeline

!connect jdbc:hive2://192.168.8.71:10000 root 1234

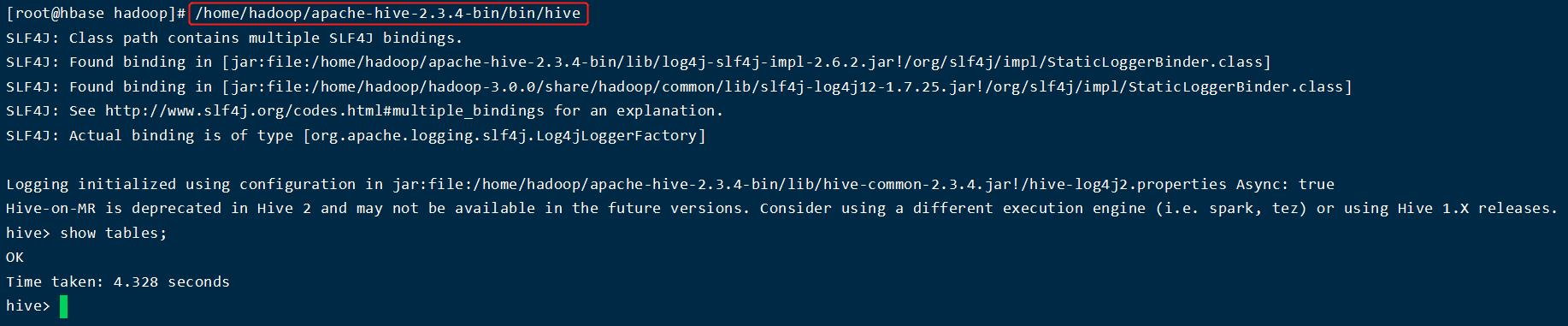
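The same connection can also be made without the interactive prompt, which is handy for a quick smoke test. The sketch below uses beeline's `-u`, `-n`, `-p` and `-e` options with the host, user and password from this article. `hs2_jdbc_url` is a hypothetical helper, and the beeline call is skipped when beeline is not on the PATH.

```shell
#!/usr/bin/env bash
# Build the HiveServer2 JDBC URL; the port defaults to 10000 as in step 9.
hs2_jdbc_url() {
  local host="$1" port="${2:-10000}"
  echo "jdbc:hive2://${host}:${port}"
}

# Run one statement non-interactively, but only if beeline is on the PATH.
if command -v beeline >/dev/null 2>&1; then
  beeline -u "$(hs2_jdbc_url 192.168.8.71)" -n root -p 1234 -e 'show databases;'
fi
```

Note that a password passed on the command line is visible in the process list, so this form is best kept to test setups like the one here.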

10. Enter the Hive CLI directly: /home/hadoop/apache-hive-2.3.4-bin/bin/hive

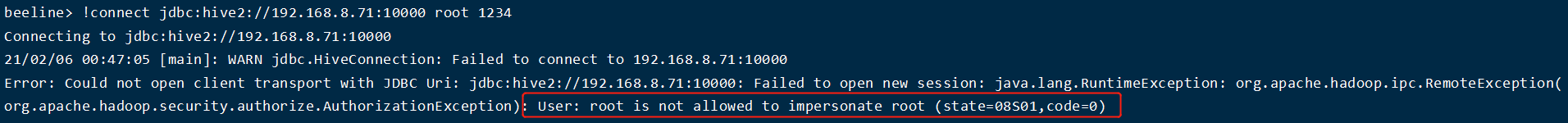

Problem: the connection fails with User: root is not allowed to impersonate root (state=08S01,code=0)

Fix: add the following to Hadoop's core-site.xml

<property>
  <name>hadoop.proxyuser.root.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.root.groups</name>
  <value>*</value>
</property>
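The proxy-user settings only take effect once Hadoop has reloaded them. Restarting Hadoop works. As an alternative, the sketch below asks the running NameNode and ResourceManager to re-read them. `refresh_proxyuser_config` is a hypothetical wrapper, and it assumes `hdfs` and `yarn` are on the PATH of the user that runs Hadoop.

```shell
#!/usr/bin/env bash
# Ask the NameNode and the ResourceManager to reload the hadoop.proxyuser.* settings.
refresh_proxyuser_config() {
  if ! command -v hdfs >/dev/null 2>&1 || ! command -v yarn >/dev/null 2>&1; then
    echo "hdfs or yarn not found on PATH; restart Hadoop instead" >&2
    return 1
  fi
  hdfs dfsadmin -refreshSuperUserGroupsConfiguration &&
    yarn rmadmin -refreshSuperUserGroupsConfiguration
}

# Usage, as the user that runs Hadoop:
#   refresh_proxyuser_config
```

After the refresh (or restart), retry the beeline connection from step 9.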
