[Deep Learning Applications] · Hands-On PyTorch Image Classification: A Concise Tutorial


Personal website: http://www.yansongsong.cn/

Project GitHub repository: https://github.com/xiaosongshine/image_classifier_PyTorch/

1. Introduction

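In deep-learning competitions, image classification is one of the most common tasks, and also one in which it is hard to reach a particularly high ranking: the problem has been studied thoroughly, and open-source networks reach high scores with little effort. If you cannot yet train an open-source network and then tune it step by step, it is hard to get a competitive result.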

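In [PyTorch小试牛刀] 实战六 · 准备自己的数据集用于训练 (Hands-on 6: preparing your own dataset for training), we explained how to build your own dataset for training. This tutorial builds on that one and covers training a model and applying it.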

2. The Data

Data: download link

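This example uses a traffic-sign dataset with 62 classes of signs. The training set contains 4572 images (about 70 per class) and the test set contains 2520 images (about 40 per class). The data is split into two subdirectories, train and test: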

Why do we need a test set at all? It is never used for training; it is used to evaluate and tune the model.

Both train and test contain 62 subfolders, one per class, and all images of a class are stored in the same folder:

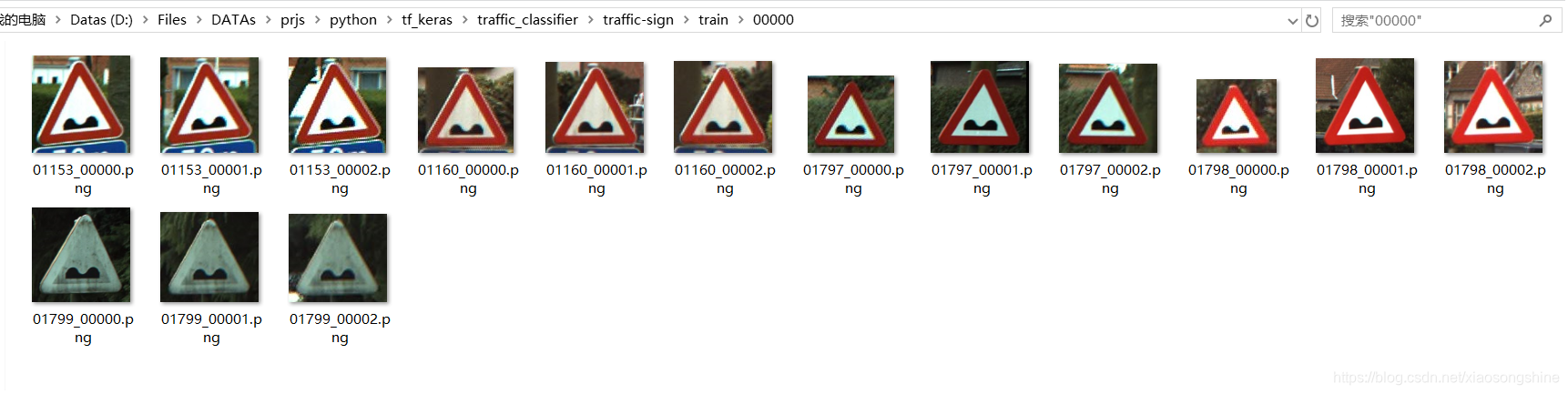
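To check that a downloaded copy matches this layout, a short script can count the image files in each class folder. This is a minimal sketch using only the standard library; the function name `count_images_per_class` and the set of accepted file extensions are illustrative assumptions, not part of the original project.

```python
import os

# File extensions treated as images: an assumption, adjust to the files in your copy
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".ppm", ".bmp"}


def count_images_per_class(split_dir):
    """Return {class_folder_name: number_of_image_files} for one split (e.g. train/)."""
    counts = {}
    for entry in sorted(os.scandir(split_dir), key=lambda e: e.name):
        if entry.is_dir():  # each sub-directory is one class
            n = sum(
                1
                for f in os.listdir(entry.path)
                if os.path.splitext(f)[1].lower() in IMAGE_EXTS
            )
            counts[entry.name] = n
    return counts


if __name__ == "__main__":
    # Hypothetical usage against the dataset root used later in this tutorial
    for split in ["train", "test"]:
        path = os.path.join("./traffic-sign/", split)
        if os.path.isdir(path):
            counts = count_images_per_class(path)
            print(split, "classes:", len(counts), "images:", sum(counts.values()))
```

For the full dataset described above you would expect 62 classes in each split, with 4572 training images and 2520 test images in total.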

Opening one of these folders shows the images it contains:

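Each image looks like the example below and has a size of 100 x 100 x 3. Here 100 is the height and the width of the image. What is the 3? It is the number of color channels, R, G and B. A color image is stored in a computer as a three-dimensional array, and these arrays are what we feed into the network.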
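The following NumPy-only sketch shows what happens to such an array before it reaches the network in this tutorial's pipeline: `ToTensor` turns an H x W x 3 array of integers in 0 to 255 into a 3 x H x W float array in [0, 1], and `Normalize` then subtracts a per-channel mean and divides by a per-channel standard deviation. The helper name `to_network_input` is hypothetical, the resize to 224 x 224 is left out, and the mean/std values are the ImageNet statistics passed to `tv.transforms.Normalize` in the data-preparation code.

```python
import numpy as np

# ImageNet per-channel statistics (same values as in tv.transforms.Normalize)
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def to_network_input(img_hwc):
    """Mimic ToTensor + Normalize on an H x W x 3 uint8 array (NumPy sketch)."""
    x = img_hwc.astype(np.float32) / 255.0               # scale 0..255 -> 0..1
    x = np.transpose(x, (2, 0, 1))                       # H x W x C -> C x H x W
    x = (x - MEAN[:, None, None]) / STD[:, None, None]   # per-channel normalization
    return x


# A fake 100 x 100 RGB image in which every pixel is pure red (255, 0, 0)
img = np.zeros((100, 100, 3), dtype=np.uint8)
img[..., 0] = 255
out = to_network_input(img)
print(img.shape, "->", out.shape)   # (100, 100, 3) -> (3, 100, 100)
print(out[:, 0, 0])                 # normalized (R, G, B) values of the top-left pixel
```

The channel axis moves to the front because PyTorch convolution layers expect tensors laid out as (channels, height, width), with the batch dimension added in front by the DataLoader.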

3. Building the Network

1. Import the Python packages and define some parameters

```python
import torch as t
import torchvision as tv
import os
import time
import numpy as np
from tqdm import tqdm


class DefaultConfigs(object):
    data_dir = "./traffic-sign/"      # root folder containing train/ and test/
    data_list = ["train", "test"]
    lr = 0.001
    epochs = 10
    num_classes = 62
    image_size = 224                  # input size expected by the pretrained ResNet
    batch_size = 40
    channels = 3
    gpu = "0"
    train_len = 4572
    test_len = 2520
    use_gpu = t.cuda.is_available()   # use the GPU automatically when one is present


config = DefaultConfigs()
```

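2. Prepare the data with PyTorch's built-in loading utilities (for details, see [PyTorch小试牛刀] 实战六 · 准备自己的数据集用于训练)

Note: the training data should be randomly cropped, whereas the test data should not be cropped at all.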
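Random cropping is a data-augmentation step: every time an image is loaded, a slightly different region of it is kept, so the network sees varied versions of the same sign during training, while evaluation on the test set stays deterministic. Below is a minimal NumPy sketch of the idea, assuming an H x W x C array; `random_crop` and `center_crop` are hypothetical helpers, not the torchvision transforms used in the real pipeline. It also shows that a center crop of the same size as the image returns the image unchanged, so resizing to 224 x 224 and then center-cropping to 224 x 224 performs no augmentation.

```python
import numpy as np


def random_crop(img, crop_h, crop_w, rng):
    """Return a randomly positioned crop_h x crop_w window of an H x W x C array."""
    h, w = img.shape[:2]
    if crop_h > h or crop_w > w:
        raise ValueError("crop size larger than image")
    top = rng.integers(0, h - crop_h + 1)    # uniform over [0, h - crop_h]
    left = rng.integers(0, w - crop_w + 1)   # uniform over [0, w - crop_w]
    return img[top:top + crop_h, left:left + crop_w]


def center_crop(img, crop_h, crop_w):
    """Deterministic crop taken from the middle of the image."""
    h, w = img.shape[:2]
    top, left = (h - crop_h) // 2, (w - crop_w) // 2
    return img[top:top + crop_h, left:left + crop_w]


rng = np.random.default_rng(0)
img = np.arange(100 * 100 * 3, dtype=np.int64).reshape(100, 100, 3)
a = random_crop(img, 90, 90, rng)
b = random_crop(img, 90, 90, rng)
print(a.shape, b.shape)                                # (90, 90, 3) (90, 90, 3)
print(np.array_equal(center_crop(img, 100, 100), img)) # True: a full-size crop is a no-op
```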

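```python
# ImageNet channel means/stds, matching the data the pretrained ResNet was trained on
normalize = tv.transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                    std=[0.229, 0.224, 0.225])

transform = {
    # Training: random crop for augmentation. RandomResizedCrop keeps a random region
    # covering 80% to 100% of the image and resizes it to image_size x image_size.
    config.data_list[0]: tv.transforms.Compose([
        tv.transforms.RandomResizedCrop(config.image_size, scale=(0.8, 1.0)),
        tv.transforms.ToTensor(),
        normalize,
    ]),
    # Test: no cropping; tv.transforms.Resize only rescales the image
    config.data_list[1]: tv.transforms.Compose([
        tv.transforms.Resize([config.image_size, config.image_size]),
        tv.transforms.ToTensor(),
        normalize,
    ]),
}

# ImageFolder turns each class sub-folder into an integer label
datasets = {
    x: tv.datasets.ImageFolder(root=os.path.join(config.data_dir, x),
                               transform=transform[x])
    for x in config.data_list
}

dataloader = {
    x: t.utils.data.DataLoader(dataset=datasets[x],
                               batch_size=config.batch_size,
                               shuffle=(x == "train"))  # shuffle only the training set
    for x in config.data_list
}
```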
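`ImageFolder` derives the integer labels from the sub-folder names: it sorts the folder names as strings and numbers them 0, 1, 2, ... in that order, and exposes the result as `datasets["train"].class_to_idx`. The standard-library sketch below reproduces that rule (the function names `folder_labels` and `labels_from_names` are hypothetical) and shows a pitfall: string sorting puts `"10"` before `"2"`, so class folders need zero-padded names (e.g. `00002`) if label numbers are meant to match folder numbers.

```python
import os


def folder_labels(split_dir):
    """Sketch of ImageFolder's rule: sorted sub-folder names -> labels 0..N-1."""
    classes = sorted(e.name for e in os.scandir(split_dir) if e.is_dir())
    return {name: idx for idx, name in enumerate(classes)}


def labels_from_names(names):
    """Same rule applied to a plain list of folder names (no file system needed)."""
    return {name: idx for idx, name in enumerate(sorted(names))}


print(labels_from_names(["2", "10", "1"]))             # {'1': 0, '10': 1, '2': 2}
print(labels_from_names(["00002", "00010", "00001"]))  # {'00001': 0, '00002': 1, '00010': 2}
```

Because the train and test folders contain the same 62 class names, both splits end up with the same name-to-label mapping.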

3. Build the model (transfer learning with resnet18; the only trained parameters are those of the final fully connected layer, t.nn.Linear(512, num_classes))

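```python
def get_model(num_classes):
    # ResNet-18 with ImageNet-pretrained weights. torchvision >= 0.13 uses the
    # `weights` argument; older releases use tv.models.resnet18(pretrained=True).
    model = tv.models.resnet18(weights=tv.models.ResNet18_Weights.DEFAULT)
    # Freeze every pretrained parameter so that only the new head is trained
    for param in model.parameters():
        param.requires_grad = False
    # Replace the 1000-class ImageNet head; 512 is ResNet-18's feature size
    model.fc = t.nn.Sequential(
        t.nn.Dropout(p=0.3),
        t.nn.Linear(512, num_classes)
    )
    return model
```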

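If your hardware allows it, you can comment out the lines below so that the whole network is trained; the final accuracy will usually be higher, but training will be slower. In that case the optimizer must also receive all parameters, i.e. `t.optim.Adam(model.parameters(), lr=config.lr)` instead of `model.fc.parameters()`; otherwise the unfrozen backbone is still never updated.

```python
for param in model.parameters():
    param.requires_grad = False
```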

Model output (as printed by `print(model)` in the training function):

```
ResNet(
  (conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)
  (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (relu): ReLU(inplace)
  (maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
  (layer1): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (1): BasicBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer2): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer3): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer4): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (avgpool): AvgPool2d(kernel_size=7, stride=1, padding=0)
  (fc): Sequential(
    (0): Dropout(p=0.3)
    (1): Linear(in_features=512, out_features=62, bias=True)
  )
)
```
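The printout also explains why the images are resized to 224 x 224. A convolution or pooling layer maps a spatial size n to floor((n + 2p - k) / s) + 1 for kernel size k, stride s and padding p. Applying this rule to the layers listed above (only the stride-2 layers and the final pooling change the size) shows a 224 x 224 input shrinking to 7 x 7 before `avgpool`, which, with the fixed `kernel_size=7` in this printout, leaves a 1 x 1 map with 512 channels: exactly the 512 inputs of `Linear(512, 62)`. A plain-Python sketch; the layer list is transcribed from the printout, and newer torchvision releases use an adaptive pooling layer at this position instead.

```python
def out_size(n, k, s, p):
    """Spatial output size of a conv/pool layer: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1


# (name, kernel, stride, padding) for the layers that change the spatial size,
# transcribed from the printout; the 3x3, stride-1, padding-1 convs keep the size
layers = [
    ("conv1", 7, 2, 3),
    ("maxpool", 3, 2, 1),
    ("layer2.0.conv1", 3, 2, 1),
    ("layer3.0.conv1", 3, 2, 1),
    ("layer4.0.conv1", 3, 2, 1),
    ("avgpool", 7, 1, 0),
]

n = 224
sizes = [n]
for name, k, s, p in layers:
    n = out_size(n, k, s, p)
    sizes.append(n)
    print(f"{name:15s} -> {n} x {n}")
```

Starting from the raw 100 x 100 images instead, the same rule gives a 4 x 4 map before `avgpool`, smaller than its 7 x 7 kernel, which is why the images are resized first.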

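4. Train the model (the GPU is used automatically when available; for a GPU tutorial, see [开发技巧]·PyTorch如何使用GPU加速 (How to use GPU acceleration in PyTorch))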

```python
def train(epochs):
    model = get_model(config.num_classes)
    print(model)
    loss_f = t.nn.CrossEntropyLoss()
    device = t.device("cuda" if config.use_gpu else "cpu")
    model = model.to(device)
    # Only the new classification head is optimized; the backbone is frozen
    opt = t.optim.Adam(model.fc.parameters(), lr=config.lr)

    for epoch in range(epochs):
        print("Epoch {}/{}".format(epoch + 1, epochs))

        # ---- training ----
        model.train()
        time_start = time.time()
        train_loss, train_correct, train_total = [], 0, 0
        for x, y in tqdm(dataloader["train"]):
            x, y = x.to(device), y.to(device)
            y_ = model(x)                  # logits, shape (batch, num_classes)
            loss = loss_f(y_, y)           # CrossEntropyLoss already averages over the batch
            opt.zero_grad()
            loss.backward()
            opt.step()

            train_loss.append(loss.item())
            train_correct += (y_.argmax(dim=1) == y).sum().item()
            train_total += y.size(0)
        print("Train loss:{:.4f}, Train acc:{:.4f}, Time: {:.1f}s".format(
            np.mean(train_loss), train_correct / train_total, time.time() - time_start))

        # ---- evaluation ----
        model.eval()                       # disables Dropout, uses BatchNorm running stats
        test_loss, test_correct, test_total = [], 0, 0
        with t.no_grad():                  # no gradients are needed for evaluation
            for x, y in tqdm(dataloader["test"]):
                x, y = x.to(device), y.to(device)
                y_ = model(x)
                test_loss.append(loss_f(y_, y).item())
                test_correct += (y_.argmax(dim=1) == y).sum().item()
                test_total += y.size(0)
        print("Test loss:{:.4f}, Test acc:{:.4f}".format(
            np.mean(test_loss), test_correct / test_total))

        # Save the model weights after every epoch
        t.save(model.state_dict(), str(epoch + 1) + "ttmodel.pkl")


if __name__ == "__main__":
    train(config.epochs)
```
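A note on the reported metrics: `CrossEntropyLoss` already averages the loss over the samples in a batch, so the loss needs no further division by the batch size. For accuracy, with 4572 training images and `batch_size = 40` the last batch holds only 12 images (4572 = 114 x 40 + 12), so counting correct predictions and dividing by the number of samples seen gives the exact epoch accuracy, while averaging per-batch accuracies over-weights that last batch. A NumPy sketch with made-up logits; the helpers `epoch_accuracy` and `mean_of_batch_accuracies` are hypothetical.

```python
import numpy as np


def epoch_accuracy(batches):
    """Exact accuracy over an epoch: total correct / total samples.

    `batches` is a list of (logits, labels) pairs, logits shaped (batch, num_classes).
    """
    correct, total = 0, 0
    for logits, labels in batches:
        pred = logits.argmax(axis=1)          # predicted class = index of the largest logit
        correct += int((pred == labels).sum())
        total += len(labels)
    return correct / total


def mean_of_batch_accuracies(batches):
    """Average of per-batch accuracies (over-weights a small last batch)."""
    return float(np.mean([(lg.argmax(axis=1) == y).mean() for lg, y in batches]))


# Made-up example: a full batch of 4 with 2 correct, then a last batch of 1 that is correct
logits_a = np.array([[2.0, 0.1], [0.3, 1.0], [1.5, 0.2], [0.1, 0.9]])
labels_a = np.array([0, 0, 1, 1])     # predictions [0, 1, 0, 1] -> 2 of 4 correct
logits_b = np.array([[0.2, 3.0]])
labels_b = np.array([1])              # prediction 1 -> correct
batches = [(logits_a, labels_a), (logits_b, labels_b)]

print(epoch_accuracy(batches))            # 0.6  (3 correct out of 5)
print(mean_of_batch_accuracies(batches))  # 0.75 ((0.5 + 1.0) / 2)
```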

Training results:


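After 10 epochs, the test-set accuracy reaches 0.86, which is already a decent result. Tuning the hyperparameters and training for longer should raise the accuracy further.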