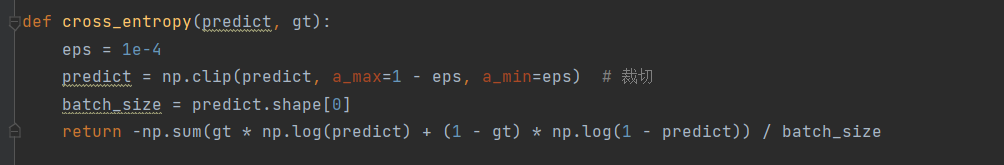

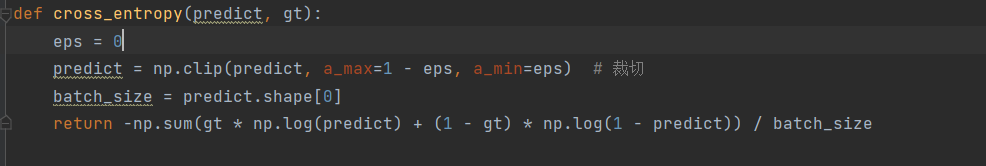

    import numpy as np
    import struct
    import random
    import matplotlib.pyplot as plt
    import pandas as pd
    import math


    def load_labels(file):
        with open(file, "rb") as f:
            data = f.read()

        magic_number, num_samples = struct.unpack(">ii", data[:8])
        if magic_number != 2049:  # 0x00000801
            print(f"magic number mismatch {magic_number} != 2049")
            return None

        labels = np.array(list(data[8:]))
        return labels


    def load_images(file):
        with open(file, "rb") as f:
            data = f.read()

        magic_number, num_samples, image_width, image_height = struct.unpack(">iiii", data[:16])
        if magic_number != 2051:  # 0x00000803
            print(f"magic number mismatch {magic_number} != 2051")
            return None

        image_data = np.asarray(list(data[16:]), dtype=np.uint8).reshape(num_samples, -1)
        return image_data


    def one_hot(labels, classes):
        n = len(labels)
        output = np.zeros((n, classes), dtype=np.int32)
        for row, label in enumerate(labels):
            output[row, label] = 1
        return output


    val_labels = load_labels("E:/杜老师课程/dataset/t10k-labels-idx1-ubyte")  # 10000,
    val_images = load_images("E:/杜老师课程/dataset/t10k-images-idx3-ubyte")  # 10000, 784
    numdata = val_images.shape[0]  # 10000
    # Scale pixels to [-0.5, 0.5] and append a column of ones for the bias term
    val_images = np.hstack((val_images / 255 - 0.5, np.ones((numdata, 1))))  # 10000, 785
    val_pd = pd.DataFrame(val_labels, columns=["label"])

    train_labels = load_labels("E:/杜老师课程/dataset/train-labels-idx1-ubyte")  # 60000,
    train_images = load_images("E:/杜老师课程/dataset/train-images-idx3-ubyte")  # 60000, 784
    numdata = train_images.shape[0]  # 60000
    train_images = np.hstack((train_images / 255 - 0.5, np.ones((numdata, 1))))  # 60000, 785
    train_pd = pd.DataFrame(train_labels, columns=["label"])


    def show_hist(labels, num_classes):
        label_map = {key: 0 for key in range(num_classes)}
        for label in labels:
            label_map[label] += 1
        labels_hist = [label_map[key] for key in range(num_classes)]
        pd.DataFrame(labels_hist, columns=["label"]).plot(kind="bar")


    show_hist(train_labels, 10)


    class Dataset:
        def __init__(self, images, labels):
            self.images = images
            self.labels = labels

        # Fetch a single item: dataset = Dataset(), dataset[index]
        def __getitem__(self, index):
            return self.images[index], self.labels[index]

        # Size of the dataset (number of samples)
        def __len__(self):
            return len(self.images)


    class DataLoaderIterator:
        def __init__(self, dataloader):
            self.dataloader = dataloader
            self.cursor = 0
            self.indexs = list(range(self.dataloader.count_data))  # 0, ... 60000
            if self.dataloader.shuffle:
                # Shuffle the sample order
                random.shuffle(self.indexs)

        # Merge one sample into the batch being assembled
        def merge_to(self, container, b):
            if len(container) == 0:
                for index, data in enumerate(b):
                    if isinstance(data, np.ndarray):
                        container.append(data)
                    else:
                        container.append(np.array([data], dtype=type(data)))
            else:
                for index, data in enumerate(b):
                    container[index] = np.vstack((container[index], data))
            return container

        def __next__(self):
            if self.cursor >= self.dataloader.count_data:
                raise StopIteration()

            batch_data = []
            remain = min(self.dataloader.batch_size, self.dataloader.count_data - self.cursor)  # 256, 128
            for n in range(remain):
                index = self.indexs[self.cursor]
                data = self.dataloader.dataset[index]
                batch_data = self.merge_to(batch_data, data)
                self.cursor += 1
            return batch_data


    class DataLoader:
        # shuffle: whether to shuffle the samples
        def __init__(self, dataset, batch_size, shuffle):
            self.dataset = dataset
            self.shuffle = shuffle
            self.count_data = len(dataset)
            self.batch_size = batch_size

        def __iter__(self):
            return DataLoaderIterator(self)


    # One-vs-rest metrics for the single class index passed as `classes`
    def estimate(plabel, gt_labels, classes):
        plabel = plabel.copy()
        gt_labels = gt_labels.copy()
        match_mask = plabel == classes
        mismatch_mask = plabel != classes
        plabel[match_mask] = 1
        plabel[mismatch_mask] = 0

        gt_mask = gt_labels == classes
        gt_mismatch_mask = gt_labels != classes
        gt_labels[gt_mask] = 1
        gt_labels[gt_mismatch_mask] = 0

        TP = sum(plabel & gt_labels)
        FP = sum(plabel & (1 - gt_labels))
        FN = sum((1 - plabel) & gt_labels)
        TN = sum((1 - plabel) & (1 - gt_labels))

        precision = TP / (TP + FP)
        recall = TP / (TP + FN)
        accuracy = (TP + TN) / (TP + FP + FN + TN)
        F1 = 2 * (precision * recall) / (precision + recall)
        return precision, recall, accuracy, F1


    def estimate_val(images, gt_labels, theta, classes):
        # Use the arguments rather than the global val_images / val_labels
        predict = sigmoid(images @ theta)
        plabel = predict.argmax(1)
        prob = plabel == gt_labels
        total_images = images.shape[0]
        accuracy = sum(prob) / total_images
        return accuracy, cross_entropy(predict, one_hot(gt_labels, classes))


    def cross_entropy(predict, gt):
        eps = 1e-4
        predict = np.clip(predict, a_max=1 - eps, a_min=eps)  # clipping
        batch_size = predict.shape[0]
        return -np.sum(gt * np.log(predict) + (1 - gt) * np.log(1 - predict)) / batch_size


    def lr_schedule_cosine(lr_min, lr_max, per_epochs):
        def compute(epoch):
            return lr_min + 0.5 * (lr_max - lr_min) * (1 + np.cos(epoch / per_epochs * np.pi))
        return compute


    def sigmoid(x):
        return 1 / (1 + np.exp(-x))


    classes = 10             # number of classes
    batch_size = 512         # size of each batch
    lr_warm_up_alpha = 1e-2  # starting value of the warm-up factor
    lr_min = 1e-4            # minimum of the cosine learning rate
    lr_max = 1e-1            # maximum of the cosine learning rate
    epochs = 10              # stopping criterion: see the whole dataset at most 10 times
    numdata, data_dims = train_images.shape  # 60000, 785

    # Dataset and dataloader used to fetch the training data
    train_data = DataLoader(Dataset(train_images, one_hot(train_labels, classes)), batch_size, shuffle=True)

    # Initialize theta from a normal distribution; rows = data dimensions,
    # columns = number of classes. (Original note: kaiming)
    theta = np.random.normal(size=(data_dims, classes))

    # Iteration counter; it is the x axis when plotting the loss curve
    iters = 0

    # Warm-up factors: start at 0.01, use 0.1 at epoch 1, and return to the
    # plain cosine learning rate at epoch 2 (hence the factor 1)
    lr_warm_up_schedule = {
        1: 1e-1,
        2: 1
    }

    cosine_total_epoch = 3  # period of the cosine learning rate, fixed at 3
    cosine_itepoch = 0      # epoch index within the current cosine period
    # Cosine learning-rate function with its parameters bound
    lr_cosine_schedule = lr_schedule_cosine(lr_min, lr_max, cosine_total_epoch)

    train_losses = []  # collected train losses, plotted later
    val_losses = []    # collected validation results (accuracy, loss), plotted later

    # Epoch loop, `epochs` passes in total
    for epoch in range(epochs):

        # When the period index reaches the end of the period, reset it to 0.
        # This is a periodic restart.
        if cosine_itepoch == cosine_total_epoch:
            cosine_itepoch = 0

        # Learning rate given by the position inside the current period
        lr_select = lr_cosine_schedule(cosine_itepoch)

        # Advance the period index
        cosine_itepoch += 1

        # If the warm-up schedule changes at this epoch, update the warm-up factor
        if epoch in lr_warm_up_schedule:
            lr_warm_up_alpha = lr_warm_up_schedule[epoch]

        # Final learning rate = cosine learning rate * warm-up factor
        lr = lr_select * lr_warm_up_alpha
        print(f"Set learning rate to {lr:.5f}")

        # Iterate over the batches; each iteration handles one batch (up to 512 samples)
        for index, (images, labels) in enumerate(train_data):

            # Raw predictions
            predict = images @ theta  # n * 785 dot 785 * 10 = n * 10

            # Convert to probabilities
            predict = sigmoid(predict)

            # Loss
            loss = cross_entropy(predict, labels)

            # Gradient with respect to theta
            d_theta = images.T @ (predict - labels)  # 785 x n dot n x 10 = 785 x 10

            # Update theta; dividing by batch_size averages over the batch
            theta = theta - lr * d_theta / batch_size
            iters += 1

            # Record the iteration and loss for plotting later
            train_losses.append([iters, loss])

            if index % 100 == 0:
                print(f"Iter {iters}. {epoch} / {epochs}, Loss: {loss:.3f}, Learning Rate: {lr:.5f}")

        # After each epoch (one full pass over the data), evaluate theta on the
        # validation set to check how training is going
        val_accuracy, val_loss = estimate_val(val_images, val_labels, theta, classes)

        # Record validation loss and accuracy for plotting later
        val_losses.append([iters, val_accuracy, val_loss])
        print(f"Val set, Accuracy: {val_accuracy}, Loss: {val_loss}")
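The update step in the training loop uses `images.T @ (predict - labels)` as the gradient of the cross-entropy loss with respect to `theta`. One way to gain confidence in that expression is a finite-difference check on a tiny random problem. The sketch below is only illustrative: `analytic_grad`, `numeric_grad`, the random shapes, and the step size `h` are assumptions introduced here and are not part of the original script; `sigmoid` and `cross_entropy` are copied so the snippet runs on its own.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def cross_entropy(predict, gt):
    # Same loss as in the script, including the clipping
    eps = 1e-4
    predict = np.clip(predict, eps, 1 - eps)
    return -np.sum(gt * np.log(predict) + (1 - gt) * np.log(1 - predict)) / predict.shape[0]


def analytic_grad(images, labels, theta):
    # Gradient expression used in the training loop, averaged over the rows
    predict = sigmoid(images @ theta)
    return images.T @ (predict - labels) / images.shape[0]


def numeric_grad(images, labels, theta, h=1e-6):
    # Central finite differences, one entry of theta at a time
    grad = np.zeros_like(theta)
    for i in range(theta.shape[0]):
        for j in range(theta.shape[1]):
            step = np.zeros_like(theta)
            step[i, j] = h
            plus = cross_entropy(sigmoid(images @ (theta + step)), labels)
            minus = cross_entropy(sigmoid(images @ (theta - step)), labels)
            grad[i, j] = (plus - minus) / (2 * h)
    return grad


rng = np.random.default_rng(0)
images = rng.uniform(-0.5, 0.5, size=(8, 5))    # hypothetical tiny inputs
labels = np.eye(3)[rng.integers(0, 3, size=8)]  # hypothetical one-hot labels
theta = rng.normal(scale=0.1, size=(5, 3))      # small weights keep the clip inactive

max_diff = np.abs(analytic_grad(images, labels, theta)
                  - numeric_grad(images, labels, theta)).max()
print(f"max abs difference: {max_diff:.2e}")
```

The sketch divides by the actual number of rows, whereas the training loop divides by the fixed `batch_size` of 512. The last batch of each epoch holds only 96 samples (`60000 - 117 * 512`), so its update is scaled down accordingly.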

Running the training script produces the following output:

    E:\anaconda\python.exe E:/dustartlearnproject/minstLearn/minst.py
    Set learning rate to 0.00100
    Iter 1. 0 / 10, Loss: 24.275, Learning Rate: 0.00100
    Iter 101. 0 / 10, Loss: 16.112, Learning Rate: 0.00100
    Val set, Accuracy: 0.0967, Loss: 15.854472258208313
    Set learning rate to 0.00750
    Iter 119. 1 / 10, Loss: 16.177, Learning Rate: 0.00750
    Iter 219. 1 / 10, Loss: 10.643, Learning Rate: 0.00750
    Val set, Accuracy: 0.1025, Loss: 10.272491602919825
    Set learning rate to 0.02508
    Iter 237. 2 / 10, Loss: 9.819, Learning Rate: 0.02508
    Iter 337. 2 / 10, Loss: 7.824, Learning Rate: 0.02508
    Val set, Accuracy: 0.2056, Loss: 7.8075571751523665
    Set learning rate to 0.10000
    Iter 355. 3 / 10, Loss: 7.838, Learning Rate: 0.10000
    Iter 455. 3 / 10, Loss: 3.850, Learning Rate: 0.10000
    Val set, Accuracy: 0.5677, Loss: 3.6745011642839085
    Set learning rate to 0.07503
    Iter 473. 4 / 10, Loss: 3.915, Learning Rate: 0.07503
    Iter 573. 4 / 10, Loss: 3.122, Learning Rate: 0.07503
    Val set, Accuracy: 0.658, Loss: 2.8318372736284445
    Set learning rate to 0.02508
    Iter 591. 5 / 10, Loss: 3.012, Learning Rate: 0.02508
    Iter 691. 5 / 10, Loss: 2.516, Learning Rate: 0.02508
    Val set, Accuracy: 0.6794, Loss: 2.663056994692587
    Set learning rate to 0.10000
    Iter 709. 6 / 10, Loss: 2.822, Learning Rate: 0.10000
    Iter 809. 6 / 10, Loss: 2.314, Learning Rate: 0.10000
    Val set, Accuracy: 0.7327, Loss: 2.230728843855682
    Set learning rate to 0.07503
    Iter 827. 7 / 10, Loss: 2.326, Learning Rate: 0.07503
    Iter 927. 7 / 10, Loss: 2.068, Learning Rate: 0.07503
    Val set, Accuracy: 0.7574, Loss: 2.0282295027093924
    Set learning rate to 0.02508
    Iter 945. 8 / 10, Loss: 2.028, Learning Rate: 0.02508
    Iter 1045. 8 / 10, Loss: 2.148, Learning Rate: 0.02508
    Val set, Accuracy: 0.7653, Loss: 1.9720897257607903
    Set learning rate to 0.10000
    Iter 1063. 9 / 10, Loss: 2.035, Learning Rate: 0.10000
    Iter 1163. 9 / 10, Loss: 1.891, Learning Rate: 0.10000
    Val set, Accuracy: 0.7832, Loss: 1.8004302841913162

    Process finished with exit code 0
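The `Set learning rate to ...` lines in this log follow directly from the schedule: a cosine curve with a period of 3 epochs, multiplied by a warm-up factor of 0.01 at epoch 0, 0.1 at epoch 1, and 1 from epoch 2 onward. The sketch below recomputes the ten per-epoch values without any training. `learning_rates` is a hypothetical helper written for this illustration, and `epoch % period` stands in for the `cosine_itepoch` counter of the script.

```python
import numpy as np


def lr_schedule_cosine(lr_min, lr_max, per_epochs):
    def compute(epoch):
        return lr_min + 0.5 * (lr_max - lr_min) * (1 + np.cos(epoch / per_epochs * np.pi))
    return compute


def learning_rates(epochs, lr_min=1e-4, lr_max=1e-1, period=3, warm_up=None, alpha=1e-2):
    # Hypothetical helper: per-epoch learning rate = cosine value * warm-up factor
    warm_up = {1: 1e-1, 2: 1} if warm_up is None else warm_up
    cosine = lr_schedule_cosine(lr_min, lr_max, period)
    rates = []
    for epoch in range(epochs):
        # The warm-up factor changes only at the epochs listed in the schedule
        alpha = warm_up.get(epoch, alpha)
        # epoch % period restarts the cosine curve every `period` epochs
        rates.append(cosine(epoch % period) * alpha)
    return rates


rates = learning_rates(10)
for epoch, lr in enumerate(rates):
    print(f"epoch {epoch}: lr = {lr:.5f}")
```

From epoch 3 onward the warm-up factor stays at 1, so the values simply cycle through roughly 0.1, 0.075, and 0.025, which agrees with the log.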

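Training runs essentially without problems. However, if the code is changed so that `cross_entropy` no longer clips the predictions, the output becomes: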

    E:\anaconda\python.exe E:/dustartlearnproject/minstLearn/minst.py
    Set learning rate to 0.00100
    Iter 1. 0 / 10, Loss: nan, Learning Rate: 0.00100
    E:\dustartlearnproject\minstLearn\minst.py:168: RuntimeWarning: divide by zero encountered in log
      return -np.sum(gt * np.log(predict) + (1 - gt) * np.log(1 - predict)) / batch_size
    E:\dustartlearnproject\minstLearn\minst.py:168: RuntimeWarning: invalid value encountered in multiply
      return -np.sum(gt * np.log(predict) + (1 - gt) * np.log(1 - predict)) / batch_size
    Iter 101. 0 / 10, Loss: inf, Learning Rate: 0.00100
    Val set, Accuracy: 0.0895, Loss: 48.24693390577862
    Set learning rate to 0.00750
    Iter 119. 1 / 10, Loss: 49.018, Learning Rate: 0.00750
    Iter 219. 1 / 10, Loss: 12.323, Learning Rate: 0.00750
    Val set, Accuracy: 0.1176, Loss: 12.242563352955944
    Set learning rate to 0.02508
    Iter 237. 2 / 10, Loss: 11.778, Learning Rate: 0.02508
    Iter 337. 2 / 10, Loss: 9.340, Learning Rate: 0.02508
    Val set, Accuracy: 0.2352, Loss: 8.752362999505012
    Set learning rate to 0.10000
    Iter 355. 3 / 10, Loss: 8.544, Learning Rate: 0.10000
    Iter 455. 3 / 10, Loss: 4.175, Learning Rate: 0.10000
    Val set, Accuracy: 0.5513, Loss: 3.981317252906817
    Set learning rate to 0.07503
    Iter 473. 4 / 10, Loss: 4.012, Learning Rate: 0.07503
    Iter 573. 4 / 10, Loss: 3.343, Learning Rate: 0.07503
    Val set, Accuracy: 0.648, Loss: 3.0855568357946415
    Set learning rate to 0.02508
    Iter 591. 5 / 10, Loss: 3.164, Learning Rate: 0.02508
    Iter 691. 5 / 10, Loss: 3.096, Learning Rate: 0.02508
    Val set, Accuracy: 0.6668, Loss: 2.901230304990213
    Set learning rate to 0.10000
    Iter 709. 6 / 10, Loss: 2.972, Learning Rate: 0.10000
    Iter 809. 6 / 10, Loss: 2.644, Learning Rate: 0.10000
    Val set, Accuracy: 0.7176, Loss: 2.42363543726263
    Set learning rate to 0.07503
    Iter 827. 7 / 10, Loss: 2.563, Learning Rate: 0.07503
    Iter 927. 7 / 10, Loss: 2.370, Learning Rate: 0.07503
    Val set, Accuracy: 0.7415, Loss: 2.19418632803101
    Set learning rate to 0.02508
    Iter 945. 8 / 10, Loss: 2.054, Learning Rate: 0.02508
    Iter 1045. 8 / 10, Loss: 2.033, Learning Rate: 0.02508
    Val set, Accuracy: 0.7482, Loss: 2.1308324327122503
    Set learning rate to 0.10000
    Iter 1063. 9 / 10, Loss: 2.322, Learning Rate: 0.10000
    Iter 1163. 9 / 10, Loss: 1.971, Learning Rate: 0.10000
    Val set, Accuracy: 0.7704, Loss: 1.940373967677279

    Process finished with exit code 0

It did not appear this time, although it has in fact appeared before.
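The `nan` and `inf` losses in the unclipped log can be reproduced in isolation. In float64, `sigmoid(x)` rounds to exactly `1.0` once `x` is large enough (around 37 and above), so `np.log(1 - predict)` becomes `-inf`. If the label for that entry is 0 the loss becomes `inf`; if the label is 1, the term `0 * -inf` evaluates to `nan`. The sketch below is a minimal demonstration under assumed inputs: the logit value `40.0`, the two label rows, and the optional `eps` argument are illustrative choices, not taken from the original script.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def cross_entropy(predict, gt, eps=None):
    # eps=None skips the clipping, mimicking the modified script
    if eps is not None:
        predict = np.clip(predict, eps, 1 - eps)
    batch_size = predict.shape[0]
    # Silence the RuntimeWarnings here so only the resulting values are shown
    with np.errstate(divide="ignore", invalid="ignore"):
        return -np.sum(gt * np.log(predict) + (1 - gt) * np.log(1 - predict)) / batch_size


logits = np.array([[40.0, -2.0]])   # the first logit saturates the sigmoid
predict = sigmoid(logits)           # predict[0, 0] is exactly 1.0 in float64

gt_hit = np.array([[1.0, 0.0]])     # saturated output is the correct class
gt_miss = np.array([[0.0, 1.0]])    # saturated output is the wrong class

loss_hit_raw = cross_entropy(predict, gt_hit)      # contains 0 * log(0)
loss_miss_raw = cross_entropy(predict, gt_miss)    # contains 1 * log(0)
loss_hit_clipped = cross_entropy(predict, gt_hit, eps=1e-4)
loss_miss_clipped = cross_entropy(predict, gt_miss, eps=1e-4)

print("without clipping:", loss_hit_raw, loss_miss_raw)
print("with clipping:   ", loss_hit_clipped, loss_miss_clipped)
```

With `theta` drawn from an unseeded `np.random.normal`, whether any logit in a given batch is large enough to saturate differs from run to run.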
