Kubernetes (k8s) v1.12.3: Installing Harbor with Helm

1, Helm Overview

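- Core terms:

- Chart: a Helm package

- Repository: a chart repository, i.e. an HTTP/HTTPS server that serves charts

- Release: an instance of a particular chart deployed on the target cluster

- Chart + Config -> Release

- Architecture:

- Helm: the client. It manages local chart repositories and charts, talks to the Tiller server, sends charts to it, and performs operations such as install, query, and uninstall

- Tiller: the server side. It receives the charts and config sent by helm and merges them into a Release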

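2, Installing Helm

- Helm consists of two components:

- HelmClient: the client, which manages objects such as Repositories, Charts, and Releases.

- TillerServer: mediates between client commands and the k8s cluster, generating and managing k8s resource objects according to the chart definition.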

2.1 Install the Helm client

- Helm can be installed from a prebuilt binary or with the install script.

- Download the latest binary release from: https://github.com/helm/helm/releases

- This article uses helm-v2.11.0:

wget https://storage.googleapis.com/kubernetes-helm/helm-v2.11.0-linux-amd64.tar.gz

- Extract the archive

tar zxf helm-v2.11.0-linux-amd64.tar.gz

- Copy the binaries to /usr/local/bin

cp linux-amd64/helm linux-amd64/tiller /usr/local/bin/
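The client installation steps above can be scripted so the version lives in one place. A minimal sketch, assuming a Linux amd64 host and the same storage.googleapis.com download location used by the wget command; the function names are hypothetical, and setting `DRY_RUN=1` only prints the commands.

```bash
#!/usr/bin/env bash
# Sketch: install the Helm v2 client (plus the bundled tiller binary) from the release tarball.
set -euo pipefail

# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Tarball URL for a version/platform, same pattern as the wget command above.
helm_tarball_url() {
  local version="$1" platform="${2:-linux-amd64}"
  printf 'https://storage.googleapis.com/kubernetes-helm/helm-v%s-%s.tar.gz\n' "$version" "$platform"
}

install_helm_client() {
  local version="$1" platform="${2:-linux-amd64}"
  local tarball="helm-v${version}-${platform}.tar.gz"
  run wget -q -O "$tarball" "$(helm_tarball_url "$version" "$platform")"
  run tar zxf "$tarball"
  # The archive unpacks into a directory named after the platform.
  run cp "${platform}/helm" "${platform}/tiller" /usr/local/bin/
}

# Usage: install_helm_client 2.11.0
```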

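2.2 Install the Tiller server

- Install the dependency socat (Helm v2 reaches Tiller through a port-forwarded tunnel, which needs socat on the nodes)

yum -y install socat

- On all nodes, pull the tiller:v[helm-version] image, where helm-version is the Helm version installed above (2.11.0)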

docker pull xiaoqshuo/tiller:v2.11.0

- Install Tiller with helm init

[root@k8s-master01 ~]# helm init --tiller-image xiaoqshuo/tiller:v2.11.0

Creating /root/.helm

Creating /root/.helm/repository

Creating /root/.helm/repository/cache

Creating /root/.helm/repository/local

Creating /root/.helm/plugins

Creating /root/.helm/starters

Creating /root/.helm/cache/archive

Creating /root/.helm/repository/repositories.yaml

Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com

Adding local repo with URL: http://127.0.0.1:8879/charts

$HELM_HOME has been configured at /root/.helm.

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.

To prevent this, run `helm init` with the --tiller-tls-verify flag.

For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation

Happy Helming!

2.3 Check the Helm version and the Tiller pod status

[root@k8s-master01 opt]# helm version

Client: &version.Version{SemVer:"v2.11.0", GitCommit:"2e55dbe1fdb5fdb96b75ff144a339489417b146b", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.11.0", GitCommit:"2e55dbe1fdb5fdb96b75ff144a339489417b146b", GitTreeState:"clean"}

[root@k8s-master01 opt]# kubectl get pod -n kube-system | grep tiller

tiller-deploy-84f64bdb87-w69rw 1/1 Running 0 88s

[root@k8s-master01 opt]# kubectl get pod,svc -n kube-system | grep tiller

pod/tiller-deploy-84f64bdb87-w69rw 1/1 Running 0 94s

service/tiller-deploy ClusterIP 10.108.21.50 <none> 44134/TCP 95s

2.4 Error: helm list is forbidden

# helm list

Error: configmaps is forbidden: User "system:serviceaccount:kube-system:default" cannot list resource "configmaps" in API group "" in the namespace "kube-system"

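- Run the following commands to create the tiller service account, grant it cluster-admin permissions, and switch the tiller-deploy Deployment to it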

kubectl create serviceaccount --namespace kube-system tiller

kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'
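Creating the service account before Tiller is deployed makes the patch step unnecessary, because Helm v2's `helm init` accepts a `--service-account` flag. A minimal sketch of that ordering, reusing the names and image from this article; the function name is hypothetical, and `DRY_RUN=1` prints the commands instead of executing them.

```bash
#!/usr/bin/env bash
# Sketch: create the tiller ServiceAccount and ClusterRoleBinding first,
# then let helm init deploy Tiller already bound to that account.
set -euo pipefail

# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

init_tiller_with_rbac() {
  local image="${1:-xiaoqshuo/tiller:v2.11.0}"
  run kubectl create serviceaccount --namespace kube-system tiller
  run kubectl create clusterrolebinding tiller-cluster-rule \
    --clusterrole=cluster-admin --serviceaccount=kube-system:tiller
  run helm init --service-account tiller --tiller-image "$image"
  # Block until the tiller-deploy Deployment has finished rolling out.
  run kubectl --namespace kube-system rollout status deployment/tiller-deploy
}

# Usage: init_tiller_with_rbac
```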

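2.5 Deploying Tiller offline

- Pull the Tiller image and push it to the private registry

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.11.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.11.0 registry.k8s.com:8888/tiller:v2.11.0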

docker push registry.k8s.com:8888/tiller:v2.11.0
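The pull/tag/push sequence above generalizes to any image that has to be copied into the private registry. A sketch, assuming `registry.k8s.com:8888` is already trusted by Docker (via TLS or `insecure-registries`); `mirror_image` is a hypothetical helper that keeps only the image's final name:tag component.

```bash
#!/usr/bin/env bash
# Sketch: copy an image from a reachable mirror into a private registry.
set -euo pipefail

# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# mirror_image SOURCE_IMAGE TARGET_REGISTRY
mirror_image() {
  local src="$1" registry="$2"
  # Strip everything up to the last "/" so only name:tag is kept.
  local dst="${registry}/${src##*/}"
  run docker pull "$src"
  run docker tag "$src" "$dst"
  run docker push "$dst"
}

# Usage:
# mirror_image registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.11.0 registry.k8s.com:8888
```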

- Create the RBAC objects defined in tiller-rbac.yaml (apply it with kubectl apply -f tiller-rbac.yaml)

$ cat tiller-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system

- Initialize Helm and start (or upgrade) Tiller

helm init --upgrade --service-account tiller --tiller-image registry.k8s.com:8888/tiller:v2.11.0 --stable-repo-url http://192.168.1.128/charts

- Note on the command above:

--stable-repo-url http://192.168.1.128/charts points to a plain HTTP server I set up with nginx. It serves a single index.yaml file, which can be downloaded from https://kubernetes-charts.storage.googleapis.com/index.yaml or https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts/index.yaml
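The nginx-hosted repository in the note above only needs an index.yaml. A sketch of fetching it into the web root, where `/usr/share/nginx/html/charts` is an assumed nginx path and the function name is hypothetical. The chart URLs inside index.yaml are not rewritten, so actually downloading a chart still depends on wherever those URLs point.

```bash
#!/usr/bin/env bash
# Sketch: publish a copy of a chart repository index for use with --stable-repo-url.
set -euo pipefail

# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# mirror_chart_index DOCROOT [INDEX_URL]
# DOCROOT is the directory nginx serves as /charts (assumed path).
mirror_chart_index() {
  local docroot="$1"
  local url="${2:-https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts/index.yaml}"
  run mkdir -p "$docroot"
  run curl -fsSL -o "${docroot}/index.yaml" "$url"
}

# Usage: mirror_chart_index /usr/share/nginx/html/charts
```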

3, Harbor

3.1 Install Harbor

3.1.1 Switch the Helm repository

- List the current Helm repositories

[root@k8s-master01 ~]# helm repo list

NAME URL

stable https://kubernetes-charts.storage.googleapis.com

local http://127.0.0.1:8879/charts

- Remove the default stable repository and the local repository

[root@k8s-master01 ~]# helm repo remove stable

"stable" has been removed from your repositories

[root@k8s-master01 ~]# helm repo remove local

"local" has been removed from your repositories

- Add the Aliyun repository

[root@k8s-master01 ~]# helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

"aliyun" has been added to your repositories

[root@k8s-master01 ~]# helm repo list

NAME URL

aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
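The repository switch above can be kept as one script, with `helm repo update` added to refresh the cached index. A minimal sketch; the removals are allowed to fail so the script can be rerun, and `use_aliyun_repo` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: replace the default Helm repositories with the Aliyun mirror.
set -euo pipefail

# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

use_aliyun_repo() {
  # Tolerate "no repo named ..." errors on a second run.
  run helm repo remove stable || true
  run helm repo remove local || true
  run helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
  # Download the new repository's index into the local cache.
  run helm repo update
}

# Usage: use_aliyun_repo
```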

3.1.2 Download the Harbor chart

- Clone the harbor-helm repository and check out the 0.3.0 branch

git clone https://github.com/goharbor/harbor-helm.git

cd harbor-helm

git checkout 0.3.0

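3.1.3 Edit requirements.yaml

- Point the redis dependency at the Aliyun repository and use a redis chart version that this repository actually provides (1.1.15 here)

[root@k8s-master01 harbor-helm]# cat requirements.yaml

dependencies:
- name: redis
  version: 1.1.15
  repository: https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
  # repository: https://kubernetes-charts.storage.googleapis.com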
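If several checkouts need the same change, the two fields can be rewritten with sed. A sketch assuming GNU sed and a requirements.yaml whose only dependency is redis; the function name is hypothetical, and a commented-out `# repository:` line is left untouched because it does not start with whitespace followed by `repository:`.

```bash
#!/usr/bin/env bash
# Sketch: point the redis dependency at a mirror and pin a version the mirror carries.
set -euo pipefail

# pin_redis_dependency FILE REPO_URL VERSION
pin_redis_dependency() {
  local file="$1" repo="$2" version="$3"
  # Rewrites every "version:" and "repository:" line, so it is only safe
  # while redis is the single entry under "dependencies:".
  sed -i \
    -e "s#^\([[:space:]]*version:\).*#\1 ${version}#" \
    -e "s#^\([[:space:]]*repository:\).*#\1 ${repo}#" \
    "$file"
}

# Usage (in the harbor-helm checkout):
# pin_redis_dependency requirements.yaml https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts 1.1.15
```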

3.1.4 Download the dependencies

[root@k8s-master01 harbor-helm]# helm dependency update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "aliyun" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 1 charts

Downloading redis from repo https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

Deleting outdated charts

3.1.5 Pull the required images on all nodes

docker pull goharbor/chartmuseum-photon:v0.7.1-v1.6.0

docker pull goharbor/harbor-adminserver:v1.6.0

docker pull goharbor/harbor-jobservice:v1.6.0

docker pull goharbor/harbor-ui:v1.6.0

docker pull goharbor/harbor-db:v1.6.0

docker pull goharbor/registry-photon:v2.6.2-v1.6.0


docker pull goharbor/clair-photon:v2.0.5-v1.6.0

docker pull goharbor/notary-server-photon:v0.5.1-v1.6.0

docker pull goharbor/notary-signer-photon:v0.5.1-v1.6.0

docker pull bitnami/redis:4.0.8-r2
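The same image list can be pulled in a loop, with the chartmuseum image listed once. A sketch; `pull_on_nodes` assumes passwordless ssh from the master to every node, and the node names in the usage comment are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: pull every image used by the harbor-helm deployment in this article.
set -euo pipefail

# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

HARBOR_IMAGES=(
  goharbor/chartmuseum-photon:v0.7.1-v1.6.0
  goharbor/harbor-adminserver:v1.6.0
  goharbor/harbor-jobservice:v1.6.0
  goharbor/harbor-ui:v1.6.0
  goharbor/harbor-db:v1.6.0
  goharbor/registry-photon:v2.6.2-v1.6.0
  goharbor/clair-photon:v2.0.5-v1.6.0
  goharbor/notary-server-photon:v0.5.1-v1.6.0
  goharbor/notary-signer-photon:v0.5.1-v1.6.0
  bitnami/redis:4.0.8-r2
)

# Pull on the local machine.
pull_all() {
  local image
  for image in "${HARBOR_IMAGES[@]}"; do
    run docker pull "$image"
  done
}

# Pull on each node given as an argument, over ssh.
pull_on_nodes() {
  local node image
  for node in "$@"; do
    for image in "${HARBOR_IMAGES[@]}"; do
      run ssh "$node" docker pull "$image"
    done
  done
}

# Usage: pull_all; pull_on_nodes k8s-node01 k8s-node02
```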

3.1.6 Edit values.yaml

- Change every storageClass in values.yaml to storageClass: "gluster-heketi"

sed -i 's@# storageClass: "-"@storageClass: "gluster-heketi"@g' values.yaml

volumes:
  data:
    storageClass: "gluster-heketi"
    accessMode: ReadWriteOnce
    size: 1Gi
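The storageClass substitution above can be wrapped in a function that also prints every storageClass line afterwards, which makes a missed entry easy to spot. A sketch assuming GNU sed; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: set every commented-out storageClass in values.yaml to one StorageClass.
set -euo pipefail

# set_storage_class FILE CLASS
set_storage_class() {
  local file="$1" class="$2"
  # Same substitution as the sed command above, parameterised on the class name.
  # Leading whitespace is untouched, so the YAML indentation is preserved.
  sed -i "s@# storageClass: \"-\"@storageClass: \"${class}\"@g" "$file"
  # List what is set now; "|| true" keeps a file without matches from aborting.
  grep -n 'storageClass' "$file" || true
}

# Usage: set_storage_class values.yaml gluster-heketi
```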

- Adjust the default redis settings in values.yaml: disable the password and add a port under master

redis:
  # if external Redis is used, set "external.enabled" to "true"
  # and fill the connection informations in "external" section.
  # or the internal Redis will be used
  usePassword: false
  password: "changeit"
  cluster:
    enabled: false
  master:
    port: "6379"
  persistence:
    enabled: *persistence_enabled
    storageClass: "gluster-heketi"
    accessMode: ReadWriteOnce
    size: 1Gi

- In the charts/ directory, extract redis-1.1.15.tgz (tar zxf redis-1.1.15.tgz), then change the Service name in redis/templates/svc.yaml to name: {{ template "redis.fullname" . }}-master

sed -i 's#name: {{ template "redis.fullname" . }}#name: {{ template "redis.fullname" . }}-master#g' redis/templates/svc.yaml

[root@k8s-master01 charts]# more !$

more redis/templates/svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: {{ template "redis.fullname" . }}-master
  labels:
    app: {{ template "redis.fullname" . }}
    chart: "{{ .Chart.Name }}-{{ .Chart.Version }}"
    release: "{{ .Release.Name }}"
    heritage: "{{ .Release.Service }}"
  annotations:
{{- if .Values.service.annotations }}
{{ toYaml .Values.service.annotations | indent 4 }}
{{- end }}
{{- if .Values.metrics.enabled }}
{{ toYaml .Values.metrics.annotations | indent 4 }}
{{- end }}
spec:
  type: {{ .Values.serviceType }}
  {{ if eq .Values.serviceType "LoadBalancer" -}} {{ if .Values.service.loadBalancerIP -}}
  loadBalancerIP: {{ .Values.service.loadBalancerIP }}
  {{ end -}}
  {{- end -}}
  ports:
  - name: redis
    port: 6379
    targetPort: redis
  {{- if .Values.metrics.enabled }}
  - name: metrics
    port: 9121
    targetPort: metrics
  {{- end }}
  selector:
    app: {{ template "redis.fullname" . }}

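- Delete the original .tgz archive and repack the chart. Do not leave a renamed copy such as redis-1.1.15.tgz.bak in charts/, because Helm will try to load it as a subchart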

[root@k8s-master01 charts]# tar zcf redis-1.1.15.tgz redis/

- Also adjust the storage sizes where needed, for example for the registry.
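The redis chart changes (extract, rename the Service, repack, leave nothing else in charts/) can be done in one function. A sketch assuming GNU sed and GNU tar; the function name is hypothetical. Anchoring the sed on the end of the line keeps a second run from appending `-master` twice, and deleting the extracted directory is an assumption meant to keep Helm from seeing the redis chart twice.

```bash
#!/usr/bin/env bash
# Sketch: rename the redis Service to <fullname>-master inside the vendored
# chart archive and repack it under the same file name.
set -euo pipefail

# patch_redis_chart CHARTS_DIR [ARCHIVE]
patch_redis_chart() {
  local charts_dir="$1" archive="${2:-redis-1.1.15.tgz}"
  (
    cd "$charts_dir"
    tar zxf "$archive"
    # "$" anchors on end of line, so a name that already ends in "-master" is left alone.
    sed -i 's#name: {{ template "redis.fullname" . }}$#name: {{ template "redis.fullname" . }}-master#' \
      redis/templates/svc.yaml
    # Replace the archive in place: no .bak copy, no extracted directory left behind.
    rm -f "$archive"
    tar zcf "$archive" redis/
    rm -rf redis/
  )
}

# Usage (from the harbor-helm checkout): patch_redis_chart charts
```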

3.1.7 Install Harbor

helm install --name harbor-v1 . --wait --timeout 1500 --debug --namespace harbor

[root@k8s-master01 harbor-helm]# helm install --name harbor-v1 . --wait --timeout 1500 --debug --namespace harbor

[debug] Created tunnel using local port: '42156'

[debug] SERVER: "127.0.0.1:42156"

[debug] Original chart version: ""

[debug] CHART PATH: /opt/k8s-cluster/harbor-helm

Error: error unpacking redis-1.1.15.tgz.bak in harbor: chart metadata (Chart.yaml) missing

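- The error above is caused by the leftover backup file charts/redis-1.1.15.tgz.bak: Helm tried to unpack it as a subchart, so remove it from charts/ before installing.

- If you get a forbidden error, create the tiller service account and grant it permissions (same fix as in 2.4):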

kubectl create serviceaccount --namespace kube-system tiller

kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'

[root@k8s-master01 harbor-helm]# kubectl create serviceaccount --namespace kube-system tiller

serviceaccount/tiller created

[root@k8s-master01 harbor-helm]# kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

clusterrolebinding.rbac.authorization.k8s.io/tiller-cluster-rule created

[root@k8s-master01 harbor-helm]# kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'

deployment.extensions/tiller-deploy patched

- Deploy again

[root@k8s-master01 harbor-helm]# helm install --name harbor-v1 . --wait --timeout 1500 --debug --namespace harbor

[debug] Created tunnel using local port: '45170'

[debug] SERVER: "127.0.0.1:45170"

[debug] Original chart version: ""

[debug] CHART PATH: /opt/k8s-cluster/harbor-helm

...

(rendered configuration omitted)

...

LAST DEPLOYED: Mon Dec 17 15:55:15 2018

NAMESPACE: harbor

STATUS: DEPLOYED

RESOURCES:

==> v1beta1/Ingress

NAME AGE

harbor-v1-harbor-ingress 1m

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

harbor-v1-redis-b46754c6-bqqpg 1/1 Running 0 1m

harbor-v1-harbor-adminserver-55d6846ccd-hcsw2 1/1 Running 0 1m

harbor-v1-harbor-chartmuseum-86766b666f-84h5z 1/1 Running 0 1m

harbor-v1-harbor-clair-558485cdff-nv8pl 1/1 Running 0 1m

harbor-v1-harbor-jobservice-667fd5c856-4kkgl 1/1 Running 0 1m

harbor-v1-harbor-notary-server-74f7c7c78d-qpbxd 1/1 Running 0 1m

harbor-v1-harbor-notary-signer-58d56f6f85-b5p46 1/1 Running 0 1m

harbor-v1-harbor-registry-5dfb58f55-7k9kc 1/1 Running 0 1m

harbor-v1-harbor-ui-6644789c84-tmmdp 1/1 Running 1 1m

harbor-v1-harbor-database-0 1/1 Running 0 1m

==> v1/Secret

NAME AGE

harbor-v1-harbor-adminserver 1m

harbor-v1-harbor-chartmuseum 1m

harbor-v1-harbor-database 1m

harbor-v1-harbor-ingress 1m

harbor-v1-harbor-jobservice 1m

harbor-v1-harbor-registry 1m

harbor-v1-harbor-ui 1m

==> v1/ConfigMap

harbor-v1-harbor-adminserver 1m

harbor-v1-harbor-chartmuseum 1m

harbor-v1-harbor-clair 1m

harbor-v1-harbor-jobservice 1m

harbor-v1-harbor-notary 1m

harbor-v1-harbor-registry 1m

harbor-v1-harbor-ui 1m

==> v1/PersistentVolumeClaim

harbor-v1-redis 1m

harbor-v1-harbor-chartmuseum 1m

harbor-v1-harbor-registry 1m

==> v1/Service

harbor-v1-redis-master 1m

harbor-v1-harbor-adminserver 1m

harbor-v1-harbor-chartmuseum 1m

harbor-v1-harbor-clair 1m

harbor-v1-harbor-database 1m

harbor-v1-harbor-jobservice 1m

harbor-v1-harbor-notary-server 1m

harbor-v1-harbor-notary-signer 1m

harbor-v1-harbor-registry 1m

harbor-v1-harbor-ui 1m

==> v1beta1/Deployment

harbor-v1-redis 1m

harbor-v1-harbor-adminserver 1m

harbor-v1-harbor-chartmuseum 1m

harbor-v1-harbor-clair 1m

harbor-v1-harbor-jobservice 1m

harbor-v1-harbor-notary-server 1m

harbor-v1-harbor-notary-signer 1m

harbor-v1-harbor-registry 1m

harbor-v1-harbor-ui 1m

==> v1beta2/StatefulSet

harbor-v1-harbor-database 1m

NOTES:

Please wait for several minutes for Harbor deployment to complete.

Then you should be able to visit the UI portal at https://core.harbor.domain.

For more details, please visit https://github.com/goharbor/harbor.

- Check the pods

[root@k8s-master01 harbor-helm]# kubectl get pod -n harbor | grep harbor

harbor-v1-harbor-adminserver-55d6846ccd-hcsw2 1/1 Running 6 8m36s

harbor-v1-harbor-chartmuseum-86766b666f-84h5z 1/1 Running 0 8m36s

harbor-v1-harbor-clair-558485cdff-nv8pl 1/1 Running 5 8m36s

harbor-v1-harbor-database-0 1/1 Running 0 8m34s

harbor-v1-harbor-jobservice-667fd5c856-4kkgl 1/1 Running 3 8m36s

harbor-v1-harbor-notary-server-74f7c7c78d-qpbxd 1/1 Running 4 8m36s

harbor-v1-harbor-notary-signer-58d56f6f85-b5p46 1/1 Running 4 8m35s

harbor-v1-harbor-registry-5dfb58f55-7k9kc 1/1 Running 0 8m35s

harbor-v1-harbor-ui-6644789c84-tmmdp 1/1 Running 5 8m35s

harbor-v1-redis-b46754c6-bqqpg 1/1 Running 0 8m36s

- Check the services

[root@k8s-master01 harbor-helm]# kubectl get svc -n harbor

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

glusterfs-dynamic-database-data-harbor-v1-harbor-database-0 ClusterIP 10.96.254.80 <none> 1/TCP 119s

glusterfs-dynamic-harbor-v1-harbor-chartmuseum ClusterIP 10.107.60.205 <none> 1/TCP 2m6s

glusterfs-dynamic-harbor-v1-harbor-registry ClusterIP 10.106.114.23 <none> 1/TCP 2m36s

glusterfs-dynamic-harbor-v1-redis ClusterIP 10.97.112.255 <none> 1/TCP 2m29s

harbor-v1-harbor-adminserver ClusterIP 10.109.165.178 <none> 80/TCP 3m9s

harbor-v1-harbor-chartmuseum ClusterIP 10.111.121.23 <none> 80/TCP 3m9s

harbor-v1-harbor-clair ClusterIP 10.108.133.202 <none> 6060/TCP 3m8s

harbor-v1-harbor-database ClusterIP 10.104.27.211 <none> 5432/TCP 3m8s

harbor-v1-harbor-jobservice ClusterIP 10.102.60.45 <none> 80/TCP 3m7s

harbor-v1-harbor-notary-server ClusterIP 10.107.43.156 <none> 4443/TCP 3m7s

harbor-v1-harbor-notary-signer ClusterIP 10.98.180.61 <none> 7899/TCP 3m7s

harbor-v1-harbor-registry ClusterIP 10.104.125.52 <none> 5000/TCP 3m6s

harbor-v1-harbor-ui ClusterIP 10.101.63.66 <none> 80/TCP 3m6s

harbor-v1-redis-master ClusterIP 10.106.63.183 <none> 6379/TCP 3m9s

- Check the PVs and PVCs

[root@k8s-master01 harbor-helm]# kubectl get pv,pvc -n harbor | grep harbor

persistentvolume/pvc-18da32d1-01d1-11e9-b859-000c2927a0d0 8Gi RWO Delete Bound harbor/harbor-v1-redis gluster-heketi 3m46s

persistentvolume/pvc-18e270d6-01d1-11e9-b859-000c2927a0d0 5Gi RWO Delete Bound harbor/harbor-v1-harbor-chartmuseum gluster-heketi 3m23s

persistentvolume/pvc-18e6b03e-01d1-11e9-b859-000c2927a0d0 5Gi RWO Delete Bound harbor/harbor-v1-harbor-registry gluster-heketi 3m53s

persistentvolume/pvc-1d02d407-01d1-11e9-b859-000c2927a0d0 1Gi RWO Delete Bound harbor/database-data-harbor-v1-harbor-database-0 gluster-heketi 3m16s

persistentvolumeclaim/database-data-harbor-v1-harbor-database-0 Bound pvc-1d02d407-01d1-11e9-b859-000c2927a0d0 1Gi RWO gluster-heketi 4m20s

persistentvolumeclaim/harbor-v1-harbor-chartmuseum Bound pvc-18e270d6-01d1-11e9-b859-000c2927a0d0 5Gi RWO gluster-heketi 4m27s

persistentvolumeclaim/harbor-v1-harbor-registry Bound pvc-18e6b03e-01d1-11e9-b859-000c2927a0d0 5Gi RWO gluster-heketi 4m27s

persistentvolumeclaim/harbor-v1-redis Bound pvc-18da32d1-01d1-11e9-b859-000c2927a0d0 8Gi RWO gluster-heketi 4m27s

- Check the ingress

[root@k8s-master01 harbor-helm]# kubectl get ingress -n harbor

NAME HOSTS ADDRESS PORTS AGE

harbor-v1-harbor-ingress core.harbor.domain,notary.harbor.domain 80, 443 3m27s

- You can also set the domain at install time: --set externalURL=xxx.com

- Uninstall: helm del --purge harbor-v1
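Install and uninstall can be paired in a script run from the harbor-helm checkout. A sketch; `https://harbor.example.com` is a placeholder, and `externalURL` may not be the only value tied to the domain (check values.yaml for the ingress host names). The extra PVC deletion reflects that `database-data-harbor-v1-harbor-database-0` comes from the database StatefulSet's volume claim template and is absent from the release's resource list, so `helm del --purge` leaves it behind.

```bash
#!/usr/bin/env bash
# Sketch: install Harbor with a custom externalURL, and uninstall it
# together with the database PVC that Helm does not manage.
set -euo pipefail

# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

RELEASE="harbor-v1"
NAMESPACE="harbor"

install_harbor() {
  local external_url="$1"
  run helm install --name "$RELEASE" . --namespace "$NAMESPACE" \
    --set externalURL="$external_url" --wait --timeout 1500
}

uninstall_harbor() {
  run helm del --purge "$RELEASE"
  # Created from the StatefulSet's volumeClaimTemplates; deleting it discards the database.
  run kubectl delete pvc --namespace "$NAMESPACE" "database-data-${RELEASE}-harbor-database-0"
}

# Usage: install_harbor https://harbor.example.com
```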

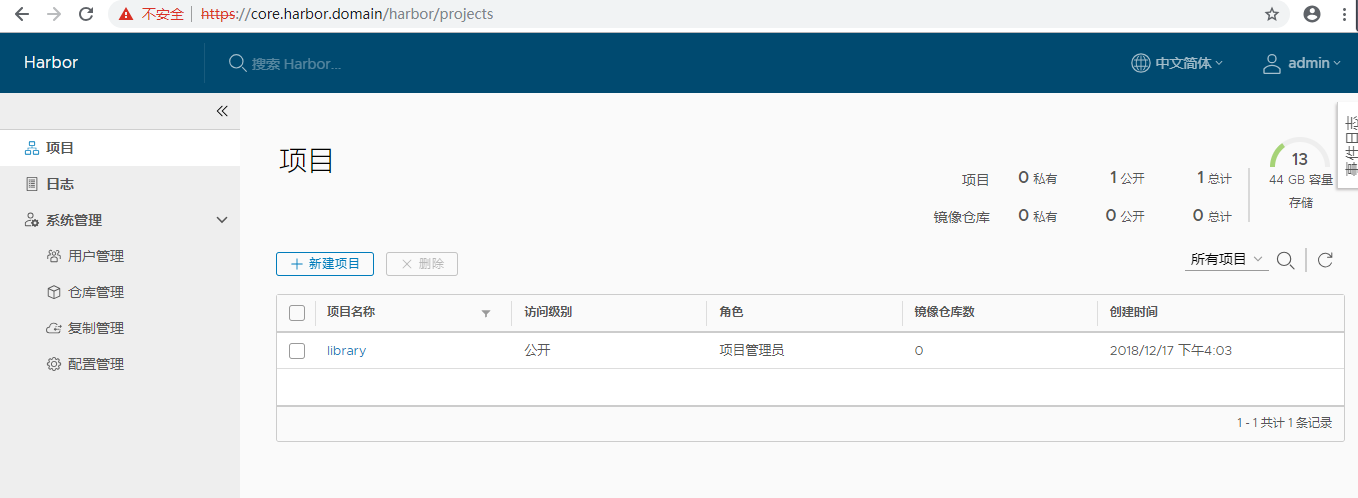

3.2 Using Harbor

3.2.1 Access test

- Make the domain core.harbor.domain resolve to any k8s node

- Open https://core.harbor.domain
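Without DNS, an /etc/hosts entry on the client machine is enough for testing. A sketch that adds both ingress host names without duplicating them on reruns; `192.0.2.10` is a placeholder node IP, the function name is hypothetical, and the ingress controller is assumed to answer on that node's ports 80/443.

```bash
#!/usr/bin/env bash
# Sketch: resolve the Harbor ingress hosts to one cluster node via a hosts file.
set -euo pipefail

# add_harbor_hosts NODE_IP [HOSTS_FILE]
add_harbor_hosts() {
  local node_ip="$1" hosts_file="${2:-/etc/hosts}"
  local name
  for name in core.harbor.domain notary.harbor.domain; do
    # Append only if the name does not already appear in the file.
    if ! grep -qw "$name" "$hosts_file"; then
      printf '%s %s\n' "$node_ip" "$name" >> "$hosts_file"
    fi
  done
}

# Usage: add_harbor_hosts 192.0.2.10
```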

3.2.2 Log in

- Default credentials: admin/Harbor12345

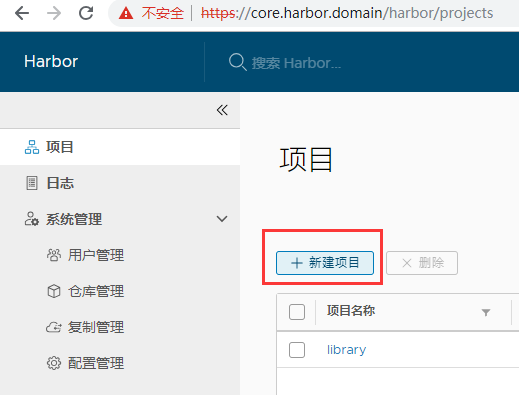

3.2.3 Create a project for the development environment

3.3 Using Harbor from k8s

3.3.1 View the CA certificate generated by Harbor

[root@k8s-master01 harbor-helm]# kubectl get secrets/harbor-v1-harbor-ingress -n harbor -o jsonpath="{.data.ca\.crt}" | base64 --decode

-----BEGIN CERTIFICATE-----

MIIC9TCCAd2gAwIBAgIRANwxR0iCGk5tbLIuMaoDBPgwDQYJKoZIhvcNAQELBQAw

FDESMBAGA1UEAxMJaGFyYm9yLWNhMB4XDTE4MTIxNzA3NTUxNloXDTI4MTIxNDA3

NTUxNlowFDESMBAGA1UEAxMJaGFyYm9yLWNhMIIBIjANBgkqhkiG9w0BAQEFAAOC

AQ8AMIIBCgKCAQEAu11h4ofcz31Dhv1Ll4ljbD9MbSSYzpXE5SdPDYxK2/GYCbbP

wTQ5Lm0wyd45yUqIxoCDl8b+v4FqAjXLsm6HbP6SKVTVStFTJIn2gog2ypmObXqK

pp8dtSlgYlSoldZC4i73Oh8P72B3y/dUysyxrAYrsaLRr9YI0EYO0XQGBX9veENm

d4cJtcNuXU4WCoNZlvBT59Z2Vjbk2rXnb441Zk9K6aD8h2e+ktFAeJb9JFLqvfCz

u0puOIpYcLVLiTrMzarn9TFpJkyKcKp1bE6mbTCTtZNV/kFJiJNuPOG1N7Mb+ZzD

8XiKUYB8/mWTY5If9cGKMh7xnzALEdPdalZJJQIDAQABo0IwQDAOBgNVHQ8BAf8E

BAMCAqQwHQYDVR0lBBYwFAYIKwYBBQUHAwEGCCsGAQUFBwMCMA8GA1UdEwEB/wQF

MAMBAf8wDQYJKoZIhvcNAQELBQADggEBAKC9HZJDAS4Cx6KJcgsALOUzOhktP39B

cw9/PSi8X9kuTsPYxP1Rdogei38W2TRvgPrbPgKwCk48OnLR0myGnUaytjlbHXKz

HrZGtRDzoyjw7XCDwXesqSMpJ+yz8j3DSuyLwApkQKIle2Z+nz3eINkxvkdA7ejY

1kN21CptEKxBXN7ZT40zPkBnJylADaeMFOV+AcgAKkbzfczBNHMOok349a+OiapO

FjZbwgcx4rNxj0+v4Pzvb7qyNpfp7kEXpsQu1rjwLWZwjUvT5bdYhKoNKaEnwTGL

9B6dJBSNJ+5oS/4WoMt7pzuwKxoVpSJmNo2wSkG+R5sB8stfefZxKyg=

-----END CERTIFICATE-----

3.3.2 Install the CA certificate for Docker

[root@k8s-master01 harbor-helm]# mkdir -p /etc/docker/certs.d/core.harbor.domain/

[root@k8s-master01 harbor-helm]# cat <<EOF > /etc/docker/certs.d/core.harbor.domain/ca.crt

-----BEGIN CERTIFICATE-----

MIIC9TCCAd2gAwIBAgIRANwxR0iCGk5tbLIuMaoDBPgwDQYJKoZIhvcNAQELBQAw

FDESMBAGA1UEAxMJaGFyYm9yLWNhMB4XDTE4MTIxNzA3NTUxNloXDTI4MTIxNDA3

NTUxNlowFDESMBAGA1UEAxMJaGFyYm9yLWNhMIIBIjANBgkqhkiG9w0BAQEFAAOC

AQ8AMIIBCgKCAQEAu11h4ofcz31Dhv1Ll4ljbD9MbSSYzpXE5SdPDYxK2/GYCbbP

wTQ5Lm0wyd45yUqIxoCDl8b+v4FqAjXLsm6HbP6SKVTVStFTJIn2gog2ypmObXqK

pp8dtSlgYlSoldZC4i73Oh8P72B3y/dUysyxrAYrsaLRr9YI0EYO0XQGBX9veENm

d4cJtcNuXU4WCoNZlvBT59Z2Vjbk2rXnb441Zk9K6aD8h2e+ktFAeJb9JFLqvfCz

u0puOIpYcLVLiTrMzarn9TFpJkyKcKp1bE6mbTCTtZNV/kFJiJNuPOG1N7Mb+ZzD

8XiKUYB8/mWTY5If9cGKMh7xnzALEdPdalZJJQIDAQABo0IwQDAOBgNVHQ8BAf8E

BAMCAqQwHQYDVR0lBBYwFAYIKwYBBQUHAwEGCCsGAQUFBwMCMA8GA1UdEwEB/wQF

MAMBAf8wDQYJKoZIhvcNAQELBQADggEBAKC9HZJDAS4Cx6KJcgsALOUzOhktP39B

cw9/PSi8X9kuTsPYxP1Rdogei38W2TRvgPrbPgKwCk48OnLR0myGnUaytjlbHXKz

HrZGtRDzoyjw7XCDwXesqSMpJ+yz8j3DSuyLwApkQKIle2Z+nz3eINkxvkdA7ejY

1kN21CptEKxBXN7ZT40zPkBnJylADaeMFOV+AcgAKkbzfczBNHMOok349a+OiapO

FjZbwgcx4rNxj0+v4Pzvb7qyNpfp7kEXpsQu1rjwLWZwjUvT5bdYhKoNKaEnwTGL

9B6dJBSNJ+5oS/4WoMt7pzuwKxoVpSJmNo2wSkG+R5sB8stfefZxKyg=

-----END CERTIFICATE-----

EOF
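Rather than pasting the PEM by hand, the secret can be decoded straight into Docker's certs.d directory; every machine that pulls from or pushes to Harbor needs this file. A sketch; `write_registry_ca` is a hypothetical helper that reads the base64 data from stdin.

```bash
#!/usr/bin/env bash
# Sketch: write a base64-encoded CA (read from stdin) to <certs.d root>/<registry host>/ca.crt.
set -euo pipefail

# write_registry_ca CERTS_D_ROOT REGISTRY_HOST
write_registry_ca() {
  local root="$1" host="$2"
  mkdir -p "${root}/${host}"
  base64 --decode > "${root}/${host}/ca.crt"
}

# Usage (on a machine with kubectl access; copy the file to the other nodes):
# kubectl get secrets/harbor-v1-harbor-ingress -n harbor -o jsonpath="{.data.ca\.crt}" \
#   | write_registry_ca /etc/docker/certs.d core.harbor.domain
```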

3.3.3 Log in to Harbor

- Restart docker

[root@k8s-master01 harbor-helm]# systemctl restart docker

- Username and password: admin/Harbor12345

[root@k8s-master01 harbor-helm]# docker login core.harbor.domain

Username: admin

Password:

Login Succeeded

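3.3.4 Fixing the untrusted certificate error (x509: certificate signed by unknown authority)

- Add the CA to the system trust store. First make the bundle writable:

chmod 644 /etc/pki/ca-trust/extracted/pem/tls-ca-bundle.pem

- Append the ca.crt above to /etc/pki/tls/certs/ca-bundle.crt (on CentOS this is a symlink to the tls-ca-bundle.pem file above), then restore the read-only mode. Append with >> rather than cp, which would overwrite every other trusted CA in the bundle:

cat /etc/docker/certs.d/core.harbor.domain/ca.crt >> /etc/pki/tls/certs/ca-bundle.crt

chmod 444 /etc/pki/ca-trust/extracted/pem/tls-ca-bundle.pem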
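On CentOS/RHEL the usual alternative to editing the generated bundle is the ca-trust anchors directory, which `update-ca-trust` rebuilds the bundle from (this also means a manual append to the bundle is lost the next time `update-ca-trust` runs). A sketch; the anchor file name `harbor-ca.crt` and the function name are arbitrary.

```bash
#!/usr/bin/env bash
# Sketch (CentOS/RHEL): trust the Harbor CA system-wide via the ca-trust anchors directory.
set -euo pipefail

# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

trust_harbor_ca() {
  local ca="${1:-/etc/docker/certs.d/core.harbor.domain/ca.crt}"
  run cp "$ca" /etc/pki/ca-trust/source/anchors/harbor-ca.crt
  # Regenerates /etc/pki/ca-trust/extracted/*, which ca-bundle.crt points to.
  run update-ca-trust extract
  # Restart Docker so the daemon reloads the system trust store.
  run systemctl restart docker
}

# Usage: trust_harbor_ca
```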

3.3.5 Push an image

- For example, the image busybox:1.27

[root@k8s-master01 harbor-helm]# docker images | grep busybox

busybox 1.27 6ad733544a63 13 months ago 1.13 MB

busybox 1.25.0 2b8fd9751c4c 2 years ago 1.09 MB

- Tag the image for the Harbor project

[root@k8s-master01 harbor-helm]# docker tag busybox:1.27 core.harbor.domain/develop/busybox:1.27

- Push

[root@k8s-master01 harbor-helm]# docker push core.harbor.domain/develop/busybox:1.27

The push refers to a repository [core.harbor.domain/develop/busybox]

0271b8eebde3: Pushed

1.27: digest: sha256:179cf024c8a22f1621ea012bfc84b0df7e393cb80bf3638ac80e30d23e69147f size: 527

- Log in to the web UI to check the pushed image
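To run the pushed image in the cluster, each node needs the CA from 3.3.2 and name resolution for core.harbor.domain; if the project is private, the pod also needs a pull secret. A sketch; the secret name `harbor-pull-secret`, the pod name, and both function names are hypothetical, and a dedicated Harbor user is preferable to admin.

```bash
#!/usr/bin/env bash
# Sketch: let pods pull core.harbor.domain/develop/busybox:1.27 from a private project.
set -euo pipefail

# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# create_harbor_pull_secret NAMESPACE USER PASSWORD
create_harbor_pull_secret() {
  local ns="$1" user="$2" password="$3"
  run kubectl create secret docker-registry harbor-pull-secret \
    --namespace "$ns" \
    --docker-server=core.harbor.domain \
    --docker-username="$user" \
    --docker-password="$password"
}

# Print a minimal Pod manifest that references the secret above.
busybox_pod_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: busybox-from-harbor
spec:
  imagePullSecrets:
  - name: harbor-pull-secret
  containers:
  - name: busybox
    image: core.harbor.domain/develop/busybox:1.27
    command: ["sleep", "3600"]
EOF
}

# Usage: busybox_pod_manifest | kubectl apply --namespace default -f -
```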

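4, Summary

- Problems encountered during deployment:

- For some reason https://kubernetes-charts.storage.googleapis.com was unreachable, and I did not want to bother getting around the firewall, so I used the Aliyun Helm repository instead. (With a way around the firewall, none of the following issues would come up.)

- Because of the switch to the Aliyun repository, the redis version in the original requirements.yaml could not be found, so I changed it to a redis version that the Aliyun repository provides.

- I used GlusterFS (GFS) dynamic provisioning to persist Harbor, so the storageClass in values.yaml and in the redis chart's values.yaml had to be changed.

- The redis chart in the Aliyun repository enables password authentication, but the harbor chart is configured without a redis password, so I simply disabled the redis password.

- After deploying Harbor with Helm, the jobservice and harbor-ui pods kept restarting. Their logs showed that the Redis service name could not be resolved: the harbor chart expects the Redis service to be named harbor-v1-redis-master, whereas the redis chart downloaded by helm dependency update names it harbor-v1-redis. For convenience, I edited the redis chart's svc.yaml directly.

- After those changes the pods still kept restarting, with the error: Failed to load and run worker pool: connect to redis server timeout: dial tcp 10.96.137.238:0: i/o timeout. The Redis address was missing its port (note the :0). The cause turned out to be the missing redis port setting in the harbor chart's values.yaml; after adding it under redis.master and redeploying, the installation succeeded.

- Harbor installed by Helm enables HTTPS by default, so I configured the certificate directly for better security.

- For installing Harbor on k8s, the Harbor project recommends Helm; see https://github.com/goharbor/harbor/blob/master/docs/kubernetes_deployment.md and the chart documentation at https://github.com/goharbor/harbor-helm

- Personally, I think Harbor should run outside the k8s cluster, deployed on its own with docker-compose (https://github.com/goharbor/harbor/blob/master/docs/installation_guide.md). That is the most common approach and the one I currently use (this article records my first deployment of Harbor onto k8s and also serves as an introduction to Helm). It is also easier to maintain and extend, and configuring LDAP and similar features is straightforward.

- Helm is a very powerful package manager for k8s.

- Harbor integration with OpenLDAP: click here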
