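1. Create redis-cluster

1.1 Create the PVs

- This setup uses static PVs backed by NFS. Dynamic PV provisioning is a better choice if you expect to scale.

- Note: this document uses k8s-node01 as the NFS server.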

[root@k8s-node01 nfs]# mkdir -p redis-cluster/{redis-cluster00,redis-cluster01,redis-cluster02,redis-cluster03,redis-cluster04,redis-cluster05}

[root@k8s-node01 nfs]# more /etc/exports

/nfs/redis-cluster/ 192.168.2.0/24(rw,sync,no_subtree_check,no_root_squash)
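The brace expansion in the mkdir command above is a shell feature that not every /bin/sh supports. As a minimal sketch, the same six directories can be created with a plain loop. The helper name `make_redis_dirs` and its arguments are hypothetical and not part of the original setup.

```shell
# Hypothetical helper: create BASE/PREFIX00 .. BASE/PREFIX<COUNT-1>.
# Usage: make_redis_dirs BASE PREFIX COUNT
make_redis_dirs() {
  base=$1; prefix=$2; count=$3
  i=0
  while [ "$i" -lt "$count" ]; do
    # Two-digit suffix, matching the directory names used in this document.
    mkdir -p "$base/$(printf '%s%02d' "$prefix" "$i")"
    i=$((i + 1))
  done
}

# Example (on the NFS server, from /nfs):
#   make_redis_dirs redis-cluster redis-cluster 6
# After editing /etc/exports, `exportfs -ra` re-exports all entries.
```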

1.2 Create the namespace

kubectl create namespace public-service

- Note: if you do not use public-service, replace public-service in all YAML files with your own namespace.

sed -i "s#public-service#YOUR_NAMESPACE#g" *.yaml
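Because `sed -i` rewrites files in place, it can help to see which lines will change first. The following is a minimal sketch, assuming the manifests are plain *.yaml files in one directory. `rename_namespace` is a hypothetical helper name.

```shell
# Hypothetical helper: replace a namespace string in every *.yaml file of a
# directory, printing the matching lines before they are rewritten.
# Usage: rename_namespace DIR OLD_NAMESPACE NEW_NAMESPACE
rename_namespace() {
  dir=$1; old=$2; new=$3
  for f in "$dir"/*.yaml; do
    [ -f "$f" ] || continue
    # Show matching lines (with line numbers) before editing.
    grep -n -- "$old" "$f" || true
    # Write to a temp file and move it into place, which avoids the
    # differences between the -i options of GNU and BSD sed.
    sed "s#$old#$new#g" "$f" > "$f.tmp" && mv "$f.tmp" "$f"
  done
}

# Example:
#   rename_namespace . public-service my-namespace
```

The old name is used as a regular expression and `#` as the delimiter, which is safe for namespace names because they only contain lowercase letters, digits, and hyphens.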

1.3 Create the cluster

[root@k8s-master01 redis]# kubectl apply -f k8s-redis-cluster/

configmap/redis-cluster-config created

persistentvolume/pv-redis-cluster-1 created

persistentvolume/pv-redis-cluster-2 created

persistentvolume/pv-redis-cluster-3 created

serviceaccount/redis-cluster created

role.rbac.authorization.k8s.io/redis-cluster created

rolebinding.rbac.authorization.k8s.io/redis-cluster created

service/redis-cluster-ss created

statefulset.apps/redis-cluster-ss created

1.4 View the pods, PVs, and PVCs

# pv

[root@k8s-master01 k8s-redis-cluster]# kubectl get pv -n public-service

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv-redis-cluster-0 1Gi RWO Recycle Terminating public-service/redis-cluster-storage-redis-cluster-ss-5 redis-cluster-storage-class 3h40m

pv-redis-cluster-1 1Gi RWO Recycle Terminating public-service/redis-cluster-storage-redis-cluster-ss-0 redis-cluster-storage-class 3h40m

pv-redis-cluster-2 1Gi RWO Recycle Terminating public-service/redis-cluster-storage-redis-cluster-ss-1 redis-cluster-storage-class 3h40m

pv-redis-cluster-3 1Gi RWO Recycle Terminating public-service/redis-cluster-storage-redis-cluster-ss-2 redis-cluster-storage-class 3h40m

pv-redis-cluster-4 1Gi RWO Recycle Terminating public-service/redis-cluster-storage-redis-cluster-ss-3 redis-cluster-storage-class 3h40m

pv-redis-cluster-5 1Gi RWO Recycle Terminating public-service/redis-cluster-storage-redis-cluster-ss-4 redis-cluster-storage-class 3h40m

# pvc

[root@k8s-master01 k8s-redis-cluster]# kubectl get pvc -n public-service

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

redis-cluster-storage-redis-cluster-ss-0 Bound pv-redis-cluster-1 1Gi RWO redis-cluster-storage-class 3h40m

redis-cluster-storage-redis-cluster-ss-1 Bound pv-redis-cluster-2 1Gi RWO redis-cluster-storage-class 3h40m

redis-cluster-storage-redis-cluster-ss-2 Bound pv-redis-cluster-3 1Gi RWO redis-cluster-storage-class 3h40m

redis-cluster-storage-redis-cluster-ss-3 Bound pv-redis-cluster-4 1Gi RWO redis-cluster-storage-class 3h40m

redis-cluster-storage-redis-cluster-ss-4 Bound pv-redis-cluster-5 1Gi RWO redis-cluster-storage-class 3h40m

redis-cluster-storage-redis-cluster-ss-5 Bound pv-redis-cluster-0 1Gi RWO redis-cluster-storage-class 3h40m
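With six claims it is easy to miss one that did not bind. A small sketch that filters the table above for anything not in the Bound state; `unbound_pvcs` is a hypothetical helper and expects the default output including the header line.

```shell
# Hypothetical helper: read `kubectl get pvc` output on stdin and print the
# names of claims whose STATUS column is not "Bound". The first line is
# assumed to be the header and is skipped.
unbound_pvcs() {
  awk 'NR > 1 && $2 != "Bound" { print $1 }'
}

# Example:
#   kubectl get pvc -n public-service | unbound_pvcs
```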

# pod

[root@k8s-master01 k8s-redis-cluster]# kubectl get pod -n public-service

NAME READY STATUS RESTARTS AGE

redis-cluster-ss-0 1/1 Running 0 148m

redis-cluster-ss-1 1/1 Running 0 148m

redis-cluster-ss-2 1/1 Running 0 148m

redis-cluster-ss-3 1/1 Running 0 148m

redis-cluster-ss-4 1/1 Running 0 148m

redis-cluster-ss-5 1/1 Running 0 148m

# service

[root@k8s-master01 k8s-redis-cluster]# kubectl get service -n public-service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

redis-cluster-ss ClusterIP None <none> 6379/TCP 149m

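- The service mainly lets the pods reach each other. StatefulSet pods communicate through a Headless Service, using addresses of the form: statefulSetName-{0..N-1}.serviceName.namespace.svc.cluster.local

- serviceName is the name of the Headless Service

- 0..N-1 is the ordinal of the pod, from 0 to N-1

- statefulSetName is the name of the StatefulSet

- namespace is the namespace the service lives in; the Headless Service and the StatefulSet must be in the same namespace

- cluster.local is the cluster domain

- For this cluster, for example:

redis-cluster-ss-0.redis-cluster-ss.public-service.svc.cluster.local:6379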
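The naming rule above can be spelled out as a small sketch that prints the DNS name of every pod in a StatefulSet. `statefulset_fqdns` is a hypothetical helper; the cluster domain defaults to cluster.local and should be changed if your cluster uses a different one.

```shell
# Hypothetical helper: print the stable DNS name of each pod in a StatefulSet.
# Usage: statefulset_fqdns STATEFULSET SERVICE NAMESPACE REPLICAS [CLUSTER_DOMAIN]
statefulset_fqdns() {
  sts=$1; svc=$2; ns=$3; replicas=$4; domain=${5:-cluster.local}
  i=0
  while [ "$i" -lt "$replicas" ]; do
    # Pattern: statefulSetName-ordinal.serviceName.namespace.svc.clusterDomain
    echo "$sts-$i.$svc.$ns.svc.$domain"
    i=$((i + 1))
  done
}

# Example for the cluster in this section:
#   statefulset_fqdns redis-cluster-ss redis-cluster-ss public-service 6
```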

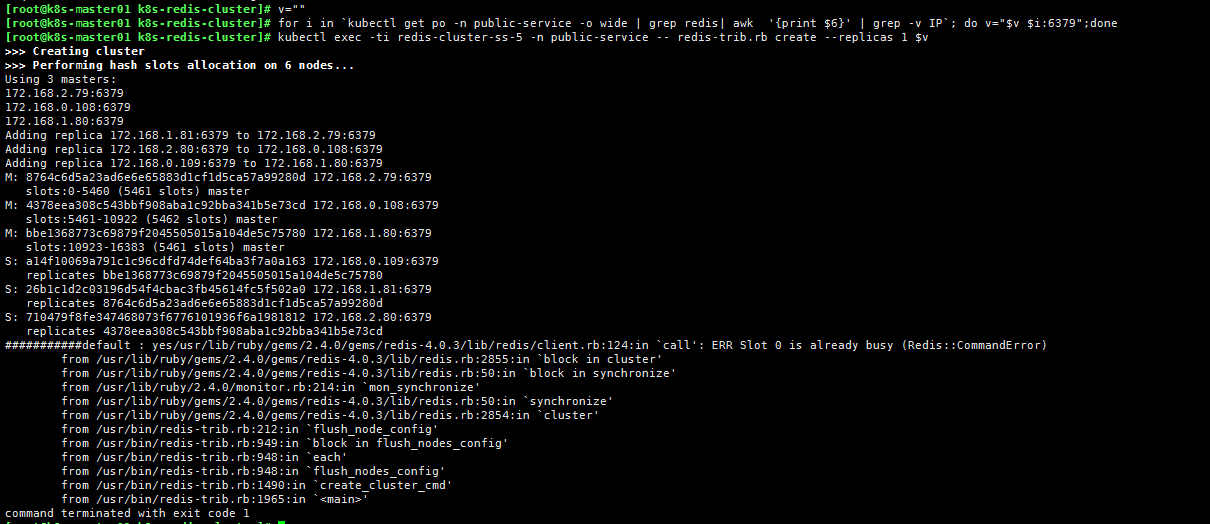

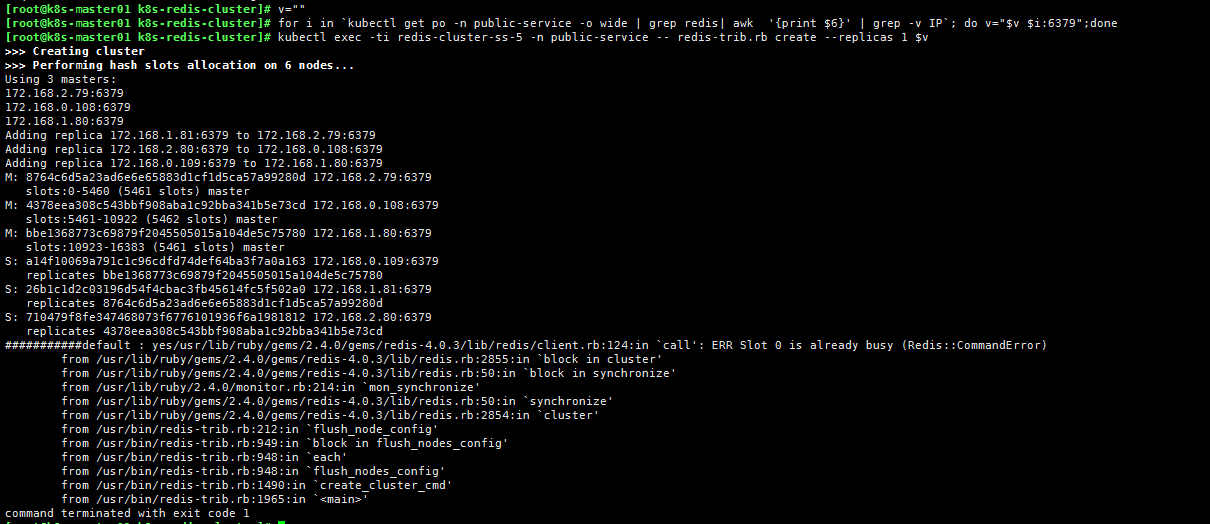

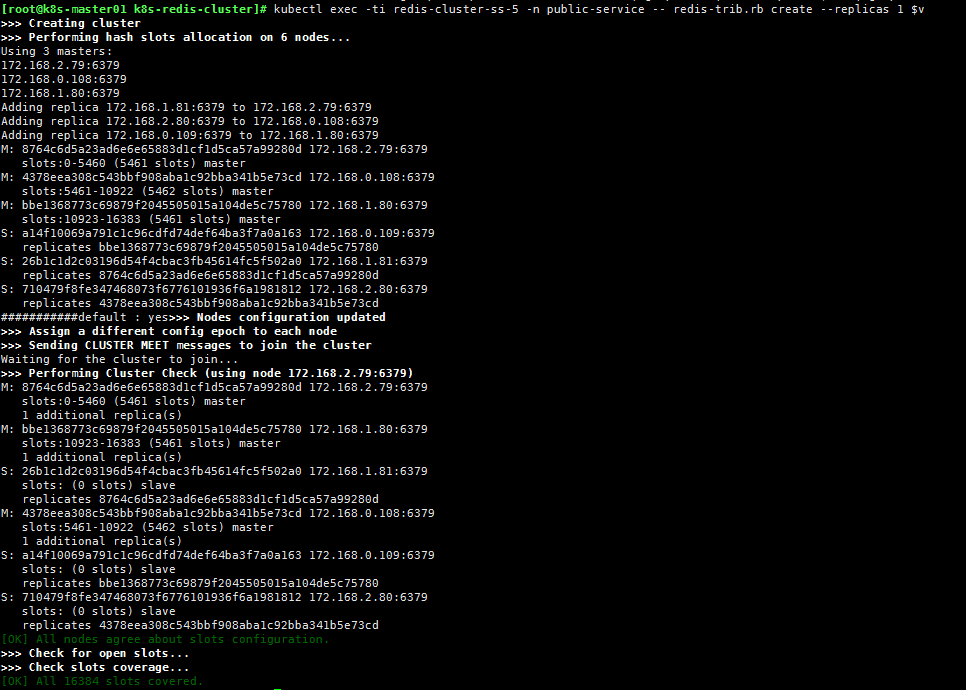

1.5 Create the slots

v=""

for i in `kubectl get po -n public-service -o wide | grep redis| awk '{print $6}' | grep -v IP`; do v="$v $i:6379";done

kubectl exec -ti redis-cluster-ss-5 -n public-service -- redis-trib.rb create --replicas 1 $v
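The loop above picks the IP by column position in the `-o wide` table, which can shift between kubectl versions. As a hedged alternative, the sketch below builds the same "IP:6379" list from name/IP pairs selected by field name. `node_list` is a hypothetical helper; the pod name prefix is taken from the output shown earlier.

```shell
# Hypothetical helper: read "NAME IP" pairs on stdin and print "IP:PORT" for
# every pod whose name starts with PREFIX, space-separated on one line.
# Usage: node_list PREFIX [PORT]
node_list() {
  awk -v prefix="$1" -v port="${2:-6379}" '
    index($1, prefix) == 1 { printf "%s%s:%s", sep, $2, port; sep = " " }
    END { print "" }
  '
}

# Example:
#   v=$(kubectl get pods -n public-service --no-headers \
#         -o custom-columns=NAME:.metadata.name,IP:.status.podIP \
#       | node_list redis-cluster-ss-)
#   kubectl exec -ti redis-cluster-ss-5 -n public-service -- redis-trib.rb create --replicas 1 $v
```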

- If the slots need to be recreated: first flush the data, then run cluster reset, and finally run the slot creation step again.

# Flush the data

for i in `kubectl get po -n public-service -o wide | grep redis| awk '{print $1}' | grep -v IP`; do kubectl exec -it $i -n public-service -- redis-cli -h $i.redis-cluster-ss.public-service.svc.cluster.local flushall;done

# Reset the cluster

for i in `kubectl get po -n public-service -o wide | grep redis| awk '{print $1}' | grep -v IP`; do kubectl exec -it $i -n public-service -- redis-cli -h $i.redis-cluster-ss.public-service.svc.cluster.local cluster reset;done

1.6 View cluster information

# Check the nodes

[root@k8s-master01 k8s-redis-cluster]# kubectl exec -ti redis-cluster-ss-0 -n public-service -- redis-trib.rb check redis-cluster-ss-0:6379

>>> Performing Cluster Check (using node redis-cluster-ss-0:6379)

M: 8764c6d5a23ad6e6e65883d1cf1d5ca57a99280d redis-cluster-ss-0:6379

slots:0-5460 (5461 slots) master

1 additional replica(s)

M: bbe1368773c69879f2045505015a104de5c75780 172.168.1.80:6379

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: 26b1c1d2c03196d54f4cbac3fb45614fc5f502a0 172.168.1.81:6379

slots: (0 slots) slave

replicates 8764c6d5a23ad6e6e65883d1cf1d5ca57a99280d

M: 4378eea308c543bbf908aba1c92bba341b5e73cd 172.168.0.108:6379

slots:5461-10922 (5462 slots) master

1 additional replica(s)

S: a14f10069a791c1c96cdfd74def64ba3f7a0a163 172.168.0.109:6379

slots: (0 slots) slave

replicates bbe1368773c69879f2045505015a104de5c75780

S: 710479f8fe347468073f6776101936f6a1981812 172.168.2.80:6379

slots: (0 slots) slave

replicates 4378eea308c543bbf908aba1c92bba341b5e73cd

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

# List the nodes

[root@k8s-master01 k8s-redis-cluster]# kubectl exec -ti redis-cluster-ss-0 -n public-service -- redis-cli cluster nodes

bbe1368773c69879f2045505015a104de5c75780 172.168.1.80:6379@16379 master - 0 1544420368969 3 connected 10923-16383

26b1c1d2c03196d54f4cbac3fb45614fc5f502a0 172.168.1.81:6379@16379 slave 8764c6d5a23ad6e6e65883d1cf1d5ca57a99280d 0 1544420368000 5 connected

4378eea308c543bbf908aba1c92bba341b5e73cd 172.168.0.108:6379@16379 master - 0 1544420369971 2 connected 5461-10922

a14f10069a791c1c96cdfd74def64ba3f7a0a163 172.168.0.109:6379@16379 slave bbe1368773c69879f2045505015a104de5c75780 0 1544420368000 4 connected

8764c6d5a23ad6e6e65883d1cf1d5ca57a99280d 172.168.2.79:6379@16379 myself,master - 0 1544420368000 1 connected 0-5460

710479f8fe347468073f6776101936f6a1981812 172.168.2.80:6379@16379 slave 4378eea308c543bbf908aba1c92bba341b5e73cd 0 1544420367000 6 connected
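The third column of this output carries the role flags. A small sketch that tallies them, which is handy for confirming the expected three masters and three slaves; `count_roles` is a hypothetical helper.

```shell
# Hypothetical helper: read `redis-cli cluster nodes` output on stdin and
# print how many masters and slaves it lists. The flags column may combine
# values (for example "myself,master"), so a substring match is used.
count_roles() {
  awk '
    $3 ~ /master/ { m++ }
    $3 ~ /slave/  { s++ }
    END { printf "masters=%d slaves=%d\n", m, s }
  '
}

# Example (no -t, so the output is piped cleanly):
#   kubectl exec redis-cluster-ss-0 -n public-service -- redis-cli cluster nodes | count_roles
```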

# View the cluster state

[root@k8s-master01 k8s-redis-cluster]# kubectl exec -ti redis-cluster-ss-0 -n public-service -- redis-cli cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:711

cluster_stats_messages_pong_sent:717

cluster_stats_messages_sent:1428

cluster_stats_messages_ping_received:712

cluster_stats_messages_pong_received:711

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:1428
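The two fields that matter most here are cluster_state and cluster_slots_assigned. A minimal sketch of turning them into a pass/fail check, for example in a script that waits for the cluster to come up; `cluster_healthy` is a hypothetical helper.

```shell
# Hypothetical helper: read `redis-cli cluster info` output on stdin and
# succeed only if cluster_state is ok and all 16384 slots are assigned.
cluster_healthy() {
  # Strip carriage returns, which appear when the output passes through a TTY.
  tr -d '\r' | awk -F: '
    $1 == "cluster_state"          { state = $2 }
    $1 == "cluster_slots_assigned" { slots = $2 }
    END { exit !(state == "ok" && slots == 16384) }
  '
}

# Example:
#   kubectl exec redis-cluster-ss-0 -n public-service -- redis-cli cluster info \
#     | cluster_healthy && echo "cluster is healthy"
```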

# Set a key

[root@k8s-master01 k8s-redis-cluster]# kubectl exec -ti redis-cluster-ss-3 -n public-service -- redis-cli -c set test redis-cluster-ss-3

OK

# Get the key

[root@k8s-master01 k8s-redis-cluster]# kubectl exec -ti redis-cluster-ss-3 -n public-service -- redis-cli -c get test

"redis-cluster-ss-3"

[root@k8s-master01 k8s-redis-cluster]# kubectl exec -ti redis-cluster-ss-4 -n public-service -- redis-cli -c get test

"redis-cluster-ss-3"

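2. Create redis-sentinel

2.1 Create the PVs

- This setup uses static PVs backed by NFS. Dynamic PV provisioning is a better choice if you expect to scale.

- Note: this document uses k8s-node01 as the NFS server.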

[root@k8s-node01 nfs]# mkdir -p redis-sentinel/{redis-sentinel00,redis-sentinel01,redis-sentinel02}

[root@k8s-node01 nfs]# more /etc/exports

/nfs/redis-sentinel/ 192.168.2.0/24(rw,sync,no_subtree_check,no_root_squash)

2.2 Create the namespace

kubectl create namespace public-service

- Note: if you do not use public-service, replace public-service in all YAML files with your own namespace.

sed -i "s#public-service#YOUR_NAMESPACE#g" *.yaml

2.3 Create the cluster

2.3.1 Create the PVs; adjust the Redis storage size as needed

[root@k8s-master01 k8s-redis-sentinel]# kubectl create -f redis-sentinel-pv.yaml

persistentvolume/pv-redis-sentinel-0 created

persistentvolume/pv-redis-sentinel-1 created

persistentvolume/pv-redis-sentinel-2 created

[root@k8s-master01 k8s-redis-sentinel]# kubectl get pv -n public-service

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv-redis-sentinel-0 4Gi RWX Recycle Bound public-service/redis-sentinel-slave-storage-redis-sentinel-slave-ss-0 redis-sentinel-storage-class 6m15s

pv-redis-sentinel-1 4Gi RWX Recycle Bound public-service/redis-sentinel-slave-storage-redis-sentinel-slave-ss-1 redis-sentinel-storage-class 6m15s

pv-redis-sentinel-2 4Gi RWX Recycle Bound public-service/redis-sentinel-master-storage-redis-sentinel-master-ss-0 redis-sentinel-storage-class

[root@k8s-master01 k8s-redis-sentinel]# kubectl get pvc -n public-service

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

redis-sentinel-master-storage-redis-sentinel-master-ss-0 Bound pv-redis-sentinel-2 4Gi RWX redis-sentinel-storage-class 45s

redis-sentinel-slave-storage-redis-sentinel-slave-ss-0 Bound pv-redis-sentinel-0 4Gi RWX redis-sentinel-storage-class 45s

2.3.2 Adjust the Redis configuration as needed; the default persistence mode is RDB

[root@k8s-master01 k8s-redis-sentinel]# kubectl create -f redis-sentinel-configmap.yaml

configmap/redis-sentinel-config created

[root@k8s-master01 k8s-redis-sentinel]# kubectl get configmap -n public-service

NAME DATA AGE

redis-sentinel-config 3 17s

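- Note: at this point, the master address given to slaveof in redis-slave.conf in the ConfigMap is the StatefulSet's Headless Service address.

2.3.3 Create the services

- The service mainly lets the pods reach each other. StatefulSet pods communicate through a Headless Service, using addresses of the form: statefulSetName-{0..N-1}.serviceName.namespace.svc.cluster.local

- serviceName is the name of the Headless Service

- 0..N-1 is the ordinal of the pod, from 0 to N-1

- statefulSetName is the name of the StatefulSet

- namespace is the namespace the service lives in; the Headless Service and the StatefulSet must be in the same namespace

- cluster.local is the cluster domain

- The Headless Service addresses for this cluster are: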

- Master:

redis-sentinel-master-ss-0.redis-sentinel-master-ss.public-service.svc.cluster.local:6379

- Slave:

redis-sentinel-slave-ss-0.redis-sentinel-slave-ss.public-service.svc.cluster.local:6379

redis-sentinel-slave-ss-1.redis-sentinel-slave-ss.public-service.svc.cluster.local:6379

[root@k8s-master01 k8s-redis-sentinel]# kubectl create -f redis-sentinel-service-master.yaml -f redis-sentinel-service-slave.yaml

service/redis-sentinel-master-ss created

service/redis-sentinel-slave-ss created

[root@k8s-master01 k8s-redis-sentinel]# kubectl get service -n public-service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

redis-sentinel-master-ss ClusterIP None <none> 6379/TCP 19s

redis-sentinel-slave-ss ClusterIP None <none> 6379/TCP 19s

2.3.4 Create the StatefulSets

[root@k8s-master01 k8s-redis-sentinel]# kubectl create -f redis-sentinel-rbac.yaml -f redis-sentinel-ss-master.yaml -f redis-sentinel-ss-slave.yaml

serviceaccount/redis-sentinel created

role.rbac.authorization.k8s.io/redis-sentinel created

rolebinding.rbac.authorization.k8s.io/redis-sentinel created

statefulset.apps/redis-sentinel-master-ss created

statefulset.apps/redis-sentinel-slave-ss created

[root@k8s-master01 k8s-redis-sentinel]# kubectl get statefulset -n public-service

NAME DESIRED CURRENT AGE

redis-sentinel-master-ss 1 1 15s

redis-sentinel-slave-ss 2 1 15s

[root@k8s-master01 k8s-redis-sentinel]# kubectl get pods -n public-service

NAME READY STATUS RESTARTS AGE

redis-sentinel-master-ss-0 1/1 Running 0 2m16s

redis-sentinel-slave-ss-0 1/1 Running 0 2m16s

redis-sentinel-slave-ss-1 1/1 Running 0 88s

- Note: at this point a Redis master-slave setup is effectively running on k8s.

2.4 Connectivity tests

2.4.1 Master-to-slave connection test

[root@k8s-master01 k8s-redis-sentinel]# kubectl exec -ti redis-sentinel-master-ss-0 -n public-service -- redis-cli -h redis-sentinel-slave-ss-0.redis-sentinel-slave-ss.public-service.svc.cluster.local ping

PONG

[root@k8s-master01 k8s-redis-sentinel]# kubectl exec -ti redis-sentinel-master-ss-0 -n public-service -- redis-cli -h redis-sentinel-slave-ss-1.redis-sentinel-slave-ss.public-service.svc.cluster.local ping

PONG

2.4.2 Slave-to-master connection test

[root@k8s-master01 k8s-redis-sentinel]# kubectl exec -ti redis-sentinel-slave-ss-0 -n public-service -- redis-cli -h redis-sentinel-master-ss-0.redis-sentinel-master-ss.public-service.svc.cluster.local ping

PONG

[root@k8s-master01 k8s-redis-sentinel]# kubectl exec -ti redis-sentinel-slave-ss-1 -n public-service -- redis-cli -h redis-sentinel-master-ss-0.redis-sentinel-master-ss.public-service.svc.cluster.local ping

PONG

2.4.3 View the replication status

[root@k8s-master01 k8s-redis-sentinel]# kubectl exec -ti redis-sentinel-slave-ss-1 -n public-service -- redis-cli -h redis-sentinel-master-ss-0.redis-sentinel-master-ss.public-service.svc.cluster.local info replication

# Replication

role:master

connected_slaves:2

slave0:ip=172.168.2.82,port=6379,state=online,offset=518,lag=1

slave1:ip=172.168.1.82,port=6379,state=online,offset=518,lag=1

master_replid:424948340bbbcf3f10c97ec61ebdecc66c8db096

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:518

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:518
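In the output above, each slaveN line describes one replica as seen by the master. A small sketch that counts the replicas reported as online, so the result can be compared against the expected two; `online_replicas` is a hypothetical helper.

```shell
# Hypothetical helper: read `redis-cli info replication` output from a master
# on stdin and print the number of replicas reported with state=online.
online_replicas() {
  tr -d '\r' | awk '/^slave[0-9]+:/ && /state=online/ { n++ } END { print n + 0 }'
}

# Example:
#   kubectl exec redis-sentinel-master-ss-0 -n public-service -- \
#     redis-cli info replication | online_replicas
```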

2.4.4 Replication test

# Write data to the master

[root@k8s-master01 k8s-redis-sentinel]# kubectl exec -ti redis-sentinel-slave-ss-1 -n public-service -- redis-cli -h redis-sentinel-master-ss-0.redis-sentinel-master-ss.public-service.svc.cluster.local set test test_data

OK

# Read the data from the master

[root@k8s-master01 k8s-redis-sentinel]# kubectl exec -ti redis-sentinel-slave-ss-1 -n public-service -- redis-cli -h redis-sentinel-master-ss-0.redis-sentinel-master-ss.public-service.svc.cluster.local get test

"test_data"

# Read the data from the slave

[root@k8s-master01 k8s-redis-sentinel]# kubectl exec -ti redis-sentinel-slave-ss-1 -n public-service -- redis-cli get test

"test_data"

[root@k8s-master01 k8s-redis-sentinel]# kubectl exec -ti redis-sentinel-slave-ss-1 -n public-service -- redis-cli set k v

(error) READONLY You can't write against a read only replica.

2.4.5 View the stored data on NFS

[root@k8s-node01 redis-sentinel]# tree

.

├── redis-sentinel00

│ ├── dump.rdb

│ └── redis-slave.conf

├── redis-sentinel01

│ ├── dump.rdb

│ └── redis-slave.conf

└── redis-sentinel02

├── dump.rdb

└── redis-master.conf

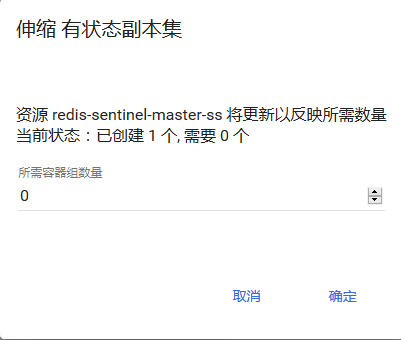

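- Note: in my opinion, running Redis Sentinel on k8s is pointless. In testing, when the master went down, sentinel elected a new master, but the original master could not rejoin the cluster after it recovered. On physical machines, sentinel probes nodes and rewrites configuration files by IP, and replication is configured by IP as well. In containers, although the master-slave relationship is established through the StatefulSet's Headless Service, the master, slaves, and sentinels still record the resolved IPs once replication is set up, and a pod's IP changes whenever the pod restarts. Sentinel therefore cannot recognize the master that went down and came back, so that node never rejoins. This can be worked around by pinning pod IPs, by using NodePort, or by asking sentinel for the current master IP and updating the configuration file, but I do not think it is worth the effort. Sentinel exists to make a Redis master-slave setup highly available: it monitors the master and performs failover. In k8s, however, both Deployments and StatefulSets keep pods running at the desired count, and the built-in liveness check restarts a pod, or the service inside it, when the port or service becomes unavailable. So once master-slave replication is set up in k8s it is effectively already highly available, and a sentinel failover is not necessarily faster than k8s recreating the pod. For these reasons I see no point in running sentinel on k8s, and the sentinel steps below can be skipped.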
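The liveness check mentioned above can be as simple as a ping. The following is a minimal sketch of such a check, not taken from the manifests used here (the source does not show whether they define a probe). `redis_alive` is a hypothetical helper that runs whatever command it is given and succeeds only on a PONG reply.

```shell
# Hypothetical probe helper: run the given command and succeed only if its
# output is exactly PONG. The command is passed as arguments so the same
# check can target any host or port.
redis_alive() {
  [ "$("$@" 2>/dev/null | tr -d '\r')" = "PONG" ]
}

# Example, as it might be called from an exec-style liveness probe:
#   redis_alive redis-cli -h 127.0.0.1 -p 6379 ping
```

A non-zero exit status from such a command is what tells k8s to restart the container.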

2.5 Create sentinel

[root@k8s-master01 k8s-redis-sentinel]# kubectl create -f redis-sentinel-ss-sentinel.yaml -f redis-sentinel-service-sentinel.yaml

statefulset.apps/redis-sentinel-sentinel-ss created

service/redis-sentinel-sentinel-ss created

[root@k8s-master01 k8s-redis-sentinel]# kubectl get service -n public-service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

redis-sentinel-master-ss ClusterIP None <none> 6379/TCP 13m

redis-sentinel-sentinel-ss ClusterIP None <none> 26379/TCP 31s

redis-sentinel-slave-ss ClusterIP None <none> 6379/TCP 13m

[root@k8s-master01 k8s-redis-sentinel]# kubectl get statefulset -n public-service

NAME DESIRED CURRENT AGE

redis-sentinel-master-ss 1 1 12m

redis-sentinel-sentinel-ss 3 3 48s

redis-sentinel-slave-ss 2 2 12m

[root@k8s-master01 k8s-redis-sentinel]# kubectl get pods -n public-service | grep sentinel

redis-sentinel-master-ss-0 1/1 Running 0 13m

redis-sentinel-sentinel-ss-0 1/1 Running 0 63s

redis-sentinel-sentinel-ss-1 1/1 Running 0 18s

redis-sentinel-sentinel-ss-2 1/1 Running 0 16s

redis-sentinel-slave-ss-0 1/1 Running 0 13m

redis-sentinel-slave-ss-1 1/1 Running 0 12m

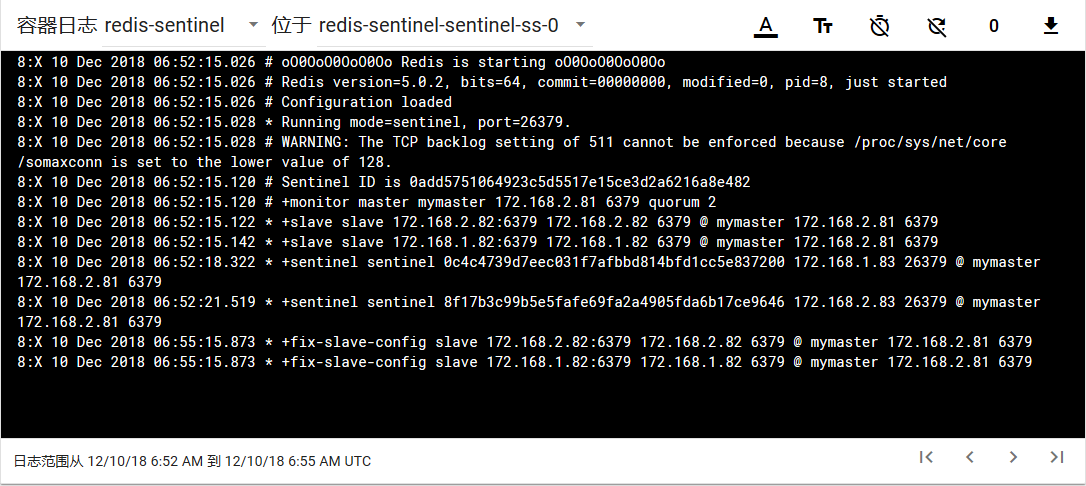

2.5.1 View the sentinel status

[root@k8s-master01 k8s-redis-sentinel]# kubectl exec -ti redis-sentinel-sentinel-ss-0 -n public-service -- redis-cli -h 127.0.0.1 -p 26379 info Sentinel

# Sentinel

sentinel_masters:1

sentinel_tilt:0

sentinel_running_scripts:0

sentinel_scripts_queue_length:0

sentinel_simulate_failure_flags:0

master0:name=mymaster,status=ok,address=172.168.2.81:6379,slaves=2,sentinels=3
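The address= field above is the resolved IP of the master as sentinel currently sees it. As a hedged sketch, the same value can be requested directly with the SENTINEL get-master-addr-by-name command, assuming the master name mymaster shown in the output. `master_addr` is a hypothetical helper that joins the two-line reply into IP:PORT.

```shell
# Hypothetical helper: turn the two-line reply of
# `SENTINEL get-master-addr-by-name` (IP, then port) into "IP:PORT".
master_addr() {
  tr -d '\r' | paste -s -d: -
}

# Example (no -t, so redis-cli prints the raw two-line reply):
#   kubectl exec redis-sentinel-sentinel-ss-0 -n public-service -- \
#     redis-cli -p 26379 sentinel get-master-addr-by-name mymaster | master_addr
```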

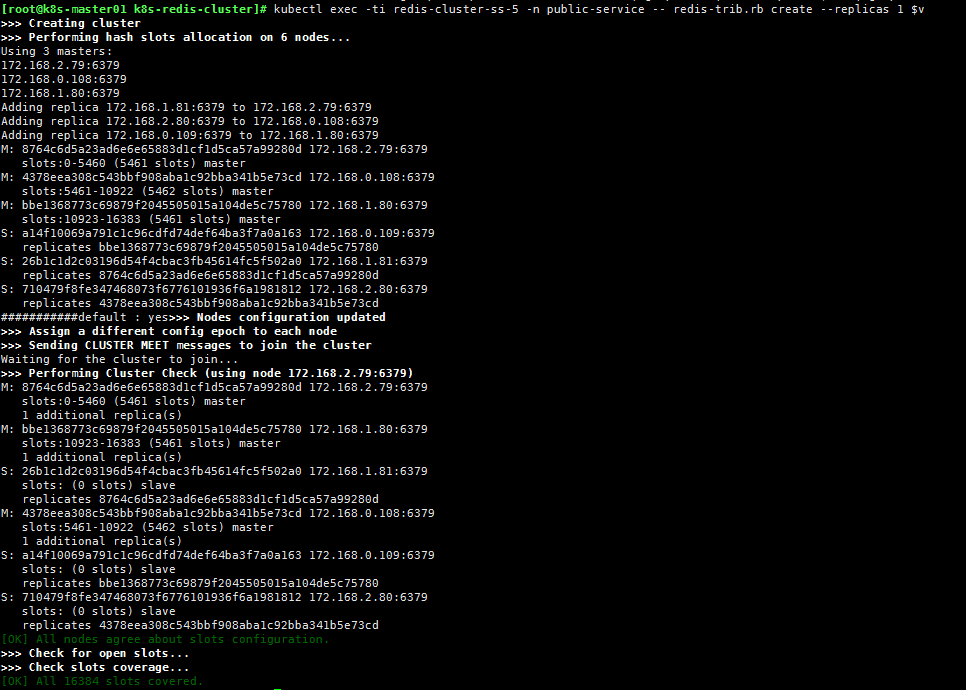

2.5.2 View the logs

2.6 Failover test

2.6.1 View the data

[root@k8s-master01 k8s-redis-sentinel]# kubectl exec -ti redis-sentinel-master-ss-0 -n public-service -- redis-cli -h 127.0.0.1 -p 6379 get test

"test_data"

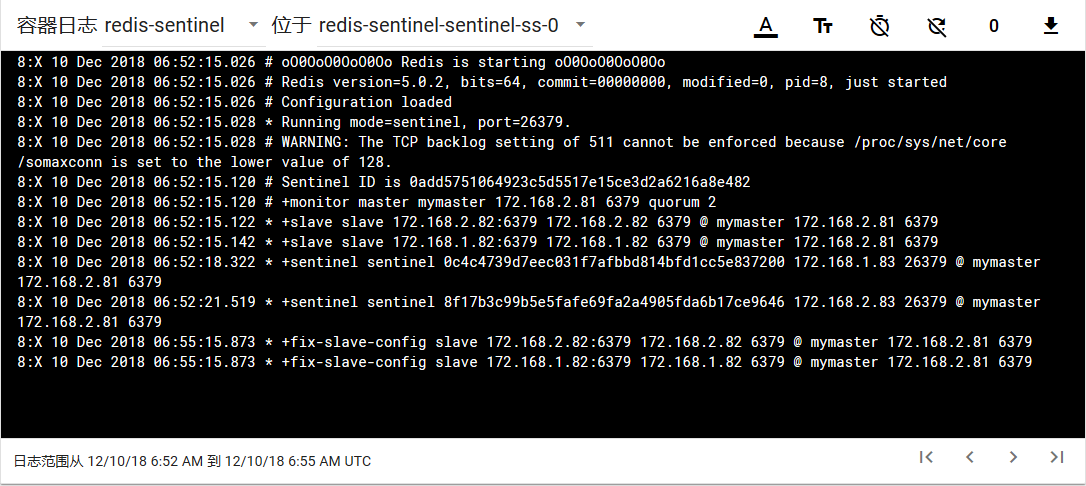

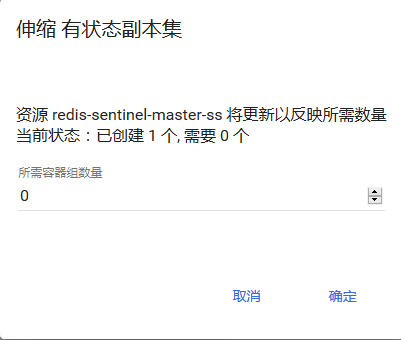

2.6.2 Shut down the master node

2.6.3 View the sentinel status

# pod

[root@k8s-master01 k8s-redis-sentinel]# kubectl get pods -n public-service -o wide| grep sentinel

redis-sentinel-sentinel-ss-0 1/1 Running 0 13m 172.168.0.110 k8s-master01 <none>

redis-sentinel-sentinel-ss-1 1/1 Running 0 12m 172.168.1.83 k8s-node02 <none>

redis-sentinel-sentinel-ss-2 1/1 Running 0 12m 172.168.2.83 k8s-node01 <none>

redis-sentinel-slave-ss-0 1/1 Running 0 25m 172.168.2.82 k8s-node01 <none>

redis-sentinel-slave-ss-1 1/1 Running 0 24m 172.168.1.82 k8s-node02 <none>

# Sentinel

[root@k8s-master01 k8s-redis-sentinel]# kubectl exec -ti redis-sentinel-sentinel-ss-2 -n public-service -- redis-cli -h 127.0.0.1 -p 26379 info Sentinel

# Sentinel

sentinel_masters:1

sentinel_tilt:0

sentinel_running_scripts:0

sentinel_scripts_queue_length:0

sentinel_simulate_failure_flags:0

master0:name=mymaster,status=ok,address=172.168.2.81:6379,slaves=2,sentinels=3

# info

[root@k8s-master01 k8s-redis-sentinel]# kubectl exec -ti redis-sentinel-slave-ss-0 -n public-service -- redis-cli -h 127.0.0.1 -p 6379 info replication

# Replication

role:slave

master_host:172.168.2.81

master_port:6379

master_link_status:down

master_last_io_seconds_ago:-1

master_sync_in_progress:0

slave_repl_offset:151040

master_link_down_since_seconds:29

slave_priority:100

slave_read_only:1

connected_slaves:0

master_replid:424948340bbbcf3f10c97ec61ebdecc66c8db096

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:151040

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:151040
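The fields to watch in this output are master_host, master_link_status, and master_link_down_since_seconds. A small sketch that condenses them into one line per replica; `replica_link` is a hypothetical helper and assumes an IPv4 master address, since it splits fields on the colon.

```shell
# Hypothetical helper: read `redis-cli info replication` output from a replica
# on stdin and print a one-line summary of its link to the master.
replica_link() {
  tr -d '\r' | awk -F: '
    $1 == "master_host"                    { host = $2 }
    $1 == "master_link_status"             { status = $2 }
    $1 == "master_link_down_since_seconds" { down = $2 }
    END {
      if (status == "up") print "link to " host " is up"
      else printf "link to %s is %s for %ss\n", host, status, down
    }
  '
}

# Example:
#   kubectl exec redis-sentinel-slave-ss-0 -n public-service -- \
#     redis-cli info replication | replica_link
```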

[root@k8s-master01 k8s-redis-sentinel]# kubectl exec -ti redis-sentinel-slave-ss-0 -n public-service -- redis-cli -h 127.0.0.1 -p 6379 get test

"test_data"