Kubernetes (k8s) v1.12.3: installing the Prometheus and Traefik components and testing the cluster

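1. Traefik

- Traefik: an HTTP-layer router. Website: http://traefik.cn/, documentation: https://docs.traefik.io/user-guide/kubernetes/

- Its function is similar to that of nginx ingress.

- Compared with nginx ingress, Traefik can talk to the Kubernetes API in real time, detect changes to backend Services and Pods, and automatically update its configuration and hot-reload it. Traefik is faster and more convenient to work with and supports more features, which makes reverse proxying and load balancing more direct and efficient.

- Deploy Traefik on the k8s cluster, building on the previous article.

1.1 Create the certificate for k8s-master-lb: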

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=k8s-master-lb"

[root@k8s-master01 certificate]# pwd

/opt/k8-ha-install/certificate

[root@k8s-master01 certificate]# openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=k8s-master-lb"

Generating a 2048 bit RSA private key

.....................................................................................+++

..................................................+++

writing new private key to 'tls.key'

-----
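Before storing the certificate, it can be worth confirming that it carries the expected subject and validity period. The sketch below is only an illustration: it generates a throwaway certificate in a scratch directory with the same `openssl req` options, so the real `tls.key` and `tls.crt` are not touched, and prints those fields. The helper name `cert_info` is hypothetical and not part of the original setup.

```shell
# Work in a scratch directory so the real tls.key/tls.crt stay untouched.
workdir=$(mktemp -d)

# Same options as the command above, written to the scratch directory.
openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
  -keyout "$workdir/tls.key" -out "$workdir/tls.crt" \
  -subj "/CN=k8s-master-lb" 2>/dev/null

# Hypothetical helper: print the subject and validity window of a certificate.
cert_info() {
  openssl x509 -in "$1" -noout -subject -dates
}

cert_info "$workdir/tls.crt"
```

Running `cert_info tls.crt` in /opt/k8-ha-install/certificate performs the same check on the real certificate. The exact layout of the subject line differs between OpenSSL versions.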

- Store the certificate in a k8s secret

kubectl -n kube-system create secret generic traefik-cert --from-file=tls.key --from-file=tls.crt

[root@k8s-master01 certificate]# kubectl -n kube-system create secret generic traefik-cert --from-file=tls.key --from-file=tls.crt

secret/traefik-cert created

- Install Traefik

[root@k8s-master01 k8-ha-install]# kubectl apply -f traefik/

serviceaccount/traefik-ingress-controller created

clusterrole.rbac.authorization.k8s.io/traefik-ingress-controller created

clusterrolebinding.rbac.authorization.k8s.io/traefik-ingress-controller created

configmap/traefik-conf created

daemonset.extensions/traefik-ingress-controller created

service/traefik-web-ui created

ingress.extensions/traefik-jenkins created

- Check the pods. Because the resource is a DaemonSet, one Traefik pod is created on every node.

[root@k8s-master01 k8-ha-install]# kubectl get pods --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

kube-system calico-node-9cw9r 2/2 Running 0 19h 192.168.2.101 k8s-node01 <none>

kube-system calico-node-l6m78 2/2 Running 0 19h 192.168.2.102 k8s-node02 <none>

kube-system calico-node-lhqg9 2/2 Running 0 19h 192.168.2.100 k8s-master01 <none>

kube-system coredns-6c66ffc55b-8rlpp 1/1 Running 0 22h 172.168.1.3 k8s-node02 <none>

kube-system coredns-6c66ffc55b-qdvfg 1/1 Running 0 22h 172.168.1.2 k8s-node02 <none>

kube-system etcd-k8s-master01 1/1 Running 0 22h 192.168.2.100 k8s-master01 <none>

kube-system etcd-k8s-node01 1/1 Running 0 21h 192.168.2.101 k8s-node01 <none>

kube-system etcd-k8s-node02 1/1 Running 0 21h 192.168.2.102 k8s-node02 <none>

kube-system heapster-56c97d8b49-qp97p 1/1 Running 0 19h 172.168.1.4 k8s-node02 <none>

kube-system kube-apiserver-k8s-master01 1/1 Running 0 19h 192.168.2.100 k8s-master01 <none>

kube-system kube-apiserver-k8s-node01 1/1 Running 0 19h 192.168.2.101 k8s-node01 <none>

kube-system kube-apiserver-k8s-node02 1/1 Running 0 19h 192.168.2.102 k8s-node02 <none>

kube-system kube-controller-manager-k8s-master01 1/1 Running 0 19h 192.168.2.100 k8s-master01 <none>

kube-system kube-controller-manager-k8s-node01 1/1 Running 0 21h 192.168.2.101 k8s-node01 <none>

kube-system kube-controller-manager-k8s-node02 1/1 Running 1 21h 192.168.2.102 k8s-node02 <none>

kube-system kube-proxy-62hjm 1/1 Running 0 21h 192.168.2.102 k8s-node02 <none>

kube-system kube-proxy-79fbq 1/1 Running 0 21h 192.168.2.101 k8s-node01 <none>

kube-system kube-proxy-jsnhl 1/1 Running 0 22h 192.168.2.100 k8s-master01 <none>

kube-system kube-scheduler-k8s-master01 1/1 Running 2 22h 192.168.2.100 k8s-master01 <none>

kube-system kube-scheduler-k8s-node01 1/1 Running 0 21h 192.168.2.101 k8s-node01 <none>

kube-system kube-scheduler-k8s-node02 1/1 Running 0 21h 192.168.2.102 k8s-node02 <none>

kube-system kubernetes-dashboard-64d4f8997d-l6q9j 1/1 Running 0 18h 172.168.0.4 k8s-master01 <none>

kube-system metrics-server-b6bc985c4-8twwg 1/1 Running 0 19h 172.168.0.3 k8s-master01 <none>

kube-system monitoring-grafana-6f8dc9f99f-bwrrt 1/1 Running 0 19h 172.168.2.2 k8s-node01 <none>

kube-system monitoring-influxdb-556dcc774d-45p9t 1/1 Running 0 19h 172.168.0.2 k8s-master01 <none>

kube-system traefik-ingress-controller-njmzh 1/1 Running 0 112s 172.168.1.6 k8s-node02 <none>

kube-system traefik-ingress-controller-q74l8 1/1 Running 0 112s 172.168.0.5 k8s-master01 <none>

kube-system traefik-ingress-controller-sqv9z 1/1 Running 0 112s 172.168.2.3 k8s-node01 <none>

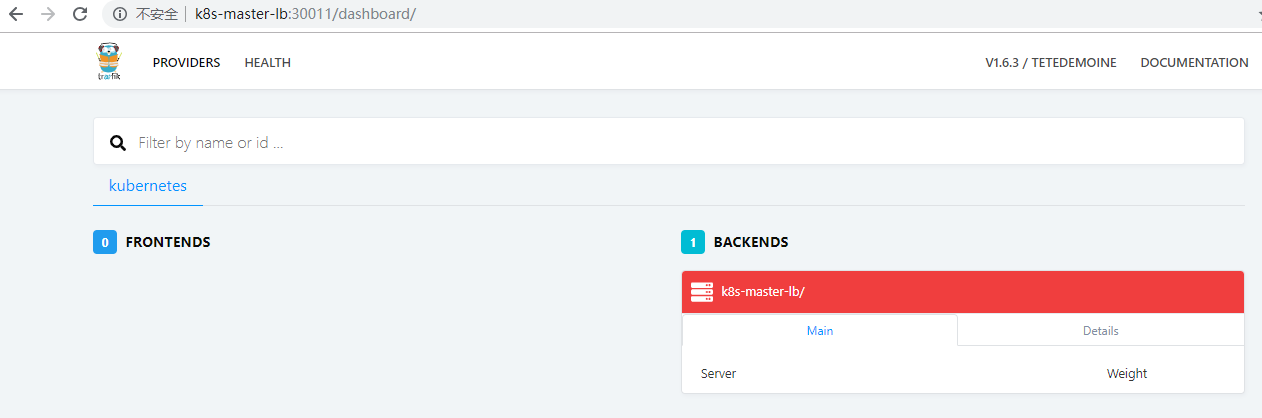

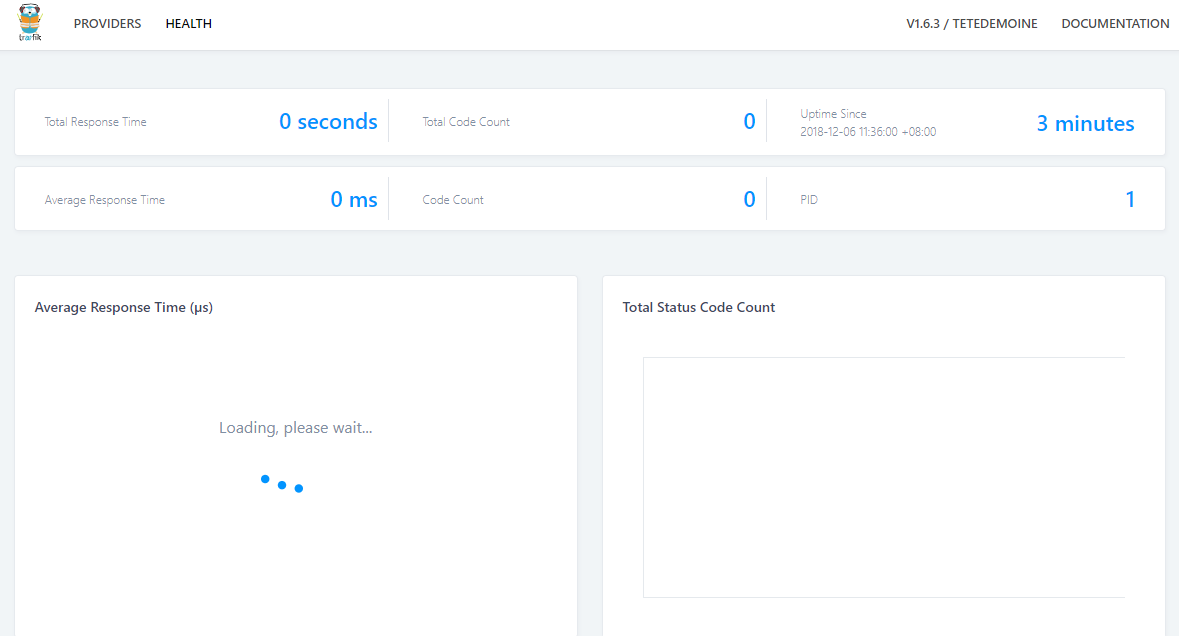

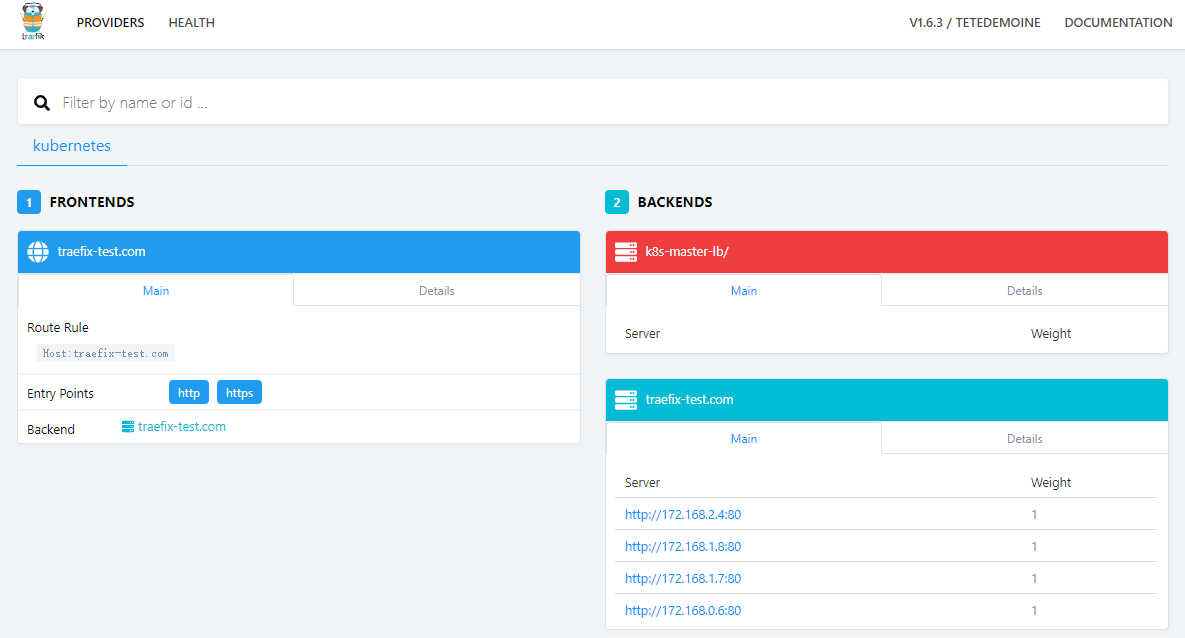

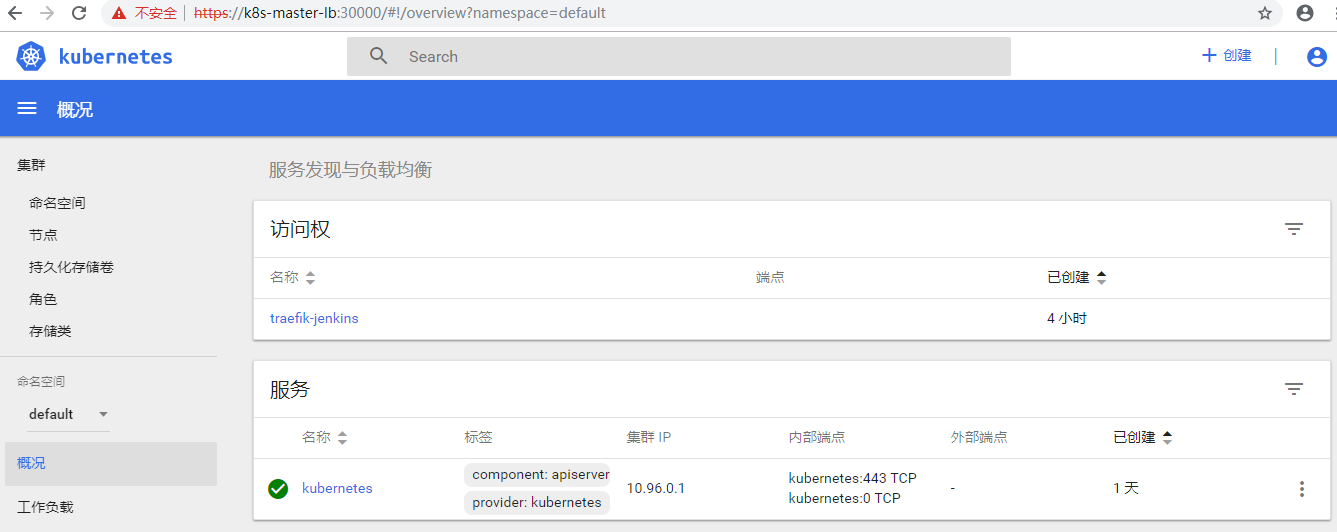

- Open the Traefik Web UI: http://k8s-master-lb:30011/

- Create a test web application

- Template

[root@k8s-master01 ~]# cat traefix-test.yaml

# Service that selects the nginx pods created by the Deployment below
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  selector:
    run: ngx-pod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
# Four nginx replicas labelled run=ngx-pod
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: ngx-pod
spec:
  replicas: 4
  template:
    metadata:
      labels:
        run: ngx-pod
    spec:
      containers:
      - name: nginx
        image: nginx:1.10
        ports:
        - containerPort: 80
---
# Ingress handled by Traefik, routing traefix-test.com to nginx-svc
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ngx-ing
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: traefix-test.com
    http:
      paths:
      - backend:
          serviceName: nginx-svc
          servicePort: 80

- Create traefix-test

[root@k8s-master01 ~]# kubectl create -f traefix-test.yaml

service/nginx-svc created

deployment.apps/ngx-pod created

ingress.extensions/ngx-ing created

- Check the pods

[root@k8s-master01 test-web]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

ngx-pod-555cb5c594-2st5m 1/1 Running 0 7m37s 172.168.2.4 k8s-node01 <none>

ngx-pod-555cb5c594-dvjzw 1/1 Running 0 7m37s 172.168.1.8 k8s-node02 <none>

ngx-pod-555cb5c594-gdbn7 1/1 Running 0 7m37s 172.168.1.7 k8s-node02 <none>

ngx-pod-555cb5c594-pvgkh 1/1 Running 0 7m37s 172.168.0.6 k8s-master01 <none>

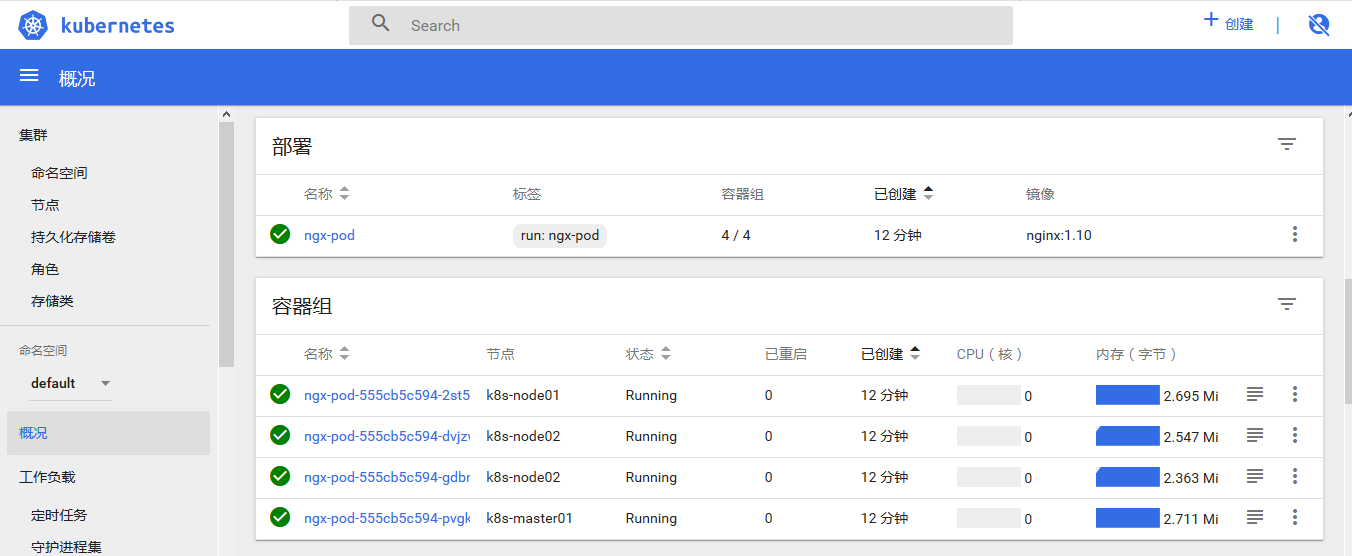

- View it in the Traefik UI

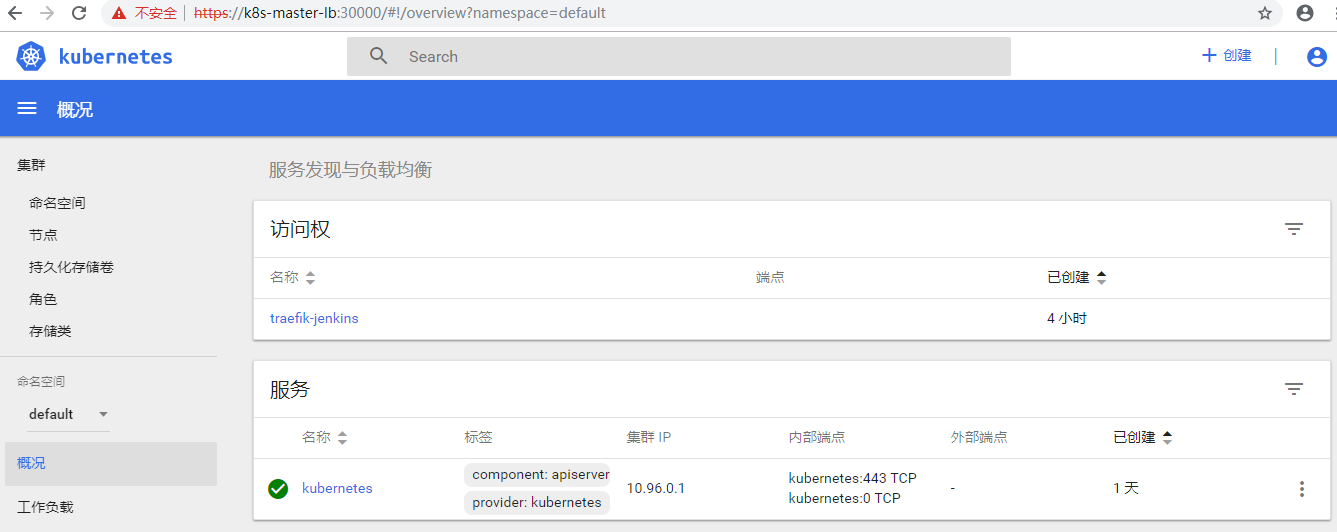

- k8s dashboard

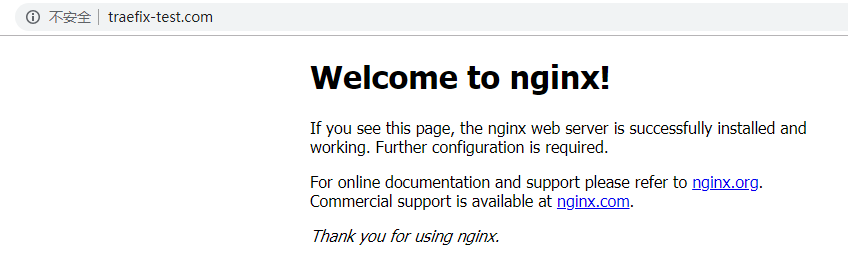

- Access test: resolve the domain traefix-test.com to any node, and http://traefix-test.com/ becomes reachable
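On a test machine without real DNS, one way to "resolve the domain to a node" is an /etc/hosts entry. The sketch below assumes the node IP 192.168.2.101 (k8s-node01 in the listings above) and that Traefik accepts HTTP on port 80 of each node. The helper name `hosts_entry` is hypothetical.

```shell
# Hypothetical helper: print an /etc/hosts line mapping a host name to an IP.
hosts_entry() {
  printf '%s %s\n' "$1" "$2"
}

# Node IP taken from the pod listing (k8s-node01); any node should do.
hosts_entry 192.168.2.101 traefix-test.com

# To apply it (as root):
#   hosts_entry 192.168.2.101 traefix-test.com >> /etc/hosts
# Alternatively, skip name resolution and send the Host header directly:
#   curl -H 'Host: traefix-test.com' http://192.168.2.101/
```

The `curl -H` variant is convenient for scripted checks because it leaves the local hosts file untouched.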

1.2 HTTPS certificate configuration

- Reusing the nginx created above, create a second ingress for HTTPS

[root@k8s-master01 test-web]# pwd

/opt/k8-ha-install/test-web

[root@k8s-master01 test-web]# cat traefix-https.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nginx-https-test
  namespace: default
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: traefix-test.com
    http:
      paths:
      - backend:
          serviceName: nginx-svc
          servicePort: 80
  tls:
  - secretName: nginx-test-tls

- Create the certificate (in production this would be a certificate purchased by the company)

[root@k8s-master01 nginx-cert]# pwd

/opt/k8-ha-install/test-web/nginx-cert

[root@k8s-master01 nginx-cert]# openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=traefix-test.com"

Generating a 2048 bit RSA private key

.................................+++

.........................................................+++

writing new private key to 'tls.key'

-----

- Import the certificate

kubectl -n default create secret tls nginx-test-tls --key=tls.key --cert=tls.crt

- Create the ingress

[root@k8s-master01 test-web]# kubectl create -f traefix-https.yaml

ingress.extensions/nginx-https-test created

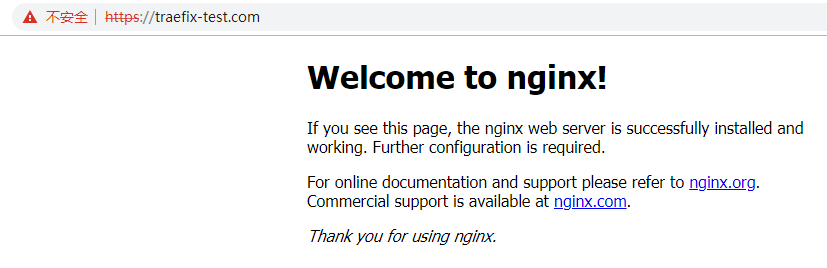

- 访问测试:

- 注:其他方法查看官方文档:https://docs.traefik.io/user-guide/kubernetes/

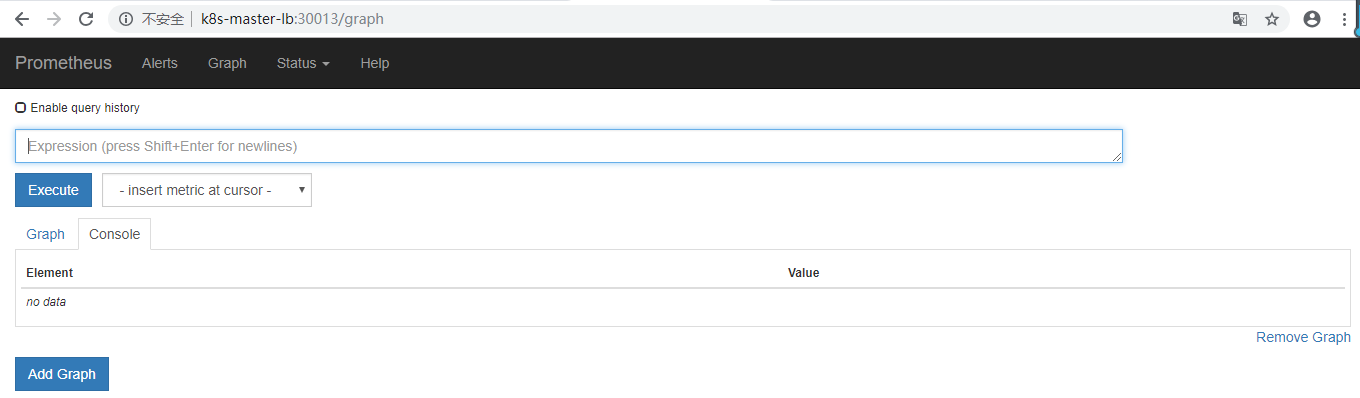

2,prometheus

2.1 安装prometheus

[root@k8s-master01 k8-ha-install]# kubectl apply -f prometheus/

clusterrole.rbac.authorization.k8s.io/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created

configmap/prometheus-server-conf created

deployment.extensions/prometheus created

service/prometheus created

- Check the pod

[root@k8s-master01 k8-ha-install]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

...

prometheus-74869dd67b-t4r2z 1/1 Running 0 105s 172.168.2.5 k8s-node01 <none>

...

2.2 Install and use Grafana (a self-installed Grafana is used here)

wget https://dl.grafana.com/oss/release/grafana-5.4.0-1.x86_64.rpm

yum -y localinstall grafana-5.4.0-1.x86_64.rpm

or

yum install https://s3-us-west-2.amazonaws.com/grafana-releases/release/grafana-4.4.3-1.x86_64.rpm -y

- Start Grafana

systemctl enable grafana-server && systemctl start grafana-server

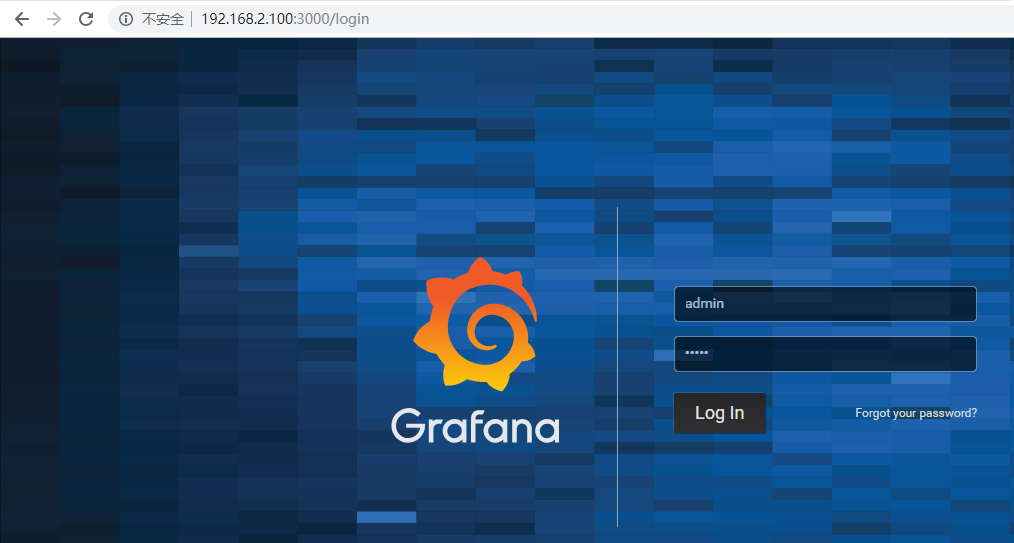

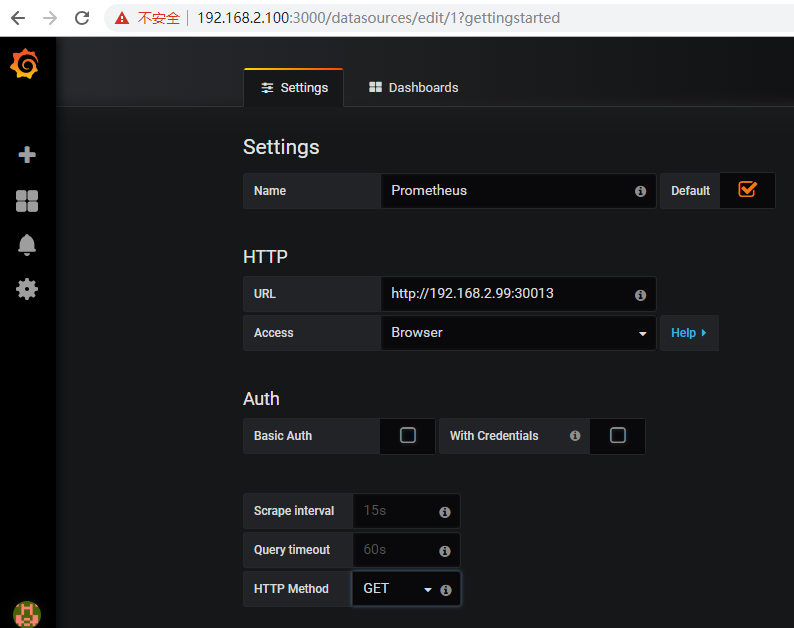

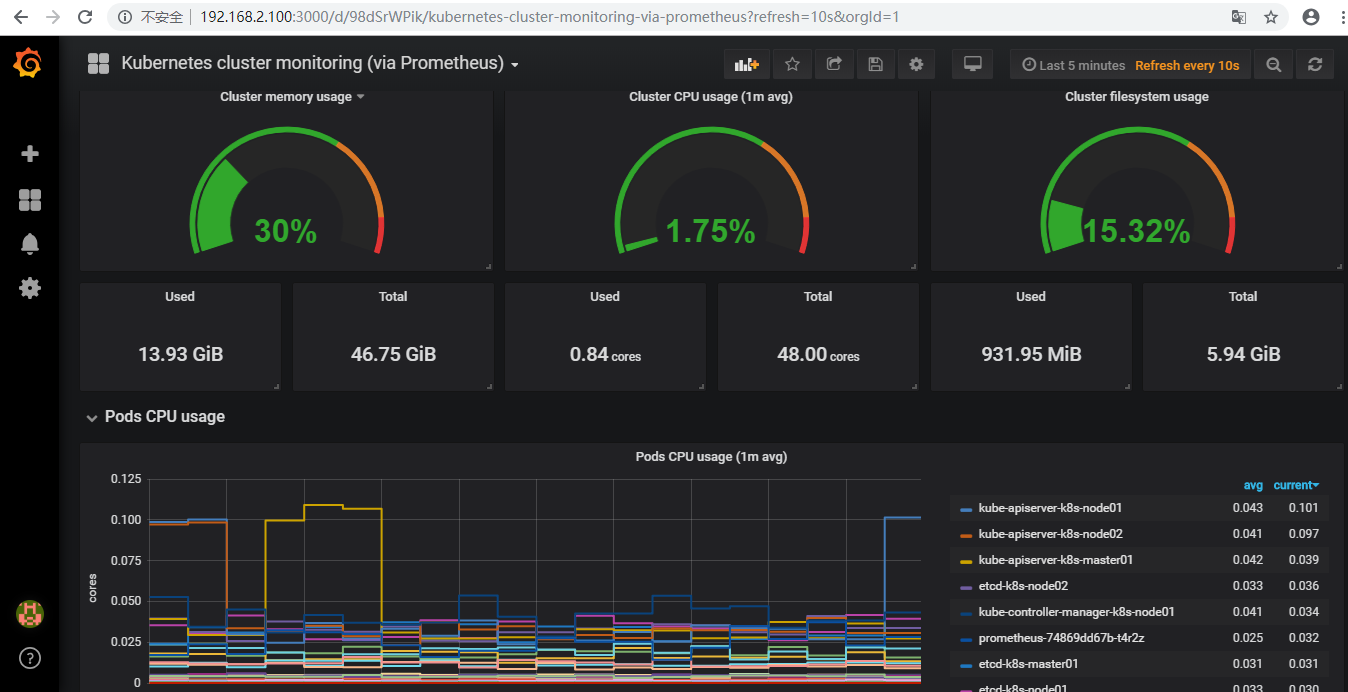

- Open http://192.168.2.100:3000 (username and password are both admin) and configure Prometheus as a DataSource

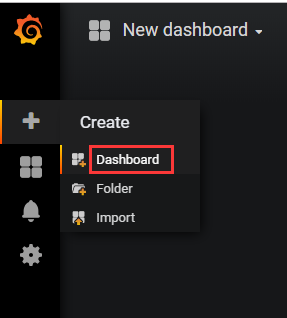

- Create a dashboard

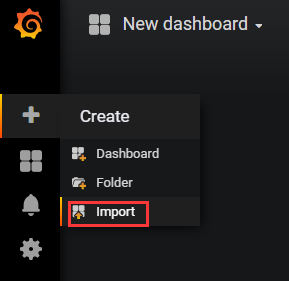

- Import the template: /opt/k8-ha-install/heapster/grafana-dashboard

- View the data

- Grafana documentation: http://docs.grafana.org/

3. Cluster verification

3.1 Verify cluster high availability

- Create a deployment with 3 replicas

[root@k8s-master01 ~]# kubectl run nginx --image=nginx --replicas=3 --port=80

kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.

deployment.apps/nginx created

[root@k8s-master01 ~]# kubectl get deployment --all-namespaces

NAMESPACE NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

default nginx 3 3 3 3 17s

- Check the pods

[root@k8s-master01 ~]# kubectl get pods -l=run=nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-cdb6b5b95-hgxhq 1/1 Running 0 51s 172.168.0.9 k8s-master01 <none>

nginx-cdb6b5b95-mgzwk 1/1 Running 0 51s 172.168.2.8 k8s-node01 <none>

nginx-cdb6b5b95-svnc9 1/1 Running 0 51s 172.168.1.13 k8s-node02 <none>

- Create a service

[root@k8s-master01 ~]# kubectl expose deployment nginx --type=NodePort --port=80

service/nginx exposed

- Check the service

[root@k8s-master01 ~]# kubectl get service -n default

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 25h

nginx NodePort 10.107.27.156 <none> 80:32588/TCP 17s

- Access test: resolve nginx-test.com to any node, and http://nginx-test.com:32588/ becomes reachable
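The port 32588 in that URL is the NodePort half of the `80:32588/TCP` entry in the PORT(S) column. As a small illustration, the sketch below extracts it with plain shell parameter expansion. `node_port` is a hypothetical helper name, and the node IP in the closing comment is an assumption taken from the earlier listings.

```shell
# Hypothetical helper: given a PORT(S) value such as 80:32588/TCP,
# print the node port (the number between ':' and '/').
node_port() {
  rest=${1#*:}          # drop everything up to and including the first ':'
  echo "${rest%%/*}"    # drop the '/TCP' suffix
}

node_port 80:32588/TCP

# The service can then be reached on any node IP, for example:
#   curl http://192.168.2.101:32588/
```

On a live cluster the same value can also be read directly with `kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}'`.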

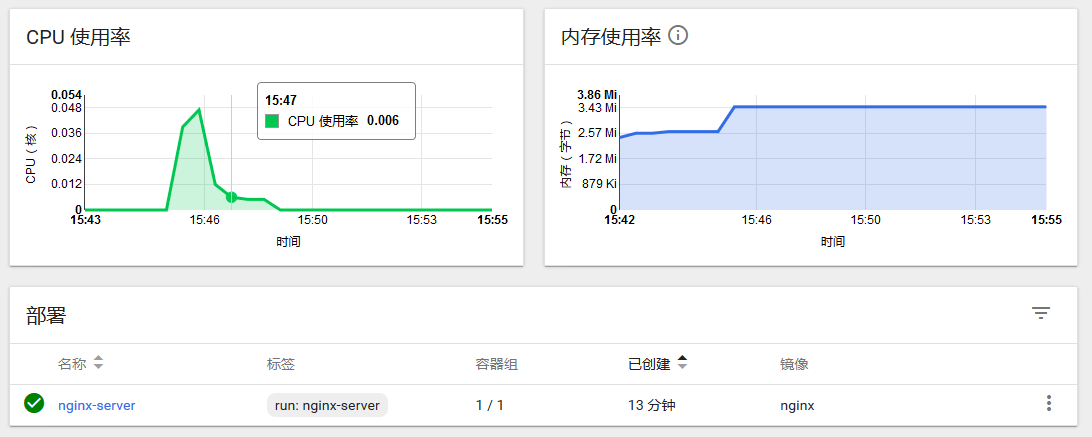

- Test HPA autoscaling

# Create the test deployment

kubectl run nginx-server --requests=cpu=10m --image=nginx --port=80

# Create the service

kubectl expose deployment nginx-server --port=80

# Create the HPA (horizontal pod autoscaler)

kubectl autoscale deployment nginx-server --cpu-percent=10 --min=1 --max=10

- Check the pod

[root@k8s-node01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-server-699678d8d6-76nmt 1/1 Running 0 2m3s

- Check the current ClusterIP of nginx-server

[root@k8s-master01 ~]# kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 26h

nginx-server ClusterIP 10.109.53.60 <none> 80/TCP 25s

- Check the HPA (horizontal pod autoscaler) created above

[root@k8s-node01 ~]# kubectl get horizontalpodautoscaler.autoscaling

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

nginx-server Deployment/nginx-server <unknown>/10% 1 10 1 74s

- Put load on the test service

while true; do wget -q -O- http://10.109.53.60 > /dev/null; done

- Check the current scale-out status

[root@k8s-node01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-server-699678d8d6-52drv 1/1 Running 0 110s

nginx-server-699678d8d6-76nmt 1/1 Running 0 5m52s

nginx-server-699678d8d6-bcqpp 1/1 Running 0 94s

nginx-server-699678d8d6-brk78 1/1 Running 0 109s

nginx-server-699678d8d6-ffl5m 1/1 Running 0 94s

nginx-server-699678d8d6-hnjf4 1/1 Running 0 94s

nginx-server-699678d8d6-kkgwm 1/1 Running 0 79s

nginx-server-699678d8d6-n2kl8 1/1 Running 0 109s

nginx-server-699678d8d6-n7llm 1/1 Running 0 95s

nginx-server-699678d8d6-r7bdq 1/1 Running 0 79s

[root@k8s-node01 ~]# kubectl get horizontalpodautoscaler.autoscaling

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

nginx-server Deployment/nginx-server 60%/10% 1 10 10 5m56s
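The jump to 10 replicas is consistent with the scaling rule documented for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric), clamped to the configured minimum and maximum. The sketch below is a simplified illustration of that rule only. It ignores the controller's tolerance band and its handling of unready pods, and `desired_replicas` is a hypothetical helper name.

```shell
# Hypothetical helper, arguments:
#   current replicas, current CPU %, target CPU %, min replicas, max replicas
desired_replicas() {
  current=$1 metric=$2 target=$3 min=$4 max=$5
  # Integer ceiling of current * metric / target.
  want=$(( (current * metric + target - 1) / target ))
  if [ "$want" -lt "$min" ]; then want=$min; fi
  if [ "$want" -gt "$max" ]; then want=$max; fi
  echo "$want"
}

# Values from the listing above: 10 replicas at 60% against a 10% target,
# with --min=1 --max=10. The raw result (60) is capped at the maximum.
desired_replicas 10 60 10 1 10
```

With no load the metric falls toward 0% and the same rule yields the minimum of 1, which matches the scale-in described next.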

- Stop the load. Once the load ends, the pods scale back in automatically (scale-in takes roughly 10-15 minutes)

[root@k8s-node01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-server-699678d8d6-76nmt 1/1 Running 0 12m

- Delete the test resources

[root@k8s-master01 ~]# kubectl delete deploy,svc,hpa nginx-server

deployment.extensions "nginx-server" deleted

service "nginx-server" deleted

horizontalpodautoscaler.autoscaling "nginx-server" deleted

4. Cluster stability test

- Power off master01

shutdown -h now

- Check from master02

[root@k8s-node01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady master 26h v1.12.3

k8s-node01 Ready master 26h v1.12.3

k8s-node02 Ready master 26h v1.12.3
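When repeating this check during a failover test, it can help to reduce the node listing to just the nodes that are not ready. The sketch below filters `kubectl get nodes` output with awk. `not_ready_nodes` is a hypothetical helper name, and the sample input is copied from the listing above.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready".
# (A cordoned node, shown as "Ready,SchedulingDisabled", is also reported.)
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Sample input from the listing above; on a live cluster use:
#   kubectl get nodes | not_ready_nodes
not_ready_nodes <<'EOF'
NAME STATUS ROLES AGE VERSION
k8s-master01 NotReady master 26h v1.12.3
k8s-node01 Ready master 26h v1.12.3
k8s-node02 Ready master 26h v1.12.3
EOF
```

An empty result after master01 is powered back on indicates that every node has returned to Ready.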

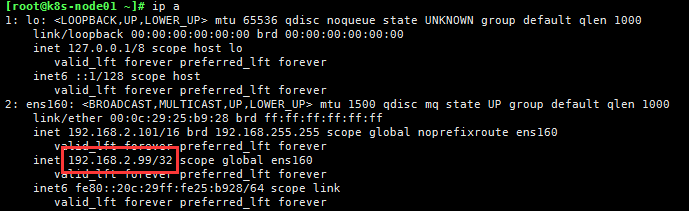

- The VIP has failed over to master02 (node02)

- Access test

- Power master01 back on

- Check the node status

[root@k8s-node01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready master 26h v1.12.3

k8s-node01 Ready master 26h v1.12.3

k8s-node02 Ready master 26h v1.12.3

- Check all pods

[root@k8s-master01 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-node-9cw9r 2/2 Running 0 23h

calico-node-l6m78 2/2 Running 0 23h

calico-node-lhqg9 2/2 Running 2 23h

coredns-6c66ffc55b-8rlpp 1/1 Running 0 26h

coredns-6c66ffc55b-qdvfg 1/1 Running 0 26h

etcd-k8s-master01 1/1 Running 1 62s

etcd-k8s-node01 1/1 Running 0 26h

etcd-k8s-node02 1/1 Running 0 26h

heapster-56c97d8b49-qp97p 1/1 Running 0 23h

kube-apiserver-k8s-master01 1/1 Running 1 62s

kube-apiserver-k8s-node01 1/1 Running 0 23h

kube-apiserver-k8s-node02 1/1 Running 0 23h

kube-controller-manager-k8s-master01 1/1 Running 1 62s

kube-controller-manager-k8s-node01 1/1 Running 0 26h

kube-controller-manager-k8s-node02 1/1 Running 1 26h

kube-proxy-62hjm 1/1 Running 0 26h

kube-proxy-79fbq 1/1 Running 0 26h

kube-proxy-jsnhl 1/1 Running 1 26h

kube-scheduler-k8s-master01 1/1 Running 3 63s

kube-scheduler-k8s-node01 1/1 Running 0 26h

kube-scheduler-k8s-node02 1/1 Running 0 26h

kubernetes-dashboard-64d4f8997d-wrfmm 1/1 Running 0 18m

metrics-server-b6bc985c4-mltp8 1/1 Running 0 18m

monitoring-grafana-6f8dc9f99f-bwrrt 1/1 Running 0 23h

monitoring-influxdb-556dcc774d-q7pk5 1/1 Running 0 18m

prometheus-74869dd67b-t4r2z 1/1 Running 0 147m

traefik-ingress-controller-njmzh 1/1 Running 0 4h40m

traefik-ingress-controller-q74l8 1/1 Running 1 4h40m

traefik-ingress-controller-sqv9z 1/1 Running 0 4h40m

- Access test