PhiData 一款开发AI搜索、agents智能体和工作流应用的AI框架

引言

在人工智能领域,构建一个能够理解并响应用户需求的智能助手是一项挑战性的任务。PhiData作为一个开源框架,为开发者提供了构建具有长期记忆、丰富知识和强大工具的AI助手的可能性。本文将介绍PhiData的核心优势、应用示例以及如何使用PhiData来构建自己的AI助手。

PhiData的设计理念如下,一个Assistant是以LLM为核心,加上长期记忆(Memory)、知识(结构化、非结构化)和工具(Tools,比如搜索、api调用等),这样就组成了一个完整的Assistant。

基于这些设计理念,PhiData可以帮助用户方便的构建助手应用,这些助手不仅拥有长期记忆,能够记住与用户的每一次对话,还具备丰富的业务知识和执行各种动作的能力。

如何使用PhiData

使用PhiData非常简单,以下是基本步骤:

- 创建助手:首先,你需要创建一个Assistant对象。

- 添加组件:然后,为助手添加所需的工具、知识和存储。

- 服务部署:最后,使用Streamlit、FastApi或Django等工具,将你的AI助手部署为一个应用程序。

比如,我们构建一个搜索助手:

首先创建 assistant.py

from phi.assistant import Assistant

from phi.tools.duckduckgo import DuckDuckGo

assistant = Assistant(tools=[DuckDuckGo()], show_tool_calls=True)

assistant.print_response("Whats happening in France?", markdown=True)

安装依赖库, 然后设置OPENAI_API_KEY 后就可以执行 Assistant

pip install openai duckduckgo-search

export OPENAI_API_KEY=sk-xxxx

python assistant.py

当然,如果没有openai的api,可以去免费申请Groq,然后LLM设置为groq:

from phi.llm.groq import Groq

assistant = Assistant(

llm=Groq(model="mixtral-8x7b-32768", api_key="your_api_key"),

tools=[DuckDuckGo(proxies={"https": "http://127.0.0.1:7890"})],

show_tool_calls=True,

markdown=True,

)

assistant.print_response("历史上的5月23日发生了什么大事")

我们运行,就等得到类似下面的结果:

结果尚可,但是回复用的英文,应该是框架默认的prompt是英文的缘故

应用示例

官方提供了一些example

- LLM OS: 使用大型语言模型(LLMs)作为核心处理单元来构建一个新兴的操作系统.

- Autonomous RAG: 自主RAG 结合检索和生成任务的模型,赋予了LLMs搜索它们的知识库、互联网或聊天记录的能力。

- Local RAG: 本地RAG 利用Ollama和PgVector等技术,使得RAG模型可以完全在本地运行。

- Investment Researcher: 投资研究员 利用Llama3和Groq生成关于股票的投资报告.

- News Articles: 使用Llama3和Groq撰写新闻文章。

- Video Summaries: 使用Llama3和Groq生成YouTube视频的摘要。

- Research Assistant: 利用Llama3和Groq帮助研究人员编写研究报告。

PhiData开发agents

官方仓库的cookbook目录里有一个demo agents程序,使用streamlit开发了交互界面,官方默认是用的open ai的GPT4。我们简单修改,支持groq。

左边支持选择模型,选择Tools与Assistant。我们来一探究竟,这里的tools与Assistant是如何协作的。

运行这个agents,我们从debug信息可以看到prompt。

DEBUG ============== system ==============

DEBUG You are a powerful AI Agent called `Optimus Prime v7`.

You have access to a set of tools and a team of AI Assistants at your disposal.

Your goal is to assist the user in the best way possible.

You must follow these instructions carefully:

<instructions>

1. When the user sends a message, first **think** and determine if:

- You can answer by using a tool available to you

- You need to search the knowledge base

- You need to search the internet

- You need to delegate the task to a team member

- You need to ask a clarifying question

2. If the user asks about a topic, first ALWAYS search your knowledge base using the `search_knowledge_base` tool.

3. If you dont find relevant information in your knowledge base, use the `duckduckgo_search` tool to search the internet.

4. If the user asks to summarize the conversation or if you need to reference your chat history with the user, use the `get_chat_history` tool.

5. If the users message is unclear, ask clarifying questions to get more information.

6. Carefully read the information you have gathered and provide a clear and concise answer to the user.

7. Do not use phrases like 'based on my knowledge' or 'depending on the information'.

8. You can delegate tasks to an AI Assistant in your team depending of their role and the tools available to them.

9. Use markdown to format your answers.

10. The current time is 2024-05-23 12:25:32.410842

11. You can use the `read_file` tool to read a file, `save_file` to save a file, and `list_files` to list files in the working directory.

12. To answer questions about my favorite movies, delegate the task to the `Data Analyst`.

13. To write and run python code, delegate the task to the `Python Assistant`.

14. To write a research report, delegate the task to the `Research Assistant`. Return the report in the <report_format> to the user as is, without any

additional text like 'here is the report'.

15. To get an investment report on a stock, delegate the task to the `Investment Assistant`. Return the report in the <report_format> to the user without any

additional text like 'here is the report'.

16. Answer any questions they may have using the information in the report.

17. Never provide investment advise without the investment report.

18. Use markdown to format your answers.

19. The current time is 2024-05-23 12:27:35.569693

20. You can use the `read_file` tool to read a file, `save_file` to save a file, and `list_files` to list files in the working directory.

21. To answer questions about my favorite movies, delegate the task to the `Data Analyst`.

22. To write and run python code, delegate the task to the `Python Assistant`.

23. To write a research report, delegate the task to the `Research Assistant`. Return the report in the <report_format> to the user as is, without any

additional text like 'here is the report'.

24. To get an investment report on a stock, delegate the task to the `Investment Assistant`. Return the report in the <report_format> to the user without any

additional text like 'here is the report'.

25. Answer any questions they may have using the information in the report.

26. Never provide investment advise without the investment report.

</instructions>

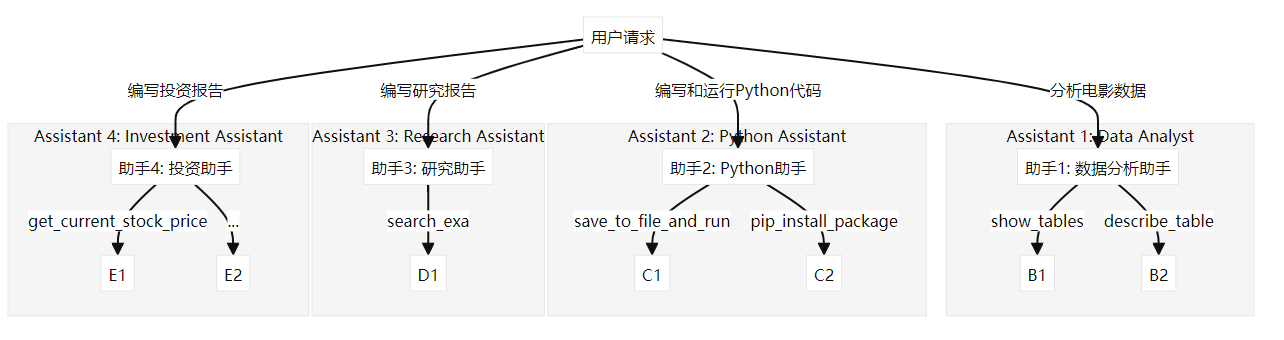

You can delegate tasks to the following assistants:

<assistants>

Assistant 1:

Name: Data Analyst

Role: Analyze movie data and provide insights

Available tools: show_tables, describe_table, inspect_query, run_query, create_table_from_path, summarize_table, export_table_to_path, save_file

Assistant 2:

Name: Python Assistant

Role: Write and run python code

Available tools: save_to_file_and_run, pip_install_package

Assistant 3:

Name: Research Assistant

Role: Write a research report on a given topic

Available tools: search_exa

Assistant 4:

Name: Investment Assistant

Role: Write a investment report on a given company (stock) symbol

Available tools: get_current_stock_price, get_company_info, get_analyst_recommendations, get_company_news

</assistants>

DEBUG ============== assistant ==============

DEBUG Hi, I'm Optimus Prime v7, your powerful AI Assistant. Send me on my mission boss :statue_of_liberty:

DEBUG ============== user ==============

DEBUG 英伟达最新财报

DEBUG ============== assistant ==============

DEBUG I understand you're looking for the Nvidia (Engligh Wade) latest financial report. To achieve this task, I need to delegate it to the Investment Assistant as

it's their area of expertise and has the necessary tools available for this job.

Here is the result:

Tool use failed: <tool-use>

{

"tool_call": {

"id": "pending",

"type": "function",

"function": {

"name": "get_investment_report"

},

"parameters": {

"symbol": "NVDA"

}

}

}

</tool-use>

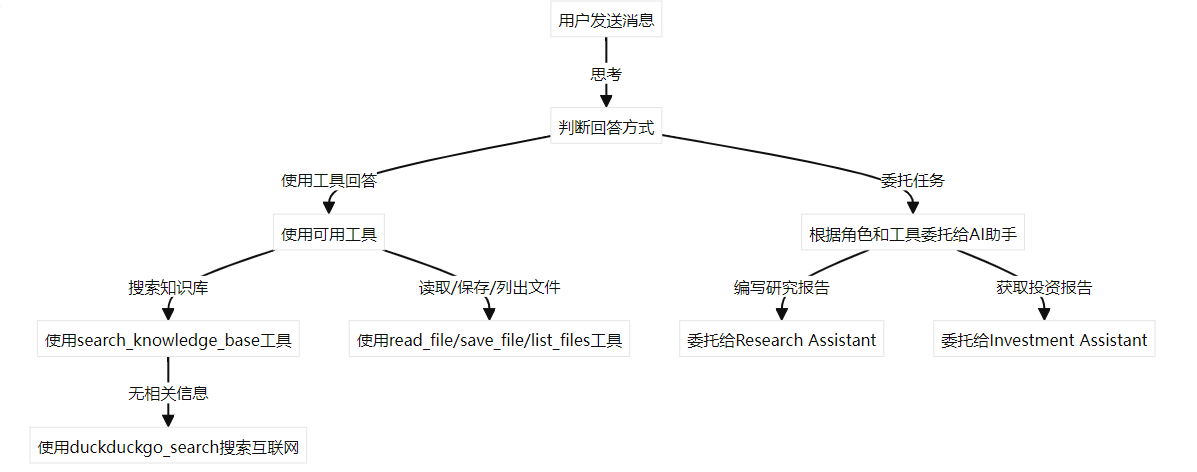

上面的instructions 内容较多,我们抽主要的内容画一个graph图,就方便理解了:

- 让LLM可以通过tools来处理一些具体的问题

- 对于一些类别的任务,可以委托给特定的助手,比如研究报告、投资助手等

然后针对可以委托的助手,prompt增加了具体的描述,方便LLM去调度,每个助手内部也有各种tools,LLM可以选择合适的。

当我们问英伟达最新财报, LLM 识别到需求,选用Investment Assistant助手,然后调用get_investment_report 工具

ps: 笔者运行环境中这个工具调用失败了

再换个问题, 今天法国发生了什么, 这个成功调用了DD搜索。

============== user ==============

DEBUG 今天法国发生了什么

DEBUG Time to generate response: 6.3184s

DEBUG ============== assistant ==============

DEBUG It seems there isn't a specific tool that provides the information you're asking for, so here's the answer to your question:

Today's news from France include:

- According to Reuters, trade unions at the French ports of Le Havre and Marseille-Fos have postponed planned strikes initially planned for today and

tomorrow. (<https://www.reuters.com/business/retail-consumer/french-port-workers-postpone-strike-action-2023-05-23/>)

- Le Point (French news outlet) reports that police detained 23 people after clashes near a migrant camp on the outskirts of Paris on Monday night.

(<https://www.lepoint.fr/societe/immigration/revolte-dans-un-camp-de-migrants-a-paris-01-05-2023-2429234_26.php>)

- French Prime Minister Élisabeth Borne visited the damaged areas of the flooded Aude and Hérault regions.

(<https://www.france24.com/fr/france/20230523-les-inondations-en-aude-et-herault-elisabeth-borne-sur-le-terrain-pour-rencontrer-les-sinistres>)

PhiData开发workflow应用

当前像coze、dify 这样的产品都支持workflow功能,可以可视化的定义workflow来解决一些相对负责的问题,而PhiData提供了通过code编排workflow的功能。

PhiData的workflow是可以串联assistant, 比如下面的例子:

import json

import httpx

from phi.assistant import Assistant

from phi.llm.groq import Groq

from phi.workflow import Workflow, Task

from phi.utils.log import logger

llm_id: str = "llama3-70b-8192"

def get_top_hackernews_stories(num_stories: int = 10) -> str:

"""Use this function to get top stories from Hacker News.

Args:

num_stories (int): Number of stories to return. Defaults to 10.

Returns:

str: JSON string of top stories.

"""

# Fetch top story IDs

response = httpx.get("https://hacker-news.firebaseio.com/v0/topstories.json")

story_ids = response.json()

# Fetch story details

stories = []

for story_id in story_ids[:num_stories]:

story_response = httpx.get(f"https://hacker-news.firebaseio.com/v0/item/{story_id}.json")

story = story_response.json()

story["username"] = story["by"]

stories.append(story)

return json.dumps(stories)

def get_user_details(username: str) -> str:

"""Use this function to get the details of a Hacker News user using their username.

Args:

username (str): Username of the user to get details for.

Returns:

str: JSON string of the user details.

"""

try:

logger.info(f"Getting details for user: {username}")

user = httpx.get(f"https://hacker-news.firebaseio.com/v0/user/{username}.json").json()

user_details = {

"id": user.get("user_id"),

"karma": user.get("karma"),

"about": user.get("about"),

"total_items_submitted": len(user.get("submitted", [])),

}

return json.dumps(user_details)

except Exception as e:

logger.exception(e)

return f"Error getting user details: {e}"

groq = Groq(model=llm_id, api_key="你的API KEY")

hn_top_stories = Assistant(

name="HackerNews Top Stories",

llm=groq,

tools=[get_top_hackernews_stories],

show_tool_calls=True,

)

hn_user_researcher = Assistant(

name="HackerNews User Researcher",

llm=groq,

tools=[get_user_details],

show_tool_calls=True,

)

writer = Assistant(

name="HackerNews Writer",

llm=groq,

show_tool_calls=False,

markdown=True

)

hn_workflow = Workflow(

llm=groq,

name="HackerNews 工作流",

tasks=[

Task(description="Get top hackernews stories", assistant=hn_top_stories, show_output=False),

Task(description="Get information about hackernews users", assistant=hn_user_researcher, show_output=False),

Task(description="写一篇有吸引力的介绍文章,用中文输出", assistant=writer),

],

debug_mode=True,

)

# 写一篇关于在 HackerNews 上拥有前两个热门故事的用户的报告

hn_workflow.print_response("Write a report about the users with the top 2 stories on hackernews", markdown=True)

定义了三个assistant, 获取hackernews排行榜,获取文章的作者,生成文章,通过Workflow来实现这三个assistant的编排。

运行结果:

┌──────────┬──────────────────────────────────────────────────────────────────┐

│ │ Write a report about the users with the top 2 stories on │

│ Message │ hackernews │

├──────────┼──────────────────────────────────────────────────────────────────┤

│ Response │ ┌──────────────────────────────────────────────────────────────┐ │

│ (17.0s) │ │ ** HackerNews 知名人物介绍 ** │ │

│ │ └──────────────────────────────────────────────────────────────┘ │

│ │ │

│ │ 近日,我们对 HackerNews │

│ │ 的前两名热门故事进行了分析,并对这些故事背后的用户进行了深入挖 … │

│ │ │

│ │ │

│ │ ** 排名第一:onhacker ** │

│ │ │

│ │ 以 258 分领跑 HackerNews排行榜的用户是 │

│ │ onhacker,他的故事《Windows 10 │

│ │ 壁纸原来是真实拍摄的(2015)》引起了大家的关注。onhacker 的 │

│ │ HackerNews 账户信息显示,他的 karma 分数为 106,共提交了 27 │

│ │ 条内容。他的个人介绍页面上写道:“distributing distrubuted to │

│ │ distrub”,显露出了他幽默的一面。 │

│ │ │

│ │ │

│ │ ** 排名第二:richardatlarge ** │

│ │ │

│ │ 排在第二位的用户是 richardatlarge,他的故事《OpenAI 并未复制 │

│ │ Scarlett Johansson 的声音,记录显示》获得了 102 │

│ │ 分。richardatlarge 的 HackerNews 账户信息显示,他的 karma │

│ │ 分数高达 1095,共提交了 354 │

│ │ 条内容。他是一名来自美国、现居新西兰的作家,个人介绍页面上留下 … │

│ │ from the US, living in NZ”。 │

│ │ │

│ │ 通过这两位用户的介绍,我们可以看到他们在 HackerNews │

│ │ 社区中的影响力和贡献。他们热衷于分享信息和想法,引发了大家的热 … │

└──────────┴──────────────────────────────────────────────────────────────────┘

llama3 的中文被弱化了,结果比较一般。

总结

PhiData以其强大的功能集成和灵活的部署选项,为AI产品开发提供了极大的便利和高效性。它为构建智能AI助手提供了一个全新的视角,让开发者能够探索AI的无限可能。如果你对构建AI产品感兴趣,不妨试试PhiData。

浙公网安备 33010602011771号

浙公网安备 33010602011771号