Using an LLM to Translate the Abstract List of a Paper Collection


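While reading papers, I found that an LLM can translate a collection's whole list of abstracts in one batch. The result can be exported to Markdown and then imported into a note-taking app.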

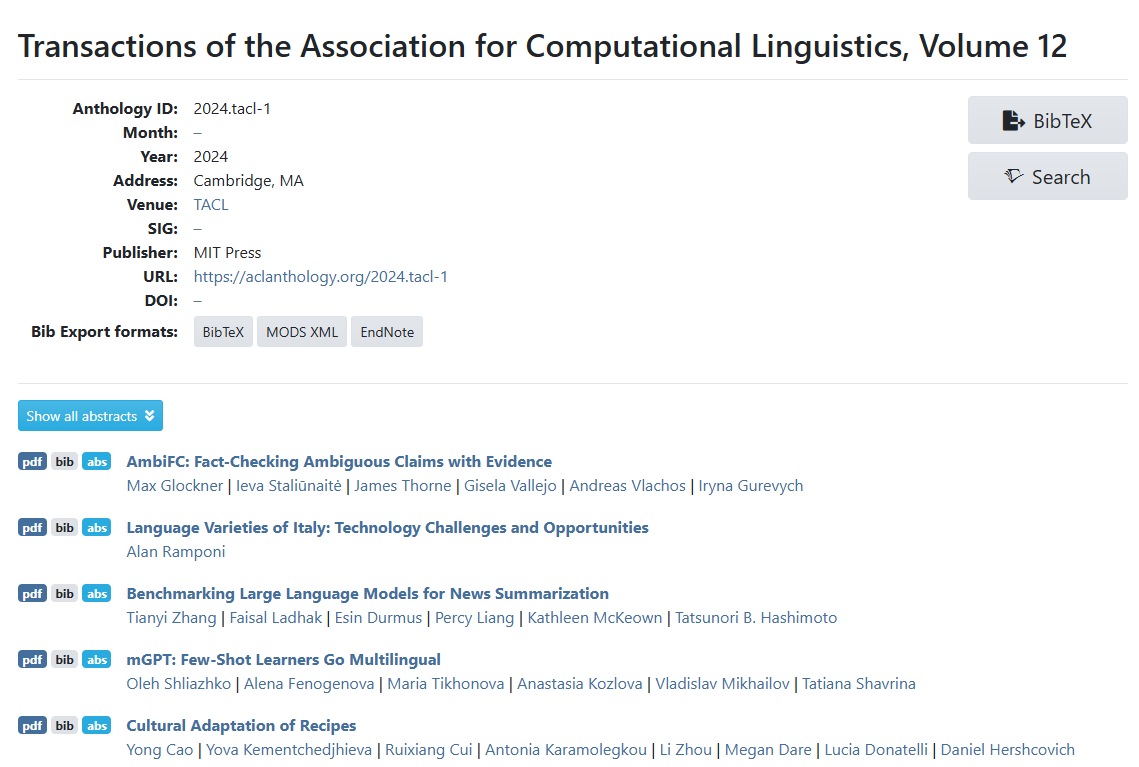

Take the page Transactions of the Association for Computational Linguistics, Volume 12 - ACL Anthology as an example.

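Copying the paper list from that page gives text like the following. The structure is quite regular: the string `pdfbibabs` separates the papers (it appears to come from the pdf, bib and abs buttons beside each entry), each paper occupies three lines (title, authors, abstract), and the authors are separated by `|`.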

```text
pdfbibabs
AmbiFC: Fact-Checking Ambiguous Claims with Evidence
Max Glockner | Ieva Staliūnaitė | James Thorne | Gisela Vallejo | Andreas Vlachos | Iryna Gurevych
Automated fact-checking systems verify claims against evidence to predict their veracity. In real-world scenarios, the retrieved evidence may not unambiguously support or refute the claim and yield conflicting but valid interpretations. Existing fact-checking datasets assume that the models developed with them predict a single veracity label for each claim, thus discouraging the handling of such ambiguity. To address this issue we present AmbiFC,1 a fact-checking dataset with 10k claims derived from real-world information needs. It contains fine-grained evidence annotations of 50k passages from 5k Wikipedia pages. We analyze the disagreements arising from ambiguity when comparing claims against evidence in AmbiFC, observing a strong correlation of annotator disagreement with linguistic phenomena such as underspecification and probabilistic reasoning. We develop models for predicting veracity handling this ambiguity via soft labels, and find that a pipeline that learns the label distribution for sentence-level evidence selection and veracity prediction yields the best performance. We compare models trained on different subsets of AmbiFC and show that models trained on the ambiguous instances perform better when faced with the identified linguistic phenomena.
pdfbibabs
Language Varieties of Italy: Technology Challenges and Opportunities
Alan Ramponi
Italy is characterized by a one-of-a-kind linguistic diversity landscape in Europe, which implicitly encodes local knowledge, cultural traditions, artistic expressions, and history of its speakers. However, most local languages and dialects in Italy are at risk of disappearing within a few generations. The NLP community has recently begun to engage with endangered languages, including those of Italy. Yet, most efforts assume that these varieties are under-resourced language monoliths with an established written form and homogeneous functions and needs, and thus highly interchangeable with each other and with high-resource, standardized languages. In this paper, we introduce the linguistic context of Italy and challenge the default machine-centric assumptions of NLP for Italy’s language varieties. We advocate for a shift in the paradigm from machine-centric to speaker-centric NLP, and provide recommendations and opportunities for work that prioritizes languages and their speakers over technological advances. To facilitate the process, we finally propose building a local community towards responsible, participatory efforts aimed at supporting vitality of languages and dialects of Italy.
```

Preprocess it directly into a JSON file:

```python
import json

with open('data/paper_with_abs_ori.txt', 'r', encoding='utf-8') as f:
    content = f.read()

# Split the text into individual papers
papers = content.strip().split('pdfbibabs')
papers = [p.strip() for p in papers]
papers = [p for p in papers if p != '']

# Parse each paper: line 1 = title, line 2 = authors, remaining lines = abstract
papers_with_abs = []
for paper in papers:
    lines = paper.splitlines()
    title = lines[0].strip()
    authors = lines[1].strip().split(' | ')
    authors = [a.strip() for a in authors]
    abstract = '\n'.join([line.strip() for line in lines[2:]]).strip()
    papers_with_abs.append({
        'title': title,
        'authors': authors,
        'abstract': abstract
    })

# Save the result
with open('data/paper_with_abs.json', 'w', encoding='utf-8') as f:
    json.dump(papers_with_abs, f, ensure_ascii=False, indent=4)
```

The preprocessing script produces the following (first entry shown):

```json
[
    {
        "title": "AmbiFC: Fact-Checking Ambiguous Claims with Evidence",
        "authors": [
            "Max Glockner",
            "Ieva Staliūnaitė",
            "James Thorne",
            "Gisela Vallejo",
            "Andreas Vlachos",
            "Iryna Gurevych"
        ],
        "abstract": "Automated fact-checking systems verify claims against evidence to predict their veracity. In real-world scenarios, the retrieved evidence may not unambiguously support or refute the claim and yield conflicting but valid interpretations. Existing fact-checking datasets assume that the models developed with them predict a single veracity label for each claim, thus discouraging the handling of such ambiguity. To address this issue we present AmbiFC,1 a fact-checking dataset with 10k claims derived from real-world information needs. It contains fine-grained evidence annotations of 50k passages from 5k Wikipedia pages. We analyze the disagreements arising from ambiguity when comparing claims against evidence in AmbiFC, observing a strong correlation of annotator disagreement with linguistic phenomena such as underspecification and probabilistic reasoning. We develop models for predicting veracity handling this ambiguity via soft labels, and find that a pipeline that learns the label distribution for sentence-level evidence selection and veracity prediction yields the best performance. We compare models trained on different subsets of AmbiFC and show that models trained on the ambiguous instances perform better when faced with the identified linguistic phenomena."
    },
```
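The preprocessing script assumes every chunk has at least a title line and an author line. A chunk with only one line makes `lines[1]` raise `IndexError`, and a chunk with only two lines silently yields an empty abstract. Below is a minimal sketch of a more defensive variant. `parse_papers` is a hypothetical helper name of my own. Unlike the original, it drops stray blank lines before indexing, splits authors on `|` without requiring surrounding spaces, and returns malformed chunks for manual review instead of crashing:

```python
def parse_papers(text: str, sep: str = "pdfbibabs") -> tuple[list[dict], list[str]]:
    """Split copied ACL Anthology text into papers; return (parsed, skipped_chunks).

    Chunks with fewer than three non-empty lines (title, authors, abstract)
    are collected in `skipped` instead of raising IndexError.
    """
    parsed, skipped = [], []
    for chunk in text.split(sep):
        # Drop blank lines so a stray empty line cannot shift title/authors/abstract
        lines = [ln.strip() for ln in chunk.splitlines() if ln.strip()]
        if not lines:
            continue  # e.g. the empty text before the first separator
        if len(lines) < 3:
            skipped.append(chunk.strip())
            continue
        parsed.append({
            "title": lines[0],
            "authors": [a.strip() for a in lines[1].split("|")],
            "abstract": "\n".join(lines[2:]),
        })
    return parsed, skipped
```

Printing `skipped` after a run shows which entries need a look before they are sent to the API.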

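Next, translate the abstracts through an LLM API. I use the `openai` Python library here. It is not limited to OpenAI's own models, because the Tongyi Qianwen (Qwen) API is also compatible with it.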

"""

读取从ACL论文网站复制来的论文集摘要列表,调用OpenAI API逐一翻译摘要。

"""

import json

import openai

client = openai.OpenAI(

base_url="填写自己的服务url",

api_key="填写自己的API Key"

)

def call_with_openai(prompt_sys, prompt):

response = client.chat.completions.create(

# model="gpt-3.5-turbo",

model="gpt-4o-mini",

messages=[

{"role": "system", "content": prompt_sys},

{"role": "user", "content": prompt}

],

# temperature=0.7,

# max_tokens=256,

# top_p=1,

# frequency_penalty=0,

# presence_penalty=0

)

return response.choices[0].message.content

prompt_sys = """

你是一个专业的学术论文翻译,请将输入的文本翻译成中文。

""".strip()

prompt_pattern = """

请将下述论文摘要翻译成中文。

{abstract}

""".strip()

# 读取预处理的文件。

papers = json.load(open('data/paper_with_abs.json', 'r', encoding='utf-8'))

for i, paper in enumerate(papers):

print(f'{i}/{len(papers)}')

abstract = paper['abstract']

prompt = prompt_pattern.format(abstract=abstract)

result = call_with_openai(prompt_sys, prompt)

print(result)

paper['abstract_cn'] = result

json.dump(papers, open('data/paper_with_abs_cn.json', 'w', encoding='utf-8'), ensure_ascii=False, indent=4)
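The script talks to whatever service `base_url` points at, which is why the same code works with Qwen. Below is a minimal sketch of keeping both providers as presets so switching is a one-word change. `PROVIDERS` and `provider_config` are hypothetical names. The DashScope compatible-mode URL and the `qwen-plus` model name are my assumptions, so check them against the provider's current documentation:

```python
# Provider presets: URLs and model names are assumptions to verify in each provider's docs
PROVIDERS = {
    "openai": {
        "base_url": "https://api.openai.com/v1",
        "model": "gpt-4o-mini",
    },
    "qwen": {
        # DashScope's OpenAI-compatible endpoint (assumed; region-specific URLs may differ)
        "base_url": "https://dashscope.aliyuncs.com/compatible-mode/v1",
        "model": "qwen-plus",
    },
}


def provider_config(name: str, api_key: str) -> tuple[dict, str]:
    """Return (keyword arguments for openai.OpenAI(...), model name) for a preset."""
    preset = PROVIDERS[name]
    return {"base_url": preset["base_url"], "api_key": api_key}, preset["model"]
```

Usage: `kwargs, model = provider_config("qwen", api_key)`, then `client = openai.OpenAI(**kwargs)`, and pass `model=model` to `client.chat.completions.create`.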

Running the translation script produces output like this (first entry shown):

```json
[
    {
        "title": "AmbiFC: Fact-Checking Ambiguous Claims with Evidence",
        "authors": [
            "Max Glockner",
            "Ieva Staliūnaitė",
            "James Thorne",
            "Gisela Vallejo",
            "Andreas Vlachos",
            "Iryna Gurevych"
        ],
        "abstract": "Automated fact-checking systems verify claims against evidence to predict their veracity. In real-world scenarios, the retrieved evidence may not unambiguously support or refute the claim and yield conflicting but valid interpretations. Existing fact-checking datasets assume that the models developed with them predict a single veracity label for each claim, thus discouraging the handling of such ambiguity. To address this issue we present AmbiFC,1 a fact-checking dataset with 10k claims derived from real-world information needs. It contains fine-grained evidence annotations of 50k passages from 5k Wikipedia pages. We analyze the disagreements arising from ambiguity when comparing claims against evidence in AmbiFC, observing a strong correlation of annotator disagreement with linguistic phenomena such as underspecification and probabilistic reasoning. We develop models for predicting veracity handling this ambiguity via soft labels, and find that a pipeline that learns the label distribution for sentence-level evidence selection and veracity prediction yields the best performance. We compare models trained on different subsets of AmbiFC and show that models trained on the ambiguous instances perform better when faced with the identified linguistic phenomena.",
        "abstract_cn": "自动化事实检验系统通过将主张与证据进行核对来预测其真实性。在现实场景中,检索到的证据可能并不明确支持或反驳该主张,并可能产生冲突但有效的解释。现有的事实检验数据集假设使用他们开发的模型为每个主张预测一个单一的真实性标签,从而抑制了对这种模糊性的处理。为了解决这个问题,我们提出了AmbiFC,一个包含来自现实信息需求的10,000个主张的事实检验数据集。它包含来自5,000个维基百科页面的50,000篇段落的细粒度证据注释。我们分析了在比较AmbiFC中的主张与证据时,由于模糊性所引起的分歧,观察到注释者之间的分歧与语言现象(如不足规范性和概率推理)之间存在强相关性。我们开发了处理这种模糊性的真实性预测模型,采用软标签,发现一个学习句子级证据选择和真实性预测标签分布的流程能够实现最佳性能。我们比较了在AmbiFC不同子集上训练的模型,结果表明在处理已识别的语言现象时,在模糊实例上训练的模型表现更好。"
    },
```
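The translation loop only writes the JSON file after the last abstract is done. A network error or rate limit halfway through therefore loses every finished translation. Below is a minimal sketch of a resumable version. `translate_all` is a hypothetical helper, and the API call is passed in as the `translate` function so the loop can be tried without a network. It retries with exponential backoff, skips papers that already have `abstract_cn`, and saves after each success via a temporary file:

```python
import json
import os
import time
from typing import Callable


def translate_all(papers: list[dict], translate: Callable[[str], str], out_path: str,
                  retries: int = 3, backoff: float = 2.0) -> None:
    """Fill paper['abstract_cn'] for every paper, saving after each success.

    Papers that already have 'abstract_cn' are skipped, so rerunning after a
    crash (with papers loaded from out_path) resumes where it stopped.
    """
    for i, paper in enumerate(papers):
        if paper.get("abstract_cn"):
            continue  # translated in an earlier run
        for attempt in range(retries):
            try:
                paper["abstract_cn"] = translate(paper["abstract"])
                break
            except Exception as exc:  # network errors, rate limits, ...
                if attempt == retries - 1:
                    raise
                wait = backoff * (2 ** attempt)
                print(f"paper {i}: {exc!r}, retrying in {wait:.0f}s")
                time.sleep(wait)
        # Write to a temp file, then swap it in, so a crash mid-write keeps the old file
        tmp = out_path + ".tmp"
        with open(tmp, "w", encoding="utf-8") as f:
            json.dump(papers, f, ensure_ascii=False, indent=4)
        os.replace(tmp, out_path)
```

With the script above, `translate` could be `lambda text: call_with_openai(prompt_sys, prompt_pattern.format(abstract=text))`. To resume, load `papers` from `data/paper_with_abs_cn.json` if it exists, else from `data/paper_with_abs.json`.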

Finally, convert the JSON results to Markdown so they are easy to import into a note-taking app. Reference code:

```python
import json

# Markdown template for one paper
pattern_md = """
- `Title`: {title}
- `Authors`: {authors}
> {abstract_cn}
""".strip()

with open('data/paper_with_abs_cn.json', 'r', encoding='utf-8') as f:
    papers = json.load(f)

content_md = []
for paper in papers:
    title = paper['title']
    authors = ' | '.join(paper['authors'])
    abstract_cn = paper['abstract_cn']
    md = pattern_md.format(title=title, authors=authors, abstract_cn=abstract_cn)
    content_md.append(md)

# Separate papers with a horizontal rule
content_md = '\n--------------------------\n'.join(content_md)

with open('data/paper_with_abs_cn.md', 'w', encoding='utf-8') as f:
    f.write(content_md)
```
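The template puts a single `>` in front of `{abstract_cn}`. If the model returns a translation with several paragraphs, the blank line between them ends the block quote in Markdown, and the remaining paragraphs render as ordinary text. Below is a minimal sketch that quotes every line instead. `to_blockquote` and `paper_to_md` are hypothetical helper names:

```python
def to_blockquote(text: str) -> str:
    """Prefix every line with '> ' so multi-paragraph text stays in one quote."""
    lines = text.strip().splitlines()
    # A bare '>' keeps a blank line inside the quote instead of ending it
    return "\n".join("> " + line if line.strip() else ">" for line in lines)


def paper_to_md(paper: dict) -> str:
    """Render one paper in the same layout as pattern_md."""
    return "\n".join([
        f"- `Title`: {paper['title']}",
        f"- `Authors`: {' | '.join(paper['authors'])}",
        to_blockquote(paper["abstract_cn"]),
    ])
```

To use it, build each entry with `paper_to_md(paper)` instead of `pattern_md.format(...)` and join the entries with the same separator as before.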

The final Markdown written by the conversion script looks like this:

- `Title`: AmbiFC: Fact-Checking Ambiguous Claims with Evidence

- `Authors`: Max Glockner | Ieva Staliūnaitė | James Thorne | Gisela Vallejo | Andreas Vlachos | Iryna Gurevych

> 自动化事实检验系统通过将主张与证据进行核对来预测其真实性。在现实场景中,检索到的证据可能并不明确支持或反驳该主张,并可能产生冲突但有效的解释。现有的事实检验数据集假设使用他们开发的模型为每个主张预测一个单一的真实性标签,从而抑制了对这种模糊性的处理。为了解决这个问题,我们提出了AmbiFC,一个包含来自现实信息需求的10,000个主张的事实检验数据集。它包含来自5,000个维基百科页面的50,000篇段落的细粒度证据注释。我们分析了在比较AmbiFC中的主张与证据时,由于模糊性所引起的分歧,观察到注释者之间的分歧与语言现象(如不足规范性和概率推理)之间存在强相关性。我们开发了处理这种模糊性的真实性预测模型,采用软标签,发现一个学习句子级证据选择和真实性预测标签分布的流程能够实现最佳性能。我们比较了在AmbiFC不同子集上训练的模型,结果表明在处理已识别的语言现象时,在模糊实例上训练的模型表现更好。

--------------------------

- `Title`: Language Varieties of Italy: Technology Challenges and Opportunities

- `Authors`: Alan Ramponi

> 意大利以其独特的语言多样性在欧洲独树一帜,这种多样性隐含地编码了其说话者的地方知识、文化传统、艺术表达和历史。然而,意大利的大多数地方语言和方言在未来几代中面临消失的风险。近年来,自然语言处理(NLP)社区开始关注濒危语言,包括意大利的语言。然而,大多数努力都假设这些语言变体是资源匮乏的语言单体,具有既定的书写形式和同质化的功能与需求,因此在高资源的标准化语言之间以及彼此之间高度可互换。本文介绍了意大利的语言背景,并质疑了针对意大利语言变体的默认机器中心假设。我们主张将范式从机器中心转向说话者中心的自然语言处理,并提供以语言及其说话者为优先,而非技术进步的工作建议和机会。最后,我们提议建立一个地方社区,推动负责任的参与性努力,以支持意大利语言和方言的活力。

--------------------------

浙公网安备 33010602011771号
