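I. Playbook task tags

1. What tags are for

By default, when Ansible runs a playbook it executes every task defined in it. The tag feature lets you label individual tasks, or even an entire playbook, and then use those labels to run only selected tasks or to skip selected tasks.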
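Tags set at the play level are inherited by every task in that play. A minimal sketch, assuming a `web_group` inventory group; the file name `tags_play.yml` and the tag names `web` and `start_nginx` are hypothetical:

```yaml
# tags_play.yml (hypothetical name)
- hosts: web_group
  tags: web                  # play-level tag: every task below inherits "web"
  tasks:
    - name: Install nginx
      yum:
        name: nginx
        state: present

    - name: Start nginx
      systemd:
        name: nginx
        state: started
      tags: start_nginx      # this task carries both "web" and "start_nginx"
```

With this file, `ansible-playbook tags_play.yml -t web` would run both tasks, while `-t start_nginx` would run only the second one.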

2. Ways to tag

1. One tag on one task

2. Several tags on one task

3. One tag shared by several tasks

3. Adding tags

1) One tag on one task

```yaml
# ...
- name: Config nginx Server
  copy:
    src: /root/conf/linux.wp.com.conf
    dest: /etc/nginx/conf.d/
  notify:
    - restart_web_nginx
    - get_nginx_status
  when: ansible_fqdn is match("web.*")
  tags: config_web
# ...
```

2) Several tags on one task

```yaml
- name: Config nginx Server
  copy:
    src: /root/conf/linux.wp.com.conf
    dest: /etc/nginx/conf.d/
  notify:
    - restart_web_nginx
    - get_nginx_status
  when: ansible_fqdn is match("web.*")
  tags:
    - config_web
    - config_nginx
```

3) One tag shared by several tasks

```yaml
- name: Config slb Server
  copy:
    src: /root/conf/proxy.conf
    dest: /etc/nginx/conf.d
  notify: restart_slb
  when: ansible_fqdn == "lb01"
  tags: config_nginx

- name: Config nginx Server
  copy:
    src: /root/conf/linux.wp.com.conf
    dest: /etc/nginx/conf.d/
  notify:
    - restart_web_nginx
    - get_nginx_status
  when: ansible_fqdn is match("web.*")
  tags:
    - config_web
    - config_nginx
```

4. Using tags

```bash
# List all tags (the output also shows which hosts each play targets)
[root@m01 ~]# ansible-playbook lnmp6.yml --list-tags

# Run only the tasks with the given tag
[root@m01 ~]# ansible-playbook lnmp2.yml -t config_web

# Run the tasks that carry any of several tags
[root@m01 ~]# ansible-playbook lnmp2.yml -t config_nginx,config_web

# Skip the tasks with the given tag
[root@m01 ~]# ansible-playbook lnmp2.yml --skip-tags config_nginx
```

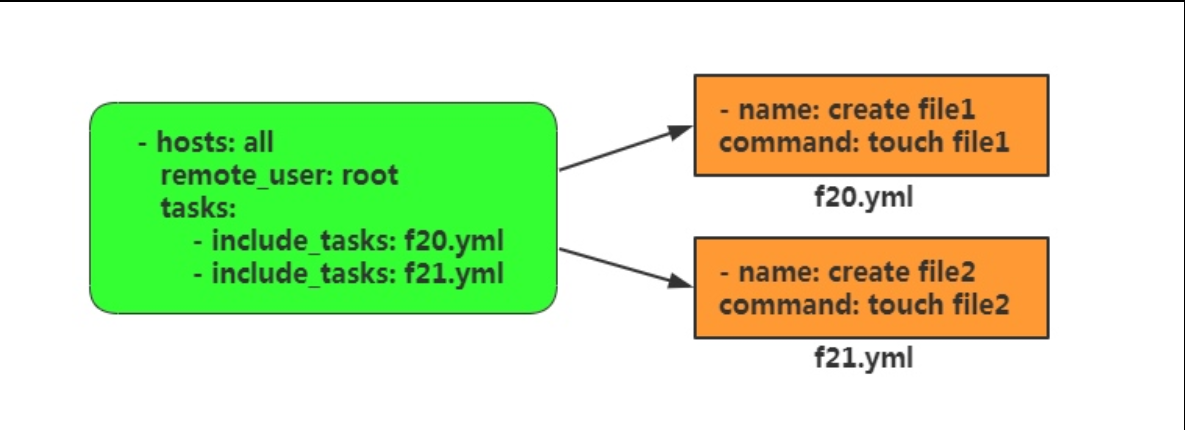

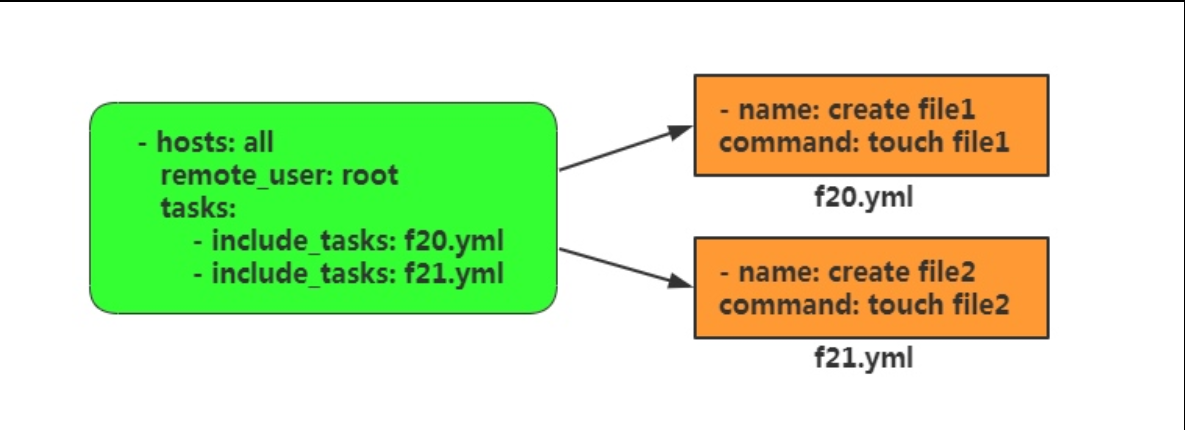
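Ansible also reserves two special tags: a task tagged `always` runs whichever `-t` you pass (unless you explicitly use `--skip-tags always`), and a task tagged `never` runs only when one of its tags is requested explicitly. A sketch reusing the config task from above; the other two tasks and the tag `remove_nginx` are hypothetical:

```yaml
- hosts: web_group
  tasks:
    - name: Show which host is being configured
      debug:
        msg: "Configuring {{ inventory_hostname }}"
      tags: always                 # runs even with -t config_web

    - name: Config nginx Server
      copy:
        src: /root/conf/linux.wp.com.conf
        dest: /etc/nginx/conf.d/
      tags: config_web

    - name: Remove nginx
      yum:
        name: nginx
        state: absent
      tags: [never, remove_nginx]  # skipped unless -t remove_nginx (or -t never) is given
```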

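II. Reusing playbooks

While writing playbooks so far, we found there was no way to run several playbooks with a single command; we had to run each one separately, which is clumsy. Playbooks therefore offer `include` (the `include_tasks` keyword used below), which pulls in a list of tasks dynamically.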

1. Including task files only

1) Write the nginx install tasks

```yaml
[root@m01 ~]# cat nginx.yml
- name: Install Nginx Server
  yum:
    name: nginx
    state: present
```

2) Write the nginx start tasks

```yaml
[root@m01 ~]# cat start.yml
- name: Start Nginx Server
  systemd:
    name: nginx
    state: started
    enabled: yes
```

3) Write the playbook that includes them

```yaml
[root@m01 ~]# cat main.yml
- hosts: nfs
  tasks:
    - include_tasks: nginx.yml
    - include_tasks: start.yml
```
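Tags behave differently with the two ways of pulling in a task file. `import_tasks` is static: the file is read when the playbook is parsed, so a tag on the import is copied onto every task inside it. `include_tasks` is dynamic: a tag on it applies only to the include statement itself, so under `-t` the included tasks would be skipped unless the tag is passed down with `apply`. A sketch based on the `main.yml` above; the tag names `install` and `start` are hypothetical:

```yaml
- hosts: nfs
  tasks:
    # Static: the "install" tag is copied onto every task in nginx.yml
    - import_tasks: nginx.yml
      tags: install

    # Dynamic: "tags: start" selects the include itself, and apply passes
    # the same tag down to the tasks loaded from start.yml
    - include_tasks:
        file: start.yml
        apply:
          tags: start
      tags: start
```

With this layout, `ansible-playbook main.yml -t start` would run the tasks in `start.yml`; without the `apply` block it would load the file but skip its tasks.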

4) Import existing playbooks directly

```yaml
[root@m01 ~]# cat main.yml
- import_playbook: lnmp1.yml
- import_playbook: lnmp2.yml
```

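III. Ignoring errors in a playbook

By default, a playbook checks the return status of every task and, as soon as one fails, stops running the remaining tasks on that host. Sometimes, though, the playbook should keep going even after a task fails.

For that, add the parameter `ignore_errors: yes` to the task.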

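1. Typical usage

```yaml
- name: Get PHP Install status
  shell: "rpm -qa | grep php"        # grep exits non-zero when no php package is installed
  ignore_errors: yes                 # so keep going instead of aborting the play
  register: get_php_install_status

- name: Install PHP Server
  shell: yum localinstall -y /tmp/*.rpm
  when:
    - ansible_fqdn is match("web.*")
    - get_php_install_status.rc != 0 # only where the check above found no php
```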
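An alternative is to declare the non-zero exit code as expected rather than ignoring it: with `failed_when: false` the check task is reported as ok instead of printing a fatal error followed by `...ignoring`, and its `rc` can still be tested. A sketch of the same two tasks under that approach:

```yaml
- name: Get PHP Install status
  shell: "rpm -qa | grep php"
  register: get_php_install_status
  failed_when: false                 # grep's non-zero exit is expected, not an error
  changed_when: false                # a read-only query never changes the host

- name: Install PHP Server
  shell: yum localinstall -y /tmp/*.rpm
  when:
    - ansible_fqdn is match("web.*")
    - get_php_install_status.rc != 0 # rc is still recorded even though the task did not fail
```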

IV. Error handling in a playbook

When a task fails, the playbook does not continue on that host, and even handlers notified by earlier tasks are not run.

1. Forcing handlers to run

```yaml
[root@m01 ~]# cat handler.yml
- hosts: web_group
  force_handlers: yes          # run notified handlers even if a later task fails
  tasks:
    - name: config httpd server
      template:
        src: ./httpd.j2
        dest: /etc/httpd/conf/httpd.conf
      notify:
        - Restart Httpd Server
        - Restart PHP Server

    - name: Install Http Server
      yum:
        name: htttpd           # no package is named "htttpd", so this task fails
        state: present

    - name: start httpd server
      service:
        name: httpd
        state: started
        enabled: yes

  handlers:
    - name: Restart Httpd Server
      systemd:
        name: httpd
        state: restarted

    - name: Restart PHP Server
      systemd:
        name: php-fpm
        state: restarted
```

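2. Suppressing "changed"

If a task does not actually change anything on the managed host, a parameter can turn its changed status into ok:

```yaml
- name: Get PHP Install status
  shell: "rpm -qa | grep php"
  ignore_errors: yes
  changed_when: false          # a read-only query: always report ok, never changed
  register: get_php_install_status
```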

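V. Jinja2 in Ansible

1. What is Jinja2

Jinja2 is a full-featured template engine for Python. In practice it gives you configuration-file templates that can contain variables.

How Jinja2 relates to Ansible:

Ansible commonly uses Jinja2 templates to generate configuration files and similar content on managed hosts; SaltStack uses Jinja2 as well.

For example: if nginx is installed on 100 hosts and each nginx must listen on a different port, how do you handle that?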

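How Ansible uses Jinja2:

Using Jinja2 in Ansible means using the template module. Like the copy module, it copies a file to the remote host; the difference is that template first renders the variables in the file, whereas copy transfers the file content unchanged.

For example, when we earlier pushed the rsync backup script and wanted a name in it to become the host name: with copy, the remote file still contains the literal text `{{ ansible_fqdn }}`; with template, that text is replaced by each host's own name.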
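The difference shows up when the same file is pushed with both modules. A minimal sketch, assuming a hypothetical script `/root/scripts/backup.sh` on m01 that contains a line such as `DIR=/backup/{{ ansible_fqdn }}`; all paths here are illustrative only:

```yaml
- hosts: all
  tasks:
    # copy: the file arrives byte-for-byte, so the remote line still reads
    #   DIR=/backup/{{ ansible_fqdn }}
    - name: Push backup script with copy
      copy:
        src: /root/scripts/backup.sh
        dest: /tmp/backup_copy.sh
        mode: "0755"

    # template: Jinja2 renders the file first, so on host web01 the line becomes
    #   DIR=/backup/web01
    - name: Push backup script with template
      template:
        src: /root/scripts/backup.sh
        dest: /tmp/backup_template.sh
        mode: "0755"
```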

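Things to note when using Jinja2 in Ansible:

Ansible allows Jinja2 conditionals and loops in templates, but not in playbooks.

Note: not every administrator needs this feature, but at times Jinja2 templates can greatly improve efficiency.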
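In a playbook, the same ideas are written with task keywords instead: `loop` for repetition and `when` for conditions, with Jinja2 supplying only the expressions inside them. A sketch; the directory names under `/code` are hypothetical:

```yaml
- hosts: web_group
  tasks:
    # Repetition: the loop keyword, not {% for %}
    - name: Create site directories
      file:
        path: "/code/{{ item }}"
        state: directory
      loop:
        - wordpress
        - zh

    # Condition: the when keyword, not {% if %}
    - name: Report only on web01
      debug:
        msg: "This is {{ ansible_fqdn }}"
      when: ansible_fqdn == "web01"
```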

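VI. Using Jinja2

1. Basic usage

`{{ EXPR }}` outputs the value of an expression, such as a user-defined variable or a fact.

Using a template takes two steps: in the playbook, push the file with the template module; in the template file, reference variables as `{{ NAME }}` (for example `{{ PORT }}`) or reference facts.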
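This also answers the earlier question about 100 nginx hosts that each need a different port: give every host its own value for a variable and reference it in the template. A minimal sketch; the variable `nginx_port`, the file names, and the YAML inventory layout are assumptions (in the INI inventory used elsewhere in this document the same thing would be written as `web01 nginx_port=80`):

```yaml
# hosts.yml: hypothetical YAML inventory, one port per host
web_group:
  hosts:
    web01:
      nginx_port: 80
    web02:
      nginx_port: 8080
---
# port.yml: the template port.conf.j2 would contain a line such as
#   listen {{ nginx_port }};
- hosts: web_group
  tasks:
    - name: Render nginx config with a per-host port
      template:
        src: /root/conf/port.conf.j2
        dest: /etc/nginx/conf.d/port.conf
```

It would be run as `ansible-playbook -i hosts.yml port.yml`, and each host would receive a file with its own `listen` port.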

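2. Logic in Jinja2 templates

1) Loops

A loop in a shell script:

```bash
[root@m01 ~]# vim xh.sh
#!/bin/bash
for i in `seq 10`
do
    echo $i
done
```

The equivalent loop expression in Jinja2 (`range(1, 11)` yields 1 to 10, matching `seq 10`):

```jinja
{% for i in range(1, 11) %}
echo {{ i }}
{% endfor %}
```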

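2) Conditionals

A conditional in a shell script:

```bash
[root@m01 ~]# vim pd.sh
#!/bin/bash
age=$1
if [ "$age" -lt 18 ]; then
    echo "young lady"
else
    echo "auntie"
fi
```

Conditional syntax in Jinja2:

```jinja
{% if EXPR %}
{% elif EXPR %}
{% else %}
{% endif %}
```

Comments:

```jinja
{# COMMENT #}
```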

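3. Testing Jinja2 templates

1) Login banner (motd) test

Write the j2 template:

```jinja
[root@m01 ~]# vim motd.j2
Welcome to {{ ansible_fqdn }}
Total memory on this server: {{ ansible_memtotal_mb }} MB
Free memory on this server: {{ ansible_memfree_mb }} MB
```

Write the playbook:

```yaml
[root@m01 ~]# vim motd.yml
- hosts: all
  tasks:
    - name: Config motd
      template:
        src: /root/motd.j2
        dest: /etc/motd
```

Run the playbook, then check the file on the remote servers:

```bash
[root@m01 ~]# ansible-playbook motd.yml

[root@backup ~]# cat /etc/motd
Welcome to backup
Total memory on this server: 972 MB
Free memory on this server: 582 MB

[root@db01 ~]# cat /etc/motd
Welcome to db01
Total memory on this server: 972 MB
Free memory on this server: 582 MB
```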

2) Managing MySQL with a Jinja2 template

Write the template:

```jinja
[root@m01 ~]# vim /etc/my.j2
[mysqld]
datadir=/var/lib/mysql
socket=/var/lib/mysql/mysql.sock
symbolic-links=0
{% if ansible_memtotal_mb == 972 %}
innodb_buffer_pool_size=800M
{% elif ansible_memtotal_mb == 1980 %}
innodb_buffer_pool_size=1600M
{% endif %}
... ...
```

Write the playbook (src is the template, dest is the rendered config file):

```yaml
[root@m01 ~]# vim mysql.yml
- hosts: db_group
  tasks:
    - name: Config mysql
      template:
        src: /etc/my.j2
        dest: /etc/my.cnf
```

Run it:

```bash
[root@m01 ~]# ansible-playbook mysql.yml
```

Check the result:

```bash
[root@db01 ~]# cat /etc/my.cnf
[mysqld]
...
innodb_buffer_pool_size=800M

[root@db03 ~]# cat /etc/my.cnf
[mysqld]
...
innodb_buffer_pool_size=1600M
```
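Matching exact memory sizes with `==` only works for hosts whose `ansible_memtotal_mb` is exactly 972 or 1980; any other host gets no buffer pool setting at all. One alternative is to compute the value from the fact. A sketch, assuming a hypothetical 80% rule of thumb and a hypothetical variable name `innodb_pool_mb`; the template line would then become `innodb_buffer_pool_size={{ innodb_pool_mb }}M`:

```yaml
- hosts: db_group
  vars:
    # Hypothetical sizing rule: about 80% of physical RAM, rounded down to whole MB.
    # Play vars are evaluated lazily, so the gathered fact exists when the template renders.
    innodb_pool_mb: "{{ (ansible_memtotal_mb * 0.8) | int }}"
  tasks:
    - name: Config mysql
      template:
        src: /etc/my.j2
        dest: /etc/my.cnf
```

On a host reporting 972 MB this would render `777M`.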

4. A Jinja2 template for the load balancer

1) The plain configuration

```nginx
[root@m01 ~]# cat conf/proxy_new.conf
upstream web {
    server 172.16.1.7;
    server 172.16.1.8;
}

server {
    listen 80;
    server_name linux.wp.com;

    location / {
        proxy_pass http://web;
        include proxy_params;
    }
}
```

2) The templated configuration

```jinja
[root@m01 ~]# vim conf/proxy.j2
upstream {{ web }} {
{# range(7,9) yields 7 and 8 #}
{% for i in range(7,9) %}
    server {{ ip }}.{{ i }};
{% endfor %}
}

server {
    listen {{ port }};
    server_name {{ server_name }};

    location / {
        proxy_pass http://{{ web }};
        include proxy_params;
    }
}
```

3) Define the extra variables

```yaml
[root@m01 ~]# vim upstream_vars.yml
ip: 172.16.1
web: web
port: 80
server_name: linux.wp.com
```

4) Write the playbook that pushes it

```yaml
[root@m01 ~]# vim proxy.yml
- hosts: lb01
  vars_files: upstream_vars.yml
  tasks:
    - name: Config SLB
      template:
        src: /root/conf/proxy.j2
        dest: /etc/nginx/conf.d/proxy.conf

    - name: Restart SLB Nginx
      systemd:
        name: nginx
        state: restarted
```
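As written, nginx restarts on every run even when `proxy.conf` did not change. Using the handler mechanism from section IV, the restart can be tied to an actual change. A sketch of the same playbook with the restart moved into a handler:

```yaml
- hosts: lb01
  vars_files: upstream_vars.yml
  tasks:
    - name: Config SLB
      template:
        src: /root/conf/proxy.j2
        dest: /etc/nginx/conf.d/proxy.conf
      notify: Restart SLB Nginx    # fires only when the rendered file actually changed

  handlers:
    - name: Restart SLB Nginx
      systemd:
        name: nginx
        state: restarted
```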

5. Configuring keepalived with a Jinja2 template

1) The keepalived configuration files (plain configuration)

keepalived master configuration file:

```conf
global_defs {
    router_id lb01
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 50
    priority 150
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.0.0.3
    }
}
```

keepalived backup configuration file:

```conf
global_defs {
    router_id lb02
}

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 50
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.0.0.3
    }
}
```

2) The keepalived configuration file (templated)

```jinja
[root@m01 ~]# vim conf/keepalived.j2
global_defs {
    router_id {{ ansible_fqdn }}
}

vrrp_instance VI_1 {
{% if ansible_fqdn == "lb01" %}
    state MASTER
    priority 100
{% else %}
    state BACKUP
    priority 90
{% endif %}
    interface eth0
    virtual_router_id 50
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        {{ vip }}
    }
}
```

3) Define the variables

```yaml
[root@m01 ~]# vim upstream_vars.yml
ip: 172.16.1
web: web
port: 80
server_name: linux.wp.com
vip: 10.0.0.3
```

4) Configure the host inventory

The inventory:

```ini
[root@m01 ~]# vim /etc/ansible/hosts
[slb]
lb01 ansible_ssh_pass='1'
lb02 ansible_ssh_pass='1'
```

The hosts file:

```bash
[root@m01 ~]# vim /etc/hosts
....
172.16.1.5 lb02
```
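The `{% if ansible_fqdn == "lb01" %}` test in `keepalived.j2` ties the master role to one hostname. Another option is to keep the role and priority as host variables, so the template would only need `state {{ keepalived_state }}` and `priority {{ keepalived_priority }}`. A sketch as a YAML inventory; both variable names are hypothetical, and the priorities follow the plain configuration in step 1):

```yaml
# Hypothetical YAML inventory for the slb group with per-host keepalived settings
slb:
  hosts:
    lb01:
      ansible_ssh_pass: '1'
      keepalived_state: MASTER
      keepalived_priority: 150
    lb02:
      ansible_ssh_pass: '1'
      keepalived_state: BACKUP
      keepalived_priority: 100
```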

5) Write the keepalived playbook

```yaml
[root@m01 ~]# cat keepalived.yml
- hosts: slb
  vars_files: upstream_vars.yml
  tasks:
    - name: Install keepalived
      yum:
        name: keepalived
        state: present

    - name: Config keepalived
      template:
        src: /root/conf/keepalived.j2
        dest: /etc/keepalived/keepalived.conf

    - name: Start keepalived
      systemd:
        name: keepalived
        state: restarted
```

6. Combining the playbooks: one-click WordPress deployment

1) Configure the host inventory

```ini
[root@m01 ~]# cat /etc/ansible/hosts
[web_group]
web01 ansible_ssh_pass='1'
web02 ansible_ssh_pass='1'
#web03 ansible_ssh_pass='1'

[slb]
lb01 ansible_ssh_pass='1'
lb02 ansible_ssh_pass='1'

[db_group]
db01 ansible_ssh_pass='1'
db03 ansible_ssh_pass='1'

[nfs_server]
nfs ansible_ssh_pass='1'

[backup_server]
backup ansible_ssh_pass='1'

[nginx_group:children]
web_group
slb

[nfs_group:children]
nfs_server
web_group

[nginx_group:vars]
web=host_vars
```

2) Configure the keepalived playbook

```yaml
[root@m01 ~]# cat keepalived.yml
- hosts: slb
  vars_files: upstream_vars.yml
  tasks:
    - name: Install keepalived
      yum:
        name: keepalived
        state: present

    - name: Config keepalived
      template:
        src: /root/conf/keepalived.j2
        dest: /etc/keepalived/keepalived.conf

    - name: Start keepalived
      systemd:
        name: keepalived
        state: restarted
```
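To actually deploy everything with one command, the separate playbooks can be chained with `import_playbook`, as in section II. A sketch; the file name `site.yml` and the order below are assumptions, so substitute the playbooks you actually wrote:

```yaml
# site.yml (hypothetical): each imported playbook runs in the order listed
- import_playbook: mysql.yml
- import_playbook: lnmp2.yml
- import_playbook: proxy.yml
- import_playbook: keepalived.yml
```

Running `ansible-playbook site.yml` would then execute them in sequence, and because the imports are static, `-t` and `--skip-tags` still apply to the tasks in every imported playbook.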

Zhejiang Public Network Security Filing No. 33010602011771
