Deploying a single-node Kubernetes cluster on Ubuntu 18.04 with kubeadm

Goal:

Walk through the complete process of deploying Kubernetes with kubeadm.

Environment:

- Ubuntu 18.04

- Online installation (the host has Internet access)

- kubeadm

01. System checks

- Each node has a unique hostname; adding it to /etc/hosts is recommended

- Swap is disabled

- The firewall is turned off

root@ubuntu:~# hostnamectl set-hostname k8s-master

tail /etc/hosts

192.168.3.101 k8s-master
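The /etc/hosts entry above can also be added idempotently, so that re-running the setup does not duplicate it. The following is a minimal sketch; the function name `ensure_hosts_entry` is hypothetical, and the IP and hostname are the ones used in this walkthrough.

```shell
# Append "IP NAME" to a hosts-style file unless NAME is already mapped there.
# ensure_hosts_entry is an illustrative helper, not part of any standard tool.
ensure_hosts_entry() {
    hosts_file="$1"
    ip="$2"
    name="$3"
    # Look for NAME as a whole hostname field (field 2 onward) on non-comment lines.
    if awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$hosts_file" 2>/dev/null; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Example (as root):
#   ensure_hosts_entry /etc/hosts 192.168.3.101 k8s-master
```

Calling it a second time with the same arguments leaves the file unchanged.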

root@ubuntu:~# ufw status

Status: inactive

# swapoff -a # temporary, lasts until the next reboot

# vim /etc/fstab # permanent: comment out the swap entry
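Instead of editing /etc/fstab by hand, the swap entry can be commented out with sed. This is a sketch under the assumption that the swap line follows the usual fstab layout with "swap" as the third field; `disable_swap_entries` is a hypothetical helper name, and the sed call keeps a `.bak` copy of the file.

```shell
# Comment out active swap entries in an fstab-style file (keeps FILE.bak).
# disable_swap_entries is an illustrative helper name.
disable_swap_entries() {
    fstab_path="$1"
    # Prefix '#' to every non-comment line whose third field is "swap".
    sed -E -i.bak \
        's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' \
        "$fstab_path"
}

# Example (as root), combined with the temporary switch-off:
#   swapoff -a && disable_swap_entries /etc/fstab
```

Lines that are already commented out and entries for other filesystem types are left as they are.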

02. docker-ce

# Step 1: install the required system tools

apt-get -y install apt-transport-https ca-certificates curl software-properties-common

# Step 2: add the GPG key

curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | apt-key add -

# Step 3: add the package repository

add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

# Step 4: update the package index and install Docker-CE

apt-get -y update

# Install a specific version of Docker-CE:

# Step 1: list the available Docker-CE versions:

apt-cache madison docker-ce

# Install using the form: sudo apt-get -y install docker-ce=[VERSION]

apt-get -y install docker-ce=18.06.3~ce~3-0~ubuntu

### Configure a Docker Hub registry mirror

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://dhq9bx4f.mirror.aliyuncs.com"]

}

EOF

systemctl daemon-reload && systemctl restart docker
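The heredoc above assumes that /etc/docker already exists. A small wrapper can create the directory first and take the mirror URL as a parameter. This is only a sketch: `write_registry_mirror_config` is a hypothetical name, and the function overwrites any existing daemon.json, so it is suited to a fresh install like the one described here.

```shell
# Write a daemon.json containing a single registry mirror.
# write_registry_mirror_config is an illustrative helper; it OVERWRITES daemon.json.
write_registry_mirror_config() {
    docker_dir="$1"
    mirror_url="$2"
    mkdir -p "$docker_dir" || return 1
    cat > "$docker_dir/daemon.json" <<EOF
{
  "registry-mirrors": ["$mirror_url"]
}
EOF
}

# Example (as root), using the mirror from this walkthrough:
#   write_registry_mirror_config /etc/docker https://dhq9bx4f.mirror.aliyuncs.com
#   systemctl daemon-reload && systemctl restart docker
```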

For Docker-CE installation, see the Alibaba Cloud documentation: https://yq.aliyun.com/articles/110806

03. kubeadm

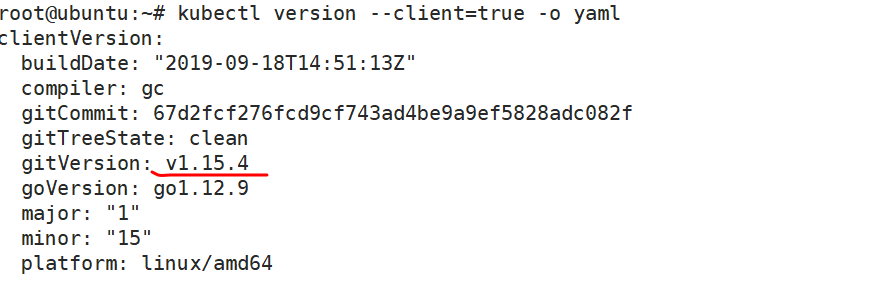

Note: keep the kubelet, kubeadm, and kubectl versions consistent with the newest Kubernetes version that the Kubernetes Dashboard release supports.

https://github.com/kubernetes/dashboard/releases/tag/v2.0.0-beta4

apt-get update && apt-get install -y apt-transport-https

curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

# Add the repository

add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main"

apt-get update

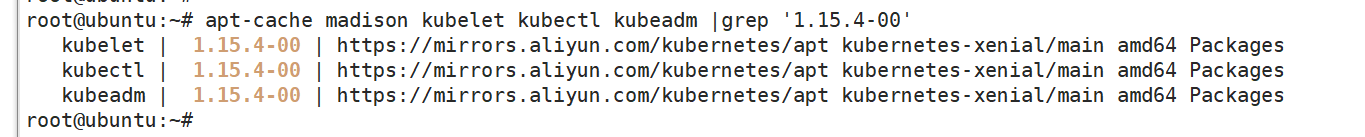

apt-cache madison kubelet kubectl kubeadm | grep '1.15.4-00' # show the latest 1.15 version

apt install -y kubelet=1.15.4-00 kubectl=1.15.4-00 kubeadm=1.15.4-00 # install the chosen version
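Rather than hard-coding the version in the grep, the newest release of a given minor series can be picked out of the `apt-cache madison` output. The sketch below assumes the usual three-column `package | version | source` layout and uses GNU `sort -V`, which approximates but is not identical to dpkg's version ordering; `latest_matching_version` is a hypothetical name.

```shell
# Read `apt-cache madison <pkg>` output on stdin and print the highest
# version that starts with the given prefix (for example "1.15.").
# latest_matching_version is an illustrative helper name.
latest_matching_version() {
    prefix="$1"
    awk -F'|' -v p="$prefix" '
        { v = $2; gsub(/[[:space:]]/, "", v) }   # second column holds the version
        index(v, p) == 1 { print v }             # keep versions starting with the prefix
    ' | sort -V | tail -n 1
}

# Example:
#   ver=$(apt-cache madison kubeadm | latest_matching_version 1.15.)
#   apt install -y kubelet="$ver" kubectl="$ver" kubeadm="$ver"
```

If no version matches the prefix, the function prints nothing, so the caller should check for an empty result before installing.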

Configure kubelet so that it does not fail when swap is enabled

tee /etc/default/kubelet <<-'EOF'

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

EOF

systemctl daemon-reload && systemctl restart kubelet

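Note: at this point the kubelet service fails to start because many of its configuration files are still missing. They are generated by kubeadm init, after which kubelet starts on its own.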

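04. Initialize Kubernetes

Note: good news for kubeadm init: with 1.13+, the images can be pulled from the Alibaba Cloud registry registry.aliyuncs.com/google_containers. Thanks to Alibaba Cloud for the convenience.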

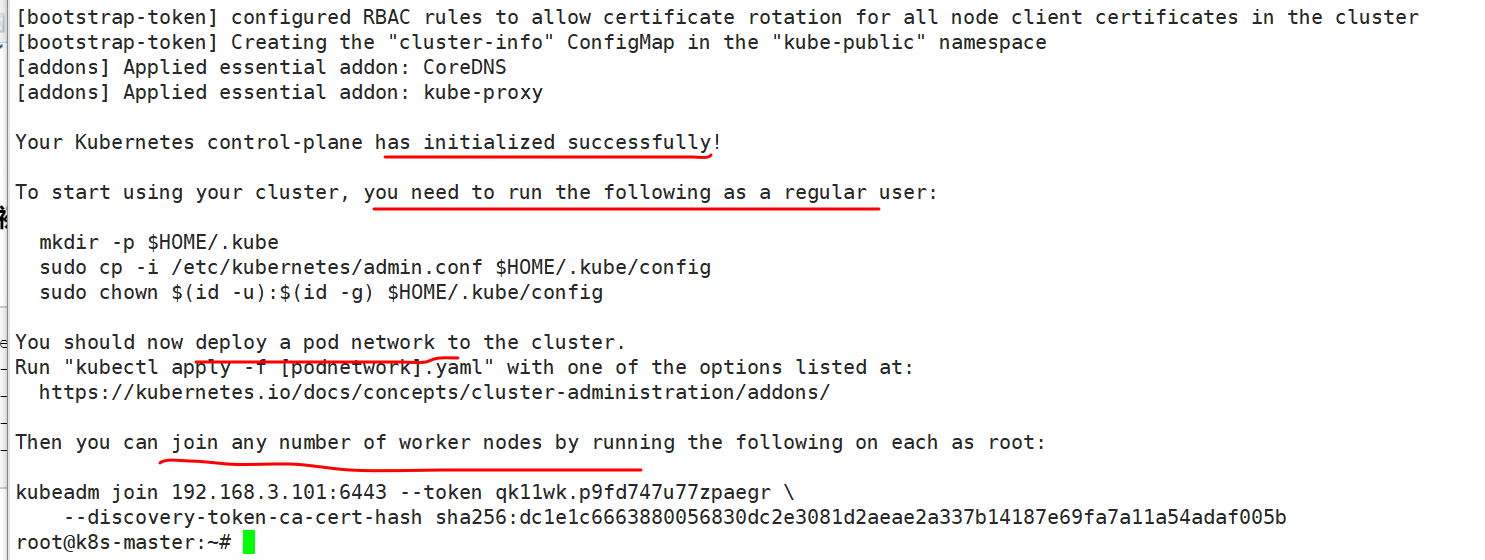

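kubeadm init --kubernetes-version=v1.15.4 --image-repository registry.aliyuncs.com/google_containers --pod-network-cidr=10.24.0.0/16 --ignore-preflight-errors=Swap

Note: the stock kube-flannel.yml applied in the networking step sets its Network to 10.244.0.0/16. The --pod-network-cidr value should match it, so either pass 10.244.0.0/16 here or edit net-conf.json in the manifest.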
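Because flannel declares its own pod network in the net-conf.json section of kube-flannel.yml, it can be worth comparing that value with the CIDR passed to kubeadm init before initializing. The following sketch assumes a locally downloaded copy of the manifest; both function names are hypothetical.

```shell
# Print the "Network" CIDR from the net-conf.json section of a flannel manifest.
# flannel_network and cidr_matches_flannel are illustrative helper names.
flannel_network() {
    sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Succeed only if the manifest's network equals the CIDR given to kubeadm init.
cidr_matches_flannel() {
    manifest="$1"
    pod_cidr="$2"
    [ "$(flannel_network "$manifest")" = "$pod_cidr" ]
}

# Example:
#   curl -fsSLO https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
#   cidr_matches_flannel kube-flannel.yml 10.244.0.0/16 || echo "pod CIDR and flannel Network differ"
```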

### Run as the current user to set up the kubectl configuration

root@k8s-master:~# mkdir -p $HOME/.kube

root@k8s-master:~# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

root@k8s-master:~# chown $(id -u):$(id -g) $HOME/.kube/config

### Kubernetes networking

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

https://github.com/coreos/flannel (flannel's overlay network is used here so that pods on different nodes can communicate)

For Kubernetes v1.7+

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

root@k8s-master:~# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

root@k8s-master:~# kubectl get pods -A

NAMESPACE     NAME                                 READY   STATUS     RESTARTS   AGE

kube-system   coredns-bccdc95cf-lnbl9              0/1     Pending    0          2m52s

kube-system   coredns-bccdc95cf-zr8bk              0/1     Pending    0          2m52s

kube-system   etcd-k8s-master                      1/1     Running    0          2m8s

kube-system   kube-apiserver-k8s-master            1/1     Running    0          2m10s

kube-system   kube-controller-manager-k8s-master   1/1     Running    0          2m7s

kube-system   kube-flannel-ds-amd64-wpj2p          0/1     Init:0/1   0          12s

kube-system   kube-proxy-dt9p4                     1/1     Running    0          2m51s

kube-system   kube-scheduler-k8s-master            1/1     Running    0          2m12s

Note: kube-flannel-ds-amd64-wpj2p is still in Init:0/1. If its image cannot be pulled automatically, pull it manually.
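While waiting for flannel and CoreDNS to come up, it helps to filter the pod list down to the pods that are not ready yet. The sketch below parses the six-column output of `kubectl get pods -A`; the function name `not_ready_pods` is hypothetical, and pods that have finished normally (status Completed) would also be listed by this simple check.

```shell
# Read `kubectl get pods -A` output on stdin and print "namespace/name status"
# for every pod that is not fully ready or not in the Running state.
# not_ready_pods is an illustrative helper name.
not_ready_pods() {
    awk '
        $1 == "NAMESPACE" { next }               # skip the header row if present
        NF >= 4 {
            split($3, ready, "/")                # READY column looks like "0/1"
            if (ready[1] != ready[2] || $4 != "Running")
                print $1 "/" $2, $4
        }
    '
}

# Example:
#   kubectl get pods -A | not_ready_pods
```

An empty result means every listed pod is Running with all of its containers ready.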

root@ubuntu:~# grep -i image kube-flannel.yml

image: quay.io/coreos/flannel:v0.11.0-amd64

image: quay.io/coreos/flannel:v0.11.0-amd64

image: quay.io/coreos/flannel:v0.11.0-arm64

image: quay.io/coreos/flannel:v0.11.0-arm64

image: quay.io/coreos/flannel:v0.11.0-arm

image: quay.io/coreos/flannel:v0.11.0-arm

image: quay.io/coreos/flannel:v0.11.0-ppc64le

image: quay.io/coreos/flannel:v0.11.0-ppc64le

image: quay.io/coreos/flannel:v0.11.0-s390x

image: quay.io/coreos/flannel:v0.11.0-s390x

docker pull quay.io/coreos/flannel:v0.11.0-amd64
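The grep output above lists every image twice because each DaemonSet uses the same image for its init container and its main container. A short helper can reduce the list to distinct images so that only the ones for the local architecture are pulled. This is a sketch; `manifest_images` is a hypothetical name, and it assumes unquoted `image:` values as in kube-flannel.yml.

```shell
# List the distinct container images referenced by a Kubernetes manifest.
# manifest_images is an illustrative helper name.
manifest_images() {
    awk '
        $1 == "image:"               { print $2 }   # "image: repo/name:tag"
        $1 == "-" && $2 == "image:"  { print $3 }   # "- image: repo/name:tag"
    ' "$1" | sort -u
}

# Example: pull only the amd64 images from a downloaded manifest.
#   manifest_images kube-flannel.yml | grep -- '-amd64$' | xargs -n 1 docker pull
```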

### Everything is working at this point: all images have been pulled and are running

### Dashboard

https://github.com/kubernetes/dashboard#kubernetes-dashboard

https://github.com/kubernetes/dashboard/releases

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta4/aio/deploy/recommended.yaml

root@k8s-master:~# kubectl get pods -A

NAMESPACE              NAME                                        READY   STATUS              RESTARTS   AGE

kube-system            coredns-bccdc95cf-lnbl9                     1/1     Running             0          6m13s

kube-system            coredns-bccdc95cf-zr8bk                     1/1     Running             0          6m13s

kube-system            etcd-k8s-master                             1/1     Running             0          5m29s

kube-system            kube-apiserver-k8s-master                   1/1     Running             0          5m31s

kube-system            kube-controller-manager-k8s-master          1/1     Running             0          5m28s

kube-system            kube-flannel-ds-amd64-wpj2p                 1/1     Running             0          3m33s

kube-system            kube-proxy-dt9p4                            1/1     Running             0          6m12s

kube-system            kube-scheduler-k8s-master                   1/1     Running             0          5m33s

kubernetes-dashboard   dashboard-metrics-scraper-fb986f88d-44wp2   0/1     ContainerCreating   0          32s

kubernetes-dashboard   kubernetes-dashboard-6bb65fcc49-hzzbw       1/1     Running             0          32s

root@k8s-master:~# kubectl get pods -A

NAMESPACE              NAME                                        READY   STATUS    RESTARTS   AGE

kube-system            coredns-bccdc95cf-lnbl9                     1/1     Running   0          6m27s

kube-system            coredns-bccdc95cf-zr8bk                     1/1     Running   0          6m27s

kube-system            etcd-k8s-master                             1/1     Running   0          5m43s

kube-system            kube-apiserver-k8s-master                   1/1     Running   0          5m45s

kube-system            kube-controller-manager-k8s-master          1/1     Running   0          5m42s

kube-system            kube-flannel-ds-amd64-wpj2p                 1/1     Running   0          3m47s

kube-system            kube-proxy-dt9p4                            1/1     Running   0          6m26s

kube-system            kube-scheduler-k8s-master                   1/1     Running   0          5m47s

kubernetes-dashboard   dashboard-metrics-scraper-fb986f88d-44wp2   1/1     Running   0          46s

kubernetes-dashboard   kubernetes-dashboard-6bb65fcc49-hzzbw       1/1     Running   0          46s

root@k8s-master:~# kubectl get namespaces

NAME                   STATUS   AGE

default                Active   7m13s

kube-node-lease        Active   7m17s

kube-public            Active   7m17s

kube-system            Active   7m17s

kubernetes-dashboard   Active   78s

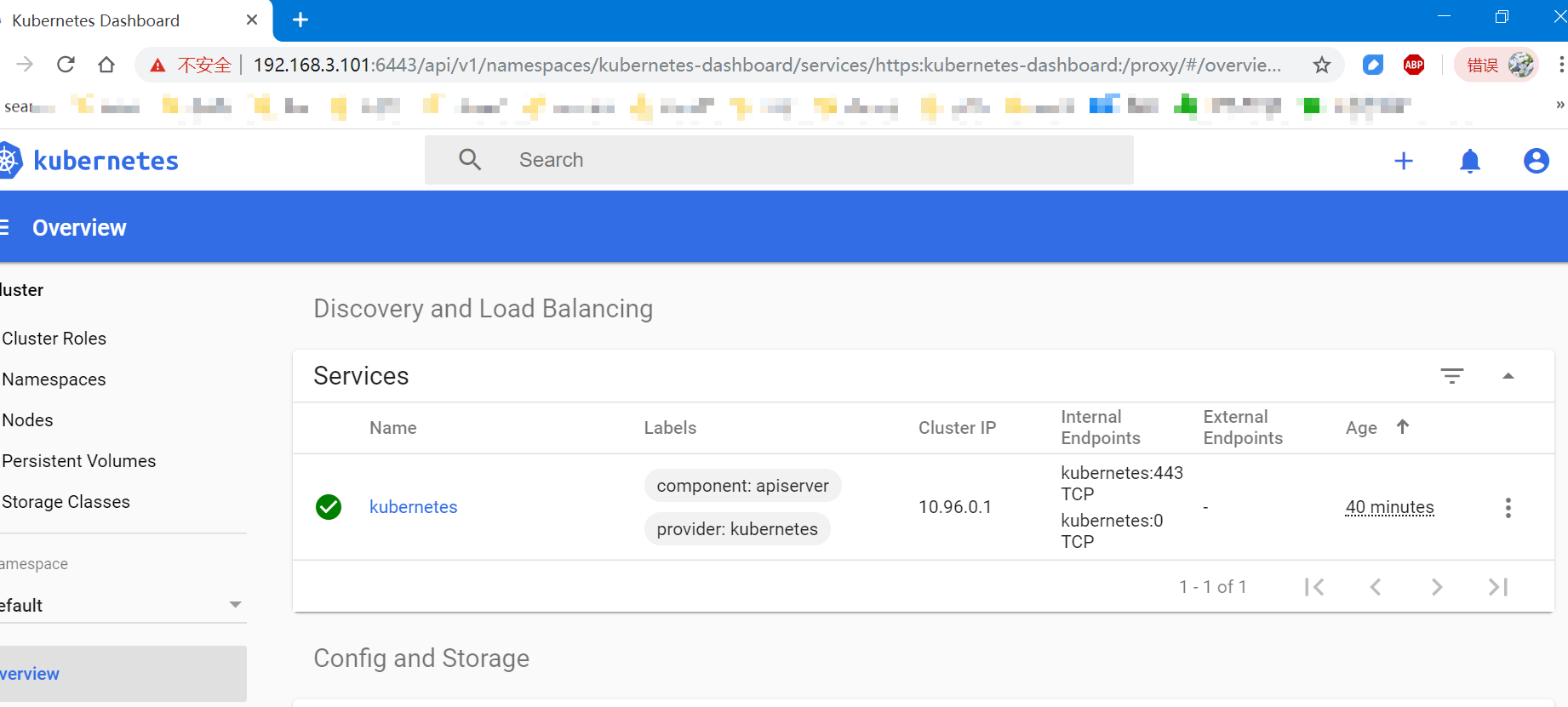

Making kubernetes-dashboard reachable from a local browser

For details, see: https://www.cnblogs.com/rainingnight/p/deploying-k8s-dashboard-ui.html

root@k8s-master:~# kubectl cluster-info

Kubernetes master is running at https://192.168.3.101:6443 (the address the apiserver exposes on the host)

KubeDNS is running at https://192.168.3.101:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

### Access URL

https://<master-ip>:<apiserver-port>/api/v1/namespaces/xxxxxxxx/services/https:kubernetes-dashboard:/proxy/ (replace xxxxxxxx with the dashboard's namespace)

https://192.168.3.101:6443/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/
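The proxy URL follows a fixed pattern, so it can be assembled from the master IP, the apiserver port, and the dashboard namespace. A minimal sketch, with `dashboard_url` as a hypothetical helper name:

```shell
# Build the apiserver proxy URL for the kubernetes-dashboard service.
# dashboard_url is an illustrative helper name.
dashboard_url() {
    master_ip="$1"
    api_port="$2"
    namespace="$3"
    printf 'https://%s:%s/api/v1/namespaces/%s/services/https:kubernetes-dashboard:/proxy/\n' \
        "$master_ip" "$api_port" "$namespace"
}

# Example, using the values from this walkthrough:
#   dashboard_url 192.168.3.101 6443 kubernetes-dashboard
```

The namespace argument is the part that most often goes wrong: the v2.0.0-beta4 manifest used here installs the dashboard into kubernetes-dashboard.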

1. Create a service account

First, create a service account named admin-user in the kube-system namespace:

# admin-user.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

  name: admin-user

  namespace: kube-system

Run kubectl create:

kubectl create -f admin-user.yaml

2. Bind the role

By default, the cluster-admin role already exists in a cluster created by kubeadm, so we can bind to it directly:

# admin-user-role-binding.yaml

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

  name: admin-user

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: cluster-admin

subjects:

- kind: ServiceAccount

  name: admin-user

  namespace: kube-system

Run kubectl create:

kubectl create -f admin-user-role-binding.yaml

3. Get the token

Now we need to find the token of the newly created user so that we can use it to log in to the dashboard:

root@k8s-master:~# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-b9bwj

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: cf9336ee-2070-49bb-91ee-123b1540cd63

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWI5YndqIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJjZjkzMzZlZS0yMDcwLTQ5YmItOTFlZS0xMjNiMTU0MGNkNjMiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.nc8N-M0M580d3XdQKkvhj9WEH-7f_kEzkEGSJLmsI1rrlY0a_iGwBTilQ6baFHFKU9ERcW-u8aq85nFHkM7-mJfltAvvbtSbDOr_BUxrP11xvgkCGjPjgnISj1BvAaQlX0IXqpAFEEuJsUUvms9iKlhbF26PbOo1_1DnVnZ1ALlsXcyqoG0-VzbgKuHZPGANJdT17zsfw-xUYWyDBHQhn-BgsryonrwRhZdOWONsy4VH_qyrH3yIv1fazR58Sc4RxU58d1pSTatvCmLWW8AwcMAFfol0lHcZtgMzxecr41sDkySH5eEjWfsDv5OsYFsRoiR_ws65hqUZcjkQ6vclGQ

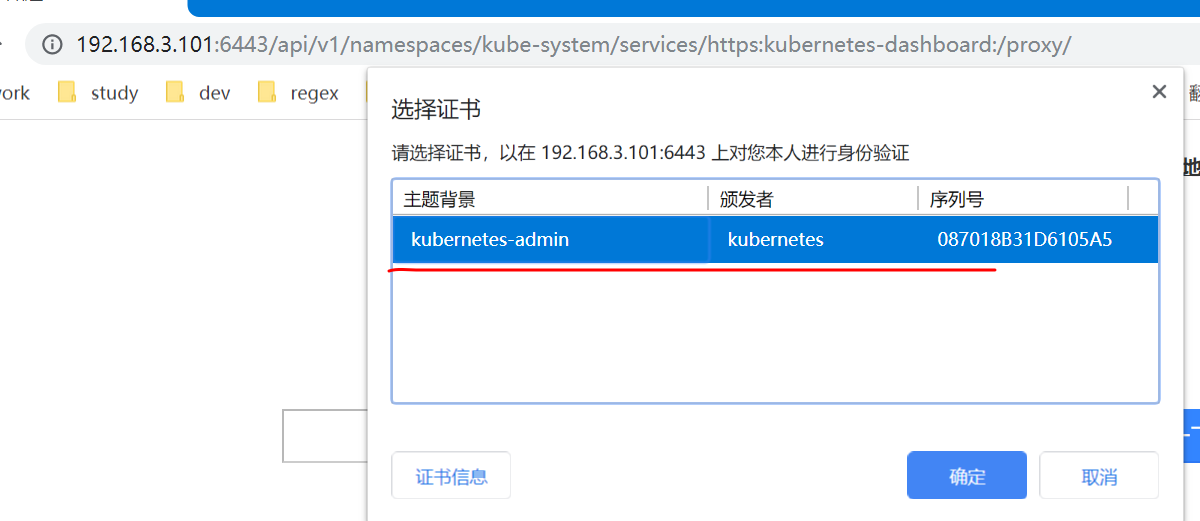

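### Create a certificate

Kubernetes enables RBAC by default and assigns unauthenticated users a default identity: anonymous

The API server authenticates clients with certificates, so we first need to create one:

We use client-certificate-data and client-key-data to generate a p12 file with the following commands: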

# Extract client-certificate-data

grep 'client-certificate-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d > kubecfg.crt

# Extract client-key-data

grep 'client-key-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d > kubecfg.key

# Generate the p12 file

openssl pkcs12 -export -clcerts -inkey kubecfg.key -in kubecfg.crt -out kubecfg.p12 -name "kubernetes-client"
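The two extraction commands differ only in the key they look up, so they can share one helper. The sketch below assumes the certificate and key are embedded in the kubeconfig as base64 on a single line, as kubeadm's admin.conf does; `kubeconfig_field` is a hypothetical name.

```shell
# Decode the first occurrence of a base64-encoded field in a kubeconfig file.
# kubeconfig_field is an illustrative helper name.
kubeconfig_field() {
    kubeconfig="$1"
    key="$2"
    # Match "key: value" lines and stop at the first hit.
    awk -v k="$key:" '$1 == k { print $2; exit }' "$kubeconfig" | base64 -d
}

# Example:
#   kubeconfig_field ~/.kube/config client-certificate-data > kubecfg.crt
#   kubeconfig_field ~/.kube/config client-key-data > kubecfg.key
```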

Download kubecfg.p12 and double-click it on Windows to install the certificate.

Open this address in Chrome:

https://192.168.3.101:6443/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/

Mind the dashboard's namespace.

On the login page, enter the token to sign in. Note: the token value must be a single line, with no line breaks.
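A token copied from a terminal often picks up line breaks where the terminal wrapped it. Stripping all whitespace restores the single-line form the login page expects. A minimal sketch, with `flatten_token` as a hypothetical helper name:

```shell
# Remove every whitespace character (spaces, tabs, CR, LF) from stdin.
# flatten_token is an illustrative helper name.
flatten_token() {
    tr -d '[:space:]'
}

# Example: flatten a token that was pasted into a file across several lines.
#   flatten_token < token.txt
```

The output has no trailing newline, so it can be pasted into the token field as is.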

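### On a single-node cluster, pods are not scheduled on the master node by default

kubectl taint nodes --all node-role.kubernetes.io/master- # remove the taint so that pods can be scheduled on the master node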

root@k8s-master:~# kubectl taint nodes --all node-role.kubernetes.io/master-

node/k8s-master untainted
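To confirm that the taint is gone, the Taints field in the `kubectl describe node` output can be checked. The sketch below reads that output on stdin and succeeds only while the master taint is still present; `has_master_taint` is a hypothetical name, and the taint key is the one used by Kubernetes 1.15.

```shell
# Succeed if `kubectl describe node` output on stdin still lists the
# node-role.kubernetes.io/master taint. has_master_taint is an illustrative name.
has_master_taint() {
    awk '
        /^Taints:/ { in_taints = 1; sub(/^Taints:/, "") }   # start of the Taints field
        in_taints && /^[^[:space:]]/ { in_taints = 0 }      # next top-level field ends it
        in_taints && /node-role\.kubernetes\.io\/master/ { found = 1 }
        END { exit !found }
    '
}

# Example:
#   if kubectl describe node k8s-master | has_master_taint; then
#       echo "master is still tainted"
#   fi
```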

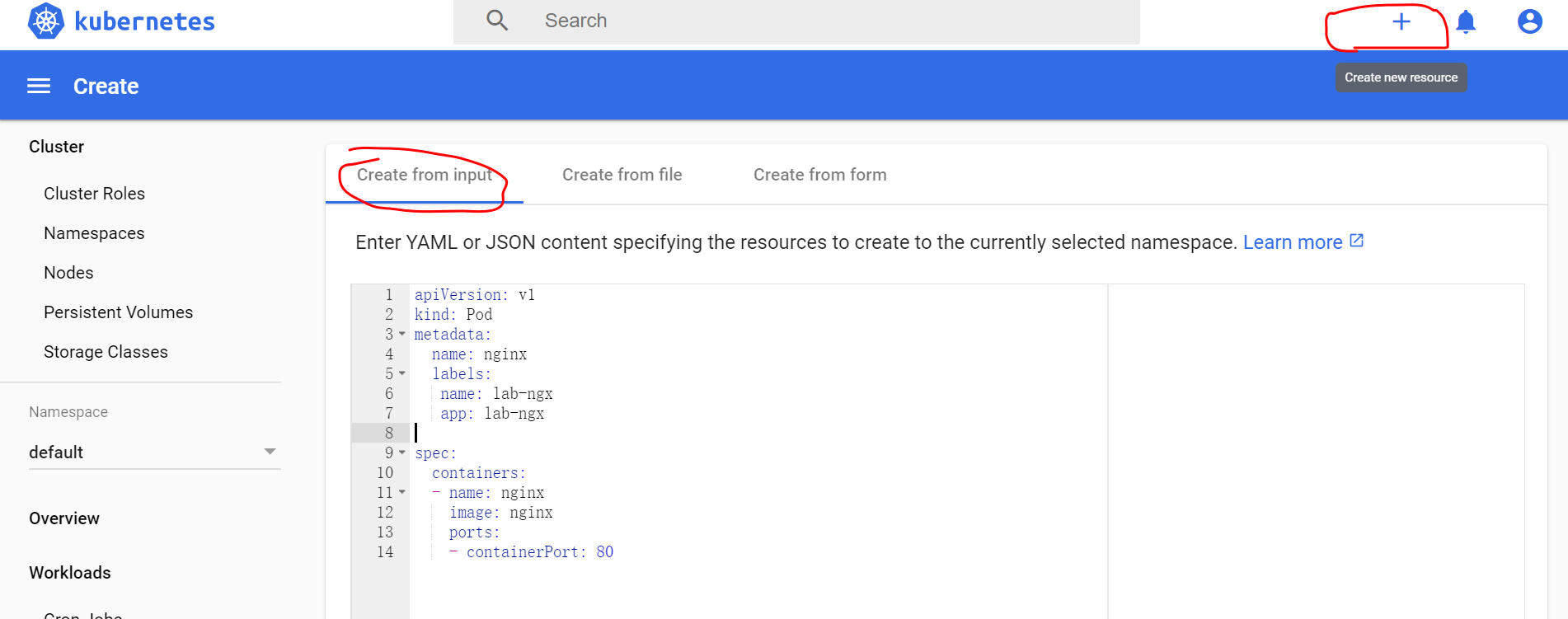

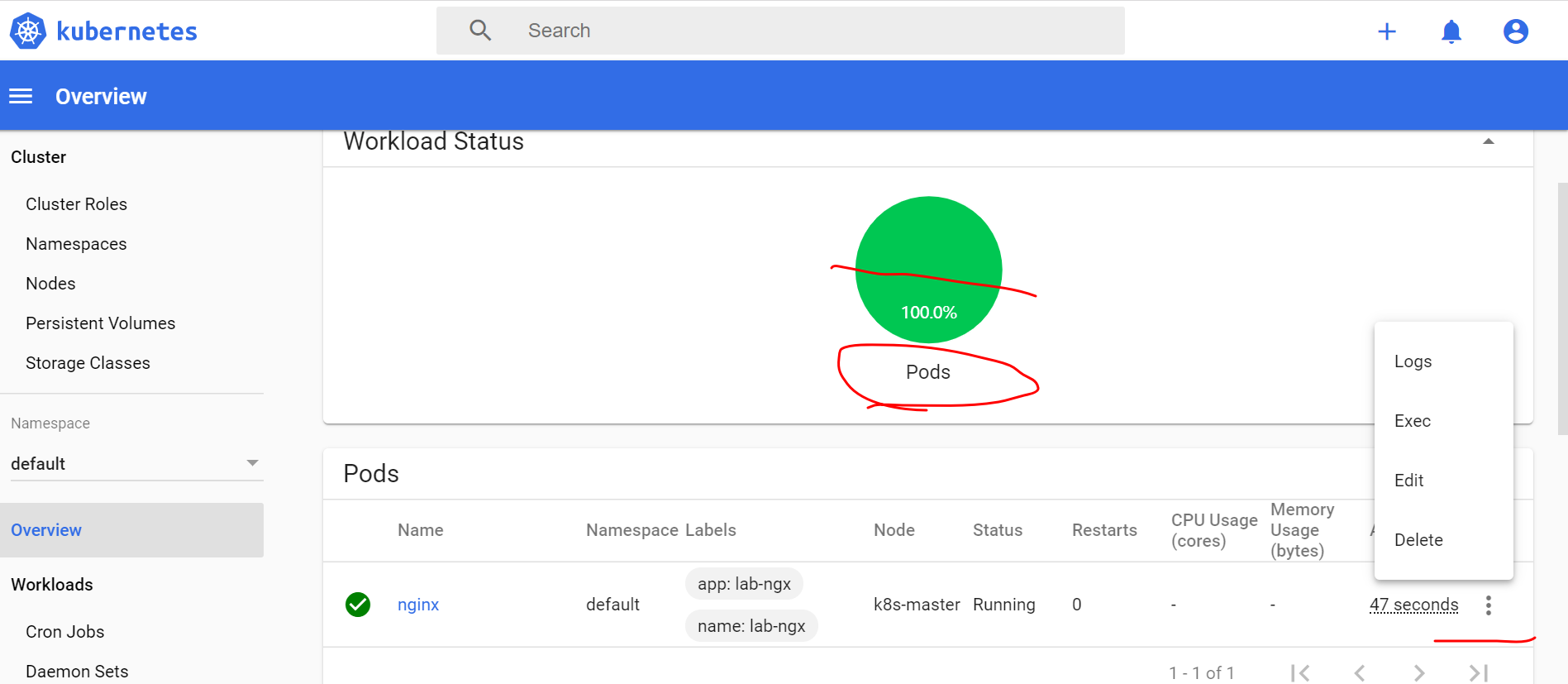

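05. Testing

Applying a YAML file from the Kubernetes dashboard UI is quick and easy, and very satisfying.

Run the update to create the pod from the page. The pod's color indicates its state; wait for the image to be pulled and the pod to be deployed.

Summary:

I spent a long time working out how to deploy Kubernetes on my own. It looks simple, but doing it yourself takes real effort. With earlier versions, before the Alibaba Cloud mirror was available, it was hard to pull images hosted abroad, so deployments failed.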