Installing Kubernetes 1.16.3 on CentOS 7

1. Overview

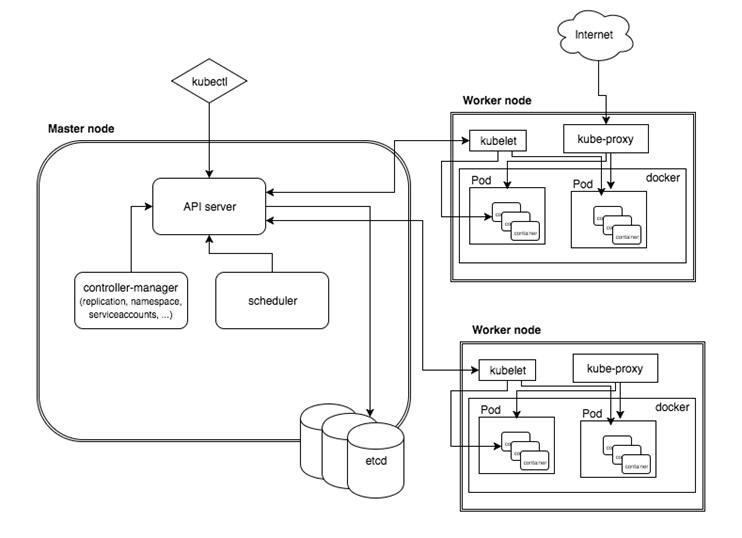

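Setting up a Kubernetes cluster by hand is tedious, so a number of installation and configuration tools have appeared, such as Kubeadm, Kubespray and RKE. I chose the official Kubeadm, mainly because Kubernetes versions differ from one another and Kubeadm tracks and supports each new release best. Kubeadm is the official Kubernetes tool for quickly installing and initializing a cluster. It has been generally available since Kubernetes 1.13 and is released in step with every Kubernetes version. I strongly recommend reading the official documentation first to understand what each component and object does.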

https://kubernetes.io/docs/concepts/

https://kubernetes.io/docs/setup/independent/install-kubeadm/

https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

System environment

| OS | Kernel | Docker | IP | Hostname | Resources |
|---|---|---|---|---|---|
| CentOS 7.6 | 3.10.0-957.el7.x86_64 | 19.03.5 | 192.168.31.150 | k8s-master | 2 CPU cores, 4 GB RAM |
| CentOS 7.6 | 3.10.0-957.el7.x86_64 | 19.03.5 | 192.168.31.183 | k8s-node01 | 2 CPU cores, 4 GB RAM |

Note: each machine needs at least 2 CPU cores and 2 GB of RAM.

2. Preparation

Disable the firewall

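If the hosts run a firewall, you need to open the ports required by each Kubernetes component; see the "Check required ports" section of Installing kubeadm. For simplicity, this guide disables the firewall on every node: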

systemctl stop firewalld

systemctl disable firewalld
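If you would rather keep firewalld running, the ports can be opened explicitly instead. The sketch below is a hypothetical helper, `firewall_cmds`, that only prints the `firewall-cmd` commands for a role so they can be reviewed before running. The port list is my reading of the kubeadm "Check required ports" table for this release, plus 179/tcp for Calico BGP; treat it as an assumption and check it against the docs for your version (Calico's default IP-in-IP mode may also need IP protocol 4 allowed between nodes).

```shell
#!/bin/sh
# Hypothetical helper: print (not run) firewall-cmd commands for a node role.
# Port list is an assumption based on the kubeadm "Check required ports" table;
# 179/tcp (Calico BGP) is an added assumption.
# master: API server, etcd, kubelet, scheduler, controller-manager, plus the
#         NodePort range because this guide also reaches NodePorts via the master IP.
# node:   kubelet and the default NodePort range.
firewall_cmds() {
  local ports p
  case "$1" in
    master) ports="6443/tcp 2379-2380/tcp 10250/tcp 10251/tcp 10252/tcp 30000-32767/tcp 179/tcp" ;;
    node)   ports="10250/tcp 30000-32767/tcp 179/tcp" ;;
    *) echo "usage: firewall_cmds master|node" >&2; return 1 ;;
  esac
  for p in $ports; do
    echo "firewall-cmd --permanent --add-port=$p"
  done
  # Permanent rules only take effect after a reload.
  echo "firewall-cmd --reload"
}
```

Usage: run `firewall_cmds master` on the master (or `firewall_cmds node` on a worker), inspect the output, then pipe it to `sh` if it looks right.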

Disable SELinux

# Disable temporarily (until the next reboot)
setenforce 0
# Disable permanently
vim /etc/selinux/config    # or edit /etc/sysconfig/selinux
# and set:
SELINUX=disabled
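The permanent change can also be made with `sed` instead of an editor. A minimal sketch, assuming GNU sed; `disable_selinux_config` is a hypothetical helper name. It edits /etc/selinux/config directly because on CentOS 7 /etc/sysconfig/selinux is normally a symlink to that file, and `sed -i` run on the symlink would replace the link with a regular file.

```shell
#!/bin/sh
# Hypothetical helper: set SELINUX=disabled in an SELinux config file (GNU sed -i).
# Edits the real file, not the /etc/sysconfig/selinux symlink.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  # "^SELINUX=" does not match the separate SELINUXTYPE= line.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

Usage: `disable_selinux_config` (defaults to /etc/selinux/config), then `grep ^SELINUX= /etc/selinux/config` to confirm. The change applies after a reboot; `setenforce 0` covers the current session.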

Create /etc/sysctl.d/k8s.conf

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system

Enable IPv4 forwarding

vi /etc/sysctl.conf

Add the following line:

net.ipv4.ip_forward = 1

Reload the parameters:

sysctl -p
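To confirm the three kernel parameters above were written as intended, a small check can parse the config files. The sketch below (hypothetical helper `check_k8s_sysctl`, POSIX sh plus awk) only inspects the files you pass it; it does not read the live kernel values, which `sysctl net.ipv4.ip_forward` and friends would show. Note that the `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded (`modprobe br_netfilter`).

```shell
#!/bin/sh
# Hypothetical helper: check that the given sysctl config files set the three
# keys this guide needs to 1. Prints each missing key; returns 1 if any is missing.
check_k8s_sysctl() {
  local key missing=0
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    # Split lines on "=", strip blanks, compare key and value exactly.
    # Commented lines ("# net...") never match because the "#" stays in $1.
    if ! awk -F= -v k="$key" '
        { gsub(/[ \t]/, "", $1); gsub(/[ \t]/, "", $2) }
        $1 == k && $2 == "1" { found = 1 }
        END { exit (found ? 0 : 1) }' "$@"; then
      echo "missing or not set to 1: $key"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `check_k8s_sysctl /etc/sysctl.d/k8s.conf /etc/sysctl.conf` prints nothing and returns 0 when all three keys are set.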

Disable swap

# Disable temporarily

swapoff -a

To disable swap permanently (effective after a reboot), edit /etc/fstab and comment out the swap mount:

# Comment out this entry (the device name may differ on your system):
/dev/mapper/cl-swap swap swap defaults 0 0
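The fstab edit can be scripted as well. A minimal sketch (hypothetical helper `comment_out_swap`) that keeps a `.bak` copy and comments out every uncommented entry whose filesystem-type field is `swap`, whatever the swap device is called on your system.

```shell
#!/bin/sh
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Keeps a backup at "<file>.bak"; lines already commented are left alone.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  cp -p "$fstab" "$fstab.bak"
  # Field 3 of an fstab entry is the filesystem type.
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}
```

Usage: `comment_out_swap` (defaults to /etc/fstab). After `swapoff -a`, `free -m` should report 0 swap, and it stays off after a reboot.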

Install Docker

Docker installation is not covered here; see:

https://www.cnblogs.com/xiao987334176/p/11771657.html

Set the hostname

hostnamectl set-hostname k8s-master

Note: the hostname must not contain underscores; use hyphens instead. Otherwise the Kubernetes installation fails with:

could not convert cfg to an internal cfg: nodeRegistration.name: Invalid value: "k8s_master": a DNS-1123 subdomain must consist of lower case alphanumeric characters, '-' or '.', and must start and end with an alphanumeric character (e.g. 'example.com', regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*')
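The check can be run before kubeadm, using the same regular expression quoted in the error message above. A sketch in POSIX sh with `grep -E`; `is_dns1123_subdomain` is a hypothetical name, and the 253-character limit is the usual DNS-1123 subdomain maximum. It assumes a C/ASCII-style locale, since in some locales a range such as `[a-z]` may match more than lowercase ASCII letters.

```shell
#!/bin/sh
# Hypothetical helper: succeed if $1 is a valid DNS-1123 subdomain, using the
# regex from the kubeadm error message, anchored to the whole name by grep -x.
is_dns1123_subdomain() {
  [ "${#1}" -le 253 ] || return 1
  printf '%s\n' "$1" |
    grep -Eqx '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*'
}
```

Usage: `is_dns1123_subdomain "$(hostname)" || echo "rename this host first"`.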

3. Install kubeadm, kubelet and kubectl

Install kubeadm, kubelet and kubectl on every node.

Configure the yum repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

Install the packages

The latest version at the time of writing is 1.16.3.

yum install -y kubelet-1.16.3-0 kubeadm-1.16.3-0 kubectl-1.16.3-0
systemctl enable kubelet && systemctl start kubelet

All of the steps above must be carried out on both the master and the node.

4. Initialize the master node

Run the initialization command:

kubeadm init --kubernetes-version=1.16.3 \
  --apiserver-advertise-address=192.168.31.150 \
  --image-repository registry.aliyuncs.com/google_containers \
  --service-cidr=10.1.0.0/16 \
  --pod-network-cidr=10.244.0.0/16

Parameters:

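--kubernetes-version: the Kubernetes version to install.
--apiserver-advertise-address: the IP address kube-apiserver advertises and listens on, i.e. the master's own IP.
--pod-network-cidr: the IP range for Pods, here 10.244.0.0/16.
--service-cidr: the IP range for Services.
--image-repository: the image registry to pull from, here the Aliyun mirror.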

Note: set --apiserver-advertise-address to the master IP of your own environment.

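This step is critical: by default kubeadm pulls the required images from k8s.gcr.io, which is not reachable from mainland China, so --image-repository points it at the Aliyun mirror instead.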
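The address ranges involved here (the hosts' LAN 192.168.31.0/24, --service-cidr and --pod-network-cidr) should not overlap. Calico's default pool, 192.168.0.0/16, does overlap this LAN, which is one reason to align it with --pod-network-cidr. A small sketch to check two IPv4 CIDRs: two prefixes overlap exactly when they agree on the leading bits of the shorter prefix. Helper names are hypothetical and there is no input validation.

```shell
#!/bin/sh
# Hypothetical helper: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local old_ifs="$IFS"
  IFS=.
  set -- $1          # split "a.b.c.d" into $1..$4
  IFS="$old_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Hypothetical helper: succeed if CIDR $1 and CIDR $2 overlap.
cidr_overlap() {
  local n1="${1%/*}" p1="${1#*/}" n2="${2%/*}" p2="${2#*/}" p mask
  # Compare both networks under the shorter (wider) prefix.
  p=$(( p1 < p2 ? p1 : p2 ))
  mask=$(( p == 0 ? 0 : (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$n1") & mask )) -eq $(( $(ip_to_int "$n2") & mask )) ]
}
```

Usage: `cidr_overlap 10.1.0.0/16 10.244.0.0/16 && echo overlap || echo ok` prints `ok`, while `cidr_overlap 192.168.0.0/16 192.168.31.0/24 && echo overlap` prints `overlap`.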

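After a successful initialization, kubeadm prints output like the following. Save the kubeadm join command at the end; you will run it on each of the other nodes to join them to the cluster: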

...
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.31.150:6443 --token ute1qr.ylhan3tn3eohip20 \
    --discovery-token-ca-cert-hash sha256:f7b37ecd602deb59e0ddc2a0cfa842f8c3950690f43a5d552a7cefef37d1fa31

Configure kubectl

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

Install Calico

mkdir k8s
cd k8s
wget https://docs.projectcalico.org/v3.10/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml
## Change the pool from 192.168.0.0/16 to 10.244.0.0/16 (must match --pod-network-cidr)
sed -i 's/192.168.0.0/10.244.0.0/g' calico.yaml
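Because `sed` silently does nothing when its pattern is not found, it is worth checking which pool CIDR the manifest will actually use before applying it. A sketch, assuming the manifest declares the `CALICO_IPV4POOL_CIDR` environment variable with its `value:` on the very next line; the layout differs between Calico releases, so check your copy of calico.yaml. `calico_pool_cidr` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: print the CALICO_IPV4POOL_CIDR value from a manifest.
# Assumes the "value:" line directly follows the "- name: CALICO_IPV4POOL_CIDR" line.
calico_pool_cidr() {
  awk '/name: CALICO_IPV4POOL_CIDR/ {
         getline                    # move to the following "value:" line
         sub(/.*value: */, "")      # drop everything up to the value
         gsub(/"/, "")              # strip the YAML quotes
         print
         exit
       }' "$1"
}
```

Usage: `calico_pool_cidr calico.yaml` should print `10.244.0.0/16` after the `sed` edit.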

Apply the Calico manifest

kubectl apply -f calico.yaml

Check the Pod status

Wait a few minutes and make sure all Pods are Running:

[root@k8s_master k8s]# kubectl get pod --all-namespaces -o wide
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE     IP                NODE         NOMINATED NODE   READINESS GATES
kube-system   calico-kube-controllers-6b64bcd855-tdv2h   1/1     Running   0          2m37s   192.168.235.195   k8s-master   <none>           <none>
kube-system   calico-node-4xgk8                          1/1     Running   0          2m38s   192.168.31.150    k8s-master   <none>           <none>
kube-system   coredns-58cc8c89f4-8672x                   1/1     Running   0          45m     192.168.235.194   k8s-master   <none>           <none>
kube-system   coredns-58cc8c89f4-8h8tq                   1/1     Running   0          45m     192.168.235.193   k8s-master   <none>           <none>
kube-system   etcd-k8s-master                            1/1     Running   0          44m     192.168.31.150    k8s-master   <none>           <none>
kube-system   kube-apiserver-k8s-master                  1/1     Running   0          44m     192.168.31.150    k8s-master   <none>           <none>
kube-system   kube-controller-manager-k8s-master         1/1     Running   0          44m     192.168.31.150    k8s-master   <none>           <none>
kube-system   kube-proxy-6f42j                           1/1     Running   0          45m     192.168.31.150    k8s-master   <none>           <none>
kube-system   kube-scheduler-k8s-master                  1/1     Running   0          44m     192.168.31.150    k8s-master   <none>           <none>
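Instead of eyeballing the table, the output can be filtered for Pods that are not fully up. A sketch (hypothetical helper `not_ready_pods`) that reads `kubectl get pod --all-namespaces` output on stdin and prints any Pod whose STATUS is not Running or whose READY count is incomplete, such as `0/1`. It assumes the column order shown above, and completed Job Pods will be listed too. Alternatively, `kubectl wait --for=condition=Ready pod --all -n kube-system --timeout=300s` blocks until the Pods are ready or the timeout expires.

```shell
#!/bin/sh
# Hypothetical helper: from `kubectl get pod --all-namespaces` output on stdin,
# print "namespace/name READY STATUS" for Pods that are not fully ready.
# Columns assumed: NAMESPACE NAME READY STATUS ...
not_ready_pods() {
  awk 'NR > 1 {
         split($3, r, "/")          # READY "x/y" -> r[1]=x, r[2]=y
         if ($4 != "Running" || r[1] != r[2])
           print $1 "/" $2 " " $3 " " $4
       }'
}
```

Usage: `kubectl get pod --all-namespaces | not_ready_pods` prints nothing once every Pod is ready.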

Note: the Pod IPs assigned so far (for example calico-kube-controllers at 192.168.235.195) are not in the 10.244.0.0/16 range.

Delete Calico and apply it again:

kubectl delete -f calico.yaml

kubectl apply -f calico.yaml

Check the IPs again:

[root@k8s-master k8s]# kubectl get pod --all-namespaces -o wide
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE     IP                NODE         NOMINATED NODE   READINESS GATES
kube-system   calico-kube-controllers-6b64bcd855-qn6bs   0/1     Running   0          18s     10.244.235.193    k8s-master   <none>           <none>
kube-system   calico-node-cdnvz                          1/1     Running   0          18s     192.168.31.150    k8s-master   <none>           <none>
kube-system   coredns-58cc8c89f4-8672x                   1/1     Running   1          5h22m   192.168.235.197   k8s-master   <none>           <none>
kube-system   coredns-58cc8c89f4-8h8tq                   1/1     Running   1          5h22m   192.168.235.196   k8s-master   <none>           <none>
kube-system   etcd-k8s-master                            1/1     Running   1          5h22m   192.168.31.150    k8s-master   <none>           <none>
kube-system   kube-apiserver-k8s-master                  1/1     Running   1          5h21m   192.168.31.150    k8s-master   <none>           <none>
kube-system   kube-controller-manager-k8s-master         1/1     Running   1          5h22m   192.168.31.150    k8s-master   <none>           <none>
kube-system   kube-proxy-6f42j                           1/1     Running   1          5h22m   192.168.31.150    k8s-master   <none>           <none>
kube-system   kube-scheduler-k8s-master                  1/1     Running   1          5h21m   192.168.31.150    k8s-master   <none>           <none>

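The recreated calico-kube-controllers Pod now has an address in 10.244.0.0/16 (it was still starting up, 0/1 READY, when this was captured). Pods created before the change, such as coredns, keep their old 192.168.235.x addresses until they are recreated.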

Enable kubelet at boot:

systemctl enable kubelet

5. Join the node to the cluster

Preparation

Make sure every step of the Preparation section above has been carried out on this node!

For the hostname step, use k8s-node01:

hostnamectl set-hostname k8s-node01

Join the node

Log in to the node, make sure Docker, kubeadm, kubelet and kubectl are installed, and run the join command saved from kubeadm init:

kubeadm join 192.168.31.150:6443 --token ute1qr.ylhan3tn3eohip20 \
    --discovery-token-ca-cert-hash sha256:f7b37ecd602deb59e0ddc2a0cfa842f8c3950690f43a5d552a7cefef37d1fa31
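The join token printed by kubeadm init expires after 24 hours by default; if it has expired, `kubeadm token create --print-join-command` on the master prints a fresh join command. Before running join, a quick format check can catch copy-paste damage such as a line break inside the hash. A sketch, assuming the bootstrap-token format `[a-z0-9]{6}.[a-z0-9]{16}` and a `sha256:` hash of 64 hex digits; `valid_join_args` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: check the shape of a join token ($1) and CA cert hash ($2).
valid_join_args() {
  # Bootstrap token: 6-char id, ".", 16-char secret.
  printf '%s\n' "$1" | grep -Eqx '[a-z0-9]{6}\.[a-z0-9]{16}' &&
  # Discovery hash: "sha256:" followed by 64 lowercase hex digits.
  printf '%s\n' "$2" | grep -Eqx 'sha256:[0-9a-f]{64}'
}
```

Usage: `valid_join_args "$TOKEN" "$HASH" && kubeadm join 192.168.31.150:6443 --token "$TOKEN" --discovery-token-ca-cert-hash "$HASH"`, where TOKEN and HASH are shell variables you set from the kubeadm init output.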

Enable kubelet at boot:

systemctl enable kubelet

Check the nodes

Log in to the master and run:

[root@k8s_master k8s]# kubectl get nodes -o wide
NAME         STATUS   ROLES    AGE     VERSION   INTERNAL-IP      EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION          CONTAINER-RUNTIME
k8s-master   Ready    master   87m     v1.16.3   192.168.31.150   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   docker://19.3.5
k8s-node01   Ready    <none>   5m14s   v1.16.3   192.168.31.183   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   docker://19.3.5

6. Create a Pod

Create an nginx deployment and expose it as a NodePort service:

kubectl create deployment nginx --image=nginx
kubectl expose deployment nginx --port=80 --type=NodePort

Check the pod and service:

[root@k8s-master k8s]# kubectl get pod,svc -o wide
NAME                         READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
pod/nginx-86c57db685-z2kdd   1/1     Running   0          18m   10.244.85.194   k8s-node01   <none>           <none>

NAME                 TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE    SELECTOR
service/kubernetes   ClusterIP   10.1.0.1       <none>        443/TCP        111m   <none>
service/nginx        NodePort    10.1.111.179   <none>        80:30876/TCP   24m    app=nginx
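The NodePort assigned to the service (30876 here) appears after the colon in the PORT(S) column. A sketch (hypothetical helper `nodeport_of`) that extracts it and checks that it lies in the default NodePort range 30000-32767; `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number directly from the API.

```shell
#!/bin/sh
# Hypothetical helper: extract the NodePort from a PORT(S) value like "80:30876/TCP".
# Prints the port and succeeds only if it is in the default range 30000-32767.
nodeport_of() {
  local np
  np="${1#*:}"      # "80:30876/TCP" -> "30876/TCP"
  np="${np%%/*}"    # "30876/TCP"    -> "30876"
  case "$np" in
    ''|*[!0-9]*) return 1 ;;   # not a plain number
  esac
  [ "$np" -ge 30000 ] && [ "$np" -le 32767 ] && echo "$np"
}
```

Usage: `nodeport_of 80:30876/TCP` prints `30876`; a ClusterIP value such as `443/TCP` has no NodePort, so the helper returns non-zero.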

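Allow external access to the NodePort. Docker 1.13 and later set the default policy of the iptables FORWARD chain to DROP, which blocks the forwarded traffic, so switch it to ACCEPT: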

iptables -P FORWARD ACCEPT

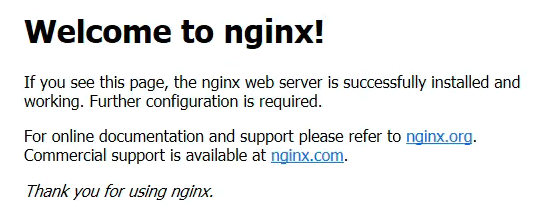

Test access

Browse to the master IP plus the NodePort:

http://192.168.31.150:30876/

Result:

Command completion

(master only)

yum install -y bash-completion
source <(kubectl completion bash)
echo "source <(kubectl completion bash)" >> ~/.bashrc
source ~/.bashrc

Log out and log back in once, and completion will work.

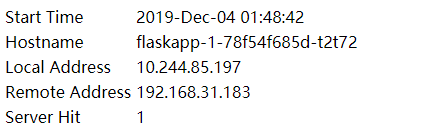

7. Deploy an application with YAML

Using flaskapp as an example:

flaskapp-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: flaskapp-1
spec:
  selector:
    matchLabels:
      run: flaskapp-1
  replicas: 1
  template:
    metadata:
      labels:
        run: flaskapp-1
    spec:
      containers:
      - name: flaskapp-1
        image: jcdemo/flaskapp
        ports:
        - containerPort: 5000

flaskapp-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: flaskapp-1
  labels:
    run: flaskapp-1
spec:
  type: NodePort
  ports:
  - port: 5000
    name: flaskapp-port
    targetPort: 5000
    protocol: TCP
    nodePort: 30005
  selector:
    run: flaskapp-1

Apply the YAML files:

kubectl apply -f flaskapp-service.yaml

kubectl apply -f flaskapp-deployment.yaml

Open the page

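Browse to a node IP plus the NodePort; here the node k8s-node01 is used:

http://192.168.31.183:30005/

Result:

Note: the NodePort is opened on every node, so the master IP plus the NodePort (http://192.168.31.150:30005/) works as well.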

References:

https://yq.aliyun.com/articles/626118

https://blog.csdn.net/fenglailea/article/details/88745642
