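Single-node Kubernetes (k8s) 1.11.5 deployment

1. Overview

Servers are scarce, so k8s has to be built on virtual machines. But with three nodes running, the computer becomes unbearably slow.

The k8s Chinese community has packaged a Minikube for single-node setups; see: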

https://yq.aliyun.com/articles/221687

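I tested it and it does run. However, it has no Calico network plugin, and deploying Calico by following other documents never worked.

So it is better to rely on yourself: when you build it yourself, you know exactly what is in it.

2. Environment and dependencies

Environment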

| Spec | Operating system | Hostname | IP address | Role |
| --- | --- | --- | --- | --- |
| 2 cores, 4 GB RAM, 40 GB disk | ubuntu-16.04.5-server-amd64 | ubuntu | 192.168.91.128 | Master node, etcd, Docker registry |

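Make sure the machine has at least 2 CPU cores, and do not make the memory too small!

The operating system used in this article is Ubuntu 16.04. Note: do not use 18.04, the installation will fail!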
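A quick pre-flight check can catch an undersized VM or the wrong Ubuntu release before anything is installed. The sketch below is only an illustration: the function names are hypothetical, and the 2048 MB memory floor is an assumption, since the article only says the memory should not be too small.

```shell
#!/bin/bash
# Hypothetical pre-flight helpers (not part of the original scripts).

MIN_CORES=2        # from the article: at least 2 cores
MIN_MEM_MB=2048    # assumption: the article gives no exact memory minimum

# meets_minimum CORES MEM_MB -> succeeds if both values reach the minimums.
meets_minimum() {
    [ "$1" -ge "$MIN_CORES" ] && [ "$2" -ge "$MIN_MEM_MB" ]
}

# is_supported_release VERSION_ID -> succeeds only for Ubuntu 16.04.
is_supported_release() {
    [ "$1" = "16.04" ]
}
```

On the target machine the inputs could come from `nproc`, from `awk '/MemTotal/ {print int($2/1024)}' /proc/meminfo`, and from the `VERSION_ID` field of `/etc/os-release`.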

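In this setup k8s depends on etcd and a private Docker registry; both are covered below.

File preparation

The k8s-1.11.5 directory can be downloaded from:

Link: https://pan.baidu.com/s/152ECxRuq3aX4HQco4GH6cA

Extraction code: bz5h

The directory structure is: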

    ./
    ├── calico-cni-1.11.8.tar.gz
    ├── calico-kube-controllers-1.0.5.tar.gz
    ├── calico-node-2.6.12.tar.gz
    ├── calico.yaml
    ├── coredns-1.1.3.tar.gz
    ├── cri-tools_1.12.0-00_amd64.deb
    ├── kubeadm_1.11.5-00_amd64.deb
    ├── kube-apiserver-amd64-v1.11.5.tar.gz
    ├── kube-controller-manager-amd64-v1.11.5.tar.gz
    ├── kubectl_1.11.5-00_amd64.deb
    ├── kubelet_1.11.5-00_amd64.deb
    ├── kube-proxy-amd64-v1.11.5.tar.gz
    ├── kubernetes-cni_0.6.0-00_amd64.deb
    ├── kube-scheduler-amd64-v1.11.5.tar.gz
    └── pause3.1.tar.gz

The k8s-1.11.5 directory must be placed at /repo/k8s-1.11.5, because the install script reads the packages and images from that path.
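Since the install script loads every package and image from /repo/k8s-1.11.5, an incomplete download only shows up halfway through the run. A minimal sketch of an up-front check follows; the file names are copied from the directory listing above, while `K8S_FILES` and `missing_files` are hypothetical names.

```shell
#!/bin/bash
# File names taken from the directory listing in the article.
K8S_FILES="calico-cni-1.11.8.tar.gz
calico-kube-controllers-1.0.5.tar.gz
calico-node-2.6.12.tar.gz
calico.yaml
coredns-1.1.3.tar.gz
cri-tools_1.12.0-00_amd64.deb
kubeadm_1.11.5-00_amd64.deb
kube-apiserver-amd64-v1.11.5.tar.gz
kube-controller-manager-amd64-v1.11.5.tar.gz
kubectl_1.11.5-00_amd64.deb
kubelet_1.11.5-00_amd64.deb
kube-proxy-amd64-v1.11.5.tar.gz
kubernetes-cni_0.6.0-00_amd64.deb
kube-scheduler-amd64-v1.11.5.tar.gz
pause3.1.tar.gz"

# missing_files DIR -> prints each expected file that is absent from DIR.
missing_files() {
    local dir=$1 f
    for f in $K8S_FILES; do
        [ -f "$dir/$f" ] || echo "$f"
    done
}
```

Running `missing_files /repo/k8s-1.11.5` prints nothing when the directory is complete.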

etcd

The etcd directory structure is:

    ./
    ├── etcd-v3.3.10-linux-amd64.tar.gz
    └── etcd_v3.3.10.sh

etcd_v3.3.10.sh

    #!/bin/bash
    # Single-node etcd install script.
    # This script can only install on the local server.
    # Make sure etcd-v3.3.10-linux-amd64.tar.gz is in the same directory as this script.
    # Be sure to run this script as root!
    # Make sure python3 can be run directly: the curl call near the end pipes its
    # output into python3 for JSON pretty-printing. Drop that pipe if you don't need it.
    #set -e

    # Ask for the local IP
    while true
    do
        echo 'Please enter the local IP'
        echo 'Example: 192.168.0.1'
        echo -e "etcd server ip=\c"
        read ETCD_Server
        if [ "$ETCD_Server" == "" ];then
            echo 'No input etcd server IP'
        else
            #echo 'No input etcd server IP'
            break
        fi
    done

    # etcd systemd unit
    cat > /lib/systemd/system/etcd.service <<EOF
    [Unit]
    Description=etcd - highly-available key value store
    Documentation=https://github.com/coreos/etcd
    Documentation=man:etcd
    After=network.target
    Wants=network-online.target

    [Service]
    Environment=DAEMON_ARGS=
    Environment=ETCD_NAME=%H
    Environment=ETCD_DATA_DIR=/var/lib/etcd/default
    EnvironmentFile=-/etc/default/%p
    Type=notify
    User=etcd
    PermissionsStartOnly=true
    #ExecStart=/bin/sh -c "GOMAXPROCS=\$(nproc) /usr/bin/etcd \$DAEMON_ARGS"
    ExecStart=/usr/bin/etcd \$DAEMON_ARGS
    Restart=on-abnormal
    #RestartSec=10s
    #LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    Alias=etcd3.service
    EOF

    # Hostname
    name=`hostname`
    # etcd HTTP address (peer port)
    initial_cluster="http://$ETCD_Server:2380"

    # Check whether etcd is already running
    A=`ps -ef|grep /usr/bin/etcd|grep -v grep|wc -l`
    if [ $A -ne 0 ];then
        # Kill the process
        killall etcd
    fi

    # Remove existing etcd files
    rm -rf /var/lib/etcd/*
    rm -rf /etc/default/etcd

    # Set the time zone
    ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

    # Check that the archive exists
    if [ ! -f "etcd-v3.3.10-linux-amd64.tar.gz" ];then
        echo "etcd-v3.3.10-linux-amd64.tar.gz not found in the current directory"
        exit 1
    fi

    # Install etcd
    tar zxf etcd-v3.3.10-linux-amd64.tar.gz -C /tmp/
    cp -f /tmp/etcd-v3.3.10-linux-amd64/etcd /usr/bin/
    cp -f /tmp/etcd-v3.3.10-linux-amd64/etcdctl /usr/bin/

    # etcd configuration file.
    # ETCD_INITIAL_CLUSTER takes name=peer-URL pairs; the name must match ETCD_NAME.
    cat > /etc/default/etcd <<EOF
    ETCD_NAME=$name
    ETCD_DATA_DIR="/var/lib/etcd/"
    ETCD_LISTEN_PEER_URLS="http://$ETCD_Server:2380"
    ETCD_LISTEN_CLIENT_URLS="http://$ETCD_Server:2379,http://127.0.0.1:4001"
    ETCD_INITIAL_ADVERTISE_PEER_URLS="http://$ETCD_Server:2380"
    ETCD_INITIAL_CLUSTER="$name=$initial_cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-sdn"
    ETCD_ADVERTISE_CLIENT_URLS="http://$ETCD_Server:2379"
    EOF

    # Temporary script that adds the etcd user and group
    cat > /tmp/foruser <<EOF
    #!/bin/bash
    if [ \`cat /etc/group|grep etcd|wc -l\` -eq 0 ];then groupadd -g 217 etcd;fi
    if [ \`cat /etc/passwd|grep etcd|wc -l\` -eq 0 ];then mkdir -p /var/lib/etcd && useradd -g 217 -u 111 etcd -d /var/lib/etcd/ -s /bin/false;fi
    if [ \`cat /etc/profile|grep ETCDCTL_API|wc -l\` -eq 0 ];then bash -c "echo 'export ETCDCTL_API=3' >> /etc/profile" && bash -c "source /etc/profile";fi
    EOF

    # Run the temporary script
    bash /tmp/foruser

    # Start the service
    systemctl daemon-reload
    systemctl enable etcd.service
    chown -R etcd:etcd /var/lib/etcd
    systemctl restart etcd.service
    #netstat -anpt | grep 2379

    # Show the version
    etcdctl -v
    # Query the API. -s suppresses curl's progress output; python3 -m json.tool pretty-prints the JSON
    curl $initial_cluster/version -s | python3 -m json.tool

    # Remove temporary files
    rm -rf /tmp/foruser /tmp/etcd-v3.3.10-linux-amd64

The etcd directory can be placed anywhere; here I put it directly under /root.

Run the script

bash etcd_v3.3.10.sh

Script output:

    Please enter the local IP
    Example: 192.168.0.1
    etcd server ip=192.168.0.162
    etcdctl version: 3.3.10
    API version: 2
    {
        "etcdserver": "3.3.10",
        "etcdcluster": "3.3.0"
    }
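The prompt in the script only rejects empty input, so a typo such as `192.168.0` would be written straight into the etcd configuration. A stricter check might look like the sketch below; `is_ipv4` is a hypothetical helper, not part of the original script.

```shell
#!/bin/bash
# is_ipv4 ADDR -> succeeds if ADDR is a dotted-quad IPv4 address (0-255 per octet).
is_ipv4() {
    local ip=$1 octet
    # Reject anything but digits and dots, plus empty or doubled dot positions.
    case $ip in
        *[!0-9.]*|*..*|.*|*.) return 1 ;;
    esac
    local IFS=.
    set -- $ip                      # split the address on dots
    [ $# -eq 4 ] || return 1        # exactly four octets
    for octet in "$@"; do
        [ ${#octet} -le 3 ] && [ "$octet" -le 255 ] || return 1
    done
}
```

Inside the input loop, `if is_ipv4 "$ETCD_Server"; then break; fi` could then stand in for the empty-string test.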

docker registry

Install Docker

apt-get install -y docker.io

Note: do not install docker-ce, otherwise the k8s installation fails with:

    [preflight] Some fatal errors occurred:
            [ERROR SystemVerification]: unsupported docker version: 18.09.0
    [preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

This is because k8s 1.11.5 does not support the newest Docker release (18.09.0 in the error above).

Start the Docker registry

docker run -d --name docker-registry --restart=always -p 5000:5000 registry

3. One-click install

Create the following script in the /root directory:

kube-v1.11.5.sh

    #!/bin/bash
    # k8s 1.11.5 install, single-node
    # Based on Ubuntu 16.04

    REPO=192.168.0.162
    K8S_MASTER_IP=$REPO
    dockerREG=$REPO:5000
    EXTERNAL_ETCD_ENDPOINTS=http://$REPO:2379

    curl $EXTERNAL_ETCD_ENDPOINTS/version
    if [ $? -ne 0 ];then
        echo "etcd is not running; run the script:"
        echo "bash etcd_v3.3.10.sh"
        exit 1
    fi

    curl http://$dockerREG/v2/_catalog
    if [ $? -ne 0 ];then
        echo "The private Docker registry is not running; run:"
        echo "docker run -d --name docker-registry --restart=always -p 5000:5000 registry"
        exit 1
    fi

    if [ ! -d "/repo/k8s-1.11.5/" ];then
        echo "/repo/k8s-1.11.5 does not exist; upload the directory to that location!"
        exit 1
    fi

    ######################################################################################
    # Install the required components
    sudo apt-get update
    sudo apt-get install -y ipvsadm --allow-unauthenticated
    sudo apt-get install -y ebtables socat --allow-unauthenticated

    Dline=`sudo grep -n LimitNOFILE /lib/systemd/system/docker.service|cut -f 1 -d ":" `
    sudo sed -i "$Dline c\LimitNOFILE=1048576" /lib/systemd/system/docker.service

    # Configure docker
    cat > daemon.json <<EOF
    {
      "insecure-registries":["$dockerREG"],"storage-driver":"overlay2"
    }
    EOF

    # Restart docker
    sudo cp -f daemon.json /etc/docker/
    sudo service docker restart

    # Install the kubernetes packages
    if [ `dpkg -l|grep kube|wc -l` -ne 0 ];then
        sudo apt purge -y `dpkg -l|grep kube|awk '{print $2}'`
    fi
    #sudo apt-get install -y kubelet kubeadm --allow-unauthenticated
    dpkg -i /repo/k8s-1.11.5/*.deb

    # Enable cadvisor
    sudo sed -i 's?config.yaml?config.yaml --cadvisor-port=4194 --eviction-hard=memory.available<512Mi,nodefs.available<13Gi,imagefs.available<100Mi?g' /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

    # Add the cgroup driver
    if [ `sudo cat /etc/systemd/system/kubelet.service.d/10-kubeadm.conf|grep cgroup-driver|wc -l` -eq 0 ];then
        sudo sed -i 8i'Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"' /etc/systemd/system/kubelet.service.d/10-kubeadm.conf
        # \$ keeps the literal name $KUBELET_CGROUP_ARGS in the unit file;
        # unescaped, this shell would expand it to an empty string.
        sudo sed -i "s?`sudo tail -n1 /etc/systemd/system/kubelet.service.d/10-kubeadm.conf`?& \$KUBELET_CGROUP_ARGS?g" /etc/systemd/system/kubelet.service.d/10-kubeadm.conf
    fi

    sudo systemctl daemon-reload

    IMAGES="calico-cni-1.11.8.tar.gz
    calico-kube-controllers-1.0.5.tar.gz
    calico-node-2.6.12.tar.gz
    coredns-1.1.3.tar.gz
    kube-apiserver-amd64-v1.11.5.tar.gz
    kube-scheduler-amd64-v1.11.5.tar.gz
    kube-controller-manager-amd64-v1.11.5.tar.gz
    kube-proxy-amd64-v1.11.5.tar.gz
    pause3.1.tar.gz"
    for i in $IMAGES;do
        #wget -O /tmp/$i http://$repo/k8s-1.11.5/$i
        sudo docker load</repo/k8s-1.11.5/$i
    done

    # Re-tag the Calico images from quay.io and push them to the private registry
    CalicoPro=$(sudo docker images|grep quay.io|awk -F 'quay.io/' '{print $2}'|awk '{print $1}'|sort|uniq)
    for i in $CalicoPro;do
        Proversion=$(sudo docker images|grep quay.io|grep $i|awk '{print $2}')
        for j in $Proversion;do
            sudo docker tag quay.io/$i:$j $dockerREG/$i:$j
            sudo docker push $dockerREG/$i:$j
        done
    done

    sudo systemctl enable kubelet.service
    sudo systemctl stop kubelet.service

    # Turn off swap
    sudo swapoff -a
    fswap=`cat /etc/fstab |grep swap|awk '{print $1}'`
    for i in $fswap;do
        sudo sed -i "s?$i?#$i?g" /etc/fstab
    done

    ######################################################################################
    sudo kubeadm reset -f
    sudo rm -rf /etc/kubernetes/*
    sudo rm -rf /var/lib/kubelet/*
    sudo rm -f /tmp/kubeadm-conf.yaml

    # The etcd endpoints are inserted further down with "sed 15i",
    # so the heredoc must keep exactly this line layout.
    cat > /tmp/kubeadm-conf.yaml <<EOF
    apiVersion: kubeadm.k8s.io/v1alpha1
    kind: MasterConfiguration
    networking:
      podSubnet: 192.138.0.0/16
    #apiServerCertSANs:
    #- master01
    #- master02
    #- master03
    #- 172.16.2.1
    #- 172.16.2.2
    #- 172.16.2.3
    #- 172.16.2.100
    etcd:
      endpoints:
    #token: 67e411.zc3617bb21ad7ee3
    kubernetesVersion: v1.11.5
    #imageRepository: $dockerREG
    api:
      advertiseAddress: $K8S_MASTER_IP
    EOF

    if [ -z "$EXTERNAL_ETCD_ENDPOINTS" ]; then
        sudo sed -i 's?etcd:?#etcd?g' /tmp/kubeadm-conf.yaml
        sudo sed -i 's?endpoints:?#endpoints:?g' /tmp/kubeadm-conf.yaml
        sudo kubeadm init --config=/tmp/kubeadm-conf.yaml | sudo tee /etc/kube-server-key
    else
        for i in `echo $EXTERNAL_ETCD_ENDPOINTS|sed 's?,? ?g'`;do
            # Insert each endpoint as a list item under "endpoints:"
            sudo sed -i "15i\    - $i" /tmp/kubeadm-conf.yaml
        done
        sudo kubeadm init --config=/tmp/kubeadm-conf.yaml| sudo tee /etc/kube-server-key
    fi

    # Add the NodePort range "--service-node-port-range=1000-62000" to /etc/kubernetes/manifests/kube-apiserver.yaml
    # The inserted lines use the same 4-space indent as the other apiserver flags.
    if [ `sudo cat /etc/kubernetes/manifests/kube-apiserver.yaml|grep service-node-port-range|wc -l` -eq 0 ];then
        line_conf=$(sudo cat /etc/kubernetes/manifests/kube-apiserver.yaml|grep -n "allow-privileged=true"|cut -f 1 -d ":")
        sudo sed -i -e "$line_conf"i'\    - --service-node-port-range=1000-62000' /etc/kubernetes/manifests/kube-apiserver.yaml
        line_conf=$(sudo grep -n "insecure-port" /etc/kubernetes/manifests/kube-apiserver.yaml|awk -F ":" '{print $1}')
        sudo sed -i -e "$line_conf"i"\    - --insecure-bind-address=$K8S_MASTER_IP" /etc/kubernetes/manifests/kube-apiserver.yaml
        sudo sed -i -e 's?insecure-port=0?insecure-port=8080?g' /etc/kubernetes/manifests/kube-apiserver.yaml
    fi

    # Set up kubectl access
    mkdir -p $HOME/.kube
    sudo cp -f /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

    ######################################################################################
    # Deploy etcd as a master pod
    ######################################################################################
    # We need: (1) modify it to listen on K8S_MASTER_IP so that minion nodes can access.
    #          (2) modify kube-apiserver configuration.
    #          (3) restart the service kubelet.
    if [ -z "$EXTERNAL_ETCD_ENDPOINTS" ]; then
        sudo sed -i -e "s/127.0.0.1/$K8S_MASTER_IP/g" /etc/kubernetes/manifests/etcd.yaml
        sudo sed -i "s?127.0.0.1?$K8S_MASTER_IP?g" /etc/kubernetes/manifests/kube-apiserver.yaml
        sudo service docker restart
        sudo service kubelet restart
    fi
    ######################################################################################

    ######################################################################################
    # Deploy calico network plugin
    ######################################################################################
    cp /repo/k8s-1.11.5/calico.yaml /tmp/calico.yaml
    line=`grep "etcd_endpoints:" -n /tmp/calico.yaml | cut -f 1 -d ":"`
    if [ -z "$EXTERNAL_ETCD_ENDPOINTS" ]; then
        sudo sed -i "$line c \ \ etcd_endpoints: \"http://$K8S_MASTER_IP:2379\"" /tmp/calico.yaml
    else
        # TODO: multiple instances
        sudo sed -i "$line c \ \ etcd_endpoints: \"$EXTERNAL_ETCD_ENDPOINTS\"" /tmp/calico.yaml
    fi

    # Change CIDR
    PODNET=$(cat /tmp/kubeadm-conf.yaml|grep podSubnet|awk '{print $NF}'|cut -d "/" -f1)
    sudo sed -i -e "s/192.168.0.0/$PODNET/g" /tmp/calico.yaml
    sudo sed -i -e "s?quay.io?$dockerREG?g" /tmp/calico.yaml
    sudo sed -i -e "s?192.168.0.179?$K8S_MASTER_IP?g" /tmp/calico.yaml

    # Wait until everything is up except kube-dns, which needs a network plugin
    sudo sleep 1m
    mkdir -p k8s-1.11.5
    cp /tmp/calico.yaml k8s-1.11.5/
    sudo kubectl --kubeconfig=/etc/kubernetes/admin.conf apply -f k8s-1.11.5/calico.yaml
    sudo sleep 10

    # By default, to keep the master safe, application pods are not scheduled onto it.
    # Remove that restriction with:
    sudo kubectl taint nodes --all node-role.kubernetes.io/master-

    # List the nodes in the cluster
    sudo kubectl get nodes
    sudo sleep 20
    # Check the status of the k8s system pods
    kubectl get po -n kube-system

Note: before running, change the IP address at the very top of the script (the REPO variable) to the IP of your own machine.
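The edit can also be scripted. The sketch below assumes the script still contains a single `REPO=` assignment at the start of a line, as in the listing above; `set_repo_ip` is a hypothetical helper name.

```shell
#!/bin/bash
# set_repo_ip SCRIPT IP -> rewrites the REPO= assignment in SCRIPT to IP.
# A temporary file is used instead of "sed -i" so the helper also works
# with non-GNU sed; cat (not mv) keeps the script's permissions.
set_repo_ip() {
    local script=$1 ip=$2 tmp
    tmp=$(mktemp)
    sed "s/^REPO=.*/REPO=$ip/" "$script" > "$tmp" && cat "$tmp" > "$script"
    rm -f "$tmp"
}
```

For example, `set_repo_ip kube-v1.11.5.sh 192.168.0.162`. On a machine with several addresses, check which one is correct rather than picking the first one reported by `hostname -I`.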

Run the script

bash kube-v1.11.5.sh

Output:

    ...
    NAME      STATUS     ROLES     AGE       VERSION
    ubuntu    NotReady   master    1m        v1.11.5
    NAME                                       READY     STATUS    RESTARTS   AGE
    calico-kube-controllers-55445fbcb6-8znzw   1/1       Running   0          25s
    calico-node-49w2t                          2/2       Running   0          25s
    coredns-78fcdf6894-9t8wd                   1/1       Running   0          25s
    coredns-78fcdf6894-ztxm6                   1/1       Running   0          25s
    kube-apiserver-ubuntu                      1/1       Running   0          59s
    kube-controller-manager-ubuntu             1/1       Running   1          59s
    kube-proxy-6xw6j                           1/1       Running   0          25s
    kube-scheduler-ubuntu                      1/1       Running   1          59s
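In the output above the node is still NotReady when the script finishes, presumably because the network plugin has only just started. One way to wait for it is to parse the output of `kubectl get nodes`. The sketch assumes the column layout shown above (NAME first, STATUS second); `all_nodes_ready` is a hypothetical helper.

```shell
#!/bin/bash
# all_nodes_ready -> reads "kubectl get nodes" output on stdin and succeeds
# only if at least one node is listed and every STATUS is exactly "Ready".
all_nodes_ready() {
    awk '
        NR > 1 { seen = 1; if ($2 != "Ready") bad = 1 }   # skip the header row
        END    { if (seen && !bad) exit 0; exit 1 }
    '
}
```

A polling loop could then be written as `until kubectl get nodes | all_nodes_ready; do sleep 5; done`.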

4. Verification

flask.yaml

Create a new YAML file, flask.yaml:

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: flaskapp-1
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            name: flaskapp-1
        spec:
          containers:
            - name: flaskapp-1
              image: jcdemo/flaskapp
              ports:
                - containerPort: 5000
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: flaskapp-1
      labels:
        name: flaskapp-1
    spec:
      type: NodePort
      ports:
        - port: 5000
          name: flaskapp-port
          targetPort: 5000
          protocol: TCP
          nodePort: 5000
      selector:
        name: flaskapp-1
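Note that `nodePort: 5000` lies outside the Kubernetes default NodePort range of 30000-32767. It is accepted here only because the install script adds `--service-node-port-range=1000-62000` to the API server. The check itself is simple arithmetic, sketched below with a hypothetical helper `port_in_range`.

```shell
#!/bin/bash
# port_in_range PORT RANGE -> succeeds if PORT lies inside RANGE ("low-high").
port_in_range() {
    local port=$1
    local low=${2%-*}     # text before the dash
    local high=${2#*-}    # text after the dash
    [ "$port" -ge "$low" ] && [ "$port" -le "$high" ]
}
```

With these definitions, `port_in_range 5000 1000-62000` succeeds, while `port_in_range 5000 30000-32767` fails.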

Create the pod

kubectl create -f flask.yaml --validate

Check the pod status

    root@ubuntu:~# kubectl get pods -o wide
    NAME                          READY     STATUS    RESTARTS   AGE       IP                NODE      NOMINAT
    flaskapp-1-84b7f79cdf-dgctk   1/1       Running   0          6m        192.138.243.195   ubuntu    <none>

Access the page

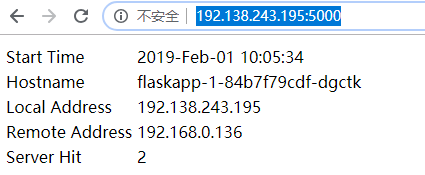

    root@ubuntu:~# curl http://192.138.243.195:5000
    <html><head><title>Docker + Flask Demo</title></head><body><table><tr><td> Start Time </td> <td>2019-Feb-01 10:05:34</td> </tr><tr><td> Hostname </td> <td>flaskapp-1-84b7f79cdf-dgctk</td> </tr><tr><td> Local Address </td> <td>192.138.243.195</td> </tr><tr><td> Remote Address </td> <td>192.168.0.162</td> </tr><tr><td> Server Hit </td> <td>1</td> </tr></table></body></html>

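If the output contains Hostname, the deployment succeeded.

Can I access Flask directly from Windows 10?

No. Why? Because my computer cannot reach the 192.138.0.0/16 network directly; that is the k8s pod network.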
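Put differently, the pod IP belongs to 192.138.0.0/16, while the Windows machine only has a route to its own LAN (192.168.0.0/24 is assumed here, based on the iptables rule used later). The membership test behind that statement can be sketched as follows; `ip_to_int` and `ip_in_cidr` are hypothetical helpers.

```shell
#!/bin/bash
# ip_to_int A.B.C.D -> prints the address as a 32-bit integer.
ip_to_int() {
    local IFS=.
    set -- $1                       # split the address on dots
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# ip_in_cidr IP NETWORK/PREFIX -> succeeds if IP belongs to the network.
ip_in_cidr() {
    local ip net prefix mask
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    prefix=${2#*/}
    # Keep the top PREFIX bits of a 32-bit word.
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    [ $(( ip & mask )) -eq $(( net & mask )) ]
}
```

Here `ip_in_cidr 192.138.243.195 192.138.0.0/16` succeeds, whereas `ip_in_cidr 192.168.0.162 192.138.0.0/16` fails, which is why an explicit route is needed.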

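Add a route

First add a route on Windows 10 that sends pod-network traffic to the k8s master. In this example the master's address is 192.168.0.162.

Make sure cmd is opened with "Run as administrator", otherwise you will be told you lack permission!

Enter the following command in cmd: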

route add -p 192.138.0.0 MASK 255.255.0.0 192.168.0.162
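The `MASK 255.255.0.0` argument is the dotted form of the `/16` prefix of the pod subnet. For a different `podSubnet`, the mask can be derived as in the following sketch; `prefix_to_mask` is a hypothetical helper.

```shell
#!/bin/bash
# prefix_to_mask PREFIX -> prints the dotted-decimal netmask for a prefix length.
prefix_to_mask() {
    local prefix=$1 mask
    # Keep the top PREFIX bits of a 32-bit word, then print it byte by byte.
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    echo "$(( (mask >> 24) & 255 )).$(( (mask >> 16) & 255 )).$(( (mask >> 8) & 255 )).$(( mask & 255 ))"
}
```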

Set the NAT rule on the k8s host

iptables -t nat -I POSTROUTING -s 192.168.0.0/24 -d 192.138.0.0/16 -o tunl0 -j MASQUERADE

With the route and the NAT rule in place, the Flask page can be opened from Windows 10.

Add the iptables rule to the boot-time startup script

echo 'iptables -t nat -I POSTROUTING -s 192.168.0.0/24 -d 192.138.0.0/16 -o tunl0 -j MASQUERADE' >> /etc/rc.local
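Two caveats apply to appending with `>>`: running the command twice duplicates the rule, and on a stock Ubuntu 16.04 `/etc/rc.local` ends with `exit 0`, so anything appended after that line is never executed. A more careful variant, as a sketch with a hypothetical helper `add_boot_command`, inserts the line before `exit 0` and skips it if it is already present.

```shell
#!/bin/bash
# add_boot_command FILE COMMAND -> inserts COMMAND before the first line that
# reads exactly "exit 0" (or appends it if there is no such line). Does nothing
# if COMMAND is already in FILE. COMMAND must not contain backslashes, because
# awk -v would interpret them as escape sequences.
add_boot_command() {
    local file=$1 cmd=$2 tmp
    grep -qxF -- "$cmd" "$file" && return 0
    tmp=$(mktemp)
    awk -v cmd="$cmd" '
        /^exit 0[[:space:]]*$/ && !done { print cmd; done = 1 }
        { print }
        END { if (!done) print cmd }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the file mode
    rm -f "$tmp"
}
```

Usage: `add_boot_command /etc/rc.local 'iptables -t nat -I POSTROUTING -s 192.168.0.0/24 -d 192.138.0.0/16 -o tunl0 -j MASQUERADE'`.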
