import tensorflow as tf

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

(train_image,train_label),(test_image,test_label) = tf.keras.datasets.fashion_mnist.load_data()

# Load the Fashion-MNIST dataset bundled with Keras

train_image = train_image/255 # Normalize the training set (scale pixel values to [0, 1])

test_image = test_image/255 # Normalize the test set the same way
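Dividing by 255 maps the uint8 pixel values (0 to 255) into [0, 1] and also converts the array to floating point. A minimal NumPy sketch on a tiny synthetic array (a stand-in, not the real dataset) shows both effects:

```python
import numpy as np

# Synthetic stand-in for a uint8 image batch (not real Fashion-MNIST data)
images = np.array([[[0, 128], [255, 64]]], dtype=np.uint8)

scaled = images / 255  # true division returns float64

print(scaled.dtype)                # float64
print(scaled.min(), scaled.max())  # 0.0 1.0
```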

model = tf.keras.Sequential()

model.add(tf.keras.layers.Flatten(input_shape=(28,28))) # Reference: https://blog.csdn.net/qq_46244851/article/details/109584831

model.add(tf.keras.layers.Dense(128,activation='relu'))

model.add(tf.keras.layers.Dense(128,activation='relu'))

model.add(tf.keras.layers.Dense(128,activation='relu')) # 2 more hidden layers added

model.add(tf.keras.layers.Dense(10,activation='softmax')) # 10 output classes; softmax turns the outputs into probabilities
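To see what this stack computes, here is a NumPy sketch of the same forward pass: flatten each 28x28 image to 784 values, apply three 128-unit ReLU layers, then a 10-way softmax. The weights are random placeholders (Keras initializes and trains its own), so the output is only meaningful for its shape and for the fact that each row is a probability distribution.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    # Subtract the row max for numerical stability before exponentiating
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

# Layer sizes mirror the model above: 784 -> 128 -> 128 -> 128 -> 10
sizes = [784, 128, 128, 128, 10]
# Placeholder random weights and zero biases (illustration only, not trained)
params = [(rng.normal(0, 0.05, (n_in, n_out)), np.zeros(n_out))
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def forward(images):
    x = images.reshape(len(images), -1)  # Flatten: (batch, 28, 28) -> (batch, 784)
    for w, b in params[:-1]:
        x = relu(x @ w + b)              # hidden Dense layers with ReLU
    w, b = params[-1]
    return softmax(x @ w + b)            # output Dense layer with softmax

batch = rng.random((4, 28, 28))  # fake normalized images in [0, 1)
probs = forward(batch)
print(probs.shape)  # (4, 10)
```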

model.compile(

optimizer='adam',

loss='sparse_categorical_crossentropy', # use this loss when the labels are integer-encoded

metrics=['acc']

)
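With integer labels (0 to 9 here), sparse categorical cross-entropy is the mean of `-log(p[label])` over the batch, where `p` is the softmax output, so no one-hot encoding is needed. A minimal NumPy sketch of that formula; the probabilities are made up and the function name is illustrative, not the Keras implementation:

```python
import numpy as np

def sparse_categorical_crossentropy(labels, probs, eps=1e-7):
    # labels: (batch,) integer class ids; probs: (batch, num_classes) softmax outputs
    probs = np.clip(probs, eps, 1.0)                # avoid log(0)
    picked = probs[np.arange(len(labels)), labels]  # probability given to the true class
    return -np.mean(np.log(picked))

# Made-up predictions for 2 samples over 3 classes
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.1, 0.8]])
labels = np.array([0, 2])

loss = sparse_categorical_crossentropy(labels, probs)
print(round(loss, 4))  # 0.2899
```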

history=model.fit(train_image,train_label,

validation_data=(test_image,test_label), # also report loss and acc on the test set after each epoch

epochs=10)

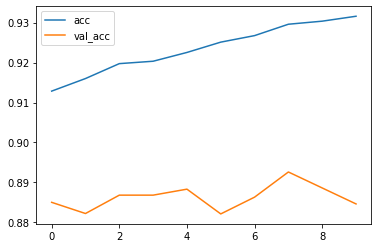

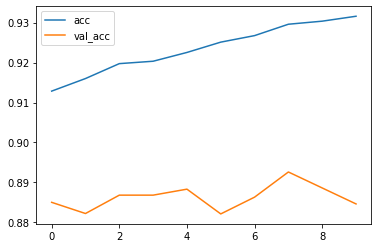

Epoch 1/10

1875/1875 [==============================] - 3s 1ms/step - loss: 0.2287 - acc: 0.9129 - val_loss: 0.3322 - val_acc: 0.8850

Epoch 2/10

1875/1875 [==============================] - 3s 1ms/step - loss: 0.2208 - acc: 0.9160 - val_loss: 0.3488 - val_acc: 0.8822

Epoch 3/10

1875/1875 [==============================] - 3s 1ms/step - loss: 0.2139 - acc: 0.9197 - val_loss: 0.3382 - val_acc: 0.8868

Epoch 4/10

1875/1875 [==============================] - 3s 2ms/step - loss: 0.2092 - acc: 0.9203 - val_loss: 0.3514 - val_acc: 0.8868

Epoch 5/10

1875/1875 [==============================] - 3s 1ms/step - loss: 0.2046 - acc: 0.9225 - val_loss: 0.3459 - val_acc: 0.8883

Epoch 6/10

1875/1875 [==============================] - 3s 1ms/step - loss: 0.1958 - acc: 0.9251 - val_loss: 0.3621 - val_acc: 0.8821

Epoch 7/10

1875/1875 [==============================] - 3s 1ms/step - loss: 0.1911 - acc: 0.9268 - val_loss: 0.3806 - val_acc: 0.8863

Epoch 8/10

1875/1875 [==============================] - 3s 1ms/step - loss: 0.1843 - acc: 0.9296 - val_loss: 0.3520 - val_acc: 0.8926

Epoch 9/10

1875/1875 [==============================] - 3s 1ms/step - loss: 0.1807 - acc: 0.9304 - val_loss: 0.3510 - val_acc: 0.8886

Epoch 10/10

1875/1875 [==============================] - 3s 2ms/step - loss: 0.1767 - acc: 0.9316 - val_loss: 0.3967 - val_acc: 0.8846

history.history.keys()

dict_keys(['loss', 'acc', 'val_loss', 'val_acc'])
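`history.history` is a plain dict mapping each metric name to a list with one value per epoch, so it can be analysed directly. The sketch below copies the `loss` and `val_loss` values from the log above into a dict of the same shape (`history_dict` is a hypothetical stand-in for `history.history`) and finds the epoch with the lowest validation loss.

```python
# loss / val_loss values copied from the 10-epoch log above
history_dict = {
    "loss": [0.2287, 0.2208, 0.2139, 0.2092, 0.2046, 0.1958, 0.1911, 0.1843, 0.1807, 0.1767],
    "val_loss": [0.3322, 0.3488, 0.3382, 0.3514, 0.3459, 0.3621, 0.3806, 0.3520, 0.3510, 0.3967],
}

val_loss = history_dict["val_loss"]
best_idx = min(range(len(val_loss)), key=val_loss.__getitem__)  # 0-based index
print(f"lowest val_loss {val_loss[best_idx]} at epoch {best_idx + 1}")
# lowest val_loss 0.3322 at epoch 1
```

Training loss falls every epoch while validation loss is lowest at epoch 1 and ends at 0.3967, the usual signature of overfitting.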

plt.plot(history.epoch,history.history.get('loss'),label='loss')

plt.plot(history.epoch,history.history.get('val_loss'),label='val_loss')

plt.legend() # plt.legend() adds a legend to the plot: blue is loss, orange is val_loss

# plt.legend([x,y,z]) can instead take the series names as a list


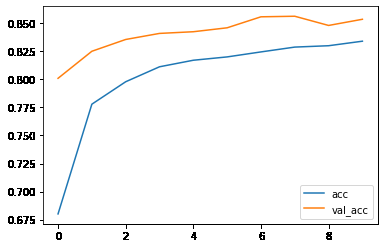

plt.plot(history.epoch,history.history.get('acc'),label='acc')

plt.plot(history.epoch,history.history.get('val_acc'),label='val_acc')

plt.legend()


model = tf.keras.Sequential()

model.add(tf.keras.layers.Flatten(input_shape=(28,28))) # Reference: https://blog.csdn.net/qq_46244851/article/details/109584831

model.add(tf.keras.layers.Dense(128,activation='relu'))

model.add(tf.keras.layers.Dropout(0.5)) # randomly drop 50% of the hidden units to curb overfitting

model.add(tf.keras.layers.Dense(128,activation='relu'))

model.add(tf.keras.layers.Dropout(0.5)) # randomly drop 50% of the hidden units

model.add(tf.keras.layers.Dense(128,activation='relu')) # 2 more hidden layers added

model.add(tf.keras.layers.Dropout(0.5)) # randomly drop 50% of the hidden units

model.add(tf.keras.layers.Dense(10,activation='softmax')) # 10 output classes; softmax turns the outputs into probabilities
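Keras's `Dropout(rate)` is active only during training: it zeroes each input unit with probability `rate` and scales the surviving units by `1 / (1 - rate)` so the expected sum stays the same; at inference it passes inputs through unchanged. A NumPy sketch of this "inverted dropout" scheme (the `dropout` function and the seed are illustrative, not the Keras source):

```python
import numpy as np

def dropout(x, rate, training, rng):
    # Inference: identity, no units are dropped
    if not training:
        return x
    keep = rng.random(x.shape) >= rate  # each unit survives with probability 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(42)
x = np.ones((1000, 128))  # stand-in activations from a 128-unit layer

train_out = dropout(x, rate=0.5, training=True, rng=rng)
infer_out = dropout(x, rate=0.5, training=False, rng=rng)

print(np.unique(train_out))  # [0. 2.]  dropped units vs. scaled survivors
print(train_out.mean())      # approximately 1.0, the mean without dropout
```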

model.compile(

optimizer='adam',

loss='sparse_categorical_crossentropy', # use this loss when the labels are integer-encoded

metrics=['acc']

)

history=model.fit(train_image,train_label,

validation_data=(test_image,test_label), # also report loss and acc on the test set after each epoch

epochs=10)

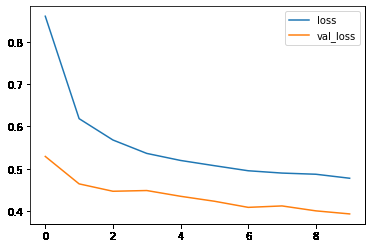

Epoch 1/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.8600 - acc: 0.6801 - val_loss: 0.5292 - val_acc: 0.8009

Epoch 2/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.6184 - acc: 0.7778 - val_loss: 0.4645 - val_acc: 0.8250

Epoch 3/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.5680 - acc: 0.7978 - val_loss: 0.4471 - val_acc: 0.8355

Epoch 4/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.5364 - acc: 0.8112 - val_loss: 0.4488 - val_acc: 0.8409

Epoch 5/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.5198 - acc: 0.8170 - val_loss: 0.4352 - val_acc: 0.8424

Epoch 6/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.5074 - acc: 0.8200 - val_loss: 0.4235 - val_acc: 0.8459

Epoch 7/10

1875/1875 [==============================] - 3s 2ms/step - loss: 0.4955 - acc: 0.8244 - val_loss: 0.4091 - val_acc: 0.8557

Epoch 8/10

1875/1875 [==============================] - 3s 2ms/step - loss: 0.4900 - acc: 0.8287 - val_loss: 0.4125 - val_acc: 0.8562

Epoch 9/10

1875/1875 [==============================] - 3s 2ms/step - loss: 0.4872 - acc: 0.8299 - val_loss: 0.4008 - val_acc: 0.8480

Epoch 10/10

1875/1875 [==============================] - 3s 2ms/step - loss: 0.4778 - acc: 0.8340 - val_loss: 0.3936 - val_acc: 0.8535
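Comparing the final epoch of the two runs (numbers copied from the two logs) makes the effect of Dropout concrete: the gap between training and validation accuracy goes from clearly positive to slightly negative. Training metrics are measured with dropout active while validation runs without it, which is one likely reason `acc` now sits below `val_acc`. The `runs` dict below is just a hand-made summary of those logs.

```python
# Final-epoch metrics copied from the two training logs
runs = {
    "no dropout":  {"acc": 0.9316, "val_acc": 0.8846},
    "dropout 0.5": {"acc": 0.8340, "val_acc": 0.8535},
}

# Positive gap: the model does better on training data than on unseen data
gaps = {name: m["acc"] - m["val_acc"] for name, m in runs.items()}
for name, gap in gaps.items():
    print(f"{name}: acc - val_acc = {gap:+.4f}")
# no dropout: acc - val_acc = +0.0470
# dropout 0.5: acc - val_acc = -0.0195
```

Note that after 10 epochs the dropout run's `val_acc` (0.8535) is still below the first run's (0.8846); within this budget dropout narrows the gap rather than raising validation accuracy.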

model.summary()

Model: "sequential_1"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| flatten_1 (Flatten) | (None, 784) | 0 |
| dense_4 (Dense) | (None, 128) | 100480 |
| dropout (Dropout) | (None, 128) | 0 |
| dense_5 (Dense) | (None, 128) | 16512 |
| dropout_1 (Dropout) | (None, 128) | 0 |
| dense_6 (Dense) | (None, 128) | 16512 |
| dropout_2 (Dropout) | (None, 128) | 0 |
| dense_7 (Dense) | (None, 10) | 1290 |

Total params: 134,794

Trainable params: 134,794

Non-trainable params: 0
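The `Param #` column follows from the Dense formula `inputs * units + units` (a weight matrix plus one bias per unit); Flatten and Dropout have no weights. A quick plain-Python check (the `dense_params` helper is hypothetical) reproduces the numbers in the summary:

```python
def dense_params(n_in, units):
    # weight matrix (n_in x units) plus one bias per unit
    return n_in * units + units

layers = [(784, 128), (128, 128), (128, 128), (128, 10)]  # the four Dense layers in order
counts = [dense_params(n_in, units) for n_in, units in layers]
print(counts)       # [100480, 16512, 16512, 1290]
print(sum(counts))  # 134794
```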

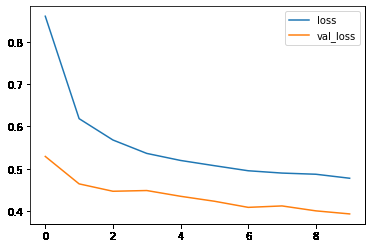

plt.plot(history.epoch,history.history.get('loss'),label='loss')

plt.plot(history.epoch,history.history.get('val_loss'),label='val_loss')

plt.legend() # plt.legend() adds a legend to the plot: blue is loss, orange is val_loss

# plt.legend([x,y,z]) can instead take the series names as a list


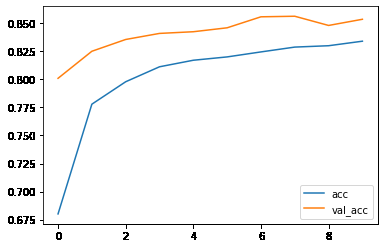

plt.plot(history.epoch,history.history.get('acc'),label='acc')

plt.plot(history.epoch,history.history.get('val_acc'),label='val_acc')

plt.legend()
