Shell script: periodically compressing and backing up log files

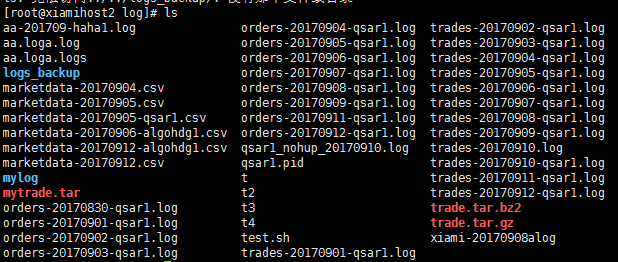

I. Log file format

II. Goals

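1. Back up and compress log files whose names end in .log and contain a date stamp in `date +%Y%m%d` format (for example 20170912 or 20160311).

2. The compression range is configurable (from N days ago through today): for example today only, yesterday (date -d "-1 day" +%Y%m%d) through today, or the day before yesterday through today.

Archives are named DATE.tar.gz (or DATE.tar.bz2), and one archive is produced for each day in the range.

3. The compression mode is configurable (one of two): tar czf or tar cjf.

4. The deletion range is configurable: log files older than N days are deleted. For example, if today is 20170912 and logs older than 3 days are deleted, every log dated 20170908 or earlier is removed.

5. Every Friday (not necessarily Friday; the weekday can be set in the script) the compressed files are bundled into a single tar file (the individual archives are deleted once bundling succeeds) and uploaded to the log server; the bundle is deleted after a successful upload.

6. By default the script backs up today's log files, uses compression mode "czf", and deletes log files older than three days.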
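Goals 2 and 4 both reduce to date arithmetic on YYYYMMDD stamps. The following is a minimal sketch, assuming GNU `date` (for the `-d` option); the function names `dates_in_range` and `older_than_days` are hypothetical and do not appear in the script itself.

```shell
#!/bin/bash
# Print one YYYYMMDD stamp per line, oldest first,
# from N days ago through today (N defaults to 0).
dates_in_range() {
    local i="${1:-0}"
    while [ "$i" -ge 0 ]; do
        date -d "-$i day" +%Y%m%d
        i=$((i - 1))
    done
}

# Succeed when a YYYYMMDD stamp is strictly older than
# the day that lies N days before today.
older_than_days() {
    local stamp="$1" cutoff
    cutoff=$(date -d "-$2 day" +%Y%m%d)
    [ "$stamp" -lt "$cutoff" ]
}

# Example: list the stamps for the day before yesterday through today.
# dates_in_range 2
```

Because the stamps are plain eight-digit numbers, an integer comparison is enough to decide which side of the cutoff a file falls on. With today at 20170912 and N=3, the cutoff is 20170909, so files stamped 20170908 or earlier qualify for deletion.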

III. The script

    #!/bin/bash
    #author:xiami
    #date:20170907
    #description: compress files of a specified mode and delete logs before a particular date
    #version:v0.1

    strategy_logs_path="/root/apps/myapp/log"
    date=$(date +%Y%m%d)

    #------------ initialise default parameters ----------
    init_argv(){
        # example: init_argv -d 4 -m cjf -r 5
        # compress the logs of the last four days plus today, mode cjf, delete logs older than five days
        compress_date=$(date +%Y%m%d)
        backup_mode="czf"
        del_days_num=3
        backup_path="/root/apps/logs_backup"
        line=$(ps -ef |grep "ssh-agent"|awk '{if($0!~/grep ssh-agent/)print $0}'|wc -l)
        while getopts d:m:r: opt
        do
            case $opt in
                d) compress_days="$OPTARG" ;;
                m) backup_mode="$OPTARG" ;;
                r) del_days_num="$OPTARG" ;;
                \?)
                    echo "Usage: `basename $0` [d|m|r]"
                    echo "-d 'Integer' (tar & compress Integer day logfile)"
                    echo "-m 'czf|cjf' (mode)"
                    echo "-r 'Integer' (remove files Integer days ago)"
                    exit 1 ;;
            esac
        done
        if [ ! -d "$backup_path" ];then
            mkdir -p "$backup_path"
        fi
        # if [ "$line" -lt 1 ];then
        #     echo "Not running ssh-agent"
        #     exit 1
        # fi
    }

    #------------ tar and compress the log files of one day ------------
    tar_compress_log(){
        # arg 1: date to compress  arg 2: compression mode
        local compress_date="$1"
        local backup_mode="$2"
        # stop here if the log directory is missing, so nothing runs in the wrong place
        cd "$strategy_logs_path" || return 1
        # find . -name "*$date*.log"|xargs tar $backup_mode "$date.tar.gz"   # compress today's logs
        if [ "$backup_mode" == 'czf' ];then
            ls | grep ".*[0-9]\{8\}.*\.log$"|grep "$compress_date"|xargs tar $backup_mode "$backup_path/$compress_date.tar.gz"
        elif [ "$backup_mode" == 'cjf' ];then
            ls | grep ".*[0-9]\{8\}.*\.log$"|grep "$compress_date"|xargs tar $backup_mode "$backup_path/$compress_date.tar.bz2"
        else
            echo "tar mode error";exit 2
        fi
    }

    #------------ tar and compress the logs from N days ago through today ----------
    compress_range_date(){
        # arg 1: number of days to back up  arg 2: compression mode
        local dates="$date"
        local compress_days="$1"
        local backup_mode="$2"
        if [[ ! -z "$compress_days" && "$compress_days" -ne 0 ]];then
            # start at 0 so that today is included, as goal 2 requires
            for i in `seq 0 "$compress_days"`;do
                dates=$(date -d "-$i day" +%Y%m%d)
                # n=$(echo $dates|awk '{print NF}')
                tar_compress_log "$dates" "$backup_mode"
            done
        else
            tar_compress_log "$dates" "$backup_mode"
        fi
    }

    #------------ every Friday, bundle the week's archives and delete them once bundling succeeds ------------
    tar_file(){
        weekday=$(date +%u)
        if [ "$weekday" -eq 5 ];then
            cd "$backup_path" || return 1
            ls|xargs tar cf "$date-Fri-logs.tar" && rm *.tar.?z*
        fi
    }

    #----------- delete log files older than del_days_num days (by mtime) ---------
    delete_days_log1(){
        # arg 1: del_days_num
        local del_days_num="$1"
        cd "$strategy_logs_path" || return 1
        find . -type f -mtime +$del_days_num |grep ".*[0-9]\{8\}.*\.log"|xargs rm -rf   # deletes files, use with care
    }

    #---------- delete log files older than del_days_num days (by the date in the file name) ----
    delete_days_log2(){
        # arg 1: del_days_num
        local del_days_num="$1"
        cd "$strategy_logs_path" || return 1
        local num=$(ls |grep ".*[0-9]\{8\}.*\.log$" |grep -o "[0-9]\{8\}"|awk '!day[$0]++'|wc -l)
        local files=$(ls |grep ".*[0-9]\{8\}.*\.log$" |grep -o "[0-9]\{8\}"|awk '!day[$0]++')
        if [ ! -z "$num" ];then
            local field=""
            for i in `seq 1 "$num"`
            do
                field=$(echo $files|awk '{print $v}' v=$i)
                if [ "$field" -lt $(date -d "-$del_days_num day" +%Y%m%d) ];then
                    ls *$field* |grep '.*[0-9]\{8\}.*\.log$'|xargs rm -rf
                fi
            done
        fi
    }

    #----------- upload to the log server -------------
    upload_tgz(){
        scp $backup_path/$date.tar.gz 192.168.119.133:/opt/ && rm $backup_path/$date.tar.gz
        #scp $backup_path/$date*-logs.tar 192.168.119.133:/opt/ && rm $backup_path/$date*-logs.tar
    }

    #----------- main -------------------------
    main(){
        init_argv "$@"
        compress_range_date "$compress_days" "$backup_mode"
        delete_days_log2 $del_days_num
        tar_file
        # upload_tgz
    }

    #---------- entry point ----------------------
    main "$@"
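The script selects files with `ls | grep | xargs`, which splits names on whitespace. As an alternative, here is a hedged sketch that passes NUL-delimited names from `find` to `tar`. It assumes GNU find and GNU tar (for `-print0`, `-quit`, `--null` and `-T -`); the function name `archive_day` is hypothetical and not part of the original script.

```shell
#!/bin/bash
# archive_day DIR DAY OUT
# Pack every DIR/*DAY*.log into the gzip-compressed tar file OUT.
# OUT should be an absolute path because the function changes directory.
archive_day() {
    local dir="$1" day="$2" out="$3"
    # Return early when no log file carries this date stamp.
    if [ -z "$(find "$dir" -maxdepth 1 -type f -name "*${day}*.log" -print -quit)" ]; then
        return 1
    fi
    # NUL-delimited names survive spaces and newlines in file names.
    (
        cd "$dir" || exit 1
        find . -maxdepth 1 -type f -name "*${day}*.log" -print0 |
            tar -czf "$out" --null -T -
    )
}

# Example:
# archive_day /root/apps/myapp/log 20170912 /root/apps/logs_backup/20170912.tar.gz
```

The early return also avoids producing an archive for a day that has no matching logs.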

IV. Running the script

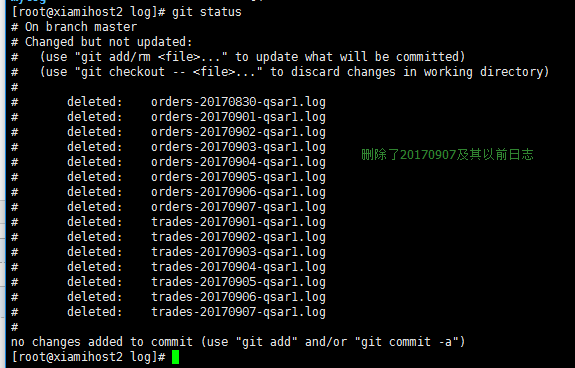

The initial set of log files is the one shown in the first figure.

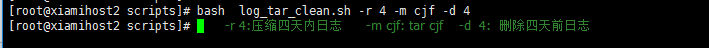

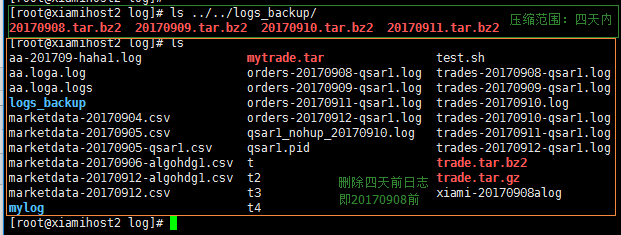

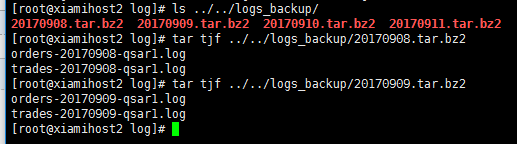

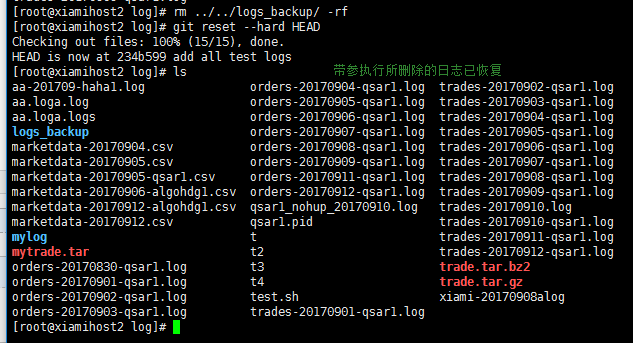

4.1 After running with arguments

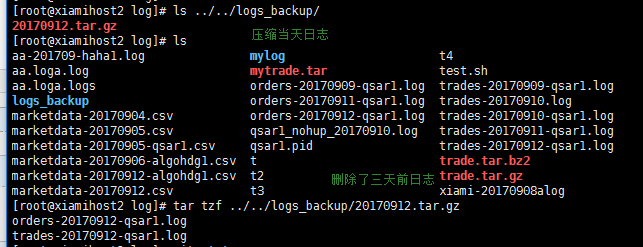

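4.2 Running without arguments (the defaults: back up today's log files, compression mode "czf", delete log files older than three days)

To keep the test environment consistent, first restore the deleted log files and remove the compressed files.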

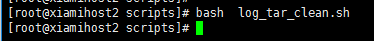

Run the script without arguments.

V. Scheduled task

Omitted.
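Since the scheduled task is left out, here is one possible shape for it, as a sketch only. The install path `/root/apps/script/backup_logs.sh` and the 01:30 schedule are assumptions for illustration; neither comes from the original post. The helper `cron_entry` is likewise hypothetical and simply prints a crontab line.

```shell
#!/bin/bash
# Hypothetical install path of the backup script; adjust to your layout.
script="/root/apps/script/backup_logs.sh"

# cron_entry MINUTE HOUR "SCRIPT ARGS"
# Print a crontab line: minute hour day-of-month month day-of-week command.
cron_entry() {
    printf '%s %s * * * %s %s\n' "$1" "$2" "$script" "$3"
}

# Daily at 01:30: compress yesterday and today, mode czf, delete logs older than 3 days.
cron_entry 30 1 "-d 1 -m czf -r 3"

# To install it, append the line to the current user's crontab:
# ( crontab -l 2>/dev/null; cron_entry 30 1 "-d 1 -m czf -r 3" ) | crontab -
```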

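Script improvements:

1. Delete each day's log files once they are compressed, and keep only the last N days (14 by default) of compressed files on the machine.

2. For security, the transfer direction is reversed: the remote log server pulls each day's log archives from the machine instead of the machine uploading them.

3. Back up logs from several directories in one run; the directory list is stored in scan_logdir.inc.

Improved script: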
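Reading the directory list is the step most likely to trip on stray input, so here is a small sketch of a defensive reader. It is an illustration, not the author's code: the function name `read_log_dirs` is hypothetical, and treating lines that start with `#` as comments is my assumption rather than a documented feature of scan_logdir.inc.

```shell
#!/bin/bash
# read_log_dirs FILE
# Print every existing directory listed in FILE, one per line.
read_log_dirs() {
    local list="$1" dir
    # The "|| [ -n ... ]" part keeps a last line that lacks a trailing newline.
    while IFS= read -r dir || [ -n "$dir" ]; do
        # Skip blank lines and (by assumption) lines starting with '#'.
        case "$dir" in
            ''|'#'*) continue ;;
        esac
        if [ -d "$dir" ]; then
            printf '%s\n' "$dir"
        else
            echo "skip missing directory: $dir" >&2
        fi
    done < "$list"
}

# Example:
# read_log_dirs "$(dirname "$0")/scan_logdir.inc"
```

Filtering out missing directories up front matters because the backup functions `cd` into each entry before deleting files.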

    #!/bin/bash
    #author:xiami
    #date:20170907
    #description: compress files of a specified mode and delete logs before a particular date, reference: http://www.cnblogs.com/xiami-xm/p/7511087.html
    #version:v0.2

    date=$(date +%Y%m%d)
    scan_log_dir="$(dirname $0)/scan_logdir.inc"

    #------------ initialise default parameters ----------
    init_argv(){
        # example: init_argv -d 4 -m cjf -r 5
        # compress the logs of the last four days plus today, mode cjf, delete logs older than five days
        compress_date=$(date +%Y%m%d)
        backup_mode="cjf"
        del_days_num=3
        backup_path="/root/apps/logs_backup"
        stay_days=14
        line=$(ps -ef |grep "ssh-agent"|awk '{if($0!~/grep ssh-agent/)print $0}'|wc -l)
        while getopts d:m:r:s: opt
        do
            case $opt in
                d) compress_days="$OPTARG" ;;
                m) backup_mode="$OPTARG" ;;
                r) del_days_num="$OPTARG" ;;
                s) stay_days="$OPTARG" ;;
                \?)
                    echo "Usage: `basename $0` [d|m|r|s]"
                    echo "-d 'Integer' (tar & compress Integer day logfile)"
                    echo "-m 'czf|cjf' (mode)"
                    echo "-r 'Integer' (remove files Integer days ago)"
                    echo "-s 'Integer' (keep compressed files for Integer days)"
                    exit 1 ;;
            esac
        done
        if [ ! -d "$backup_path" ];then
            mkdir -p "$backup_path"
        fi
    }

    #------------ (worker) tar and compress log files, deleting them as soon as compression succeeds ------------
    tar_compress_log(){
        # arg 1: date to compress  arg 2: compression mode  arg 3: log directory
        # $a is the directory counter set in backup_all_logs_dir
        local compress_date="$1"
        local backup_mode="$2"
        local logs_path="$3"
        # stop here if the directory is missing, so nothing is deleted in the wrong place
        cd "$logs_path" || return 1
        # cd "$strategy_logs_path"
        # find . -name "*$date*.log"|xargs tar $backup_mode "$date.tar.gz"   # compress today's logs
        if [ "$backup_mode" == 'czf' ];then
            ls | grep ".*[0-9]\{8\}.*\.\(log\|csv\)$"|grep "$compress_date"|
                xargs tar $backup_mode $backup_path/dir$a.$compress_date.tar.gz >/dev/null 2>&1 &&
                ls | grep ".*[0-9]\{8\}.*\.\(log\|csv\)$"|grep "$compress_date"|xargs rm -rf
        elif [ "$backup_mode" == 'cjf' ];then
            ls | grep ".*[0-9]\{8\}.*\.\(log\|csv\)$"|grep "$compress_date"|
                xargs tar $backup_mode $backup_path/dir$a.$compress_date.tar.bz2 >/dev/null 2>&1 &&
                ls | grep ".*[0-9]\{8\}.*\.\(log\|csv\)$"|grep "$compress_date"|xargs rm -rf
        else
            echo "tar mode error";exit 2
        fi
    }

    #------------ check that scan_logdir.inc contains data -------------------
    check_scan(){
        local line=$(cat "$scan_log_dir" | wc -l)
        if [ "$line" -eq 0 ];then
            exit 3;
        fi
    }

    #------------ log directories to back up -------------------------------
    backup_all_logs_dir(){
        check_scan
        local a=1
        while read line;do
            local logs_path="$line"
            compress_range_date "$compress_days" "$backup_mode" "$logs_path"
            # delete_days_log1 "$del_days_num" "$logs_path"
            delete_days_log2 "$del_days_num" "$logs_path"
            let a++;echo "-----------------$a----------------------------------"
        done < "$scan_log_dir"
    }

    #------------ tar and compress the logs from N days ago through today ----------
    compress_range_date(){
        # arg 1: number of days to back up  arg 2: compression mode  arg 3: log directory
        local dates="$date"
        local compress_days="$1"
        local backup_mode="$2"
        local logs_path="$3"
        # if [[ ! -z "$compress_days" && "$compress_days" -ne 0 ]];then
        if [ ! -z "$compress_days" ];then
            for i in `seq 0 "$compress_days"`;do
                dates=$(date -d "-$i day" +%Y%m%d)
                # n=$(echo $dates|awk '{print NF}')
                tar_compress_log "$dates" "$backup_mode" "$logs_path"
            done
        else
            tar_compress_log "$dates" "$backup_mode" "$logs_path"
        fi
    }

    #------------ every Friday, bundle the week's archives and delete them once bundling succeeds ------------
    tar_file(){
        weekday=$(date +%u)
        if [ "$weekday" -eq 5 ];then
            cd "$backup_path" || return 1
            ls|xargs tar cf "$date-Fri-logs.tar" && rm *.tar.?z*
        fi
    }

    #----------- keep only the compressed log files of the last 14 days ------------
    stay_tgz_log(){
        local stay_days="$stay_days"
        local rm_date=$(date -d "-$stay_days day" +%Y%m%d)
        cd "$backup_path" || return 1
        ls |grep -o "[0-9]\{8\}"|awk '!aa[$0]++'|while read line;do
            if [ "$line" -le $rm_date ];then
                ls *$line* |xargs rm -rf
            fi
        done
    }

    #----------- delete log files older than del_days_num days (by mtime) ---------
    delete_days_log1(){
        # arg 1: del_days_num  arg 2: log directory
        local del_days_num="$1"
        local logs_path="$2"
        # cd "$strategy_logs_path"
        cd "$logs_path" || return 1
        find . -type f -mtime +$del_days_num |grep ".*[0-9]\{8\}.*\.\(log\|csv\)$"|xargs rm -rf   # deletes files, use with care
    }

    #---------- delete log files older than del_days_num days (by the date in the file name) ----
    delete_days_log2(){
        # arg 1: del_days_num  arg 2: log directory
        local del_days_num="$1"
        local logs_path="$2"
        # cd "$strategy_logs_path"
        cd "$logs_path" || return 1
        local num=$(ls |grep ".*[0-9]\{8\}.*\.\(log\|csv\)$" |grep -o "[0-9]\{8\}"|awk '!day[$0]++'|wc -l)
        local files=$(ls |grep ".*[0-9]\{8\}.*\.\(log\|csv\)$" |grep -o "[0-9]\{8\}"|awk '!day[$0]++')
        if [ ! -z "$num" ];then
            local field=""
            for i in `seq 1 "$num"`
            do
                field=$(echo $files|awk '{print $v}' v=$i)
                if [ "$field" -le $(date -d "-$del_days_num day" +%Y%m%d) ];then
                    ls *$field* |grep '.*[0-9]\{8\}.*\.\(log\|csv\)$'|xargs rm -rf
                fi
            done
        fi
    }

    #----------- upload to the log server -------------
    upload_tgz(){
        scp $backup_path/$date.tar.gz 192.168.119.133:/opt/ && rm $backup_path/$date.tar.gz
        #scp $backup_path/$date*-logs.tar 192.168.119.133:/opt/ && rm $backup_path/$date*-logs.tar
    }

    #----------- main -------------------------
    main(){
        init_argv "$@"
        backup_all_logs_dir
        # compress_range_date "$compress_days" "$backup_mode"
        # delete_days_log2 $del_days_num
        stay_tgz_log $stay_days
        # tar_file
        # upload_tgz
    }

    #---------- entry point ----------------------
    main "$@"
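Because the retention step removes archives with `rm -rf`, it can be useful to list the candidates before deleting anything. The sketch below is a dry-run helper under my own assumptions: the name `expired_archives` is hypothetical, and it only looks at files matching `*.tar.*` whose names contain an eight-digit date stamp.

```shell
#!/bin/bash
# expired_archives DIR CUTOFF
# Print the archives in DIR whose YYYYMMDD stamp is <= CUTOFF.
# Nothing is deleted; the caller decides what to do with the list.
expired_archives() {
    local dir="$1" cutoff="$2" f stamp
    for f in "$dir"/*.tar.*; do
        # An unmatched glob stays literal, so skip entries that do not exist.
        [ -e "$f" ] || continue
        # The first run of exactly eight digits in the file name is the stamp.
        stamp=$(basename "$f" | grep -o '[0-9]\{8\}' | head -n 1)
        [ -n "$stamp" ] || continue
        if [ "$stamp" -le "$cutoff" ]; then
            printf '%s\n' "$f"
        fi
    done
}

# Example: list archives at least 14 days old (GNU date assumed).
# expired_archives /root/apps/logs_backup "$(date -d '-14 day' +%Y%m%d)"
```

Reviewing this list first makes an off-by-one in the cutoff visible before any file is removed.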

Pull script on the log server:

    #!/bin/bash
    date=$(date +%Y%m%d)
    remote_logs_backup=/root/apps/logs_backup
    local_logs_backup=/root/apps/local_logs_backup
    hostsfile="$(dirname $0)/hosts.inc"

    while read line;do
        if [ ! -d "$local_logs_backup/$line" ];then
            mkdir -p "$local_logs_backup/$line"
        fi
        scp root@$line:$remote_logs_backup/*$date* $local_logs_backup/$line >/dev/null 2>&1
        #&& ssh -nl root $line "rm $remote_logs_backup/* -rf" >/dev/null 2>&1
        # ssh login and scp report an error when the files do not exist; this can be ignored
    done < "$hostsfile"

hosts.inc holds the IP addresses of the target machines:

    [root@xiamihost3 script]# cat hosts.inc
    192.168.119.131
    192.168.119.133

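The basic functionality works, but the script can still be improved. For example, the grep calls could be replaced with egrep, which removes one layer of escaping.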
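To make the escaping difference concrete, the sketch below runs the script's file-name pattern once in basic syntax and once in extended syntax. It uses `grep -E` rather than `egrep`, since the two are equivalent and recent GNU grep releases print an obsolescence warning for `egrep`; the sample file names are made up for the demonstration.

```shell
#!/bin/bash
# Made-up sample names: two that should match, two that should not.
names='app.20170912.log
app.20170912.csv
app.log
20170912.txt'

# Basic regular expressions: braces, groups and alternation need backslashes.
bre=$(printf '%s\n' "$names" | grep '[0-9]\{8\}.*\.\(log\|csv\)$')

# Extended regular expressions: the same pattern without that escaping.
ere=$(printf '%s\n' "$names" | grep -E '[0-9]{8}.*\.(log|csv)$')

printf '%s\n' "$ere"
```

Both commands select the same two names, so the switch changes only how readable the pattern is. The escaped dot before the extension stays in both forms because it must match a literal period.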

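The upload backup feature has not been tested yet and may contain errors. Of course, before uploading, the local public key must first be added to the server's authorized_keys file to enable passwordless SSH login.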
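Setting up the key could look roughly like the sketch below, which relies on the standard OpenSSH tools `ssh-keygen` and `ssh-copy-id`. The function name `install_pull_key`, the ed25519 key type and the default key path are my assumptions, not choices made in the original post.

```shell
#!/bin/bash
# install_pull_key HOST [KEYFILE]
# Create a key pair if none exists yet, then append the public key
# to root's authorized_keys on HOST.
install_pull_key() {
    local host="$1" key="${2:-$HOME/.ssh/id_ed25519}"
    if [ ! -f "$key" ]; then
        # -N '' sets an empty passphrase so unattended jobs can use the key.
        ssh-keygen -t ed25519 -N '' -f "$key" || return 1
    fi
    # ssh-copy-id asks for the remote password once, then installs the key.
    ssh-copy-id -i "$key.pub" "root@$host"
}

# Example (run once per target machine, interactively):
# install_pull_key 192.168.119.133
```

Run it on whichever side initiates the connection: the machine itself for uploads, or the log server when it pulls the archives.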

One last thought: Python is still the more powerful tool.

good good study,day day up